fa04_eldenfm1.qxp 2/28/2007 3:24 PM Page 1

Matrix Methods

in Data Mining

and Pattern

Recognition

�

fa04_eldenfm1.qxp 2/28/2007 3:24 PM Page 2

Fundamentals of Algorithms

Editor-in-Chief: Nicholas J. Higham, University of Manchester

The SIAM series on Fundamentals of Algorithms is a collection of short user-oriented books on state-

of-the-art numerical methods. Written by experts, the books provide readers with sufficient knowledge

to choose an appropriate method for an application and to understand the method’s strengths and

limitations. The books cover a range of topics drawn from numerical analysis and scientific computing.

The intended audiences are researchers and practitioners using the methods and upper level

undergraduates in mathematics, engineering, and computational science.

Books in this series not only provide the mathematical background for a method or class of methods

used in solving a specific problem but also explain how the method can be developed into an

algorithm and translated into software. The books describe the range of applicability of a method and

give guidance on troubleshooting solvers and interpreting results. The theory is presented at a level

accessible to the practitioner. MATLAB® software is the preferred language for codes presented since it

can be used across a wide variety of platforms and is an excellent environment for prototyping,

testing, and problem solving.

The series is intended to provide guides to numerical algorithms that are readily accessible, contain

practical advice not easily found elsewhere, and include understandable codes that implement the

algorithms.

Editorial Board

Peter Benner

Technische Universität Chemnitz

John R. Gilbert

University of California, Santa Barbara

Michael T. Heath

University of Illinois, Urbana-Champaign

C. T. Kelley

North Carolina State University

Cleve Moler

The MathWorks

James G. Nagy

Emory University

Series Volumes

Dianne P. O’Leary

University of Maryland

Robert D. Russell

Simon Fraser University

Robert D. Skeel

Purdue University

Danny Sorensen

Rice University

Andrew J. Wathen

Oxford University

Henry Wolkowicz

University of Waterloo

Eldén, L., Matrix Methods in Data Mining and Pattern Recognition

Hansen, P. C., Nagy, J. G., and O’Leary, D. P., Deblurring Images: Matrices, Spectra, and Filtering

Davis, T. A., Direct Methods for Sparse Linear Systems

Kelley, C. T., Solving Nonlinear Equations with Newton’s Method

�

fa04_eldenfm1.qxp 2/28/2007 3:24 PM Page 3

Lars Eldén

Linköping University

Linköping, Sweden

Matrix Methods

in Data Mining

and Pattern

Recognition

Society for Industrial and Applied Mathematics

Philadelphia

�

fa04_eldenfm1.qxp 2/28/2007 3:24 PM Page 4

Copyright © 2007 by the Society for Industrial and Applied Mathematics.

10 9 8 7 6 5 4 3 2 1

All rights reserved. Printed in the United States of America. No part of this book may be reproduced,

stored, or transmitted in any manner without the written permission of the publisher. For information,

write to the Society for Industrial and Applied Mathematics, 3600 University City Science Center,

Philadelphia, PA 19104-2688.

Trademarked names may be used in this book without the inclusion of a trademark symbol. These

names are used in an editorial context only; no infringement of trademark is intended.

Google is a trademark of Google, Inc.

MATLAB is a registered trademark of The MathWorks, Inc. For MATLAB product information, please

contact The MathWorks, Inc., 3 Apple Hill Drive, Natick, MA 01760-2098 USA, 508-647-7000,

Fax: 508-647-7101, info@mathworks.com, www.mathworks.com

Figures 6.2, 10.1, 10.7, 10.9, 10.11, 11.1, and 11.3 are from L. Eldén, Numerical linear algebra in

data mining, Acta Numer., 15:327–384, 2006. Reprinted with the permission of Cambridge University

Press.

Figures 14.1, 14.3, and 14.4 were constructed by the author from images appearing in P. N.

Belhumeur, J. P. Hespanha, and D. J. Kriegman, Eigenfaces vs. fisherfaces: Recognition using class

specific linear projection, IEEE Trans. Pattern Anal. Mach. Intell., 19:711–720, 1997.

Library of Congress Cataloging-in-Publication Data

Eldén, Lars, 1944-

Matrix methods in data mining and pattern recognition / Lars Eldén.

p. cm. — (Fundamentals of algorithms ; 04)

Includes bibliographical references and index.

ISBN 978-0-898716-26-9 (pbk. : alk. paper)

1. Data mining. 2. Pattern recognition systems—Mathematical models. 3. Algebras,

Linear. I. Title.

QA76.9.D343E52 2007

05.74—dc20

2006041348

is a registered trademark.

�

book

2007/2/2

page v

Contents

Preface

I

1

2

3

4

Linear Algebra Concepts and Matrix Decompositions

Vectors and Matrices in Data Mining and Pattern Recognition

Data Mining and Pattern Recognition . . . . . . . . . . . . . . .

1.1

Vectors and Matrices . . . . . . . . . . . . . . . . . . . . . . . .

1.2

Purpose of the Book . . . . . . . . . . . . . . . . . . . . . . . .

1.3

1.4

Programming Environments . . . . . . . . . . . . . . . . . . . .

Floating Point Computations . . . . . . . . . . . . . . . . . . . .

1.5

1.6

Notation and Conventions

. . . . . . . . . . . . . . . . . . . . .

Vectors and Matrices

2.1

2.2

2.3

2.4

2.5

2.6

Matrix-Vector Multiplication . . . . . . . . . . . . . . . . . . . .

Matrix-Matrix Multiplication . . . . . . . . . . . . . . . . . . .

Inner Product and Vector Norms

. . . . . . . . . . . . . . . . .

Matrix Norms . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Linear Independence: Bases

. . . . . . . . . . . . . . . . . . . .

The Rank of a Matrix . . . . . . . . . . . . . . . . . . . . . . . .

Linear Systems and Least Squares

3.1

3.2

3.3

3.4

3.5

3.6

LU Decomposition . . . . . . . . . . . . . . . . . . . . . . . . . .

Symmetric, Positive Definite Matrices . . . . . . . . . . . . . . .

Perturbation Theory and Condition Number . . . . . . . . . . .

Rounding Errors in Gaussian Elimination . . . . . . . . . . . . .

Banded Matrices . . . . . . . . . . . . . . . . . . . . . . . . . . .

The Least Squares Problem . . . . . . . . . . . . . . . . . . . .

Orthogonality

4.1

4.2

4.3

4.4

Orthogonal Vectors and Matrices

. . . . . . . . . . . . . . . . .

Elementary Orthogonal Matrices . . . . . . . . . . . . . . . . . .

Number of Floating Point Operations . . . . . . . . . . . . . . .

Orthogonal Transformations in Floating Point Arithmetic

. . .

v

ix

3

3

4

7

8

8

11

13

13

15

17

18

20

21

23

23

25

26

27

29

31

37

38

40

45

46

�

book

2007/2/23

page vi

Contents

QR Decomposition

5.1

5.2

5.3

5.4

5.5

5.6

Orthogonal Transformation to Triangular Form . . . . . . . . .

Solving the Least Squares Problem . . . . . . . . . . . . . . . .

Computing or Not Computing Q . . . . . . . . . . . . . . . . .

Flop Count for QR Factorization . . . . . . . . . . . . . . . . .

Error in the Solution of the Least Squares Problem . . . . . . .

Updating the Solution of a Least Squares Problem . . . . . . . .

Singular Value Decomposition

6.1

6.2

6.3

6.4

6.5

6.6

The Decomposition . . . . . . . . . . . . . . . . . . . . . . . . .

Fundamental Subspaces . . . . . . . . . . . . . . . . . . . . . . .

Matrix Approximation . . . . . . . . . . . . . . . . . . . . . . .

Principal Component Analysis . . . . . . . . . . . . . . . . . . .

Solving Least Squares Problems . . . . . . . . . . . . . . . . . .

Condition Number and Perturbation Theory for the Least Squares

Problem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Rank-Deficient and Underdetermined Systems . . . . . . . . . .

Computing the SVD . . . . . . . . . . . . . . . . . . . . . . . .

Complete Orthogonal Decomposition . . . . . . . . . . . . . . .

6.7

6.8

6.9

Reduced-Rank Least Squares Models

7.1

7.2

Truncated SVD: Principal Component Regression . . . . . . . .

A Krylov Subspace Method . . . . . . . . . . . . . . . . . . . .

Tensor Decomposition

8.1

8.2

8.3

8.4

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Basic Tensor Concepts

. . . . . . . . . . . . . . . . . . . . . . .

A Tensor SVD . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Approximating a Tensor by HOSVD . . . . . . . . . . . . . . .

47

47

51

52

53

53

54

57

57

61

63

66

66

69

70

72

72

75

77

80

91

91

92

94

96

Clustering and Nonnegative Matrix Factorization

9.1

9.2

101

The k-Means Algorithm . . . . . . . . . . . . . . . . . . . . . . 102

Nonnegative Matrix Factorization . . . . . . . . . . . . . . . . . 106

vi

5

6

7

8

9

II Data Mining Applications

10 Classification of Handwritten Digits

113

Handwritten Digits and a Simple Algorithm . . . . . . . . . . . 113

Classification Using SVD Bases

. . . . . . . . . . . . . . . . . . 115

Tangent Distance . . . . . . . . . . . . . . . . . . . . . . . . . . 122

10.1

10.2

10.3

11.1

11.2

11.3

11.4

11 Text Mining

129

. . . . . . . . . . . . 130

Preprocessing the Documents and Queries

The Vector Space Model

. . . . . . . . . . . . . . . . . . . . . . 131

Latent Semantic Indexing . . . . . . . . . . . . . . . . . . . . . . 135

Clustering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

�

book

2007/2/23

page vii

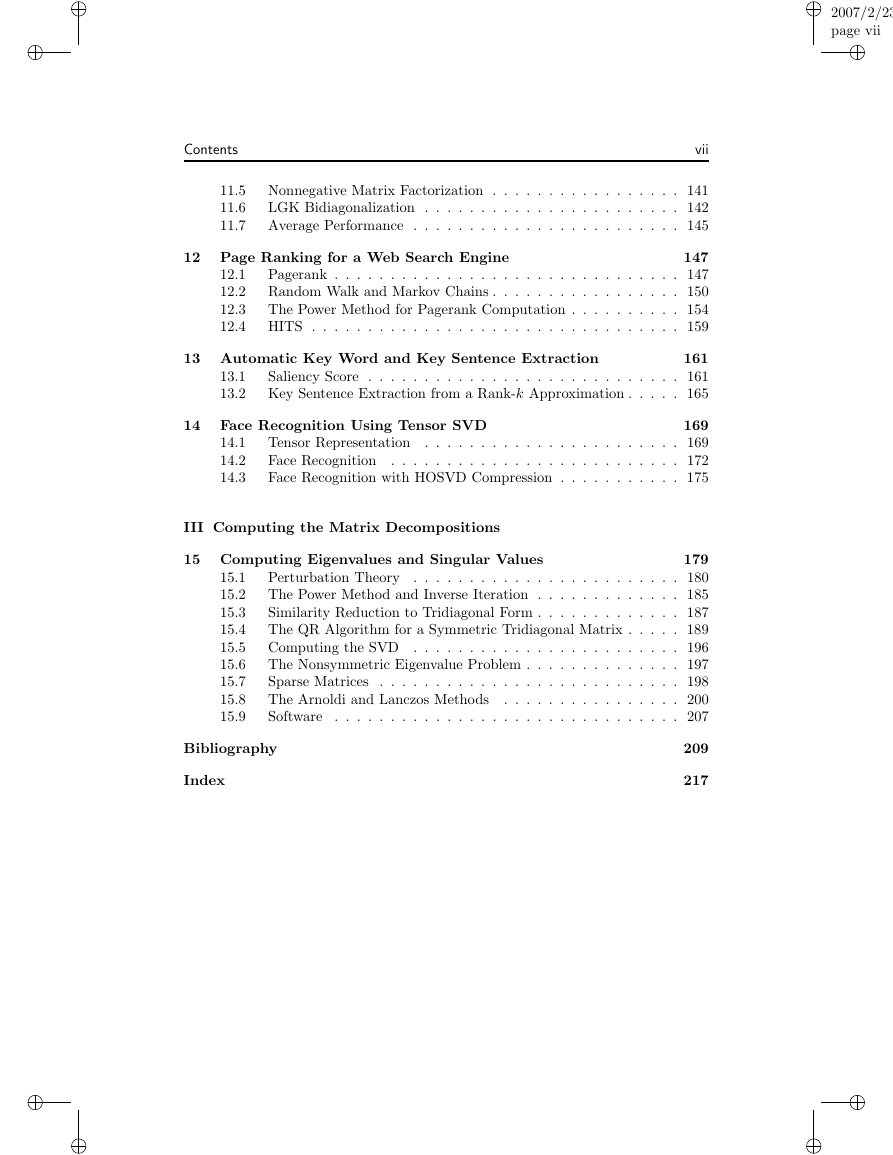

Contents

vii

11.5

11.6

11.7

Nonnegative Matrix Factorization . . . . . . . . . . . . . . . . . 141

LGK Bidiagonalization . . . . . . . . . . . . . . . . . . . . . . . 142

Average Performance . . . . . . . . . . . . . . . . . . . . . . . . 145

12 Page Ranking for a Web Search Engine

147

Pagerank . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Random Walk and Markov Chains . . . . . . . . . . . . . . . . . 150

The Power Method for Pagerank Computation . . . . . . . . . . 154

HITS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

12.1

12.2

12.3

12.4

13 Automatic Key Word and Key Sentence Extraction

161

13.1

Saliency Score . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

13.2 Key Sentence Extraction from a Rank-k Approximation . . . . . 165

14 Face Recognition Using Tensor SVD

169

Tensor Representation . . . . . . . . . . . . . . . . . . . . . . . 169

Face Recognition . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Face Recognition with HOSVD Compression . . . . . . . . . . . 175

14.1

14.2

14.3

III Computing the Matrix Decompositions

15 Computing Eigenvalues and Singular Values

179

Perturbation Theory . . . . . . . . . . . . . . . . . . . . . . . . 180

The Power Method and Inverse Iteration . . . . . . . . . . . . . 185

Similarity Reduction to Tridiagonal Form . . . . . . . . . . . . . 187

The QR Algorithm for a Symmetric Tridiagonal Matrix . . . . . 189

Computing the SVD . . . . . . . . . . . . . . . . . . . . . . . . 196

The Nonsymmetric Eigenvalue Problem . . . . . . . . . . . . . . 197

Sparse Matrices . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

The Arnoldi and Lanczos Methods

. . . . . . . . . . . . . . . . 200

Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

15.1

15.2

15.3

15.4

15.5

15.6

15.7

15.8

15.9

Bibliography

Index

209

217

�

book

2007/2/23

page viii

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc