Foundations and Trends® in Machine Learning

Vol. 2, No. 1 (2009) 1–127

© 2009 Y. Bengio

DOI: 10.1561/2200000006

Learning Deep Architectures for AI

By Yoshua Bengio

Contents

1 Introduction

1.1 How do We Train Deep Architectures?

1.2 Intermediate Representations: Sharing Features and Abstractions Across Tasks

1.3 Desiderata for Learning AI

1.4 Outline of the Paper

2 Theoretical Advantages of Deep Architectures

2.1 Computational Complexity

2.2 Informal Arguments

3 Local vs Non-Local Generalization

3.1 The Limits of Matching Local Templates

3.2 Learning Distributed Representations

4 Neural Networks for Deep Architectures

4.1 Multi-Layer Neural Networks

4.2 The Challenge of Training Deep Neural Networks

4.3 Unsupervised Learning for Deep Architectures

4.4 Deep Generative Architectures

4.5 Convolutional Neural Networks

4.6 Auto-Encoders


5 Energy-Based Models and Boltzmann Machines

5.1 Energy-Based Models and Products of Experts

5.2 Boltzmann Machines

5.3 Restricted Boltzmann Machines

5.4 Contrastive Divergence

6 Greedy Layer-Wise Training of Deep Architectures

6.1 Layer-Wise Training of Deep Belief Networks

6.2 Training Stacked Auto-Encoders

6.3 Semi-Supervised and Partially Supervised Training

7 Variants of RBMs and Auto-Encoders

7.1 Sparse Representations in Auto-Encoders and RBMs

7.2 Denoising Auto-Encoders

7.3 Lateral Connections

7.4 Conditional RBMs and Temporal RBMs

7.5 Factored RBMs

7.6 Generalizing RBMs and Contrastive Divergence

8 Stochastic Variational Bounds for Joint Optimization of DBN Layers

8.1 Unfolding RBMs into Infinite Directed Belief Networks

8.2 Variational Justification of Greedy Layer-wise Training

8.3 Joint Unsupervised Training of All the Layers

9 Looking Forward

9.1 Global Optimization Strategies

9.2 Why Unsupervised Learning is Important

9.3 Open Questions


10 Conclusion

Acknowledgments

References


Learning Deep Architectures for AI

Yoshua Bengio

Dept. IRO, Université de Montréal, C.P. 6128, Montreal, Qc, H3C 3J7, Canada, yoshua.bengio@umontreal.ca

Abstract

Theoretical results suggest that in order to learn the kind of complicated functions that can represent high-level abstractions (e.g., in vision, language, and other AI-level tasks), one may need deep architectures. Deep architectures are composed of multiple levels of non-linear operations, such as in neural nets with many hidden layers or in complicated propositional formulae re-using many sub-formulae. Searching the parameter space of deep architectures is a difficult task, but learning algorithms such as those for Deep Belief Networks have recently been proposed to tackle this problem with notable success, beating the state-of-the-art in certain areas. This monograph discusses the motivations and principles regarding learning algorithms for deep architectures, in particular those exploiting as building blocks unsupervised learning of single-layer models such as Restricted Boltzmann Machines, used to construct deeper models such as Deep Belief Networks.

1 Introduction

Allowing computers to model our world well enough to exhibit what we call intelligence has been the focus of more than half a century of research. To achieve this, it is clear that a large quantity of information about our world should somehow be stored, explicitly or implicitly, in the computer. Because it seems daunting to manually formalize all that information in a form that computers can use to answer questions and generalize to new contexts, many researchers have turned to learning algorithms to capture a large fraction of that information.

Much progress has been made in understanding and improving learning algorithms, but the challenge of artificial intelligence (AI) remains. Do we have algorithms that can understand scenes and describe them in natural language? Not really, except in very limited settings. Do we have algorithms that can infer enough semantic concepts to be able to interact with most humans using these concepts? No. If we consider image understanding, one of the best specified of the AI tasks, we realize that we do not yet have learning algorithms that can discover the many visual and semantic concepts that would seem to be necessary to interpret most images on the web. The situation is similar for other AI tasks.


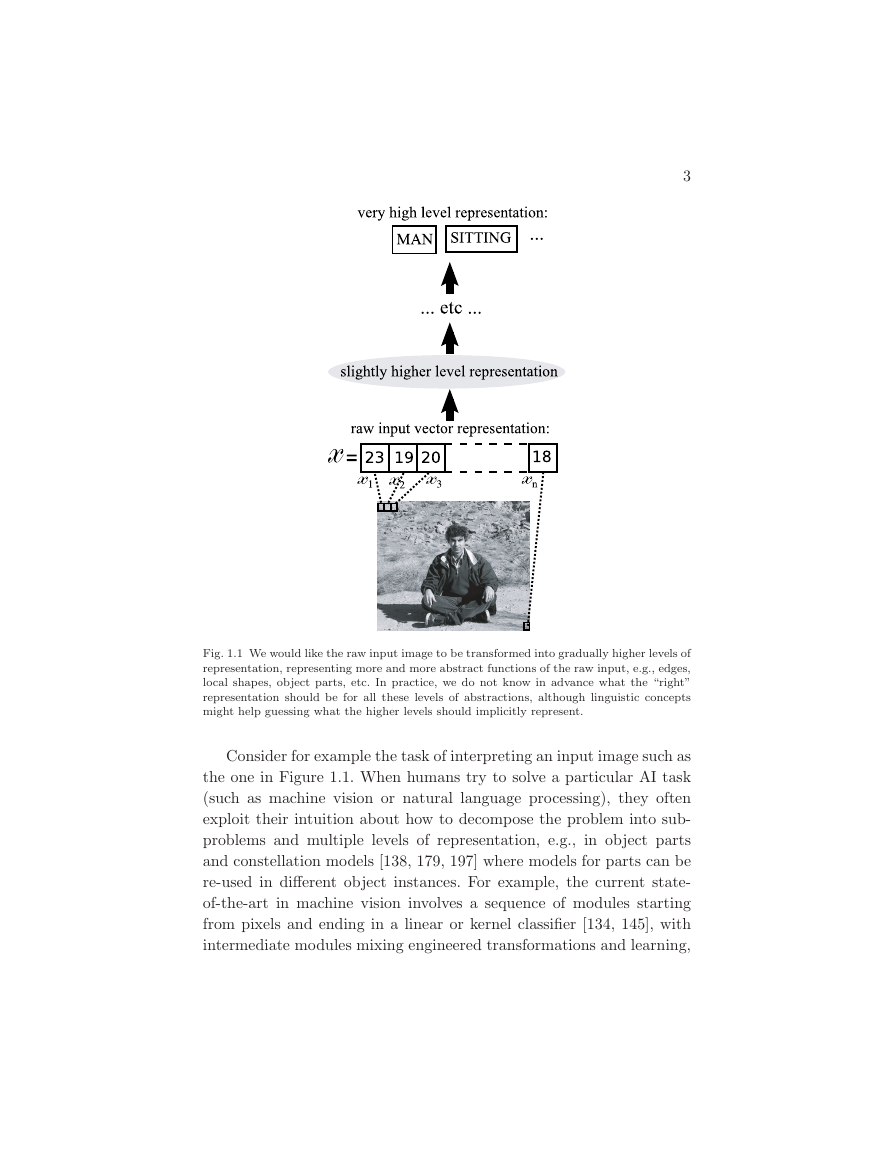

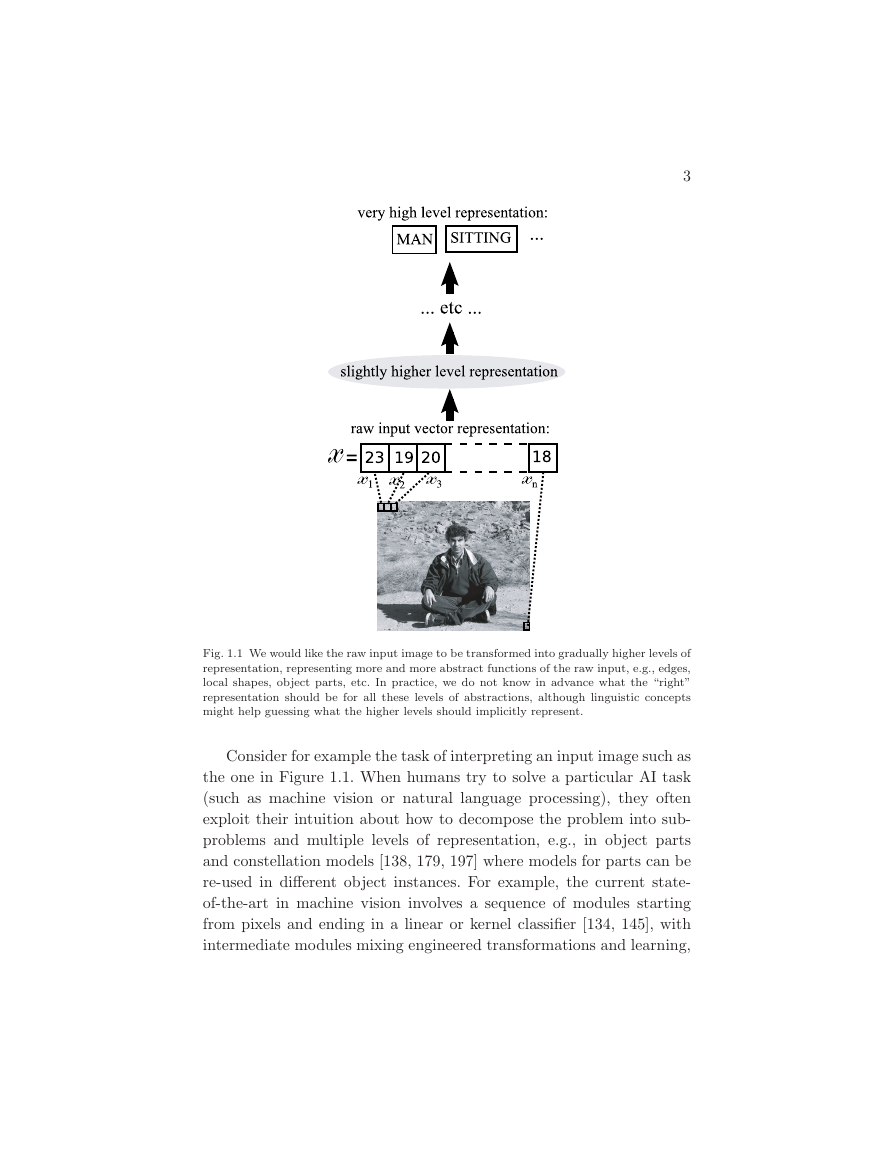

Fig. 1.1 We would like the raw input image to be transformed into gradually higher levels of representation, representing more and more abstract functions of the raw input, e.g., edges, local shapes, object parts, etc. In practice, we do not know in advance what the “right” representation should be for all these levels of abstraction, although linguistic concepts might help in guessing what the higher levels should implicitly represent.

Consider, for example, the task of interpreting an input image such as the one in Figure 1.1. When humans try to solve a particular AI task (such as machine vision or natural language processing), they often exploit their intuition about how to decompose the problem into sub-problems and multiple levels of representation, e.g., in object parts and constellation models [138, 179, 197] where models for parts can be re-used in different object instances. For example, the current state-of-the-art in machine vision involves a sequence of modules starting from pixels and ending in a linear or kernel classifier [134, 145], with intermediate modules mixing engineered transformations and learning, e.g., first extracting low-level features that are invariant to small geometric variations (such as edge detectors from Gabor filters), transforming them gradually (e.g., to make them invariant to contrast changes and contrast inversion, sometimes by pooling and sub-sampling), and then detecting the most frequent patterns. A plausible and common way to extract useful information from a natural image involves transforming the raw pixel representation into gradually more abstract representations, e.g., starting from the presence of edges, the detection of more complex but local shapes, up to the identification of abstract categories associated with sub-objects and objects which are parts of the image, and putting all these together to capture enough understanding of the scene to answer questions about it.
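The module sequence just described can be sketched in a few lines of NumPy. This is a minimal, illustrative sketch under stated assumptions, not the systems of [134, 145]: the function names, kernel size, number of orientations, Gabor wavelength and width, pooling size, and the global normalization (a crude stand-in for local contrast normalization) are all hypothetical choices, and the final "frequent pattern" detection stage is omitted. Zero-mean Gabor filters ignore constant intensity offsets, the absolute value discards the sign flip caused by contrast inversion, normalization removes the overall contrast scale, and max pooling with sub-sampling tolerates small shifts; only the last stage, a linear classifier, would be learned.

```python
import numpy as np


def gabor_kernel(size, theta, wavelength, sigma):
    """Real (cosine) Gabor filter tuned to orientation theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    # Rotate the coordinate frame so the filter responds to orientation theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + y_rot ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_rot / wavelength)
    kernel = envelope * carrier
    # Zero mean: a constant added to the image leaves the response unchanged.
    return kernel - kernel.mean()


def correlate_valid(image, kernel):
    """2-D 'valid' cross-correlation using plain NumPy slicing."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(fmap, k):
    """Non-overlapping k x k max pooling, i.e., pooling plus sub-sampling."""
    h = fmap.shape[0] // k * k
    w = fmap.shape[1] // k * k
    blocks = fmap[:h, :w].reshape(h // k, k, w // k, k)
    return blocks.max(axis=(1, 3))


def extract_features(image, n_orientations=4, pool=4):
    """Engineered stages: Gabor filter bank -> |.| -> max pooling -> normalize."""
    pooled = []
    for t in range(n_orientations):
        theta = np.pi * t / n_orientations
        kernel = gabor_kernel(7, theta, wavelength=4.0, sigma=2.0)
        response = correlate_valid(image, kernel)
        # |.| makes the response identical under contrast inversion (sign flip).
        pooled.append(max_pool(np.abs(response), pool).ravel())
    features = np.concatenate(pooled)
    # Global normalization removes the overall contrast scale
    # (hypothetical stand-in for local contrast normalization).
    return features / (np.linalg.norm(features) + 1e-8)


def linear_classifier(features, weights, bias):
    """The only learned stage in this sketch: a linear decision function."""
    return float(features @ weights + bias)


rng = np.random.default_rng(0)
image = rng.random((32, 32))
weights = rng.standard_normal(144)  # 4 orientations x 6 x 6 pooled cells
score = linear_classifier(extract_features(image), weights, bias=0.0)
score_inv = linear_classifier(extract_features(-3.0 * image + 5.0), weights, bias=0.0)
print(np.isclose(score, score_inv))  # True
```

Because every engineered stage is fixed, an image and a contrast-inverted, rescaled, offset copy of it (`-3.0 * image + 5.0`) map to the same feature vector up to floating-point error, so the linear stage cannot tell them apart; learning is confined to the final module.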

Here, we assume that the computational machinery necessary to express complex behaviors (which one might label “intelligent”) requires highly varying mathematical functions, i.e., mathematical functions that are highly non-linear in terms of raw sensory inputs, and display a very large number of variations (ups and downs) across the domain of interest. We view the raw input to the learning system as a high-dimensional entity, made of many observed variables, which are related by unknown intricate statistical relationships. For example, using knowledge of the 3D geometry of solid objects and lighting, we can relate small variations in underlying physical and geometric factors (such as the position, orientation, and lighting of an object) with changes in pixel intensities for all the pixels in an image. We call these factors of variation because they are different aspects of the data that can vary separately and often independently. In this case, explicit knowledge of the physical factors involved allows one to get a picture of the mathematical form of these dependencies, and of the shape of the set of images (as points in a high-dimensional space of pixel intensities) associated with the same 3D object. If a machine captured the factors that explain the statistical variations in the data, and how they interact to generate the kind of data we observe, we would be able to say that the machine understands those aspects of the world covered by these factors of variation. Unfortunately, in general and for most factors of variation underlying natural images, we do not have an analytical understanding of these factors of variation. We do not have enough formalized prior knowledge about the world to explain the observed variety of images, even for such an apparently simple abstraction as MAN, illustrated in Figure 1.1. A high-level abstraction such as MAN has the property that it corresponds to a very large set of possible images, which might be very different from each other from the point of view of simple Euclidean distance in the space of pixel intensities. The set of images for which that label could be appropriate forms a highly convoluted region in pixel space that is not even necessarily a connected region. The MAN category can be seen as a high-level abstraction with respect to the space of images. What we call abstraction here can be a category (such as the MAN category) or a feature, a function of sensory data, which can be discrete (e.g., the input sentence is in the past tense) or continuous (e.g., the input video shows an object moving at 2 meters/second). Many lower-level and intermediate-level concepts (which we also call abstractions here) would be useful to construct a MAN-detector. Lower-level abstractions are more directly tied to particular percepts, whereas higher-level ones are what we call “more abstract” because their connection to actual percepts is more remote and passes through other, intermediate-level abstractions.
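To make the pixel-space argument concrete, here is a toy sketch, assuming synthetic 16x16 images generated from three hypothetical factors of variation (bar position, bar orientation, and global lighting); the images, the helper names `render_bar` and `pixel_distance`, and the treatment of orientation as the "category" are illustrative and not from the source. It shows that changing a single factor alters many pixels at once, and that Euclidean distance in pixel space can place an image of a different category closer than a translated image of the same category, a small-scale version of why the set of images sharing a label need not form a simple, compact region in pixel space.

```python
import numpy as np


def render_bar(size=16, position=4, vertical=True, lighting=1.0):
    """Toy image generated from three factors of variation:
    bar position, bar orientation, and global lighting."""
    img = np.full((size, size), 0.2)  # dim background
    if vertical:
        img[:, position] = 1.0
    else:
        img[position, :] = 1.0
    return lighting * img  # illumination scales every pixel


def pixel_distance(a, b):
    """Euclidean distance in the space of pixel intensities."""
    return float(np.linalg.norm(a - b))


reference = render_bar(position=4, vertical=True)
shifted = render_bar(position=8, vertical=True)       # same category, moved
darker = render_bar(position=4, vertical=True, lighting=0.5)
horizontal = render_bar(position=4, vertical=False)   # different category

# Changing one factor changes many pixels at once.
print(np.count_nonzero(reference != shifted))  # 32
print(np.count_nonzero(reference != darker))   # 256

# The moved vertical bar is farther from the reference than the
# horizontal bar is, even though only the latter changes the category.
d_same = pixel_distance(reference, shifted)
d_other = pixel_distance(reference, horizontal)
print(d_other < d_same)  # True
```

The horizontal bar overlaps the reference at one pixel, so it differs in 30 pixels, while the shifted vertical bar differs in 32; pixel distance therefore tracks overlap, not the underlying factors.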

In addition to the difficulty of coming up with the appropriate intermediate abstractions, the number of visual and semantic categories (such as MAN) that we would like an “intelligent” machine to capture is rather large. The focus of deep architecture learning is to automatically discover such abstractions, from the lowest level features to the highest level concepts. Ideally, we would like learning algorithms that enable this discovery with as little human effort as possible, i.e., without having to manually define all necessary abstractions or having to provide a huge set of relevant hand-labeled examples. If these algorithms could tap into the huge resource of text and images on the web, it would certainly help to transfer much of human knowledge into machine-interpretable form.

1.1 How do We Train Deep Architectures?

Deep learning methods aim at learning feature hierarchies with features from higher levels of the hierarchy formed by the composition of
