
Continuous control with deep reinforcement learning

Date: 2016-02-29

Authors: Timothy P. Lillicrap, Jonathan J. Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, Daan Wierstra

Paper: http://arxiv.org/pdf/1509.02971v5.pdf

ABSTRACT

We adapt the ideas underlying the success of Deep Q-Learning to the continuous action domain. We present an actor-critic, model-free algorithm based on the deterministic policy gradient that can operate over continuous action spaces. Using the same learning algorithm, network architecture and hyper-parameters, our algorithm robustly solves more than 20 simulated physics tasks, including classic problems such as cartpole swing-up, dexterous manipulation, legged locomotion and car driving. Our algorithm is able to find policies whose performance is competitive with those found by a planning algorithm with full access to the dynamics of the domain and its derivatives. We further demonstrate that for many of the tasks the algorithm can learn policies “end-to-end”: directly from raw pixel inputs.

1 INTRODUCTION

One of the primary goals of the field of artificial intelligence is to solve complex tasks from unprocessed, high-dimensional, sensory input. Recently, significant progress has been made by combining advances in deep learning for sensory processing (Krizhevsky et al., 2012) with reinforcement learning, resulting in the “Deep Q Network” (DQN) algorithm (Mnih et al., 2015) that is capable of human level performance on many Atari video games using unprocessed pixels for input. To do so, deep neural network function approximators were used to estimate the action-value function.

However, while DQN solves problems with high-dimensional observation spaces, it can only handle discrete and low-dimensional action spaces. Many tasks of interest, most notably physical control tasks, have continuous (real valued) and high dimensional action spaces. DQN cannot be straightforwardly applied to continuous domains since it relies on finding the action that maximizes the action-value function, which in the continuous valued case requires an iterative optimization process at every step.

An obvious approach to adapting deep reinforcement learning methods such as DQN to continuous domains is to simply discretize the action space. However, this has many limitations, most notably the curse of dimensionality: the number of actions increases exponentially with the number of degrees of freedom. For example, a 7 degree of freedom system (as in the human arm) with the coarsest discretization $a_i \in \{-k, 0, k\}$ for each joint leads to an action space with dimensionality: $3^7 = 2187$. The situation is even worse for tasks that require fine control of actions as they require a correspondingly finer grained discretization, leading to an explosion of the number of discrete actions. Such large action spaces are difficult to explore efficiently, and thus successfully training DQN-like networks in this context is likely intractable. Additionally, naive discretization of action spaces needlessly throws away information about the structure of the action domain, which may be essential for solving many problems.
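The growth in the number of discrete actions can be checked with a few lines of arithmetic. The sketch below is only an illustration; the function name `num_discrete_actions` and the finer bin counts (5 and 11) are assumptions chosen for the example, not values from the paper.

```python
def num_discrete_actions(bins_per_joint: int, degrees_of_freedom: int) -> int:
    """Number of joint actions when each joint is discretized independently."""
    return bins_per_joint ** degrees_of_freedom


# 3 bins per joint is the coarsest discretization {-k, 0, k} from the text;
# 5 and 11 are hypothetical finer discretizations.
for bins in (3, 5, 11):
    print(bins, num_discrete_actions(bins, 7))
# 3 -> 2187, 5 -> 78125, 11 -> 19487171
```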

In this work we present a model-free, off-policy actor-critic algorithm using deep function approximators that can learn policies in high-dimensional, continuous action spaces. Our work is based on the deterministic policy gradient (DPG) algorithm (Silver et al., 2014) (itself similar to NFQCA (Hafner & Riedmiller, 2011), and similar ideas can be found in (Prokhorov et al., 1997)). However, as we show below, a naive application of this actor-critic method with neural function approximators is unstable for challenging problems.

* These authors contributed equally.

Here we combine the actor-critic approach with insights from the recent success of Deep Q Network (DQN) (Mnih et al., 2013; 2015). Prior to DQN, it was generally believed that learning value functions using large, non-linear function approximators was difficult and unstable. DQN is able to learn value functions using such function approximators in a stable and robust way due to two innovations: 1. the network is trained off-policy with samples from a replay buffer to minimize correlations between samples; 2. the network is trained with a target Q network to give consistent targets during temporal difference backups. In this work we make use of the same ideas, along with batch normalization (Ioffe & Szegedy, 2015), a recent advance in deep learning.

In order to evaluate our method we constructed a variety of challenging physical control problems that involve complex multi-joint movements, unstable and rich contact dynamics, and gait behavior. Among these are classic problems such as the cartpole swing-up problem, as well as many new domains. A long-standing challenge of robotic control is to learn an action policy directly from raw sensory input such as video. Accordingly, we placed a fixed viewpoint camera in the simulator and attempted all tasks using both low-dimensional observations (e.g. joint angles) and directly from pixels.

Our model-free approach, which we call Deep DPG (DDPG), can learn competitive policies for all of our tasks using low-dimensional observations (e.g. cartesian coordinates or joint angles) with the same hyper-parameters and network structure. In many cases, we are also able to learn good policies directly from pixels, again keeping hyperparameters and network structure constant.[1]

A key feature of the approach is its simplicity: it requires only a straightforward actor-critic architecture and learning algorithm with very few “moving parts”, making it easy to implement and scale to more difficult problems and larger networks. For the physical control problems we compare our results to a baseline computed by a planner (Tassa et al., 2012) that has full access to the underlying simulated dynamics and its derivatives (see supplementary information). Interestingly, DDPG can sometimes find policies that exceed the performance of the planner, in some cases even when learning from pixels (the planner always plans over the underlying low-dimensional state space).

2 BACKGROUND


We consider a standard reinforcement learning setup consisting of an agent interacting with an environment $E$ in discrete timesteps. At each timestep $t$ the agent receives an observation $x_t$, takes an action $a_t$ and receives a scalar reward $r_t$. In all the environments considered here the actions are real-valued, $a_t \in \mathbb{R}^N$. In general, the environment may be partially observed so that the entire history of the observation, action pairs $s_t = (x_1, a_1, \ldots, a_{t-1}, x_t)$ may be required to describe the state. Here, we assumed the environment is fully-observed so $s_t = x_t$.

An agent’s behavior is defined by a policy, $\pi$, which maps states to a probability distribution over the actions, $\pi : \mathcal{S} \to \mathcal{P}(\mathcal{A})$. The environment, $E$, may also be stochastic. We model it as a Markov decision process with a state space $\mathcal{S}$, action space $\mathcal{A} = \mathbb{R}^N$, an initial state distribution $p(s_1)$, transition dynamics $p(s_{t+1} \mid s_t, a_t)$, and reward function $r(s_t, a_t)$.

The return from a state is defined as the sum of discounted future reward $R_t = \sum_{i=t}^{T} \gamma^{(i-t)} r(s_i, a_i)$ with a discounting factor $\gamma \in [0, 1]$. Note that the return depends on the actions chosen, and therefore on the policy $\pi$, and may be stochastic. The goal in reinforcement learning is to learn a policy which maximizes the expected return from the start distribution $J = \mathbb{E}_{r_i, s_i \sim E,\, a_i \sim \pi}[R_1]$. We denote the discounted state visitation distribution for a policy $\pi$ as $\rho^\pi$.
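As a small worked example of the return defined above, the following sketch accumulates discounted rewards backwards over a finite episode. The function name `discounted_return` and the reward sequence are hypothetical.

```python
def discounted_return(rewards, gamma):
    """Return R_t for the first step of `rewards`: sum_i gamma**(i - t) * r_i.

    Accumulating from the last reward backwards avoids computing powers of gamma.
    """
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical three-step episode with reward 1 at every step.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```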

The action-value function is used in many reinforcement learning algorithms. It describes the expected return after taking an action $a_t$ in state $s_t$ and thereafter following policy $\pi$:

$$Q^\pi(s_t, a_t) = \mathbb{E}_{r_{i \ge t},\, s_{i > t} \sim E,\, a_{i > t} \sim \pi}\left[ R_t \mid s_t, a_t \right] \tag{1}$$

[1] You can view a movie of some of the learned policies at https://goo.gl/J4PIAz

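Many approaches in reinforcement learning make use of the recursive relationship known as the Bellman equation:

$$Q^\pi(s_t, a_t) = \mathbb{E}_{r_t,\, s_{t+1} \sim E}\left[ r(s_t, a_t) + \gamma\, \mathbb{E}_{a_{t+1} \sim \pi}\left[ Q^\pi(s_{t+1}, a_{t+1}) \right] \right] \tag{2}$$

If the target policy is deterministic we can describe it as a function $\mu : \mathcal{S} \to \mathcal{A}$ and avoid the inner expectation:

$$Q^\mu(s_t, a_t) = \mathbb{E}_{r_t,\, s_{t+1} \sim E}\left[ r(s_t, a_t) + \gamma\, Q^\mu(s_{t+1}, \mu(s_{t+1})) \right] \tag{3}$$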

The expectation depends only on the environment. This means that it is possible to learn $Q^\mu$ off-policy, using transitions which are generated from a different stochastic behavior policy $\beta$.

Q-learning (Watkins & Dayan, 1992), a commonly used off-policy algorithm, uses the greedy policy $\mu(s) = \arg\max_a Q(s, a)$. We consider function approximators parameterized by $\theta^Q$, which we optimize by minimizing the loss:

$$L(\theta^Q) = \mathbb{E}_{s_t \sim \rho^\beta,\, a_t \sim \beta,\, r_t \sim E}\left[ \left( Q(s_t, a_t \mid \theta^Q) - y_t \right)^2 \right] \tag{4}$$

where

$$y_t = r(s_t, a_t) + \gamma\, Q(s_{t+1}, \mu(s_{t+1}) \mid \theta^Q). \tag{5}$$

While $y_t$ is also dependent on $\theta^Q$, this is typically ignored.
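To make equations 4 and 5 concrete, here is a minimal sketch that computes the target $y_t$ and the mean squared loss over a handful of transitions. The function names and all numeric values are hypothetical, and the Q-values are plain numbers standing in for the output of a function approximator.

```python
def td_target(reward, next_q, gamma):
    """Equation 5: y_t = r(s_t, a_t) + gamma * Q(s_{t+1}, mu(s_{t+1}))."""
    return reward + gamma * next_q


def critic_loss(q_values, targets):
    """Equation 4 estimated on a batch: mean of (Q(s_t, a_t) - y_t)**2.

    The targets are treated as constants, matching the remark that their
    dependence on the critic parameters is typically ignored.
    """
    squared_errors = [(q - y) ** 2 for q, y in zip(q_values, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical batch of two transitions.
targets = [td_target(1.0, 2.0, 0.5), td_target(0.0, 2.0, 0.5)]  # [2.0, 1.0]
print(critic_loss([1.0, 3.0], targets))  # ((1 - 2)**2 + (3 - 1)**2) / 2 = 2.5
```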

The use of large, non-linear function approximators for learning value or action-value functions has often been avoided in the past since theoretical performance guarantees are impossible, and practically learning tends to be unstable. Recently, (Mnih et al., 2013; 2015) adapted the Q-learning algorithm in order to make effective use of large neural networks as function approximators. Their algorithm was able to learn to play Atari games from pixels. In order to scale Q-learning they introduced two major changes: the use of a replay buffer, and a separate target network for calculating $y_t$. We employ these in the context of DDPG and explain their implementation in the next section.

3 ALGORITHM

It is not possible to straightforwardly apply Q-learning to continuous action spaces, because in continuous spaces finding the greedy policy requires an optimization of $a_t$ at every timestep; this optimization is too slow to be practical with large, unconstrained function approximators and nontrivial action spaces. Instead, here we used an actor-critic approach based on the DPG algorithm (Silver et al., 2014).

The DPG algorithm maintains a parameterized actor function $\mu(s \mid \theta^\mu)$ which specifies the current policy by deterministically mapping states to a specific action. The critic $Q(s, a)$ is learned using the Bellman equation as in Q-learning. The actor is updated by applying the chain rule to the expected return from the start distribution $J$ with respect to the actor parameters:

$$\nabla_{\theta^\mu} J \approx \mathbb{E}_{s_t \sim \rho^\beta}\left[ \nabla_{\theta^\mu} Q(s, a \mid \theta^Q) \big|_{s = s_t,\, a = \mu(s_t \mid \theta^\mu)} \right] = \mathbb{E}_{s_t \sim \rho^\beta}\left[ \nabla_a Q(s, a \mid \theta^Q) \big|_{s = s_t,\, a = \mu(s_t)}\, \nabla_{\theta^\mu} \mu(s \mid \theta^\mu) \big|_{s = s_t} \right] \tag{6}$$

Silver et al. (2014) proved that this is the policy gradient, the gradient of the policy’s performance.[2]
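The chain rule in the actor update can be illustrated on a toy problem with hand-written derivatives. This is a sketch under strong assumptions that are not from the paper: a scalar linear actor $\mu(s) = \theta s$, a fixed known critic $Q(s, a) = -(a - 2s)^2$, and a hypothetical batch of states. Gradient ascent on $\theta$ should then approach 2, the value at which the actor picks the action the critic rates highest.

```python
def dq_da(state, action):
    """Gradient of the toy critic Q(s, a) = -(a - 2s)**2 with respect to a."""
    return -2.0 * (action - 2.0 * state)


def dmu_dtheta(state):
    """Gradient of the toy actor mu(s) = theta * s with respect to theta."""
    return state


def actor_gradient(states, theta):
    """Sampled estimate of the chain-rule product grad_a Q * grad_theta mu."""
    grads = [dq_da(s, theta * s) * dmu_dtheta(s) for s in states]
    return sum(grads) / len(grads)


theta = 0.0
states = [-1.0, 0.5, 2.0]  # hypothetical minibatch of states
for _ in range(200):
    theta += 0.1 * actor_gradient(states, theta)  # gradient ascent on J
print(round(theta, 6))
```

In DDPG both functions are neural networks and the critic is learned at the same time, so the derivatives come from backpropagation rather than closed-form expressions.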

As with Q learning, introducing non-linear function approximators means that convergence is no longer guaranteed. However, such approximators appear essential in order to learn and generalize on large state spaces. NFQCA (Hafner & Riedmiller, 2011), which uses the same update rules as DPG but with neural network function approximators, uses batch learning for stability, which is intractable for large networks. A minibatch version of NFQCA which does not reset the policy at each update, as would be required to scale to large networks, is equivalent to the original DPG, which we compare to here. Our contribution here is to provide modifications to DPG, inspired by the success of DQN, which allow it to use neural network function approximators to learn in large state and action spaces online. We refer to our algorithm as Deep DPG (DDPG, Algorithm 1).

[2] In practice, as is commonly done in policy gradient implementations, we ignored the discount in the state-visitation distribution $\rho^\beta$.

One challenge when using neural networks for reinforcement learning is that most optimization algorithms assume that the samples are independently and identically distributed. Obviously, when the samples are generated from exploring sequentially in an environment this assumption no longer holds. Additionally, to make efficient use of hardware optimizations, it is essential to learn in minibatches, rather than online.


As in DQN, we used a replay buffer to address these issues. The replay buffer is a finite sized cache $R$. Transitions were sampled from the environment according to the exploration policy and the tuple $(s_t, a_t, r_t, s_{t+1})$ was stored in the replay buffer. When the replay buffer was full the oldest samples were discarded. At each timestep the actor and critic are updated by sampling a minibatch uniformly from the buffer. Because DDPG is an off-policy algorithm, the replay buffer can be large, allowing the algorithm to benefit from learning across a set of uncorrelated transitions.
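The behavior described above (fixed capacity, oldest samples discarded, uniform minibatch sampling) can be sketched with the standard library. The class name `ReplayBuffer`, its method names and the tiny capacity are assumptions made for this illustration, not the authors' implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Finite cache of (s_t, a_t, r_t, s_{t+1}) tuples."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest item once it is full.
        self._storage = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state):
        self._storage.append((state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        """Draw a minibatch uniformly at random, without replacement."""
        return rng.sample(list(self._storage), batch_size)

    def __len__(self):
        return len(self._storage)


# Hypothetical usage: capacity 3, five transitions added, so two are discarded.
buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(state=step, action=0.0, reward=1.0, next_state=step + 1)
minibatch = buffer.sample(batch_size=2)
```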

Directly implementing Q learning (equation 4) with neural networks proved to be unstable in many environments. Since the network $Q(s, a \mid \theta^Q)$ being updated is also used in calculating the target value (equation 5), the Q update is prone to divergence. Our solution is similar to the target network used in (Mnih et al., 2013) but modified for actor-critic and using “soft” target updates, rather than directly copying the weights. We create a copy of the actor and critic networks, $Q'(s, a \mid \theta^{Q'})$ and $\mu'(s \mid \theta^{\mu'})$ respectively, that are used for calculating the target values. The weights of these target networks are then updated by having them slowly track the learned networks: $\theta' \leftarrow \tau \theta + (1 - \tau) \theta'$ with $\tau \ll 1$. This means that the target values are constrained to change slowly, greatly improving the stability of learning. This simple change moves the relatively unstable problem of learning the action-value function closer to the case of supervised learning, a problem for which robust solutions exist. We found that having both a target $\mu'$ and $Q'$ was required to have stable targets $y_i$ in order to consistently train the critic without divergence. This may slow learning, since the target network delays the propagation of value estimations. However, in practice we found this was greatly outweighed by the stability of learning.
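The soft target update is a per-weight interpolation. The sketch below applies it to flat lists of numbers; the function name `soft_update` and the value `tau = 0.01` are illustrative assumptions (the text only requires that tau be much smaller than 1).

```python
def soft_update(target_params, learned_params, tau):
    """Return theta' <- tau * theta + (1 - tau) * theta' for every weight."""
    return [
        tau * learned + (1.0 - tau) * target
        for target, learned in zip(target_params, learned_params)
    ]


# Hypothetical weights: the target moves 1% of the way toward the learned network.
target = [0.0, 1.0]
learned = [1.0, 1.0]
target = soft_update(target, learned, tau=0.01)
print(target)
```

Setting `tau=1.0` would recover the hard copy used by the DQN target network, which shows that the soft update is a slowed-down version of the same idea.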

When learning from low dimensional feature vector observations, the different components of the observation may have different physical units (for example, positions versus velocities) and the ranges may vary across environments. This can make it difficult for the network to learn effectively and may make it difficult to find hyper-parameters which generalise across environments with different scales of state values.

One approach to this problem is to manually scale the features so they are in similar ranges across environments and units. We address this issue by adapting a recent technique from deep learning called batch normalization (Ioffe & Szegedy, 2015). This technique normalizes each dimension across the samples in a minibatch to have zero mean and unit variance. In addition, it maintains a running average of the mean and variance to use for normalization during testing (in our case, during exploration or evaluation). In deep networks, it is used to minimize covariate shift during training, by ensuring that each layer receives whitened input. In the low-dimensional case, we used batch normalization on the state input and all layers of the µ network and all layers of the Q network prior to the action input (details of the networks are given in the supplementary material). With batch normalization, we were able to learn effectively across many different tasks with differing types of units, without needing to manually ensure the units were within a set range.
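The per-dimension normalization step can be sketched as follows. This covers only the training-time normalization of one minibatch; the learned scale and shift parameters and the running averages used at test time are left out, and the function name, the `eps` constant and the sample values are assumptions for the example.

```python
import math


def batch_normalize(batch, eps=1e-5):
    """Normalize each feature dimension of a minibatch to zero mean, unit variance.

    `batch` is a list of equally long feature vectors (lists of floats).
    """
    n = len(batch)
    dims = range(len(batch[0]))
    means = [sum(row[d] for row in batch) / n for d in dims]
    variances = [sum((row[d] - means[d]) ** 2 for row in batch) / n for d in dims]
    return [
        [(row[d] - means[d]) / math.sqrt(variances[d] + eps) for d in dims]
        for row in batch
    ]


# Hypothetical observations: a position in metres and a velocity on a much larger scale.
normalized = batch_normalize([[0.0, 100.0], [2.0, 300.0]])
print(normalized)  # both columns end up close to -1 and +1
```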

A major challenge of learning in continuous action spaces is exploration. An advantage of off-policy algorithms such as DDPG is that we can treat the problem of exploration independently from the learning algorithm. We constructed an exploration policy $\mu'$ by adding noise sampled from a noise process $\mathcal{N}$ to our actor policy

$$\mu'(s_t) = \mu(s_t \mid \theta^\mu_t) + \mathcal{N} \tag{7}$$

$\mathcal{N}$ can be chosen to suit the environment. As detailed in the supplementary materials we used an Ornstein-Uhlenbeck process (Uhlenbeck & Ornstein, 1930) to generate temporally correlated exploration for exploration efficiency in physical control problems with inertia (similar use of autocorrelated noise was introduced in (Wawrzyński, 2015)).
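A discretized Ornstein-Uhlenbeck process is short enough to sketch directly. The class below is one common discretization with a unit time step, not necessarily the authors' implementation; the class and function names are hypothetical, and the defaults `theta=0.15` and `sigma=0.2` are assumptions for this example, since this section does not state the parameters.

```python
import random


class OrnsteinUhlenbeckNoise:
    """Temporally correlated noise: x <- x + theta * (mu - x) + sigma * N(0, 1)."""

    def __init__(self, theta=0.15, sigma=0.2, mu=0.0, x0=0.0, seed=None):
        self.theta = theta  # pull strength toward the mean
        self.sigma = sigma  # scale of the random perturbation
        self.mu = mu        # long-run mean of the process
        self.state = x0
        self._rng = random.Random(seed)

    def sample(self):
        drift = self.theta * (self.mu - self.state)
        self.state += drift + self.sigma * self._rng.gauss(0.0, 1.0)
        return self.state


def exploratory_action(actor_action, noise):
    """Equation 7: add one noise sample to the deterministic actor output."""
    return actor_action + noise.sample()


noise = OrnsteinUhlenbeckNoise(seed=0)
actions = [exploratory_action(0.5, noise) for _ in range(3)]
```

Because each sample starts from the previous state, consecutive perturbations are correlated, which is the property the text relies on for systems with inertia.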


4 RESULTS


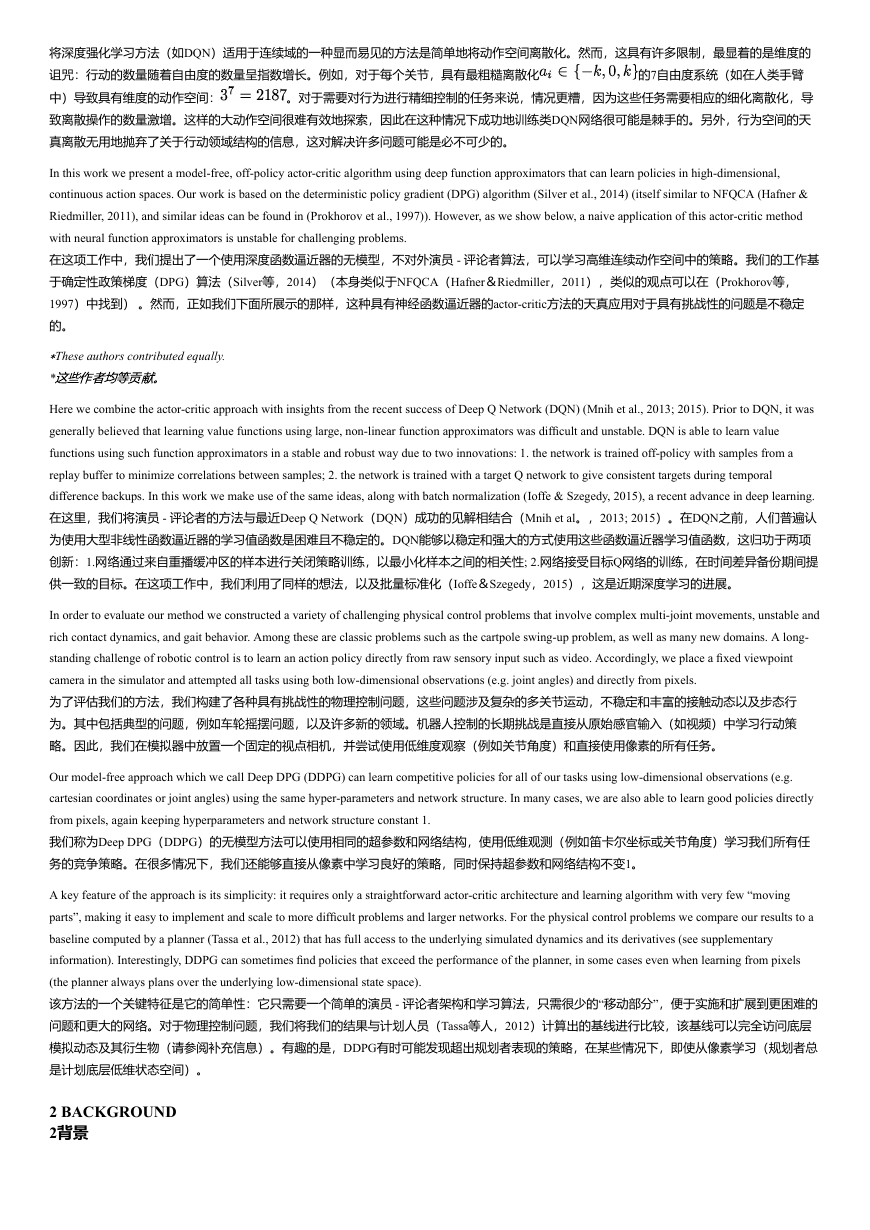

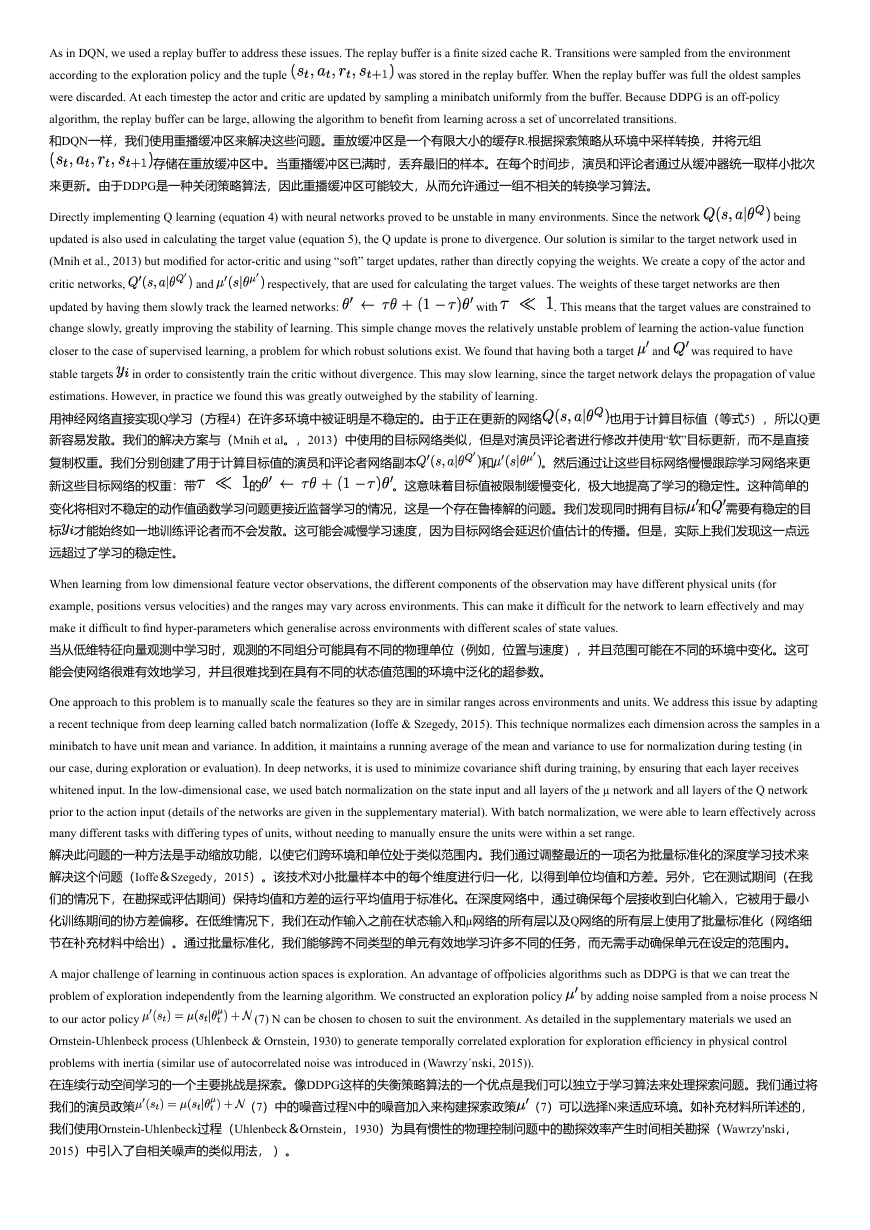

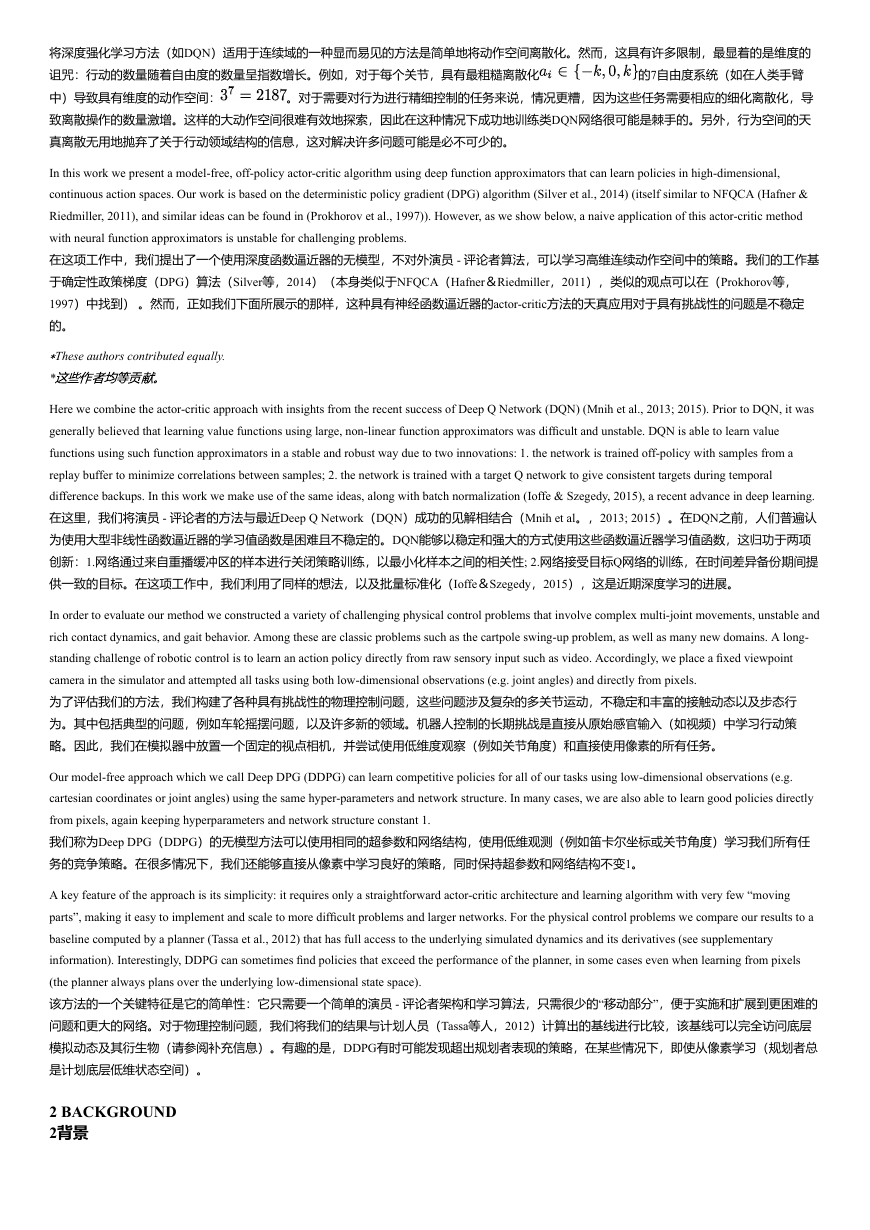

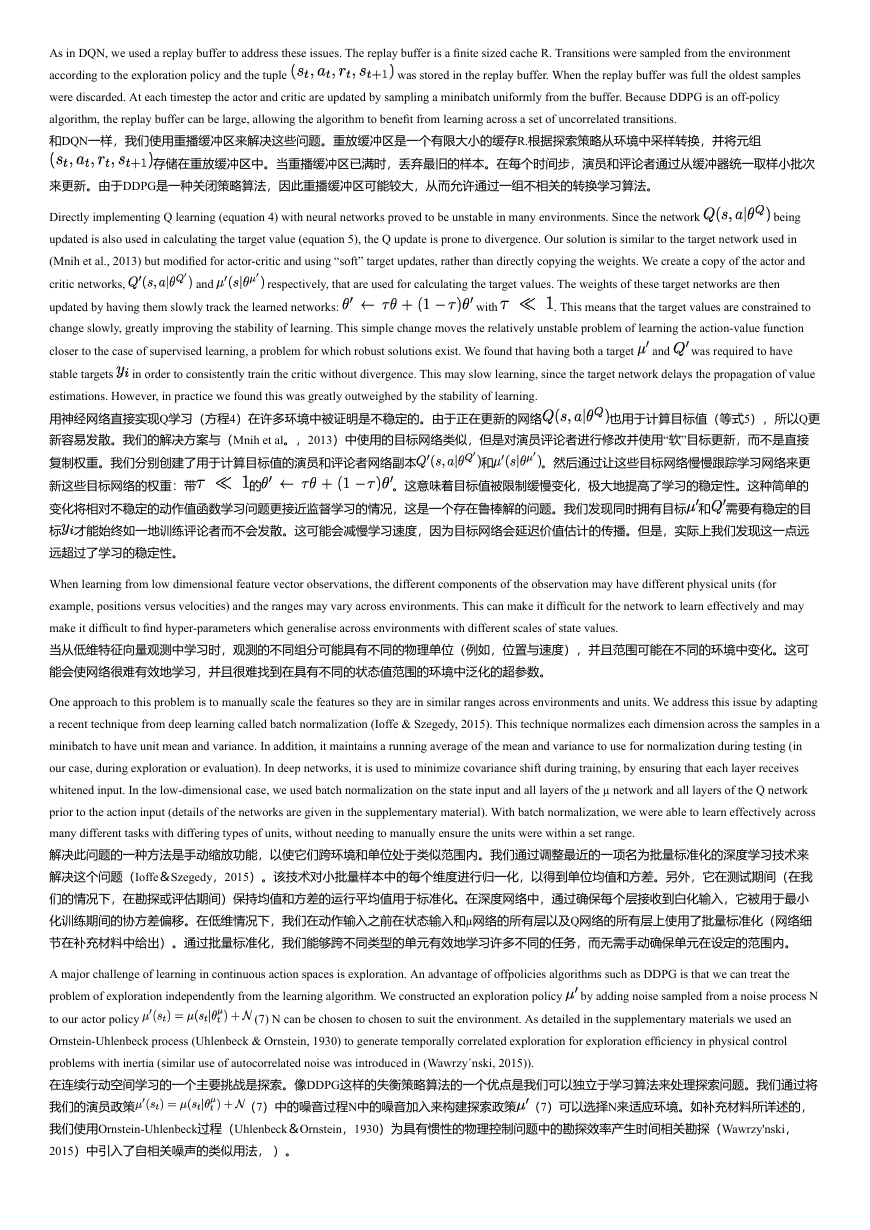

We constructed simulated physical environments of varying levels of difficulty to test our algorithm. This included classic reinforcement learning environments such as cartpole, as well as difficult, high dimensional tasks such as gripper, tasks involving contacts such as puck striking (canada) and locomotion tasks such as cheetah (Wawrzyński, 2009). In all domains but cheetah the actions were torques applied to the actuated joints. These environments were simulated using MuJoCo (Todorov et al., 2012). Figure 1 shows renderings of some of the environments used in the task (the supplementary contains details of the environments and you can view some of the learned policies at https://goo.gl/J4PIAz).

In all tasks, we ran experiments using both a low-dimensional state description (such as joint angles and positions) and high-dimensional renderings of the environment. As in DQN (Mnih et al., 2013; 2015), in order to make the problems approximately fully observable in the high dimensional environment we used action repeats. For each timestep of the agent, we step the simulation 3 timesteps, repeating the agent’s action and rendering each time. Thus the observation reported to the agent contains 9 feature maps (the RGB of each of the 3 renderings) which allows the agent to infer velocities using the differences between frames. The frames were downsampled to 64x64 pixels and the 8-bit RGB values were converted to floating point scaled to [0, 1]. See supplementary information for details of our network structure and hyperparameters.
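The construction of the 9-feature-map observation can be sketched with nested lists. The example assumes each rendering is already downsampled and is given as rows of `(R, G, B)` tuples of 8-bit integers; the function name `stack_renderings` and the 2x2 toy frames are hypothetical.

```python
def stack_renderings(frames):
    """Turn 3 RGB renderings into 9 feature maps with values scaled to [0, 1].

    `frames` is a list of renderings, each a list of rows of (R, G, B) tuples.
    The result is ordered frame by frame, channel by channel.
    """
    feature_maps = []
    for frame in frames:
        for channel in range(3):
            feature_maps.append(
                [[pixel[channel] / 255.0 for pixel in row] for row in frame]
            )
    return feature_maps


# Three hypothetical 2x2 renderings, one per repeated simulation step.
frame = [[(0, 128, 255), (0, 128, 255)], [(0, 128, 255), (0, 128, 255)]]
observation = stack_renderings([frame, frame, frame])
print(len(observation))  # 9
```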

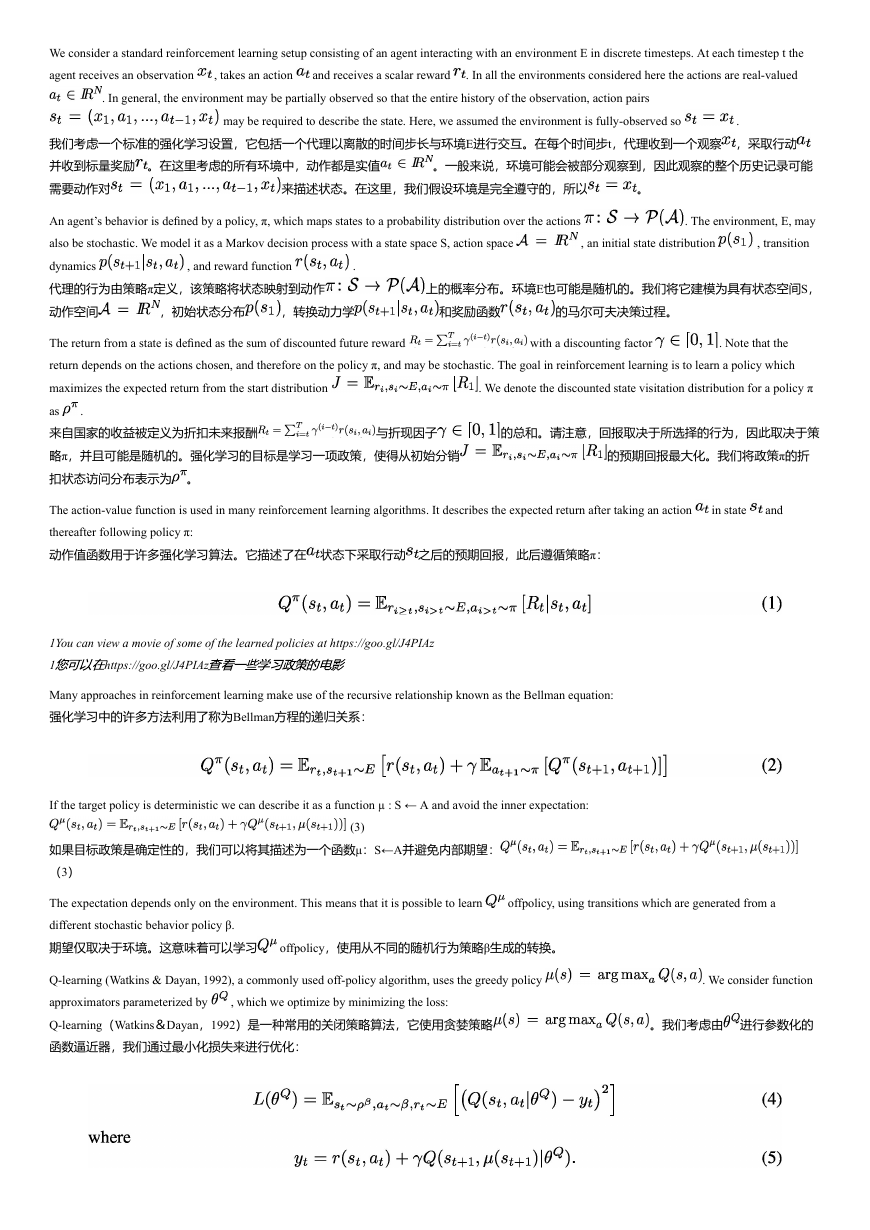

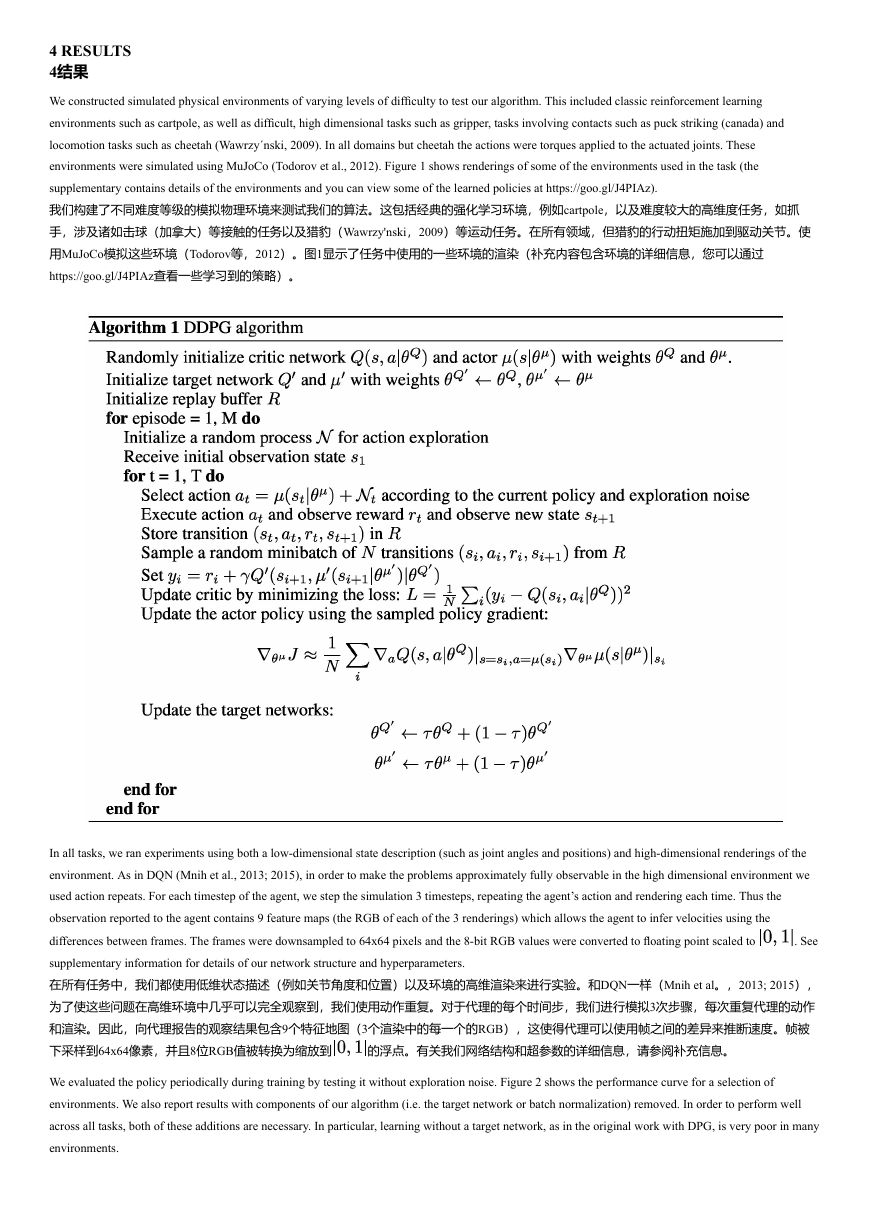

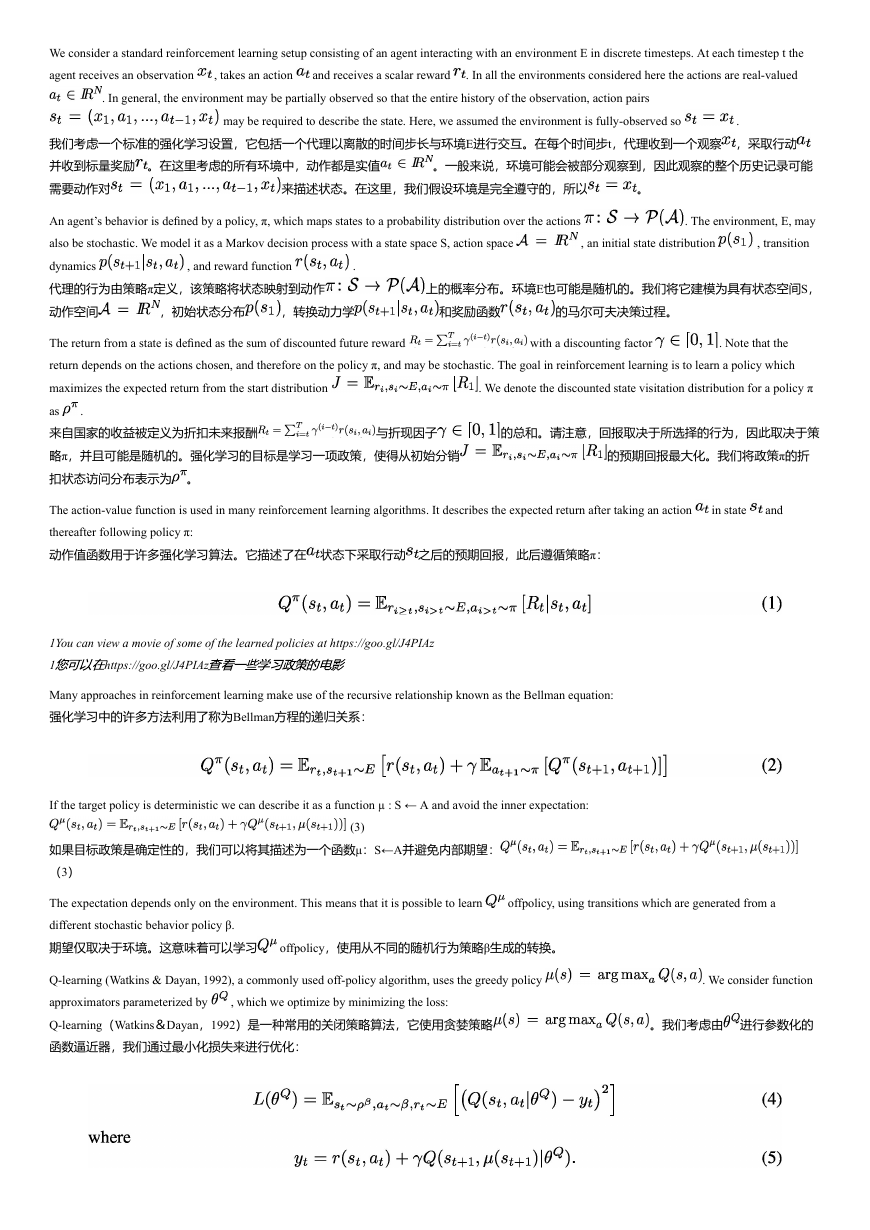

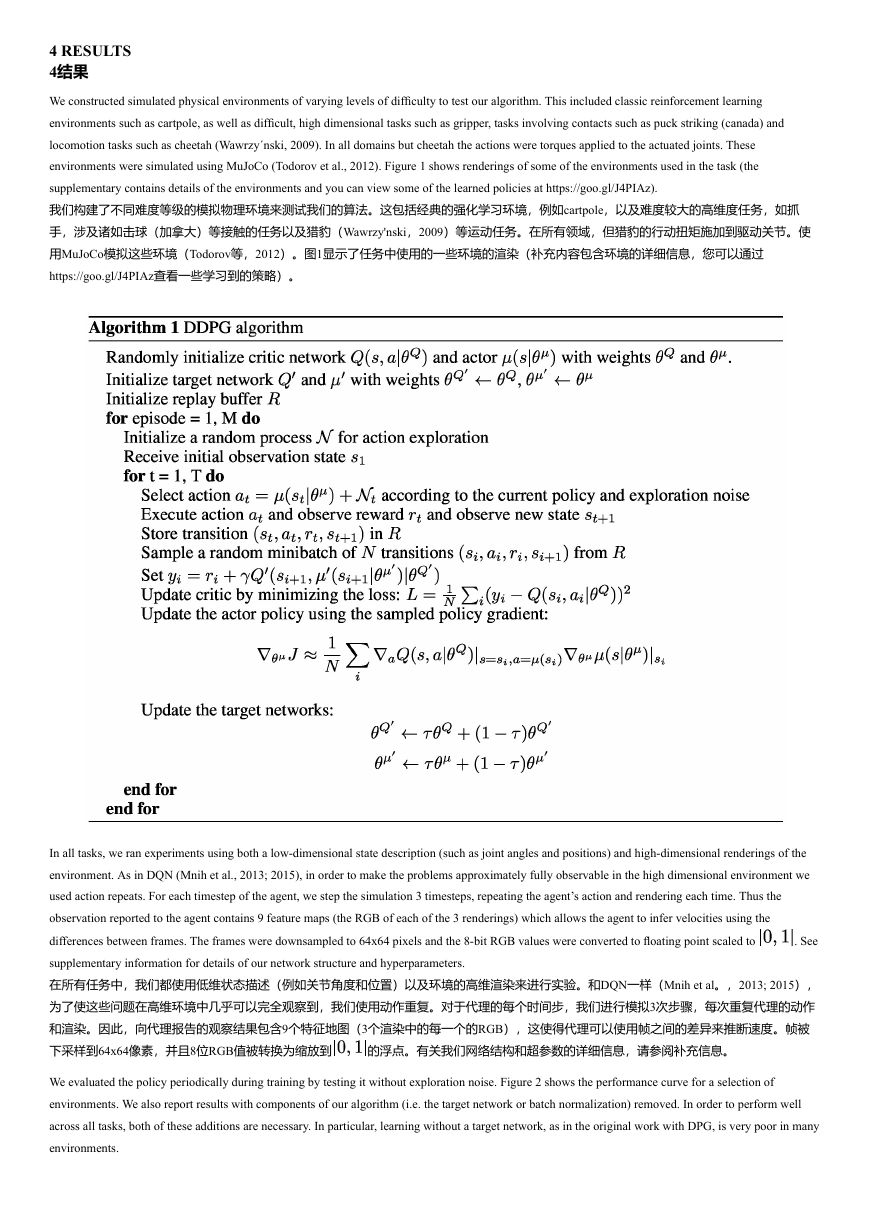

We evaluated the policy periodically during training by testing it without exploration noise. Figure 2 shows the performance curve for a selection of environments. We also report results with components of our algorithm (i.e. the target network or batch normalization) removed. In order to perform well across all tasks, both of these additions are necessary. In particular, learning without a target network, as in the original work with DPG, is very poor in many environments.

Surprisingly, in some simpler tasks, learning policies from pixels is just as fast as learning using the low-dimensional state descriptor. This may be due to the action repeats making the problem simpler. It may also be that the convolutional layers provide an easily separable representation of state space, which is straightforward for the higher layers to learn on quickly.

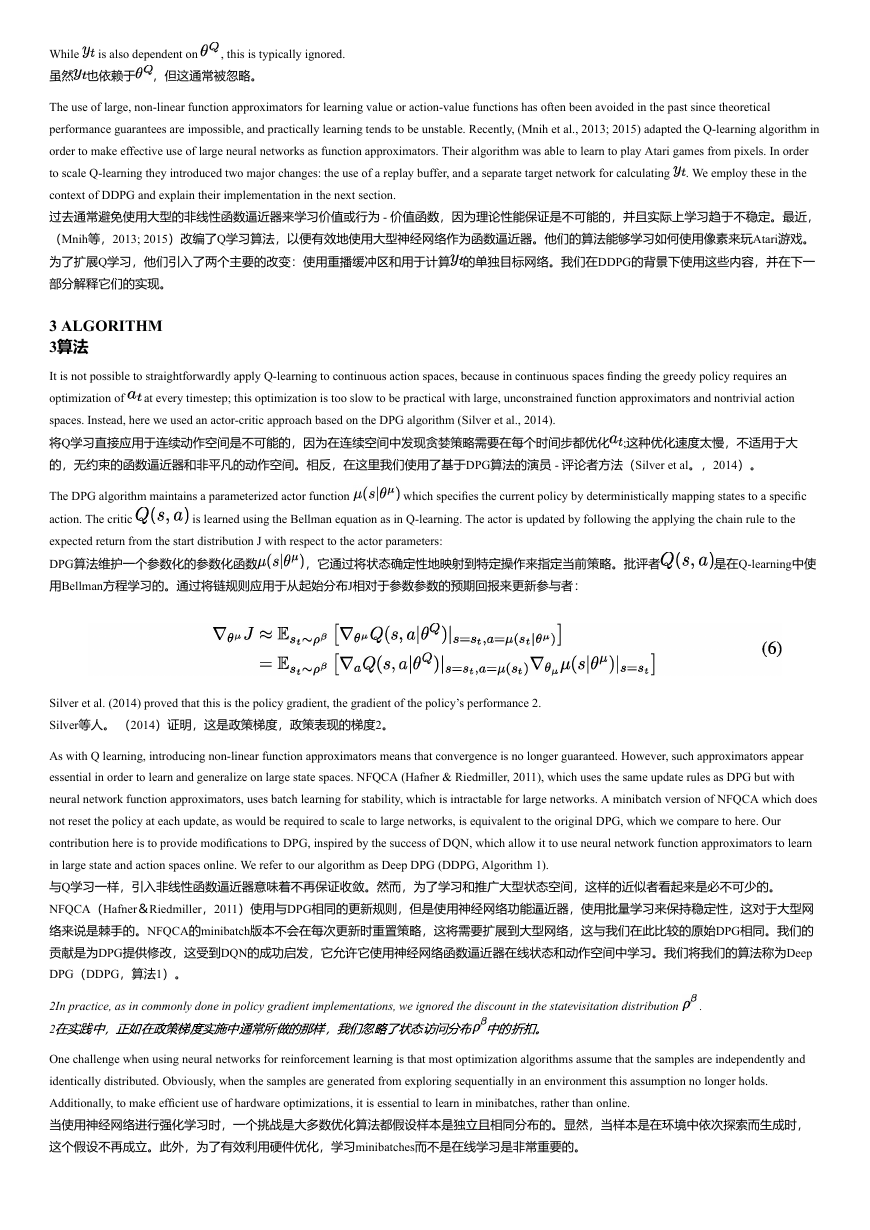

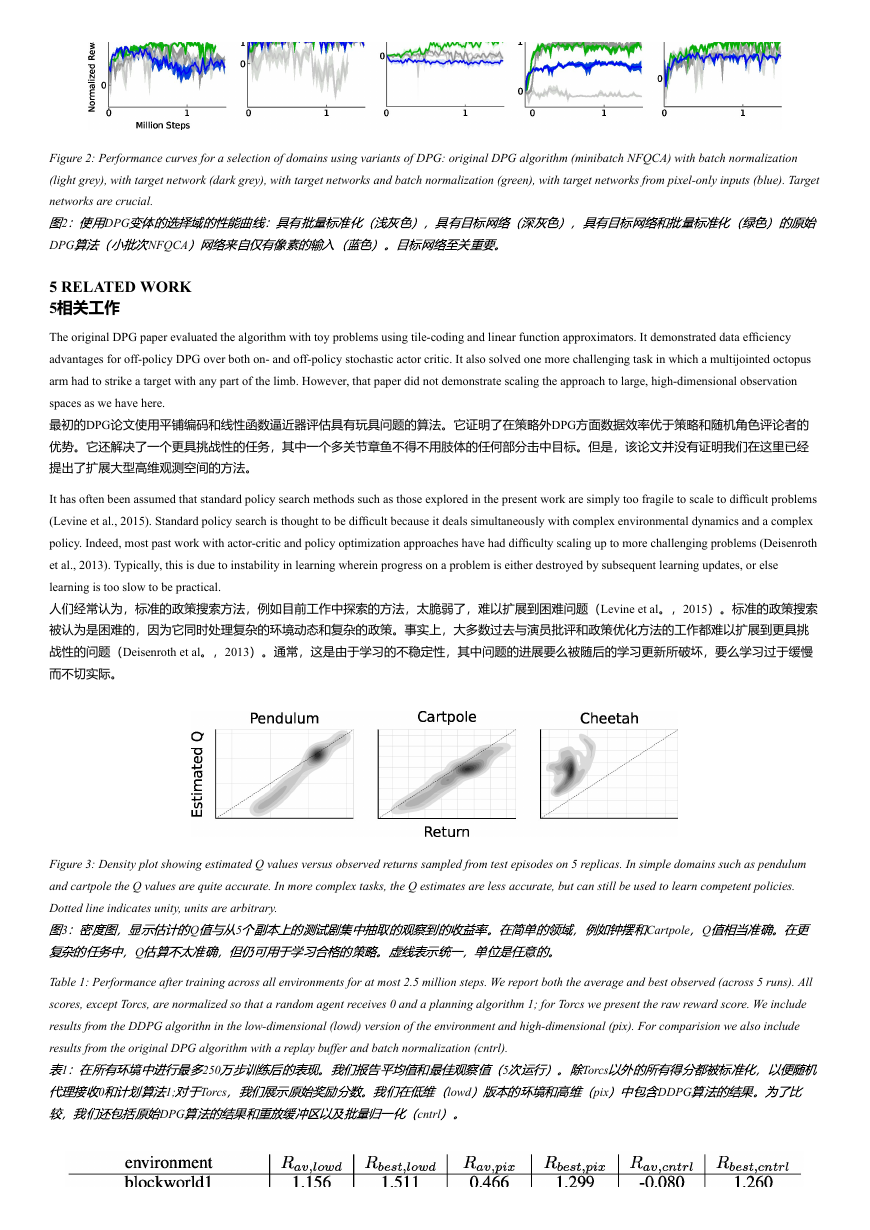

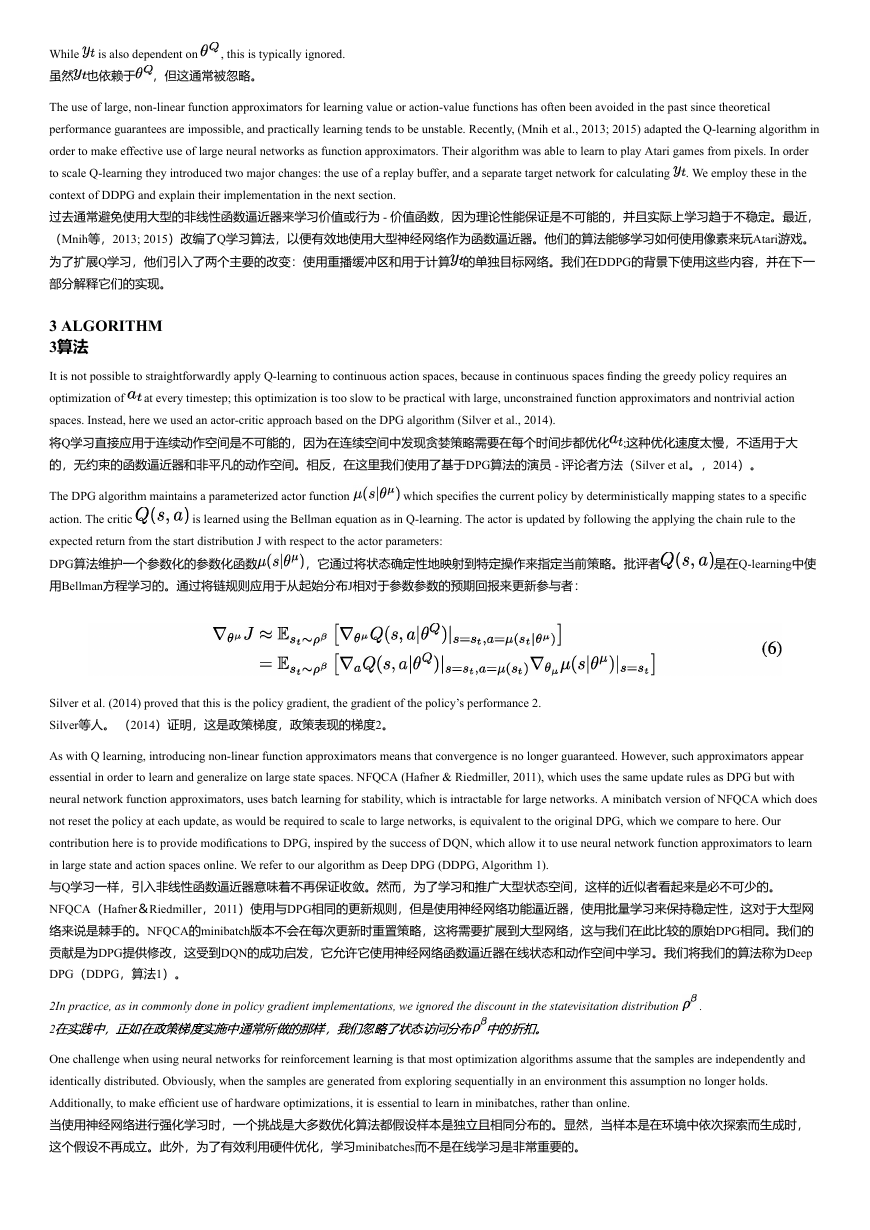

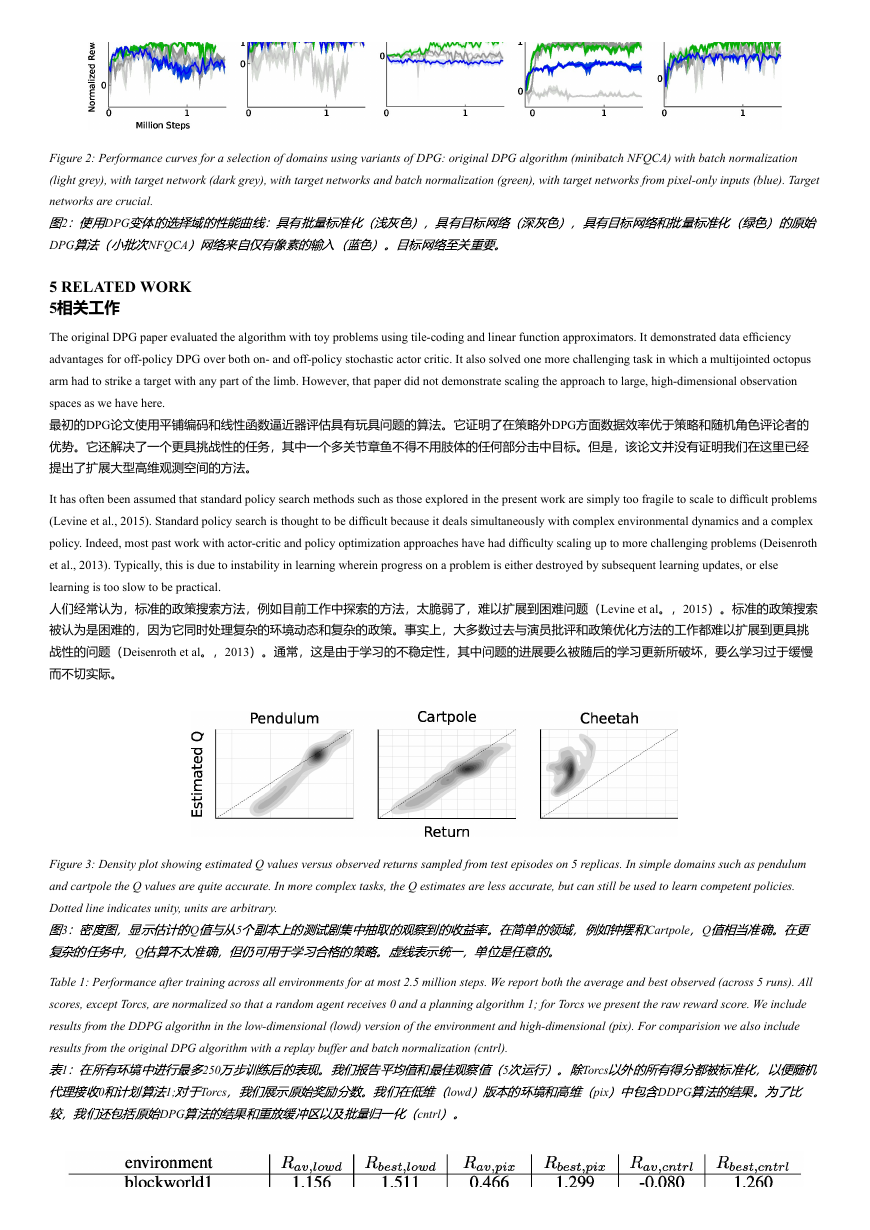

Table 1 summarizes DDPG’s performance across all of the environments (results are averaged over 5 replicas). We normalized the scores using two baselines. The first baseline is the mean return from a naive policy which samples actions from a uniform distribution over the valid action space. The second baseline is iLQG (Todorov & Li, 2005), a planning based solver with full access to the underlying physical model and its derivatives. We normalize scores so that the naive policy has a mean score of 0 and iLQG has a mean score of 1. DDPG is able to learn good policies on many of the tasks, and in many cases some of the replicas learn policies which are superior to those found by iLQG, even when learning directly from pixels.
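The normalization described above is an affine rescaling between the two baselines. The sketch below assumes that linear form, which is the simplest mapping that sends the naive policy to 0 and iLQG to 1; the function name and the raw return values are hypothetical.

```python
def normalized_score(raw_return, naive_return, planner_return):
    """Rescale a raw return so the naive policy maps to 0 and the planner to 1."""
    return (raw_return - naive_return) / (planner_return - naive_return)


# Hypothetical raw returns: naive policy 10, planner 50.
print(normalized_score(10.0, 10.0, 50.0))  # 0.0
print(normalized_score(50.0, 10.0, 50.0))  # 1.0
print(normalized_score(70.0, 10.0, 50.0))  # 1.5, a policy that beats the planner
```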

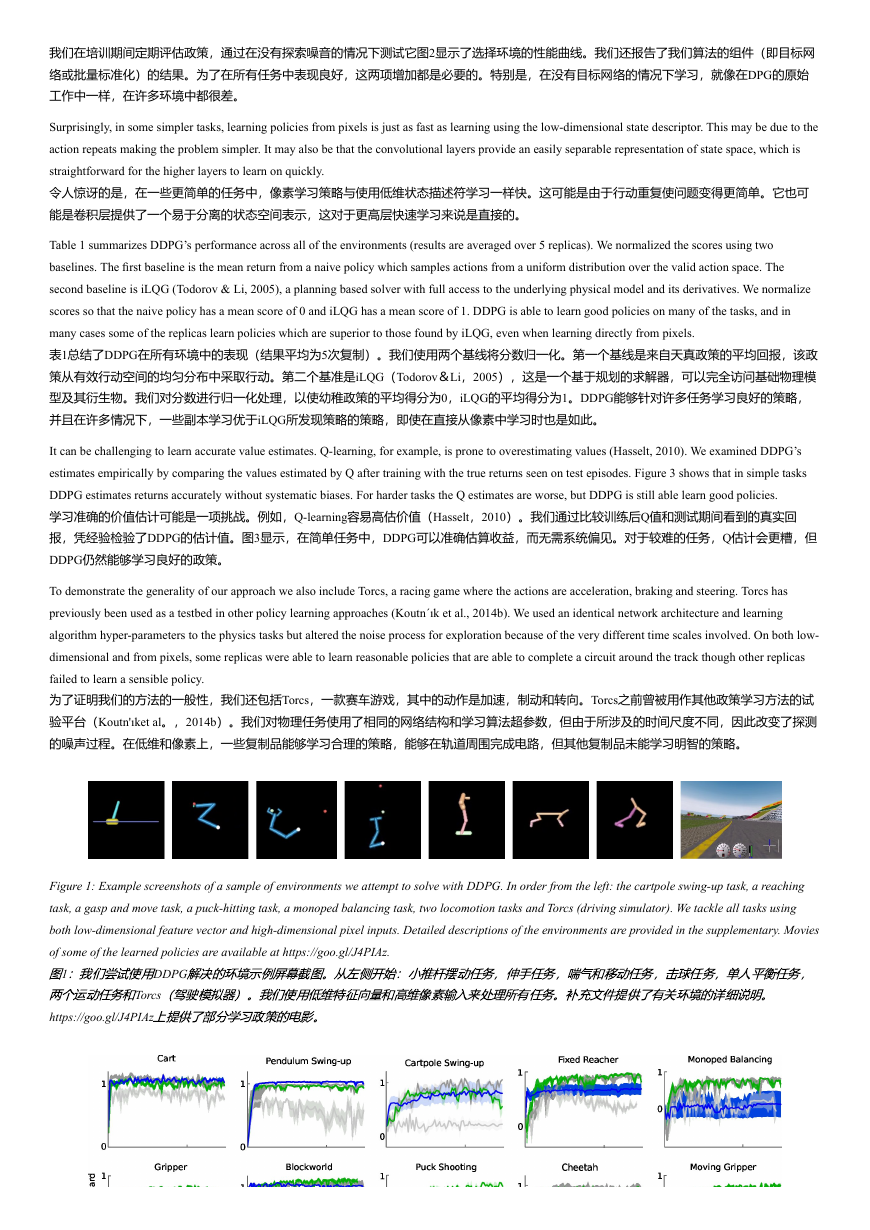

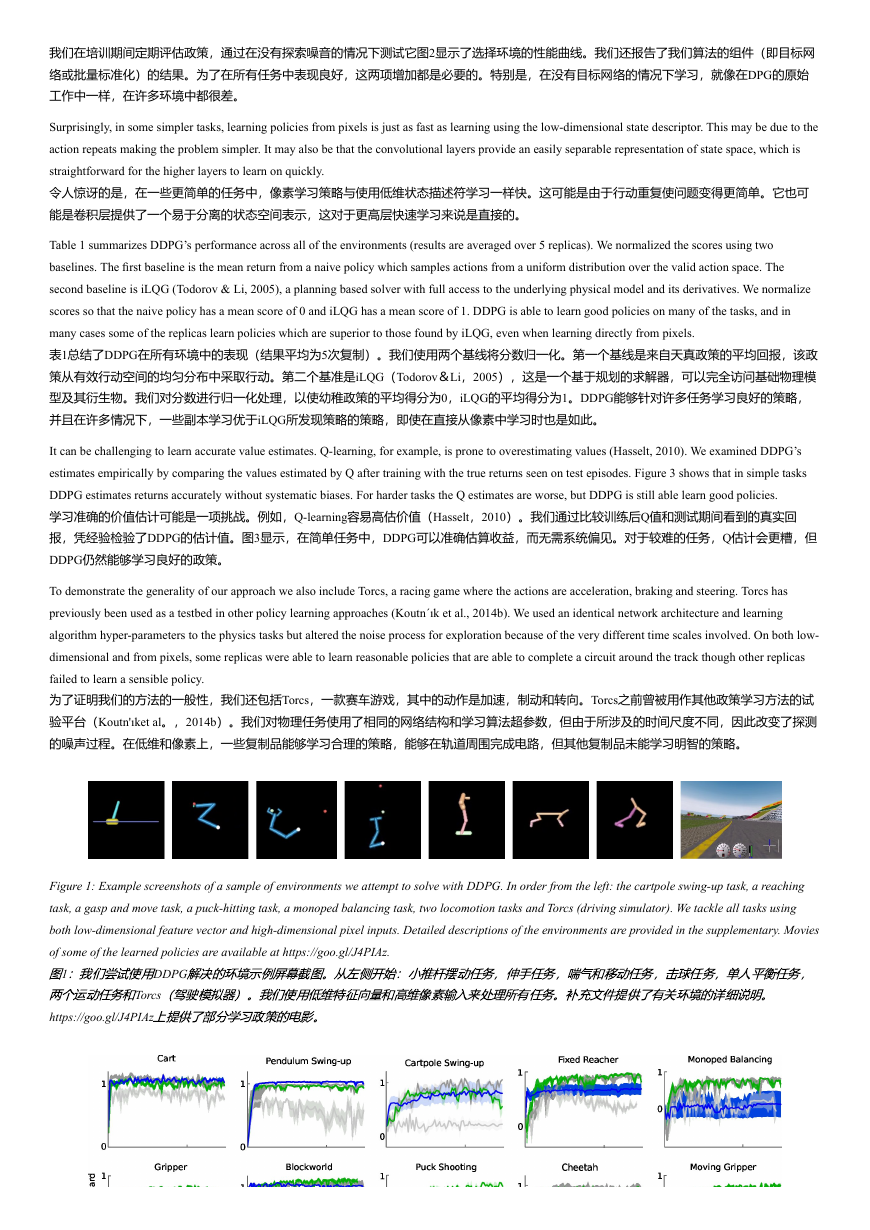

It can be challenging to learn accurate value estimates. Q-learning, for example, is prone to overestimating values (Hasselt, 2010). We examined DDPG’s estimates empirically by comparing the values estimated by Q after training with the true returns seen on test episodes. Figure 3 shows that in simple tasks DDPG estimates returns accurately without systematic biases. For harder tasks the Q estimates are worse, but DDPG is still able to learn good policies.

To demonstrate the generality of our approach we also include Torcs, a racing game where the actions are acceleration, braking and steering. Torcs has previously been used as a testbed in other policy learning approaches (Koutník et al., 2014b). We used an identical network architecture and learning algorithm hyper-parameters to the physics tasks but altered the noise process for exploration because of the very different time scales involved. Using both low-dimensional and pixel inputs, some replicas were able to learn reasonable policies that are able to complete a circuit around the track, though other replicas failed to learn a sensible policy.

Figure 1: Example screenshots of a sample of environments we attempt to solve with DDPG. In order from the left: the cartpole swing-up task, a reaching task, a grasp and move task, a puck-hitting task, a monoped balancing task, two locomotion tasks and Torcs (driving simulator). We tackle all tasks using both low-dimensional feature vector and high-dimensional pixel inputs. Detailed descriptions of the environments are provided in the supplementary. Movies of some of the learned policies are available at https://goo.gl/J4PIAz.

Figure 2: Performance curves for a selection of domains using variants of DPG: original DPG algorithm (minibatch NFQCA) with batch normalization (light grey), with target network (dark grey), with target networks and batch normalization (green), with target networks from pixel-only inputs (blue). Target networks are crucial.

5 RELATED WORK

The original DPG paper evaluated the algorithm with toy problems using tile-coding and linear function approximators. It demonstrated data efficiency advantages for off-policy DPG over both on- and off-policy stochastic actor critic. It also solved one more challenging task in which a multijointed octopus arm had to strike a target with any part of the limb. However, that paper did not demonstrate scaling the approach to large, high-dimensional observation spaces as we have here.

It has often been assumed that standard policy search methods such as those explored in the present work are simply too fragile to scale to difficult problems (Levine et al., 2015). Standard policy search is thought to be difficult because it deals simultaneously with complex environmental dynamics and a complex policy. Indeed, most past work with actor-critic and policy optimization approaches has had difficulty scaling up to more challenging problems (Deisenroth et al., 2013). Typically, this is due to instability in learning wherein progress on a problem is either destroyed by subsequent learning updates, or else learning is too slow to be practical.

Figure 3: Density plot showing estimated Q values versus observed returns sampled from test episodes on 5 replicas. In simple domains such as pendulum and cartpole the Q values are quite accurate. In more complex tasks, the Q estimates are less accurate, but can still be used to learn competent policies. Dotted line indicates unity, units are arbitrary.

Table 1: Performance after training across all environments for at most 2.5 million steps. We report both the average and best observed (across 5 runs). All scores, except Torcs, are normalized so that a random agent receives 0 and a planning algorithm 1; for Torcs we present the raw reward score. We include results from the DDPG algorithm in the low-dimensional (lowd) version of the environment and high-dimensional (pix). For comparison we also include results from the original DPG algorithm with a replay buffer and batch normalization (cntrl).
