Sentiment Analysis by Capsules∗

Yequan Wang1, Aixin Sun2, Jialong Han3, Ying Liu4, Xiaoyan Zhu1

1State Key Laboratory on Intelligent Technology and Systems

1Tsinghua National Laboratory for Information Science and Technology

1Department of Computer Science and Technology, Tsinghua University, Beijing, China

2School of Computer Science and Engineering, Nanyang Technological University, Singapore

3Tencent AI Lab, Shenzhen, China

4School of Engineering, Cardiff University, UK

tshwangyequan@gmail.com; axsun@ntu.edu.sg; jialonghan@gmail.com; liuy81@cardiff.ac.uk; zxy-dcs@tsinghua.edu.cn

ABSTRACT

In this paper, we propose RNN-Capsule, a capsule model based on Recurrent Neural Network (RNN) for sentiment analysis. For a given problem, one capsule is built for each sentiment category, e.g., ‘positive’ and ‘negative’. Each capsule has an attribute, a state, and three modules: a representation module, a probability module, and a reconstruction module. The attribute of a capsule is its assigned sentiment category. Given an instance encoded in hidden vectors by a typical RNN, the representation module builds the capsule representation using the attention mechanism. Based on the capsule representation, the probability module computes the capsule’s state probability. A capsule’s state is active if its state probability is the largest among all capsules for the given instance, and inactive otherwise. On two benchmark datasets (i.e., Movie Review and Stanford Sentiment Treebank) and one proprietary dataset (i.e., Hospital Feedback), we show that RNN-Capsule achieves state-of-the-art performance on sentiment classification. More importantly, without using any linguistic knowledge, RNN-Capsule is capable of outputting words with sentiment tendencies reflecting the capsules’ attributes. These words reflect the domain specificity of the dataset well.

ACM Reference Format:

Yequan Wang, Aixin Sun, Jialong Han, Ying Liu, and Xiaoyan Zhu. 2018. Sentiment Analysis by Capsules. In WWW 2018: The 2018 Web Conference, April 23–27, 2018, Lyon, France. ACM, New York, NY, USA, 10 pages. https://doi.org/10.1145/3178876.3186015

1 INTRODUCTION

Sentiment analysis, also known as opinion mining, is the field of study that analyzes people’s sentiments, opinions, evaluations, attitudes, and emotions from written language [20, 26]. Many neural network models have achieved good performance, e.g., Recursive Auto Encoder [33, 34], Recurrent Neural Network (RNN) [21, 35], and Convolutional Neural Network (CNN) [13, 14, 18].

∗This work was done when Yequan was a visiting Ph.D. student at the School of Computer Science and Engineering, Nanyang Technological University, Singapore.

This paper is published under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. Authors reserve their rights to disseminate the work on their personal and corporate Web sites with the appropriate attribution.

WWW 2018, April 23–27, 2018, Lyon, France

© 2018 IW3C2 (International World Wide Web Conference Committee), published under Creative Commons CC BY 4.0 License.

ACM ISBN 978-1-4503-5639-8/18/04.

https://doi.org/10.1145/3178876.3186015

Despite the great success of recent neural network models, they have some limitations. First, existing models focus on, and rely heavily on, the quality of instance representations. An instance here can be a sentence, a paragraph, or a document. Representing sentiment with a single vector is rather limiting because opinions are subtle and complex. The capsule structure in our work gives the model more capacity to model sentiments. Second, linguistic knowledge such as sentiment lexicons, negation words (e.g., no, not, never), and intensity words (e.g., very, extremely) needs to be carefully incorporated into these models for them to reach their best prediction accuracy. However, linguistic knowledge requires significant effort to develop. Further, a developed sentiment lexicon may not be applicable to some domain-specific datasets. For example, when patients give feedback on hospital services, words like ‘quick’ and ‘caring’ are both considered strongly positive. These words are unlikely to be considered strongly positive in movie reviews. Our capsule model does not need any linguistic knowledge, and is able to output words with sentiment tendencies to explain the sentiments.

In this paper, we make the first attempt to perform sentiment analysis by capsules. A capsule is a group of neurons whose outputs jointly carry richer information than a single neuron [30]. We design each single capsule1 to contain an attribute, a state, and three modules (i.e., representation module, probability module, and reconstruction module).

• The attribute of a capsule reflects its dedicated sentiment category, which is pre-assigned when we build the capsule. The number of capsules built equals the number of sentiment categories in a given problem. For example, a Positive Capsule and a Negative Capsule are built for a problem with two sentiment categories.

• The state of a capsule, i.e., ‘active’ or ‘inactive’, is determined by the probability modules of all capsules in the model. A capsule’s state is ‘active’ if the output of its probability module is the largest among all capsules.

• Regarding the three modules, the representation module uses the attention mechanism to build the capsule representation; the probability module uses the capsule representation to predict the capsule’s state probability; and the reconstruction module is used to rebuild the representation of the input instance. The input instance of a capsule model is a sequence (e.g., a sentence or a paragraph). In this work, the input instance representation of a capsule is computed through an RNN.

1This work was done before the publication of [30]. The capsules in this work are designed differently from those in [30].

Track: Web Content Analysis, Semantics and Knowledge. WWW 2018, April 23-27, 2018, Lyon, France

In the proposed RNN-Capsule model, each capsule is capable of not only predicting the probability of its assigned sentiment, but also reconstructing the input instance representation. Both capabilities are considered in our training objectives.

Specifically, for each sentiment category, we build a capsule whose attribute is that sentiment category. Given an input instance, we obtain its instance representation from the hidden vectors of an RNN. Taking the hidden vectors as input, each capsule outputs (i) the state probability through its probability module, and (ii) the reconstruction representation through its reconstruction module. During training, one objective is to maximize the state probability of the capsule corresponding to the ground-truth sentiment, and to minimize the state probabilities of the other capsule(s). The other objective is to minimize the distance between the input instance representation and the reconstruction representation of the capsule corresponding to the ground truth, and to maximize such distances for the other capsule(s). In testing, a capsule’s state becomes ‘active’ if its state probability is the largest among all capsules for a given test instance. The states of all other capsule(s) will be ‘inactive’. The attribute of the active capsule is selected as the predicted sentiment category of the test instance.

Compared with most existing neural network models for sentiment analysis, the RNN-Capsule model does not rely heavily on the quality of the input instance representation. In particular, the RNN layer in our model can be realized through the widely used Long Short-Term Memory (LSTM) model, the Gated Recurrent Unit (GRU) model, or their variants. RNN-Capsule does not require any linguistic knowledge. Instead, each capsule is capable of outputting words with sentiment tendencies reflecting its assigned sentiment category. Recall that the representation module of a capsule uses the attention mechanism to build the capsule representation. We observe through experiments that the words attended to by each capsule reflect the capsule’s sentiment category well. These words reflect the domain specificity of the dataset, even though they may not be included in sentiment lexicons. For instance, our model is able to identify ‘professional’, ‘quick’, and ‘caring’ as strongly positive words in patient feedback to hospitals. We also observe that the attended words include not only high-frequency words, but also medium- and low-frequency words, and even typos, which are common in social media. These domain-dependent sentiment words could be extremely useful for decision makers to identify the positive and negative aspects of their services or products. The main contributions are as follows:

• To the best of our knowledge, RNN-Capsule is the first attempt to use a capsule model for sentiment analysis. A capsule is easy to build with input instance representations taken from an RNN. Each capsule contains an attribute, a state, and three simple modules (representation, probability, and reconstruction).

• We demonstrate that RNN-Capsule does not require any linguistic knowledge to achieve state-of-the-art performance. Further, the capsule model is able to attend to opinion words that reflect domain knowledge of the dataset.

• We conduct experiments on two benchmark datasets and one proprietary dataset to compare our capsule model with strong baselines. Our experimental results show that the capsule model is competitive and robust.

2 RELATED WORK

Early methods for sentiment analysis were mostly based on manually defined rules. With the recent development of deep learning techniques, neural network based approaches have become the mainstream. On this basis, many researchers incorporate linguistic knowledge for better performance in sentiment analysis.

Traditional Sentiment Analysis. Many methods for sentiment analysis focus on feature engineering. The carefully designed features are then fed to machine learning methods in a supervised learning setting. The performance of sentiment classification therefore depends heavily on the choice of feature representation of text. The system in [24] implements a number of hand-crafted features, and was the top performer in the SemEval 2013 Twitter Sentiment Classification Track. Beyond supervised learning, Turney [38] introduces an unsupervised approach that uses sentiment words/phrases extracted from syntactic patterns to determine document polarity. Goldberg and Zhu [6] propose a semi-supervised approach in which unlabeled reviews are utilized in a graph-based method.

In terms of features, different kinds of representations have been used in sentiment analysis, including bag-of-words representations, word co-occurrences, and syntactic contexts [26]. Despite its effectiveness, feature engineering is labor-intensive and is unable to extract and organize the discriminative information from data [7].

Sentiment Analysis by Neural Networks. Since the proposal of a simple and effective approach to learning distributed representations of words and phrases [23], neural network based models have shown great success in many natural language processing (NLP) tasks. Many models have been applied to sentiment analysis, including Recursive Auto Encoder [4, 29, 33], Recursive Neural Tensor Network [34], Recurrent Neural Network [22, 36], LSTM [9], Tree-LSTMs [35], and GRU [3].

Recursive autoencoder neural networks build the representation of a sentence from its subphrases recursively [4, 29, 33]. Such recursive models usually depend on a tree structure of the input text, and all subphrases need to be annotated to obtain competitive results. By utilizing the syntax structures of sentences, tree-based LSTMs have proved effective for many NLP tasks, including sentiment analysis [35]. However, such models may suffer from syntax parsing errors, which are common in resource-lacking languages. Sequence models like CNNs do not require tree-structured data and are widely adopted for sentiment classification [13, 14]. LSTM is also commonly used for learning sentence-level representations due to its capability of modeling the prefix or suffix context as well as tree-structured data [9, 35]. Despite the effectiveness of these methods, it is still challenging to discriminate different sentiment polarities at a fine-grained level.

In [8], the proposed neural model improves coherence by exploiting the distribution of word co-occurrences through neural word embeddings. The list of top representative words for each inferred aspect reflects that aspect, leading to more meaningful results. The approach in [19] combines two modular components, a generator and an encoder, to extract pieces of input text as justifications. The extracted short and coherent pieces of text alone are sufficient for the prediction, and can be used to explain it.


Linguistic Knowledge. Linguistic knowledge has been carefully incorporated into models to realize their best potential in terms of prediction accuracy. Classical linguistic knowledge or sentiment resources include sentiment lexicons, negators, and intensifiers. Sentiment lexicons are valuable for rule-based or lexicon-based models [10]. There are also studies on the automatic construction of sentiment lexicons from social data [39] or from multiple languages [2]. Recently, a context-sensitive lexicon-based method was proposed based on a simple weighted-sum model [37]. It uses an RNN to learn the sentiment strength, intensification, and negation of lexicon sentiments in composing the sentiment value of sentences. Aspect information, negation words, sentiment intensities of phrases, parse trees, and combinations of them have been incorporated into models to improve their performance. Attention-based LSTMs for aspect-level sentiment classification were proposed in [40]; the key idea is to add aspect information to the attention mechanism. A linear regression model was proposed in [41] to predict the valence value of content words; the valence degree of the text can be changed by the effect of intensity words. In [28], sentiment lexicons, negation words, and intensity words are all incorporated into one model for sentence-level sentiment analysis.

However, linguistic knowledge requires significant human effort to develop, and a developed sentiment lexicon may not be applicable to some domain-specific datasets. These issues limit the application of models based on linguistic knowledge.

3 RNN-CAPSULE MODEL

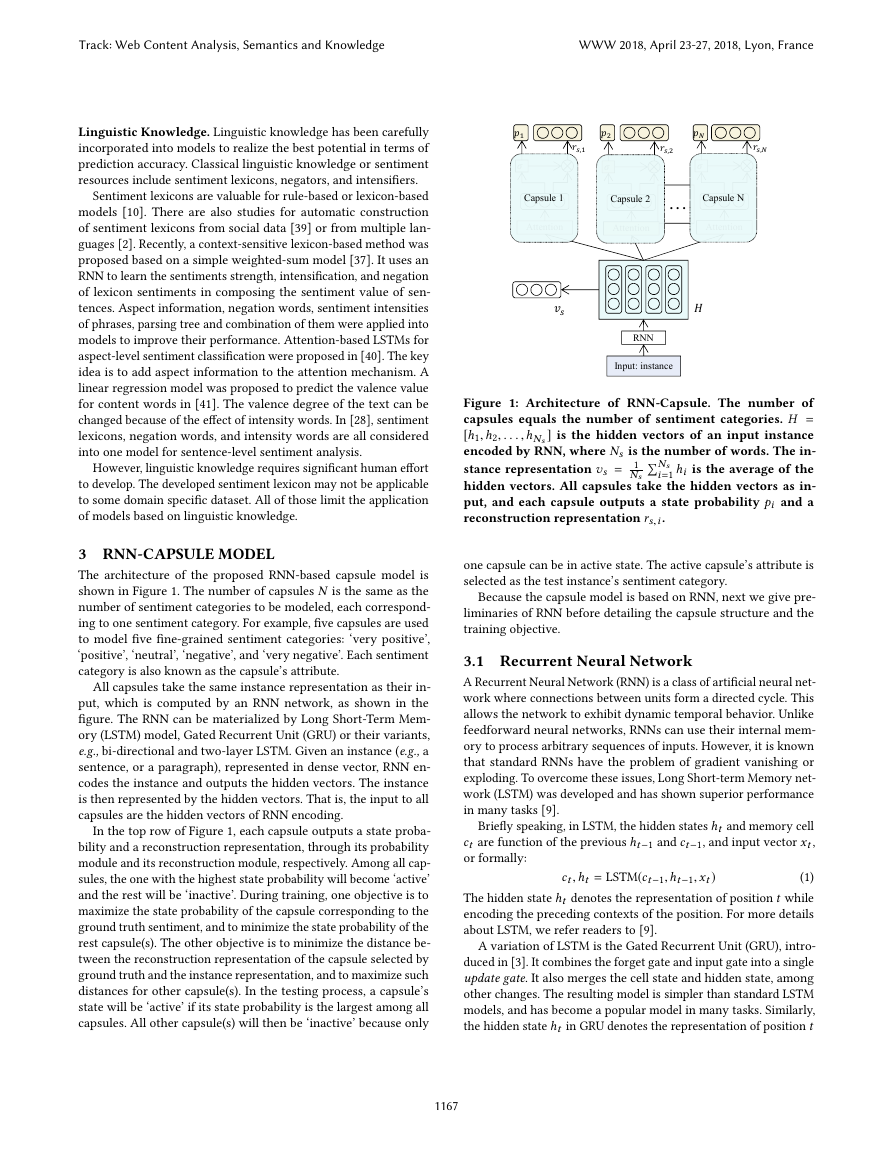

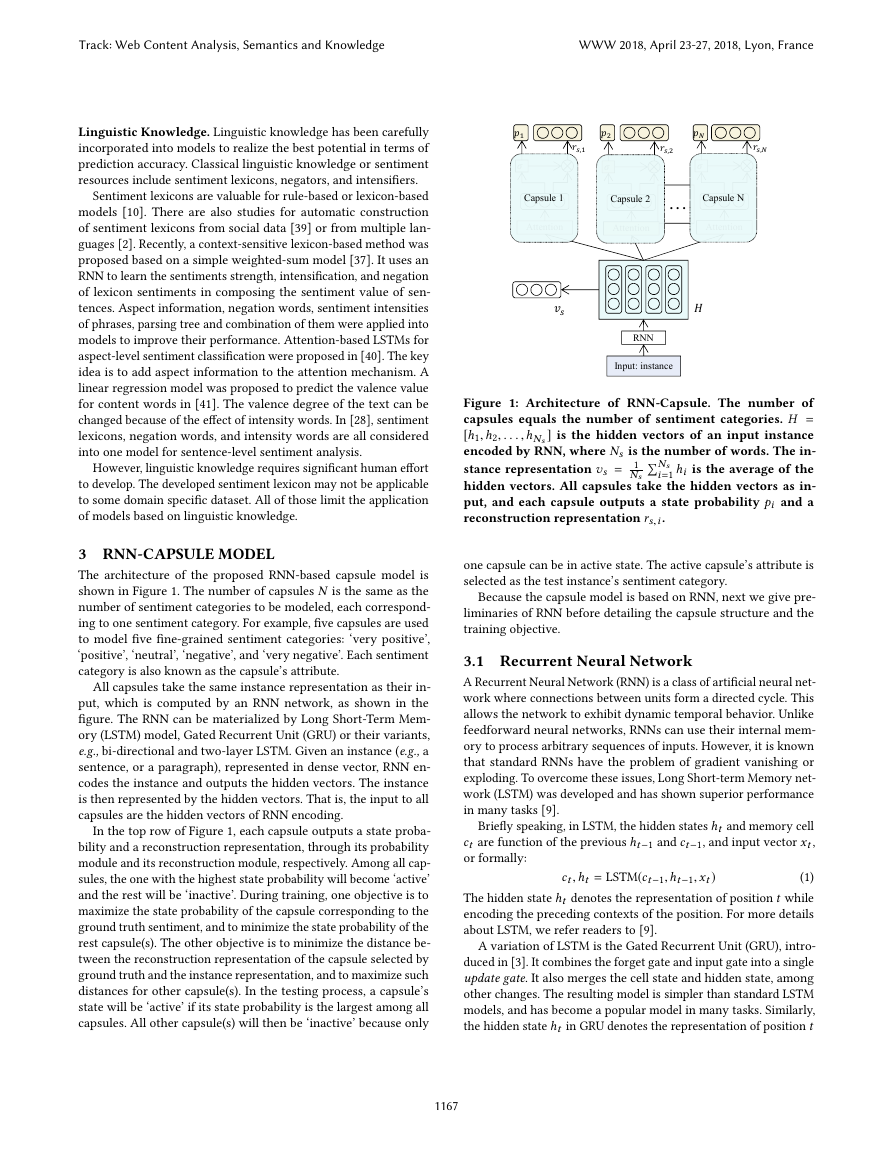

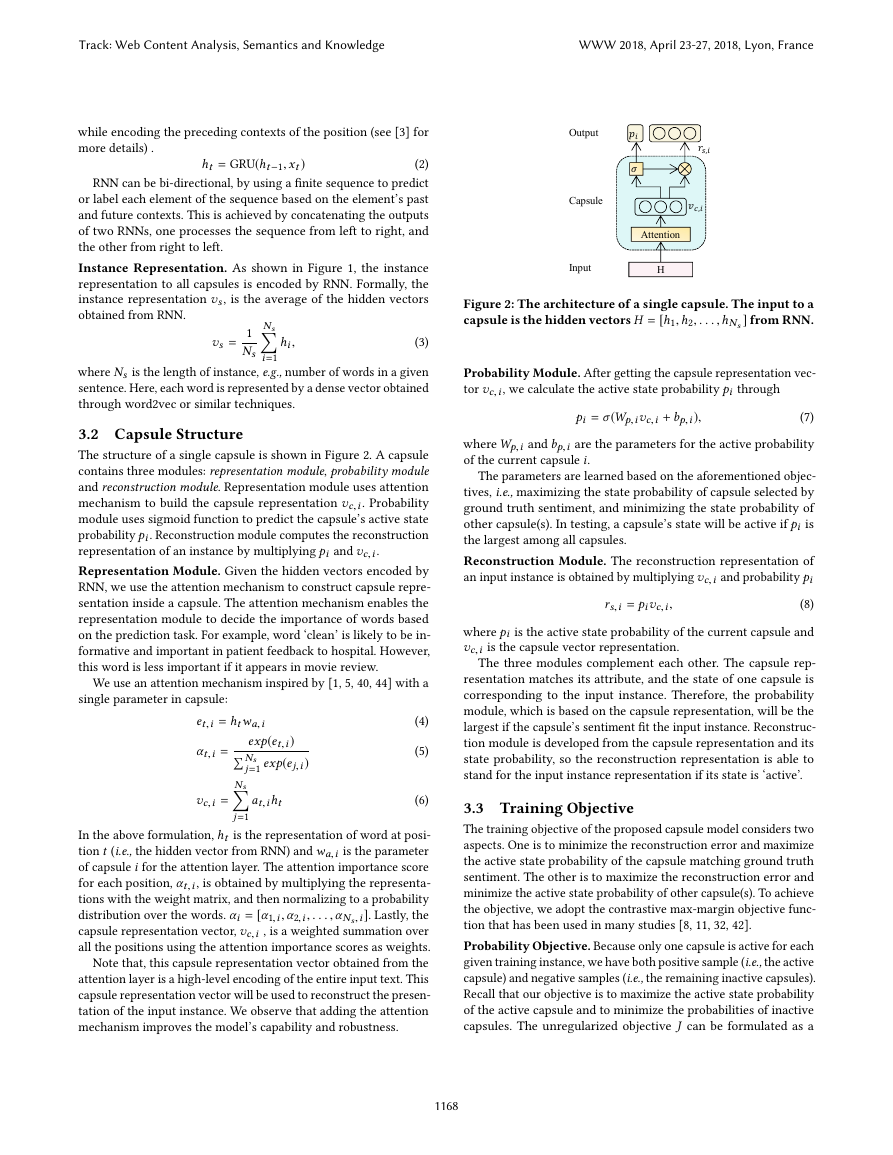

The architecture of the proposed RNN-based capsule model is shown in Figure 1. The number of capsules $N$ equals the number of sentiment categories to be modeled, with each capsule corresponding to one sentiment category. For example, five capsules are used to model five fine-grained sentiment categories: ‘very positive’, ‘positive’, ‘neutral’, ‘negative’, and ‘very negative’. The sentiment category assigned to a capsule is also known as the capsule’s attribute.

All capsules take the same instance representation as their input, which is computed by an RNN, as shown in the figure. The RNN can be materialized by the Long Short-Term Memory (LSTM) model, the Gated Recurrent Unit (GRU), or their variants, e.g., bi-directional and two-layer LSTM. Given an instance (e.g., a sentence or a paragraph) represented as dense vectors, the RNN encodes the instance and outputs hidden vectors. The instance is then represented by these hidden vectors. That is, all capsules take the hidden vectors of the RNN encoding as input.

In the top row of Figure 1, each capsule outputs a state probability and a reconstruction representation through its probability module and its reconstruction module, respectively. Among all capsules, the one with the highest state probability becomes ‘active’ and the rest become ‘inactive’. During training, one objective is to maximize the state probability of the capsule corresponding to the ground-truth sentiment, and to minimize the state probabilities of the remaining capsule(s). The other objective is to minimize the distance between the instance representation and the reconstruction representation of the capsule selected by the ground truth, and to maximize such distances for the other capsule(s). In the testing process, a capsule’s state will be ‘active’ if its state probability is the largest among all capsules. All other capsule(s) will then be ‘inactive’, because only one capsule can be in the active state. The active capsule’s attribute is selected as the test instance’s sentiment category.

Figure 1: Architecture of RNN-Capsule. The number of capsules equals the number of sentiment categories. $H = [h_1, h_2, \ldots, h_{N_s}]$ contains the hidden vectors of an input instance encoded by the RNN, where $N_s$ is the number of words. The instance representation $v_s = \frac{1}{N_s}\sum_{i=1}^{N_s} h_i$ is the average of the hidden vectors. All capsules take the hidden vectors as input, and each capsule outputs a state probability $p_i$ and a reconstruction representation $r_{s,i}$.

Because the capsule model is based on RNN, we next give the preliminaries of RNN before detailing the capsule structure and the training objective.

3.1 Recurrent Neural Network

A Recurrent Neural Network (RNN) is a class of artificial neural network in which connections between units form a directed cycle. This allows the network to exhibit dynamic temporal behavior. Unlike feedforward neural networks, RNNs can use their internal memory to process arbitrary sequences of inputs. However, standard RNNs are known to suffer from vanishing or exploding gradients. To overcome these issues, the Long Short-Term Memory network (LSTM) was developed, and it has shown superior performance in many tasks [9].

Briefly speaking, in LSTM, the hidden state $h_t$ and memory cell $c_t$ are functions of the previous $h_{t-1}$ and $c_{t-1}$ and the input vector $x_t$, or formally:

$$c_t, h_t = \mathrm{LSTM}(c_{t-1}, h_{t-1}, x_t) \qquad (1)$$

The hidden state $h_t$ denotes the representation of position $t$, encoding the preceding context of that position. For more details about LSTM, we refer readers to [9].
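Eq. (1) leaves the cell internals to [9]. As a rough illustration only, the NumPy sketch below implements one step of a standard LSTM cell (input, forget, and output gates plus a candidate cell) and unrolls it over a toy sequence to produce the hidden vectors $h_1, \ldots, h_{N_s}$ that the capsules consume. The function name `lstm_step` and the stacked weight layout (`W`, `U`, `b` holding the four gate blocks in the order input, forget, output, candidate) are our own assumptions, not the authors' PyTorch implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(c_prev, h_prev, x_t, W, U, b):
    """One standard LSTM step: (c_t, h_t) = LSTM(c_{t-1}, h_{t-1}, x_t).

    Hypothetical layout: W (4d x n), U (4d x d), b (4d,) stack the input,
    forget and output gates and the candidate cell, in that order.
    """
    d = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b          # all four pre-activations at once
    i = sigmoid(z[0:d])                   # input gate
    f = sigmoid(z[d:2 * d])               # forget gate
    o = sigmoid(z[2 * d:3 * d])           # output gate
    g = np.tanh(z[3 * d:4 * d])           # candidate cell content
    c_t = f * c_prev + i * g              # new memory cell
    h_t = o * np.tanh(c_t)                # new hidden state
    return c_t, h_t

# Encode a toy sequence of 5 word vectors (dimension 3) with hidden size 4.
rng = np.random.default_rng(0)
n, d, T = 3, 4, 5
W, U, b = rng.normal(size=(4 * d, n)), rng.normal(size=(4 * d, d)), np.zeros(4 * d)
c, h = np.zeros(d), np.zeros(d)
H = []
for x_t in rng.normal(size=(T, n)):
    c, h = lstm_step(c, h, x_t, W, U, b)
    H.append(h)
H = np.stack(H)  # shape (T, d): one hidden vector h_t per position
```

Since every $h_t$ is an output gate in (0, 1) times a tanh, its entries always lie strictly between -1 and 1.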

A variation of LSTM is the Gated Recurrent Unit (GRU), introduced in [3]. It combines the forget gate and input gate into a single update gate. It also merges the cell state and hidden state, among other changes. The resulting model is simpler than standard LSTM models, and has become popular in many tasks. Similarly, the hidden state $h_t$ in GRU denotes the representation of position $t$, encoding the preceding context of that position (see [3] for more details):

$$h_t = \mathrm{GRU}(h_{t-1}, x_t) \qquad (2)$$
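A comparable sketch for Eq. (2), again in NumPy and purely illustrative: one GRU step in the formulation of Cho et al., where a single update gate $z$ interpolates between the previous state and a candidate state, so there is no separate memory cell. The name `gru_step` and the stacked weight layout are hypothetical. Formulations differ in where the reset gate is applied (PyTorch's `nn.GRU` applies it after the recurrent matrix product) and in whether $z$ or $1 - z$ weights the previous state, so numerical results vary across implementations.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(h_prev, x_t, W, U, b):
    """One GRU step, h_t = GRU(h_{t-1}, x_t), in the formulation of Cho et al.

    Hypothetical layout: W (3d x n), U (3d x d), b (3d,) stack the update gate,
    reset gate and candidate state, in that order.
    """
    d = h_prev.shape[0]
    Wz, Wr, Wh = W[:d], W[d:2 * d], W[2 * d:]
    Uz, Ur, Uh = U[:d], U[d:2 * d], U[2 * d:]
    bz, br, bh = b[:d], b[d:2 * d], b[2 * d:]
    z = sigmoid(Wz @ x_t + Uz @ h_prev + bz)              # update gate (plays the role of LSTM's forget + input gates)
    r = sigmoid(Wr @ x_t + Ur @ h_prev + br)              # reset gate
    h_tilde = np.tanh(Wh @ x_t + Uh @ (r * h_prev) + bh)  # candidate state
    return z * h_prev + (1.0 - z) * h_tilde               # single state vector, no memory cell

rng = np.random.default_rng(1)
n, d = 3, 4
W, U, b = rng.normal(size=(3 * d, n)), rng.normal(size=(3 * d, d)), np.zeros(3 * d)
h = np.zeros(d)
for x_t in rng.normal(size=(5, n)):
    h = gru_step(h, x_t, W, U, b)
```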

An RNN can be bi-directional, using a finite sequence to predict or label each element of the sequence based on the element’s past and future contexts. This is achieved by concatenating the outputs of two RNNs, one processing the sequence from left to right and the other from right to left.
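To illustrate the concatenation, the sketch below runs one recurrent pass left to right and another right to left, re-aligns the backward outputs with the original positions, and concatenates the two hidden vectors at every position. A plain tanh RNN (`rnn_encode`, a hypothetical helper) stands in for the LSTM or GRU cell to keep the example short.

```python
import numpy as np

def rnn_encode(X, W, U):
    """Plain tanh RNN, a stand-in for any recurrent cell; returns one hidden vector per position."""
    h = np.zeros(U.shape[0])
    H = []
    for x_t in X:
        h = np.tanh(W @ x_t + U @ h)
        H.append(h)
    return np.stack(H)

def bidirectional_encode(X, fwd_params, bwd_params):
    H_fwd = rnn_encode(X, *fwd_params)              # left-to-right pass
    H_bwd = rnn_encode(X[::-1], *bwd_params)[::-1]  # right-to-left pass, re-aligned to original positions
    return np.concatenate([H_fwd, H_bwd], axis=1)   # position t now sees both past and future context

rng = np.random.default_rng(2)
T, n, d = 6, 3, 4
X = rng.normal(size=(T, n))
fwd = (rng.normal(size=(d, n)), rng.normal(size=(d, d)))
bwd = (rng.normal(size=(d, n)), rng.normal(size=(d, d)))
H = bidirectional_encode(X, fwd, bwd)  # shape (T, 2 * d)
```

With 256 hidden units per direction, this concatenation gives the 512-dimensional hidden vectors used for the bi-directional models in Section 4.2.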

Instance Representation. As shown in Figure 1, the instance representation fed to all capsules is encoded by the RNN. Formally, the instance representation $v_s$ is the average of the hidden vectors obtained from the RNN:

$$v_s = \frac{1}{N_s}\sum_{i=1}^{N_s} h_i, \qquad (3)$$

where $N_s$ is the length of the instance, e.g., the number of words in a given sentence. Here, each word is represented by a dense vector obtained through word2vec or similar techniques.
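Eq. (3) is a plain average. In practice, instances in a mini-batch have different lengths and are typically padded; assuming a 0/1 padding mask (our assumption, since the paper does not describe batching), the average should run only over the $N_s$ real words of each instance. The helper name `instance_representation` is hypothetical.

```python
import numpy as np

def instance_representation(H, mask):
    """Eq. (3): v_s = (1/N_s) * sum_i h_i, computed per instance in a padded batch.

    H:    (batch, max_len, d) hidden vectors from the RNN (padded positions arbitrary)
    mask: (batch, max_len), 1 for real words and 0 for padding (assumed convention)
    """
    mask = mask.astype(H.dtype)[:, :, None]   # (batch, max_len, 1)
    n_words = mask.sum(axis=1)                # N_s of each instance, shape (batch, 1)
    return (H * mask).sum(axis=1) / n_words   # average over real positions only

H = np.array([[[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]],   # 2 real words + 1 padding position
              [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]])  # 3 real words
mask = np.array([[1, 1, 0], [1, 1, 1]])
v_s = instance_representation(H, mask)
```

Both rows evaluate to [2, 3]: the padded vector [9, 9] in the first instance is excluded, and each instance is divided by its own $N_s$ (2 and 3).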

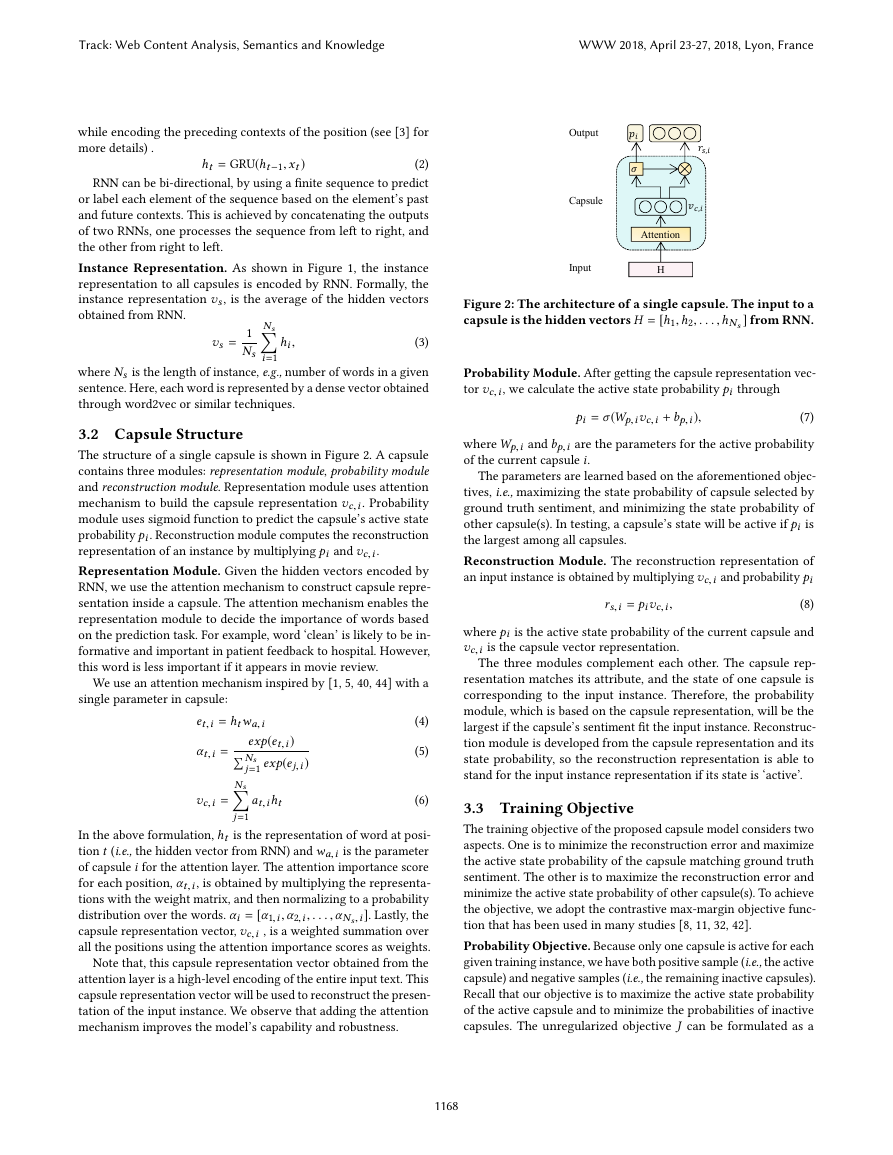

3.2 Capsule Structure

The structure of a single capsule is shown in Figure 2. A capsule contains three modules: a representation module, a probability module, and a reconstruction module. The representation module uses the attention mechanism to build the capsule representation $v_{c,i}$. The probability module uses the sigmoid function to predict the capsule’s active state probability $p_i$. The reconstruction module computes the reconstruction representation of an instance by multiplying $p_i$ and $v_{c,i}$.

Representation Module. Given the hidden vectors encoded by the RNN, we use the attention mechanism to construct the capsule representation inside a capsule. The attention mechanism enables the representation module to decide the importance of words based on the prediction task. For example, the word ‘clean’ is likely to be informative and important in patient feedback to a hospital. However, this word is less important if it appears in a movie review.

We use an attention mechanism inspired by [1, 5, 40, 44] with a single parameter per capsule:

$$e_{t,i} = h_t w_{a,i} \qquad (4)$$

$$\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j=1}^{N_s} \exp(e_{j,i})} \qquad (5)$$

$$v_{c,i} = \sum_{t=1}^{N_s} \alpha_{t,i} h_t \qquad (6)$$

In the above formulation, $h_t$ is the representation of the word at position $t$ (i.e., the hidden vector from the RNN), and $w_{a,i}$ is the attention parameter of capsule $i$. The attention importance score for each position, $\alpha_{t,i}$, is obtained by multiplying the representation with the weight vector $w_{a,i}$ and then normalizing the scores into a probability distribution over the words, $\alpha_i = [\alpha_{1,i}, \alpha_{2,i}, \ldots, \alpha_{N_s,i}]$. Lastly, the capsule representation vector $v_{c,i}$ is a weighted sum over all positions, using the attention importance scores as weights.

Note that this capsule representation vector, obtained from the attention layer, is a high-level encoding of the entire input text. It will be used to reconstruct the representation of the input instance. We observe that adding the attention mechanism improves the model’s capability and robustness.
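Eqs. (4)-(6) amount to a dot-product score per position, a softmax over positions, and a weighted sum. The following NumPy sketch of one capsule's representation module treats $w_{a,i}$ as a vector (so that each $e_{t,i}$ is a scalar) and subtracts the maximum score before exponentiating, a standard stability trick that leaves Eq. (5) unchanged. The function name `capsule_attention` is hypothetical.

```python
import numpy as np

def capsule_attention(H, w_a):
    """Representation module of one capsule, Eqs. (4)-(6).

    H:   (N_s, d) hidden vectors h_1..h_{N_s}
    w_a: (d,) attention parameter w_{a,i} of this capsule
    """
    e = H @ w_a                  # Eq. (4): one score e_{t,i} per position
    e = e - e.max()              # shift for numerical stability; softmax is unchanged
    weights = np.exp(e)
    alpha = weights / weights.sum()  # Eq. (5): distribution over the N_s words
    v_c = alpha @ H              # Eq. (6): sum_t alpha_{t,i} h_t
    return v_c, alpha

rng = np.random.default_rng(3)
H = rng.normal(size=(7, 4))      # 7 words, hidden size 4
w_a = rng.normal(size=4)
v_c, alpha = capsule_attention(H, w_a)
```

Each capsule has its own $w_{a,i}$, so different capsules can focus on different words of the same instance. A useful sanity check: with $w_{a,i} = 0$ every $\alpha_{t,i}$ equals $1/N_s$, and $v_{c,i}$ reduces to the instance representation $v_s$ of Eq. (3).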

Figure 2: The architecture of a single capsule. The input to a capsule is the sequence of hidden vectors $H = [h_1, h_2, \ldots, h_{N_s}]$ from the RNN.

Probability Module. After obtaining the capsule representation vector $v_{c,i}$, we calculate the active state probability $p_i$ through

$$p_i = \sigma(W_{p,i} v_{c,i} + b_{p,i}), \qquad (7)$$

where $\sigma$ is the sigmoid function, and $W_{p,i}$ and $b_{p,i}$ are the parameters for the active probability of the current capsule $i$.

The parameters are learned based on the aforementioned objectives, i.e., maximizing the state probability of the capsule selected by the ground-truth sentiment, and minimizing the state probabilities of the other capsule(s). In testing, a capsule’s state will be active if its $p_i$ is the largest among all capsules.
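Eq. (7) together with the test-time rule can be sketched as follows. Because $p_i$ is a scalar, we assume $W_{p,i}$ is a $1 \times d$ row and stack the rows of all $N$ capsules into one matrix; the names `state_probabilities` and `predict` and the toy numbers are illustrative, not from the paper.

```python
import numpy as np

def state_probabilities(V_c, W_p, b_p):
    """Eq. (7) for all N capsules at once.

    V_c: (N, d) capsule representations v_{c,i}
    W_p: (N, d) row i holds W_{p,i} (assumed 1 x d, so p_i is a scalar)
    b_p: (N,) biases b_{p,i}
    """
    logits = np.einsum("nd,nd->n", W_p, V_c)       # W_{p,i} v_{c,i} for each capsule i
    return 1.0 / (1.0 + np.exp(-(logits + b_p)))   # independent sigmoid per capsule

def predict(p, attributes):
    """The capsule with the largest p_i is 'active'; its attribute is the predicted sentiment."""
    active = int(np.argmax(p))
    states = ["active" if i == active else "inactive" for i in range(len(p))]
    return attributes[active], states

V_c = np.array([[1.0, 0.0], [0.0, 1.0]])
W_p = np.array([[2.0, 0.0], [0.0, 1.0]])
b_p = np.zeros(2)
p = state_probabilities(V_c, W_p, b_p)
label, states = predict(p, ["positive", "negative"])
```

Since each $p_i$ comes from its own sigmoid rather than a shared softmax, the probabilities need not sum to one (here roughly 0.88 and 0.73); only their ranking decides which capsule is active.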

Reconstruction Module. The reconstruction representation of an input instance is obtained by multiplying $v_{c,i}$ and the probability $p_i$:

$$r_{s,i} = p_i v_{c,i}, \qquad (8)$$

where $p_i$ is the active state probability of the current capsule and $v_{c,i}$ is the capsule representation vector.

The three modules complement each other. Each capsule’s representation is tied to its attribute, and the state of a capsule indicates whether that attribute matches the input instance. Therefore, the output of the probability module, which is computed from the capsule representation, should be the largest if the capsule’s sentiment fits the input instance. The reconstruction module is built from the capsule representation and its state probability, so the reconstruction representation is able to stand for the input instance representation if the capsule’s state is ‘active’.
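Eq. (8) is a simple rescaling, but it is worth seeing how it interacts with the inner product $v_s^\top r_{s,i}$ used by the reconstruction objective in Section 3.3: because $r_{s,i} = p_i v_{c,i}$, that inner product equals $p_i\,(v_s^\top v_{c,i})$. The toy NumPy example below, with made-up numbers and a hypothetical `reconstruct` helper, gives two capsules identical representations but different state probabilities.

```python
import numpy as np

def reconstruct(p, V_c):
    """Eq. (8): r_{s,i} = p_i * v_{c,i}, for all N capsules at once.

    p:   (N,) state probabilities
    V_c: (N, d) capsule representations
    """
    return p[:, None] * V_c

v_s = np.array([1.0, 2.0])                  # instance representation
V_c = np.array([[1.0, 2.0], [1.0, 2.0]])    # two capsules with identical representations
p = np.array([0.9, 0.1])                    # but very different state probabilities
R = reconstruct(p, V_c)
scores = R @ v_s                            # inner products v_s^T r_{s,i}
```

Both capsules see the same content (inner product 5 with $v_s$), yet only the capsule with high $p_i$ yields a large score (4.5 versus 0.5), which is how the reconstruction module ties back to the probability module.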

3.3 Training Objective

The training objective of the proposed capsule model considers two aspects. One is to minimize the reconstruction error and maximize the active state probability of the capsule matching the ground-truth sentiment. The other is to maximize the reconstruction error and minimize the active state probability of the other capsule(s). To achieve this, we adopt the contrastive max-margin objective function that has been used in many studies [8, 11, 32, 42].

Probability Objective. Because only one capsule is active for each given training instance, we have both a positive sample (i.e., the active capsule) and negative samples (i.e., the remaining inactive capsules). Recall that our objective is to maximize the active state probability of the active capsule and to minimize the probabilities of the inactive capsules. The unregularized objective $J$ can be formulated as a hinge loss:

$$J(\theta) = \sum \max\Big(0,\; 1 + \sum_{i=1}^{N} y_i p_i\Big) \qquad (9)$$

For a given training instance, $y_i = -1$ for the active capsule (i.e., the one that matches the training instance’s ground-truth sentiment), and all remaining $y_i$ are set to $1$. We use a mask vector to indicate which capsule is active for each training instance.

Reconstruction Objective. The other objective is to ensure that the reconstruction representation $r_{s,i}$ of the active capsule is similar to the instance representation $v_s$, while $v_s$ differs from the reconstruction representations of the inactive capsules. Similarly, the unregularized objective $U$ can be formulated as another hinge loss that maximizes the inner product between the active capsule’s $r_{s,i}$ and $v_s$, and simultaneously minimizes the inner products between $v_s$ and the $r_{s,i}$ of the inactive capsules:

$$U(\theta) = \sum \max\Big(0,\; 1 + \sum_{i=1}^{N} y_i\, v_s^{\top} r_{s,i}\Big) \qquad (10)$$

Again, $y_i = -1$ if the capsule is active and $y_i = 1$ if the capsule is inactive.

Considering both objectives, our final objective function $L$ is obtained by adding $J$ and $U$:

$$L(\theta) = J(\theta) + U(\theta) \qquad (11)$$
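Putting Eqs. (9)-(11) together, the sketch below computes $J$, $U$, and $L$ for a mini-batch in NumPy. We read the outer sum in Eqs. (9) and (10) as running over training instances, and build $y$ from the one-hot mask vector as $y = 1 - 2 \cdot \text{mask}$; both are our interpretation, and no regularization term is included (the objectives are stated as unregularized). The function names are hypothetical.

```python
import numpy as np

def labels_from_mask(mask):
    """mask: (batch, N) one-hot, 1 marks the ground-truth (active) capsule. Returns y in {-1, +1}."""
    return 1.0 - 2.0 * mask

def capsule_loss(p, R, v_s, mask):
    """L = J + U (Eqs. 9-11), summed over a batch of instances (assumed reading of the outer sum).

    p:    (batch, N) state probabilities p_i
    R:    (batch, N, d) reconstruction representations r_{s,i}
    v_s:  (batch, d) instance representations
    mask: (batch, N) one-hot mask marking the active capsule
    """
    y = labels_from_mask(mask)
    J = np.maximum(0.0, 1.0 + (y * p).sum(axis=1)).sum()      # Eq. (9)
    inner = np.einsum("bnd,bd->bn", R, v_s)                    # v_s^T r_{s,i} per capsule
    U = np.maximum(0.0, 1.0 + (y * inner).sum(axis=1)).sum()   # Eq. (10)
    return J + U, J, U                                         # Eq. (11)

# One instance, two capsules; capsule 0 is the ground truth.
p = np.array([[0.9, 0.2]])
mask = np.array([[1.0, 0.0]])
v_s = np.array([[1.0, 2.0]])
R = p[:, :, None] * np.array([[[1.0, 2.0], [1.0, 2.0]]])     # Eq. (8) with v_c = [1, 2] for both
L, J, U = capsule_loss(p, R, v_s, mask)
```

In the example, $J = \max(0, 1 - 0.9 + 0.2) = 0.3$ while $U = \max(0, 1 - 4.5 + 1.0) = 0$. Under this reading, because each $p_i$ lies strictly in (0, 1), the term $1 - p_{\text{active}} + \sum p_{\text{inactive}}$ is always positive, so $J$ never saturates and keeps pushing the active probability up and the inactive ones down, whereas $U$ can reach zero once its margin is met.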

4 EXPERIMENT

4.1 Dataset

We conduct experiments on two benchmark datasets, namely Movie Review (MR) [25] and Stanford Sentiment Treebank (SST) [31], and one proprietary dataset. Both MR and SST have been widely used in sentiment classification evaluation, which enables us to benchmark our results against published results.

Movie Review. Movie Review (MR)2 is a collection of English movie reviews [25] collected from www.rottentomatoes.com. Each instance, typically a sentence, is annotated with the sentiment category of its source review, either ‘positive’ or ‘negative’. There are 5,331 positive and 5,331 negative processed sentences.

Stanford Sentiment Treebank. SST3 is the first corpus with fully labeled parse trees, which allows for a comprehensive analysis of the compositional effects of sentiment in language [31]. This corpus is based on the dataset introduced by Pang and Lee [25]. It includes fine-grained sentiment labels for 215,154 phrases in the parse trees of 11,855 sentences, parsed by the Stanford parser [16]. The sentiment label set is {0, 1, 2, 3, 4}, where the numbers correspond to ‘very negative’, ‘negative’, ‘neutral’, ‘positive’, and ‘very positive’, respectively. Note that because SST provides phrase-level annotations on the parse trees, some of the reported results are obtained using the phrase-level annotations. In our experiments, we only utilize the sentence-level annotations, because our capsule model does not need the expensive phrase-level annotations.

2Sentence polarity dataset v1.0. http://www.cs.cornell.edu/people/pabo/movie-review-data/

3https://nlp.stanford.edu/sentiment/index.html

Table 1: Number of instances in hospital feedback dataset

| Question | Sentiment | Number of answers |
|---|---|---|
| What I liked? | Positive | 25,042 |
| What could be improved? | Negative | 21,240 |

Hospital Feedback. We use a proprietary patient opinion dataset generated by a non-profit feedback platform for health services in the UK.

We use the text content from the feedback forms filled in by patients. Specifically, we perform sentiment analysis on the answers to two questions: “What I liked?” and “What could be improved?”. The feedback form contains another question, “Anything else?”, whose answers are not used in our experiments because their sentiment is uncertain. The numbers of answers (or instances) to the two questions are reported in Table 1.

Given the large number of instances, manually annotating all sentences in the hospital feedback is time-consuming. In this study, we simply consider an answer to the question “What I liked?” to carry ‘positive’ sentiment, and an answer to the question “What could be improved?” to carry ‘negative’ sentiment. The average length of the answers is about 120 words, and we consider each answer as one instance without further splitting it into sentences.

We note that the simple labeling scheme (i.e., labeling answers to “What I liked?” as positive and answers to “What could be improved?” as negative) introduces some noise into the dataset. A patient may write “perfect, nothing to improve” in answer to “What could be improved?”, and that answer will be labeled ‘negative’. Such noise cannot be avoided without manual annotation. However, based on our observation, the number of such cases is negligible.
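The labeling scheme described above can be sketched in a few lines of Python. The question strings come from the feedback form; the dictionary layout of a form, the helper name `label_answers`, the example answer about staff, and the choice to skip empty answers are illustrative assumptions. The second answer reproduces the noisy case mentioned above.

```python
# Hypothetical mapping from form question to weak sentiment label.
QUESTION_TO_LABEL = {
    "What I liked?": "positive",
    "What could be improved?": "negative",
}

def label_answers(form):
    """form: dict mapping question text to a patient's answer.

    Returns (answer, label) pairs; each whole answer is one instance.
    Questions outside the mapping (e.g. "Anything else?") and empty answers are skipped.
    """
    return [(answer, QUESTION_TO_LABEL[question])
            for question, answer in form.items()
            if question in QUESTION_TO_LABEL and answer.strip()]

form = {
    "What I liked?": "Staff were quick and caring.",
    "What could be improved?": "perfect, nothing to improve",
    "Anything else?": "Thanks!",
}
instances = label_answers(form)
```

The answer “perfect, nothing to improve” ends up labeled ‘negative’ purely because of the question it answers, which is exactly the label noise discussed above.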

4.2 Implementation Details

In our experiments, all word vectors are initialized by GloVe4. The word embedding vectors are pre-trained on an unlabeled corpus of about 840 billion tokens, and the dimension of the word vectors we use is 300 [27]. The dimension of the hidden vectors encoded by the RNN is 256 if the RNN is single-directional, and 512 if it is bi-directional. More specifically, on the MR and SST datasets we use a bi-directional, two-layer LSTM, and on the Hospital Feedback dataset we use a two-layer GRU. The models are trained with a batch size of 32 examples on SST, and 64 examples on the MR and Hospital Feedback datasets. There is a checkpoint every 32 mini-batches on SST, and every 64 mini-batches on the MR and Hospital Feedback datasets. The embedding dropout is 0.3 on the MR and Hospital Feedback datasets, and 0.5 on SST. The same RNN cell dropout of 0.5 is applied on all three datasets. The dropout on the capsule representation in the probability modules of capsules is also set to 0.5 on all datasets. The length of the attention weights is the same as the length of the sentence.

We use Adam [15] as our optimization method. The learning rate is 1e−3 for all model parameters except the word vectors, and 1e−4 for the word vectors. The two parameters β1 and β2 in Adam are 0.9 and 0.999, respectively. The capsule models are implemented in PyTorch5 (version 0.2.0_3), and the model parameters are randomly initialized.
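For reference, the hyperparameters above can be collected into a per-dataset configuration. The dictionary structure, key names, and `config_for` helper are our own illustrative choices; only the values come from this section. In PyTorch, the two learning rates would typically be expressed as two parameter groups passed to `torch.optim.Adam`, each with its own `lr`.

```python
# Values from Section 4.2; the structure and key names are illustrative only.
SHARED = {
    "word_vectors": "GloVe, 840B-token corpus, 300-d",
    "hidden_size_per_direction": 256,
    "rnn_cell_dropout": 0.5,
    "capsule_repr_dropout": 0.5,
    "optimizer": {"name": "Adam", "betas": (0.9, 0.999),
                  "lr_model": 1e-3, "lr_word_vectors": 1e-4},
}

DATASETS = {
    "MR": {"rnn": "bi-directional 2-layer LSTM", "batch_size": 64,
           "checkpoint_every": 64, "embedding_dropout": 0.3},
    "SST": {"rnn": "bi-directional 2-layer LSTM", "batch_size": 32,
            "checkpoint_every": 32, "embedding_dropout": 0.5},
    "Hospital Feedback": {"rnn": "2-layer GRU", "batch_size": 64,
                          "checkpoint_every": 64, "embedding_dropout": 0.3},
}

def config_for(dataset):
    """Merge shared and dataset-specific settings into a new dict."""
    cfg = {**SHARED, **DATASETS[dataset]}
    bidirectional = cfg["rnn"].startswith("bi-directional")
    # 256 per direction; concatenating two directions gives 512.
    cfg["hidden_dim"] = cfg["hidden_size_per_direction"] * (2 if bidirectional else 1)
    return cfg
```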

4http://nlp.stanford.edu/projects/glove/

5https://github.com/pytorch


Table 2: The accuracy of methods on the Movie Review (MR) and Stanford Sentiment Treebank (SST) datasets. Note that the models only use sentence-level annotation, not phrase-level annotation, in SST. Accuracies marked with * are reported in [12, 14, 18, 33], and those marked with # are reported in [28].

| Model | Movie Review (MR) | SST (Sentence-level) |
|---|---|---|
| RAE | 77.7* | 43.2* |
| RNTN | 75.9# | 43.4# |
| LSTM | 77.4# | 45.6# |
| Bi-LSTM | 79.3# | 46.5# |
| LR-LSTM | 81.5# | 48.2# |
| LR-Bi-LSTM | 82.1# | 48.6# |
| Tree-LSTM | 80.7# | 48.1# |
| CNN | 81.5* | 46.9# |
| CNN-Tensor | - | 50.6* |
| DAN | - | 47.7* |
| NCSL | 82.9# | 47.1# |
| RNN-Capsule | 83.8 | 49.3 |

4.3 Evaluation on Benchmark Datasets

Both the MR and SST datasets have been widely used in evaluating sentiment classification. This allows us to directly compare the results of our proposed capsule model against reported results under the same experimental setting. Table 2 lists the sentiment classification accuracy of the baseline methods on the two datasets, as reported in a recent ACL 2017 paper [28] and in [12, 14, 18, 33]. Our capsule model, named RNN-Capsule, is listed in the last row.

Baseline Methods. We now briefly introduce the baseline methods, all of which are based on neural networks. Recursive Auto Encoder (RAE, also known as RecursiveNN) [33] and Recursive Neural Tensor Network (RNTN) [31] are based on parse trees. RNTN uses tensors to model correlations between different dimensions of the child nodes' vectors. Bidirectional LSTM (Bi-LSTM) is a variant of LSTM, introduced in Section 3.1. Both LSTM and Bi-LSTM are based on the sequential structure of sentences. LR-LSTM and LR-Bi-LSTM are linguistically regularized variants of LSTM and Bi-LSTM, respectively. Tree-Structured LSTM (Tree-LSTM) [35] is a generalization of LSTMs to tree-structured network topologies. Convolutional Neural Network (CNN) [14] uses convolution and pooling operations, which are widely used in computer vision. CNN-Tensor [18] differs from CNN in that the convolution operation is replaced by a tensor product; dynamic programming is applied in CNN-Tensor to enumerate all skippable trigrams in a sentence. Deep Averaging Network (DAN) [12] has three layers: a layer that averages all word vectors in a sentence, an MLP layer, and an output layer. Neural Context-Sensitive Lexicon (NCSL) [37] uses a Recurrent Neural Network to learn sentiment values based on a simple weighted-sum model, but requires linguistic knowledge.
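The three-layer DAN structure described above can be sketched as a forward pass in plain Python. This is a minimal illustration under stated assumptions, not the implementation of [12]: the ReLU activation in the MLP layer, the softmax output, the layer sizes, and the random weights are all assumptions made for the example, and the helper names are hypothetical.

```python
import math
import random


def mean_vector(vectors):
    """Layer 1: element-wise average of the word vectors of a sentence."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def affine(W, b, x):
    """Compute W @ x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def dan_forward(word_vectors, W_h, b_h, W_o, b_o):
    """DAN-style forward pass: average -> MLP layer -> output layer."""
    avg = mean_vector(word_vectors)                        # averaging layer
    hidden = [max(0.0, v) for v in affine(W_h, b_h, avg)]  # MLP layer (ReLU assumed)
    return softmax(affine(W_o, b_o, hidden))               # class probabilities


def random_matrix(rows, cols, rng):
    """Matrix of standard-normal entries as a list of rows."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d, h, k = 4, 3, 2  # embedding size, hidden size, number of classes (hypothetical)
W_h, b_h = random_matrix(h, d, rng), [0.0] * h
W_o, b_o = random_matrix(k, h, rng), [0.0] * k
sentence = random_matrix(5, d, rng)  # five random stand-in word vectors

probs = dan_forward(sentence, W_h, b_h, W_o, b_o)
shuffled = dan_forward(sentence[::-1], W_h, b_h, W_o, b_o)
```

Because the first layer averages the word vectors, reversing the word order leaves the output unchanged up to floating-point rounding: the model ignores word order, which is the main contrast with the sequence-based LSTM baselines.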

Observations. On the Movie Review dataset, our proposed RNN-Capsule model achieves the best accuracy, 83.8. Among the baseline methods, LR-Bi-LSTM and NCSL outperform the other baselines. However, both LR-Bi-LSTM and NCSL require linguistic knowledge such as a sentiment lexicon and an intensity regularizer, and considerable human effort is needed to build such linguistic knowledge. Our capsule model does not use any linguistic knowledge. On the SST dataset, our model is the second-best performer after CNN-Tensor. However, CNN-Tensor is much more computationally intensive because of its tensor product operation, whereas our model requires only simple linear operations on top of the hidden vectors obtained from the RNN. Our model also outperforms other strong baselines such as LR-Bi-LSTM, which requires dedicated linguistic knowledge.

Table 3: Accuracy on the Hospital Feedback dataset

| Method | Accuracy |
|---|---|
| Naive Bayes | 84.7 |
| Naive Bayes (+Bigram) | 81.9 |
| Linear SVM | 87.6 |
| Linear SVM (+Bigram) | 88.9 |
| Word2vec-SVM (CBOW) | 85.5 |
| Doc2vec-SVM (PV-DM) | 77.7 |
| Doc2vec-SVM (PV-DBOW) | 81.8 |
| Doc2vec-SVM (PV-DM+PV-DBOW) | 83.2 |
| LSTM | 89.8 |
| Attention-LSTM | 90.2 |
| RNN-Capsule | 91.6 |

4.4 Evaluation on Hospital Feedback

Baseline Methods. We now evaluate RNN-Capsule on the hospital feedback dataset. Although neural network models have proven effective on many other datasets, it is useful to provide a complete performance overview for a new dataset. To this end, we evaluate three kinds of baseline methods, listed in Table 3: (i) traditional machine learning models based on Naive Bayes and Support Vector Machines (SVMs) using unigram and bigram representations; (ii) SVMs with dense vector representations obtained through Word2vec and Doc2vec; and (iii) LSTM-based baselines, given the promising accuracy of LSTM-based models among the neural network models reported earlier.

Specifically, for Word2vec-SVM, word vectors learned through CBOW are used to train SVM classifiers on the patient feedback; each feedback instance is represented by the average of its word vectors. For Doc2vec-SVM, Doc2vec is used to learn vectors for all feedback instances, using PV-DBOW, PV-DM, or their concatenation (i.e., PV-DBOW + PV-DM) [17]. Because RNN-Capsule uses an attention mechanism, we also evaluate Attention-LSTM. This model is the same as LSTM, except that an additional attention weight vector is trained. The weight vector is applied to the LSTM outputs at every position to produce weights for the different time steps, and the weighted average of the LSTM outputs is used for sentiment classification6. Naive Bayes, Linear SVM, Word2vec/Doc2vec, and LSTM/Attention-LSTM are implemented using NLTK, Scikit-learn, Gensim, and Keras, respectively.
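The attention pooling in Attention-LSTM can be sketched as follows. The text does not specify how the per-position scores are normalized, so this sketch assumes a dot product between each LSTM output and the trained weight vector, followed by a softmax over time steps. `H` and `v` are toy stand-ins for the LSTM outputs and the learned vector, and `attention_pool` is a hypothetical name.

```python
import math


def attention_pool(H, v):
    """Weighted average of LSTM outputs H (T rows of size d) using weight vector v.

    Returns (pooled, alpha): the pooled d-dimensional vector and the T attention
    weights, which are non-negative and sum to 1.
    """
    # One score per time step: dot product of that step's output with v.
    scores = [sum(h_j * v_j for h_j, v_j in zip(h, v)) for h in H]
    # Softmax over time steps (shifted by the max for numerical stability).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alpha = [e / total for e in exps]
    # Weighted average of the outputs, dimension by dimension.
    d = len(H[0])
    pooled = [sum(a * h[j] for a, h in zip(alpha, H)) for j in range(d)]
    return pooled, alpha


H = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5]]  # toy LSTM outputs: T = 3, d = 2
v = [2.0, -1.0]                           # toy attention weight vector
pooled, alpha = attention_pool(H, v)
```

With a zero weight vector every time step receives the same weight and the pooled vector reduces to the plain mean of the outputs; training `v` lets the model emphasize some positions over others.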

6From each instance, up to the first 300 words are used in LSTM models for computational efficiency.

More than 90% of the instances are shorter than 300 words.


Observations. Among the traditional machine learning models based on Naive Bayes and Support Vector Machines, Linear SVM trained on both unigrams and bigrams (i.e., Linear SVM (+Bigram)) is the clear winner, with an accuracy of 88.9. This is much higher than the accuracy of any SVM model trained on dense representations from either Word2vec or Doc2vec.

LSTM-based methods outperform Linear SVM with bigrams. When enhanced with the attention mechanism, Attention-LSTM slightly outperforms the vanilla LSTM, reaching an accuracy of 90.2. Our proposed model, RNN-Capsule, is the top performer and further improves the accuracy to 91.6.

5 EXPLAINABILITY ANALYSIS

In Section 4, we showed that RNN-Capsule achieves accuracy comparable to or better than state-of-the-art models without using any linguistic knowledge. We now show that RNN-Capsule is capable of outputting words with sentiment tendencies that reflect domain knowledge. In other words, for a given dataset, we try to explain which words our RNN-Capsule model relies on to predict the sentiment categories. These domain-dependent sentiment words could be extremely useful for decision makers to identify the positive and negative aspects of their services or products.

Attended Words by Capsule. Because of the attention mechanism in our capsule model, each word is assigned an attention weight. The attention weight of a word is computed as follows:

$$w_{c,i} = p_i \, \alpha_i, \qquad (12)$$

where $p_i$ is the active state probability of capsule $i$, and $\alpha_i$ is the attention weight in the representation module of capsule $i$.
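A small worked instance of Eq. (12), with toy numbers, may help. Here we read $\alpha_i$ as the attention distribution over the words of the instance in capsule $i$'s representation module, and $w_{c,i}$ as that distribution's entry for word $c$ scaled by $p_i$; this reading of the subscripts, the two-capsule setup, and all values are assumptions for illustration.

```python
# Toy instance with two capsules (0 = positive, 1 = negative); all values are made up.
words = ["staff", "were", "very", "friendly"]
p = [0.9, 0.1]  # active state probability p_i of each capsule
alpha = [
    [0.20, 0.05, 0.25, 0.50],  # attention weights of capsule 0 over the words
    [0.40, 0.30, 0.20, 0.10],  # attention weights of capsule 1 over the words
]

# Eq. (12): the weight of word c in capsule i is p_i * alpha_i[c].
w = [[p_i * a for a in alpha_i] for p_i, alpha_i in zip(p, alpha)]

for i, row in enumerate(w):
    print(i, {word: round(x, 3) for word, x in zip(words, row)})
```

When a capsule's attention weights sum to one, as in these toy rows, its word weights sum to $p_i$, so words attended by a low-probability capsule receive correspondingly small weights.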

Because each capsule corresponds to one sentiment category, we collect the words attended by each individual capsule. More specifically, for each capsule we build a dictionary whose key is a word and whose value is the sum of the attention weights for this word in the capsule, since a word may appear in multiple test instances. The sum of attention weights for a word is updated only if the capsule is ‘active’ for the input instance. After evaluating all test instances, we obtain the list of attended words for each capsule together with their attention weights.
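The collection step can be sketched as below. Each test instance is given as its words, the capsules' active state probabilities, and each capsule's attention weights over the words; the active capsule is taken to be the one with the largest probability, and the per-occurrence weight follows Eq. (12). The occurrence counter is an assumption of this sketch, kept so that the averaged weights used in the ranking step can be computed afterwards; the function name and toy data are hypothetical.

```python
from collections import defaultdict


def collect_attended_words(instances, num_capsules):
    """Accumulate attention weights per capsule over all test instances.

    instances: iterable of (words, p, alpha), where p[i] is the active state
    probability of capsule i and alpha[i][j] is capsule i's attention on words[j].
    Only the active capsule (largest p[i]) of each instance is updated.
    """
    sums = [defaultdict(float) for _ in range(num_capsules)]
    counts = [defaultdict(int) for _ in range(num_capsules)]
    for words, p, alpha in instances:
        i = max(range(num_capsules), key=lambda k: p[k])  # active capsule
        for word, a in zip(words, alpha[i]):
            sums[i][word] += p[i] * a  # Eq. (12) weight of this occurrence
            counts[i][word] += 1
    return sums, counts


# Toy test instances for two capsules (0 = positive, 1 = negative).
instances = [
    (["staff", "friendly"], [0.8, 0.2], [[0.4, 0.6], [0.5, 0.5]]),
    (["friendly", "but", "slow"], [0.3, 0.7], [[0.3, 0.3, 0.4], [0.2, 0.3, 0.5]]),
    (["friendly", "nurses"], [0.6, 0.4], [[0.5, 0.5], [0.5, 0.5]]),
]
sums, counts = collect_attended_words(instances, num_capsules=2)
```

In this toy run ‘friendly’ ends up in both dictionaries, because it also occurs in the one instance for which the negative capsule is active; ‘staff’ appears only in the positive capsule's dictionary.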

A straightforward way of ranking the attended words is to compute the averaged attention weight of each word (recall that a word may appear multiple times). We observe that many top-ranked words are of low frequency; that is, they have very high attention weights (i.e., strong sentiment tendencies) but do not appear often. To obtain a ranking of the medium- and high-frequency words attended by each capsule, we multiply the averaged attention weight of a word by the logarithm of its frequency. In the following, we discuss both rankings: the attended words with medium/high word frequency, and the attended words with low frequency.
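Both rankings can be sketched as follows, taking one capsule's accumulated weight sums and occurrence counts as input. We assume that ‘word frequency’ means the number of occurrences counted for the capsule and use the natural logarithm; the function name, words, and numbers are hypothetical toy values.

```python
import math


def rank_words(sums, counts, top_k=20, by_frequency=True):
    """Rank one capsule's words by averaged attention weight.

    If by_frequency is True, the averaged weight is multiplied by log(frequency),
    which favours medium/high-frequency words; otherwise the plain average is
    used, which tends to surface rare words.
    """
    avg = {word: sums[word] / counts[word] for word in sums}
    if by_frequency:
        score = {word: avg[word] * math.log(counts[word]) for word in avg}
    else:
        score = avg
    return sorted(score, key=score.get, reverse=True)[:top_k]


# Toy accumulated weights and counts for one capsule.
sums = {"friendly": 6.0, "caring": 1.5, "exemplary": 0.9}
counts = {"friendly": 10, "caring": 3, "exemplary": 1}

print(rank_words(sums, counts, by_frequency=False))  # ['exemplary', 'friendly', 'caring']
print(rank_words(sums, counts))                      # ['friendly', 'caring', 'exemplary']
```

A word seen only once gets log(1) = 0 under the frequency-weighted score, so it drops to the bottom however strongly it is attended. Changing the base of the logarithm rescales all scores by the same positive factor and leaves the ranking unchanged.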

5.1 Attended Words with Medium/High Word Frequency

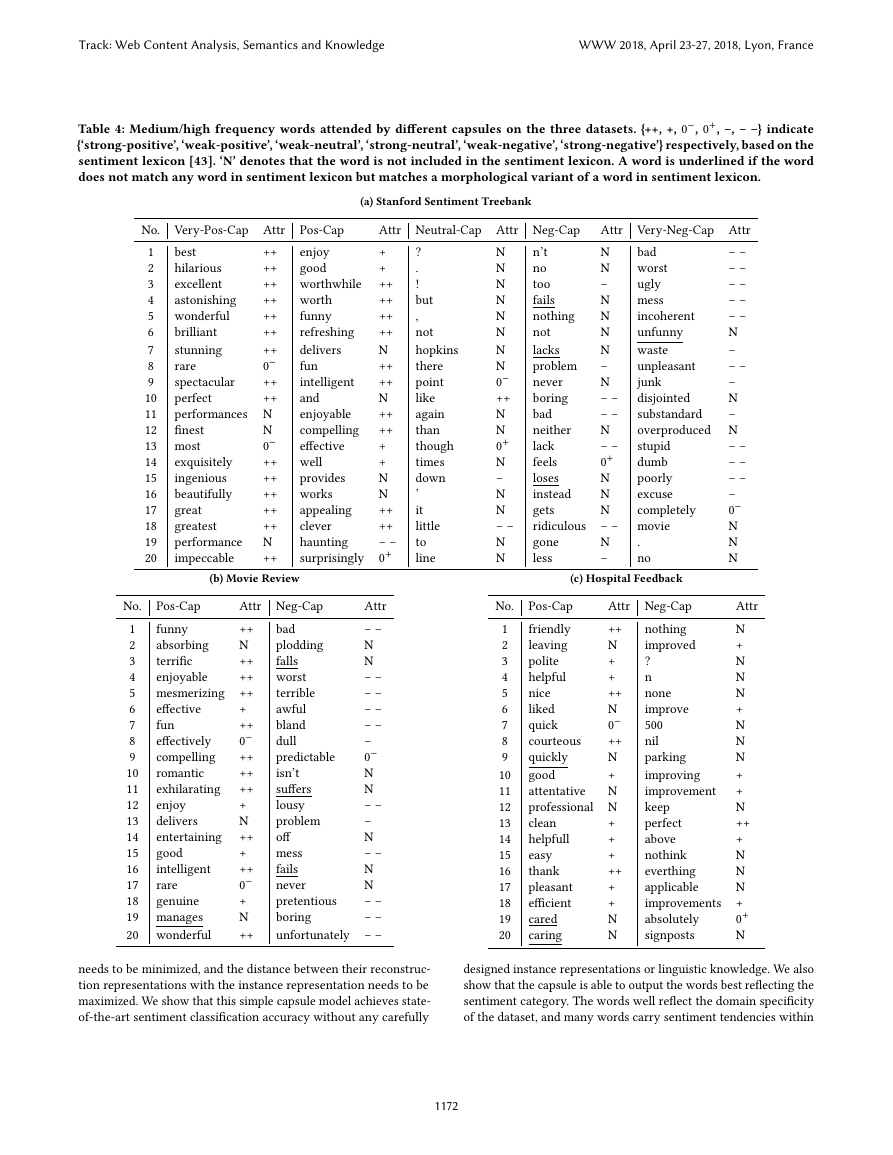

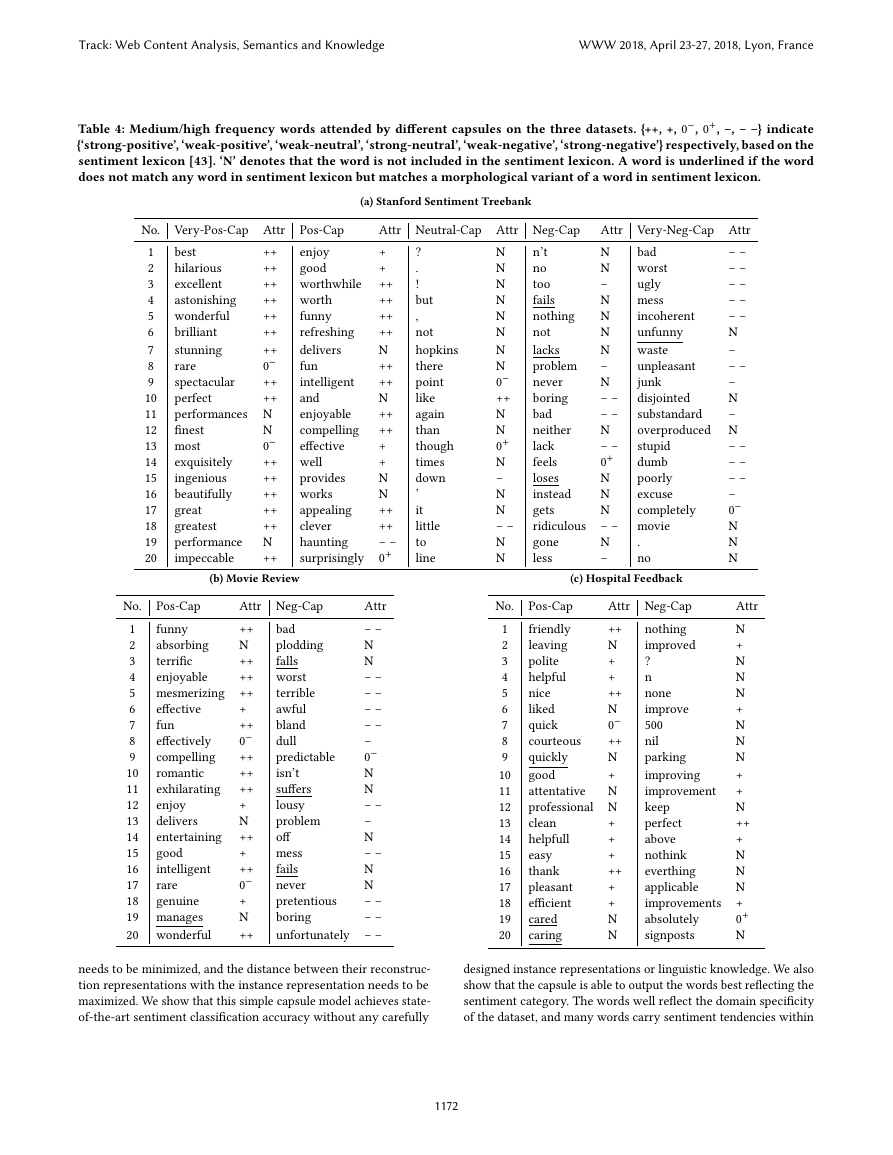

Tables 4a, 4b and 4c list the top 20 words attended by the different capsules on the three datasets. The words are ranked by the product of the averaged attention weight and the logarithm of word frequency. Most of the words have medium to high frequency in the corresponding dataset, and all of them are self-explanatory for the assigned sentiment category. To further verify the sentiment tendencies of the words, we match them against a sentiment lexicon [43], which defines six sentiment tendencies: {‘strong-positive’, ‘weak-positive’, ‘weak-neutral’, ‘strong-neutral’, ‘weak-negative’, ‘strong-negative’}. We indicate the matching words in the tables using {++, +, 0−, 0+, –, – –} for these six tendencies, respectively. Words that are not included in the sentiment lexicon are marked with ‘N’. Some words do not match any entry in the sentiment lexicon but would match after a morphological change; we underline such words, e.g., ‘fails’ and ‘lacks’. Note that punctuation marks are processed as tokens, so it is not surprising that many of them are attended by the neutral capsule.
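The marking scheme can be sketched as a small lookup. The lexicon entries below are a hypothetical toy subset, not taken from [43], and the morphological check is a deliberately naive suffix-stripping rule assumed for illustration; how morphological variants were actually detected is not specified here. Since a word that matches only through a variant is itself absent from the lexicon, this sketch returns ‘N’ together with an underline flag for it.

```python
# Symbols used in the tables for the six sentiment tendencies of the lexicon.
SYMBOLS = {
    "strong-positive": "++",
    "weak-positive": "+",
    "weak-neutral": "0−",
    "strong-neutral": "0+",
    "weak-negative": "–",
    "strong-negative": "– –",
}

# Hypothetical toy lexicon: word -> sentiment tendency.
LEXICON = {
    "excellent": "strong-positive",
    "good": "weak-positive",
    "fail": "weak-negative",
    "lack": "weak-negative",
}

# Naive suffixes tried when looking for a morphological variant (assumption).
SUFFIXES = ("ing", "ed", "es", "s")


def mark_word(word, lexicon=LEXICON):
    """Return (symbol, underlined) for a word under the table convention."""
    if word in lexicon:
        return SYMBOLS[lexicon[word]], False
    for suffix in SUFFIXES:
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and stem in lexicon:
            return "N", True  # not in the lexicon, but a variant of an entry is
    return "N", False


for w in ["excellent", "fails", "lacks", "leaving"]:
    print(w, mark_word(w))
```

A real pipeline would likely use a proper lemmatizer instead of suffix stripping; the simple rule only shows where the underline flag comes from.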

As the three tables show, the attended words not only reflect the sentiment tendencies well but also reflect domain differences. We use the hospital feedback as an example (see Table 4c). The words ‘leave’ and ‘leaving’ are considered to carry no sentiment tendency in most contexts and, as expected, are not included in the sentiment lexicon. However, ‘leaving’ is ranked second in the positive capsule on hospital feedback. A closer look at the dataset shows that many patients express their happiness at being able to ‘leave’ hospital, or to ‘leave’ earlier than expected. Words such as ‘quickly’, ‘attentative’, ‘professional’, ‘cared’, and ‘caring’ clearly make sense as carriers of strong positive sentiment in the context of this dataset. For the negative capsule, because the sentences answer the question ‘What could be improved’, many of them contain various forms of ‘improve’. From answers like ‘perfect, nothing to improve’, the words ‘perfect’ and ‘nothing’ are attended. There are also patients requesting improvements to ‘everything’, particularly ‘parking’.

5.2 Attended Words with Low Word Frequency

Tables 5a, 5b and 5c list the top 20 words by average attention weight. Most of them are low-frequency words that appear no more than three times. Again, the words are self-explanatory for the corresponding sentiment category. On the Movie Review dataset, our negative capsule identifies ‘dopey’, ‘execrable’, ‘self-satisfied’, and ‘cloying’ as strongly negative words, which are very meaningful for comments on movies. Interestingly, the capsule model is robust to typos, which are common in social media: the token ‘noneconsideratedoctors’, a run-together spelling of ‘none considerate doctors’, is attended as negative.

These tables demonstrate that our capsule model is capable of outputting words with sentiment tendencies reflecting domain knowledge, even if the words appear only once or twice.

6 CONCLUSION

The key idea of the RNN-Capsule model is to design a simple capsule structure and to use each capsule to focus on one sentiment category. Each capsule outputs its active probability and its reconstruction representation. The objective of learning is to maximize the active probability of the capsule matching the ground truth and to minimize the distance between its reconstruction representation and the given instance representation. At the same time, the other capsules’ active probability


Table 4: Medium/high frequency words attended by different capsules on the three datasets. {++, +, 0−, 0+, –, – –} indicate {‘strong-positive’, ‘weak-positive’, ‘weak-neutral’, ‘strong-neutral’, ‘weak-negative’, ‘strong-negative’}, respectively, based on the sentiment lexicon [43]. ‘N’ denotes that the word is not included in the sentiment lexicon. A word is underlined if it does not match any word in the sentiment lexicon but matches a morphological variant of a word in the lexicon.

(a) Stanford Sentiment Treebank

| No. | Very-Pos-Cap | Attr | Pos-Cap | Attr | Neutral-Cap | Attr | Neg-Cap | Attr | Very-Neg-Cap | Attr |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | best | ++ | enjoy | + | ? | N | n’t | N | bad | – – |
| 2 | hilarious | ++ | good | + | . | N | no | N | worst | – – |
| 3 | excellent | ++ | worthwhile | ++ | ! | N | too | – | ugly | – – |
| 4 | astonishing | ++ | worth | ++ | but | N | fails | N | mess | – – |
| 5 | wonderful | ++ | funny | ++ | , | N | nothing | N | incoherent | – – |
| 6 | brilliant | ++ | refreshing | ++ | not | N | not | N | unfunny | N |
| 7 | stunning | ++ | delivers | N | hopkins | N | lacks | N | waste | – |
| 8 | rare | 0− | fun | ++ | there | N | problem | – | unpleasant | – – |
| 9 | spectacular | ++ | intelligent | ++ | point | 0− | never | N | junk | – |
| 10 | perfect | ++ | and | N | like | ++ | boring | – – | disjointed | N |
| 11 | performances | N | enjoyable | ++ | again | N | bad | – – | substandard | – |
| 12 | finest | N | compelling | ++ | than | N | neither | N | overproduced | N |
| 13 | most | 0− | effective | + | though | 0+ | lack | – – | stupid | – – |
| 14 | exquisitely | ++ | well | + | times | N | feels | 0+ | dumb | – – |
| 15 | ingenious | ++ | provides | N | down | – | loses | N | poorly | – – |
| 16 | beautifully | ++ | works | N | ’ | N | instead | N | excuse | – |
| 17 | great | ++ | appealing | ++ | it | N | gets | N | completely | 0− |
| 18 | greatest | ++ | clever | ++ | little | – – | ridiculous | – – | movie | N |
| 19 | performance | N | haunting | – – | to | N | gone | N | . | N |
| 20 | impeccable | ++ | surprisingly | 0+ | line | N | less | – | no | N |

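(b) Movie Review

| No. | Pos-Cap | Attr | Neg-Cap | Attr |
|---|---|---|---|---|
| 1 | funny | ++ | bad | – – |
| 2 | absorbing | N | plodding | N |
| 3 | terrific | ++ | falls | N |
| 4 | enjoyable | ++ | worst | – – |
| 5 | mesmerizing | ++ | terrible | – – |
| 6 | effective | + | awful | – – |
| 7 | fun | ++ | bland | – – |
| 8 | effectively | 0− | dull | – |
| 9 | compelling | ++ | predictable | 0− |
| 10 | romantic | ++ | isn’t | N |
| 11 | exhilarating | ++ | suffers | N |
| 12 | enjoy | + | lousy | – – |
| 13 | delivers | N | problem | – |
| 14 | entertaining | ++ | off | N |
| 15 | good | + | mess | – – |
| 16 | intelligent | ++ | fails | N |
| 17 | rare | 0− | never | N |
| 18 | genuine | + | pretentious | – – |
| 19 | manages | N | boring | – – |
| 20 | wonderful | ++ | unfortunately | – – |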

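(c) Hospital Feedback

| No. | Pos-Cap | Attr | Neg-Cap | Attr |
|---|---|---|---|---|
| 1 | friendly | ++ | nothing | N |
| 2 | leaving | N | improved | + |
| 3 | polite | + | ? | N |
| 4 | helpful | + | n | N |
| 5 | nice | ++ | none | N |
| 6 | liked | N | improve | + |
| 7 | quick | 0− | 500 | N |
| 8 | courteous | ++ | nil | N |
| 9 | quickly | N | parking | N |
| 10 | good | + | improving | + |
| 11 | attentative | N | improvement | + |
| 12 | professional | N | keep | N |
| 13 | clean | + | perfect | ++ |
| 14 | helpfull | + | above | + |
| 15 | easy | + | nothink | N |
| 16 | thank | ++ | everthing | N |
| 17 | pleasant | + | applicable | N |
| 18 | efficient | + | improvements | + |
| 19 | cared | N | absolutely | 0+ |
| 20 | caring | N | signposts | N |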

needs to be minimized, and the distance between their reconstruction representations and the instance representation needs to be maximized. We show that this simple capsule model achieves state-of-the-art sentiment classification accuracy without any carefully designed instance representations or linguistic knowledge. We also show that each capsule is able to output the words that best reflect its sentiment category. The words reflect the domain specificity of the dataset well, and many words carry sentiment tendencies within

