arXiv:2003.07021v1 [cs.CV] 16 Mar 2020

Extended Feature Pyramid Network for Small Object Detection

Chunfang Deng, Mengmeng Wang, Liang Liu, and Yong Liu†

{dengcf, mengmengwang, leonliuz}@zju.edu.cn, yongliu@iipc.zju.edu.cn

Zhejiang University

Abstract. Small object detection remains an unsolved challenge because it is hard to extract information from small objects that occupy only a few pixels. While scale-level corresponding detection in a feature pyramid network alleviates this problem, we find that the coupling of features across scales still impairs performance on small objects. In this paper, we propose an extended feature pyramid network (EFPN) with an extra high-resolution pyramid level specialized for small object detection. Specifically, we design a novel module, named feature texture transfer (FTT), which super-resolves features and extracts credible regional details simultaneously. Moreover, we design a foreground-background-balanced loss function to alleviate the area imbalance between foreground and background. In our experiments, the proposed EFPN is efficient in both computation and memory, and yields state-of-the-art results on the small traffic-sign dataset Tsinghua-Tencent 100K and on the small-object category of the general object detection dataset MS COCO.

Keywords: Small Object Detection, Feature Pyramid Network, Feature Super-Resolution

1 Introduction

Object detection is a fundamental step for many advanced computer vision problems, such as segmentation, image captioning, and video understanding. Over the past few years, the rapid development of deep learning has boosted the popularity of CNN-based detectors, which mainly include two-stage pipelines [8,7,28,5] and one-stage pipelines [24,27,20]. Although these general object detectors have improved accuracy and efficiency substantially, they still perform poorly when detecting small objects that occupy only a few pixels. Since CNNs repeatedly apply pooling layers to extract high-level semantics, the pixels of small objects can be filtered out during downsampling.

Utilization of low-level features is one way to pick up information about small objects. Feature pyramid network (FPN) [19] is the first method to enhance features by fusing features from different levels and constructing feature pyramids, where upper feature maps are responsible for detecting larger objects and lower feature maps are responsible for detecting smaller objects. Although FPN improves multi-scale detection performance, the heuristic mapping mechanism between pyramid level and proposal size in FPN detectors may confuse small object detection. As shown in Fig. 1(a), small-sized objects must share the same feature map with medium-sized objects and some large-sized objects, while easy cases such as large-sized objects can pick features from a suitable level. Besides, as shown in Fig. 1(b), the detection precision and recall of the FPN bottom layer fall dramatically as the object scale decreases. Fig. 1 suggests that feature coupling across scales in vanilla FPN detectors still degrades small object detection.

[Fig. 1 (a) Mapping between pyramid level and proposal size in vanilla FPN detectors (FPN feature level vs. proposal size in px; small, medium, and large ranges). (b) Detection performance of original P2 and our P′2 on Tsinghua-Tencent 100K (AP/AR in % vs. object size in px).]

Fig. 1. The drawback of small object detection in vanilla FPN detectors. (a) Feature Coupling: both small and medium objects are detected on the lowest level (P2) of FPN. (b) Poor Performance of Small Objects on P2: the detection performance of P2 varies with scale, and the average precision (AP) and average recall (AR) decline sharply when instances become small. The extended pyramid level P′2 in our EFPN mitigates this performance drop.

Intuitively, another way to compensate for the information loss of small objects is to increase the feature resolution. Thus, some super-resolution (SR) methods have been introduced to object detection. Early practices [11,3] directly super-resolve the input image, but the computational cost of feature extraction in the subsequent network becomes expensive. Li et al. [14] introduce GAN [10] to lift features of small objects to higher resolution. Noh et al. [25] use high-resolution target features to supervise SR of the whole feature map containing context information. These feature SR methods avoid adding to the burden of the CNN backbone, but they imagine the absent details solely on the basis of the low-resolution feature map and neglect credible details encoded in other features of the backbone. Hence, they tend to fabricate fake textures and artifacts on CNN features, causing false positives.

In this paper, we propose an extended feature pyramid network (EFPN), which employs large-scale SR features with abundant regional details to decouple small and medium object detection. EFPN extends the original FPN with a high-resolution level specialized for small-sized object detection. To avoid the expensive computation that direct high-resolution image input would cause, the extended high-resolution feature maps of our method are generated by an FPN-like framework with embedded feature SR. After the vanilla feature pyramid is constructed, the proposed feature texture transfer (FTT) module first combines deep semantics from low-resolution features with shallow regional textures from a high-resolution feature reference. Then, the subsequent FPN-like lateral connection further enriches the regional characteristics with tailor-made intermediate CNN feature maps. One advantage of EFPN is that the high-resolution feature maps are generated from real features produced by the CNN and FPN, rather than from the unreliable imagination of other similar methods. As shown in Fig. 1(b), the extended pyramid level with credible details in EFPN improves detection performance on small objects significantly.

Moreover, we use features generated from large-scale input images as supervision to optimize EFPN, and design a foreground-background-balanced loss function. We argue that a general reconstruction loss leads to insufficient learning of positive pixels, since small instances cover only a small fraction of the whole feature map. In light of the importance of foreground-background balance [20], we add a loss on object areas to the global loss function, drawing attention to the feature quality of positive pixels.

We evaluate our method on the challenging small traffic-sign dataset Tsinghua-Tencent 100K and the general object detection dataset MS COCO. The results demonstrate that the proposed EFPN outperforms other state-of-the-art methods on both datasets. Besides, compared with multi-scale testing, single-scale EFPN achieves similar performance with fewer computing resources.

For clarity, the main contributions of our work can be summarized as follows:

(1) We propose an extended feature pyramid network (EFPN), which improves the performance of small object detection.

(2) We design a pivotal reference-based feature SR module named feature texture transfer (FTT), which endows the extended feature pyramid with credible details for more accurate small object detection.

(3) We introduce a foreground-background-balanced loss function that draws attention to positive pixels, alleviating the area imbalance between foreground and background.

(4) Our efficient approach significantly improves the performance of detectors and achieves state-of-the-art results on Tsinghua-Tencent 100K and the small-object category of MS COCO.

2 Related Work

2.1 Deep Object Detectors

Deep learning based detectors have dominated general object detection due to their high performance. The successful two-stage methods [8,7,28,5] first generate Regions of Interest (RoIs) and then refine them with a classifier and a regressor. One-stage detectors [24,27,20], another prevalent kind of detector, directly conduct classification and localization on CNN feature maps with the help of pre-defined anchor boxes. Recently, anchor-free frameworks [13,38,31,39] have also become increasingly popular. Despite the development of deep object detectors, small object detection remains an unsolved challenge. Dilated convolution [34] is introduced in [23,17,16] to augment receptive fields for multi-scale detection. However, general detectors tend to focus more on improving the performance of easier large instances, since the metric of general object detection is the average precision over all scales. Detectors specialized for small objects still need more exploration.

2.2 Cross-Scale Features

Utilizing cross-scale features is an effective way to alleviate the problems arising from object scale variation. Building image pyramids is a traditional approach to generating cross-scale features. Using features from different layers of the network is another kind of cross-scale practice. SSD [24] and MS-CNN [4] detect objects of different scales on different layers of the CNN backbone. FPN [19] constructs feature pyramids by merging features from lower and higher layers via a top-down pathway. Following FPN, FPN variants explore more information pathways in feature pyramids. PANet [22] adds an extra bottom-up pathway to pass shallow localization information upward. G-FRNet [1] introduces gate units on the pathway, which pass crucial information and block ambiguous information. NAS-FPN [6] searches for the optimal pathway configuration using AutoML. Although these FPN variants improve the performance of multi-scale object detection, they keep the same number of pyramid levels as the original FPN. These levels are not well suited to small object detection, so performance on small objects remains poor.

2.3 Super-Resolution in Object Detection

Some studies introduce SR to object detection, since small object detection always benefits from larger scales. Image-level SR is adopted in specific situations where extremely small objects exist, such as satellite images [15] and images with crowded tiny faces [2]. However, large-scale images are burdensome for subsequent networks. Instead of super-resolving the whole image, SOD-MTGAN [3] only super-resolves RoI areas, but a large number of RoIs still requires considerable computation. The other way of applying SR is to super-resolve features directly. Li et al. [14] use Perceptual GAN to enhance features of small objects with the characteristics of large objects. STDN [37] employs sub-pixel convolution on top layers of DenseNet [12] to detect small objects while reducing network parameters. Noh et al. [25] super-resolve the whole feature map and introduce a supervision signal to the training process. Nevertheless, the above-mentioned feature SR methods are all based on the restricted information of a single feature map. Recent reference-based SR methods [35,36] are capable of enhancing SR images with textures or contents from reference images. Inspired by reference-based SR, we design a novel module to super-resolve features under the reference of shallow features with credible details, thus generating features more suitable for small object detection.

Eq. (8)

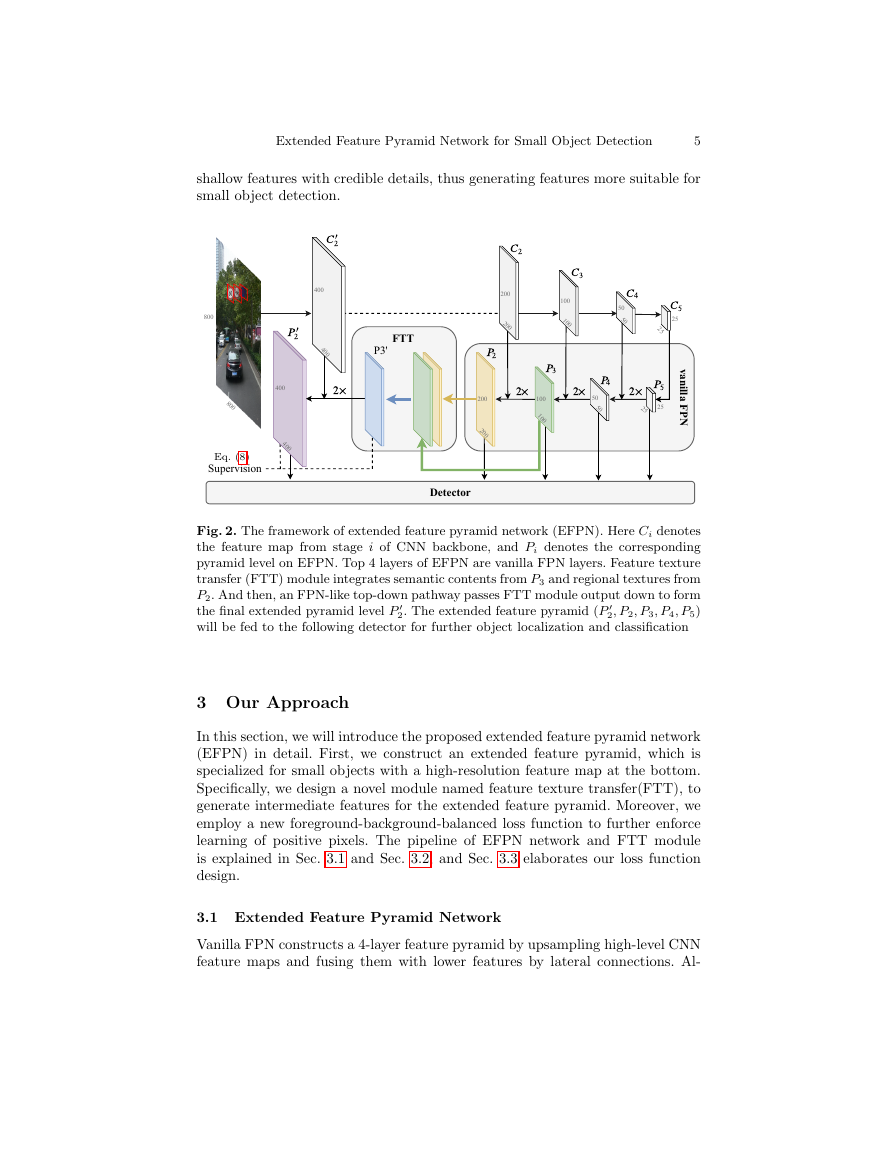

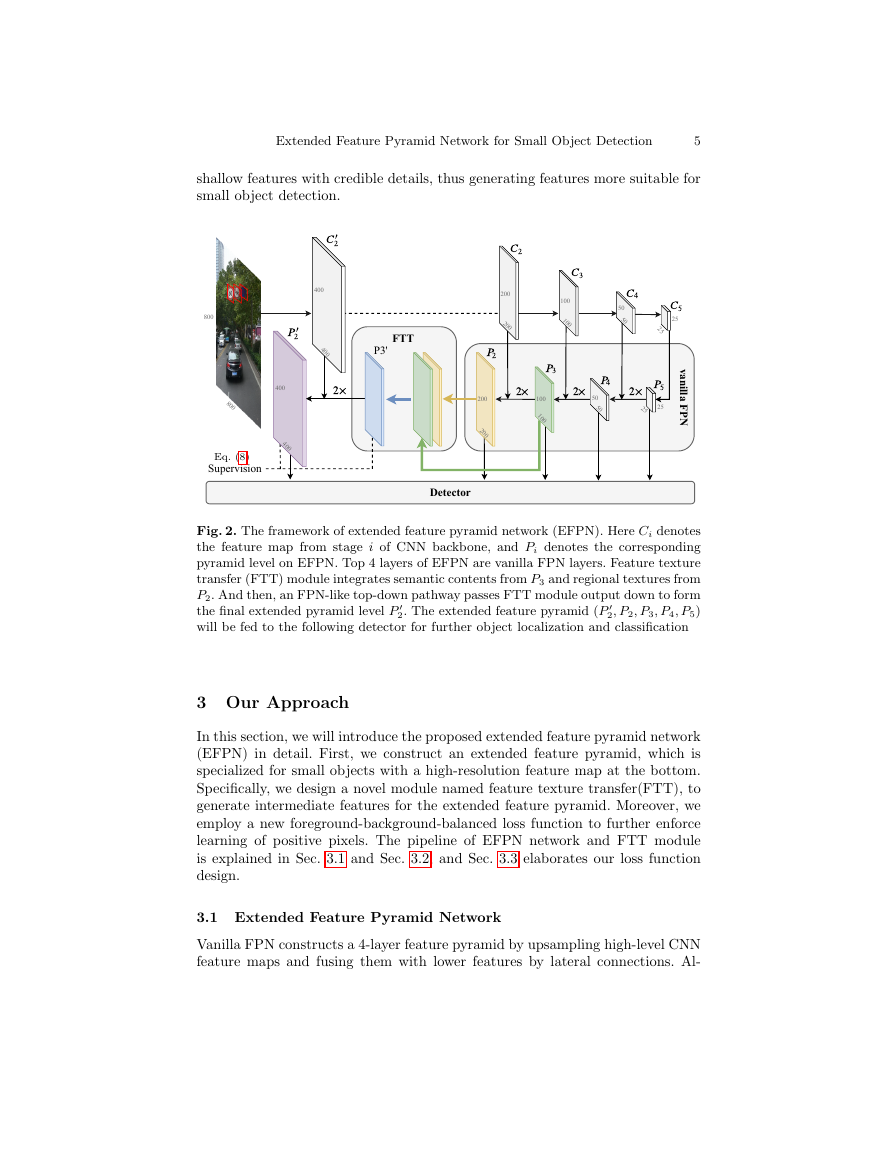

Fig. 2. The framework of extended feature pyramid network (EFPN). Here Ci denotes

the feature map from stage i of CNN backbone, and Pi denotes the corresponding

pyramid level on EFPN. Top 4 layers of EFPN are vanilla FPN layers. Feature texture

transfer (FTT) module integrates semantic contents from P3 and regional textures from

P2. And then, an FPN-like top-down pathway passes FTT module output down to form

the final extended pyramid level P

2, P2, P3, P4, P5)

will be fed to the following detector for further object localization and classification

2. The extended feature pyramid (P

3 Our Approach

In this section, we introduce the proposed extended feature pyramid network (EFPN) in detail. First, we construct an extended feature pyramid, which is specialized for small objects with a high-resolution feature map at the bottom. Specifically, we design a novel module named feature texture transfer (FTT) to generate intermediate features for the extended feature pyramid. Moreover, we employ a new foreground-background-balanced loss function to further enforce the learning of positive pixels. The pipelines of the EFPN network and the FTT module are explained in Sec. 3.1 and Sec. 3.2, respectively, and Sec. 3.3 elaborates on our loss function design.

3.1 Extended Feature Pyramid Network

Vanilla FPN constructs a 4-layer feature pyramid by upsampling high-level CNN feature maps and fusing them with lower features through lateral connections. Although features on different pyramid levels are responsible for objects of different sizes, small object detection and medium object detection are still coupled on the same bottom layer P2 of FPN, as shown in Fig. 1. To relieve this issue, we propose EFPN to extend the vanilla feature pyramid with a new level, which accounts for small object detection with more regional details.

Table 1. Generation of C′2 in ResNet/ResNeXt backbones. A new branch without max-pooling in stage2 is added to generate C′2, simulating the semantics and resolution of C2 from a 2× input image. The branches of C2 and C′2 share the same weights. In EFPN, C2 and C′2 are generated simultaneously from the 1× input.

| Layer Name | Layer Components (C2 branch) | Layer Components (C′2 branch) |
|---|---|---|
| Input | 800 × 800 (1×) | 800 × 800 (1×) |
| Stage1 | 7 × 7, 64, stride 2 | 7 × 7, 64, stride 2 |
| Stage2 | 3 × 3 max pool, stride 2; residual blocks ×3 | residual blocks ×3 |
| Output | C2: (200 × 200) | C′2: (400 × 400) |
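The output sizes in Table 1 follow from the usual convolution output-size formula. The sketch below is a quick check, assuming torchvision-style ResNet padding (3 for the 7 × 7 stem convolution, 1 for the 3 × 3 max pool) and stride-1 residual blocks in stage2; these padding values are assumptions, not settings stated in this paper.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling window along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def stage2_resolution(input_size: int, keep_max_pool: bool) -> int:
    """Spatial size after stage2 for the C2 branch (max pool kept) or the C'2 branch (skipped)."""
    # Stage1: 7 x 7, 64, stride 2 (padding 3 assumed, as in common ResNet code).
    size = conv_out(input_size, kernel=7, stride=2, padding=3)
    if keep_max_pool:
        # 3 x 3 max pool, stride 2 (padding 1 assumed); the C'2 branch omits this layer.
        size = conv_out(size, kernel=3, stride=2, padding=1)
    # The three residual blocks of stage2 are assumed to preserve spatial size.
    return size


for name, keep in (("C2", True), ("C'2", False)):
    s = stage2_resolution(800, keep)
    print(f"{name}: {s} x {s}")
```

Dropping a single stride-2 layer is what doubles the resolution of C′2 relative to C2 without feeding the network a 2× image.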

We implement the extended feature pyramid with an FPN-like framework embedded with a feature SR module. This pipeline directly generates high-resolution features from low-resolution images to support small object detection, while keeping the computational cost low. The overview of EFPN is shown in Fig. 2.

The top 4 pyramid layers are constructed by top-down pathways for medium and large object detection. The bottom extension in EFPN, which contains an FTT module, a top-down pathway, and the purple pyramid layer in Fig. 2, aims to capture regional details for small objects. More specifically, in the extension, the 3rd and 4th pyramid layers of EFPN, denoted by the green and yellow layers in Fig. 2 respectively, are mixed in the feature SR module FTT to produce the intermediate feature P′3 with selected regional information, which is denoted by a blue diamond in Fig. 2. Then, the top-down pathway merges P′3 with a tailor-made high-resolution CNN feature map C′2, producing the final extended pyramid layer P′2. We remove a max-pooling layer in ResNet/ResNeXt stage2 and obtain C′2 as the output of stage2, as shown in Table 1. C′2 shares the same representation level as the original C2 but contains more regional details due to its higher resolution. The smaller receptive field of C′2 also helps to better locate small objects. Mathematically, the operations of the extension in the proposed EFPN can be described as

$$P'_2 = P'_3\uparrow_{2\times} + C'_2 \tag{1}$$

where ↑2× denotes double upscaling by nearest-neighbor interpolation.
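Eq. (1) amounts to a nearest-neighbor 2× upsampling followed by element-wise addition. A minimal single-channel sketch in plain Python, assuming both maps are nested lists and that P′3 is exactly half the size of C′2; any channel alignment (such as the 1 × 1 lateral convolution used in FPN [19]) is omitted here to match the form of Eq. (1). The function names are hypothetical.

```python
from typing import List

Map2D = List[List[float]]


def upsample_nearest_2x(feature: Map2D) -> Map2D:
    """Double height and width by repeating every value in a 2 x 2 block."""
    out: Map2D = []
    for row in feature:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def extend_level(p3_prime: Map2D, c2_prime: Map2D) -> Map2D:
    """Eq. (1): P'2 = upsample_2x(P'3) + C'2, element-wise."""
    up = upsample_nearest_2x(p3_prime)
    if len(up) != len(c2_prime) or len(up[0]) != len(c2_prime[0]):
        raise ValueError("upsampled P'3 and C'2 must have the same spatial size")
    return [[a + b for a, b in zip(ru, rc)] for ru, rc in zip(up, c2_prime)]


p3_prime = [[1.0, 2.0],
            [3.0, 4.0]]
c2_prime = [[0.0] * 4 for _ in range(4)]
print(extend_level(p3_prime, c2_prime))
# → [[1.0, 1.0, 2.0, 2.0], [1.0, 1.0, 2.0, 2.0], [3.0, 3.0, 4.0, 4.0], [3.0, 3.0, 4.0, 4.0]]
```

Nearest-neighbor upsampling adds no parameters, so all learned detail in P′2 has to come from P′3 (via FTT) and from the real CNN features in C′2.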

In EFPN detectors, the mapping between proposal size and pyramid level

still follows the fashion in [19]:

√

l = l0 + log2(

wh/224)

(2)

Here l represents pyramid level, w and h are the width and height of a box

proposal, 224 is the canonical ImageNet pre-training size, and l0 is the target

�

Extended Feature Pyramid Network for Small Object Detection

7

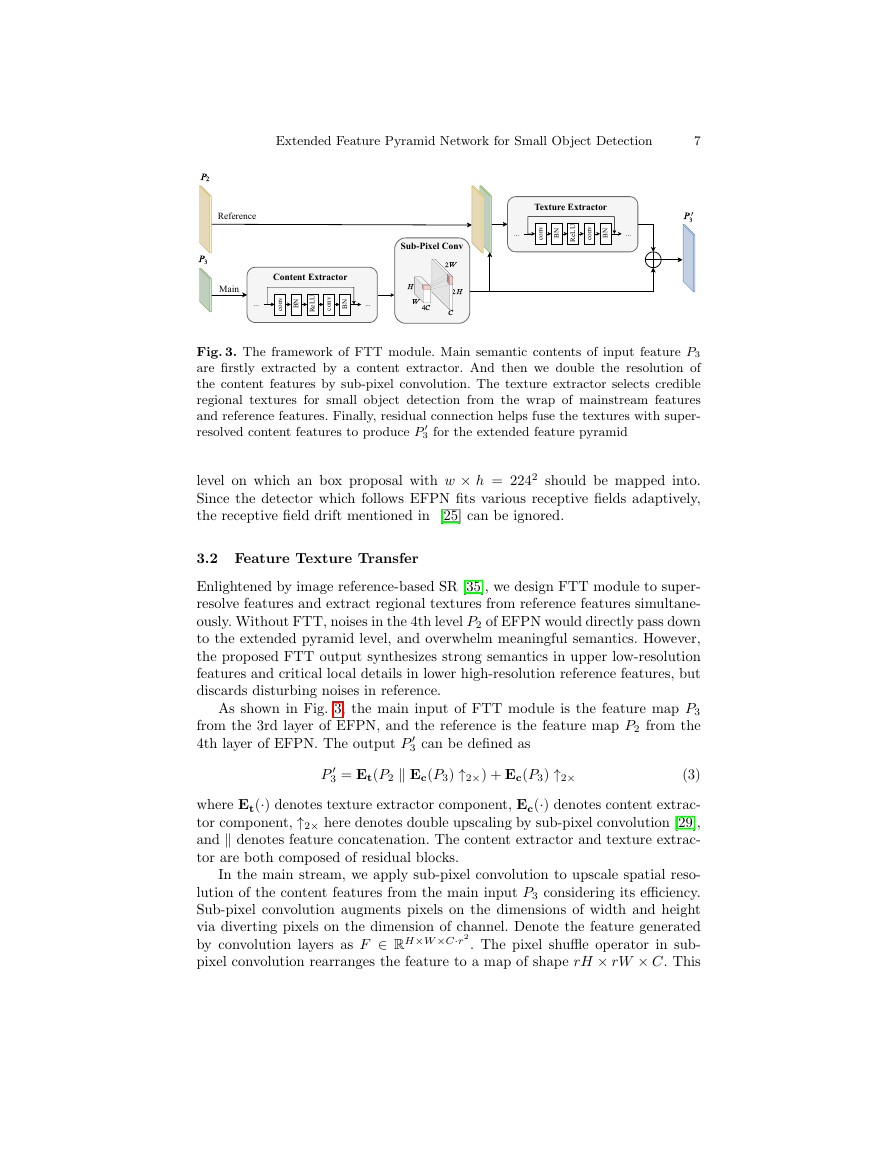

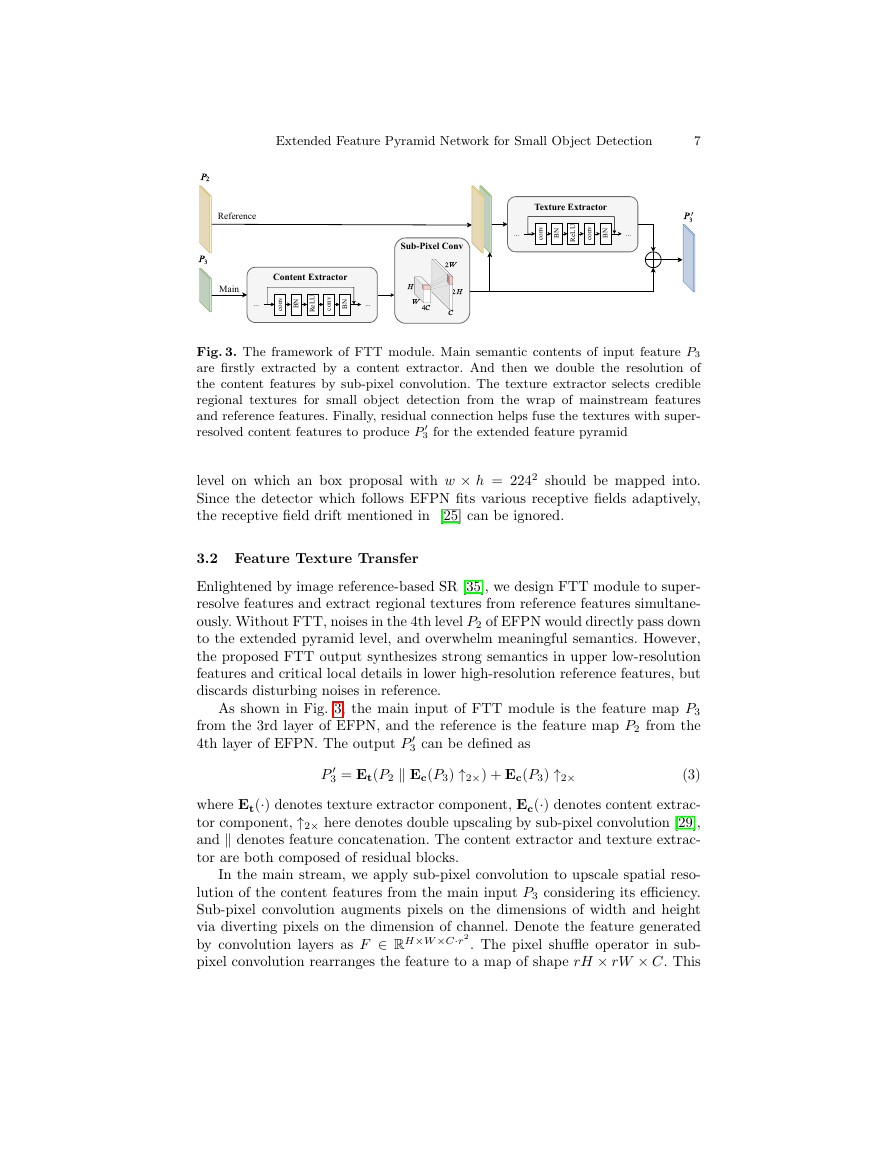

Fig. 3. The framework of FTT module. Main semantic contents of input feature P3

are firstly extracted by a content extractor. And then we double the resolution of

the content features by sub-pixel convolution. The texture extractor selects credible

regional textures for small object detection from the wrap of mainstream features

and reference features. Finally, residual connection helps fuse the textures with super-

resolved content features to produce P

3 for the extended feature pyramid

level on which an box proposal with w × h = 2242 should be mapped into.

Since the detector which follows EFPN fits various receptive fields adaptively,

the receptive field drift mentioned in [25] can be ignored.
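The level assignment of Eq. (2) can be sketched as follows. As in [19] the result is rounded down to an integer level, and, as common FPN implementations do, it is clamped to the levels that exist; the defaults l0 = 4 and the range 2 to 5 are illustrative assumptions, not settings reported for EFPN here.

```python
import math


def assign_level(w: float, h: float, l0: int = 4,
                 min_level: int = 2, max_level: int = 5) -> int:
    """Map a w x h box proposal to a pyramid level following Eq. (2).

    l0, min_level and max_level are illustrative defaults (assumptions);
    the floor and the clamp mirror common FPN implementations.
    """
    if w <= 0 or h <= 0:
        raise ValueError("box width and height must be positive")
    level = math.floor(l0 + math.log2(math.sqrt(w * h) / 224))
    return max(min_level, min(max_level, level))


for side in (16, 112, 224, 448, 2000):
    print(side, assign_level(side, side))
```

Halving the box side moves a proposal one level down, so every proposal smaller than about 112 px collapses onto the bottom level; an extra high-resolution level would correspond to lowering `min_level`, although how EFPN indexes P′2 in this formula is an assumption here.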

3.2 Feature Texture Transfer

Inspired by image reference-based SR [35], we design the FTT module to super-resolve features and extract regional textures from reference features simultaneously. Without FTT, noise in the 4th level P2 of EFPN would pass directly down to the extended pyramid level and overwhelm meaningful semantics. In contrast, the output of the proposed FTT synthesizes strong semantics from upper low-resolution features and critical local details from lower high-resolution reference features, while discarding disturbing noise in the reference.

As shown in Fig. 3, the main input of the FTT module is the feature map P3 from the 3rd layer of EFPN, and the reference is the feature map P2 from the 4th layer of EFPN. The output P′3 can be defined as

$$P'_3 = E_t\left(P_2 \,\Vert\, E_c(P_3)\uparrow_{2\times}\right) + E_c(P_3)\uparrow_{2\times} \tag{3}$$

where Et(·) denotes the texture extractor component, Ec(·) denotes the content extractor component, ↑2× here denotes double upscaling by sub-pixel convolution [29], and ‖ denotes feature concatenation. The content extractor and texture extractor are both composed of residual blocks.
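The data flow of Eq. (3) can be written as a small higher-order function. This is a structural sketch, not the authors' implementation: features are channel-first nested lists, and the content extractor, the 2× upscaler, and the texture extractor are passed in as callables. The toy stand-ins used below (an identity content extractor, nearest-neighbor upscaling in place of sub-pixel convolution, and a texture extractor that simply keeps the reference channels) are hypothetical.

```python
from typing import Callable, List

Feature = List[List[List[float]]]  # channel-first: [C][H][W]
Op = Callable[[Feature], Feature]


def concat_channels(a: Feature, b: Feature) -> Feature:
    """Feature concatenation (the ‖ operator in Eq. (3)): stack along channels."""
    if (len(a[0]), len(a[0][0])) != (len(b[0]), len(b[0][0])):
        raise ValueError("spatial sizes must match before concatenation")
    return a + b


def add(a: Feature, b: Feature) -> Feature:
    """Element-wise addition; Et must return as many channels as the content stream."""
    if (len(a), len(a[0]), len(a[0][0])) != (len(b), len(b[0]), len(b[0][0])):
        raise ValueError("shapes must match for element-wise addition")
    return [[[x + y for x, y in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(a, b)]


def ftt(p3: Feature, p2: Feature, content_extractor: Op,
        upscale_2x: Op, texture_extractor: Op) -> Feature:
    """P'3 = Et(P2 ‖ Ec(P3)↑2×) + Ec(P3)↑2×, following Eq. (3)."""
    content_up = upscale_2x(content_extractor(p3))                 # main stream
    textures = texture_extractor(concat_channels(p2, content_up))  # reference stream
    return add(textures, content_up)                               # residual fusion


# Hypothetical toy components, for illustration only.
def nearest_2x(f: Feature) -> Feature:
    return [[[v for v in row for _ in range(2)] for row in ch for _ in range(2)] for ch in f]


p3 = [[[10.0]]]                    # 1 channel, 1 x 1
p2 = [[[1.0, 2.0], [3.0, 4.0]]]    # 1 channel, 2 x 2
out = ftt(p3, p2, content_extractor=lambda f: f, upscale_2x=nearest_2x,
          texture_extractor=lambda f: f[:1])  # toy Et: keep only the reference channels
print(out)  # → [[[11.0, 12.0], [13.0, 14.0]]]
```

Passing the components in as callables keeps the sketch faithful to the structure of Eq. (3) while leaving the residual-block internals of Ec and Et unspecified, as they are in this section.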

In the main stream, we apply sub-pixel convolution to upscale the spatial resolution of the content features from the main input P3, considering its efficiency. Sub-pixel convolution increases the number of pixels along the width and height dimensions by diverting pixels from the channel dimension. Denote the feature generated by the convolution layers as $F \in \mathbb{R}^{H \times W \times C \cdot r^2}$. The pixel shuffle operator in sub-pixel convolution rearranges the feature into a map of shape rH × rW × C. This operation can be mathematically defined as

$$\mathrm{PS}(F)_{x,y,c} = F_{\lfloor x/r \rfloor,\, \lfloor y/r \rfloor,\, C \cdot r \cdot \mathrm{mod}(y,r) + C \cdot \mathrm{mod}(x,r) + c} \tag{4}$$

where $\mathrm{PS}(F)_{x,y,c}$ denotes the output feature pixel at coordinates (x, y, c) after the pixel shuffle operation PS(·), and r denotes the upscaling factor. In our FTT module, we adopt r = 2 in order to double the spatial scale.
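Eq. (4) is a pure index rearrangement, so it can be implemented directly. The sketch below uses channel-last nested lists indexed as F[x][y][c] to match the subscripts of Eq. (4), with the floors ⌊x/r⌋ and ⌊y/r⌋ becoming integer division. Framework implementations (for example PyTorch's `nn.PixelShuffle`, which works on channel-first tensors) may order the r² sub-channels differently, so treat this as an illustration of the equation rather than a drop-in replacement.

```python
from typing import List

Feature = List[List[List[float]]]  # channel-last: F[x][y][c]


def pixel_shuffle(f: Feature, r: int) -> Feature:
    """Rearrange an H x W x (C*r^2) feature into rH x rW x C following Eq. (4)."""
    h, w, channels = len(f), len(f[0]), len(f[0][0])
    if channels % (r * r) != 0:
        raise ValueError("channel count must be divisible by r^2")
    c_out = channels // (r * r)
    return [
        [
            # Source channel C*r*mod(y, r) + C*mod(x, r) + c, as in Eq. (4).
            [f[x // r][y // r][c_out * r * (y % r) + c_out * (x % r) + c]
             for c in range(c_out)]
            for y in range(r * w)
        ]
        for x in range(r * h)
    ]


# A 1 x 1 map with C * r^2 = 4 channels becomes a 2 x 2 map with C = 1 (r = 2, as in FTT).
print(pixel_shuffle([[[0.0, 1.0, 2.0, 3.0]]], r=2))
# → [[[0.0], [2.0]], [[1.0], [3.0]]]
```

Every input value lands in exactly one output position, which is why sub-pixel convolution upsamples without interpolation: the preceding convolution learns what to put in each of the r² sub-channels.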

In the reference stream, the concatenation of the reference feature P2 and the super-resolved content feature of P3 is fed to the texture extractor. The texture extractor aims to pick up textures that are credible for small object detection and to block useless noise from the concatenated features.

The final element-wise addition of textures and contents ensures that the output integrates both semantic and regional information from the input and the reference. Hence, the feature map P′3 possesses selected reliable textures from the shallow feature reference P2, as well as semantics similar to those of the deeper level P3.

3.3 Training Loss

Foreground-Background-Balanced Loss. The foreground-background-balanced loss is designed to improve the overall quality of EFPN. A common global loss leads to insufficient learning of small object areas, because small objects make up only a fractional part of the whole image. The foreground-background-balanced loss function improves the feature quality of both background and foreground with two parts: 1) a global reconstruction loss and 2) a positive patch loss.

The global reconstruction loss mainly enforces resemblance to the real background features, since background pixels make up most of an image. Here we adopt the l1 loss, which is commonly used in SR, as the global reconstruction loss Lglob:

$$L_{glob}(F, F^t) = \left\| F^t - F \right\|_1 \tag{5}$$

where F denotes the generated feature map, and $F^t$ denotes the target feature map.
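A plain-Python sketch of Eq. (5). It computes the l1 norm literally, as a sum of absolute differences over every pixel and channel; whether EFPN normalizes this sum (for example by the number of elements, as many SR codebases do) is not stated here, so the optional `mean` flag is an assumption.

```python
from typing import List, Tuple

Feature = List[List[List[float]]]  # indexed as F[row][col][channel]


def _shape(f: Feature) -> Tuple[int, int, int]:
    return len(f), len(f[0]), len(f[0][0])


def global_l1_loss(f: Feature, f_target: Feature, mean: bool = False) -> float:
    """Eq. (5): L_glob(F, F^t) = ||F^t - F||_1 over every pixel and channel.

    mean=True divides by the number of elements (an assumed normalization).
    """
    if _shape(f) != _shape(f_target):
        raise ValueError("generated and target features must have the same shape")
    diffs = [
        abs(t - p)
        for row_p, row_t in zip(f, f_target)
        for pix_p, pix_t in zip(row_p, row_t)
        for p, t in zip(pix_p, pix_t)
    ]
    total = sum(diffs)
    return total / len(diffs) if mean else total


generated = [[[0.0], [1.0]], [[2.0], [3.0]]]  # 2 x 2 map, 1 channel
target = [[[0.5], [1.0]], [[2.0], [1.0]]]
print(global_l1_loss(generated, target))             # 2.5
print(global_l1_loss(generated, target, mean=True))  # 0.625
```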

The positive patch loss is used to draw attention to positive pixels, because severe foreground-background imbalance impedes detector performance [20]. We employ the l1 loss on foreground areas as the positive patch loss Lpos:

$$L_{pos}(F, F^t) = \frac{1}{N} \sum_{(x,y) \in P_{pos}} \left\| F^t_{x,y} - F_{x,y} \right\|_1 \tag{6}$$

where Ppos denotes the patches of ground-truth objects, N denotes the total number of positive pixels, and (x, y) denotes the coordinates of pixels on feature maps. The positive patch loss acts as a stronger constraint on the areas where objects are located, enforcing the learning of the true representation of these areas.
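Eq. (6) can be sketched by collecting the pixels covered by ground-truth boxes and averaging the per-pixel l1 distance over those N pixels. This sketch assumes boxes are already given in feature-map coordinates as half-open (top, left, bottom, right) ranges, that a pixel covered by several boxes counts once, and that a map with no objects contributes zero; all three conventions are assumptions, and the function names are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Feature = List[List[List[float]]]  # indexed as F[row][col][channel]
Box = Tuple[int, int, int, int]    # (top, left, bottom, right), half-open, feature-map coordinates


def positive_pixels(boxes: Sequence[Box], height: int, width: int) -> Set[Tuple[int, int]]:
    """Pixels covered by at least one ground-truth box: the set P_pos in Eq. (6)."""
    pixels: Set[Tuple[int, int]] = set()
    for top, left, bottom, right in boxes:
        for row in range(max(0, top), min(height, bottom)):
            for col in range(max(0, left), min(width, right)):
                pixels.add((row, col))
    return pixels


def positive_patch_loss(f: Feature, f_target: Feature, boxes: Sequence[Box]) -> float:
    """Eq. (6): per-pixel l1 distance (summed over channels), averaged over N positive pixels."""
    pos = positive_pixels(boxes, len(f), len(f[0]))
    if not pos:
        return 0.0  # no foreground on this map; returning 0 is a choice made for this sketch
    total = sum(
        sum(abs(t - p) for p, t in zip(f[row][col], f_target[row][col]))
        for row, col in pos
    )
    return total / len(pos)


generated = [[[0.0], [0.0]], [[0.0], [0.0]]]
target = [[[1.0], [2.0]], [[3.0], [4.0]]]
print(positive_patch_loss(generated, target, [(0, 0, 1, 2)]))  # top row only: (1 + 2) / 2 = 1.5
```

Because the sum is divided by N rather than by the whole map size, a tiny object produces a term of the same magnitude as a large one.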

The foreground-background-balanced loss function Lfbb is then defined as

$$L_{fbb}(F, F^t) = L_{glob}(F, F^t) + \lambda L_{pos}(F, F^t) \tag{7}$$

where λ is a weight balancing factor. The balanced loss function mines true positives by improving the feature quality of foreground areas, and suppresses false positives by improving the feature quality of background areas.
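A worked example of why the positive term in Eq. (7) matters. The numbers are hypothetical, and we assume that Lglob is averaged over all pixels (so it is on the same scale as Lpos) and that λ = 1: take a single-channel 400 × 400 feature map (160,000 pixels) containing one 10 × 10 object (N = 100 positive pixels), with an absolute feature error of 0.1 at every background pixel and 1.0 at every object pixel.

```latex
\begin{aligned}
L_{glob} &= \frac{0.1 \times 159{,}900 + 1.0 \times 100}{160{,}000}
          = \frac{16{,}090}{160{,}000} \approx 0.1006, \\
\text{object share of } L_{glob} &= \frac{100 / 160{,}000}{0.1006}
          = \frac{0.000625}{0.1006} \approx 0.62\%, \\
L_{pos} &= \frac{1}{100} \times (100 \times 1.0) = 1.0, \\
L_{fbb} &= L_{glob} + \lambda L_{pos} \approx 0.1006 + 1.0 = 1.1006, \\
\text{object share of } L_{fbb} &= \frac{0.000625 + 1.0}{1.1006} \approx 91\%.
\end{aligned}
```

Under these assumptions the small object goes from well under 1% of the training signal to roughly 91%, which is the area imbalance the balanced loss is meant to correct; the actual share depends on λ and on how each term is normalized.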
