Grokking Deep Reinforcement Learning MEAP V11

Copyright

Welcome

Brief contents

Chapter 1: Introduction to deep reinforcement learning

What is deep reinforcement learning?

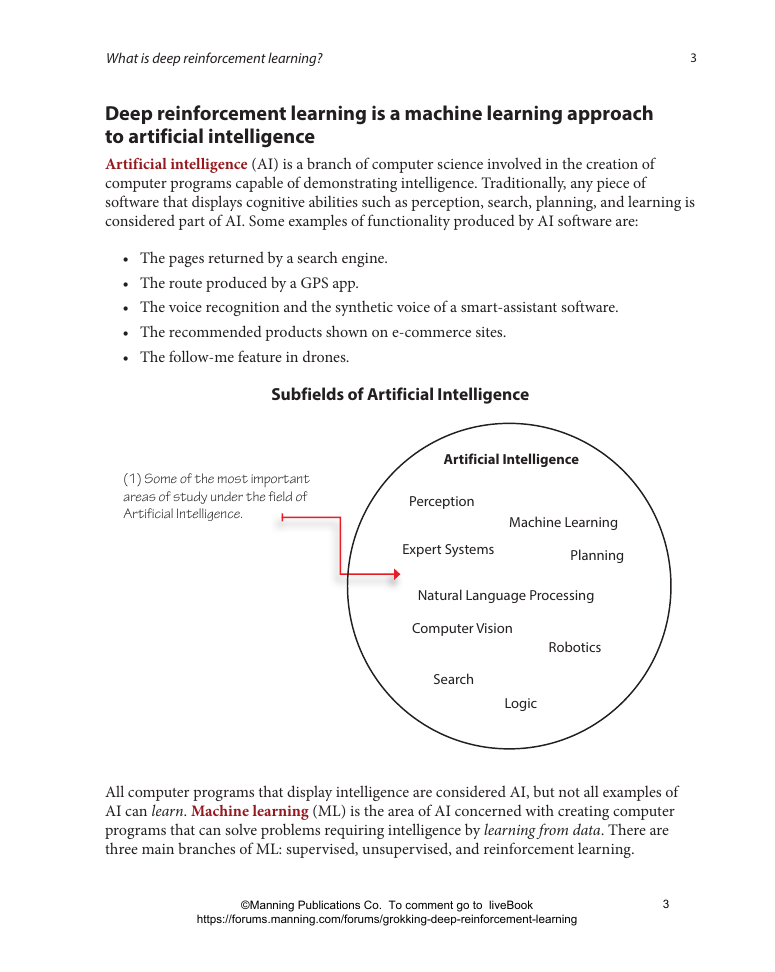

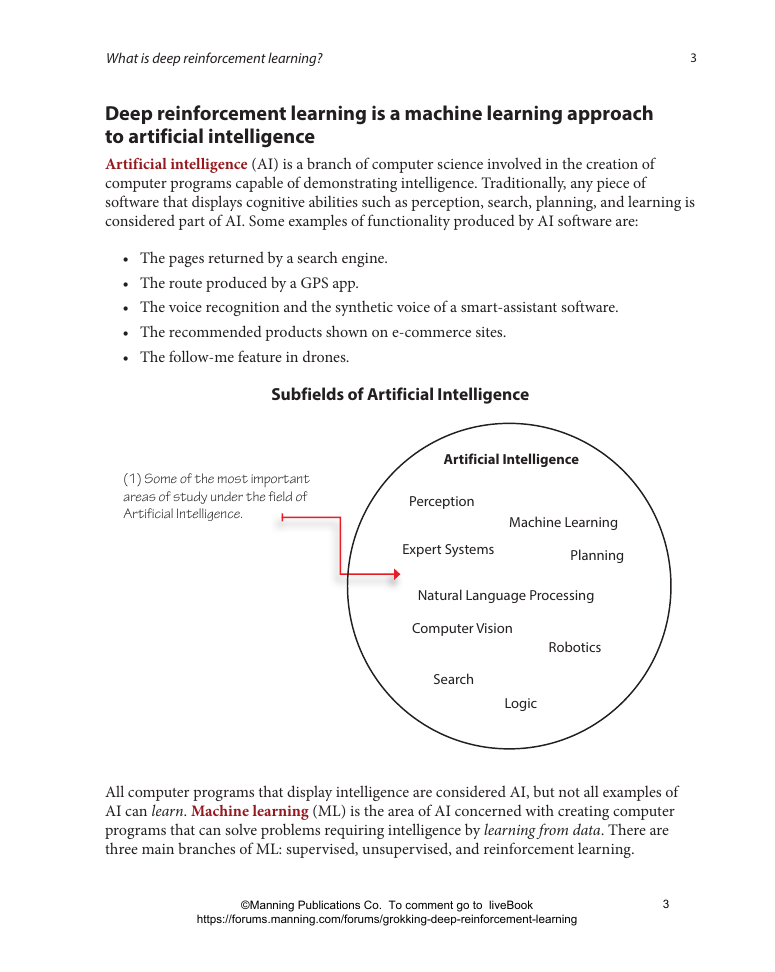

Deep reinforcement learning is a machine learning approach to artificial intelligence

Deep reinforcement learning is concerned with creating computer programs

Deep reinforcement learning agents can solve problems that require intelligence

Deep reinforcement learning agents improve their behavior through trial-and-error learning

Deep reinforcement learning agents learn from sequential feedback

Deep reinforcement learning agents learn from evaluative feedback

Deep reinforcement learning agents learn from sampled feedback

Deep reinforcement learning agents utilize powerful non-linear function approximation

The past, present, and future of deep reinforcement learning

Recent history of artificial intelligence and deep reinforcement learning

Artificial intelligence winters

The current state of artificial intelligence

Progress in deep reinforcement learning

Opportunities ahead

The suitability of deep reinforcement learning

What are the pros and cons?

Deep reinforcement learning's strengths

Deep reinforcement learning's weaknesses

Setting clear two-way expectations

What to expect from the book?

How to get the most out of the book?

Deep reinforcement learning development environment

Summary

Chapter 2: Mathematical foundations of reinforcement learning

Components of reinforcement learning

Examples of problems, agents, and environments

The agent: The decision-maker

The environment: Everything else

Agent-environment interaction cycle

MDPs: The engine of the environment

States: Specific configurations of the environment

Action: A mechanism to influence the environment

Transition function: Consequences of agent actions

Reward signal: Carrots and sticks

Horizon: Time changes what's optimal

Discount: The future is uncertain, value it less

Extensions to MDPs

Putting it all together

Summary

Chapter 3: Balancing immediate and long-term goals

The objective of a decision-making agent

Policies: Per-state action prescriptions

State-value function: What to expect from here?

Action-value function: What to expect from here if I do this?

Action-advantage function: How much better if I do that?

Optimality

Planning optimal sequences of actions

Policy Evaluation: Rating policies

Policy Improvement: Using ratings to get better

Policy Iteration: Improving upon improved behaviors

Value Iteration: Improving behaviors early

Summary

Chapter 4: Balancing the gathering and utilization of information

The challenge of interpreting evaluative feedback

Bandits: Single state decision problems

Regret: The cost of exploration

Approaches to solving MAB environments

Greedy: Always exploit

Random: Always explore

Epsilon-Greedy: Almost always greedy and sometimes random

Decaying Epsilon-Greedy: First maximize exploration, then exploitation

Optimistic Initialization: Start off believing it's a wonderful world

Strategic exploration

SoftMax: Select actions randomly in proportion to their estimates

UCB: It's not about just optimism; it's about realistic optimism

Thompson Sampling: Balancing reward and risk

Summary

Chapter 5: Evaluating agents' behaviors

Learning to estimate the value of policies

First-visit Monte-Carlo: Improving estimates after each episode

Every-visit Monte-Carlo: A different way of handling state visits

Temporal-Difference Learning: Improving estimates after each step

Learning to estimate from multiple steps

N-step TD Learning: Improving estimates after a couple of steps

Forward-view TD(λ): Improving estimates of all visited states

TD(λ): Improving estimates of all visited states after each step

Summary

Chapter 6: Improving agents' behaviors

The anatomy of reinforcement learning agents

Most agents gather experience samples

Most agents estimate something

Most agents improve a policy

Generalized Policy Iteration

Learning to improve policies of behavior

Monte-Carlo Control: Improving policies after each episode

Sarsa: Improving policies after each step

Decoupling behavior from learning

Q-Learning: Learning to act optimally, even if we choose not to

Double Q-Learning: A max of estimates for an estimate of a max

Summary

Chapter 7: Achieving goals more effectively and efficiently

Learning to improve policies using robust targets

Sarsa(λ): Improving policies after each step based on multi-step estimates

Watkins's Q(λ): Decoupling behavior from learning, again

Agents that interact, learn and plan

Dyna-Q: Learning sample models

Trajectory Sampling: Making plans for the immediate future

Summary

Chapter 8: Introduction to value-based deep reinforcement learning

The kind of feedback deep reinforcement learning agents use

Deep reinforcement learning agents deal with sequential feedback

But, if it is not sequential, what is it?

Deep reinforcement learning agents deal with evaluative feedback

But, if it is not evaluative, what is it?

Deep reinforcement learning agents deal with sampled feedback

But, if it is not sampled, what is it?

Introduction to function approximation for reinforcement learning

Reinforcement learning problems can have high-dimensional state and action spaces

Reinforcement learning problems can have continuous state and action spaces

There are advantages when using function approximation

NFQ: The first attempt at value-based deep reinforcement learning

First decision point: Selecting a value function to approximate

Second decision point: Selecting a neural network architecture

Third decision point: Selecting what to optimize

Fourth decision point: Selecting the targets for policy evaluation

Fifth decision point: Selecting an exploration strategy

Sixth decision point: Selecting a loss function

Seventh decision point: Selecting an optimization method

Things that could (and do) go wrong

Summary

Chapter 9: More stable value-based methods

DQN: Making reinforcement learning more like supervised learning

Common problems in value-based deep reinforcement learning

Using target networks

Using larger networks

Using experience replay

Using other exploration strategies

Double DQN: Mitigating the overestimation of action-value functions

The problem of overestimation, take two

Separating action selection and action evaluation

A solution

A more practical solution

A more forgiving loss function

Things we can still improve on

Summary

Chapter 10: Sample-efficient value-based methods

Dueling DDQN: A reinforcement-learning-aware neural network architecture

Reinforcement learning is not a supervised learning problem

Nuances of value-based deep reinforcement learning methods

Advantage of using advantages

A reinforcement-learning-aware architecture

Building a dueling network

Reconstructing the action-value function

Continuously updating the target network

What does the dueling network bring to the table?

PER: Prioritizing the replay of meaningful experiences

A smarter way to replay experiences

Then, what is a good measure of "important" experiences?

Greedy prioritization by TD error

Sampling prioritized experiences stochastically

Proportional prioritization

Rank-based prioritization

Prioritization bias

Summary

Chapter 11: Policy-gradient and actor-critic methods

REINFORCE: Outcome-based policy learning

Introduction to policy-gradient methods

Advantages of policy-gradient methods

Learning policies directly

Reducing the variance of the policy gradient

VPG: Learning a value function

Further reducing the variance of the policy gradient

Learning a value function

Encouraging exploration

A3C: Parallel policy updates

Using actor-workers

Using n-step estimates

Non-blocking model updates

GAE: Robust advantage estimation

Generalized advantage estimation

A2C: Synchronous policy updates

Weight-sharing model

Restoring order in policy updates

Summary

Chapter 12: Advanced actor-critic methods

DDPG: Approximating a deterministic policy

DDPG uses lots of tricks from DQN

Learning a deterministic policy

Exploration with deterministic policies

TD3: State-of-the-art improvements over DDPG

Double learning in DDPG

Smoothing the targets used for policy updates

Delaying updates

SAC: Maximizing the expected return and entropy

Adding the entropy to the Bellman equations

Learning the action-value function

Learning the policy

Automatically tuning the entropy coefficient

PPO: Restricting optimization steps

Using the same actor-critic architecture as A2C

Batching experiences

Clipping the policy updates

Clipping the value function updates

Summary
