Foundations and Trends R

Machine Learning

Vol. 5, No. 4 (2012) 287–364

c 2013 B. Kulis

DOI: 10.1561/2200000019

in

Metric Learning: A Survey

By Brian Kulis

Contents

1 Introduction

2 Distance Learning via Linear Transformations

2.1 A Simple Motivating Example

2.2 Basic Techniques and Notation

2.3 Regularized Transformation Learning

2.4 Representative Special Cases

2.5 Optimization Techniques

2.6 Summary

3 Nonlinear Models for Metric Learning

3.1 Kernelization of Linear Methods

3.2 Other Nonlinear Methods

4 Extensions

4.1 Metric Learning for Kernel Regression

4.2 Metric Learning for Ranking

4.3 Dimensionality Reduction and Data Visualization

4.4 Database Indexing

4.5 Domain Adaptation

288

292

292

293

295

300

310

320

321

322

333

339

339

340

341

342

343

�

5 Applications

5.1 Computer Vision

5.2 Text Analysis

5.3 Other Applications

6 Conclusions

A Representer Theorem Proof

Acknowledgments

References

345

345

348

350

352

354

358

359

�

Foundations and Trends R

Machine Learning

Vol. 5, No. 4 (2012) 287–364

c 2013 B. Kulis

DOI: 10.1561/2200000019

in

Metric Learning: A Survey

Brian Kulis

Ohio State University, CSE Department, Columbus, OH 43210, USA,

kulis@cse.ohio-state.edu

Abstract

The metric learning problem is concerned with learning a distance

function tuned to a particular task, and has been shown to be use-

ful when used in conjunction with nearest-neighbor methods and other

techniques that rely on distances or similarities. This survey presents

an overview of existing research in metric learning, including recent

progress on scaling to high-dimensional feature spaces and to data sets

with an extremely large number of data points. A goal of the survey is to

present as unified as possible a framework under which existing research

on metric learning can be cast. The first part of the survey focuses on

linear metric learning approaches, mainly concentrating on the class

of Mahalanobis distance learning methods. We then discuss nonlinear

metric learning approaches, focusing on the connections between the

nonlinear and linear approaches. Finally, we discuss extensions of met-

ric learning, as well as applications to a variety of problems in computer

vision, text analysis, program analysis, and multimedia.

�

1

Introduction

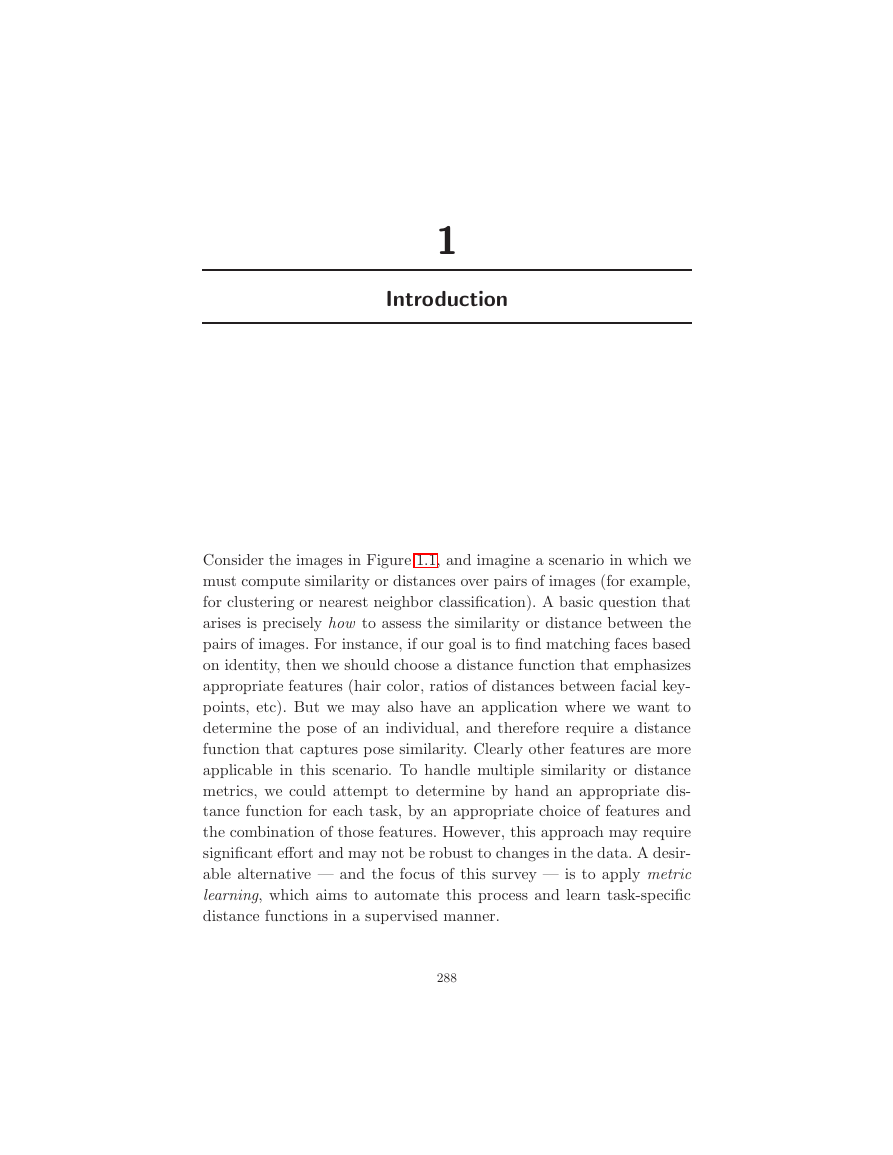

Consider the images in Figure 1.1, and imagine a scenario in which we

must compute similarity or distances over pairs of images (for example,

for clustering or nearest neighbor classification). A basic question that

arises is precisely how to assess the similarity or distance between the

pairs of images. For instance, if our goal is to find matching faces based

on identity, then we should choose a distance function that emphasizes

appropriate features (hair color, ratios of distances between facial key-

points, etc). But we may also have an application where we want to

determine the pose of an individual, and therefore require a distance

function that captures pose similarity. Clearly other features are more

applicable in this scenario. To handle multiple similarity or distance

metrics, we could attempt to determine by hand an appropriate dis-

tance function for each task, by an appropriate choice of features and

the combination of those features. However, this approach may require

significant effort and may not be robust to changes in the data. A desir-

able alternative — and the focus of this survey — is to apply metric

learning, which aims to automate this process and learn task-specific

distance functions in a supervised manner.

288

�

289

Fig. 1.1 Example face data set. In one application, our notion of “distance” between faces

may depend on the pose, whereas in another application it may depend on the identity.

A possible informal formulation of the metric learning problem

could be given as follows: given an input distance function d(x, y)

between objects x and y (for example, the Euclidean distance), along

with supervised information regarding an ideal distance, construct a

new distance function ˜d(x, y) which is “better” than the original dis-

tance function (we could also easily replace “distance” with “similar-

ity,” and d with s for some similarity function s(x, y)). This survey

will focus, for the most part, on learning distance functions ˜d(x, y) of

the form d(f (x), f (y)) for some function f — that is, we learn some

mapping f and utilize the original distance function over the mapped

data. We will denote this approach as global metric learning methods,

since they learn a single mapping f to be applied to all the data.

One possible drawback to the above definition of metric learning

is that it assumes that we have at least some supervision available

to learn the new distance; the fact that we assume supervision seems

somewhat arbitrary. Take, for instance, dimensionality reduction: linear

methods such as principal components analysis can be viewed as con-

structing a linear transformation P to be applied globally to the data,

�

290

Introduction

in an unsupervised manner. The resulting distance between objects

is therefore d(P x, P y), and one may claim that this is also a form of

metric learning. In contrast, the methods we study typically have super-

vised information regarding the structure of the desired distance func-

tion. For example, one popular form of supervision — relative distance

constraints — assumes we may not know the target distance between

pairs of instances, but does assume we know that object x is more sim-

ilar to y than it is to z. The fact that the supervised information is

a function of the ideal distance (or similarity) is key to distinguishing

the methods we study in this survey from other existing techniques

such as dimensionality reduction methods or classification techniques.

Furthermore, incorporating such supervision lends itself to interesting

algorithmic and analysis challenges, as we will see. Thus, in this sur-

vey we will mainly focus on metric learning as a supervised learning

problem.

We will break down global metric learning into two subclasses —

linear and nonlinear. For both cases, we will mainly focus on the

case where the input distance function is the Euclidean distance, i.e.,

d(x, y) = x − y2. In the linear case, we aim to learn a linear map-

that the learned distance is Gx − Gy2. This paradigm is by far the

ping based on supervision, which we can encode as a matrix G such

most prevalent in the metric learning community due to the fact that

many of the resulting formulations are tractable (at the least, local

solutions can be found easily). To achieve convexity, many methods

assume that G is square and full-rank, leading to convex optimization

problems with positive semi-definiteness constraints. We will discuss

such methods in Section 2.

We study nonlinear methods for global metric learning in Sec-

tion 3. In this case, the distance function is the more general d(x, y) =

f (x) − f (y)2. One of the most well-understood and effective tech-

niques for learning such nonlinear mappings is to extend linear methods

via kernelization. The basic idea is to learn a linear mapping in the

feature space of some potentially nonlinear function φ; that is, the dis-

tance function may be written d(x, y) = Gφ(x) − Gφ(y)2, where φ

may be a nonlinear function. While it may not appear that we have

gained anything by this, if we further assume that we can compute

�

291

the kernel function κ(x, y) = φ(x)T φ(y), then it turns out that we

may efficiently learn G in the input space using extensions of linear

techniques. Crucially, the resulting algorithms scale independently of

the dimensionality of the feature space of φ, allowing us to utilize

kernel functions whose embedding functions may be extremely high-

dimensional (or even infinite-dimensional, as in the Gaussian kernel).

A core result that we discuss is a representer theorem that demonstrates

when such metrics may be learned. Beyond kernelization, we discuss

some other proposed methods for nonlinear metric learning, including

methods based on neural networks.

The goal of the survey is to provide an overview of recent advances

in metric learning. For the sake of clarity, we will attempt to present as

much of the literature as possible under a unified framework. Of course,

given the broad scope of the metric learning problem, and the fact that

not all material fits neatly into such a unified presentation, we will have

to divert from the main presentation from time to time. In addition to

presenting the main metric learning models and algorithms that have

been studied, we also focus on several recent applications, including

applications from computer vision, multimedia, and text analysis. It is

our hope that this survey will synthesize much of the recent work on

metric learning, and inspire new algorithms and applications.

�

2

Distance Learning via Linear Transformations

We begin with the simplest and popular approach to learning metrics.

This approach is often called “Mahalanobis metric learning” in the

research community (though the metric learned is not the Mahalanobis

distance, as we will discuss), and attempts to learn distances of the form

Gx − Gy2 for some matrix G.

2.1 A Simple Motivating Example

As an example, consider the “wine” data set from the UCI machine

learning repository.1 This data set contains 13 attributes obtained by

chemical analyses of wines grown in Italy. The underlying classification

problem is to determine to which of three classes of wines each instance

belongs, based on these 13 attributes. Suppose we are interested in find-

ing a distance function over the space of these attributes. The simplest

and most obvious choice would be to compute the Euclidean distance

between the attribute vectors corresponding to two wines. Unfortu-

nately, a quick look at the underlying attributes shows why this will not

1 Available at http://archive.ics.uci.edu/ml/

292

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc