Foundations and Trends R in Robotics

Vol. 3, No. 4 (2012) 211–282

c 2014 C. Stachniss and W. Burgard

DOI: 10.1561/2300000013

Particle Filters for Robot Navigation

Cyrill Stachniss

University of Freiburg

stachnis@informatik.uni-freiburg.de

Wolfram Burgard

University of Freiburg

burgard@informatik.uni-freiburg.de

�

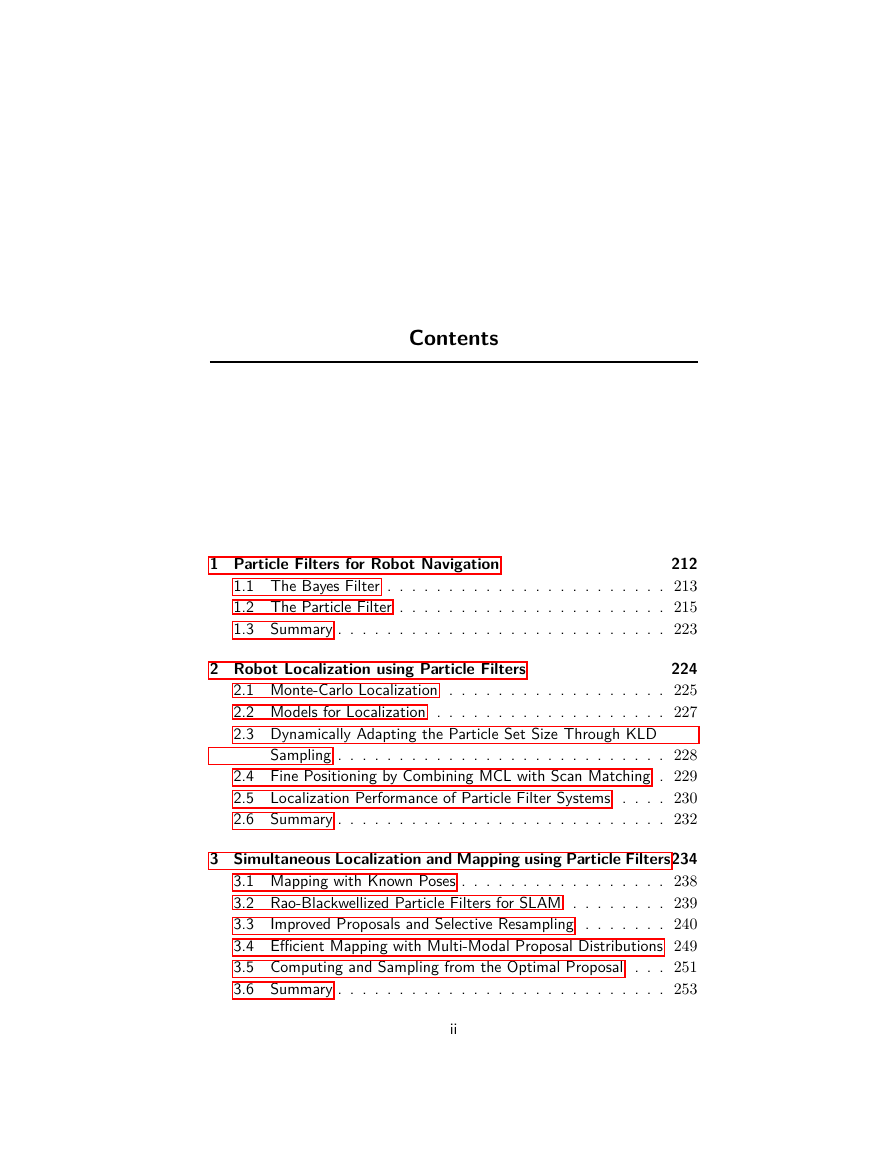

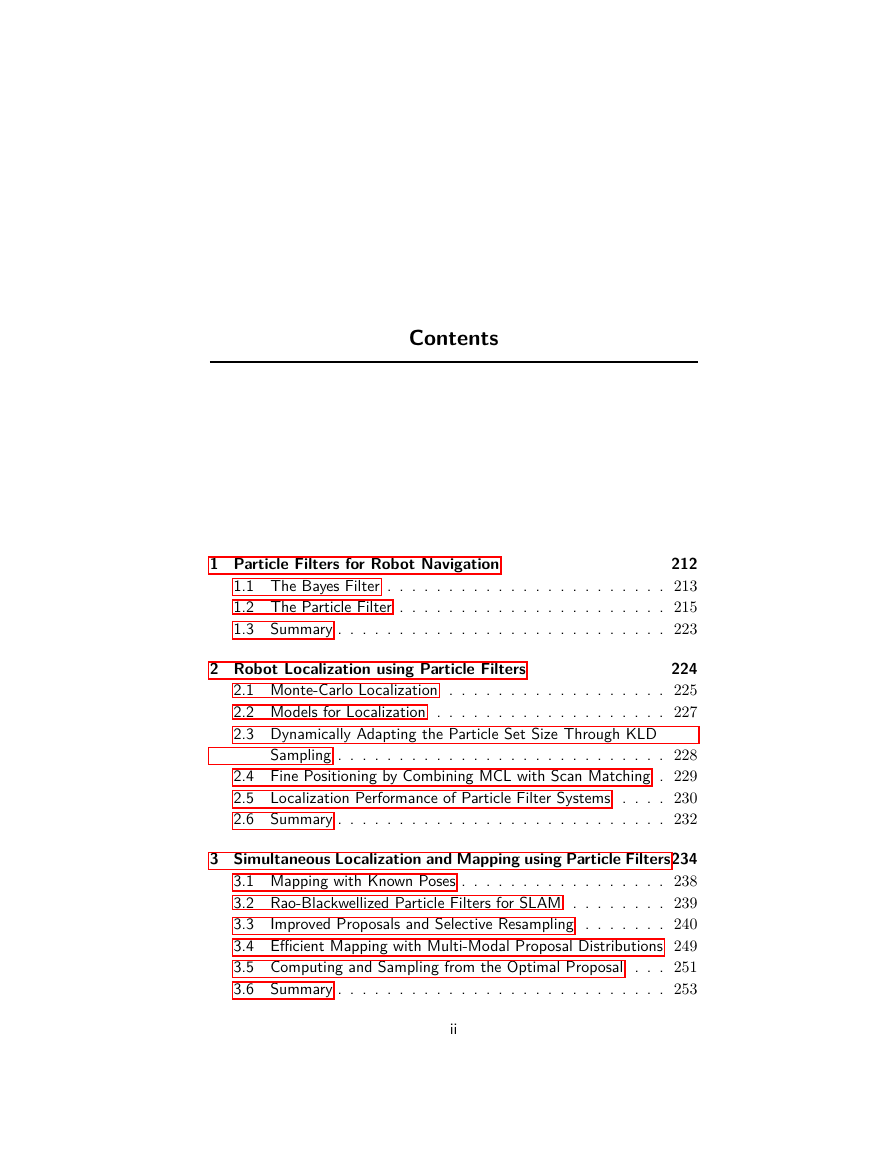

Contents

1 Particle Filters for Robot Navigation

212

1.1 The Bayes Filter . . . . . . . . . . . . . . . . . . . . . . . 213

1.2 The Particle Filter . . . . . . . . . . . . . . . . . . . . . . 215

1.3 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

2 Robot Localization using Particle Filters

224

2.1 Monte-Carlo Localization . . . . . . . . . . . . . . . . . . 225

2.2 Models for Localization . . . . . . . . . . . . . . . . . . . 227

2.3 Dynamically Adapting the Particle Set Size Through KLD

Sampling . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

2.4 Fine Positioning by Combining MCL with Scan Matching . 229

2.5 Localization Performance of Particle Filter Systems . . . . 230

2.6 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

3 Simultaneous Localization and Mapping using Particle Filters234

3.1 Mapping with Known Poses . . . . . . . . . . . . . . . . . 238

3.2 Rao-Blackwellized Particle Filters for SLAM . . . . . . . . 239

3.3

Improved Proposals and Selective Resampling . . . . . . . 240

3.4 Efficient Mapping with Multi-Modal Proposal Distributions 249

3.5 Computing and Sampling from the Optimal Proposal

. . . 251

3.6 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

ii

�

iii

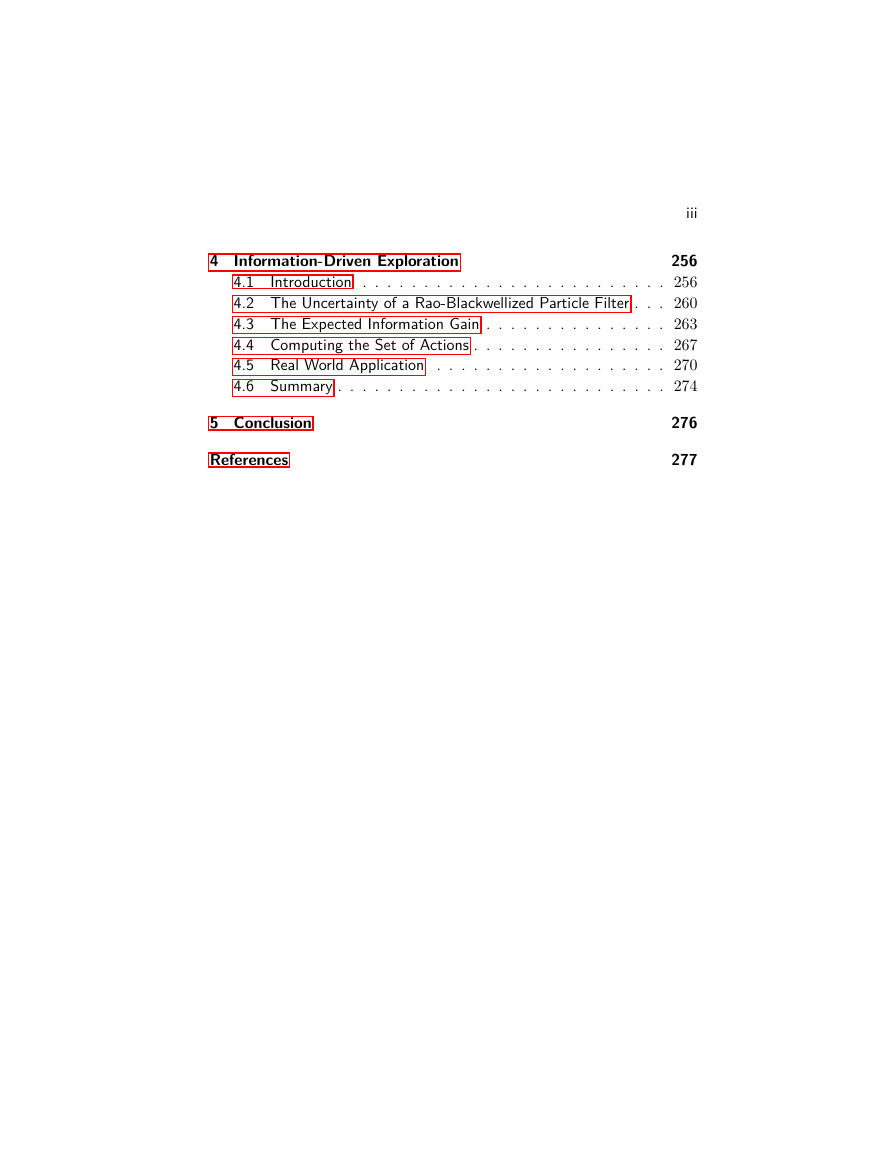

4 Information-Driven Exploration

256

4.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . 256

4.2 The Uncertainty of a Rao-Blackwellized Particle Filter . . . 260

4.3 The Expected Information Gain . . . . . . . . . . . . . . . 263

4.4 Computing the Set of Actions . . . . . . . . . . . . . . . . 267

4.5 Real World Application . . . . . . . . . . . . . . . . . . . 270

4.6 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 274

5 Conclusion

References

276

277

�

Abstract

Autonomous navigation is an essential capability for mobile robots. In

order to operate robustly, a robot needs to know what the environ-

ment looks like, where it is in its environment, and how to navigate in

it. This work summarizes approaches that address these three problems

and that use particle filters as their main underlying model for repre-

senting beliefs. We illustrate that these filters are powerful tools that

can robustly estimate the state of the robot and its environment and

that it is also well-suited to make decisions about how to navigate in

order to minimize the uncertainty of the joint belief about the robot’s

position and the state of the environment.

C. Stachniss and W. Burgard. Particle Filters for Robot Navigation. Foundations

and Trends R in Robotics, vol. 3, no. 4, pp. 211–282, 2012.

DOI: 10.1561/2300000013.

�

1

Particle Filters for Robot Navigation

The ability to reliably navigate is an essential capability for au-

tonomous robots. In order to perform effective navigation tasks, robots

typically need to know what the environment looks like, where they are

in the environment, and how to reach a target location. Thus, models of

the environment play an important role for effective navigation. Learn-

ing maps has therefore been a major research focus in the robotics

community over the last decades.

Three main capabilities are needed for traveling through an en-

vironment and for learning an appropriate representation. These are

mapping, localization, and motion generation. Mapping is the problem

of integrating the information gathered with the robot’s sensors into a

given representation. It can be described by the question “What does

the world look like?” In contrast to this, localization is the problem of

estimating the pose, i.e., the position and heading, of the robot relative

to a map. In other words, the robot has to answer the question, “Where

am I?” Finally, the motion generation problem involves the question of

where to go and how to efficiently calculate a path to guide a vehicle

to that location. Expressed as a simple question, this problem can be

described as, “Where should I go and how to reach that location?”

212

�

1.1. The Bayes Filter

213

Unfortunately, these three tasks cannot be solved independently

of each other. Before a robot can answer the question of what the

environment looks like given a set of observations, it needs to know

from which locations these observations have been made. At the same

time, it is hard to estimate the current position of a vehicle without

a map. Planning a path to a goal location is also tightly coupled with

the knowledge of what the environment looks like as well as with the

information about the current pose of the robot.

It is important to acknowledge that robots operate in unpredictable

environments and that the sensors and the actuation of robots are in-

herently uncertain. Therefore, robots need the ability to deal with un-

certainty and to explicitly model it, even for performing basic tasks.

Particle filters are one way for performing state estimation in the pres-

ence of uncertainty. They offer a series of attractive capabilities, includ-

ing the ability to deal with non-Gaussian distributions and nonlinear

sensor and motion models. This article describes particle filter-based

systems developed by the authors in the context of robot navigation.

1.1 The Bayes Filter

Before introducing the particle filter, we start with the Bayes filter as

the particle filter is a special implementation of the Bayes filter. The

Bayes filter is a general algorithm for estimating a belief given control

commands and observations. The goal is to estimate the distribution

about the current state xt at time t given all commands u1:t = u1, . . . , ut

and observations z1:t = z1, . . . , zt. The Bayes filter performs this esti-

mation in a recursive manner using a prediction step that takes into

account the current control command and a correction step that uses

the current observation.

In the prediction step, the filter computes a predicted belief bel(xt)

at time t based on the previous belief bel(xt−1) and a model that de-

scribes how the command ut changes the state from t − 1 to t. This

model p(xt | xt−1, ut) is called transition model or motion model.

In the correction step, the filter corrects the predicted belief by tak-

ing into account the observation. It does so by multiplying the predicted

�

214

Particle Filters for Robot Navigation

belief bel(xt) with the observation model p(zt | xt) and a normalizing

constant η. The normalizer ensures that the integral over all possible

states xt equals to 1 so that we obtain a probability distribution.

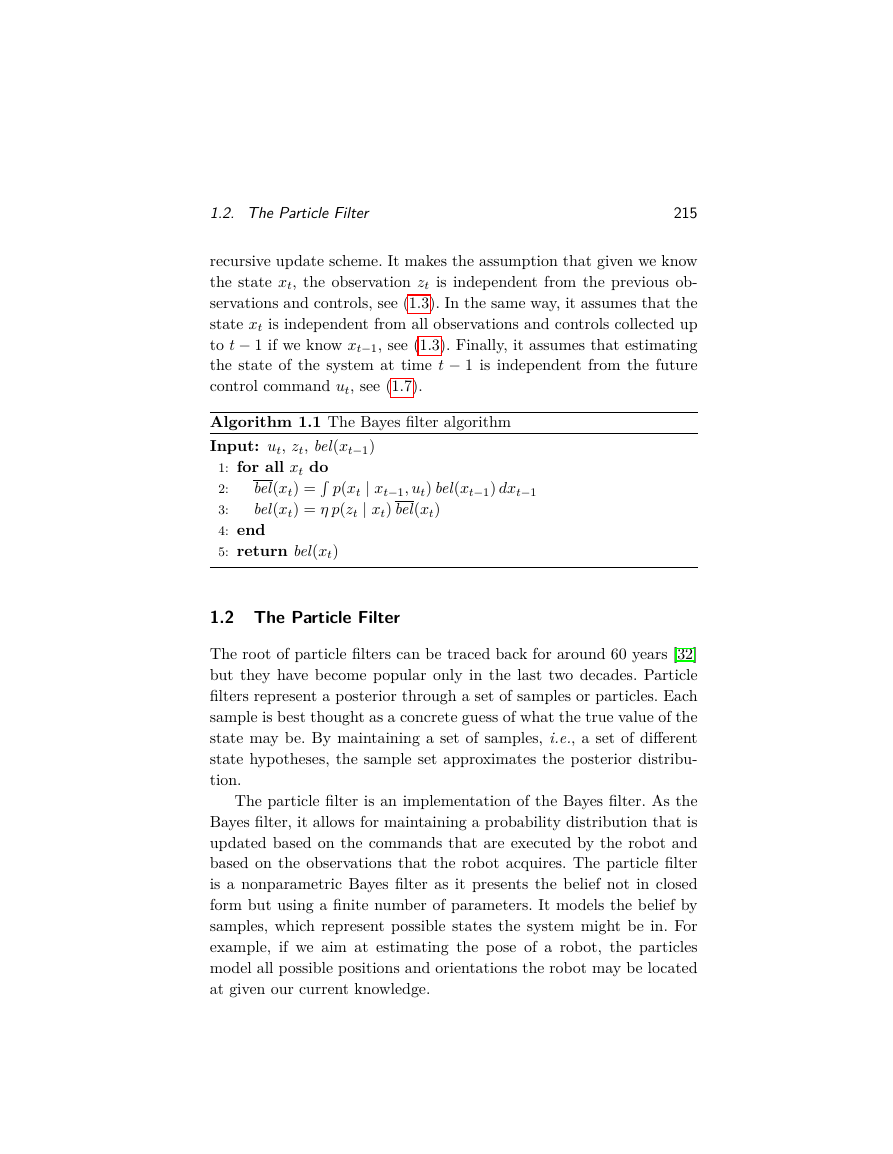

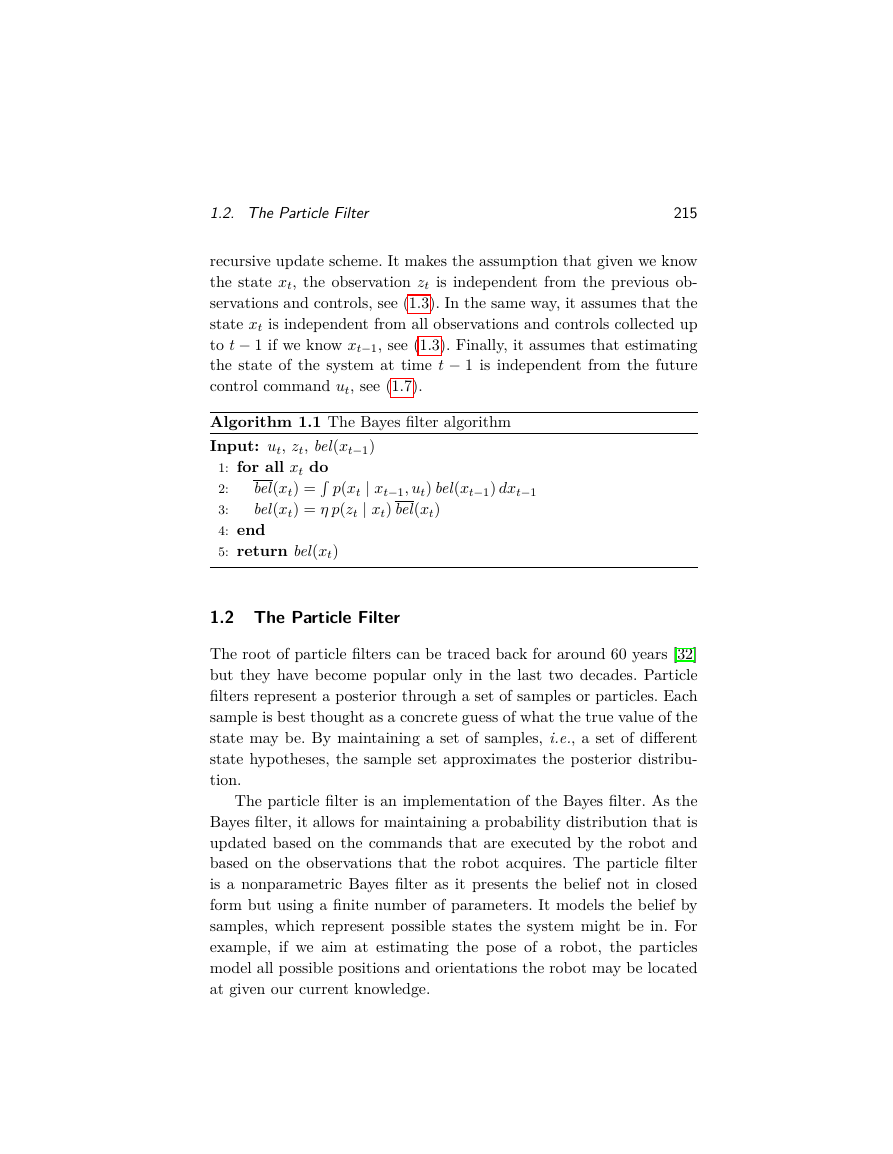

Algorithm 1.1 depicts the Bayes filter algorithm with the prediction

step in Line 2 and the correction step in Line 3. The algorithm computes

the belief at time t based on the previous belief at t−1. Thus, an initial

belief at time t = 0, the so called prior belief, serves as a starting point

for the estimation process. If no prior knowledge is available, the bel(x0)

is a uniform distribution.

The Bayes filter can be derived formally by using only Bayes’ rule,

Markov assumptions, and the law of total probability:

bel(xt)

Definition=

Bayes’ rule=

Markov=

Total prob.=

Markov=

Ignoring ut=

Definition=

p(xt | xt−1, z1:t−1, u1:t)

p(xt | z1:t, u1:t)

η p(zt | xt, z1:t−1, u1:t) p(xt | z1:t−1, u1:t)

Z

η p(zt | xt) p(xt | z1:t−1, u1:t)

η p(zt | xt)

p(xt−1 | z1:t−1, u1:t) dxt−1

Z

η p(zt | xt)

Z

η p(zt | xt)

p(xt | xt−1, ut) p(xt−1 | z1:t−1, u1:t) dxt−1

(1.1)

(1.2)

(1.3)

(1.4)

(1.5)

(1.6)

Z

|

η p(zt | xt)

|

p(xt | xt−1, ut) p(xt−1 | z1:t−1, u1:t−1) dxt−1(1.7)

}

p(xt | xt−1, ut) bel(xt−1) dxt−1

}

prediction step

{z

{z

correction step

(1.8)

This derivation shows that the belief bel(xt) can be estimated re-

cursively based on the previous belief bel(xt−1) and that is given by

the product of the prediction step and the correction step.

The Bayes filter makes several assumptions in order to derive the

�

1.2. The Particle Filter

215

recursive update scheme. It makes the assumption that given we know

the state xt, the observation zt is independent from the previous ob-

servations and controls, see (1.3). In the same way, it assumes that the

state xt is independent from all observations and controls collected up

to t − 1 if we know xt−1, see (1.3). Finally, it assumes that estimating

the state of the system at time t − 1 is independent from the future

control command ut, see (1.7).

Algorithm 1.1 The Bayes filter algorithm

Input: ut, zt, bel(xt−1)

1: for all xt do

2:

3:

4: end

5: return bel(xt)

bel(xt) =R p(xt | xt−1, ut) bel(xt−1) dxt−1

bel(xt) = η p(zt | xt) bel(xt)

1.2 The Particle Filter

The root of particle filters can be traced back for around 60 years [32]

but they have become popular only in the last two decades. Particle

filters represent a posterior through a set of samples or particles. Each

sample is best thought as a concrete guess of what the true value of the

state may be. By maintaining a set of samples, i.e., a set of different

state hypotheses, the sample set approximates the posterior distribu-

tion.

The particle filter is an implementation of the Bayes filter. As the

Bayes filter, it allows for maintaining a probability distribution that is

updated based on the commands that are executed by the robot and

based on the observations that the robot acquires. The particle filter

is a nonparametric Bayes filter as it presents the belief not in closed

form but using a finite number of parameters. It models the belief by

samples, which represent possible states the system might be in. For

example, if we aim at estimating the pose of a robot, the particles

model all possible positions and orientations the robot may be located

at given our current knowledge.

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc