CHAPTER 6

NONLINEAR OPTIMIZATION

Previous chapters dealt with linear optimization, specifically linear programming and linear

integer programming. This chapter considers optimization problems that include a nonlinear

objective and/or nonlinear constraints, where the decision variables are real continuous variables

and both the objective and constraint functions are continuous and differentiable. The linear

programming problem is a special case of this problem.

We first discuss the unconstrained optimization problem, where we optimize an objective

function without any constraints and without any sign restrictions for the decision variables.

Then we will consider constraints restricting the variable values. These constraints may be either

strict equality constraints or inequality constraints. We will also consider sign restrictions for

variables. These problems are fundamentally different from the linear programming problem.

First, unlike linear programming, there is no systematic procedure that can solve all nonlinear

programming problems. Second, there may be local extremum points, which may not necessarily

be global solutions to the problem. Third, local optima may occur on the boundary of the

decision space or they may be interior points. Therefore, the convenience of searching an

optimum solution among the corner solutions of a feasible polytope, as in linear programming, is

lost immediately. This makes nonlinear programming problems much harder to solve than linear

programming problems. Here we will develop only the optimality conditions for these problems

and use those conditions to solve small scale example problems. In the following chapters we

will develop nonlinear market equilibrium models and risk programming models. There the

nonlinear programming optimality conditions will be utilized for understanding the properties of

optimum solutions and explaining why those models are appropriate tools for the purpose.

Nonlinear programming solution algorithms will not be covered.

6.1. Unconstrained optimization

This is the simplest nonlinear optimization problem. Suppose we have a function f:

which assigns a real number f(x) to each element

decision variables. When n=1, we have the univariate optimization problem, which can be stated

as Maximize f(x). Suppose x* is a solution of this problem. This means that

. Here x=(x1 , x2, ...,xn) is a vector of n

or

for any xx*. For xx* we have

arguments hold for a local minimum as well. Therefore, we obtain the first order necessary

condition for a local extremum:

. These two conditions imply that

. The same

6.1

nnx)x*()x(ff-*0f(x)f(x)0**)()(xxxfxf0*)(0**)()(lim*xfxxxfxfxx0*)(xf0=′*)x(f�

.

Theorem: If a differentiable function attains its local minimum or local maximum at a point x*,

then we have

Note that this is only a necessary condition since both for minimum and maximum we have the

same condition. In order to determine the nature of a critical point, i.e., x* where

, we

need to look at the second order derivative. The sufficiency condition is obtained from Taylor’s

theorem stated below:

Theorem: Suppose f is a twice differentiable function around a point x*. Then we can express the

function as a polynomial as follows:

is determined by the sign of the second derivative (here note that

is an error term which goes to zero faster than the rate x approaches to x*.

where

If x* is a critical point, the term including the first derivative vanishes, in which case the sign of

the difference

both ½! and (x-x*)2 are positive, and the second derivative term dominates the error term since

goes to zero faster than x approaches to x*). Therefore, if

, we have a local maximum,

we have a local minimum. This gives us the second order sufficiency condition:

and if

Theorem: If f is a twice differentiable function and

maximum occurs at x*. If

Note that we have strictly positive or strictly negative second derivatives in the above theorem. If

the second order derivative at x* is zero, we need to look at higher order derivatives. The above

theorem is extended as follows:

Theorem: If f is a differentiable function of order n or higher and n is the first higher order

derivative with

at a critical point x*, then a local maximum or local minimum occurs

, then a local minimum occurs at x*.

at a critical point x*, then a local

, then a local minimum occurs at x* and if

at x* if n is even. If

maximum occurs at x*. If n is odd, then x* is a saddle (or inflection) point, that is neither a

maximum nor a minimum occurs at x*.

Example: Suppose f(x) = x2 - 6x +1. Then

point.

Example: f(x) = x2 - 2ln(x).

namely x=1 and x= -1. Ignore x= -1 because the logarithm function is not defined at that point.

at any x, in particular at x=3, therefore x=3 is a local minimum.

= 2x - 6 = 0 implies x*=3. This is a critical

= 2x - 2/x = 0, or x2=1. Therefore there are two critical points,

a local

=2+2/x2 = 4 at x=1 . Therefore, this point is local minimum.

6.2

0=′*)x(f0=′*)x(ff(x)f(x*)0<′*)x(f(*)0fx0<′*)x(f0>′*)x(f0≠*)x(fn(*)0nfx(*)0nfx*)x(f′2=′*)x(f*)x(f′*)x(f′2*)*)((''!21 *)*)((' *)( )(xxxfxxxfxfxf�

implies 4x3 - 3x2 = 0 or x=0 or x=3/4. The second

Example: f(x) = x4 - x3 + 2.

derivative is 12x2 - 6x which is positive at x=3/4. Therefore, there is a local minimum at x=3/4.

However, at x=0, the second derivative is also zero. Therefore, we cannot determine the nature of

the critical point x=0 by using the second derivative test. The third derivative is 24x - 6 = -6 at

x=0. Since the first higher order derivative which is not zero is an odd order derivative, we have

a point of inflection at x=0.

can be determined. The same arguments can be extended to functions of several variables. The

only difference is that here we deal with partial derivatives instead of the ordinary univariate

derivative. This problem can be approached as follows. Suppose f:

is a multi-variate

function, and x*=(x*1 , .., x*i , ..., x*n) is an optimum solution. Then

function of one variable, namely xi, where all variables but xi are fixed at their optimum values,

i.e.

The above theorem and examples illustrate how a local extremum of a univariate function

for all

is a

. Then the optimum value of h must be obtained at

, therefore

. However,

is nothing but the partial derivative of f with respect to xi , i.e.

. This is true for all i. Therefore, the first order necessary optimality condition

in the case of multivariate functions is as follows:

Theorem: If

is a differentiable function (i.e., first order partial derivatives exist and

continuous) and there is a local extremum at x*, then

for all i.

The above theorem can also be stated in a more compact form as

vector of first order partial derivatives (called gradient) of f computed at x*.

In a similar way to the univariate case, the second order sufficiency conditions can be derived

from Taylor’s theorem. This requires second order partial derivatives and the Hessian of f at x*.

The latter is a symmetric matrix formed by the second order partial derivatives of f, i.e.,

, where

is the

.

Theorem: A twice differentiable function

can be represented as a polynomial around

6.3

()0fxn**1()(,... ,.., )iinhxfxxx*jjxxij≠*ix0=*)x('hi'hxiifh'(x*)(*)x:nf0=x∂∂*)(xfi0(*)x(*)x221212212............)(nnnxfxxfxxfxfxH:nf�

a point x* as follows:

, which means ‘approximately equal to’.

can be made arbitrarily small by selecting x sufficiently close to x*.

is the error term. We can state the above theorem without this term by replacing

where

The symbol

the strict equality with

Suppose x* is a critical point. Therefore, by the previous theorem the gradient term theorem

vanishes at x*. Then, the sign of f(x) - f(x*) is determined by the quadratic form defined by the

Hessian H. If H is a negative definite matrix, then the quadratic form is negative which means

that f(x) - f(x*) <0, or x* is a local maximum. If H is positive definite then x* is a local

minimum. If H(x*) is indefinite (in this case we can find different values of y for which the sign

of y′Hy can be negative, zero, or positive, where y=x-x*). This leads to the second order

sufficiency test:

Theorem: Suppose x* is a critical point of a twice differentiable function

is negative (positive) definite, then a local maximum (minimum) occurs at x* . If H(x*) is

negative (positive) semi-definite in a neighborhood of x*, again a local maximum (minimum)

occurs at x*. If H(x*) is indefinite, then x* is a saddle point.

Note the difference between the two statements in the above theorem. In the first case, we test

the strict definiteness of the function at the critical point x*. In the second case we test the semi-

definiteness not only at the critical point but also at any point in a small neighborhood of it.

Although the second condition is weaker, it has to be satisfied in an open set around x*, therefore

it may not be as practical as it seems.

Example: Suppose f(x,y,z)=x2 - xy + y2 - 3y + 2z2 -z. The first order optimality conditions are:

The solution of the above linear system is x=1, y=2, z=1/4. To determine the nature of this

critical point, form the Hessian and determine its definiteness. The Hessian at (1,2,1/4) (in

general at any point) is :

2x - y =0

-x + 2y -3 = 0

4z -1 =0

. If H(x*)

which is positive definite because all the leading principal minors of order 1, 2 and 3 are positive

(they are 2, 3, and 12, respectively). Therefore, a minimum occurs at this point.

6.4

*)*)((**)(21*)*)((*)()(xxxxHxxxxxxxfffε:nf400021012H�

Example: f(x,y) = 4x2 - 4xy + 2y2. The first order optimality conditions are:

the two conditions solved simultaneously give x=0 and y=0 as the only critical point. To

determine the nature of this critical point, form the Hessian and determine its definiteness. The

Hessian at (0,0) (in general at any point) is :

8x - 4y =0

-4x + 4y = 0

which is positive definite because the leading principal minors of order 1 and 2 are 8 and 16,

which are both positive. Therefore, a minimum occurs at this point.

In these two simple problems, the first order conditions are given by linear equations because the

objective functions are quadratic. When a non-quadratic objective function is involved, in

general a nonlinear set of equations arises and solving that set of equations may be a serious

problem itself. Nonlinear programming algorithms find the optimal solution (if exists and

unique) using iterative procedures, such as steepest ascend and steepest descend methods used to

solve a relative maximum or a relative minimum. This method is based on the concept of

directional derivative.

Definition: The directional derivative of f at a given point a in the direction of a unit vector u is

defined by

Example: Suppose

. To determine the directional derivative at (0,0,0) in the

direction of v=(1,-1,1), we first find the unit vector which has the same direction as the given

and denoted by Duf(a).

vector, (1,-1,1). Since

, the unit vector we need is

.

The gradient of

at (0,0,0) is (1,1,1). Therefore the directional derivative is:

This means that a slight movement (displacement) by tu from the point a in the direction of u

creates a change in the function value by about (0.577)t. For instance, if t=0.1, i.e. for the

displacement

, the change in the function value is approximately 0.0577.

Theorem: The maximum change occurs in the value of a function f when we move away from a

point a in the direction of the gradient vector (i.e. the directional derivative is maximum when u

6.5

4448H()f.auxyzf(x,y,z)e31)1(1222v)31,31,31(uxyzf(x,y,z)e577.031)31,31,31).(1,1,1(.uf)31.0,31.0,31.0(ut�

.

=

The above result is a direct consequence of an analytic geometry theorem which states that u.v =

, where

is maximum when

, the scalar product is largest if the two vectors are aligned. Therefore, the directional

is the angle between vectors u and v. Since

derivative will be maximum if the gradient vector and the direction vector have the same

direction (or the angle between them is zero). This result is particularly important in

optimization because it tells us that we would move in the direction of the gradient vector at any

point in order to achieve the highest gain in the value of the function that we want to maximize

(we choose the negative gradient direction if we want to minimize the function value). This

result is the basis of the steepest descend (and ascend) algorithms.

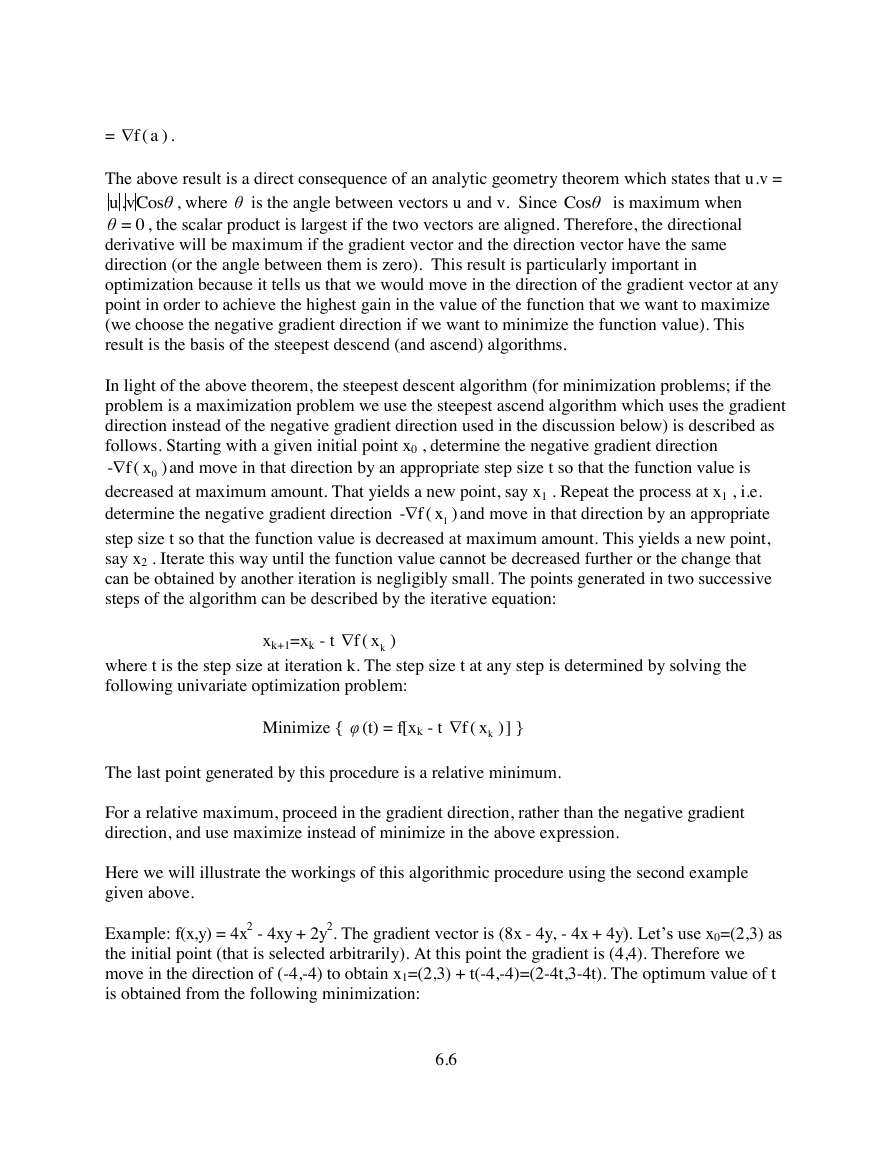

In light of the above theorem, the steepest descent algorithm (for minimization problems; if the

problem is a maximization problem we use the steepest ascend algorithm which uses the gradient

direction instead of the negative gradient direction used in the discussion below) is described as

follows. Starting with a given initial point x0 , determine the negative gradient direction

and move in that direction by an appropriate step size t so that the function value is

decreased at maximum amount. That yields a new point, say x1 . Repeat the process at x1 , i.e.

determine the negative gradient direction

and move in that direction by an appropriate

step size t so that the function value is decreased at maximum amount. This yields a new point,

say x2 . Iterate this way until the function value cannot be decreased further or the change that

can be obtained by another iteration is negligibly small. The points generated in two successive

steps of the algorithm can be described by the iterative equation:

xk+1=xk - t

where t is the step size at iteration k. The step size t at any step is determined by solving the

following univariate optimization problem:

Minimize {

(t) = f[xk - t

] }

The last point generated by this procedure is a relative minimum.

For a relative maximum, proceed in the gradient direction, rather than the negative gradient

direction, and use maximize instead of minimize in the above expression.

Here we will illustrate the workings of this algorithmic procedure using the second example

given above.

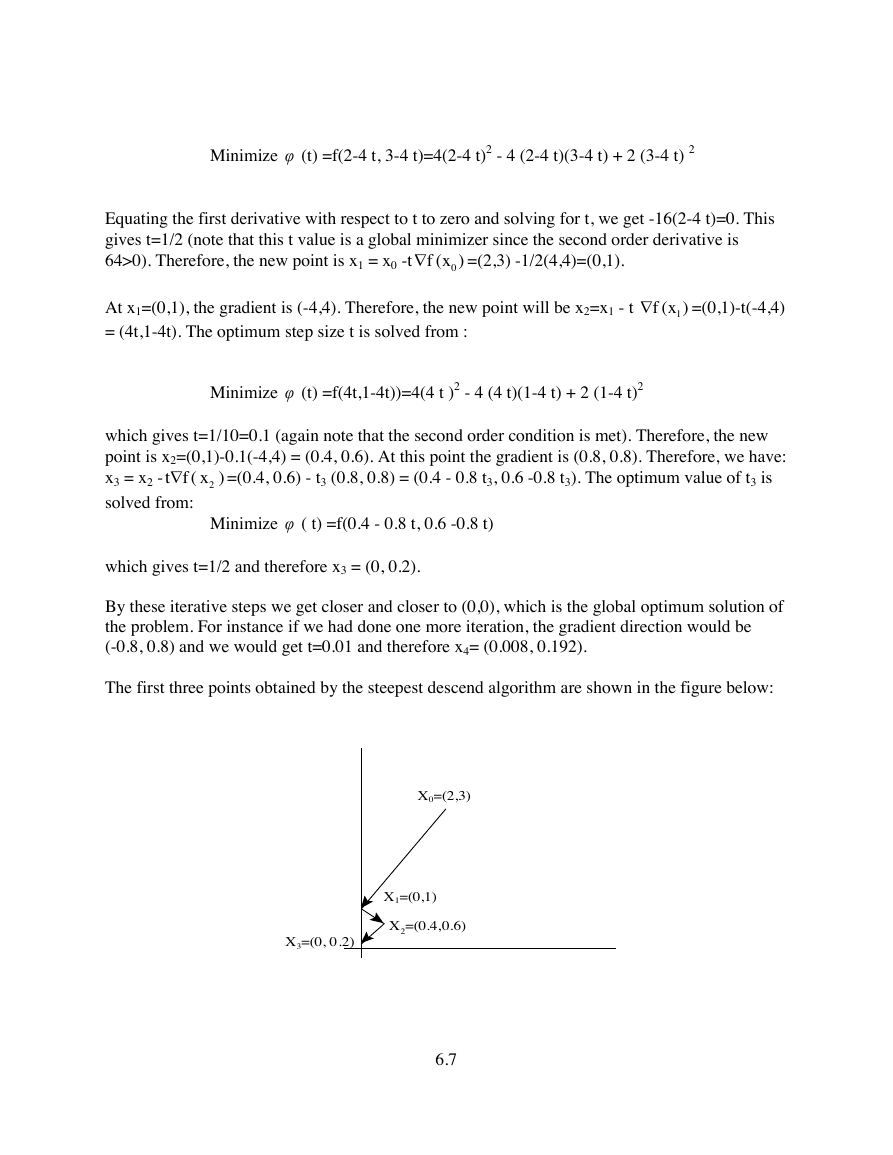

Example: f(x,y) = 4x2 - 4xy + 2y2. The gradient vector is (8x - 4y, - 4x + 4y). Let’s use x0=(2,3) as

the initial point (that is selected arbitrarily). At this point the gradient is (4,4). Therefore we

move in the direction of (-4,-4) to obtain x1=(2,3) + t(-4,-4)=(2-4t,3-4t). The optimum value of t

is obtained from the following minimization:

6.6

f()aθosv.uCθθosC0=θ0-f()x1-f()xkf()xφkf()x�

=(0,1)-t(-4,4)

Minimize

=(2,3) -1/2(4,4)=(0,1).

(t) =f(2-4 t, 3-4 t)=4(2-4 t)2 - 4 (2-4 t)(3-4 t) + 2 (3-4 t) 2

Equating the first derivative with respect to t to zero and solving for t, we get -16(2-4 t)=0. This

gives t=1/2 (note that this t value is a global minimizer since the second order derivative is

64>0). Therefore, the new point is x1 = x0 -t

At x1=(0,1), the gradient is (-4,4). Therefore, the new point will be x2=x1 - t

= (4t,1-4t). The optimum step size t is solved from :

which gives t=1/10=0.1 (again note that the second order condition is met). Therefore, the new

point is x2=(0,1)-0.1(-4,4) = (0.4, 0.6). At this point the gradient is (0.8, 0.8). Therefore, we have:

x3 = x2 -

solved from:

which gives t=1/2 and therefore x3 = (0, 0.2).

By these iterative steps we get closer and closer to (0,0), which is the global optimum solution of

the problem. For instance if we had done one more iteration, the gradient direction would be

(-0.8, 0.8) and we would get t=0.01 and therefore x4= (0.008, 0.192).

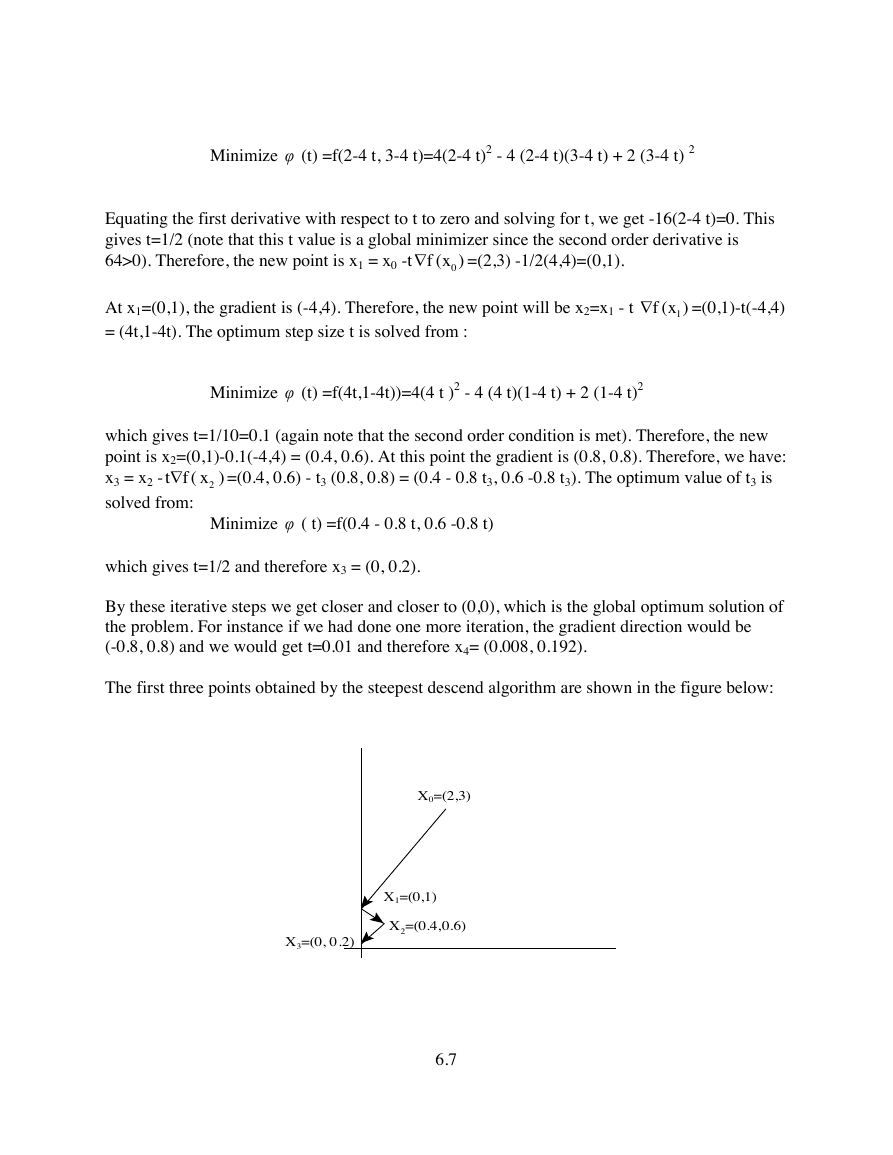

The first three points obtained by the steepest descend algorithm are shown in the figure below:

=(0.4, 0.6) - t3 (0.8, 0.8) = (0.4 - 0.8 t3, 0.6 -0.8 t3). The optimum value of t3 is

(t) =f(4t,1-4t))=4(4 t )2 - 4 (4 t)(1-4 t) + 2 (1-4 t)2

Minimize

( t) =f(0.4 - 0.8 t, 0.6 -0.8 t)

Minimize

6.7

φ0()fx1()fxφ2tf()xφX0=(2,3)X1=(0,1)X2=(0.4,0.6)X3=(0, 0.2)�

As may have been noticed, the direction vectors are perpendicular to each other. This is not a

coincidence. The steepest descend (or ascend) method always progresses in this way, i.e. always

finds perpendicular direction vectors to the previous direction vector.

Exercise: Solve the problem Maximize (

optimality conditions and then the steepest ascend algorithm (note that the problem is now

maximize, therefore use the gradient direction for determining a new point when approaching the

) using the first order and second order

optimal solution). The first approach should result in

. When using the steepest

ascend algorithm, start with

and generate the next five points [Answer:

,

,

,

,

,

]

6.2 Optimization with strict equality constraints

Nonlinear constrained optimization has fundamentally different properties than linear

programming in that the optimum solution can be an interior point or a boundary point. Even if

the constraints are linear, an optimum solution can occur at a boundary point which may not

necessarily be a corner solution (vertex). Therefore, as mentioned at the outset, the convenience

of linear programming, namely searching only the corner solutions and finding the best one, is

lost immediately. This is explained in the figure below. Suppose the solution space is the set of

points satisfying g(x)≤b, which are represented by all points below the graph of g in the figure.

Consider a given value of the objective function c and the set of all points x such that f(x)=c.

This set is called a level curve or an isoquant (such as an isoutility curve or a production or cost

isoquant). Three such level curves are shown in the figure. Suppose the nested closed curves

indicate higher isoquants and suppose we want to maximize f(x). Thus, the optimum solution of

the problem

would be the interior point shown by x1*. At a point like this all directions are feasible (if the

step size is small enough, i.e. we stay within the feasible region by taking a small displacement

vector in any given direction), and therefore the directional derivatives must all be zero. In

particular the partial derivatives must all be zero. However, the isoquants may be such that the

optimum point can be on the boundary of the feasible region, as indicated by the dashed

isoquants and the point x2*.

Max f(x)

s.t.

6.8

22xxyyy12*(,)33x0(1,1)x0(1,1)x11(,1)2x213(,)24x333(,)84x4311(,)816x51311(,)3216xg()bx�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc