TensorFlow-Slim

TF-Slim is a lightweight library for defining, training and evaluating complex models
in TensorFlow. Components of TF-Slim can be freely mixed with native TensorFlow, as
well as other frameworks, such as tf.contrib.learn.

Usage

import tensorflow.contrib.slim as slim

Why TF-Slim?

TF-Slim is a library that makes defining, training and evaluating neural networks

simple:

• Allows the user to define models much more compactly by eliminating
boilerplate code. This is accomplished through the use of argument
scoping and numerous high level layers and variables. These tools increase
readability and maintainability, reduce the likelihood of an error from
copy-and-pasting hyperparameter values, and simplify hyperparameter tuning.

• Makes developing models simple by providing commonly used regularizers.

• Several widely used computer vision models (e.g., VGG, AlexNet) have been

developed in slim, and are available to users. These can either be used as

black boxes, or can be extended in various ways, e.g., by adding "multiple

heads" to different internal layers.

• Slim makes it easy to extend complex models, and to warm start training

algorithms by using pieces of pre-existing model checkpoints.

What are the various components of TF-Slim?

TF-Slim is composed of several parts which were designed to exist independently. These
include the following main pieces (explained in detail below).

• arg_scope: provides a new scope named arg_scope that allows a user to define
default arguments for specific operations within that scope.
• data: contains TF-Slim's dataset definition, data providers, parallel_reader,
and decoding utilities.
• evaluation: contains routines for evaluating models.
• layers: contains high level layers for building models using TensorFlow.
• learning: contains routines for training models.
• losses: contains commonly used loss functions.
• metrics: contains popular evaluation metrics.
• nets: contains popular network definitions such as VGG and AlexNet models.
• queues: provides a context manager for easily and safely starting and closing
QueueRunners.
• regularizers: contains weight regularizers.
• variables: provides convenience wrappers for variable creation and
manipulation.

1. Defining Models

Models can be succinctly defined using TF-Slim by combining its variables, layers and

scopes. Each of these elements is defined below.

1.1 Variables

Creating variables in native TensorFlow requires either a predefined value or an
initialization mechanism (e.g. randomly sampled from a Gaussian). Furthermore, if a
variable needs to be created on a specific device, such as a GPU, the specification
must be made explicit. To reduce the code required for variable creation, TF-Slim
provides a set of thin wrapper functions in variables.py which allow callers to easily
define variables.

For example, to create a weights variable, initialize it using a truncated normal
distribution, regularize it with an l2_loss and place it on the CPU, one need only
declare the following:

weights = slim.variable('weights',
                        shape=[10, 10, 3, 3],
                        initializer=tf.truncated_normal_initializer(stddev=0.1),
                        regularizer=slim.l2_regularizer(0.05),
                        device='/CPU:0')
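To make the initializer and regularizer arguments less opaque, here is a small pure-Python sketch of what they compute. It is an illustration only, assuming the usual TensorFlow conventions that a truncated normal re-draws samples lying more than two standard deviations from the mean and that an L2 regularizer contributes scale * sum(w^2) / 2 to the loss. The helper names `truncated_normal` and `l2_penalty` are hypothetical and are not part of TF-Slim.

```python
import random


def truncated_normal(count, stddev, rng):
    """Draws `count` samples from N(0, stddev^2), re-drawing any value that
    falls more than two standard deviations from the mean."""
    samples = []
    while len(samples) < count:
        value = rng.gauss(0.0, stddev)
        if abs(value) <= 2.0 * stddev:
            samples.append(value)
    return samples


def l2_penalty(weights, scale):
    """Returns scale * sum(w^2) / 2, the term added to the training loss."""
    return scale * sum(w * w for w in weights) / 2.0


rng = random.Random(0)  # fixed seed so the run is reproducible
# Same number of elements as the [10, 10, 3, 3] variable above, flattened.
weights = truncated_normal(10 * 10 * 3 * 3, stddev=0.1, rng=rng)
penalty = l2_penalty(weights, scale=0.05)
```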

Note that in native TensorFlow, there are two types of variables: regular variables and

local (transient) variables. The vast majority of variables are regular variables: once

created, they can be saved to disk using a saver. Local variables are those variables

that only exist for the duration of a session and are not saved to disk.

TF-Slim further differentiates variables by defining model variables, which are
variables that represent parameters of a model. Model variables are trained or
fine-tuned during learning and are loaded from a checkpoint during evaluation or
inference. Examples include the variables created by a slim.fully_connected or
slim.conv2d layer. Non-model variables are all other variables that are used during
learning or evaluation but are not required for actually performing inference. For
example, the global_step is a variable used during learning and evaluation, but it is
not actually part of the model. Similarly, moving average variables might mirror model
variables, but the moving averages are not themselves model variables.

Both model variables and regular variables can be easily created and retrieved via
TF-Slim:

# Model Variables
weights = slim.model_variable('weights',
                              shape=[10, 10, 3, 3],
                              initializer=tf.truncated_normal_initializer(stddev=0.1),
                              regularizer=slim.l2_regularizer(0.05),
                              device='/CPU:0')
model_variables = slim.get_model_variables()

# Regular variables
my_var = slim.variable('my_var',
                       shape=[20, 1],
                       initializer=tf.zeros_initializer())
regular_variables_and_model_variables = slim.get_variables()

How does this work? When you create a model variable via TF-Slim's layers or directly

via the slim.model_variable function, TF-Slim adds the variable to

the tf.GraphKeys.MODEL_VARIABLES collection. What if you have your own custom layers

or variable creation routine but still want TF-Slim to manage or be aware of your

model variables? TF-Slim provides a convenience function for adding the model

variable to its collection:

my_model_variable = CreateViaCustomCode()

# Letting TF-Slim know about the additional variable.

slim.add_model_variable(my_model_variable)
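The collection bookkeeping described above can be pictured with a toy registry. This is a pure-Python sketch under simplifying assumptions, not TF-Slim's code: variables are plain dictionaries, `_COLLECTIONS` is a hypothetical stand-in for the graph collections, and the function names only mirror the Slim calls they imitate.

```python
# Toy stand-ins for graph collections: collection name -> list of variables.
_COLLECTIONS = {"variables": [], "model_variables": []}


def variable(name, shape):
    """Creates a regular variable and records it in the general collection."""
    var = {"name": name, "shape": shape}
    _COLLECTIONS["variables"].append(var)
    return var


def add_model_variable(var):
    """Registers an existing variable as a model variable (at most once)."""
    registered = _COLLECTIONS["model_variables"]
    if not any(var is existing for existing in registered):
        registered.append(var)


def model_variable(name, shape):
    """Creates a variable and also registers it as a model variable."""
    var = variable(name, shape)
    add_model_variable(var)
    return var


def get_variables():
    """Returns every variable, model variables included."""
    return list(_COLLECTIONS["variables"])


def get_model_variables():
    """Returns only the variables registered as model parameters."""
    return list(_COLLECTIONS["model_variables"])


weights = model_variable("weights", [10, 10, 3, 3])
my_var = variable("my_var", [20, 1])

# A variable produced by custom code is invisible as a model variable
# until it is registered explicitly.
custom = variable("custom", [5])
add_model_variable(custom)

model_names = [v["name"] for v in get_model_variables()]
all_names = [v["name"] for v in get_variables()]
```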

1.2 Layers

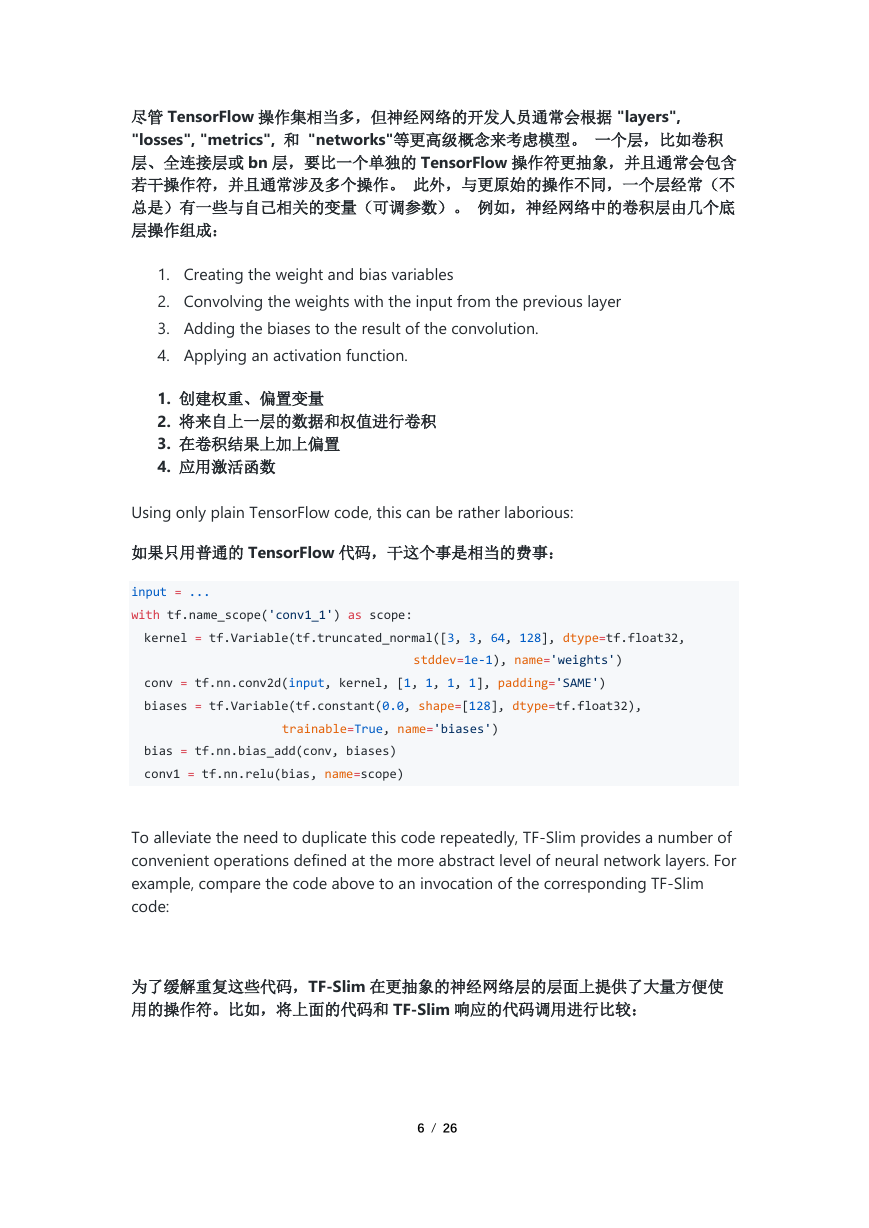

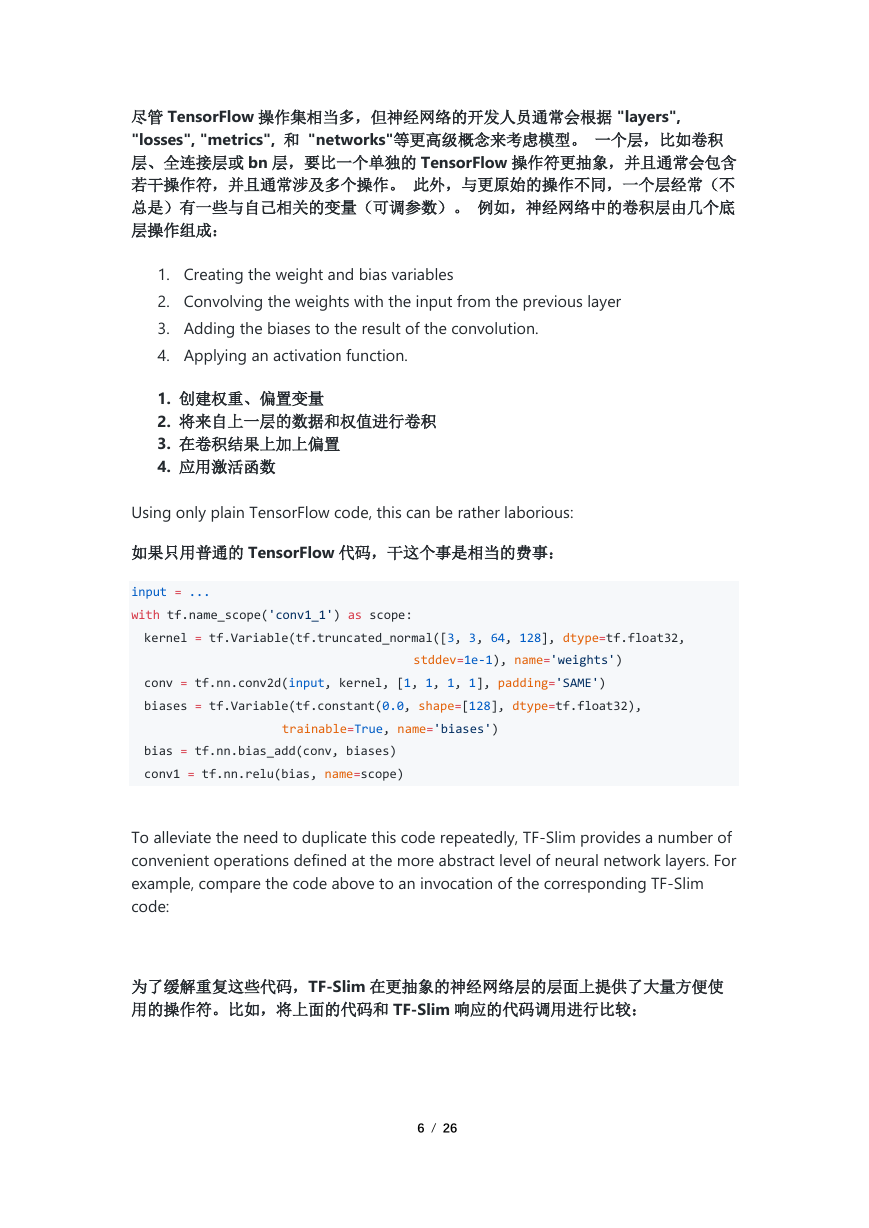

While the set of TensorFlow operations is quite extensive, developers of neural
networks typically think of models in terms of higher level concepts like "layers",
"losses", "metrics", and "networks". A layer, such as a Convolutional Layer, a Fully
Connected Layer or a BatchNorm Layer, is more abstract than a single TensorFlow
operation and typically involves several operations. Furthermore, a layer usually (but
not always) has variables (tunable parameters) associated with it, unlike more
primitive operations. For example, a Convolutional Layer in a neural network is
composed of several low level operations:

1. Creating the weight and bias variables

2. Convolving the weights with the input from the previous layer

3. Adding the biases to the result of the convolution.

4. Applying an activation function.

Using only plain TensorFlow code, this can be rather laborious:

input = ...
with tf.name_scope('conv1_1') as scope:
    kernel = tf.Variable(tf.truncated_normal([3, 3, 64, 128], dtype=tf.float32,
                                             stddev=1e-1), name='weights')
    conv = tf.nn.conv2d(input, kernel, [1, 1, 1, 1], padding='SAME')
    biases = tf.Variable(tf.constant(0.0, shape=[128], dtype=tf.float32),
                         trainable=True, name='biases')
    bias = tf.nn.bias_add(conv, biases)
    conv1 = tf.nn.relu(bias, name=scope)

To alleviate the need to duplicate this code repeatedly, TF-Slim provides a number of

convenient operations defined at the more abstract level of neural network layers. For

example, compare the code above to an invocation of the corresponding TF-Slim

code:

input = ...

net = slim.conv2d(input, 128, [3, 3], scope='conv1_1')
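For readers who want to see the four low level steps listed earlier without any framework, the following pure-Python sketch works through them on a tiny single-channel input. It is an illustration only: `toy_conv_layer` is a hypothetical helper, it uses no padding (the slim.conv2d call above pads by default), and it handles a single filter rather than 128.

```python
def toy_conv_layer(image, weights, bias):
    """Single-channel 2-D convolution without padding, plus bias and ReLU."""
    kernel_h, kernel_w = len(weights), len(weights[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Step 2: slide the kernel over the input and sum the products.
            acc = sum(image[i + di][j + dj] * weights[di][dj]
                      for di in range(kernel_h) for dj in range(kernel_w))
            # Step 3: add the bias to the convolution result.
            acc += bias
            # Step 4: apply the activation function (ReLU here).
            row.append(max(0.0, acc))
        output.append(row)
    return output


# Step 1: create the weight and bias "variables" (fixed values for clarity).
weights = [[1.0, 0.0],
           [0.0, -1.0]]
bias = 0.5

image = [[1.0, 2.0, 0.0],
         [0.0, 1.0, 3.0],
         [4.0, 0.0, 1.0]]

result = toy_conv_layer(image, weights, bias)
# result == [[0.5, 0.0], [0.5, 0.5]]
```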

TF-Slim provides standard implementations for numerous components for building

neural networks. These include:

Layer | TF-Slim
------- | --------
BiasAdd | slim.bias_add
BatchNorm | slim.batch_norm
Conv2d | slim.conv2d
Conv2dInPlane | slim.conv2d_in_plane
Conv2dTranspose (Deconv) | slim.conv2d_transpose
FullyConnected | slim.fully_connected
AvgPool2D | slim.avg_pool2d
Dropout | slim.dropout
Flatten | slim.flatten
MaxPool2D | slim.max_pool2d
OneHotEncoding | slim.one_hot_encoding
SeparableConv2D | slim.separable_conv2d
UnitNorm | slim.unit_norm

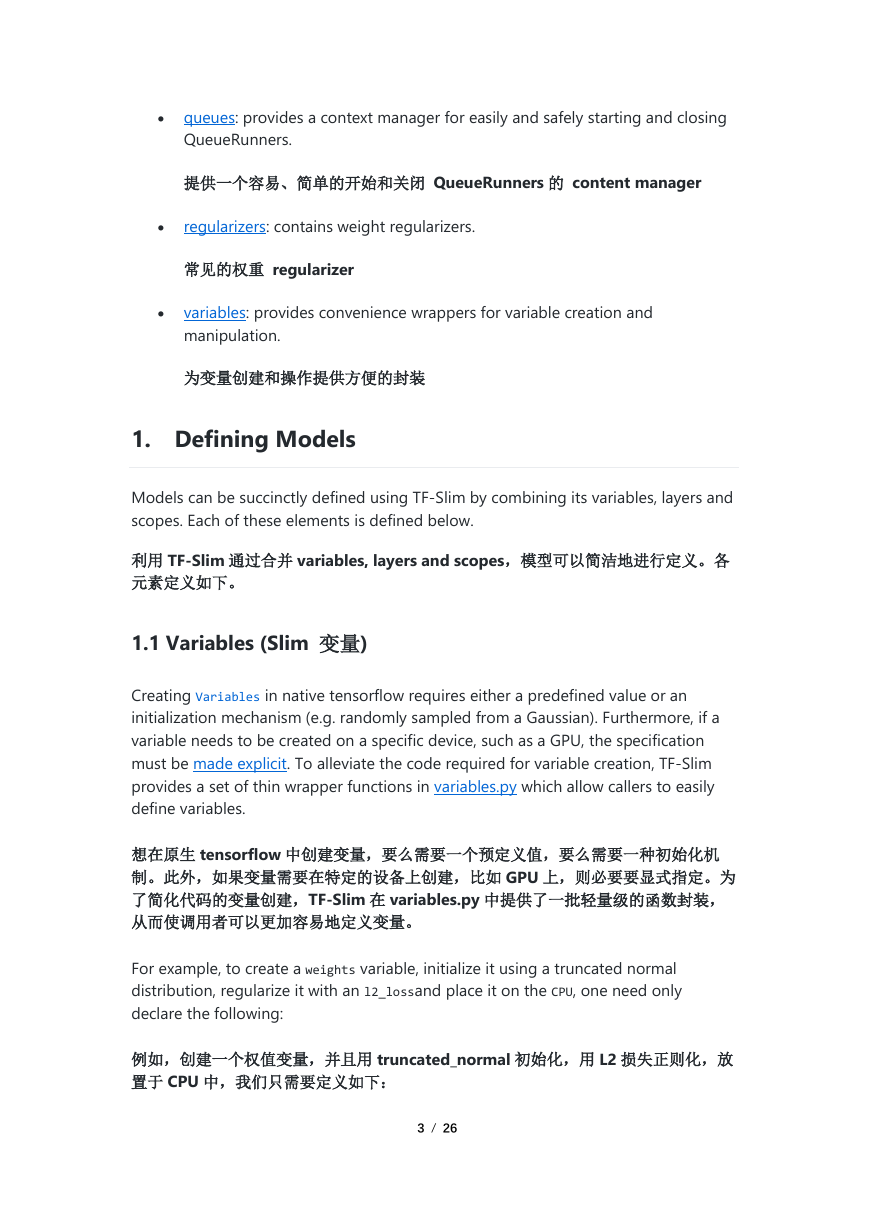

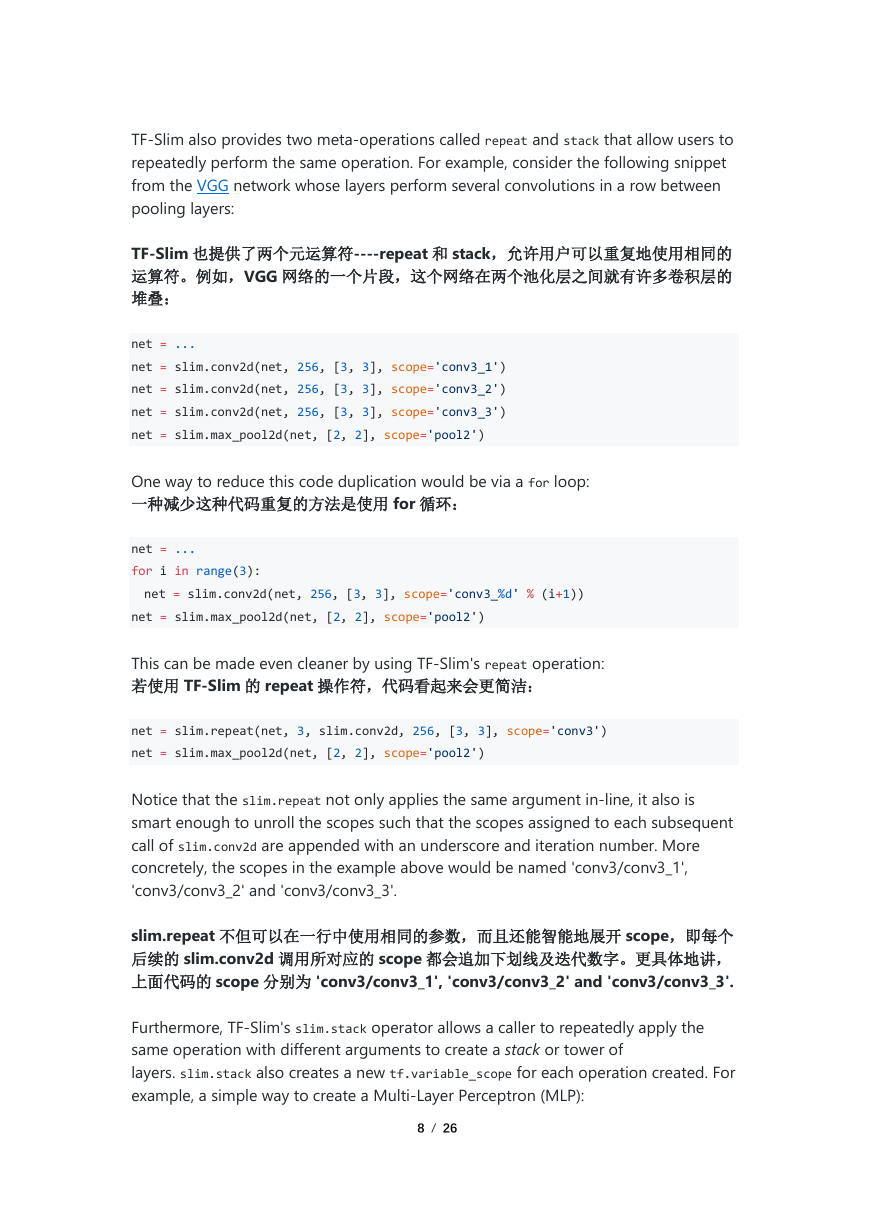

TF-Slim also provides two meta-operations called repeat and stack that allow users to

repeatedly perform the same operation. For example, consider the following snippet

from the VGG network whose layers perform several convolutions in a row between

pooling layers:

net = ...

net = slim.conv2d(net, 256, [3, 3], scope='conv3_1')

net = slim.conv2d(net, 256, [3, 3], scope='conv3_2')

net = slim.conv2d(net, 256, [3, 3], scope='conv3_3')

net = slim.max_pool2d(net, [2, 2], scope='pool2')

One way to reduce this code duplication would be via a for loop:

net = ...
for i in range(3):
    net = slim.conv2d(net, 256, [3, 3], scope='conv3_%d' % (i+1))
net = slim.max_pool2d(net, [2, 2], scope='pool2')

This can be made even cleaner by using TF-Slim's repeat operation:

net = slim.repeat(net, 3, slim.conv2d, 256, [3, 3], scope='conv3')

net = slim.max_pool2d(net, [2, 2], scope='pool2')

Notice that slim.repeat not only applies the same arguments in-line, it is also
smart enough to unroll the scopes so that the scope assigned to each subsequent
call of slim.conv2d is suffixed with an underscore and the iteration number. More
concretely, the scopes in the example above would be named 'conv3/conv3_1',
'conv3/conv3_2' and 'conv3/conv3_3'.
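The scope unrolling can be mimicked in a few lines of plain Python. This is a sketch of the naming behaviour described above, not slim.repeat itself: `toy_repeat` and `toy_layer` are hypothetical stand-ins, and the "layer" only records how it was called.

```python
def toy_repeat(inputs, repetitions, layer, *args, scope, **kwargs):
    """Applies `layer` `repetitions` times, giving each call its own scope."""
    outputs = inputs
    for i in range(repetitions):
        # Unroll the scope: 'conv3' becomes 'conv3/conv3_1', 'conv3/conv3_2', ...
        unrolled = "%s/%s_%d" % (scope, scope, i + 1)
        outputs = layer(outputs, *args, scope=unrolled, **kwargs)
    return outputs


def toy_layer(inputs, num_outputs, kernel_size, scope):
    """Stand-in for a layer: appends a record of the call to the input list."""
    return inputs + [(scope, num_outputs, kernel_size)]


calls = toy_repeat([], 3, toy_layer, 256, [3, 3], scope="conv3")
scopes = [scope for scope, _, _ in calls]
# scopes == ['conv3/conv3_1', 'conv3/conv3_2', 'conv3/conv3_3']
```

Each call receives the output of the previous one, so the three records appear in the order the layers would be chained.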

Furthermore, TF-Slim's slim.stack operator allows a caller to repeatedly apply the

same operation with different arguments to create a stack or tower of

layers. slim.stack also creates a new tf.variable_scope for each operation created. For

example, a simple way to create a Multi-Layer Perceptron (MLP):
