Dynamic Programming

and Optimal Control

Volume I

THIRD EDITION

P. Bertsekas

Massachusetts Institute of Technology

WWW site for book information and

http://www.athenasc.com

IiJ Athena Scientific, Belmont,

�

Athena Scientific

Post Office Box 805

NH 03061-0805

U.S.A.

ErnaH:

WWW: http://www.athenasc.co:m

info@athenasc.com

Cover Design: Ann Gallager, www.gallagerdesign.com

© 2005, 2000, 1995 Dimitri P. Bertsekas

All rights reserved. No part of this book may be reproduced in any form

by ~1Il~ electronic or mechanical means (including photocopying, recording,

or mlormation storage and retrieval) without permission in writing from

the publisher.

Publisher's Cataloging-in-Publication Data

ABOUT THE AUTHOR

Dimitri Bertsekas studied Mechanical and Electrical Engineering at

the National Technical University of Athens, Greece, and obtained his

Ph.D. in system science from the Massachusetts Institute of Technology. He

has held faculty positions with the Engineering-Economic Systems Dept.,

Stanford University, and the Electrical Engineering Dept. of the Univer

sity of Illinois, Urbana. Since 1979 he has been teaching at the Electrical

Engineering and Computer Science Department of the Massachusetts In

stitute of Technology (M.LT.), where he is currently McAfee Professor of

Engineering.

His research spans several fields, including optimization, control, la,rge

scale computation, and data communication networks, and is closely tied

to his teaching and book authoring activities. He has written llUInerous

research papers, and thirteen books, several of which are used as textbooks

in MIT classes. He consults regularly with private industry and has held

editorial positions in several journals.

Professor Bertsekas was awarded the INFORMS 1997 Prize for H,e

search Excellence in the Interface Between Operations Research and Com

puter Science for his book "Neuro-Dynamic Programming" (co-authored

with John Tsitsiklis), the 2000 Greek National Award for Operations Re

search, and the 2001 ACC John R. Ragazzini Education Award. In 2001,

he was elected to the United States National Academy of Engineering.

Bertsekas, Dimitri P.

Dynamic Programming and Optimal Control

Includes Bibliography and Index

1. Mathematical Optimization. 2. Dynamic Programming. L Title.

QA402.5 .13465 2005

00-91281

519.703

ISBN 1-886529-26-4

�

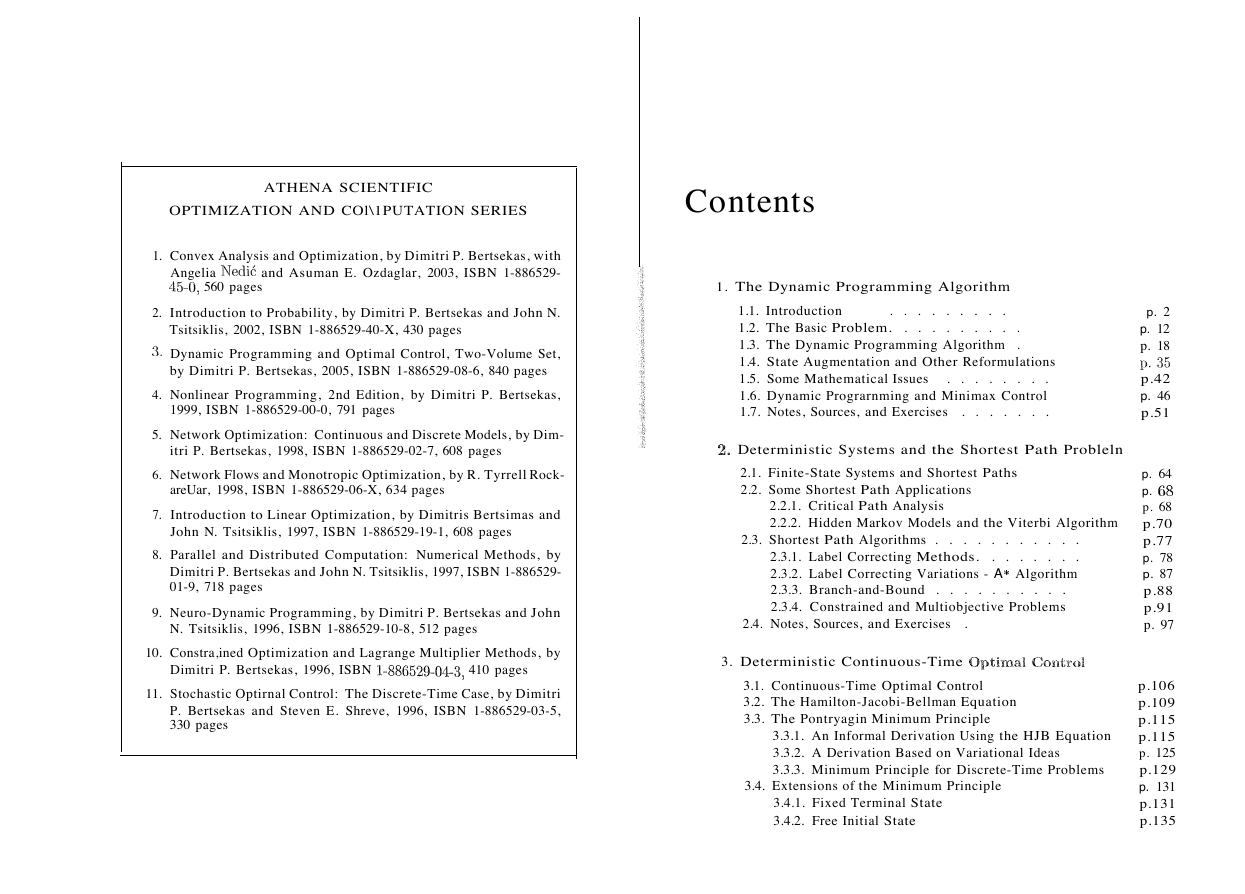

ATHENA SCIENTIFIC

OPTIMIZATION AND COl\1PUTATION SERIES

Contents

1. Convex Analysis and Optimization, by Dimitri P. Bertsekas, with

Angelia Nedic and Asuman E. Ozdaglar, 2003, ISBN 1-886529

45-0, 560 pages

2. Introduction to Probability, by Dimitri P. Bertsekas and John N.

Tsitsiklis, 2002, ISBN 1-886529-40-X, 430 pages

3. Dynamic Programming and Optimal Control, Two-Volume Set,

by Dimitri P. Bertsekas, 2005, ISBN 1-886529-08-6, 840 pages

4. Nonlinear Programming, 2nd Edition, by Dimitri P. Bertsekas,

1999, ISBN 1-886529-00-0, 791 pages

5. Network Optimization: Continuous and Discrete Models, by Dim

itri P. Bertsekas, 1998, ISBN 1-886529-02-7, 608 pages

6. Network Flows and Monotropic Optimization, by R. Tyrrell Rock

areUar, 1998, ISBN 1-886529-06-X, 634 pages

7. Introduction to Linear Optimization, by Dimitris Bertsimas and

John N. Tsitsiklis, 1997, ISBN 1-886529-19-1, 608 pages

8. Parallel and Distributed Computation: Numerical Methods, by

Dimitri P. Bertsekas and John N. Tsitsiklis, 1997, ISBN 1-886529

01-9, 718 pages

9. Neuro-Dynamic Programming, by Dimitri P. Bertsekas and John

N. Tsitsiklis, 1996, ISBN 1-886529-10-8, 512 pages

10. Constra,ined Optimization and Lagrange Multiplier Methods, by

Dimitri P. Bertsekas, 1996, ISBN 1-88f1529-04-3, 410 pages

11. Stochastic Optirnal Control: The Discrete-Time Case, by Dimitri

P. Bertsekas and Steven E. Shreve, 1996, ISBN 1-886529-03-5,

330 pages

1. The Dynamic Programming Algorithm

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1.1. Introduction

.

1.2. The Basic Problem.

.

1.3. The Dynamic Programming Algorithm .

1.4. State Augmentation and Other Reformulations

1.5. Some Mathematical Issues

.

1.6. Dynamic Prograrnming and Minimax Control

1.7. Notes, Sources, and Exercises

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2. Deterministic Systems and the Shortest Path Probleln

2.1. Finite-State Systems and Shortest Paths

2.2. Some Shortest Path Applications

2.2.1. Critical Path Analysis

2.2.2. Hidden Markov Models and the Viterbi Algorithm

2.3. Shortest Path Algorithms .

.

.

2.3.1. Label Correcting Methods.

.

2.3.2. Label Correcting Variations - A* Algorithm

2.3.3. Branch-and-Bound .

.

2.3.4. Constrained and Multiobjective Problems

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2.4. Notes, Sources, and Exercises

.

3. Deterministic Continuous-Time

3.1. Continuous-Time Optimal Control

3.2. The Hamilton-Jacobi-Bellman Equation

3.3. The Pontryagin Minimum Principle

3.3.1. An Informal Derivation Using the HJB Equation

3.3.2. A Derivation Based on Variational Ideas

3.3.3. Minimum Principle for Discrete-Time Problems

3.4. Extensions of the Minimum Principle

3.4.1. Fixed Terminal State

3.4.2. Free Initial State

p. 2

p. 12

p. 18

p.35

p.42

p. 46

p.51

p. 64

p. fl8

p. 68

p.70

p.77

p. 78

p. 87

p.88

p.91

p. 97

p.106

p.109

p.115

p.115

p. 125

p.129

p. 131

p.131

p.135

�

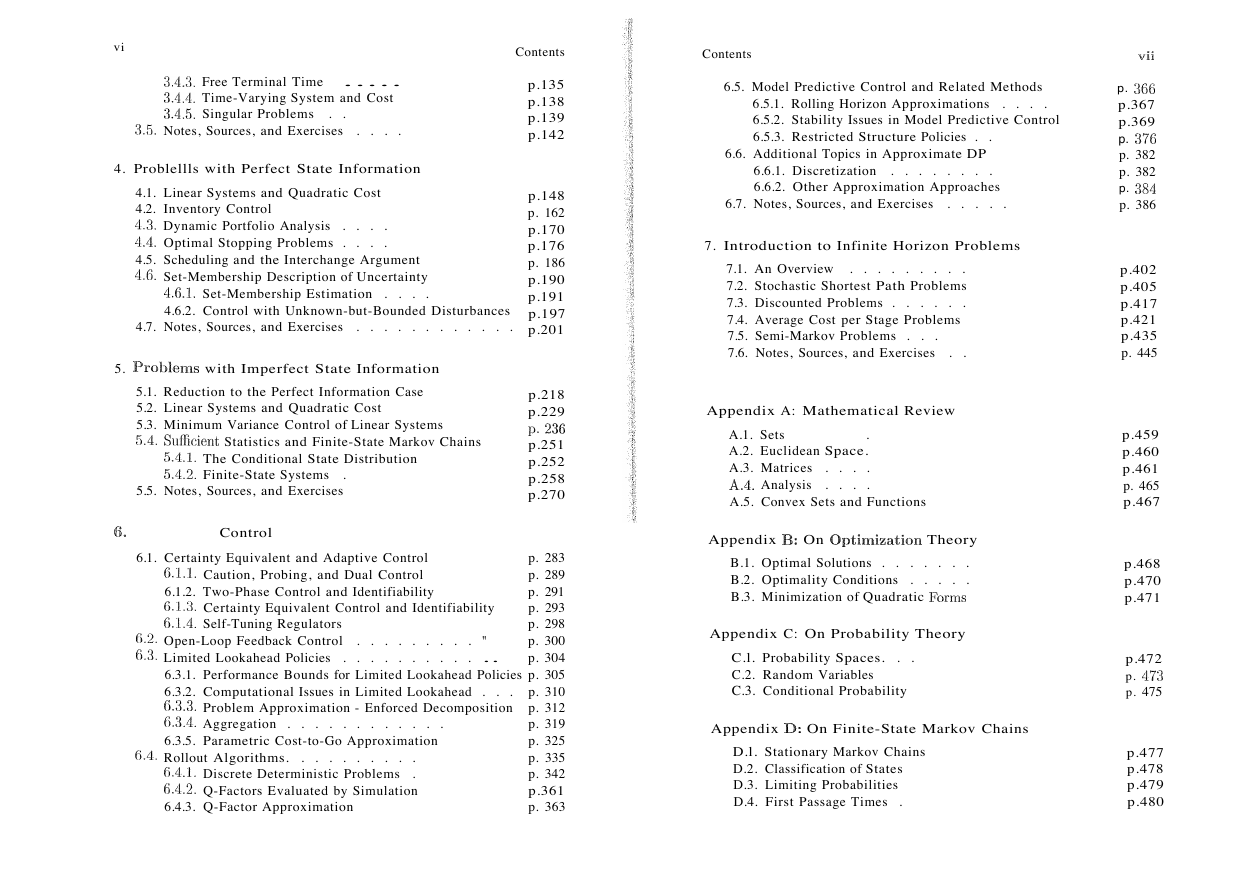

vi

3.4.3. Free Terminal Time . . . . .

~.L4.4. Time-Varying System and Cost

3.4.5. Singular Problems

.

~~.5. Notes, Sources, and Exercises

.

.

.

.

.

4. Problellls with Perfect State Information

4.1. Linear Systems and Quadratic Cost

4.2. Inventory Control

L1.3. Dynamic Portfolio Analysis

.

4.4. Optimal Stopping Problems .

4.5. Scheduling and the Interchange Argument

4.6. Set-Membership Description of Uncertainty

.

.

.

.

.

.

.

.

4.6.1. Set-Membership Estimation .

4.6.2. Control with Unknown-but-Bounded Disturbances

.

4.7. Notes, Sources, and Exercises

.

.

.

.

.

.

.

.

.

.

.

.

5. Problen'ls with Imperfect State Information

5.1. Reduction to the Perfect Information Case

5.2. Linear Systems and Quadratic Cost

5.3. Minimum Variance Control of Linear Systems

5.4. SufIicient Statistics and Finite-State Markov Chains

5.4.1. The Conditional State Distribution

5.4.2. Finite-State Systems

.

5.5. Notes, Sources, and Exercises

Contents

p.135

p.138

p.139

p.142

p.148

p. 162

p.170

p.176

p. 186

p.190

p.191

p.197

p.201

p.218

p.229

p.236

p.251

p.252

p.258

p.270

Contents

6.5. Model Predictive Control and Related Methods

6.5.1. Rolling Horizon Approximations

6.5.2. Stability Issues in Model Predictive Control

6.5.3. Restricted Structure Policies .

.

.

.

.

.

6.6. Additional Topics in Approximate DP

6.6.1. Discretization

6.6.2. Other Approximation Approaches

.

.

.

.

.

.

.

.

6.7. Notes, Sources, and Exercises

.

.

.

.

.

7. Introduction to Infinite Horizon Problems

.

.

.

.

.

.

.

7.1. An Overview .

.

7.2. Stochastic Shortest Path Problems

7.3. Discounted Problems .

.

7.4. Average Cost per Stage Problems

7.5. Semi-Markov Problems .

.

7.6. Notes, Sources, and Exercises

.

.

.

.

.

.

.

Appendix A: Mathematical Review

.

A.1. Sets

A.2. Euclidean Space.

.

A.3. Matrices

A.4. Analysis

.

A.5. Convex Sets and Functions

.

.

.

.

.

.

6.

Control

Appendix B: On Optimization Theory

6.1. Certainty Equivalent and Adaptive Control

G.l.l. Caution, Probing, and Dual Control

6.1.2. Two-Phase Control and Identifiability

6.1.~1. Certainty Equivalent Control and Identifiability

6.1.4. Self-Tuning Regulators

G.2. Open-Loop Feedback Control

t\.~3. Limited Lookahead Policies

.

p. 283

p. 289

p. 291

p. 293

p. 298

p. 300

p. 304

6.3.1. Performance Bounds for Limited Lookahead Policies p. 305

6.3.2. Computational Issues in Limited Lookahead .

p. 310

.

p. 312

G.3.3. Problem Approximation - Enforced Decomposition

6.3.4. Aggregation .

p. 319

p. 325

6.3.5. Parametric Cost-to-Go Approximation

p. 335

p. 342

p.361

p. 363

.

.

6.4.1. Discrete Deterministic Problems

.

6.4.2. Q-Factors Evaluated by Simulation

6.4.3. Q-Factor Approximation

6.4. Rollout Algorithms.

. "

. . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

B.1. Optimal Solutions .

.

.

B.2. Optimality Conditions

B.3. Minimization of Quadratic J:iorms

.

.

.

.

.

.

.

.

Appendix C: On Probability Theory

C.l. Probability Spaces.

C.2. Random Variables

C.3. Conditional Probability

.

.

Appendix D: On Finite-State Markov Chains

D.l. Stationary Markov Chains

D.2. Classification of States

D.3. Limiting Probabilities

D.4. First Passage Times

.

vii

p. ~366

p.367

p.369

p. ~376

p. 382

p. 382

p. 38 L1

p. 386

p.402

p.405

p.417

p.421

p.435

p. 445

p.459

p.460

p.461

p. 465

p.467

p.468

p.470

p.471

p.472

p. 47~i

p. 475

p.477

p.478

p.479

p.480

�

viii

Contents

Contents

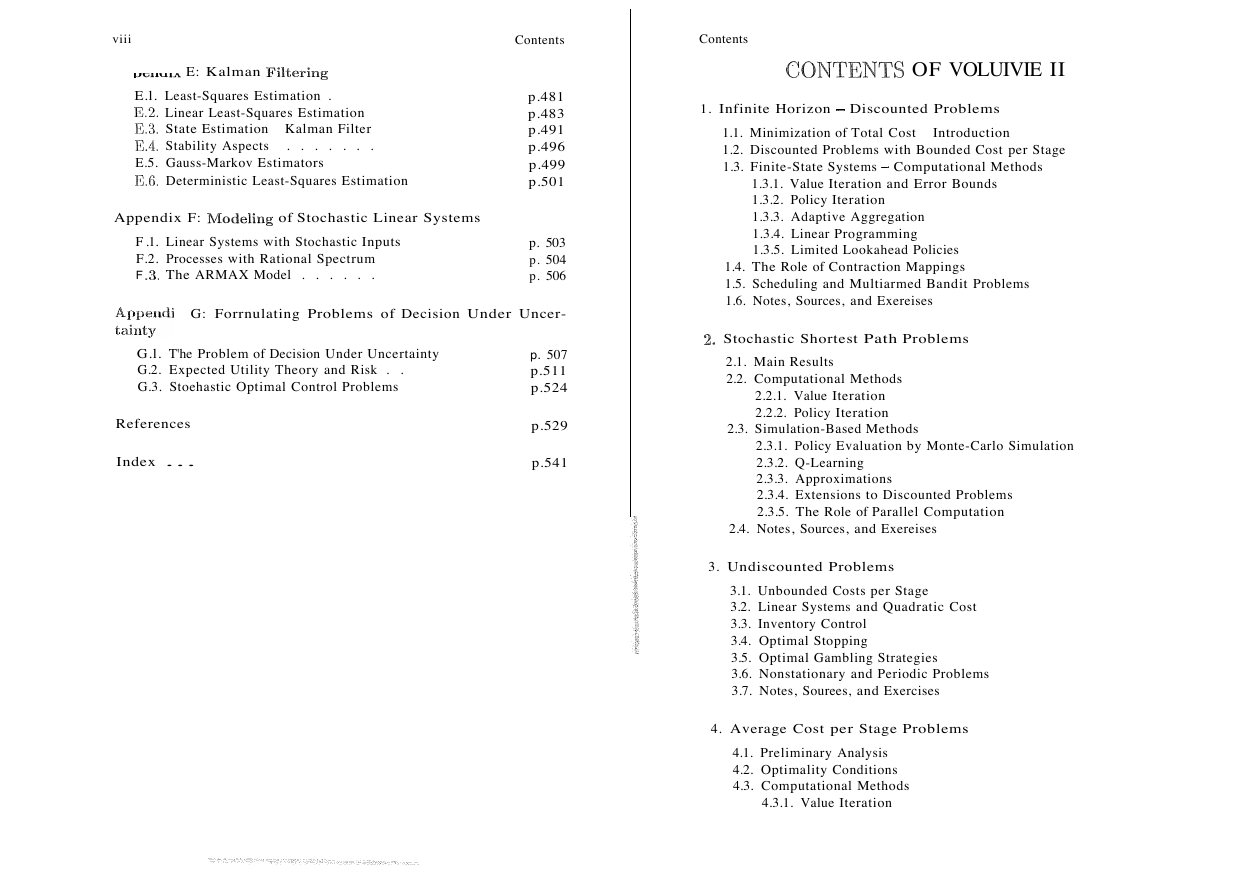

P'C'A.a'LIU.lI.. E: Kalman Filtering

E.l. Least-Squares Estimation .

E.2. Linear Least-Squares Estimation

E.~1. State Estimation Kalman Filter

E.4. Stability Aspects

.

.

E.5. Gauss-Markov Estimators

E.6. Deterministic Least-Squares Estimation

.

.

.

.

.

Appendix F: lVIodeling of Stochastic Linear Systems

F .1. Linear Systems with Stochastic Inputs

F.2. Processes with Rational Spectrum

F .~1. The ARMAX Model

.

.

.

.

.

.

p.481

p.483

p.491

p.496

p.499

p.501

p. 503

p. 504

p. 506

G: Forrnulating Problems of Decision Under Uncer-

G.l. T'he Problem of Decision Under Uncertainty

G.2. Expected Utility Theory and Risk .

G.3. Stoehastic Optimal Control Problems

.

References

Index . . .

p. 507

p.511

p.524

p.529

p.541

COl\fTENTS OF VOLUIVIE II

1. Infinite Horizon - Discounted Problems

1.1. Minimization of Total Cost

1.2. Discounted Problems with Bounded Cost per Stage

1.3. Finite-State Systems - Computational Methods

Introduction

1.3.1. Value Iteration and Error Bounds

1.3.2. Policy Iteration

1.3.3. Adaptive Aggregation

1.3.4. Linear Programming

1.3.5. Limited Lookahead Policies

1.4. The Role of Contraction Mappings

1.5. Scheduling and Multiarmed Bandit Problems

1.6. Notes, Sources, and Exereises

2. Stochastic Shortest Path Problems

2.1. Main Results

2.2. Computational Methods

2.2.1. Value Iteration

2.2.2. Policy Iteration

2.3. Simulation-Based Methods

2.3.1. Policy Evaluation by Monte-Carlo Simulation

2.3.2. Q-Learning

2.3.3. Approximations

2.3.4. Extensions to Discounted Problems

2.3.5. The Role of Parallel Computation

2.4. Notes, Sources, and Exereises

3. Undiscounted Problems

3.1. Unbounded Costs per Stage

3.2. Linear Systems and Quadratic Cost

3.3. Inventory Control

3.4. Optimal Stopping

3.5. Optimal Gambling Strategies

3.6. Nonstationary and Periodic Problems

3.7. Notes, Sourees, and Exercises

4. Average Cost per Stage Problems

4.1. Preliminary Analysis

4.2. Optimality Conditions

4.3. Computational Methods

4.3.1. Value Iteration

�

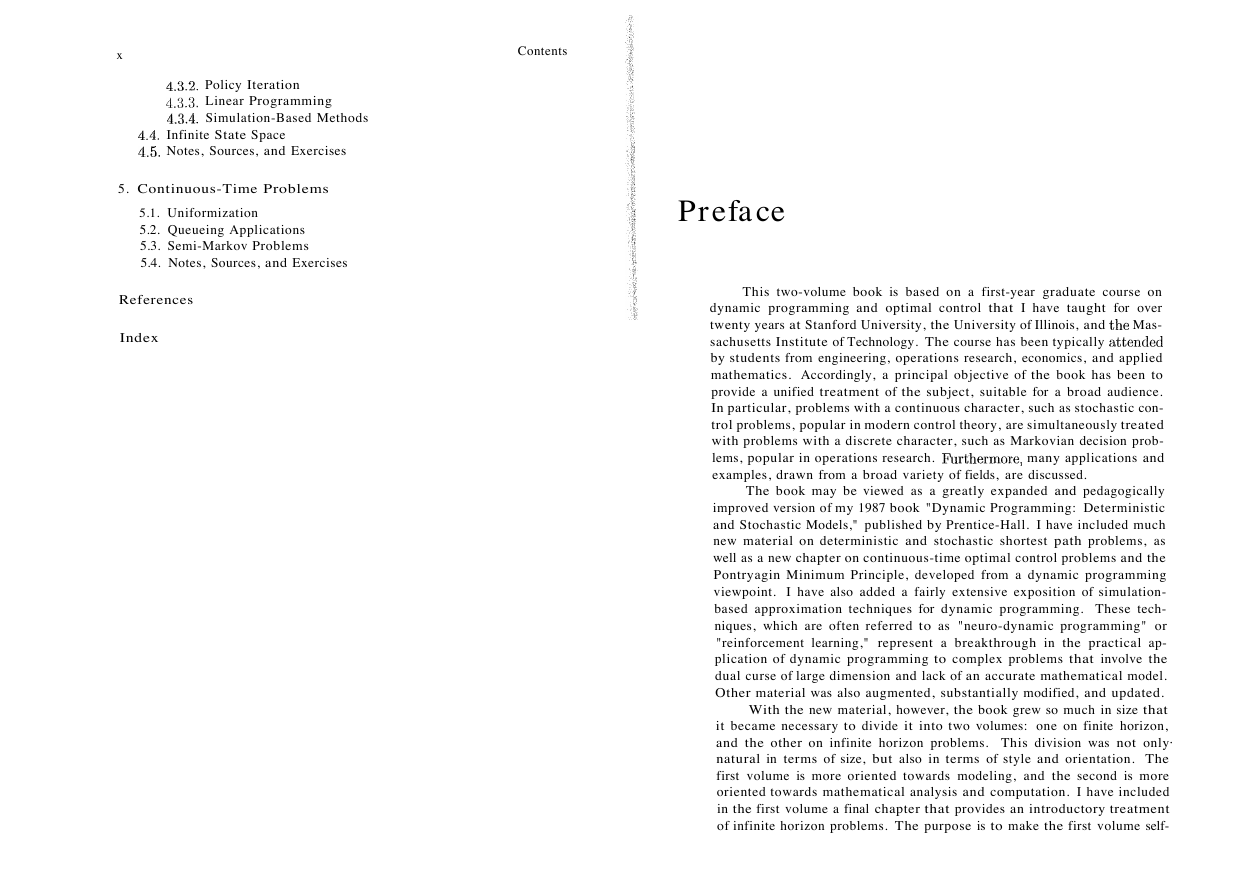

x

Contents

4.3.2. Policy Iteration

L1.~t3. Linear Programming

4.3.4. Simulation-Based Methods

Infinite State Space

Notes, Sources, and Exercises

4.4.

4.5.

5. Continuous-Time Problems

5.1. Uniformization

5.2. Queueing Applications

5.3. Semi-Markov Problems

5.4. Notes, Sources, and Exercises

References

Index

Preface

This two-volume book is based on a first-year graduate course on

dynamic programming and optimal control that I have taught for over

twenty years at Stanford University, the University of Illinois, and HIe Mas

sachusetts Institute of Technology. The course has been typically attended

by students from engineering, operations research, economics, and applied

mathematics. Accordingly, a principal objective of the book has been to

provide a unified treatment of the subject, suitable for a broad audience.

In particular, problems with a continuous character, such as stochastic con

trol problems, popular in modern control theory, are simultaneously treated

with problems with a discrete character, such as Markovian decision prob

lems, popular in operations research. F\lrthermore, many applications and

examples, drawn from a broad variety of fields, are discussed.

The book may be viewed as a greatly expanded and pedagogically

improved version of my 1987 book "Dynamic Programming: Deterministic

and Stochastic Models," published by Prentice-Hall. I have included much

new material on deterministic and stochastic shortest path problems, as

well as a new chapter on continuous-time optimal control problems and the

Pontryagin Minimum Principle, developed from a dynamic programming

viewpoint.

I have also added a fairly extensive exposition of simulation

based approximation techniques for dynamic programming. These tech

niques, which are often referred to as "neuro-dynamic programming" or

"reinforcement learning," represent a breakthrough in the practical ap

plication of dynamic programming to complex problems that involve the

dual curse of large dimension and lack of an accurate mathematical model.

Other material was also augmented, substantially modified, and updated.

With the new material, however, the book grew so much in size that

it became necessary to divide it into two volumes: one on finite horizon,

and the other on infinite horizon problems. This division was not only·

natural in terms of size, but also in terms of style and orientation. The

first volume is more oriented towards modeling, and the second is more

oriented towards mathematical analysis and computation. I have included

in the first volume a final chapter that provides an introductory treatment

of infinite horizon problems. The purpose is to make the first volume self-

�

xii

Preface

Preface

xiii

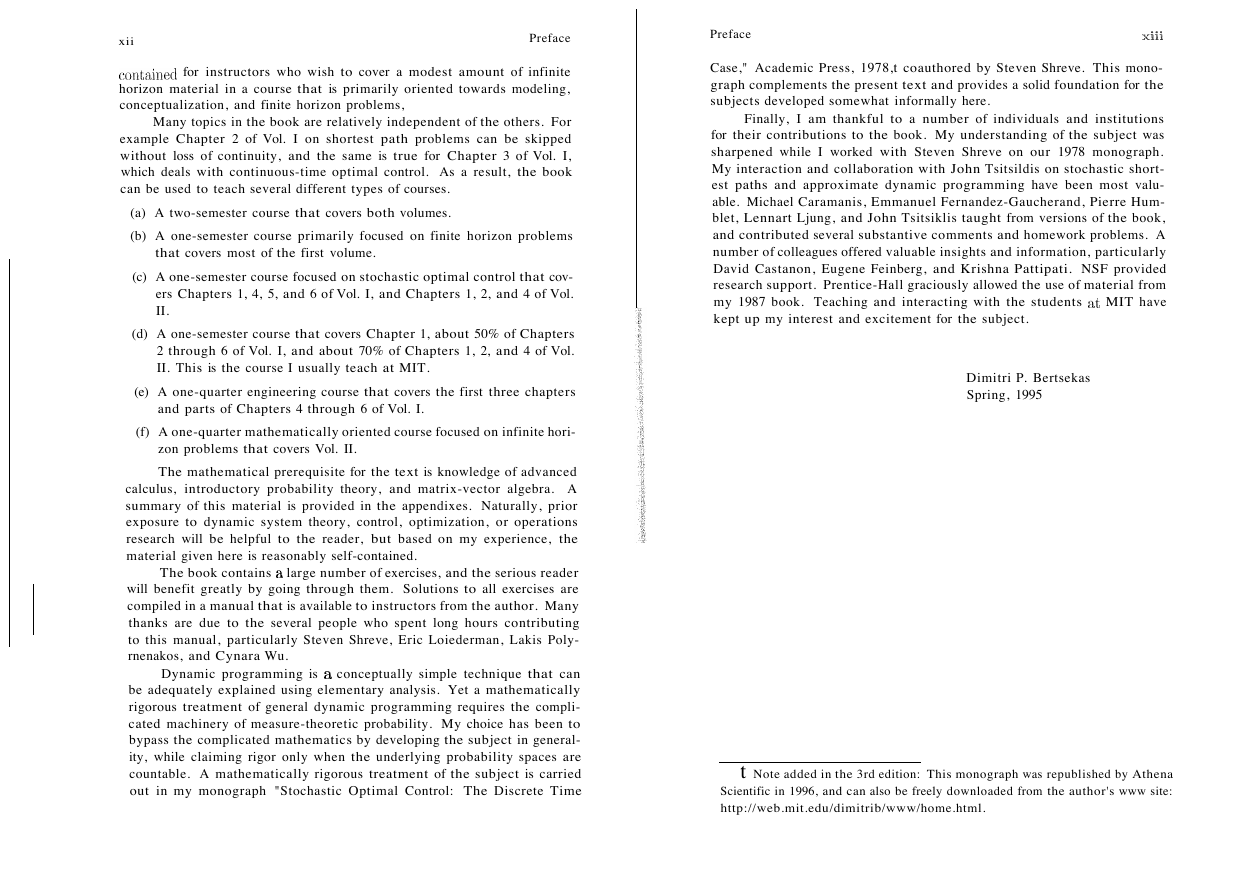

conta,ined for instructors who wish to cover a modest amount of infinite

horizon material in a course that is primarily oriented towards modeling,

conceptualization, and finite horizon problems,

Many topics in the book are relatively independent of the others. For

example Chapter 2 of Vol. I on shortest path problems can be skipped

without loss of continuity, and the same is true for Chapter 3 of Vol. I,

which deals with continuous-time optimal control. As a result, the book

can be used to teach several different types of courses.

(a) A two-semester course that covers both volumes.

(b) A one-semester course primarily focused on finite horizon problems

that covers most of the first volume.

(c) A one-semester course focused on stochastic optimal control that cov

ers Chapters 1, 4, 5, and 6 of Vol. I, and Chapters 1, 2, and 4 of Vol.

II.

(d) A one-semester course that covers Chapter 1, about 50% of Chapters

2 through 6 of Vol. I, and about 70% of Chapters 1, 2, and 4 of Vol.

II. This is the course I usually teach at MIT.

(e) A one-quarter engineering course that covers the first three chapters

and parts of Chapters 4 through 6 of Vol. I.

(f) A one-quarter mathematically oriented course focused on infinite hori

zon problems that covers Vol. II.

The mathematical prerequisite for the text is knowledge of advanced

introductory probability theory, and matrix-vector algebra. A

calculus,

summary of this material is provided in the appendixes. Naturally, prior

exposure to dynamic system theory, control, optimization, or operations

research will be helpful to the reader, but based on my experience, the

material given here is reasonably self-contained.

The book contains a large number of exercises, and the serious reader

will benefit greatly by going through them. Solutions to all exercises are

compiled in a manual that is available to instructors from the author. Many

thanks are due to the several people who spent long hours contributing

to this manual, particularly Steven Shreve, Eric Loiederman, Lakis Poly

rnenakos, and Cynara Wu.

Dynamic programming is a conceptually simple technique that can

be adequately explained using elementary analysis. Yet a mathematically

rigorous treatment of general dynamic programming requires the compli

cated machinery of measure-theoretic probability. My choice has been to

bypass the complicated mathematics by developing the subject in general

ity, while claiming rigor only when the underlying probability spaces are

countable. A mathematically rigorous treatment of the subject is carried

out in my monograph "Stochastic Optimal Control: The Discrete Time

Case," Academic Press, 1978,t coauthored by Steven Shreve. This mono

graph complements the present text and provides a solid foundation for the

subjects developed somewhat informally here.

Finally, I am thankful to a number of individuals and institutions

for their contributions to the book. My understanding of the subject was

sharpened while I worked with Steven Shreve on our 1978 monograph.

My interaction and collaboration with John Tsitsildis on stochastic short

est paths and approximate dynamic programming have been most valu

able. Michael Caramanis, Emmanuel Fernandez-Gaucherand, Pierre Hum

blet, Lennart Ljung, and John Tsitsiklis taught from versions of the book,

and contributed several substantive comments and homework problems. A

number of colleagues offered valuable insights and information, particularly

David Castanon, Eugene Feinberg, and Krishna Pattipati. NSF provided

research support. Prentice-Hall graciously allowed the use of material from

my 1987 book. Teaching and interacting with the students at MIT have

kept up my interest and excitement for the subject.

Dimitri P. Bertsekas

Spring, 1995

t Note added in the 3rd edition: This monograph was republished by Athena

Scientific in 1996, and can also be freely downloaded from the author's www site:

http://web.mit.edu/dimitrib/www/home.html.

�

xiv

Preface

Preface

xv

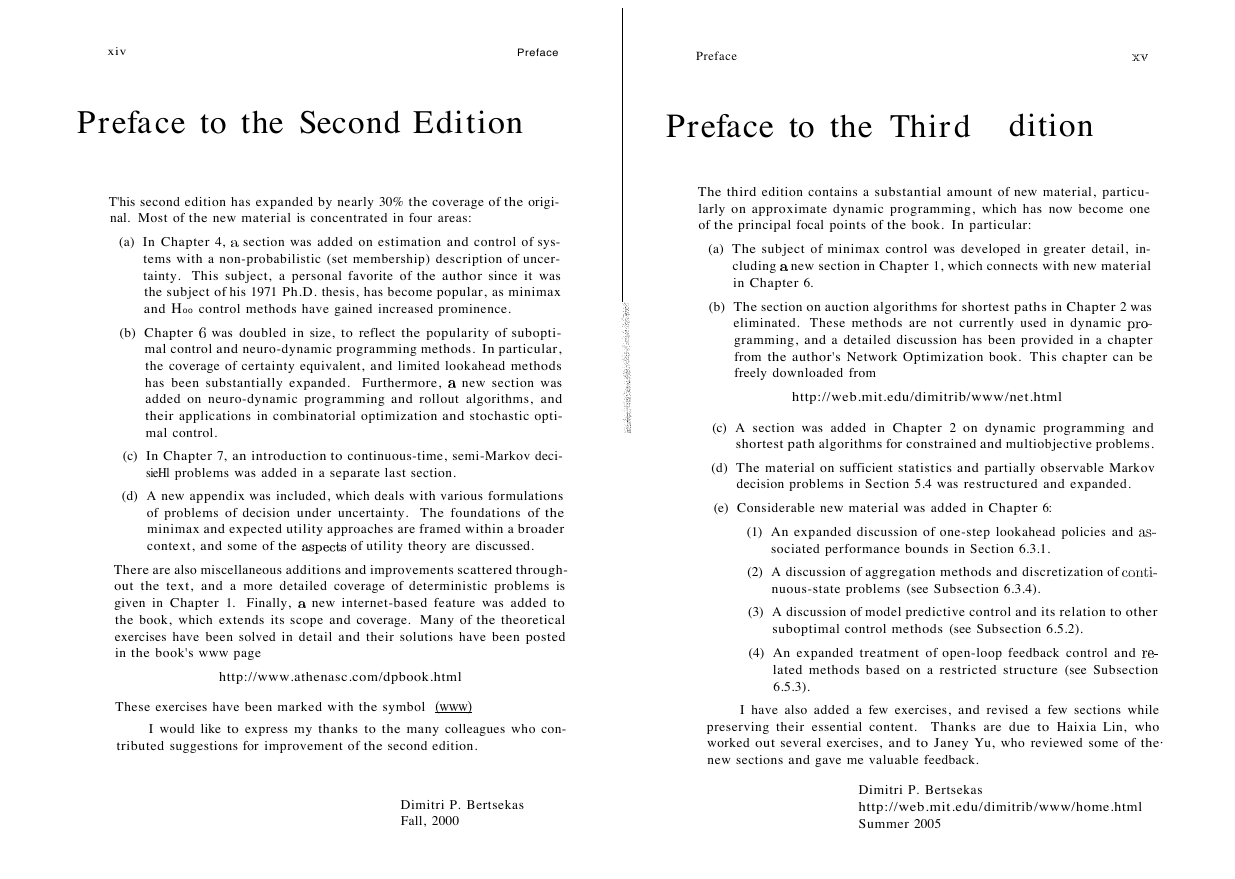

Preface to the Second Edition

Preface to the Third

dition

T'his second edition has expanded by nearly 30% the coverage of the origi

nal. Most of the new material is concentrated in four areas:

(a) In Chapter 4, a section was added on estimation and control of sys

tems with a non-probabilistic (set membership) description of uncer

tainty. This subject, a personal favorite of the author since it was

the subject of his 1971 Ph.D. thesis, has become popular, as minimax

and H 00 control methods have gained increased prominence.

(b) Chapter 6 was doubled in size, to reflect the popularity of subopti

mal control and neuro-dynamic programming methods. In particular,

the coverage of certainty equivalent, and limited lookahead methods

has been substantially expanded. Furthermore, a new section was

added on neuro-dynamic programming and rollout algorithms, and

their applications in combinatorial optimization and stochastic opti

mal control.

(c) In Chapter 7, an introduction to continuous-time, semi-Markov deci

sieHl problems was added in a separate last section.

(d) A new appendix was included, which deals with various formulations

of problems of decision under uncertainty. The foundations of the

minimax and expected utility approaches are framed within a broader

context, and some of the aspects of utility theory are discussed.

There are also miscellaneous additions and improvements scattered through

out the text, and a more detailed coverage of deterministic problems is

given in Chapter 1. Finally, a new internet-based feature was added to

the book, which extends its scope and coverage. Many of the theoretical

exercises have been solved in detail and their solutions have been posted

in the book's www page

http://www.athenasc.com/dpbook.html

These exercises have been marked with the symbol

(www)

I would like to express my thanks to the many colleagues who con

tributed suggestions for improvement of the second edition.

Dimitri P. Bertsekas

Fall, 2000

The third edition contains a substantial amount of new material, particu

larly on approximate dynamic programming, which has now become one

of the principal focal points of the book. In particular:

(a) The subject of minimax control was developed in greater detail, in

cluding a new section in Chapter 1, which connects with new material

in Chapter 6.

(b) The section on auction algorithms for shortest paths in Chapter 2 was

eliminated. These methods are not currently used in dynamic pro

gramming, and a detailed discussion has been provided in a chapter

from the author's Network Optimization book. This chapter can be

freely downloaded from

http://web.mit.edu/dimitrib/www/net.html

(c) A section was added in Chapter 2 on dynamic programming and

shortest path algorithms for constrained and multiobjective problems.

(d) The material on sufficient statistics and partially observable Markov

decision problems in Section 5.4 was restructured and expanded.

(e) Considerable new material was added in Chapter 6:

(1) An expanded discussion of one-step lookahead policies and as

sociated performance bounds in Section 6.3.1.

(2) A discussion of aggregation methods and discretization of conti

nuous-state problems (see Subsection 6.3.4).

(3) A discussion of model predictive control and its relation to other

suboptimal control methods (see Subsection 6.5.2).

(4) An expanded treatment of open-loop feedback control and re

lated methods based on a restricted structure (see Subsection

6.5.3).

I have also added a few exercises, and revised a few sections while

preserving their essential content. Thanks are due to Haixia Lin, who

worked out several exercises, and to Janey Yu, who reviewed some of the·

new sections and gave me valuable feedback.

Dimitri P. Bertsekas

http://web.mit.edu/dimitrib /www/home.html

Summer 2005

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc