Cover

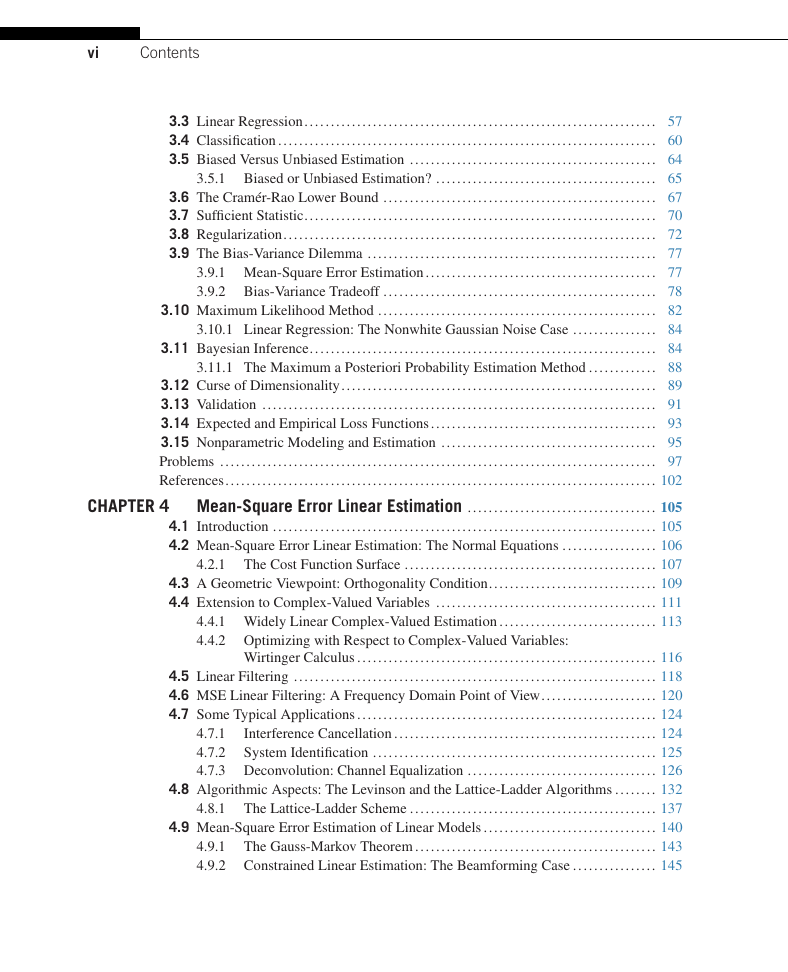

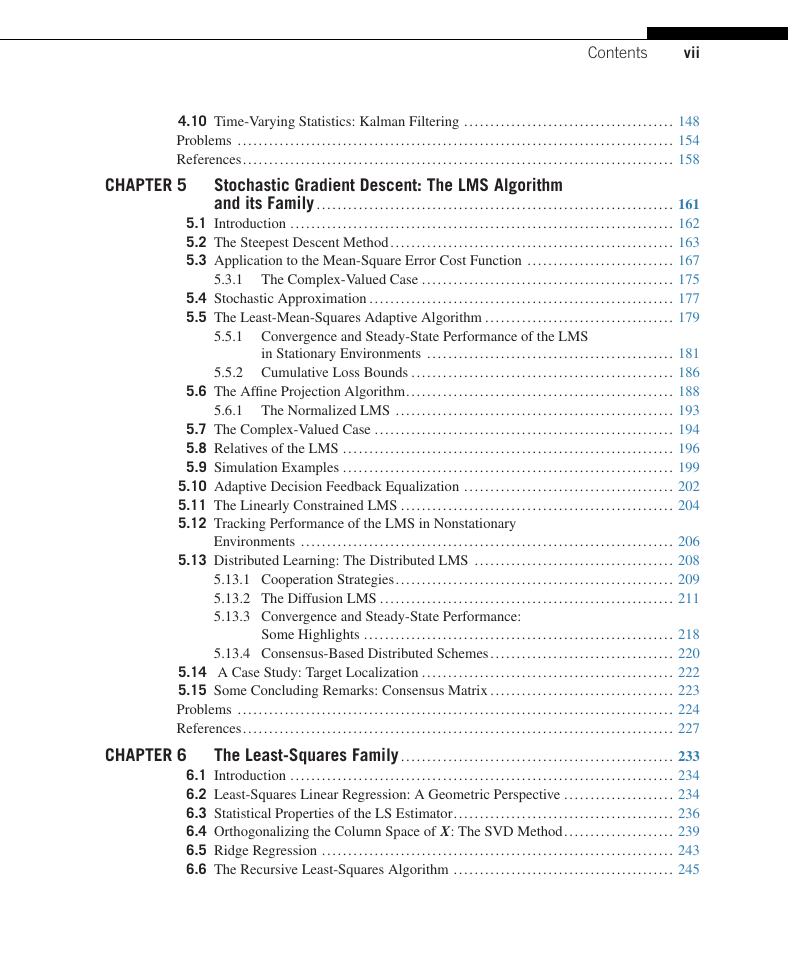

Contents

Preface

Acknowledgments

Notation

Dedication

1 Introduction

What Machine Learning is About

Classification

Regression

Structure and a Road Map of the Book

References

2 Probability and Stochastic Processes

Introduction����������������������������������������������������

Probability and Random Variables����������������������������������������������������������������������������������������������������������������

Probability�������������������������������������������������

Relative frequency definition

Axiomatic definition

Discrete Random Variables�������������������������������������������������������������������������������������������

Joint and conditional probabilities

Bayes theorem

Continuous Random Variables�������������������������������������������������������������������������������������������������

Mean and Variance�������������������������������������������������������������������

Complex random variables

Transformation of Random Variables����������������������������������������������������������������������������������������������������������������������

Examples of Distributions�������������������������������������������������������������������������������������������

Discrete Variables����������������������������������������������������������������������

The Bernoulli distribution

The Binomial distribution

The Multinomial distribution

Continuous Variables����������������������������������������������������������������������������

The uniform distribution

The Gaussian distribution

The central limit theorem

The exponential distribution

The beta distribution

The gamma distribution

The Dirichlet distribution

Stochastic Processes����������������������������������������������������������������������������

First and Second Order Statistics�������������������������������������������������������������������������������������������������������������������

Stationarity and Ergodicity�������������������������������������������������������������������������������������������������

Power Spectral Density����������������������������������������������������������������������������������

Properties of the autocorrelation sequence

Power spectral density

Transmission through a linear system

Physical interpretation of the PSD

Autoregressive Models�������������������������������������������������������������������������������

Information Theory����������������������������������������������������������������������

Discrete Random Variables�������������������������������������������������������������������������������������������

Information

Mutual and conditional information

Entropy and average mutual information

Continuous Random Variables�������������������������������������������������������������������������������������������������

Average mutual information and conditional information

Relative entropy or Kullback-Leibler divergence

Stochastic Convergence����������������������������������������������������������������������������������

Convergence everywhere

Convergence almost everywhere

Convergence in the mean-square sense

Convergence in probability

Convergence in distribution

Problems

References

3 Learning in Parametric Modeling

3.1 Introduction

3.2 Parameter Estimation: The Deterministic Point of View

3.3 Linear Regression

3.4 Classification

Generative versus discriminative learning

Supervised, semisupervised, and unsupervised learning

3.5 Biased Versus Unbiased Estimation

3.5.1 Biased or Unbiased Estimation?

3.6 The Cramér-Rao Lower Bound

3.7 Sufficient Statistic

3.8 Regularization

Inverse problems: Ill-conditioning and overfitting

3.9 The Bias-Variance Dilemma

3.9.1 Mean-Square Error Estimation

3.9.2 Bias-Variance Tradeoff

3.10 Maximum Likelihood Method

3.10.1 Linear Regression: The Nonwhite Gaussian Noise Case

3.11 Bayesian Inference

3.11.1 The Maximum A Posteriori Probability Estimation Method

3.12 Curse of Dimensionality

3.13 Validation

Cross-validation

3.14 Expected and Empirical Loss Functions

3.15 Nonparametric Modeling and Estimation

Problems

References

4 Mean-Square Error Linear Estimation

Introduction

Mean-Square Error Linear Estimation: The Normal Equations

The Cost Function Surface

A Geometric Viewpoint: Orthogonality Condition

Extension to Complex-Valued Variables

Widely Linear Complex-Valued Estimation

Circularity conditions

Optimizing with Respect to Complex-Valued Variables: Wirtinger Calculus

Linear Filtering

MSE Linear Filtering: A Frequency Domain Point of View

Deconvolution: image deblurring

Some Typical Applications

Interference Cancellation

System Identification

Deconvolution: Channel Equalization

Algorithmic Aspects

Forward and backward MSE optimal predictors

The Lattice-Ladder Scheme

Orthogonality of the optimal backward errors

Mean-Square Error Estimation of Linear Models

The Gauss-Markov Theorem

Constrained Linear Estimation: The Beamforming Case

Time-Varying Statistics: Kalman Filtering

Problems

MATLAB Exercises

References

5 Stochastic Gradient Descent: the lms Algorithm and its Family

Introduction

The Steepest Descent Method

Application to the Mean-Square Error Cost Function

Time-varying step-sizes

The Complex-Valued Case

Stochastic Approximation

Application to the MSE linear estimation

The Least-Mean-Squares Adaptive Algorithm

Convergence and Steady-State Performance of the LMS in Stationary Environments

Convergence of the parameter error vector

Cumulative Loss Bounds

The Affine Projection Algorithm

Geometric interpretation of APA

Orthogonal projections

The Normalized LMS

The Complex-Valued Case

The widely linear LMS

The widely linear APA

Relatives of the LMS

The sign-error LMS

The least-mean-fourth (LMF) algorithm

Transform-domain LMS

Simulation Examples

Adaptive Decision Feedback Equalization

The Linearly Constrained LMS

Tracking Performance of the LMS in Nonstationary Environments

Distributed Learning: The Distributed LMS

Cooperation Strategies

Centralized networks

Decentralized networks

The Diffusion LMS

Convergence and Steady-State Performance: Some Highlights

Consensus-Based Distributed Schemes

A Case Study: Target Localization

Some Concluding Remarks: Consensus Matrix

Problems

MATLAB Exercises

References

6 The Least-Squares Family

Introduction

Least-Squares Linear Regression: A Geometric Perspective

Statistical Properties of the LS Estimator

The LS estimator is unbiased

Covariance matrix of the LS estimator

The LS estimator is BLUE in the presence of white noise

The LS estimator achieves the Cramér-Rao bound for white Gaussian noise

Asymptotic distribution of the LS estimator

Orthogonalizing the Column Space of X: The SVD Method

Pseudo-inverse matrix and SVD

Ridge Regression

Principal components regression

The Recursive Least-Squares Algorithm

Time-iterative computations of ɸn, pn

Time updating of θn

Newton's Iterative Minimization Method

RLS and Newton's Method

Steady-State Performance of the RLS

Complex-Valued Data: The Widely Linear RLS

Computational Aspects of the LS Solution

Cholesky factorization

QR factorization

Fast RLS versions

The Coordinate and Cyclic Coordinate Descent Methods

Simulation Examples

Total-Least-Squares

Geometric interpretation of the total-least-squares method

Problems

MATLAB Exercises

References

7 Classification: A Tour of the Classics

Introduction

Bayesian Classification

The Bayesian classifier minimizes the misclassification error

Average Risk

Decision (Hyper)Surfaces

The Gaussian Distribution Case

Minimum distance classifiers

The Naive Bayes Classifier

The Nearest Neighbor Rule

Logistic Regression

Fisher's Linear Discriminant

Classification Trees

Combining Classifiers

Experimental comparisons

Schemes for combining classifiers

The Boosting Approach

The AdaBoost algorithm

The log-loss function

Boosting Trees

Case Study: Protein Folding Prediction

Protein folding prediction as a classification task

Classification of folding prediction via decision trees

Problems

MATLAB Exercises

References

8 Parameter Learning: A Convex Analytic Path

Introduction

Convex Sets and Functions

Convex Sets

Convex Functions

Projections onto Convex Sets

Properties of Projections

Fundamental Theorem of Projections onto Convex Sets

A Parallel Version of POCS

From Convex Sets to Parameter Estimation and Machine Learning

Regression

Classification

Infinite Many Closed Convex Sets: The Online Learning Case

Convergence of APSM

Some practical hints

Constrained Learning

The Distributed APSM

Optimizing Nonsmooth Convex Cost Functions

Subgradients and Subdifferentials

Minimizing Nonsmooth Continuous Convex Loss Functions: The BatchLearning Case

The subgradient method

The generic projected subgradient scheme

The projected gradient method (PGM)

Projected subgradient method

Online Learning for Convex Optimization

The PEGASOS algorithm

Regret Analysis

Regret analysis of the subgradient algorithm

Online Learning and Big Data Applications: A Discussion

Approximation, estimation and optimization errors

Batch versus online learning

Proximal Operators

Properties of the Proximal Operator

Proximal Minimization

Resolvent of the subdifferential mapping

Proximal Splitting Methods for Optimization

The proximal forward-backward splitting operator

Alternating direction method of multipliers (ADMM)

Mirror descent algorithms

Problems

MATLAB Exercises

Appendix to Chapter 8

References

9 Sparsity-Aware Learning: Concepts andTheoretical Foundations

Introduction

Searching for a Norm

The Least Absolute Shrinkage and Selection Operator (LASSO)

Sparse Signal Representation

In Search of the Sparsest Solution

The Ɩ2 norm minimizer

The l0 norm minimizer

The l1 norm minimizer

Characterization of the l1 norm minimizer

Geometric interpretation

Uniqueness of the l0 Minimizer

Mutual Coherence

Equivalence of l0 and l1 Minimizers: Sufficiency Conditions

Condition Implied by the Mutual Coherence Number

The Restricted Isometry Property (RIP)

Constructing matrices that obey the RIP of order k

Robust Sparse Signal Recovery from Noisy Measurements

Compressed Sensing: The Glory of Randomness

Compressed sensing

Dimensionality Reduction and Stable Embeddings

Sub-Nyquist Sampling: Analog-to-Information Conversion

A Case Study: Image De-Noising

Problems

MATLAB Exercises

References

10 Sparsity-aware Learning: Algorithms and Applications

Introduction

Sparsity-Promoting Algorithms

Greedy Algorithms

OMP can recover optimal sparse solutions: sufficiency condition

The LARS algorithm

Compressed sensing matching pursuit (CSMP) algorithms

Iterative Shrinkage/Thresholding (IST) Algorithms

Which Algorithm?: Some Practical Hints

Variations on the Sparsity-Aware Theme

Online Sparsity-Promoting Algorithms

LASSO: Asymptotic Performance

The Adaptive Norm-Weighted LASSO

Adaptive CoSaMP (AdCoSaMP) Algorithm

Sparse Adaptive Projection Subgradient Method (SpAPSM)

Projection onto the weighted l1 ball

Learning Sparse Analysis Models

Compressed Sensing for Sparse Signal Representation in Coherent Dictionaries

Cosparsity

A Case Study: Time-Frequency Analysis

Gabor transform and frames

Time-frequency resolution

Gabor frames

Time-frequency analysis of echolocation signals emitted by bats

Appendix to Chapter 10: Some Hints from the Theory of Frames

Problems

MATLAB Exercises

References

11 Learning in Reproducing Kernel Hilbert Spaces

11.1 Introduction

11.2 Generalized Linear Models

11.3 Volterra, Wiener, and Hammerstein Models

11.4 Cover's Theorem: Capacity of a Space in Linear Dichotomies

11.5 Reproducing Kernel Hilbert Spaces

11.5.1 Some Properties and Theoretical Highlights

11.5.2 Examples of Kernel Functions

Constructing kernels

String kernels

11.6 Representer Theorem

11.6.1 Semiparametric Representer Theorem

11.6.2 Nonparametric Modeling: A Discussion

11.7 Kernel Ridge Regression

11.8 Support Vector Regression

11.8.1 The Linear ε-Insensitive Optimal Regression

The solution

Solving the optimization task

11.9 Kernel Ridge Regression Revisited

11.10 Optimal Margin Classification: Support Vector Machines

11.10.1 Linearly Separable Classes: Maximum Margin Classifiers

The solution

The optimization task

11.10.2 Nonseparable Classes

The solution

The optimization task

11.10.3 Performance of SVMs and Applications

11.10.4 Choice of Hyperparameters

11.11 Computational Considerations

11.11.1 Multiclass Generalizations

11.12 Online Learning in RKHS

11.12.1 The Kernel LMS (KLMS)

11.12.2 The Naive Online Rreg Minimization Algorithm (NORMA)

Classification: the hinge loss function

Regression: the linear ε-insensitive loss function

Error bounds and convergence performance

11.12.3 The Kernel APSM Algorithm

Regression

Classification

11.13 Multiple Kernel Learning

11.14 Nonparametric Sparsity-Aware Learning: Additive Models

11.15 A Case Study: Authorship Identification

Problems

MATLAB Exercises

References

12 Bayesian Learning: Inference and the EM Algorithm

Introduction

Regression: A Bayesian Perspective

The Maximum Likelihood Estimator

The MAP Estimator

The Bayesian Approach

The Evidence Function and Occam's Razor Rule

Laplacian approximation and the evidence function

Exponential Family of Probability Distributions

The Exponential Family and the Maximum Entropy Method

Latent Variables and the EM Algorithm

The Expectation-Maximization Algorithm

The EM Algorithm: A Lower Bound Maximization View

Linear Regression and the EM Algorithm

Gaussian Mixture Models

Gaussian Mixture Modeling and Clustering

Combining Learning Models: A Probabilistic Point of View

Mixing Linear Regression Models

Mixture of experts

Hierarchical mixture of experts

Mixing Logistic Regression Models

Problems

MATLAB Exercises

Appendix to Chapter 12

PDFs with Exponent of Quadratic Form

The Conditional from the Joint Gaussian pdf

The Marginal from the Joint Gaussian Pdf

The Posterior from Gaussian Prior and Conditional Pdfs

References

13 Bayesian Learning: Approximate Inference and Nonparametric Models

13.1 Introduction

13.2 Variational Approximation in Bayesian Learning

The mean field approximation

13.2.1 The Case of the Exponential Family of Probability Distributions

13.3 A Variational Bayesian Approach to Linear Regression

Computation of the lower bound

13.4 A Variational Bayesian Approach to Gaussian Mixture Modeling

13.5 When Bayesian Inference Meets Sparsity

13.6 Sparse Bayesian Learning (SBL)

13.6.1 The Spike and Slab Method

13.7 The Relevance Vector Machine Framework

13.7.1 Adopting the Logistic Regression Model for Classification

13.8 Convex Duality and Variational Bounds

13.9 Sparsity-Aware Regression: A Variational Bound Bayesian Path

13.10 Sparsity-Aware Learning: Some Concluding Remarks

Parameter identifiability and sparse Bayesian modeling

13.11 Expectation Propagation

Minimizing the KL divergence

The expectation propagation algorithm

13.12 Nonparametric Bayesian Modeling

13.12.1 The Chinese Restaurant Process

13.12.2 Inference

13.12.3 Dirichlet Processes

13.12.4 The Stick-Breaking Construction of a DP

13.13 Gaussian Processes

13.13.1 Covariance Functions and Kernels

13.13.2 Regression

Dealing with hyperparameters

Computational considerations

13.13.3 Classification

13.14 A Case Study: Hyperspectral Image Unmixing

13.14.1 Hierarchical Bayesian Modeling

13.14.2 Experimental Results

Problems

MATLAB Exercises

References

14 Monte Carlo Methods

Introduction

Monte Carlo Methods: The Main Concept

Random number generation

Random Sampling Based on Function Transformation

Rejection Sampling

Importance Sampling

Monte Carlo Methods and the EM Algorithm

Markov Chain Monte Carlo Methods

Ergodic Markov Chains

The Metropolis Method

Convergence Issues

Gibbs Sampling

In Search of More Efficient Methods: A Discussion

Variational inference or Monte Carlo methods

A Case Study: Change-Point Detection

Problems

MATLAB Exercise

References

15 Probabilistic Graphical Models: Part I

Introduction

The Need for Graphical Models

Bayesian Networks and the Markov Condition

Graphs: Basic Definitions

Some Hints on Causality

d-separation

Sigmoidal Bayesian Networks

Linear Gaussian Models

Multiple-Cause Networks

I-Maps, Soundness, Faithfulness, and Completeness

Undirected Graphical Models

Independencies and I-Maps in Markov Random Fields

The Ising Model and Its Variants

Conditional Random Fields (CRFs)

Factor Graphs

Graphical Models for Error-Correcting Codes

Moralization of Directed Graphs

Exact Inference Methods: Message-Passing Algorithms

Exact Inference in Chains

Exact Inference in Trees

The Sum-Product Algorithm

The Max-Product and Max-Sum Algorithms

Problems

References

16 Probabilistic Graphical Models: Part II

Introduction

Triangulated Graphs and Junction Trees

Constructing a Join Tree

message-passing in Junction Trees

Approximate Inference Methods

Variational Methods: Local Approximation

Multiple-cause networks and the noisy-OR model

The Boltzmann machine

Block Methods for Variational Approximation

The mean field approximation and the Boltzmann machine

Loopy Belief Propagation

Dynamic Graphical Models

Hidden Markov Models

Inference

Learning the Parameters in an HMM

Discriminative Learning

Beyond HMMs: A Discussion

Factorial Hidden Markov Models

Time-Varying Dynamic Bayesian Networks

Learning Graphical Models

Parameter Estimation

Learning the Structure

Problems

References

17 Particle Filtering

Introduction

Sequential Importance Sampling

Importance Sampling Revisited

Resampling

Sequential Sampling

Kalman and Particle Filtering

Kalman Filtering: A Bayesian Point of View

Particle Filtering

Degeneracy

Generic Particle Filtering

Auxiliary Particle Filtering

Problems

MATLAB Exercises

References

18 Neural Networks and Deep Learning

Introduction

The Perceptron

The Kernel Perceptron Algorithm

Feed-Forward Multilayer Neural Networks

The Backpropagation Algorithm

The Gradient Descent Scheme

Speeding up the convergence rate

Some practical hints

Beyond the Gradient Descent Rationale

Selecting a Cost Function

Pruning the Network

Universal Approximation Property of Feed-Forward Neural Networks

Neural Networks: A Bayesian Flavor

Learning Deep Networks

The Need for Deep Architectures

Training Deep Networks

Distributed representations

Training Restricted Boltzmann Machines

Computation of the conditional probabilities

Contrastive divergence

Training Deep Feed-Forward Networks

Deep Belief Networks

Variations on the Deep Learning Theme

Gaussian Units

Stacked Autoencoders

The Conditional RBM

Case Study: A Deep Network for Optical Character Recognition

CASE Study: A Deep Autoencoder

Example: Generating Data via a DBN

Problems

MATLAB Exercises

References

19 Dimensionality Reduction

19.1 Introduction

19.2 Intrinsic Dimensionality

19.3 Principle Component Analysis

PCA, SVD, and low-Rank matrix factorization

Minimum error interpretation

PCA and information retrieval

Orthogonalizing properties of PCA and feature generation

Latent variables

19.4 Canonical Correlation Analysis

19.4.1 Relatives of CCA

Partial least-squares

19.5 Independent Component Analysis

19.5.1 ICA and Gaussianity

19.5.2 ICA and Higher Order Cumulants

ICA ambiguities

19.5.3 Non-Gaussianity and Independent Components

19.5.4 ICA Based on Mutual Information

19.5.5 Alternative Paths to ICA

The cocktail party problem

19.6 Dictionary Learning: The k-SVD Algorithm

Why the name k-SVD

19.7 Nonnegative Matrix Factorization

19.8 Learning Low-Dimensional Models: A Probabilistic Perspective

19.8.1 Factor Analysis

19.8.2 Probabilistic PCA

19.8.3 Mixture of Factors Analyzers: A Bayesian View to Compressed Sensing

19.9 Nonlinear Dimensionality Reduction

19.9.1 Kernel PCA

19.9.2 Graph-Based Methods

Laplacian eigenmaps

Local linear embedding (LLE)

Isometric mapping (ISOMAP)

19.10 Low-Rank Matrix Factorization: A Sparse Modeling Path

19.10.1 Matrix Completion

19.10.2 Robust PCA

19.10.3 Applications of Matrix Completion and ROBUST PCA

Matrix completion

Robust PCA/PCP

19.11 A Case Study: fMRI Data Analysis

Problems

MATLAB Exercises

References

Appendix-A- Linear Algebra

A.1 Properties of Matrices

Matrix inversion lemmas

Matrix derivatives

A.2 Positive Definite and Symmetric Matrices

A.3 Wirtinger Calculus

References

Appendix-B- Probability Theory and Statistics

B.1 Cramér-Rao Bound

B.2 Characteristic Functions

B.3 Moments and Cumulants

B.4 Edgeworth Expansion of a pdf

Reference

Appendix-C-Hints on Constrained Optimization

C.1 Equality Constraints

C.2 Inequality Constraints

The Karush-Kuhn-Tucker (KKT) conditions

Min-Max duality

Saddle point condition

Lagrangian duality

Convex programming

Wolfe dual representation

References

Index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

X

Y

Z

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc