arXiv:2012.00641v1 [cs.CV] 23 Nov 2020

A DECADE SURVEY OF CONTENT BASED IMAGE RETRIEVAL USING DEEP LEARNING


A Decade Survey of Content Based Image Retrieval using Deep Learning

Shiv Ram Dubey

Abstract—The content based image retrieval aims to find the similar images from a large scale dataset against a query image.

Generally, the similarity between the representative features of the query image and those of the dataset images is used to rank the images for retrieval. In the early days, various hand-designed feature descriptors were investigated based on visual cues such as color, texture and shape that represent the images. Over the past decade, however, deep learning has emerged as a dominant alternative to hand-designed feature engineering, as it learns the features automatically from the data. This paper presents a comprehensive survey of deep learning based developments in content based image retrieval over the past decade. The existing state-of-the-art methods are also categorized from different perspectives for a better understanding of the progress. The taxonomy used in this survey covers different supervision types, network types, descriptor types and retrieval types. A performance analysis of the state-of-the-art methods is also carried out. Insights are presented to help researchers observe the progress and make the best choices. The survey presented in this paper will help further research progress in image retrieval using deep learning.

Index Terms—Content Based Image Retrieval; Deep Learning; Convolutional Neural Networks; Survey; Supervised and

Unsupervised Learning.


1 INTRODUCTION

Image retrieval is a well-studied image matching problem in which images similar to a given query image are retrieved from a database [1], [2]. Basically, the similarity between the query image and the database images is used to rank the database images in decreasing order of similarity [3]. Thus, the performance of any image retrieval method depends upon the similarity computation between images. Ideally, the method used to compute the similarity score between two images should be discriminative, robust and efficient. The easiest way to compute the similarity between two images is to find the sum of absolute differences of the corresponding pixels in both images, i.e., the L1 distance. This method is also referred to as template matching. However, this approach is not robust against geometric and photometric changes in the image, such as translation, rotation, viewpoint and illumination changes. This is demonstrated in Fig. 1 with the help of two pictures of the same category from the Corel dataset [4] and the corresponding representative intensity values of a window. Another problem with this approach is that it is not efficient, because the high dimensionality of the image leads to a high computation cost for finding the similarity between the query and database images.
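The lack of robustness of pixel-wise L1 matching can be illustrated with a small sketch. The toy 8x8 image, the three-pixel shift and the helper name `l1_distance` are illustrative assumptions and are not taken from Fig. 1. The sketch suggests that a translated copy of an image can be farther from the original, in terms of L1 distance, than an image with no content at all.

```python
import numpy as np


def l1_distance(img_a, img_b):
    """Sum of absolute differences between corresponding pixels."""
    # Cast to a signed type so that uint8 subtraction cannot wrap around.
    diff = img_a.astype(np.int64) - img_b.astype(np.int64)
    return int(np.abs(diff).sum())


# Toy 8x8 image: a bright 3x3 square on a dark background (illustrative data).
image = np.zeros((8, 8), dtype=np.uint8)
image[2:5, 2:5] = 255

# The same content translated three pixels to the right.
shifted = np.roll(image, shift=3, axis=1)

# An image with no content at all.
blank = np.zeros_like(image)

d_same = l1_distance(image, image)     # identical images
d_shift = l1_distance(image, shifted)  # same content, translated
d_blank = l1_distance(image, blank)    # unrelated (empty) image

print(d_same, d_shift, d_blank)
```

In this toy setting the translated copy is ranked below the empty image, which is the kind of failure that motivates descriptor based matching.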

1.1 Hand-crafted Descriptor based Image Retrieval

In order to make the retrieval robust to geometric and photometric changes, the similarity between images is computed based on the content of the images. Basically, the content of the images (i.e., the visual appearance) in terms of color, texture, shape, gradient, etc. is represented in the form of a feature descriptor [6]. The similarity between the feature vectors of the corresponding images is treated as the similarity between the images. Thus, the performance of any content based image retrieval (CBIR) method heavily depends upon the feature descriptor representation of the image. Any feature descriptor representation method is expected to have discriminating ability, robustness and low dimensionality. Fig. 2 illustrates the effect of the descriptor function in terms of its robustness. The rotation and scale invariant hybrid descriptor (RSHD) function [7] is used to show the rotation invariance between an image taken from the Corel dataset [4] and its rotated version. It can be seen in Fig. 2 that the comparison based on raw intensity values does not work, whereas the descriptor based comparison works, provided that the descriptor function is able to capture the relevant information from the image. Various feature descriptor representation methods have been investigated to compute the similarity between two images for content based image retrieval. The feature descriptor representation utilizes visual cues of the images that are selected manually based on the need [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18], [19]. These approaches are also termed hand-designed or hand-engineered feature description. Moreover, these methods are generally unsupervised, as they do not need data to design the feature representation method. Various surveys have also been conducted from time to time to present the progress in content based image retrieval, including [2] in 2000, [20] in 2002, [21] in 2004, [22] in 2006, [23] in 2007, [24] in 2008, [25] in 2014 and [26] in 2017. Hand-engineered features for image retrieval were a very active research area. However, their performance was limited, as hand-engineered features are not able to represent the image characteristics accurately.

Fig. 1: Comparing pixels of two regions (images are taken from the Corel database [4]). The presented raw intensity values are only indicative, not actual. Using raw intensity values for image similarity computation is not a good idea, as it is not robust against geometric and photometric changes. This figure originally appeared in [5].

Fig. 2: Depicting the rotation robustness of a descriptor function. The image is taken from the Corel database [4]. The 1st image is a 90° rotated version of the 2nd image in the counter-clockwise direction. The rotation and scale invariant hybrid descriptor (RSHD) [7] is used as the descriptor function in this example. In spite of the differences in the intensity values at the corresponding pixels of the two images, the feature descriptors are very similar. This figure originally appeared in [5].

Fig. 3: The pipeline of state-of-the-art feature representation is replaced by the CNN based feature representation with increased discriminative ability and robustness.

S.R. Dubey is with the Computer Vision Group, Indian Institute of Information Technology, Sri City, Chittoor, Andhra Pradesh-517646, India (e-mail: shivram1987@gmail.com, srdubey@iiits.in).

1.2 Distance Metric Learning based Image Retrieval

Distance metric learning has also been used very extensively for feature vector representation [27]. It has also been explored well for image retrieval [28]. Some notable deep metric learning based image retrieval approaches include contextual constraints distance metric learning [29], kernel-based distance metric learning [30], visuality-preserving distance metric learning [31], rank-based distance metric learning [32], semi-supervised distance metric learning [33], etc. Generally, the deep metric learning based approaches have shown promising retrieval performance compared to hand-crafted approaches. However, most of the existing deep metric learning based methods rely on linear distance functions, which limits their discriminative ability and robustness in representing non-linear data for image retrieval. Moreover, they are also not able to handle multi-modal retrieval effectively.

1.3 Deep Learning based Image Retrieval

Over the past decade, a shift has been observed in feature representation from hand-engineered to learning-based after the emergence of deep learning [34], [35]. This transition is depicted in Fig. 3, where convolutional neural network based feature learning replaces the state-of-the-art pipeline of traditional hand-engineered feature representation. Deep learning is a hierarchical feature representation technique that learns abstract features from data which are important for that dataset and application [36]. Based on the type of data to be processed, different architectures came into existence, such as the Artificial Neural Network (ANN)/Multilayer Perceptron (MLP) for 1-D data [37], [38], [39], Convolutional Neural Networks (CNN) for image data [40], [41], [42], and Recurrent Neural Networks (RNN) for time-series data [43], [44], [45]. Off-the-shelf CNN features have shown very promising performance for object recognition and retrieval tasks in terms of discriminative power and robustness [34]. Huge progress has been made in this decade to utilize the power of deep learning for content based image retrieval [46], [47], [48], [49]. Thus, this survey mainly focuses on the progress in state-of-the-art deep learning based models and features for content based image retrieval from its inception. A taxonomy of state-of-the-art deep learning approaches for image retrieval is portrayed in Fig. 4.

Fig. 4: Taxonomy used in this survey to categorize the existing deep learning based image retrieval approaches.

The major contributions of this survey, w.r.t. the existing literature, can be outlined as follows:

1) To the best of my knowledge, this survey can be seen as the first of its kind to cover the deep learning based image retrieval approaches very comprehensively in terms of the evolution of image retrieval using deep learning, different supervision types, network types, descriptor types, retrieval types and other aspects.

2) In contrast to the recent reviews [47], [28], [48], this survey specifically covers the progress in image retrieval using deep learning in the 2011-2020 decade rather than hand-crafted and distance metric learning based approaches. Moreover, it provides a very informative taxonomy (refer to Fig. 4) with a wider coverage of existing deep learning based image retrieval approaches compared to the recent survey [49].

3) This survey enriches the reader with the state-of-the-art deep learning methods for image retrieval, analyzed from various perspectives.

4) This paper also presents brief highlights and important discussions along with comprehensive comparisons on benchmark datasets using the state-of-the-art deep learning based image retrieval approaches (refer to Tables 3, 4, and 5).

TABLE 1: The summary of large-scale datasets for deep learning based image retrieval.

| Dataset | Year | #Classes | Training | Test | Image Type |
|---|---|---|---|---|---|
| CIFAR-10 [50] | 2009 | 10 | 50,000 | 10,000 | Object Category Images |
| NUS-WIDE [51] | 2009 | 21 | 97,214 | 65,075 | Scene Images |
| MNIST [52] | 1998 | 10 | 60,000 | 10,000 | Handwritten Digit Images |
| SVHN [53] | 2011 | 10 | 73,257 | 26,032 | House Number Images |
| SUN397 [54] | 2010 | 397 | 100,754 | 8,000 | Scene Images |
| UT-ZAP50K [55] | 2014 | 8 | 42,025 | 8,000 | Shoes Images |
| Yahoo-1M [56] | 2015 | 116 | 1,011,723 | 112,363 | Clothing Images |
| ILSVRC2012 [57] | 2012 | 1,000 | ∼1.2 M | 50,000 | Object Category Images |
| MS COCO [58] | 2014 | 80 | 82,783 | 40,504 | Common Object Images |
| MIRFlicker-1M [59] | 2010 | - | 1 M | - | Scene Images |
| Google Landmarks [60] | 2017 | 15 K | ∼1 M | - | Landmark Images |
| Google Landmarks v2 [61] | 2020 | 200 K | 5 M | - | Landmark Images |

This survey is organized as follows: the background is presented in Section 2 in terms of the datasets and evaluation measures; the evolution of deep learning based image retrieval is compiled in Section 3; the categorization of existing approaches based on the supervision type, network type, descriptor type, and retrieval type is discussed in Sections 4, 5, 6, and 7, respectively; some other aspects are highlighted in Section 8; the performance comparison of the popular methods is performed in Section 9; and the conclusions and future directions are presented in Section 10.

2 BACKGROUND

In this section the background is presented in terms of the

commonly used performance evaluation metrics and benchmark

retrieval datasets.

2.1 Retrieval Evaluation Measures

In order to judge the performance of image retrieval approaches, precision, recall and f-score are the common evaluation metrics. The mean average precision (mAP) is also very commonly used in the literature. Precision is defined as the percentage of correctly retrieved images out of the total number of retrieved images. Recall is another performance measure used for image retrieval; it is computed as the percentage of correctly retrieved images out of the total number of relevant images present in the dataset. The f-score is the harmonic mean of precision and recall, computed as (2 × precision × recall)/(precision + recall). Thus, the f-score provides a trade-off between precision and recall.
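A minimal sketch of these measures is given below. The function names and the toy image identifiers are illustrative assumptions. The values are returned as fractions in [0, 1] rather than percentages. The average precision shown here follows one common convention (the precision at each rank where a relevant image occurs, summed and divided by the number of relevant images); the survey does not spell out its exact mAP definition, so other conventions are possible.

```python
def precision_recall_fscore(retrieved, relevant):
    """Precision, recall and f-score for one query, as fractions in [0, 1]."""
    retrieved = list(retrieved)
    relevant = set(relevant)
    hits = sum(1 for item in retrieved if item in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    denom = precision + recall
    fscore = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, fscore


def average_precision(ranked, relevant):
    """Average precision of one ranked list (one common convention)."""
    relevant = set(relevant)
    hits = 0
    total = 0.0
    for rank, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / rank  # precision at this rank
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(ranked_lists, relevant_sets):
    """Mean of the average precision values over all queries."""
    aps = [average_precision(r, s) for r, s in zip(ranked_lists, relevant_sets)]
    return sum(aps) / len(aps) if aps else 0.0


# Toy query: four retrieved images, three relevant images in the dataset.
ranked = ["a", "b", "c", "d"]
relevant = {"a", "c", "e"}
precision, recall, fscore = precision_recall_fscore(ranked, relevant)
ap = average_precision(ranked, relevant)
print(precision, recall, fscore, ap)
```

For the toy query, two of the four retrieved images are relevant, so the precision is 0.5, while the recall is 2/3 because one relevant image is never retrieved.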

2.2 Datasets

With the inception of deep learning models, various large-scale datasets have been created to facilitate research in image recognition and retrieval. The details of the large-scale datasets are summarized in Table 1. Datasets with various types of images are available to test deep learning based approaches, such as object category datasets [50], [57], [58], scene datasets [51], [54], [62], digit datasets [52], [53], apparel datasets [55], [56], landmark datasets [60], [61], etc. CIFAR-10 is a very widely used object category dataset [50]. ImageNet (ILSVRC2012), a large-scale dataset, is also an object category dataset with more than a million images [57]. The MS COCO dataset [58], created for common object detection, is also utilized for image retrieval. Among the scene image datasets commonly used for retrieval, the NUS-WIDE dataset is from the National University of Singapore [51]; SUN397 is a scene understanding dataset with 397 categories and more than 100,000 images [54], [63]; and the MIRFlicker-1M dataset [62] consists of a million images downloaded from the social photography site Flickr. MNIST is one of the oldest large-scale digit image datasets [52], consisting of optical characters. SVHN is another digit dataset [53], built from street view house number images, and it is more complex than the MNIST dataset. The shoes apparel dataset, namely UT-ZAP50K [55], consists of roughly 50K images. Yahoo-1M is another large-scale apparel dataset, used in [56] for image retrieval. The Google Landmarks dataset has around a million landmark images [60]. The extended version of Google Landmarks (i.e., v2) [61] contains around 5 million landmark images. More datasets are used for retrieval in the literature, such as Corel, Oxford and Paris; however, these are not large-scale datasets. CIFAR-10, MNIST, SVHN and ImageNet are the most widely used datasets in the majority of the research.

3 EVOLUTION OF DEEP LEARNING FOR CONTENT

BASED IMAGE RETRIEVAL (CBIR)

The deep learning based generation of descriptors or hash codes is

the recent trends large-scale content based image retrieval, due

to its computational efficiency and retrieval quality [28]. The

deep learning driven features led to the improved retrieval quality.

Recently, it has received increasing attention to utilize the features

for image retrieval using end-to-end representation learning. In

this section, a journey of deep learning models for image retrieval

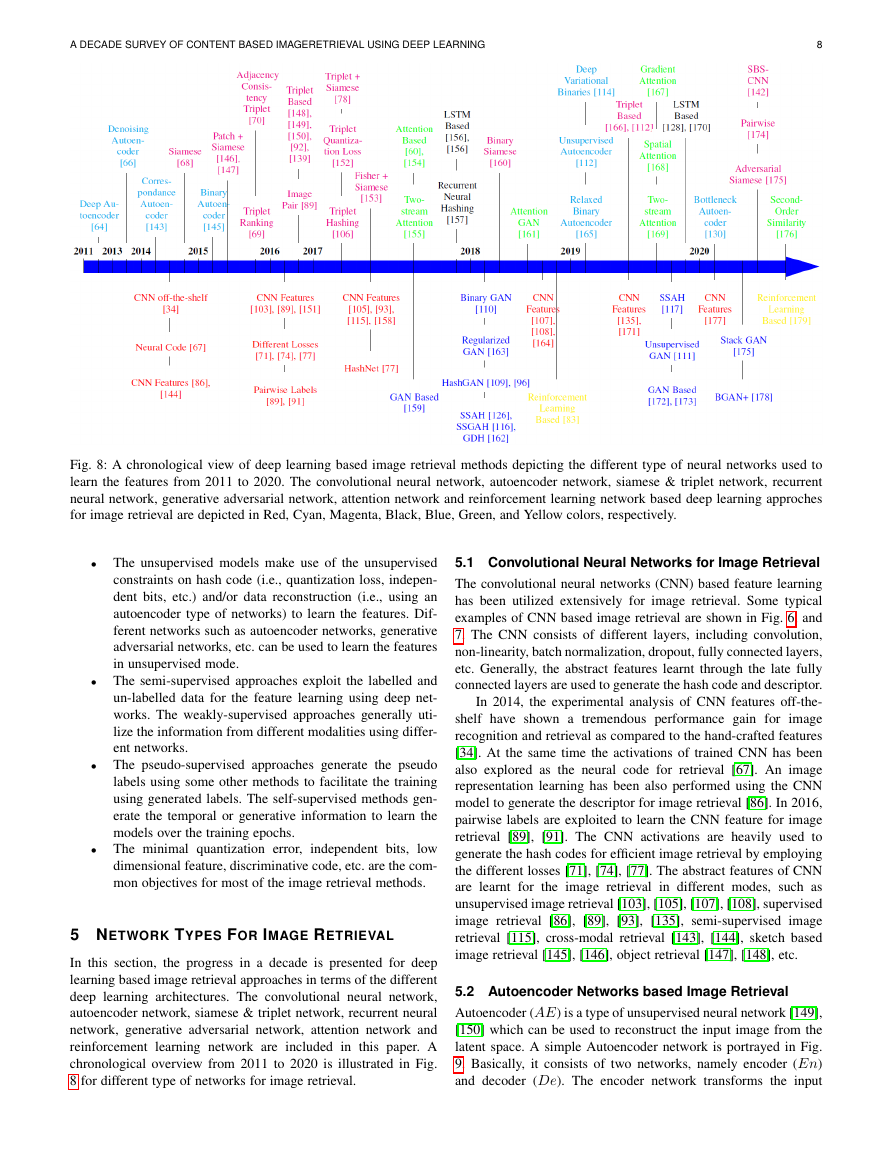

from 2011 to 2020 is presented. A chronological overview of

different methods is illustrated in Fig. 5. Rest of this section

highlights the selected methods in chronological manner.

3.1 Chronological Overview: 2011 - 2015

3.1.1 2011-2013

Among the initial attempts, in 2011, Krizhevsky and Hinton used a deep autoencoder to map images to short binary codes for content based image retrieval (CBIR) [64]. Kang et al. (2012) proposed deep multi-view hashing to generate the code for CBIR from multiple views of the data by modeling the layers with view-specific and shared hidden nodes [65]. In 2013, Wu et al. considered multiple pretrained stacked denoising autoencoders over low-level features of the images [66]. They also fine-tuned multiple deep networks on the output of the pretrained autoencoders and integrated them to generate a multi-modal similarity function for image retrieval.

3.1.2 2014

In an outstanding work, Babenko et al. (2014) utilized the activations of the top layers of a large convolutional neural network (CNN) as the descriptors (neural codes) for image retrieval [67], as depicted in Fig. 6. Very promising performance was recorded using the neural codes for image retrieval even when the model is trained on unrelated data. The retrieval results are further improved by re-training the model on similar data and then extracting the neural codes as the descriptor. They also compressed the neural codes using principal component analysis (PCA) to generate a compact descriptor. In 2014, Wang et al. investigated a deep ranking model that learns the similarity metric directly from images [68]. Basically, they employed triplets to capture the inter-class and intra-class image differences in order to improve the discriminative ability of the learnt latent space used as the descriptor.
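The triplet idea can be sketched with a hinge-style ranking loss on descriptor vectors. The squared Euclidean distance, the margin value and the function name `triplet_hinge_loss` are assumptions made for illustration; the exact formulation used in [68] may differ. The loss is zero when the positive image is closer to the query than the negative image by at least the margin, and positive otherwise.

```python
import numpy as np


def triplet_hinge_loss(anchor, positive, negative, margin=1.0):
    """Hinge loss on one (query, positive, negative) descriptor triplet."""
    anchor = np.asarray(anchor, dtype=float)
    positive = np.asarray(positive, dtype=float)
    negative = np.asarray(negative, dtype=float)
    d_pos = np.sum((anchor - positive) ** 2)  # distance to the same-class image
    d_neg = np.sum((anchor - negative) ** 2)  # distance to the other-class image
    # Penalize triplets where the negative is not farther than the positive
    # by at least `margin`.
    return float(max(0.0, margin + d_pos - d_neg))


query = [0.0, 0.0]
same_class = [0.1, 0.0]

easy = triplet_hinge_loss(query, same_class, negative=[2.0, 0.0])
hard = triplet_hinge_loss(query, same_class, negative=[0.5, 0.0])
print(easy, hard)
```

Only the second triplet, whose negative lies close to the query, contributes to the loss, which is why such losses push the latent space towards separating the classes.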


A DECADE SURVEY OF CONTENT BASED IMAGERETRIEVAL USING DEEP LEARNING

4

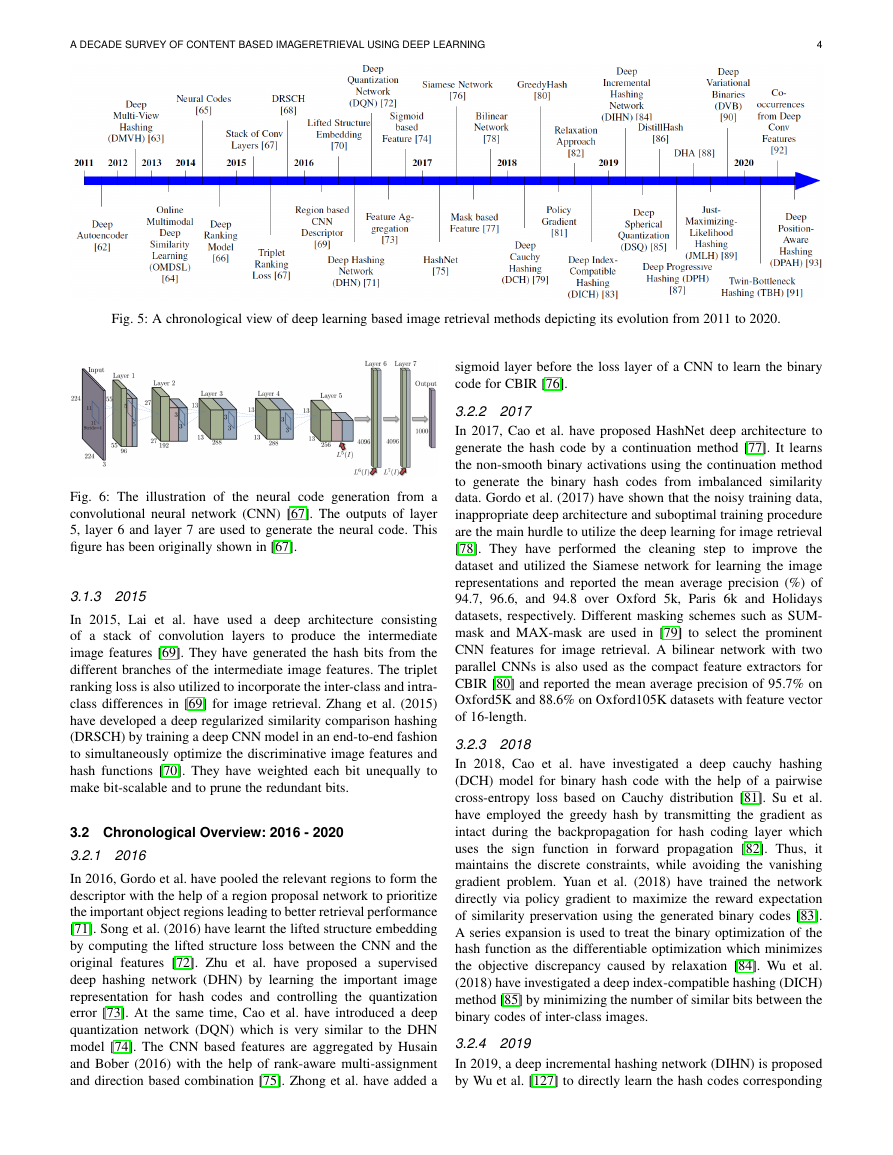

Fig. 5: A chronological view of deep learning based image retrieval methods depicting its evolution from 2011 to 2020.

sigmoid layer before the loss layer of a CNN to learn the binary

code for CBIR [76].

3.2.2 2017

In 2017, Cao et al. have proposed HashNet deep architecture to

generate the hash code by a continuation method [77]. It learns

the non-smooth binary activations using the continuation method

to generate the binary hash codes from imbalanced similarity

data. Gordo et al. (2017) have shown that the noisy training data,

inappropriate deep architecture and suboptimal training procedure

are the main hurdle to utilize the deep learning for image retrieval

[78]. They have performed the cleaning step to improve the

dataset and utilized the Siamese network for learning the image

representations and reported the mean average precision (%) of

94.7, 96.6, and 94.8 over Oxford 5k, Paris 6k and Holidays

datasets, respectively. Different masking schemes such as SUM-

mask and MAX-mask are used in [79] to select the prominent

CNN features for image retrieval. A bilinear network with two

parallel CNNs is also used as the compact feature extractors for

CBIR [80] and reported the mean average precision of 95.7% on

Oxford5K and 88.6% on Oxford105K datasets with feature vector

of 16-length.

3.2.3 2018

In 2018, Cao et al. have investigated a deep cauchy hashing

(DCH) model for binary hash code with the help of a pairwise

cross-entropy loss based on Cauchy distribution [81]. Su et al.

have employed the greedy hash by transmitting the gradient as

intact during the backpropagation for hash coding layer which

uses the sign function in forward propagation [82]. Thus,

it

maintains the discrete constraints, while avoiding the vanishing

gradient problem. Yuan et al. (2018) have trained the network

directly via policy gradient to maximize the reward expectation

of similarity preservation using the generated binary codes [83].

A series expansion is used to treat the binary optimization of the

hash function as the differentiable optimization which minimizes

the objective discrepancy caused by relaxation [84]. Wu et al.

(2018) have investigated a deep index-compatible hashing (DICH)

method [85] by minimizing the number of similar bits between the

binary codes of inter-class images.

3.2.4 2019

In 2019, a deep incremental hashing network (DIHN) is proposed

by Wu et al. [127] to directly learn the hash codes corresponding

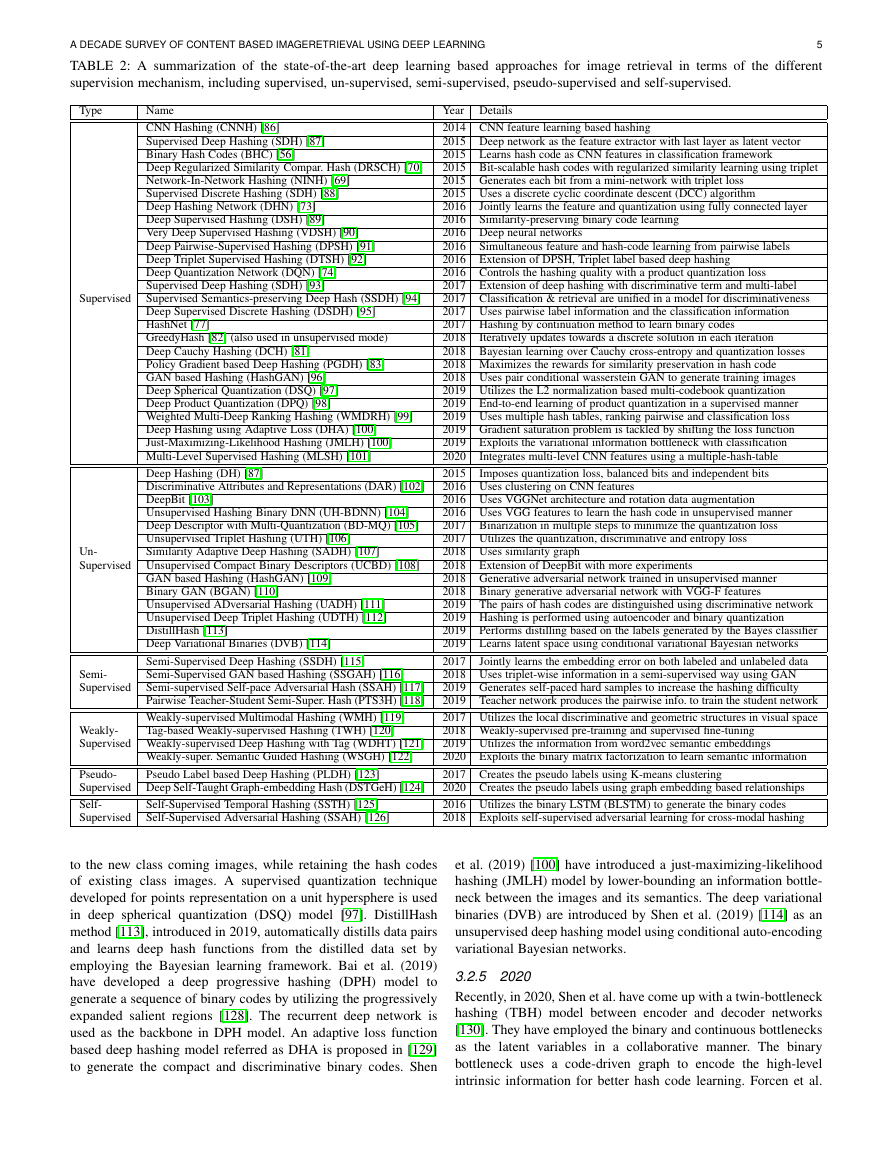

Fig. 6: The illustration of the neural code generation from a

convolutional neural network (CNN) [67]. The outputs of layer

5, layer 6 and layer 7 are used to generate the neural code. This

figure has been originally shown in [67].

3.1.3 2015

In 2015, Lai et al. have used a deep architecture consisting

of a stack of convolution layers to produce the intermediate

image features [69]. They have generated the hash bits from the

different branches of the intermediate image features. The triplet

ranking loss is also utilized to incorporate the inter-class and intra-

class differences in [69] for image retrieval. Zhang et al. (2015)

have developed a deep regularized similarity comparison hashing

(DRSCH) by training a deep CNN model in an end-to-end fashion

to simultaneously optimize the discriminative image features and

hash functions [70]. They have weighted each bit unequally to

make bit-scalable and to prune the redundant bits.

3.2 Chronological Overview: 2016 - 2020

3.2.1 2016

In 2016, Gordo et al. have pooled the relevant regions to form the

descriptor with the help of a region proposal network to prioritize

the important object regions leading to better retrieval performance

[71]. Song et al. (2016) have learnt the lifted structure embedding

by computing the lifted structure loss between the CNN and the

original features [72]. Zhu et al. have proposed a supervised

deep hashing network (DHN) by learning the important image

representation for hash codes and controlling the quantization

error [73]. At the same time, Cao et al. have introduced a deep

quantization network (DQN) which is very similar to the DHN

model [74]. The CNN based features are aggregated by Husain

and Bober (2016) with the help of rank-aware multi-assignment

and direction based combination [75]. Zhong et al. have added a

�

5

A DECADE SURVEY OF CONTENT BASED IMAGERETRIEVAL USING DEEP LEARNING

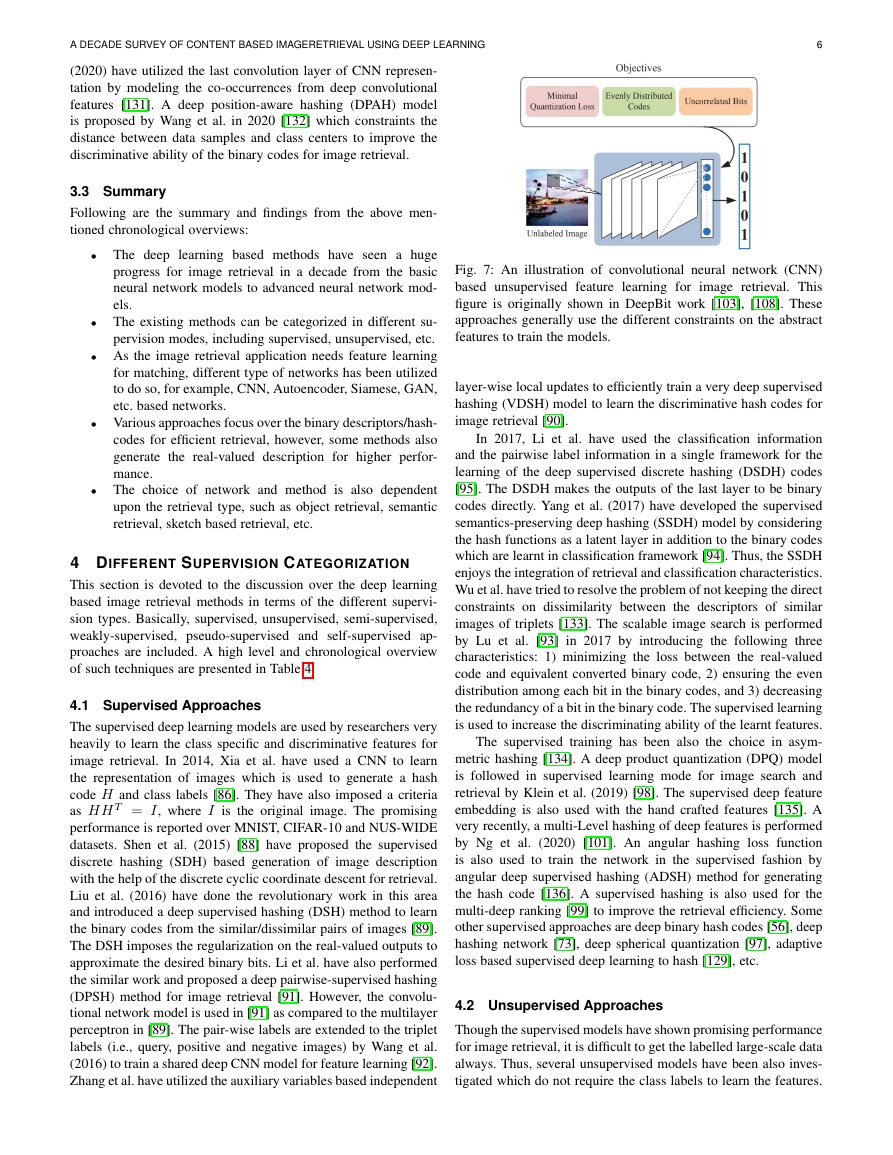

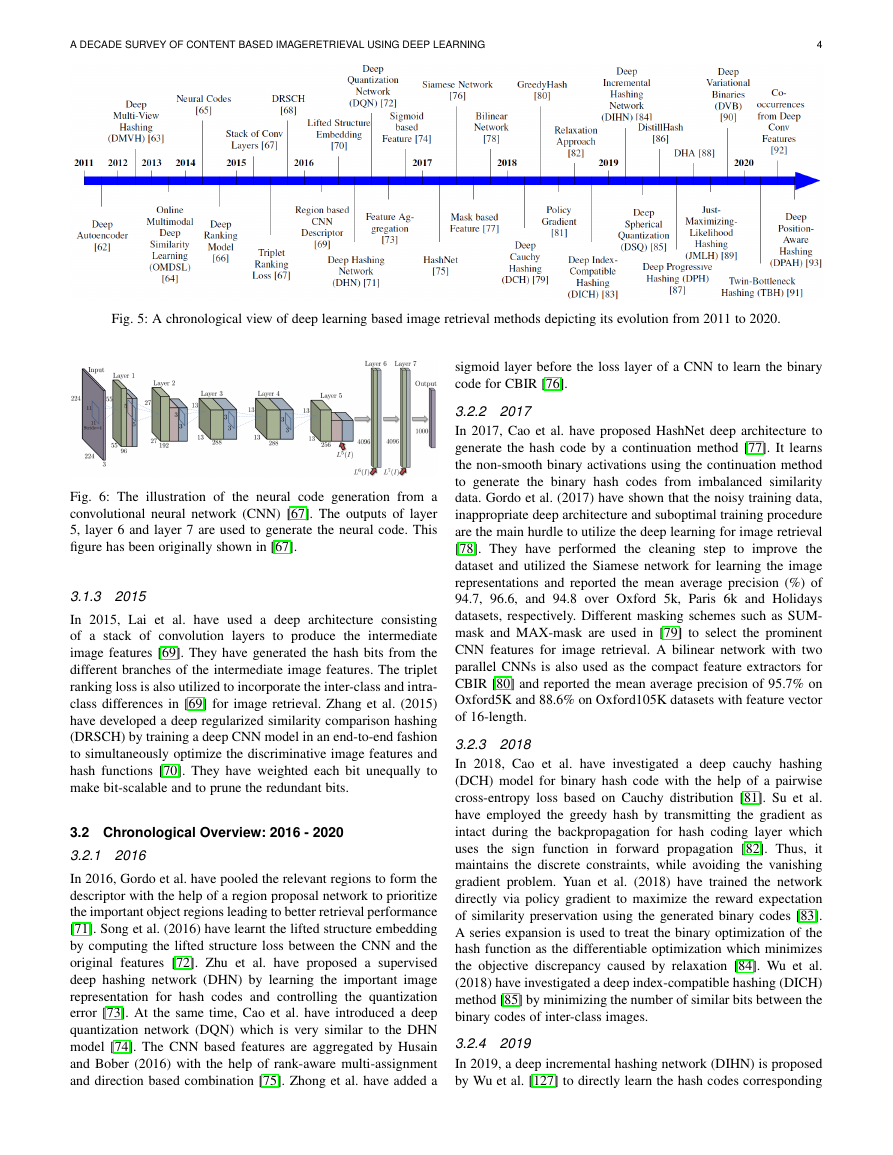

TABLE 2: A summarization of the state-of-the-art deep learning based approaches for image retrieval in terms of the different

supervision mechanism, including supervised, un-supervised, semi-supervised, pseudo-supervised and self-supervised.

Type

Supervised

Name

CNN Hashing (CNNH) [86]

Supervised Deep Hashing (SDH) [87]

Binary Hash Codes (BHC) [56]

Deep Regularized Similarity Compar. Hash (DRSCH) [70]

Network-In-Network Hashing (NINH) [69]

Supervised Discrete Hashing (SDH) [88]

Deep Hashing Network (DHN) [73]

Deep Supervised Hashing (DSH) [89]

Very Deep Supervised Hashing (VDSH) [90]

Deep Pairwise-Supervised Hashing (DPSH) [91]

Deep Triplet Supervised Hashing (DTSH) [92]

Deep Quantization Network (DQN) [74]

Supervised Deep Hashing (SDH) [93]

Supervised Semantics-preserving Deep Hash (SSDH) [94]

Deep Supervised Discrete Hashing (DSDH) [95]

HashNet [77]

GreedyHash [82] (also used in unsupervised mode)

Deep Cauchy Hashing (DCH) [81]

Policy Gradient based Deep Hashing (PGDH) [83]

GAN based Hashing (HashGAN) [96]

Deep Spherical Quantization (DSQ) [97]

Deep Product Quantization (DPQ) [98]

Weighted Multi-Deep Ranking Hashing (WMDRH) [99]

Deep Hashing using Adaptive Loss (DHA) [100]

Just-Maximizing-Likelihood Hashing (JMLH) [100]

Multi-Level Supervised Hashing (MLSH) [101]

Deep Hashing (DH) [87]

Discriminative Attributes and Representations (DAR) [102]

DeepBit [103]

Unsupervised Hashing Binary DNN (UH-BDNN) [104]

Deep Descriptor with Multi-Quantization (BD-MQ) [105]

Unsupervised Triplet Hashing (UTH) [106]

Similarity Adaptive Deep Hashing (SADH) [107]

GAN based Hashing (HashGAN) [109]

Binary GAN (BGAN) [110]

Unsupervised ADversarial Hashing (UADH) [111]

Unsupervised Deep Triplet Hashing (UDTH) [112]

DistillHash [113]

Deep Variational Binaries (DVB) [114]

Semi-Supervised Deep Hashing (SSDH) [115]

Semi-Supervised GAN based Hashing (SSGAH) [116]

Semi-supervised Self-pace Adversarial Hash (SSAH) [117]

Pairwise Teacher-Student Semi-Super. Hash (PTS3H) [118]

Weakly-supervised Multimodal Hashing (WMH) [119]

Tag-based Weakly-supervised Hashing (TWH) [120]

Un-

Supervised Unsupervised Compact Binary Descriptors (UCBD) [108]

Semi-

Supervised

Weakly-

Supervised Weakly-supervised Deep Hashing with Tag (WDHT) [121]

Weakly-super. Semantic Guided Hashing (WSGH) [122]

Pseudo Label based Deep Hashing (PLDH) [123]

Pseudo-

Supervised Deep Self-Taught Graph-embedding Hash (DSTGeH) [124]

Self-

Supervised

Self-Supervised Temporal Hashing (SSTH) [125]

Self-Supervised Adversarial Hashing (SSAH) [126]

Iteratively updates towards a discrete solution in each iteration

Simultaneous feature and hash-code learning from pairwise labels

Jointly learns the feature and quantization using fully connected layer

Similarity-preserving binary code learning

Year Details

2014 CNN feature learning based hashing

2015 Deep network as the feature extractor with last layer as latent vector

2015 Learns hash code as CNN features in classification framework

2015 Bit-scalable hash codes with regularized similarity learning using triplet

2015 Generates each bit from a mini-network with triplet loss

2015 Uses a discrete cyclic coordinate descent (DCC) algorithm

2016

2016

2016 Deep neural networks

2016

2016 Extension of DPSH, Triplet label based deep hashing

2016 Controls the hashing quality with a product quantization loss

2017 Extension of deep hashing with discriminative term and multi-label

2017 Classification & retrieval are unified in a model for discriminativeness

2017 Uses pairwise label information and the classification information

2017 Hashing by continuation method to learn binary codes

2018

2018 Bayesian learning over Cauchy cross-entropy and quantization losses

2018 Maximizes the rewards for similarity preservation in hash code

2018 Uses pair conditional wasserstein GAN to generate training images

2019 Utilizes the L2 normalization based multi-codebook quantization

2019 End-to-end learning of product quantization in a supervised manner

2019 Uses multiple hash tables, ranking pairwise and classification loss

2019 Gradient saturation problem is tackled by shifting the loss function

2019 Exploits the variational information bottleneck with classification

2020

2015

2016 Uses clustering on CNN features

2016 Uses VGGNet architecture and rotation data augmentation

2016 Uses VGG features to learn the hash code in unsupervised manner

2017 Binarization in multiple steps to minimize the quantization loss

2017 Utilizes the quantization, discriminative and entropy loss

2018 Uses similarity graph

2018 Extension of DeepBit with more experiments

2018 Generative adversarial network trained in unsupervised manner

2018 Binary generative adversarial network with VGG-F features

2019 The pairs of hash codes are distinguished using discriminative network

2019 Hashing is performed using autoencoder and binary quantization

2019

2019 Learns latent space using conditional variational Bayesian networks

2017

2018 Uses triplet-wise information in a semi-supervised way using GAN

2019 Generates self-paced hard samples to increase the hashing difficulty

2019 Teacher network produces the pairwise info. to train the student network

2017 Utilizes the local discriminative and geometric structures in visual space

2018 Weakly-supervised pre-training and supervised fine-tuning

2019 Utilizes the information from word2vec semantic embeddings

2020 Exploits the binary matrix factorization to learn semantic information

2017 Creates the pseudo labels using K-means clustering

2020 Creates the pseudo labels using graph embedding based relationships

2016 Utilizes the binary LSTM (BLSTM) to generate the binary codes

2018 Exploits self-supervised adversarial learning for cross-modal hashing

Integrates multi-level CNN features using a multiple-hash-table

Imposes quantization loss, balanced bits and independent bits

Performs distilling based on the labels generated by the Bayes classifier

Jointly learns the embedding error on both labeled and unlabeled data

to the new class coming images, while retaining the hash codes

of existing class images. A supervised quantization technique

developed for points representation on a unit hypersphere is used

in deep spherical quantization (DSQ) model [97]. DistillHash

method [113], introduced in 2019, automatically distills data pairs

and learns deep hash functions from the distilled data set by

employing the Bayesian learning framework. Bai et al. (2019)

have developed a deep progressive hashing (DPH) model

to

generate a sequence of binary codes by utilizing the progressively

expanded salient regions [128]. The recurrent deep network is

used as the backbone in DPH model. An adaptive loss function

based deep hashing model referred as DHA is proposed in [129]

to generate the compact and discriminative binary codes. Shen

et al. (2019) [100] have introduced a just-maximizing-likelihood

hashing (JMLH) model by lower-bounding an information bottle-

neck between the images and its semantics. The deep variational

binaries (DVB) are introduced by Shen et al. (2019) [114] as an

unsupervised deep hashing model using conditional auto-encoding

variational Bayesian networks.

3.2.5 2020

Recently, in 2020, Shen et al. came up with a twin-bottleneck hashing (TBH) model between the encoder and decoder networks [130]. They employed binary and continuous bottlenecks as the latent variables in a collaborative manner. The binary bottleneck uses a code-driven graph to encode the high-level intrinsic information for better hash code learning. Forcen et al. (2020) utilized the last convolution layer of the CNN representation by modeling the co-occurrences from deep convolutional features [131]. A deep position-aware hashing (DPAH) model is proposed by Wang et al. in 2020 [132], which constrains the distance between data samples and class centers to improve the discriminative ability of the binary codes for image retrieval.

3.3 Summary

The summary and findings from the above chronological overviews are as follows:

• Deep learning based methods for image retrieval have seen huge progress in a decade, from basic neural network models to advanced neural network models.

• The existing methods can be categorized into different supervision modes, including supervised, unsupervised, etc.

• As the image retrieval application needs feature learning for matching, different types of networks have been utilized to do so, for example, CNN, Autoencoder, Siamese and GAN based networks.

• Various approaches focus on binary descriptors/hash codes for efficient retrieval; however, some methods also generate real-valued descriptions for higher performance.

• The choice of network and method also depends upon the retrieval type, such as object retrieval, semantic retrieval, sketch based retrieval, etc.
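The efficiency argument for binary descriptors can be sketched as follows: once images are represented by short bit strings, ranking a database only requires counting the differing bits (the Hamming distance). The thresholding at zero, the 4-bit toy features and the function names are illustrative assumptions and do not correspond to any specific method surveyed above.

```python
import numpy as np


def binarize(features):
    """Map real-valued features to {0, 1} bits by thresholding at zero."""
    return (np.asarray(features) > 0).astype(np.uint8)


def hamming_rank(query_code, database_codes):
    """Rank database items by Hamming distance to the query code."""
    # Count the positions where each database code differs from the query.
    distances = np.count_nonzero(database_codes != query_code, axis=1)
    # A stable sort keeps the database order among equally distant items.
    order = np.argsort(distances, kind="stable")
    return order, distances


# Toy real-valued network outputs for four database images (illustrative).
database = binarize([
    [1.2, -0.3, 0.8, -1.0],
    [-0.5, 0.9, -0.2, 0.4],
    [0.7, -0.1, 0.3, 0.6],
    [0.2, 0.5, 0.9, -0.4],
])
query = binarize([0.9, -0.7, 0.1, -0.2])

order, distances = hamming_rank(query, database)
print(order.tolist(), distances.tolist())
```

In practice the bits are usually packed into machine words so that the comparison reduces to XOR and bit-count operations; the unpacked array form is used here only for readability.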

4 DIFFERENT SUPERVISION CATEGORIZATION

This section is devoted to the discussion of deep learning based image retrieval methods in terms of the different supervision types. Basically, supervised, unsupervised, semi-supervised, weakly-supervised, pseudo-supervised and self-supervised approaches are included. A high-level and chronological overview of such techniques is presented in Table 2.

4.1 Supervised Approaches

The supervised deep learning models are used by researchers very

heavily to learn the class specific and discriminative features for

image retrieval. In 2014, Xia et al. have used a CNN to learn

the representation of images which is used to generate a hash

code H and class labels [86]. They have also imposed a criteria

as HH T = I, where I is the original image. The promising

performance is reported over MNIST, CIFAR-10 and NUS-WIDE

datasets. Shen et al. (2015) [88] have proposed the supervised

discrete hashing (SDH) based generation of image description

with the help of the discrete cyclic coordinate descent for retrieval.

Liu et al. (2016) have done the revolutionary work in this area

and introduced a deep supervised hashing (DSH) method to learn

the binary codes from the similar/dissimilar pairs of images [89].

The DSH imposes the regularization on the real-valued outputs to

approximate the desired binary bits. Li et al. have also performed a similar work and proposed a deep pairwise-supervised hashing

(DPSH) method for image retrieval [91]. However, the convolu-

tional network model is used in [91] as compared to the multilayer

perceptron in [89]. The pair-wise labels are extended to the triplet

labels (i.e., query, positive and negative images) by Wang et al.

(2016) to train a shared deep CNN model for feature learning [92].

Zhang et al. have utilized the auxiliary variables based independent

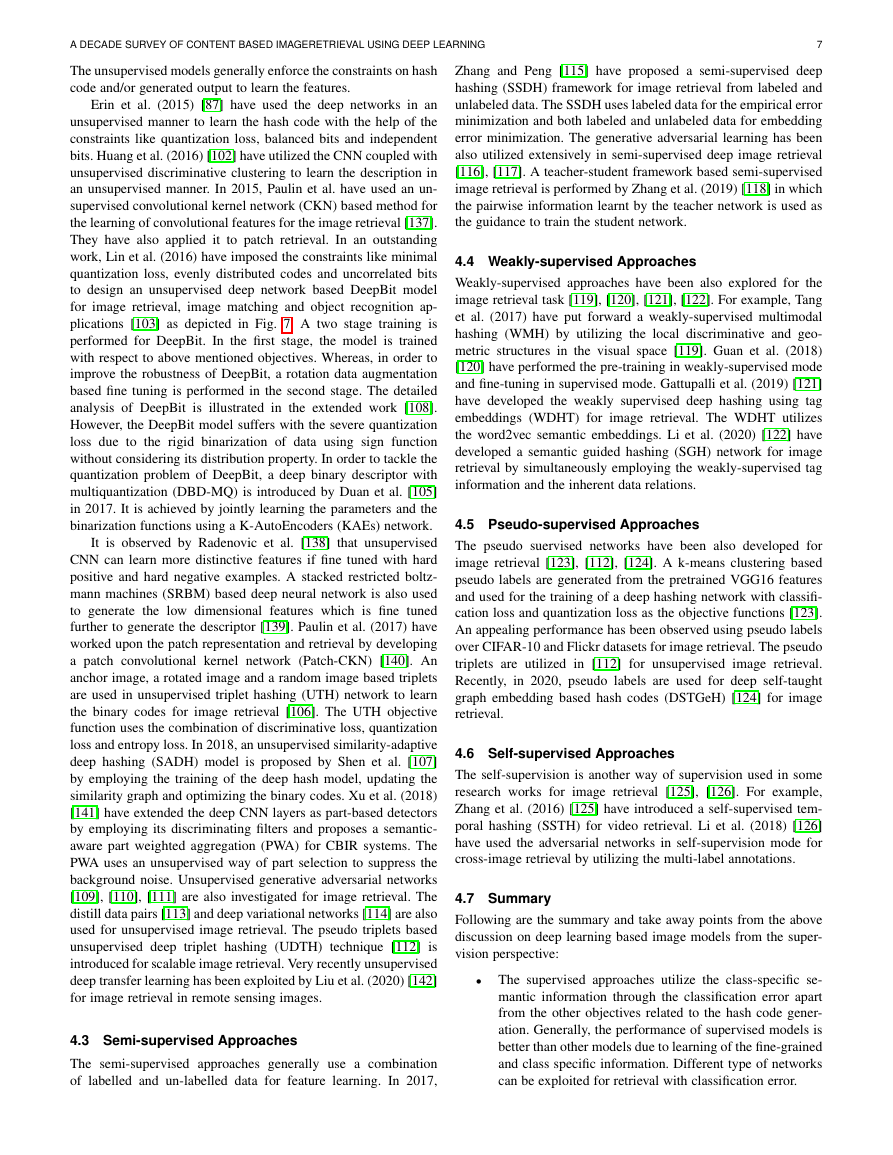

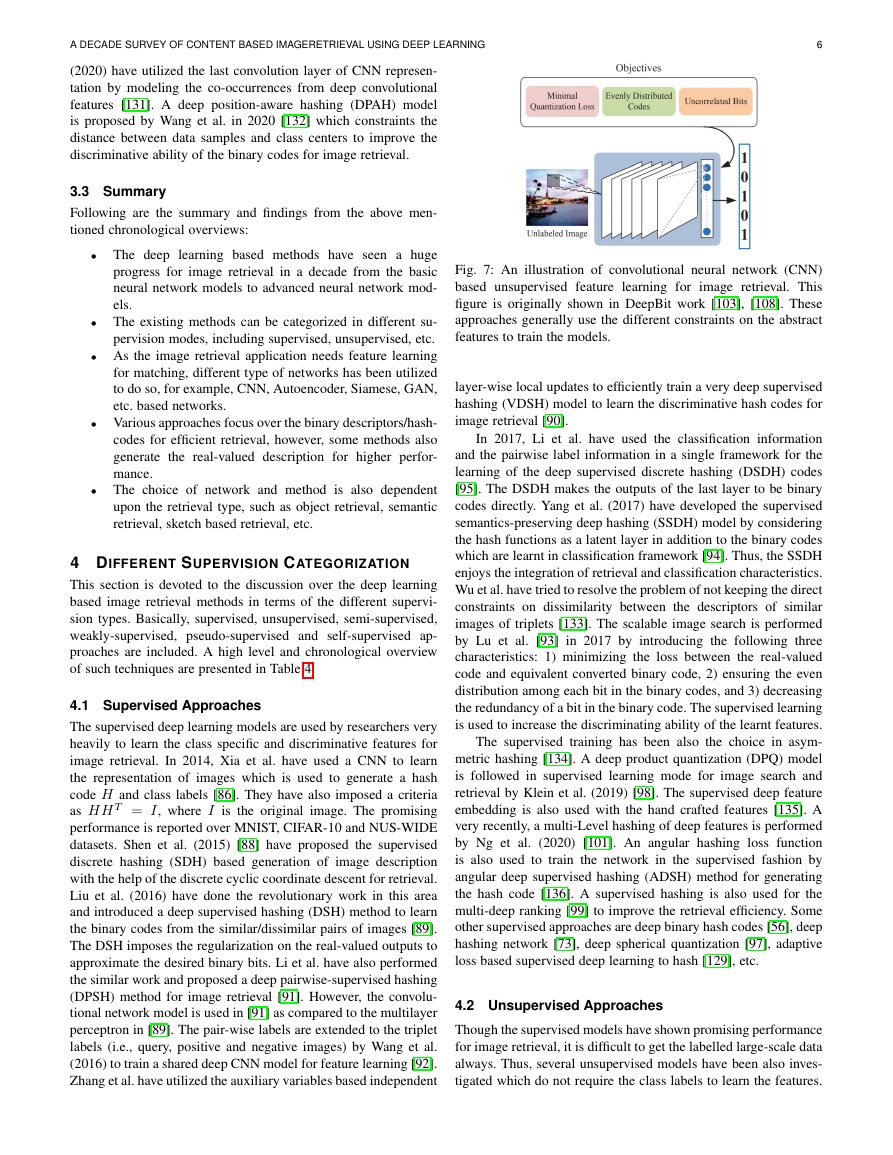

Fig. 7: An illustration of convolutional neural network (CNN)

based unsupervised feature learning for image retrieval. This

figure is originally shown in DeepBit work [103], [108]. These

approaches generally use the different constraints on the abstract

features to train the models.

layer-wise local updates to efficiently train a very deep supervised

hashing (VDSH) model to learn the discriminative hash codes for

image retrieval [90].
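The pairwise supervision with a regularizer on the real-valued outputs, as described for DSH [89], can be illustrated with the following minimal sketch. It assumes a contrastive pair term on the squared Euclidean distance and an L1 penalty that pulls each output toward +1 or -1. The function name, the margin and the weight `alpha` are assumptions chosen for illustration and are not values reported in this survey.

```python
from typing import Sequence


def pairwise_hashing_loss(
    out_a: Sequence[float],
    out_b: Sequence[float],
    similar: bool,
    margin: float = 4.0,
    alpha: float = 0.01,
) -> float:
    """Contrastive pair term plus a regularizer pulling outputs toward +1/-1."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(out_a, out_b))
    if similar:
        # Similar pairs are pulled together.
        pair_term = 0.5 * sq_dist
    else:
        # Dissimilar pairs are pushed apart until they exceed the margin.
        pair_term = 0.5 * max(margin - sq_dist, 0.0)
    # Penalize outputs whose magnitude deviates from 1 (assumed L1 form).
    binarization_gap = sum(abs(abs(v) - 1.0) for v in list(out_a) + list(out_b))
    return pair_term + alpha * binarization_gap


loss_similar = pairwise_hashing_loss([0.5, -1.0], [1.0, -1.0], similar=True, alpha=0.1)
loss_dissimilar = pairwise_hashing_loss([1.0, -1.0], [1.0, -1.0], similar=False)
print(loss_similar, loss_dissimilar)
```

In this sketch, a dissimilar pair with identical outputs receives the largest pair penalty, while the regularizer vanishes once every output is exactly +1 or -1.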

In 2017, Li et al. have used the classification information

and the pairwise label information in a single framework for the

learning of the deep supervised discrete hashing (DSDH) codes

[95]. The DSDH makes the outputs of the last layer to be binary

codes directly. Yang et al. (2017) have developed the supervised

semantics-preserving deep hashing (SSDH) model by considering

the hash functions as a latent layer in addition to the binary codes

which are learnt in classification framework [94]. Thus, the SSDH

enjoys the integration of retrieval and classification characteristics.

Wu et al. have addressed the problem that the triplet based training places no direct constraint on the dissimilarity between the descriptors of the similar images of a triplet [133]. The scalable image search is performed

by Lu et al. [93] in 2017 by introducing the following three

characteristics: 1) minimizing the loss between the real-valued

code and equivalent converted binary code, 2) ensuring the even

distribution among each bit in the binary codes, and 3) decreasing

the redundancy of a bit in the binary code. The supervised learning

is used to increase the discriminating ability of the learnt features.

The supervised training has been also the choice in asym-

metric hashing [134]. A deep product quantization (DPQ) model

is followed in supervised learning mode for image search and

retrieval by Klein et al. (2019) [98]. The supervised deep feature

embedding is also used with the hand crafted features [135]. Very recently, a multi-level hashing of deep features is performed by Ng et al. (2020) [101]. An angular hashing loss function

is also used to train the network in the supervised fashion by

angular deep supervised hashing (ADSH) method for generating

the hash code [136]. A supervised hashing is also used for the

multi-deep ranking [99] to improve the retrieval efficiency. Some

other supervised approaches are deep binary hash codes [56], deep

hashing network [73], deep spherical quantization [97], adaptive

loss based supervised deep learning to hash [129], etc.

4.2 Unsupervised Approaches

Though the supervised models have shown promising performance

for image retrieval, it is difficult to get the labelled large-scale data

always. Thus, several unsupervised models have been also inves-

tigated which do not require the class labels to learn the features.


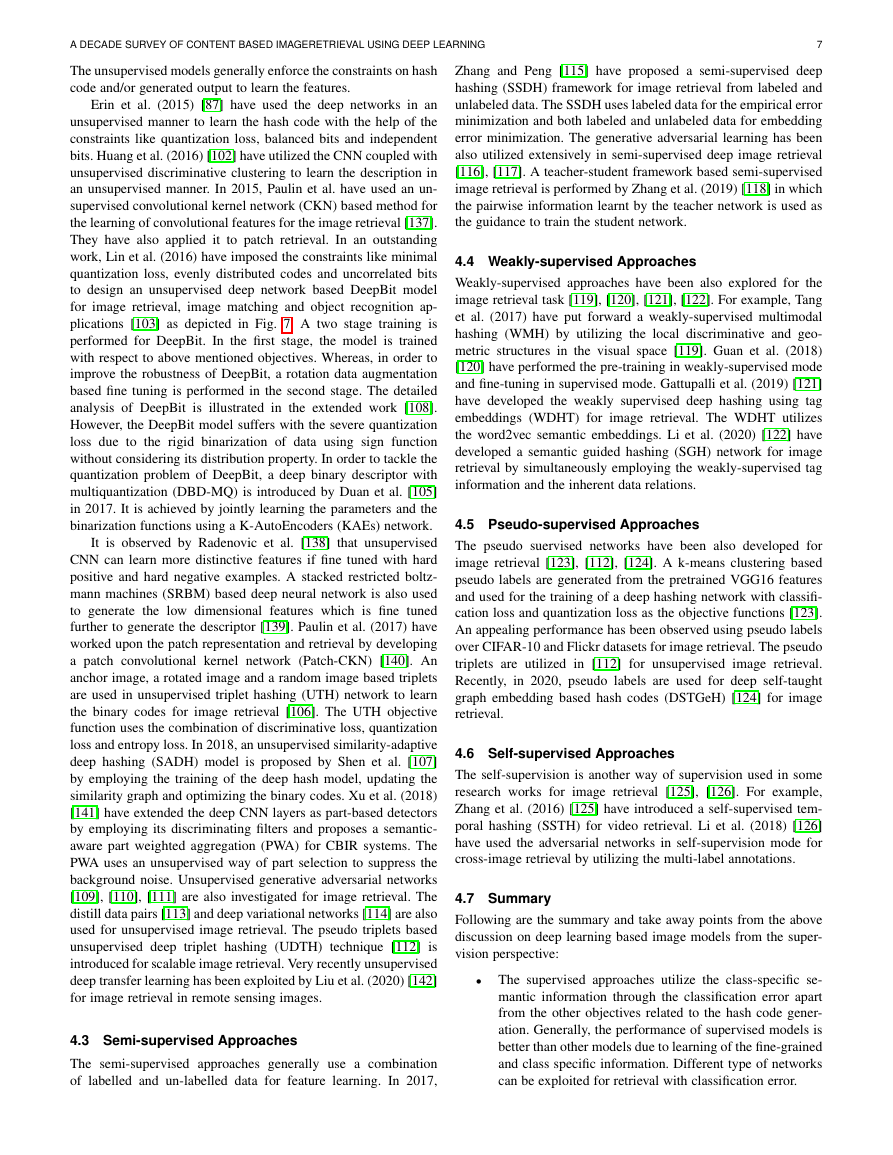

The unsupervised models generally enforce the constraints on hash

code and/or generated output to learn the features.

Erin et al. (2015) [87] have used the deep networks in an

unsupervised manner to learn the hash code with the help of the

constraints like quantization loss, balanced bits and independent

bits. Huang et al. (2016) [102] have utilized the CNN coupled with

unsupervised discriminative clustering to learn the description in

an unsupervised manner. In 2015, Paulin et al. have used an un-

supervised convolutional kernel network (CKN) based method for

the learning of convolutional features for the image retrieval [137].

They have also applied it to patch retrieval. In an outstanding

work, Lin et al. (2016) have imposed the constraints like minimal

quantization loss, evenly distributed codes and uncorrelated bits

to design an unsupervised deep network based DeepBit model

for image retrieval, image matching and object recognition ap-

plications [103] as depicted in Fig. 7. A two stage training is

performed for DeepBit. In the first stage, the model is trained

with respect to the above mentioned objectives. In the second stage, a rotation data augmentation based fine tuning is performed in order to improve the robustness of DeepBit. The detailed analysis of DeepBit is illustrated in the extended work [108]. However, the DeepBit model suffers from a severe quantization loss due to the rigid binarization of data using the sign function without considering its distribution property. In order to tackle the

quantization problem of DeepBit, a deep binary descriptor with

multiquantization (DBD-MQ) is introduced by Duan et al. [105]

in 2017. It is achieved by jointly learning the parameters and the

binarization functions using a K-AutoEncoders (KAEs) network.
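The three unsupervised constraints named above (minimal quantization loss, evenly distributed codes and uncorrelated bits) can be sketched as follows. This is only an illustration and assumes that the outputs are real values binarized to +1/-1 by their sign, so that an evenly distributed bit has zero mean over the batch. The exact formulations in [87] and [103] may differ, and all function names are hypothetical.

```python
from typing import List, Sequence

Batch = Sequence[Sequence[float]]


def quantization_loss(outputs: Batch) -> float:
    """Mean squared gap between each output and its sign-binarized value."""
    total, count = 0.0, 0
    for row in outputs:
        for value in row:
            bit = 1.0 if value >= 0 else -1.0
            total += (value - bit) ** 2
            count += 1
    return total / count


def balance_loss(outputs: Batch) -> float:
    """Sum of squared per-bit means; zero when each bit averages to zero."""
    n = len(outputs)
    means = [sum(col) / n for col in zip(*outputs)]
    return sum(m ** 2 for m in means)


def correlation_loss(outputs: Batch) -> float:
    """Squared Frobenius gap between the batch Gram matrix of bits and identity."""
    n = len(outputs)
    columns: List[Sequence[float]] = list(zip(*outputs))
    loss = 0.0
    for i, col_i in enumerate(columns):
        for j, col_j in enumerate(columns):
            gram = sum(a * b for a, b in zip(col_i, col_j)) / n
            target = 1.0 if i == j else 0.0
            loss += (gram - target) ** 2
    return loss


# A batch that uses every 2-bit code exactly once satisfies all three constraints.
ideal = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
# A collapsed batch (all samples identical and far from +1/-1) violates them.
collapsed = [[0.5, 0.5], [0.5, 0.5]]
print(quantization_loss(ideal), balance_loss(ideal), correlation_loss(ideal))
print(quantization_loss(collapsed), balance_loss(collapsed), correlation_loss(collapsed))
```

In practice, such terms are typically combined as a weighted sum and minimized over the network parameters. The weights are method specific and are not given here.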

It is observed by Radenovic et al. [138] that unsupervised

CNN can learn more distinctive features if fine tuned with hard

positive and hard negative examples. A stacked restricted Boltzmann machines (SRBM) based deep neural network is also used to generate the low dimensional features, which are fine tuned further to generate the descriptor [139]. Paulin et al. (2017) have

worked upon the patch representation and retrieval by developing

a patch convolutional kernel network (Patch-CKN) [140]. An

anchor image, a rotated image and a random image based triplets

are used in unsupervised triplet hashing (UTH) network to learn

the binary codes for image retrieval [106]. The UTH objective

function uses the combination of discriminative loss, quantization

loss and entropy loss. In 2018, an unsupervised similarity-adaptive

deep hashing (SADH) model is proposed by Shen et al. [107]

by employing the training of the deep hash model, updating the

similarity graph and optimizing the binary codes. Xu et al. (2018) [141] have extended the deep CNN layers as part-based detectors by employing their discriminating filters and proposed a semantic-aware part weighted aggregation (PWA) for CBIR systems. The

PWA uses an unsupervised way of part selection to suppress the

background noise. Unsupervised generative adversarial networks

[109], [110], [111] are also investigated for image retrieval. The distilled data pairs [113] and deep variational networks [114] are also used for unsupervised image retrieval. The pseudo triplets based unsupervised deep triplet hashing (UDTH) technique [112] is introduced for scalable image retrieval. Very recently, unsupervised

deep transfer learning has been exploited by Liu et al. (2020) [142]

for image retrieval in remote sensing images.
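The triplet based objectives mentioned in this section (for example, the anchor, rotated and random image triplets of UTH [106]) share a common margin based form, sketched below. This minimal example assumes squared Euclidean distance and a hinge with a fixed margin. It covers only the discriminative term, not the quantization and entropy losses of UTH, and the function names are hypothetical.

```python
from typing import Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_margin_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,
) -> float:
    """Hinge loss that asks the positive to be closer than the negative by a margin."""
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


# In an unsupervised setting the positive could be a rotated copy of the anchor
# and the negative a random image; the descriptors below are toy values.
satisfied = triplet_margin_loss([1.0, 1.0], [1.0, 1.0], [-1.0, -1.0])
violated = triplet_margin_loss([1.0, 1.0], [1.0, -1.0], [1.0, 1.0])
print(satisfied, violated)
```

The loss is zero once the negative is farther from the anchor than the positive by at least the margin, so only violating triplets contribute to the gradient.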

4.3 Semi-supervised Approaches

The semi-supervised approaches generally use a combination

of labelled and un-labelled data for feature learning. In 2017,

Zhang and Peng [115] have proposed a semi-supervised deep

hashing (SSDH) framework for image retrieval from labeled and

unlabeled data. The SSDH uses labeled data for the empirical error

minimization and both labeled and unlabeled data for embedding

error minimization. The generative adversarial learning has been

also utilized extensively in semi-supervised deep image retrieval

[116], [117]. A teacher-student framework based semi-supervised

image retrieval is performed by Zhang et al. (2019) [118] in which

the pairwise information learnt by the teacher network is used as

the guidance to train the student network.

4.4 Weakly-supervised Approaches

Weakly-supervised approaches have been also explored for the

image retrieval task [119], [120], [121], [122]. For example, Tang

et al. (2017) have put forward a weakly-supervised multimodal

hashing (WMH) by utilizing the local discriminative and geo-

metric structures in the visual space [119]. Guan et al. (2018)

[120] have performed the pre-training in weakly-supervised mode

and fine-tuning in supervised mode. Gattupalli et al. (2019) [121]

have developed the weakly supervised deep hashing using tag

embeddings (WDHT) for image retrieval. The WDHT utilizes

the word2vec semantic embeddings. Li et al. (2020) [122] have

developed a semantic guided hashing (SGH) network for image

retrieval by simultaneously employing the weakly-supervised tag

information and the inherent data relations.

4.5 Pseudo-supervised Approaches

The pseudo-supervised networks have been also developed for image retrieval [123], [112], [124]. The k-means clustering based pseudo labels are generated from the pretrained VGG16 features and used for the training of a deep hashing network with classification loss and quantization loss as the objective functions [123].

An appealing performance has been observed using pseudo labels

over CIFAR-10 and Flickr datasets for image retrieval. The pseudo

triplets are utilized in [112] for unsupervised image retrieval.

Recently, in 2020, pseudo labels are used for deep self-taught

graph embedding based hash codes (DSTGeH) [124] for image

retrieval.
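The pseudo label generation step described above can be sketched with a plain k-means (Lloyd's algorithm) implementation. This is a simplified illustration only: toy 2-dimensional vectors stand in for the pretrained VGG16 features used in [123], the initialization simply takes the first k samples, and the function names are hypothetical.

```python
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pseudo_labels(
    features: Sequence[Sequence[float]], k: int, iterations: int = 10
) -> List[int]:
    """Cluster the features with Lloyd's algorithm and return cluster ids as labels."""
    # Deterministic initialization for the sketch: the first k samples are the centers.
    centers = [list(features[i]) for i in range(k)]
    labels = [0] * len(features)
    for _ in range(iterations):
        # Assignment step: each sample takes the id of its nearest center.
        labels = [
            min(range(k), key=lambda c: squared_distance(f, centers[c]))
            for f in features
        ]
        # Update step: each center moves to the mean of its assigned samples.
        for c in range(k):
            members = [f for f, label in zip(features, labels) if label == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Toy features forming two well separated groups (hypothetical values).
toy_features = [[0.0, 0.1], [5.0, 5.1], [0.2, 0.0], [5.2, 4.9]]
pseudo_labels = kmeans_pseudo_labels(toy_features, k=2)
print(pseudo_labels)
```

The resulting cluster ids can then play the role of class labels in a classification loss, which is the sense in which the supervision is "pseudo".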

4.6 Self-supervised Approaches

The self-supervision is another way of supervision used in some

research works for image retrieval [125], [126]. For example,

Zhang et al. (2016) [125] have introduced a self-supervised tem-

poral hashing (SSTH) for video retrieval. Li et al. (2018) [126]

have used the adversarial networks in self-supervision mode for

cross-modal retrieval by utilizing the multi-label annotations.

4.7 Summary

Following are the summary and take away points from the above discussion on deep learning based image retrieval models from the supervision perspective:

• The supervised approaches utilize the class-specific se-

mantic information through the classification error apart

from the other objectives related to the hash code gener-

ation. Generally, the performance of supervised models is

better than other models due to learning of the fine-grained

and class specific information. Different types of networks can be exploited for retrieval with classification error.


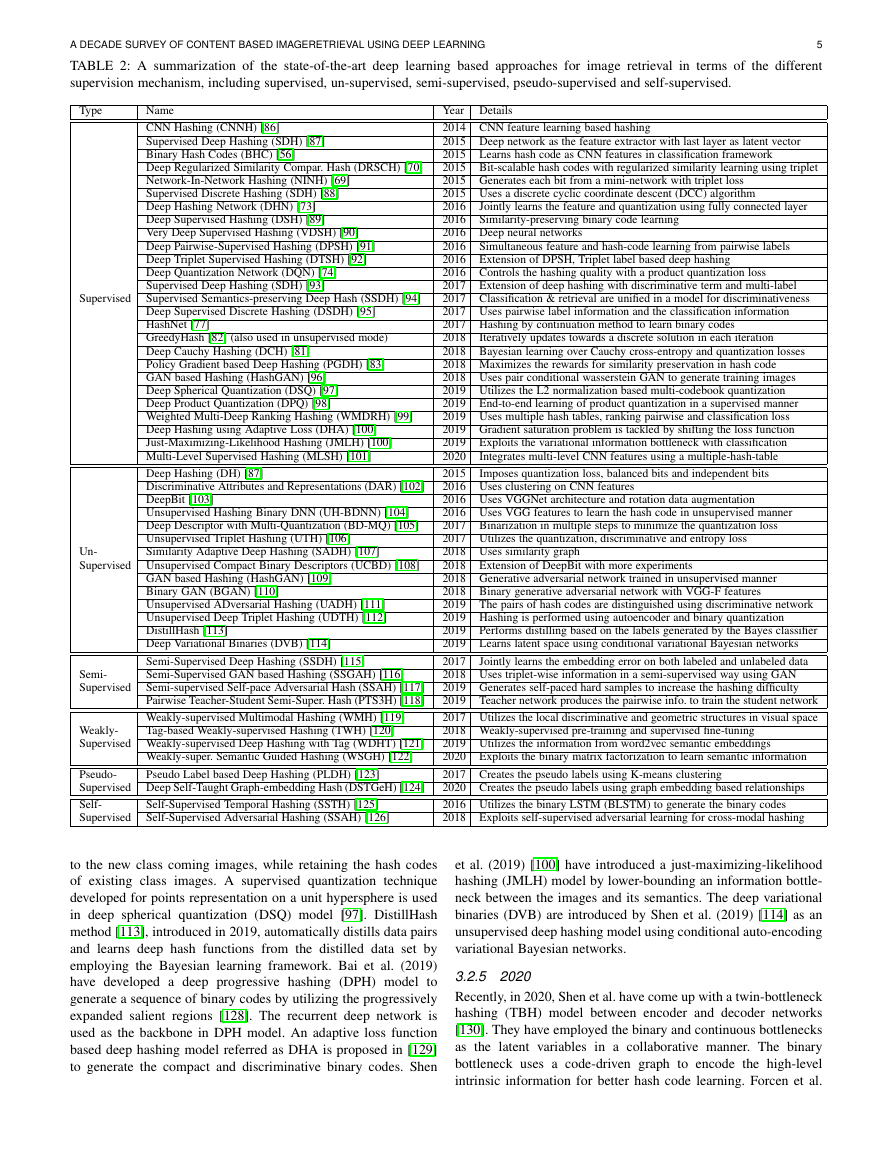

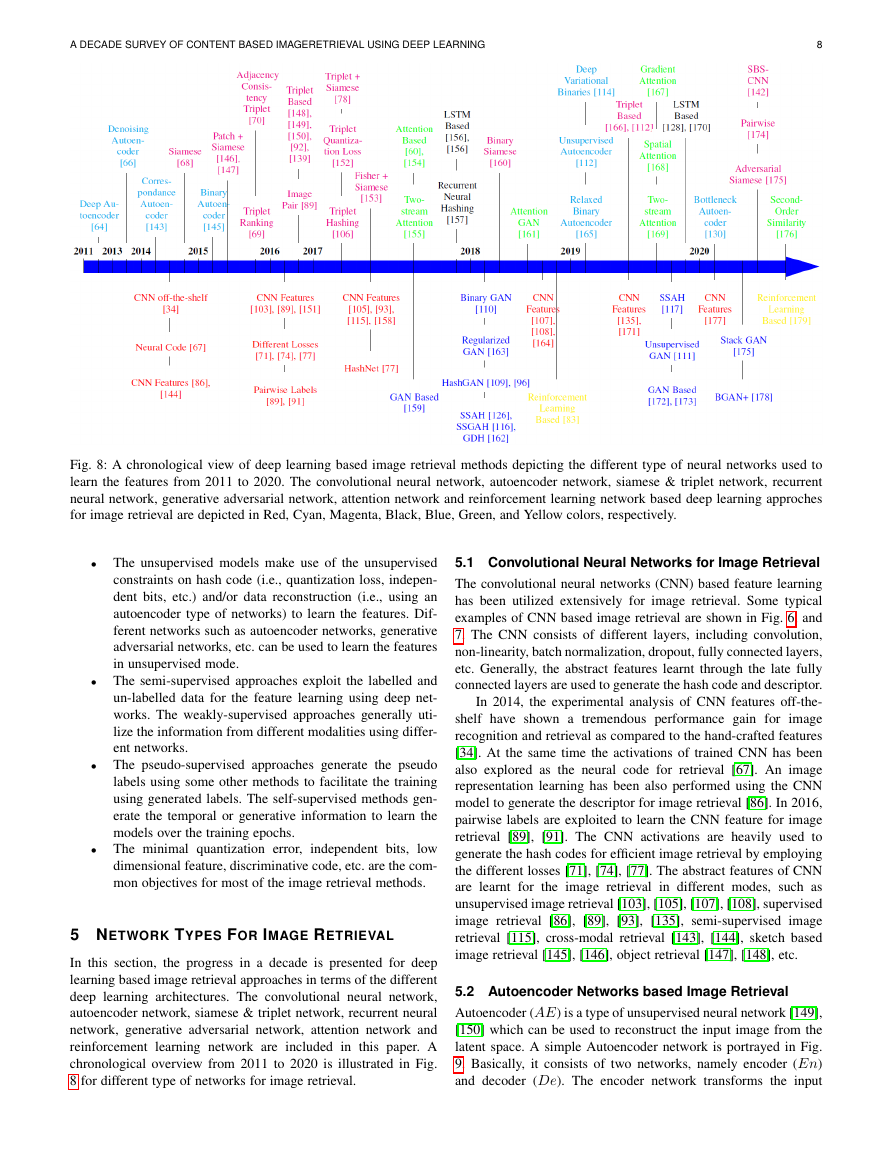

Fig. 8: A chronological view of deep learning based image retrieval methods depicting the different types of neural networks used to learn the features from 2011 to 2020. The convolutional neural network, autoencoder network, siamese & triplet network, recurrent neural network, generative adversarial network, attention network and reinforcement learning network based deep learning approaches for image retrieval are depicted in Red, Cyan, Magenta, Black, Blue, Green, and Yellow colors, respectively.

• The unsupervised models make use of the unsupervised

constraints on hash code (i.e., quantization loss, indepen-

dent bits, etc.) and/or data reconstruction (i.e., using an

autoencoder type of networks) to learn the features. Dif-

ferent networks such as autoencoder networks, generative

adversarial networks, etc. can be used to learn the features

in unsupervised mode.

• The semi-supervised approaches exploit the labelled and

un-labelled data for the feature learning using deep net-

works. The weakly-supervised approaches generally uti-

lize the information from different modalities using differ-

ent networks.

• The pseudo-supervised approaches generate the pseudo

labels using some other methods to facilitate the training

using generated labels. The self-supervised methods gen-

erate the temporal or generative information to learn the

models over the training epochs.

• The minimal quantization error, independent bits, low dimensional feature, discriminative code, etc. are the common objectives for most of the image retrieval methods.

5 NETWORK TYPES FOR IMAGE RETRIEVAL

In this section, the progress in a decade is presented for deep

learning based image retrieval approaches in terms of the different

deep learning architectures. The convolutional neural network,

autoencoder network, siamese & triplet network, recurrent neural

network, generative adversarial network, attention network and

reinforcement learning network are included in this paper. A chronological overview from 2011 to 2020 is illustrated in Fig. 8 for the different types of networks for image retrieval.

5.1 Convolutional Neural Networks for Image Retrieval

The convolutional neural networks (CNN) based feature learning

has been utilized extensively for image retrieval. Some typical

examples of CNN based image retrieval are shown in Figs. 6 and 7. The CNN consists of different layers, including convolution,

non-linearity, batch normalization, dropout, fully connected layers,

etc. Generally, the abstract features learnt through the late fully

connected layers are used to generate the hash code and descriptor.

In 2014, the experimental analysis of off-the-shelf CNN features has shown a tremendous performance gain for image recognition and retrieval as compared to the hand-crafted features [34]. At the same time, the activations of trained CNN have been also explored as the neural code for retrieval [67]. An image

representation learning has been also performed using the CNN

model to generate the descriptor for image retrieval [86]. In 2016,

pairwise labels are exploited to learn the CNN feature for image

retrieval [89], [91]. The CNN activations are heavily used to

generate the hash codes for efficient image retrieval by employing

the different losses [71], [74], [77]. The abstract features of CNN

are learnt for the image retrieval in different modes, such as

unsupervised image retrieval [103], [105], [107], [108], supervised

image retrieval [86], [89], [93], [135], semi-supervised image

retrieval [115], cross-modal retrieval [143], [144], sketch based

image retrieval [145], [146], object retrieval [147], [148], etc.

5.2 Autoencoder Networks based Image Retrieval

Autoencoder (AE) is a type of unsupervised neural network [149],

[150] which can be used to reconstruct the input image from the

latent space. A simple Autoencoder network is portrayed in Fig.

9. Basically, it consists of two networks, namely encoder (En)

and decoder (De). The encoder network transforms the input
