Edge Boxes: Locating

Object Proposals from Edges

C. Lawrence Zitnick and Piotr Doll´ar

Microsoft Research

Abstract. The use of object proposals is an effective recent approach

for increasing the computational efficiency of object detection. We pro-

pose a novel method for generating object bounding box proposals us-

ing edges. Edges provide a sparse yet informative representation of an

image. Our main observation is that the number of contours that are

wholly contained in a bounding box is indicative of the likelihood of the

box containing an object. We propose a simple box objectness score that

measures the number of edges that exist in the box minus those that

are members of contours that overlap the box’s boundary. Using efficient

data structures, millions of candidate boxes can be evaluated in a fraction

of a second, returning a ranked set of a few thousand top-scoring propos-

als. Using standard metrics, we show results that are significantly more

accurate than the current state-of-the-art while being faster to compute.

In particular, given just 1000 proposals we achieve over 96% object recall

at overlap threshold of 0.5 and over 75% recall at the more challenging

overlap of 0.7. Our approach runs in 0.25 seconds and we additionally

demonstrate a near real-time variant with only minor loss in accuracy.

Keywords: object proposals, object detection, edge detection

1 Introduction

The goal of object detection is to determine whether an object exists in an

image, and if so where in the image it occurs. The dominant approach to this

problem over the past decade has been the sliding windows paradigm in which

object classification is performed at every location and scale in an image [1–

3]. Recently, an alternative framework for object detection has been proposed.

Instead of searching for an object at every image location and scale, a set of

object bounding box proposals is first generated with the goal of reducing the

set of positions that need to be further analyzed. The remarkable discovery

made by these approaches [4–11] is that object proposals may be accurately

generated in a manner that is agnostic to the type of object being detected.

Object proposal generators are currently used by several state-of-the-art object

detection algorithms [5, 12, 13], which include the winners of the 2013 ImageNet

detection challenge [14] and top methods on the PASCAL VOC dataset [15].

High recall and efficiency are critical properties of an object proposal gen-

erator. If a proposal is not generated in the vicinity of an object that object

�

2

C. Lawrence Zitnick and Piotr Doll´ar

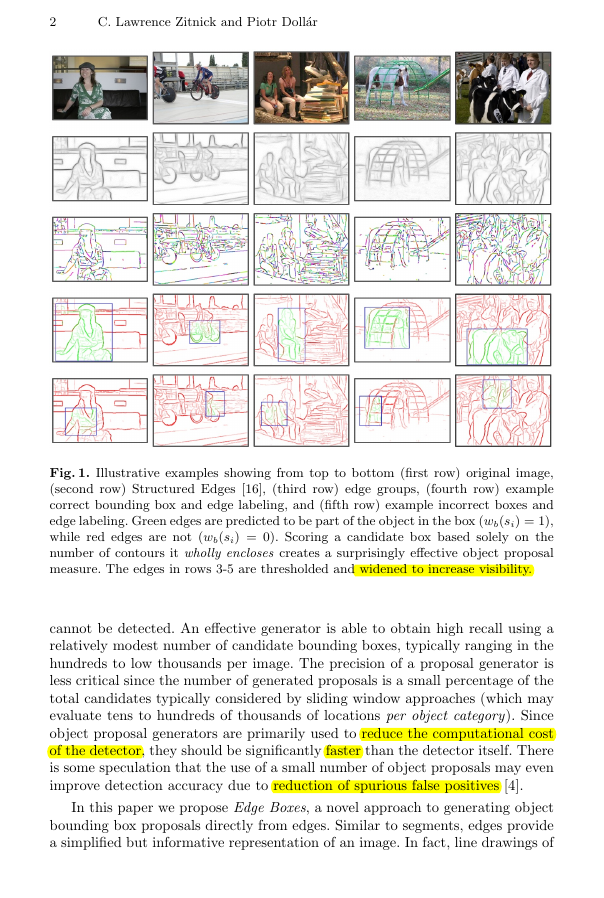

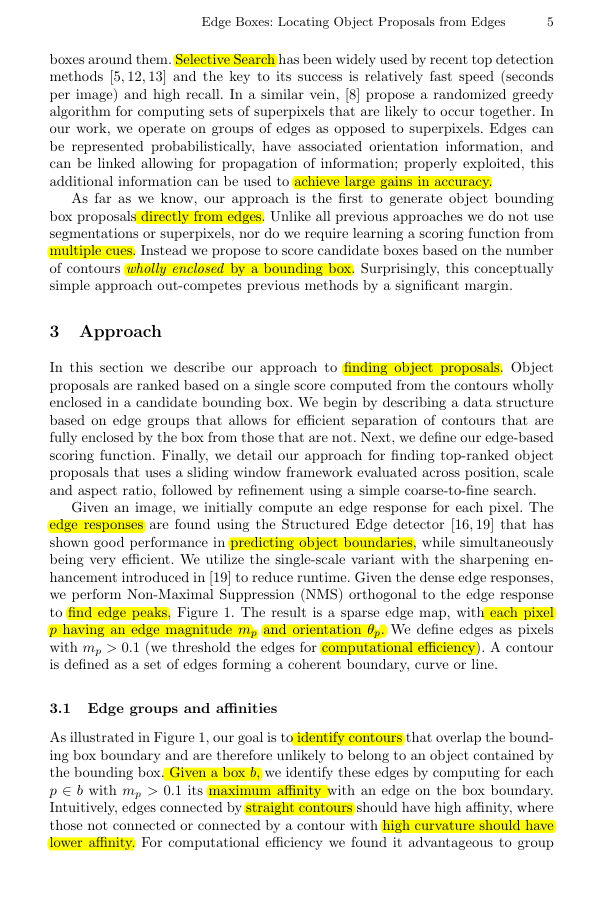

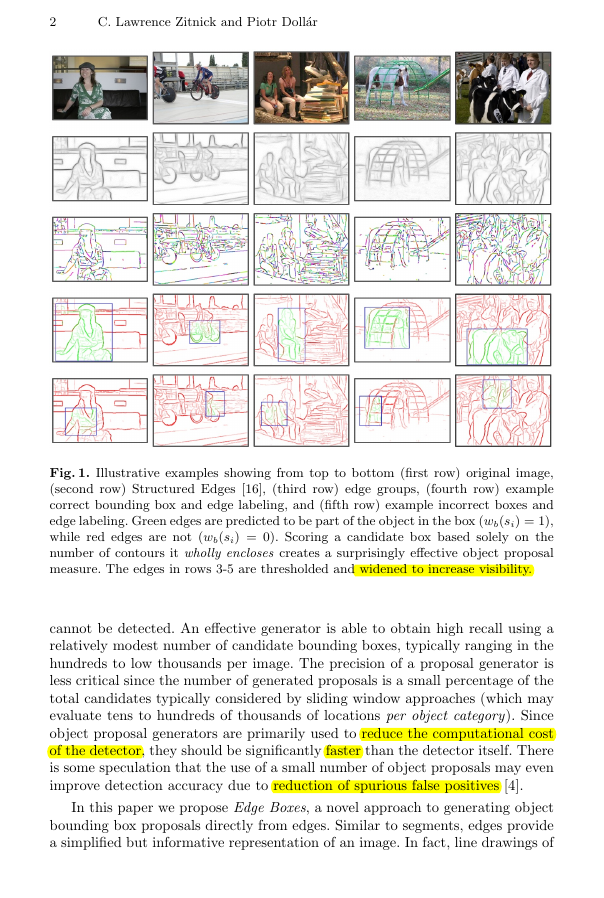

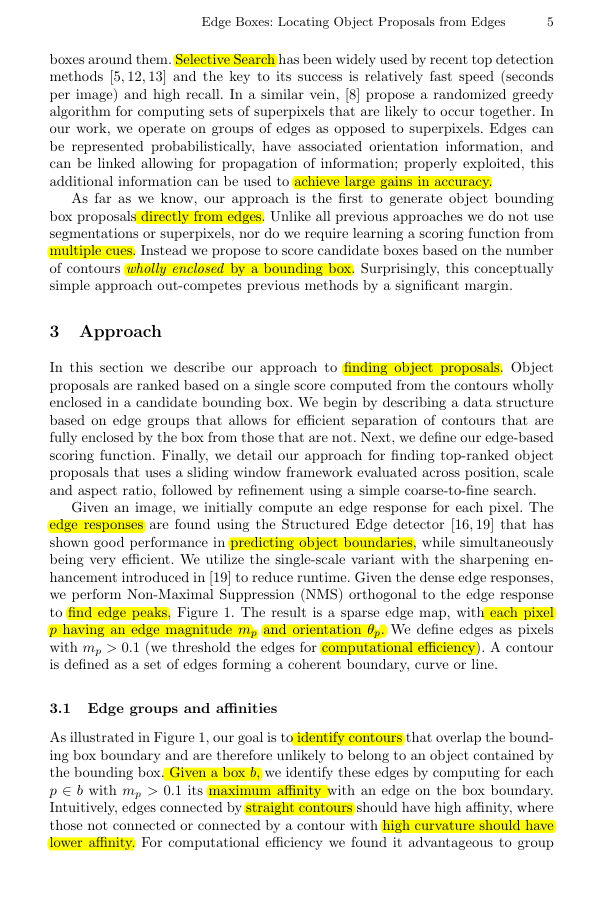

Fig. 1. Illustrative examples showing from top to bottom (first row) original image,

(second row) Structured Edges [16], (third row) edge groups, (fourth row) example

correct bounding box and edge labeling, and (fifth row) example incorrect boxes and

edge labeling. Green edges are predicted to be part of the object in the box (wb(si) = 1),

while red edges are not (wb(si) = 0). Scoring a candidate box based solely on the

number of contours it wholly encloses creates a surprisingly effective object proposal

measure. The edges in rows 3-5 are thresholded and widened to increase visibility.

cannot be detected. An effective generator is able to obtain high recall using a

relatively modest number of candidate bounding boxes, typically ranging in the

hundreds to low thousands per image. The precision of a proposal generator is

less critical since the number of generated proposals is a small percentage of the

total candidates typically considered by sliding window approaches (which may

evaluate tens to hundreds of thousands of locations per object category). Since

object proposal generators are primarily used to reduce the computational cost

of the detector, they should be significantly faster than the detector itself. There

is some speculation that the use of a small number of object proposals may even

improve detection accuracy due to reduction of spurious false positives [4].

In this paper we propose Edge Boxes, a novel approach to generating object

bounding box proposals directly from edges. Similar to segments, edges provide

a simplified but informative representation of an image. In fact, line drawings of

�

Edge Boxes: Locating Object Proposals from Edges

3

an image can accurately convey the high-level information contained in an image

using only a small fraction of the information [17, 18]. As we demonstrate, the

use of edges offers many computational advantages since they may be efficiently

computed [16] and the resulting edge maps are sparse. In this work we investigate

how to directly detect object proposals from edge-maps.

Our main contribution is the following observation: the number of contours

wholly enclosed by a bounding box is indicative of the likelihood of the box

containing an object. We say a contour is wholly enclosed by a box if all edge

pixels belonging to the contour lie within the interior of the box. Edges tend to

correspond to object boundaries, and as such boxes that tightly enclose a set

of edges are likely to contain an object. However, some edges that lie within

an object’s bounding box may not be part of the contained object. Specifically,

edge pixels that belong to contours straddling the box’s boundaries are likely

to correspond to objects or structures that lie outside the box, see Figure 1. In

this paper we demonstrate that scoring a box based on the number of contours

it wholly encloses creates a surprisingly effective proposal measure. In contrast,

simply counting the number of edge pixels within the box is not as informative.

Our approach bears some resemblance to superpixels straddling measure intro-

duced by [4]; however, rather than measuring the number of straddling contours

we instead remove such contours from consideration.

As the number of possible bounding boxes in an image is large, we must

be able to score candidates efficiently. We utilize the fast and publicly avail-

able Structured Edge detector recently proposed in [16, 19] to obtain the initial

edge map. To aid in later computations, neighboring edge pixels of similar ori-

entation are clustered together to form groups. Affinities are computed between

edge groups based on their relative positions and orientations such that groups

forming long continuous contours have high affinity. The score for a box is com-

puted by summing the edge strength of all edge groups within the box, minus

the strength of edge groups that are part of a contour that straddles the box’s

boundary, see Figure 1.

We evaluate candidate boxes utilizing a sliding window approach, similar

to traditional object detection. At every potential object position, scale and

aspect ratio we generate a score indicating the likelihood of an object being

present. Promising candidate boxes are further refined using a simple coarse-to-

fine search. Utilizing efficient data structures, our approach is capable of rapidly

finding the top object proposals from among millions of potential candidates.

We show improved recall rates over state-of-the-art methods for a wide range

of intersection over union thresholds, while simultaneously improving efficiency.

In particular, on the PASCAL VOC dataset [15], given just 1000 proposals we

achieve over 96% object recall at overlap threshold of 0.5 and over 75% recall

at an overlap of 0.7. At the latter and more challenging setting, previous state-

of-the-art approaches required considerably more proposals to achieve similar

recall. Our approach runs in quarter of a second, while a near real-time variant

runs in a tenth of a second with only a minor loss in accuracy.

�

4

C. Lawrence Zitnick and Piotr Doll´ar

2 Related work

The goal of generating object proposals is to create a relatively small set of

candidate bounding boxes that cover the objects in the image. The most com-

mon use of the proposals is to allow for efficient object detection with complex

and expensive classifiers [5, 12, 13]. Another popular use is for weakly supervised

learning [20, 21], where by limiting the number of candidate regions, learning

with less supervision becomes feasible. For detection, recall is critical and thou-

sands of candidates can be used, for weakly supervised learning typically a few

hundred proposals per image are kept. Since it’s inception a few years ago [4, 9,

6], object proposal generation has found wide applicability.

Generating object proposals aims to achieve many of the benefits of image

segmentation without having to solve the harder problem of explicitly partition-

ing an image into non-overlapping regions. While segmentation has found limited

success in object detection [22], in general it fails to provide accurate object re-

gions. Hoiem et al. [23] proposed to use multiple overlapping segmentations to

overcome errors of individual segmentations, this was explored further by [24]

and [25] in the context of object detection. While use of multiple segmentations

improves robustness, constructing coherent segmentations is an inherently dif-

ficult task. Object proposal generation seeks to sidestep the challenges of full

segmentation by directly generating multiple overlapping object proposals.

Three distinct paradigms have emerged for object proposal generation. Can-

didate bounding boxes representing object proposals can be found by measuring

their ‘objectness’ [4, 11], producing multiple foreground-background segmenta-

tions of an image [6, 9, 10], or by merging superpixels [5, 8]. Our approach pro-

vides an alternate framework based on edges that is both simpler and more

efficient while sharing many advantages with previous work. Below we briefly

outline representative work for each paradigm; we refer readers to Hosang et

al. [26] for a thorough survey and evaluation of object proposal methods.

Objectness Scoring: Alexe et al. [4] proposed to rank candidates by com-

bining a number of cues in a classification framework and assigning a resulting

‘objectness’ score to each proposal. [7] built on this idea by learning efficient

cascades to more quickly and accurately rank candidates. Among multiple cues,

both [4] and [7] define scores based on edge distributions near window bound-

aries. However, these edge scores do not remove edges belonging to contours

intersecting the box boundary, which we found to be critical. [4] utilizes a super-

pixel straddling measure penalizing candidates containing segments overlapping

the boundary. In contrast, we suppress straddling contours by propagating in-

formation across edge groups that may not directly lie on the boundary. Finally,

recently [11] proposed a very fast objectness score based on image gradients.

Seed Segmentation: [6, 9, 10] all start with multiple seed regions and gener-

ate a separate foreground-background segmentation for each seed. The primary

advantage of these approaches is generation of high quality segmentation masks,

the disadvantage is their high computation cost (minutes per image).

Superpixel Merging: Selective Search [5] is based on computing multiple

hierarchical segmentations based on superpixels from [27] and placing bounding

�

Edge Boxes: Locating Object Proposals from Edges

5

boxes around them. Selective Search has been widely used by recent top detection

methods [5, 12, 13] and the key to its success is relatively fast speed (seconds

per image) and high recall. In a similar vein, [8] propose a randomized greedy

algorithm for computing sets of superpixels that are likely to occur together. In

our work, we operate on groups of edges as opposed to superpixels. Edges can

be represented probabilistically, have associated orientation information, and

can be linked allowing for propagation of information; properly exploited, this

additional information can be used to achieve large gains in accuracy.

As far as we know, our approach is the first to generate object bounding

box proposals directly from edges. Unlike all previous approaches we do not use

segmentations or superpixels, nor do we require learning a scoring function from

multiple cues. Instead we propose to score candidate boxes based on the number

of contours wholly enclosed by a bounding box. Surprisingly, this conceptually

simple approach out-competes previous methods by a significant margin.

3 Approach

In this section we describe our approach to finding object proposals. Object

proposals are ranked based on a single score computed from the contours wholly

enclosed in a candidate bounding box. We begin by describing a data structure

based on edge groups that allows for efficient separation of contours that are

fully enclosed by the box from those that are not. Next, we define our edge-based

scoring function. Finally, we detail our approach for finding top-ranked object

proposals that uses a sliding window framework evaluated across position, scale

and aspect ratio, followed by refinement using a simple coarse-to-fine search.

Given an image, we initially compute an edge response for each pixel. The

edge responses are found using the Structured Edge detector [16, 19] that has

shown good performance in predicting object boundaries, while simultaneously

being very efficient. We utilize the single-scale variant with the sharpening en-

hancement introduced in [19] to reduce runtime. Given the dense edge responses,

we perform Non-Maximal Suppression (NMS) orthogonal to the edge response

to find edge peaks, Figure 1. The result is a sparse edge map, with each pixel

p having an edge magnitude mp and orientation θp. We define edges as pixels

with mp > 0.1 (we threshold the edges for computational efficiency). A contour

is defined as a set of edges forming a coherent boundary, curve or line.

3.1 Edge groups and affinities

As illustrated in Figure 1, our goal is to identify contours that overlap the bound-

ing box boundary and are therefore unlikely to belong to an object contained by

the bounding box. Given a box b, we identify these edges by computing for each

p ∈ b with mp > 0.1 its maximum affinity with an edge on the box boundary.

Intuitively, edges connected by straight contours should have high affinity, where

those not connected or connected by a contour with high curvature should have

lower affinity. For computational efficiency we found it advantageous to group

�

6

C. Lawrence Zitnick and Piotr Doll´ar

edges that have high affinity and only compute affinities between edge groups. We

form the edge groups using a simple greedy approach that combines 8-connected

edges until the sum of their orientation differences is above a threshold (π/2).

Small groups are merged with neighboring groups. An illustration of the edge

groups is shown in Figure 1, row 3.

Given a set of edge groups si ∈ S, we compute an affinity between each pair of

neighboring groups. For a pair of groups si and sj, the affinity is computed based

on their mean positions xi and xj and mean orientations θi and θj. Intuitively,

edge groups have high affinity if the angle between the groups’ means in similar

to the groups’ orientations. Specifically, we compute the affinity a(si, sj) using:

a(si, sj) = |cos(θi − θij) cos(θj − θij)|γ ,

(1)

where θij is the angle between xi and xj. The value of γ may be used to adjust

the affinity’s sensitivity to changes in orientation, with γ = 2 used in practice. If

two edge groups are separated by more than 2 pixels their affinity is set to zero.

For increased computational efficiency only affinities above a small threshold

(0.05) are stored and the rest are assumed to be zero.

The edge grouping and affinity measure are computationally trivial. In prac-

tice results are robust to the details of the edge grouping.

3.2 Bounding box scoring

Given the set of edge groups S and their affinities, we can compute an object

proposal score for any candidate bounding box b. To find our score, we first

compute the sum mi of the magnitudes mp for all edges p in the group si. We

also pick an arbitrary pixel position ¯xi of some pixel p in each group si. As we

will show, the exact choice of p ∈ si does not matter.

For each group si we compute a continuous value wb(si) ∈ [0, 1] that indicates

whether si is wholly contained in b, wb(si) = 1, or not, wb(si) = 0. Let Sb be

the set of edge groups that overlap the box b’s boundary. We find Sb using an

efficient data structure that is described in Section 3.3. For all si ∈ Sb, wb(si)

is set to 0. Similarly wb(si) = 0 for all si for which ¯xi /∈ b, since all of its pixels

must either be outside of b or si ∈ Sb. For the remaining edge groups for which

¯xi ∈ b and si /∈ Sb we compute wb(si) as follows:

wb(si) = 1 − max

T

a(tj, tj+1),

(2)

where T is an ordered path of edge groups with a length of |T| that begins with

some t1 ∈ Sb and ends at t|T| = si. If no such path exists we define wb(si) = 1.

Thus, Equation (2) finds the path with highest affinity between the edge group

si and an edge group that overlaps the box’s boundary. Since most pairwise

affinities are zero, this can be done efficiently.

Using the computed values of wb we define our score using:

|T|−1

j

hb =

i wb(si)mi

2(bw + bh)κ ,

(3)

�

Edge Boxes: Locating Object Proposals from Edges

7

where bw and bw are the box’s width and height. Note that we divide by the

box’s perimeter and not its area, since edges have a width of one pixel regardless

of scale. Nevertheless, a value of κ = 1.5 is used to offset the bias of larger

windows having more edges on average.

In practice we use an integral image to speed computation of the numerator

in Equation (3). The integral image is used to compute the sum of all mi for

which ¯xi ∈ b. Next, for all si with ¯xi ∈ b and wb(si) < 1, (1 − wb(si))mi is

subtracted from this sum. This speeds up computation considerably as typically

wb(si) = 1 for most si and all such si do not need to be explicitly considered.

Finally, it has been observed that the edges in the center of the box are of less

importance than those near the box’s edges [4]. To account for this observation

we can subtract the edge magnitudes from a box bin centered in b:

b = hb −

hin

p∈bin mp

2(bw + bh)κ ,

(4)

where the width and height of bin is bw/2 and bh/2 respectively. The sum of

the edge magnitudes in bin can be efficiently computed using an integral image.

As shown in Section 4 we found hin

b offers slightly better accuracy than hb with

minimal additional computational cost.

3.3 Finding intersecting edge groups

In the previous section we assumed the set of edge groups Sb that overlap the

box b’s boundary is known. Since we evaluate a huge number of bounding boxes

(Sec. 3.4), an efficient method for finding Sb is critical. Naive approaches such

as exhaustively searching all of the pixels on the boundary of a box would be

prohibitively expensive, especially for large boxes.

We propose an efficient method for finding intersecting edge groups for each

side of a bounding box that relies on two additional data structures. Below,

we describe the process for finding intersections along a horizontal boundary

from pixel (c0, r) to (c1, r). The vertical boundaries may be handled in a similar

manner. For horizontal boundaries we create two data structures for each row

of the image. The first data structure stores an ordered list Lr of edge group

indices for row r. The list is created by storing the order in which the edge

groups occur along the row r. An index is only added to Lr if the edge group

index changes from one pixel to the next. The result is the size of Lr is much

smaller than the width of the image. If there are pixels between the edge groups

that are not edges, a zero is added to the list. A second data structure Kr with

the same size as the width of the image is created that stores the corresponding

index into Lr for each column c in row r. Thus, if pixel p at location (c, r) is

a member of edge group si, Lr(Kr(c)) = i. Since most pixels do not belong to

an edge group, using these two data structures we can efficiently find the list of

overlapping edge groups by searching Lr from index Kr(c0) to Kr(c1).

�

8

C. Lawrence Zitnick and Piotr Doll´ar

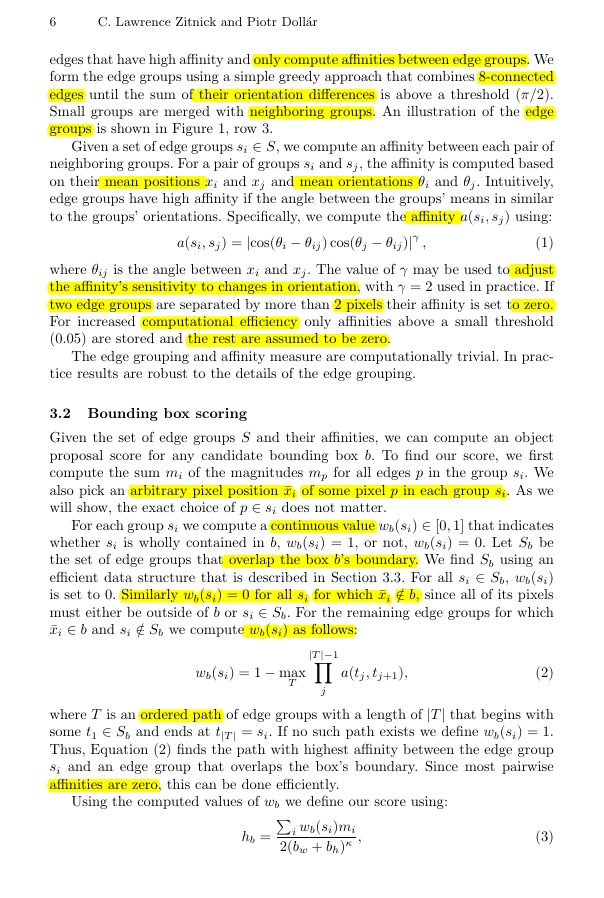

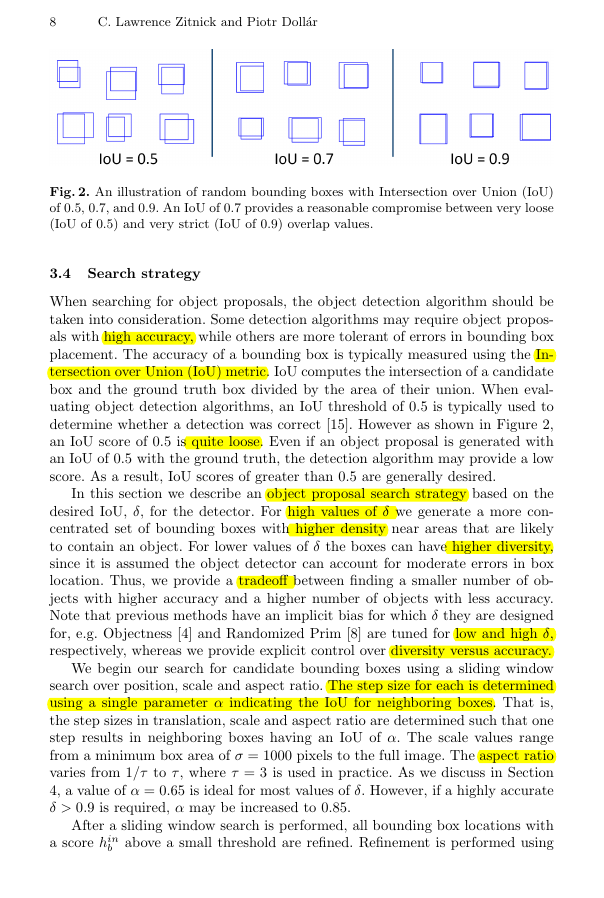

Fig. 2. An illustration of random bounding boxes with Intersection over Union (IoU)

of 0.5, 0.7, and 0.9. An IoU of 0.7 provides a reasonable compromise between very loose

(IoU of 0.5) and very strict (IoU of 0.9) overlap values.

3.4 Search strategy

When searching for object proposals, the object detection algorithm should be

taken into consideration. Some detection algorithms may require object propos-

als with high accuracy, while others are more tolerant of errors in bounding box

placement. The accuracy of a bounding box is typically measured using the In-

tersection over Union (IoU) metric. IoU computes the intersection of a candidate

box and the ground truth box divided by the area of their union. When eval-

uating object detection algorithms, an IoU threshold of 0.5 is typically used to

determine whether a detection was correct [15]. However as shown in Figure 2,

an IoU score of 0.5 is quite loose. Even if an object proposal is generated with

an IoU of 0.5 with the ground truth, the detection algorithm may provide a low

score. As a result, IoU scores of greater than 0.5 are generally desired.

In this section we describe an object proposal search strategy based on the

desired IoU, δ, for the detector. For high values of δ we generate a more con-

centrated set of bounding boxes with higher density near areas that are likely

to contain an object. For lower values of δ the boxes can have higher diversity,

since it is assumed the object detector can account for moderate errors in box

location. Thus, we provide a tradeoff between finding a smaller number of ob-

jects with higher accuracy and a higher number of objects with less accuracy.

Note that previous methods have an implicit bias for which δ they are designed

for, e.g. Objectness [4] and Randomized Prim [8] are tuned for low and high δ,

respectively, whereas we provide explicit control over diversity versus accuracy.

We begin our search for candidate bounding boxes using a sliding window

search over position, scale and aspect ratio. The step size for each is determined

using a single parameter α indicating the IoU for neighboring boxes. That is,

the step sizes in translation, scale and aspect ratio are determined such that one

step results in neighboring boxes having an IoU of α. The scale values range

from a minimum box area of σ = 1000 pixels to the full image. The aspect ratio

varies from 1/τ to τ , where τ = 3 is used in practice. As we discuss in Section

4, a value of α = 0.65 is ideal for most values of δ. However, if a highly accurate

δ > 0.9 is required, α may be increased to 0.85.

After a sliding window search is performed, all bounding box locations with

b above a small threshold are refined. Refinement is performed using

a score hin

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc