Preface

Acknowledgments

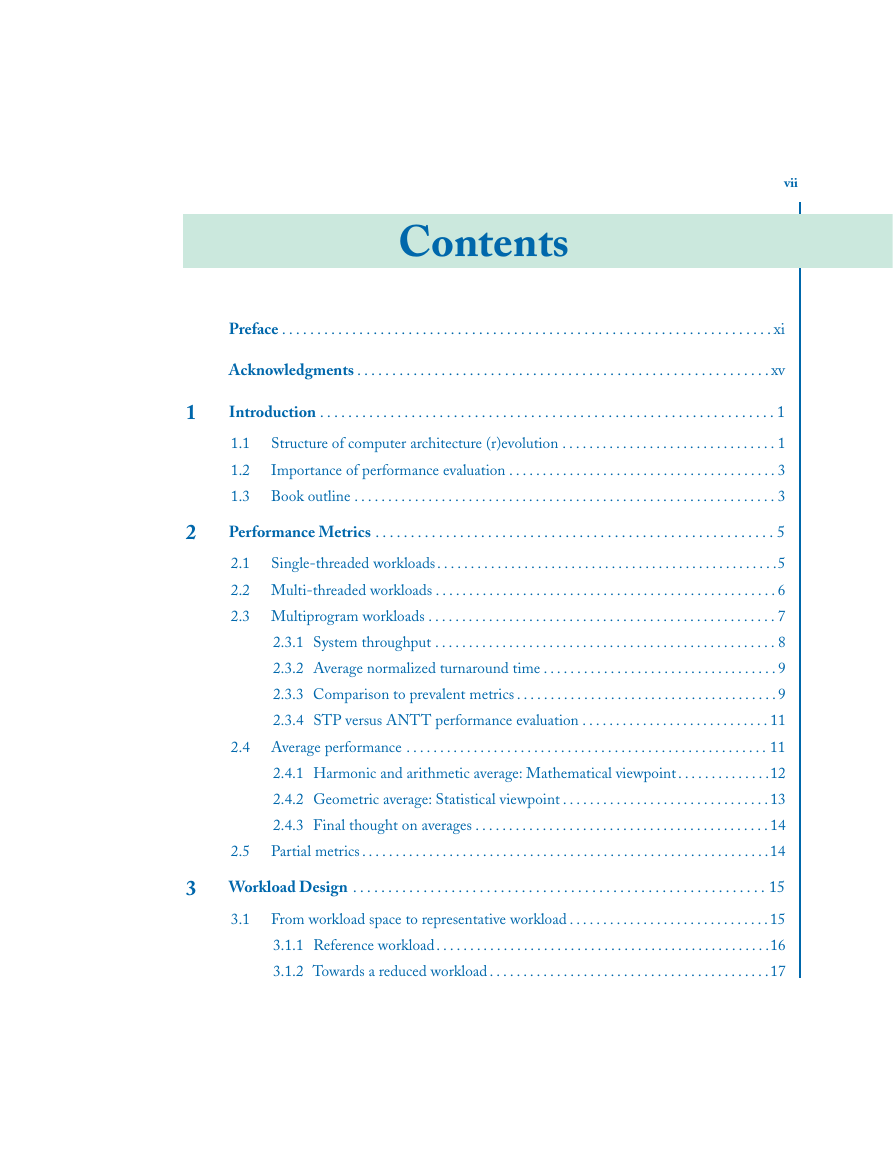

Introduction

Structure of computer architecture (r)evolution

Importance of performance evaluation

Book outline

Performance Metrics

Single-threaded workloads

Multi-threaded workloads

Multiprogram workloads

System throughput

Average normalized turnaround time

Comparison to prevalent metrics

STP versus ANTT performance evaluation

Average performance

Harmonic and arithmetic average: Mathematical viewpoint

Geometric average: Statistical viewpoint

Final thought on averages

Partial metrics

Workload Design

From workload space to representative workload

Reference workload

Towards a reduced workload

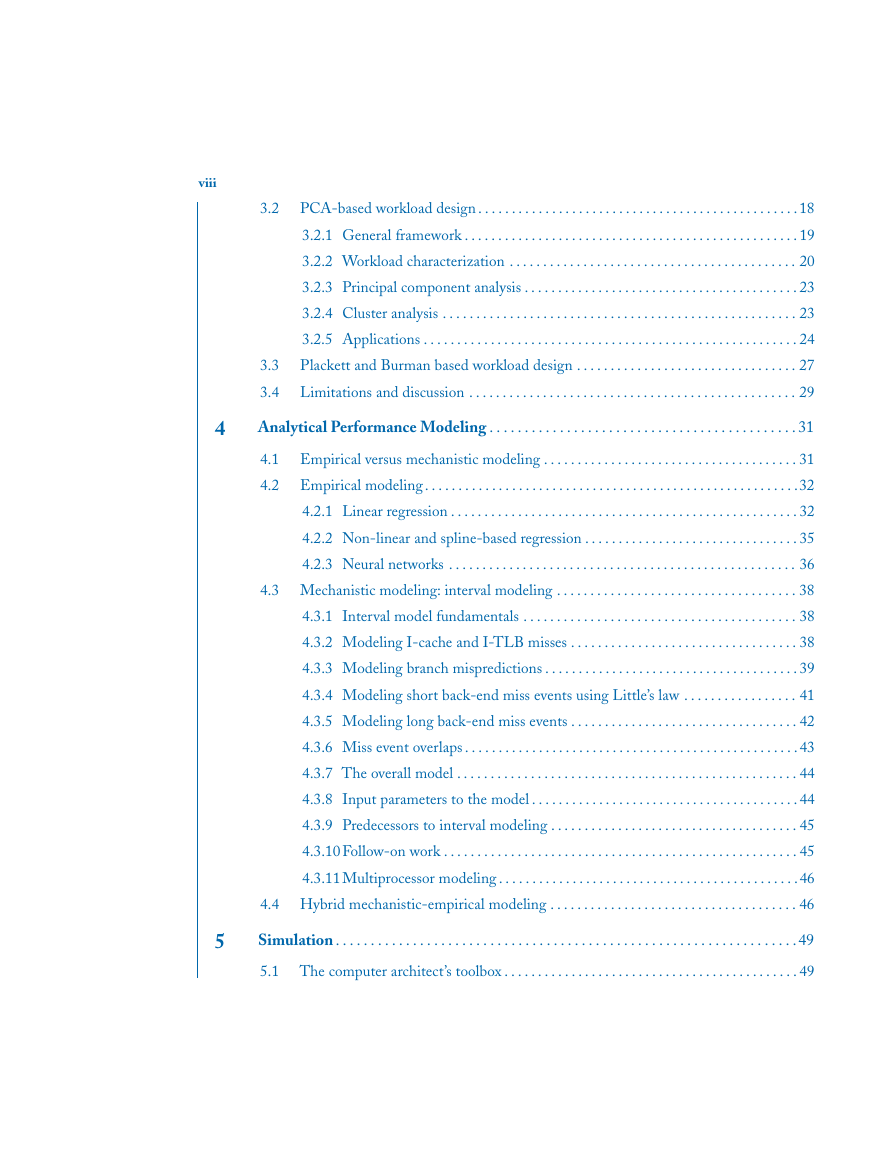

PCA-based workload design

General framework

Workload characterization

Principal component analysis

Cluster analysis

Applications

Plackett and Burman based workload design

Limitations and discussion

Analytical Performance Modeling

Empirical versus mechanistic modeling

Empirical modeling

Linear regression

Non-linear and spline-based regression

Neural networks

Mechanistic modeling: interval modeling

Interval model fundamentals

Modeling I-cache and I-TLB misses

Modeling branch mispredictions

Modeling short back-end miss events using Little's law

Modeling long back-end miss events

Miss event overlaps

The overall model

Input parameters to the model

Predecessors to interval modeling

Follow-on work

Multiprocessor modeling

Hybrid mechanistic-empirical modeling

Simulation

The computer architect's toolbox

Functional simulation

Alternatives

Operating system effects

Full-system simulation

Specialized trace-driven simulation

Trace-driven simulation

Execution-driven simulation

Taxonomy

Dealing with non-determinism

Modular simulation infrastructure

Need for simulation acceleration

Sampled Simulation

What sampling units to select?

Statistical sampling

Targeted sampling

Comparing design alternatives through sampled simulation

How to initialize architecture state?

Fast-forwarding

Checkpointing

How to initialize microarchitecture state?

Cache state warmup

Predictor warmup

Processor core state

Sampled multiprocessor and multi-threaded processor simulation

Statistical Simulation

Methodology overview

Applications

Single-threaded workloads

Statistical profiling

Synthetic trace generation

Synthetic trace simulation

Multi-program workloads

Multi-threaded workloads

Other work in statistical modeling

Parallel Simulation and Hardware Acceleration

Parallel sampled simulation

Parallel simulation

FPGA-accelerated simulation

Taxonomy

Example projects

Concluding Remarks

Topics that this book did not cover (yet)

Measurement bias

Design space exploration

Simulator validation

Future work in performance evaluation methods

Challenges related to software

Challenges related to hardware

Final comment

Bibliography

Author's Biography