arXiv:1508.06576v2 [cs.CV] 2 Sep 2015

A Neural Algorithm of Artistic Style

Leon A. Gatys (1,2,3,*), Alexander S. Ecker (1,2,4,5), Matthias Bethge (1,2,4)

(1) Werner Reichardt Centre for Integrative Neuroscience and Institute of Theoretical Physics, University of Tübingen, Germany

(2) Bernstein Center for Computational Neuroscience, Tübingen, Germany

(3) Graduate School for Neural Information Processing, Tübingen, Germany

(4) Max Planck Institute for Biological Cybernetics, Tübingen, Germany

(5) Department of Neuroscience, Baylor College of Medicine, Houston, TX, USA

(*) To whom correspondence should be addressed; E-mail: leon.gatys@bethgelab.org

In fine art, especially painting, humans have mastered the skill of creating unique visual experiences by composing a complex interplay between the content and style of an image. Thus far, the algorithmic basis of this process is unknown, and no artificial system with similar capabilities exists. However, in other key areas of visual perception, such as object and face recognition, near-human performance was recently demonstrated by a class of biologically inspired vision models called Deep Neural Networks.1,2 Here we introduce an artificial system based on a Deep Neural Network that creates artistic images of high perceptual quality. The system uses neural representations to separate and recombine the content and style of arbitrary images, providing a neural algorithm for the creation of artistic images. Moreover, in light of the striking similarities between performance-optimised artificial neural networks and biological vision,3–7 our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery.


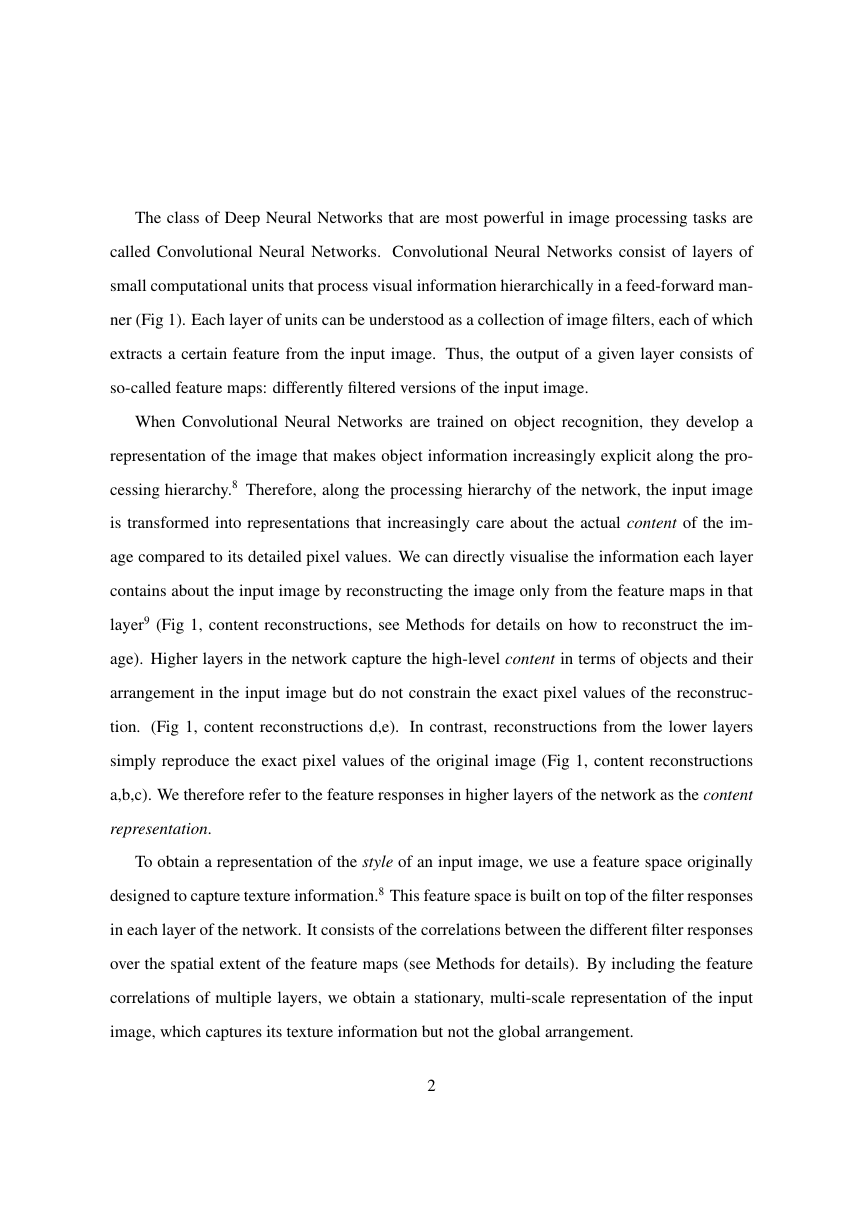

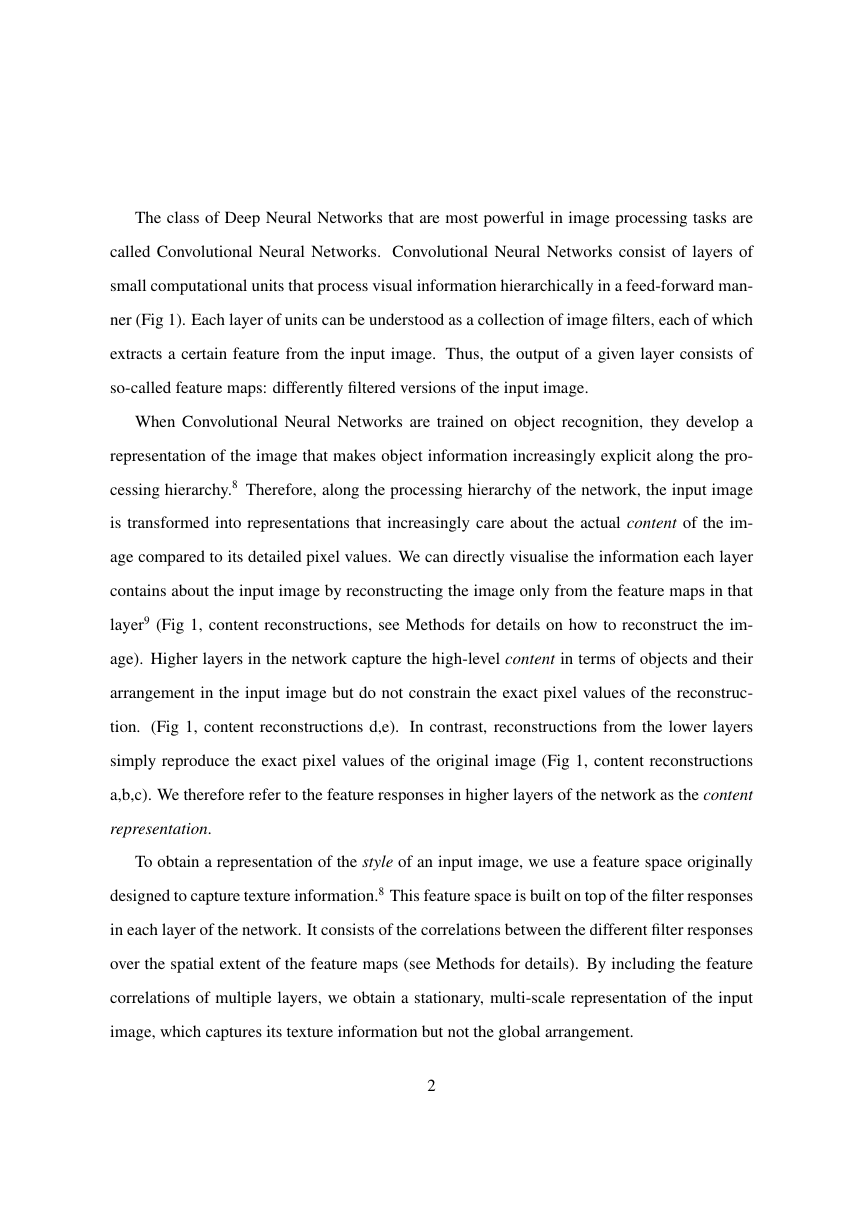

The class of Deep Neural Networks that is most powerful in image processing tasks is that of Convolutional Neural Networks. Convolutional Neural Networks consist of layers of small computational units that process visual information hierarchically in a feed-forward manner (Fig 1). Each layer of units can be understood as a collection of image filters, each of which extracts a certain feature from the input image. Thus, the output of a given layer consists of so-called feature maps: differently filtered versions of the input image.
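The idea that a layer is a bank of filters whose outputs are feature maps can be illustrated with a minimal plain-Python sketch. It assumes hand-picked 1x2 edge filters, a ReLU non-linearity, and 2x2 max-pooling (the downsampling mentioned in the Fig 1 caption); in the actual network the filters are learned, and the helper names `filter_image`, `conv_layer` and `max_pool_2x2` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def filter_image(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide one filter over the image (valid cross-correlation), then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            response = sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw))
            row.append(max(0.0, response))  # ReLU keeps only positive responses
        feature_map.append(row)
    return feature_map


def conv_layer(image: Matrix, kernels: List[Matrix]) -> List[Matrix]:
    """A layer is a bank of filters: it returns one feature map per filter."""
    return [filter_image(image, k) for k in kernels]


def max_pool_2x2(feature_map: Matrix) -> Matrix:
    """Downsample by keeping the maximum of each non-overlapping 2x2 block."""
    return [[max(feature_map[i][j], feature_map[i][j + 1],
                 feature_map[i + 1][j], feature_map[i + 1][j + 1])
             for j in range(0, len(feature_map[0]) - 1, 2)]
            for i in range(0, len(feature_map) - 1, 2)]


# A 4x4 image that is dark on the left and bright on the right.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
kernels = [
    [[-1.0, 1.0]],  # hypothetical filter: responds to dark-to-bright transitions
    [[1.0, -1.0]],  # hypothetical filter: responds to bright-to-dark transitions
]
maps = conv_layer(image, kernels)
pooled = [max_pool_2x2(m) for m in maps]
```

The first filter responds only along the vertical edge and the second not at all, so the two feature maps are "differently filtered versions" of the same image; pooling shrinks each map while keeping its strongest response.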

When Convolutional Neural Networks are trained on object recognition, they develop a representation of the image that makes object information increasingly explicit along the processing hierarchy.8 Therefore, along the processing hierarchy of the network, the input image is transformed into representations that are increasingly sensitive to the actual content of the image rather than to its detailed pixel values. We can directly visualise the information each layer contains about the input image by reconstructing the image only from the feature maps in that layer9 (Fig 1, content reconstructions; see Methods for details on how to reconstruct the image). Higher layers in the network capture the high-level content in terms of objects and their arrangement in the input image but do not constrain the exact pixel values of the reconstruction (Fig 1, content reconstructions d,e). In contrast, reconstructions from the lower layers simply reproduce the exact pixel values of the original image (Fig 1, content reconstructions a,b,c). We therefore refer to the feature responses in higher layers of the network as the content representation.
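How closely a generated image matches a content representation has to be measured somehow; the exact definition is given in the Methods section. The sketch below assumes the simplest choice: flatten a layer's responses into an N x M matrix (one row per filter, one column per spatial position) and take half the summed squared difference to the photograph's responses. `content_loss` and the feature values are hypothetical.

```python
from typing import List

# Feature responses of one layer, flattened to N filters (rows) x M positions (columns).
Matrix = List[List[float]]


def content_loss(generated: Matrix, photograph: Matrix) -> float:
    """Half the summed squared difference between two sets of feature responses.

    Assumed form; see the Methods section for the definition actually used.
    """
    return 0.5 * sum((f - p) ** 2
                     for f_row, p_row in zip(generated, photograph)
                     for f, p in zip(f_row, p_row))


photo_features = [[1.0, 0.0], [3.0, 5.0]]
generated_features = [[1.0, 2.0], [3.0, 4.0]]
loss = content_loss(generated_features, photo_features)  # 0.5 * (2^2 + 1^2) = 2.5
```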

To obtain a representation of the style of an input image, we use a feature space originally designed to capture texture information.8 This feature space is built on top of the filter responses in each layer of the network. It consists of the correlations between the different filter responses over the spatial extent of the feature maps (see Methods for details). By including the feature correlations of multiple layers, we obtain a stationary, multi-scale representation of the input image, which captures its texture information but not the global arrangement.
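A minimal sketch of the correlation step, assuming the correlations are computed as un-centred inner products between filter responses (a Gram matrix) and that a layer's responses are stored as an N x M matrix (filters x positions). Because every spatial position contributes to the sum in the same way, shuffling the positions leaves the result unchanged, which is why such a representation keeps texture but discards global arrangement. `gram_matrix` is a hypothetical name.

```python
from typing import List

# One layer's responses: N filters (rows) x M spatial positions (columns).
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """G[i][j] = sum over positions k of F[i][k] * F[j][k] (assumed un-centred)."""
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]


features = [[1.0, 0.0, 2.0],
            [0.0, 3.0, 1.0]]
# Rearrange the spatial positions; the filter responses themselves are unchanged.
order = [2, 0, 1]
shuffled = [[row[k] for k in order] for row in features]

print(gram_matrix(features))  # [[5.0, 2.0], [2.0, 10.0]]
```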


Figure 1: Convolutional Neural Network (CNN). A given input image is represented as a set of filtered images at each processing stage in the CNN. While the number of different filters increases along the processing hierarchy, the size of the filtered images is reduced by some downsampling mechanism (e.g. max-pooling), leading to a decrease in the total number of units per layer of the network. Content Reconstructions. We can visualise the information at different processing stages in the CNN by reconstructing the input image from only the network's responses in a particular layer. We reconstruct the input image from layers ‘conv1_1’ (a), ‘conv2_1’ (b), ‘conv3_1’ (c), ‘conv4_1’ (d) and ‘conv5_1’ (e) of the original VGG-Network. We find that reconstruction from lower layers is almost perfect (a,b,c). In higher layers of the network, detailed pixel information is lost while the high-level content of the image is preserved (d,e). Style Reconstructions. On top of the original CNN representations we built a new feature space that captures the style of an input image. The style representation computes correlations between the different features in different layers of the CNN. We reconstruct the style of the input image from style representations built on different subsets of CNN layers (‘conv1_1’ (a); ‘conv1_1’ and ‘conv2_1’ (b); ‘conv1_1’, ‘conv2_1’ and ‘conv3_1’ (c); ‘conv1_1’, ‘conv2_1’, ‘conv3_1’ and ‘conv4_1’ (d); ‘conv1_1’, ‘conv2_1’, ‘conv3_1’, ‘conv4_1’ and ‘conv5_1’ (e)). This creates images that match the style of a given image on an increasing scale while discarding information about the global arrangement of the scene.


Again, we can visualise the information captured by these style feature spaces built on different layers of the network by constructing an image that matches the style representation of a given input image (Fig 1, style reconstructions).10,11 Indeed, reconstructions from the style features produce texturised versions of the input image that capture its general appearance in terms of colour and localised structures. Moreover, the size and complexity of local image structures from the input image increase along the hierarchy, a result that can be explained by the increasing receptive field sizes and feature complexity. We refer to this multi-scale representation as the style representation.
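A minimal sketch of how the per-layer correlations might be combined into one multi-scale style mismatch. It assumes (the exact form is in the Methods section) that each layer contributes the squared difference between the Gram matrices of the generated image and the artwork, normalised by 4 N^2 M^2 for N filters and M positions, and that layers are combined with per-layer weights. The layer names follow the Fig 1 caption; the helper names and feature values are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]  # N filters x M positions for one layer


def gram_matrix(features: Matrix) -> Matrix:
    """Inner products between the responses of every pair of filters."""
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]


def layer_style_loss(generated: Matrix, artwork: Matrix) -> float:
    """Squared Gram-matrix mismatch at one layer, normalised by 4 * N^2 * M^2 (assumed)."""
    n, m = len(generated), len(generated[0])
    g, a = gram_matrix(generated), gram_matrix(artwork)
    squared = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared / (4.0 * n * n * m * m)


def style_loss(generated: Dict[str, Matrix], artwork: Dict[str, Matrix],
               weights: Dict[str, float]) -> float:
    """Weighted sum of per-layer style losses; layers absent from `weights` are ignored."""
    return sum(w * layer_style_loss(generated[name], artwork[name])
               for name, w in weights.items())


generated = {"conv1_1": [[1.0, 0.0], [0.0, 1.0]], "conv2_1": [[2.0, 0.0]]}
artwork = {"conv1_1": [[1.0, 1.0], [0.0, 0.0]], "conv2_1": [[0.0, 0.0]]}
total = style_loss(generated, artwork, {"conv1_1": 0.5, "conv2_1": 0.5})
```

Because each layer is normalised by its own size, layers with very different numbers of filters and positions can be mixed in one weighted sum.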

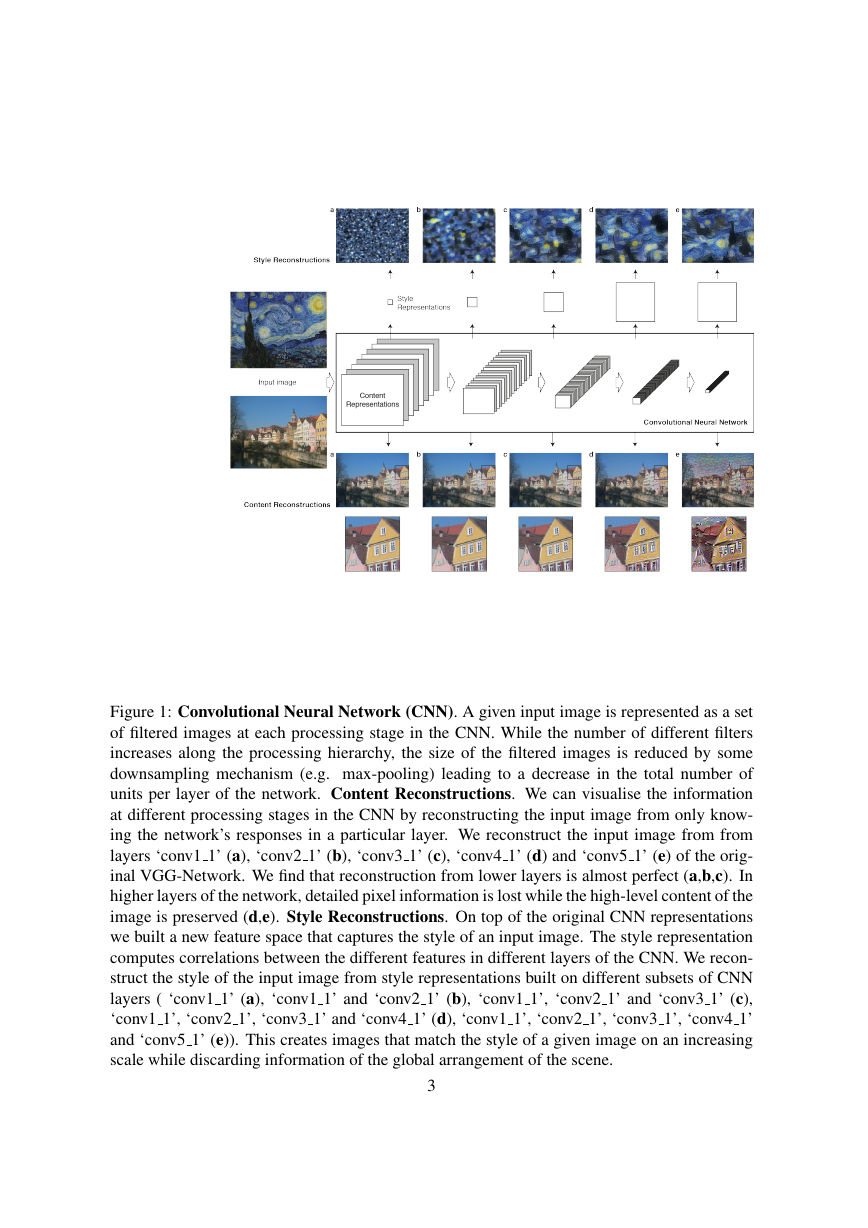

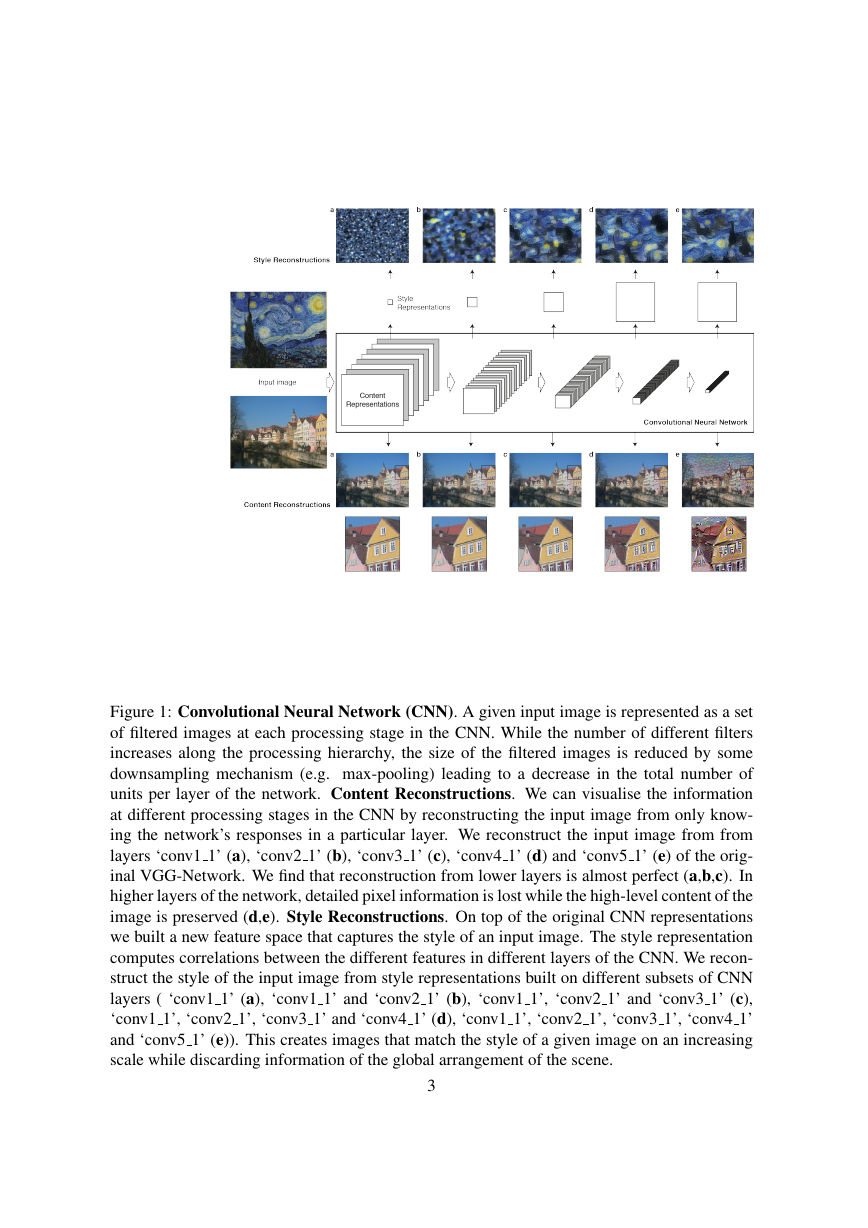

The key finding of this paper is that the representations of content and style in the Convolutional Neural Network are separable. That is, we can manipulate both representations independently to produce new, perceptually meaningful images. To demonstrate this finding, we generate images that mix the content and style representations from two different source images. In particular, we match the content representation of a photograph depicting the “Neckarfront” in Tübingen, Germany and the style representations of several well-known artworks taken from different periods of art (Fig 2).

The images are synthesised by finding an image that simultaneously matches the content representation of the photograph and the style representation of the respective piece of art (see Methods for details). While the global arrangement of the original photograph is preserved, the colours and local structures that compose the global scenery are provided by the artwork. Effectively, this renders the photograph in the style of the artwork, such that the appearance of the synthesised image resembles the work of art, even though it shows the same content as the photograph.
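Schematically, the simultaneous match can be written as a single objective. In the notation assumed here (the precise definitions are in the Methods section), $\vec{p}$ is the photograph, $\vec{a}$ the artwork, $\vec{x}$ the image being synthesised, and $\alpha$, $\beta$ weight the content and style terms; their ratio $\alpha/\beta$ is the quantity varied across the columns of Fig 3. A plain gradient step with step size $\eta$ is shown only as an illustration of how $\vec{x}$ is updated, not as the optimiser used in the paper.

$$
\mathcal{L}_{\text{total}}(\vec{p}, \vec{a}, \vec{x}) = \alpha\,\mathcal{L}_{\text{content}}(\vec{p}, \vec{x}) + \beta\,\mathcal{L}_{\text{style}}(\vec{a}, \vec{x}),
\qquad
\vec{x} \leftarrow \vec{x} - \eta\,\frac{\partial \mathcal{L}_{\text{total}}}{\partial \vec{x}}
$$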

As outlined above, the style representation is a multi-scale representation that includes mul-

tiple layers of the neural network. In the images we have shown in Fig 2, the style representation

included layers from the whole network hierarchy. Style can also be defined more locally by

4

�

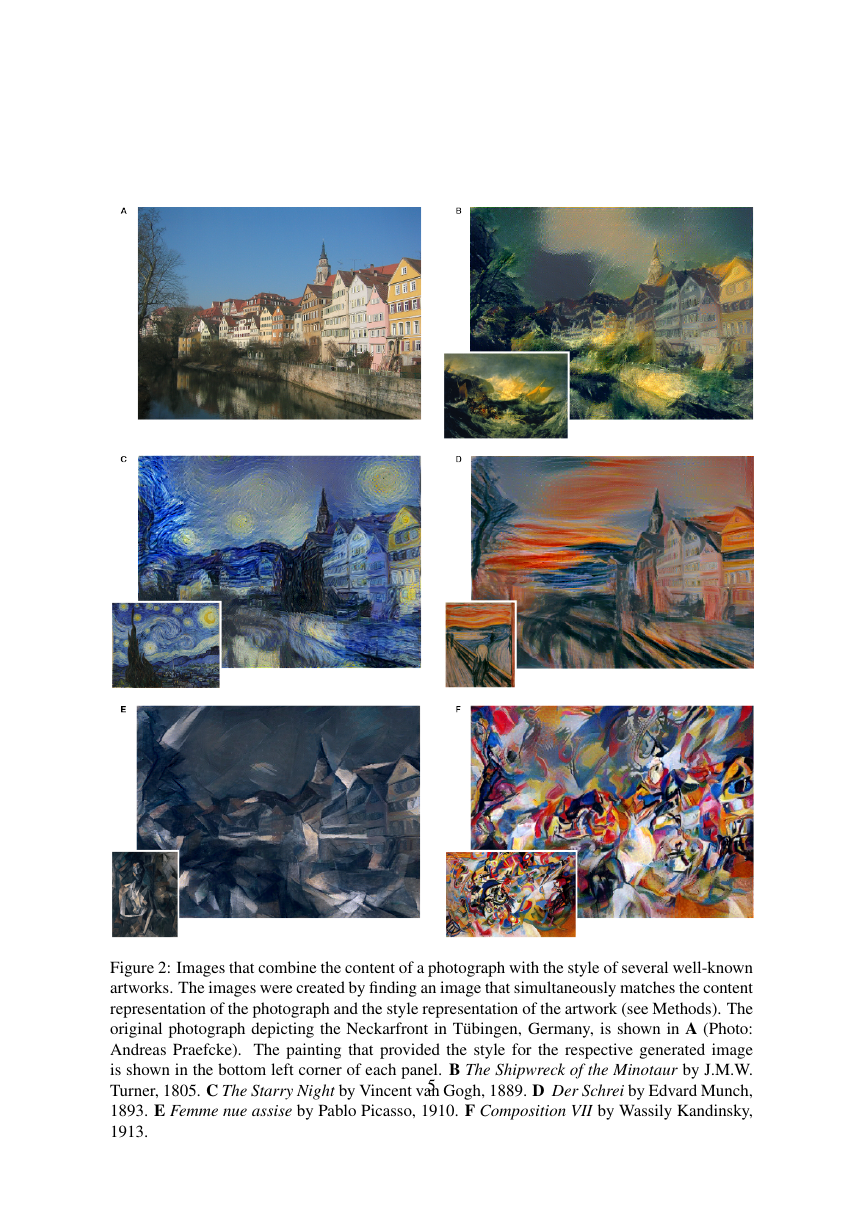

Figure 2: Images that combine the content of a photograph with the style of several well-known

artworks. The images were created by finding an image that simultaneously matches the content

representation of the photograph and the style representation of the artwork (see Methods). The

original photograph depicting the Neckarfront in T¨ubingen, Germany, is shown in A (Photo:

Andreas Praefcke). The painting that provided the style for the respective generated image

is shown in the bottom left corner of each panel. B The Shipwreck of the Minotaur by J.M.W.

Turner, 1805. C The Starry Night by Vincent van Gogh, 1889. D Der Schrei by Edvard Munch,

1893. E Femme nue assise by Pablo Picasso, 1910. F Composition VII by Wassily Kandinsky,

1913.

5

�

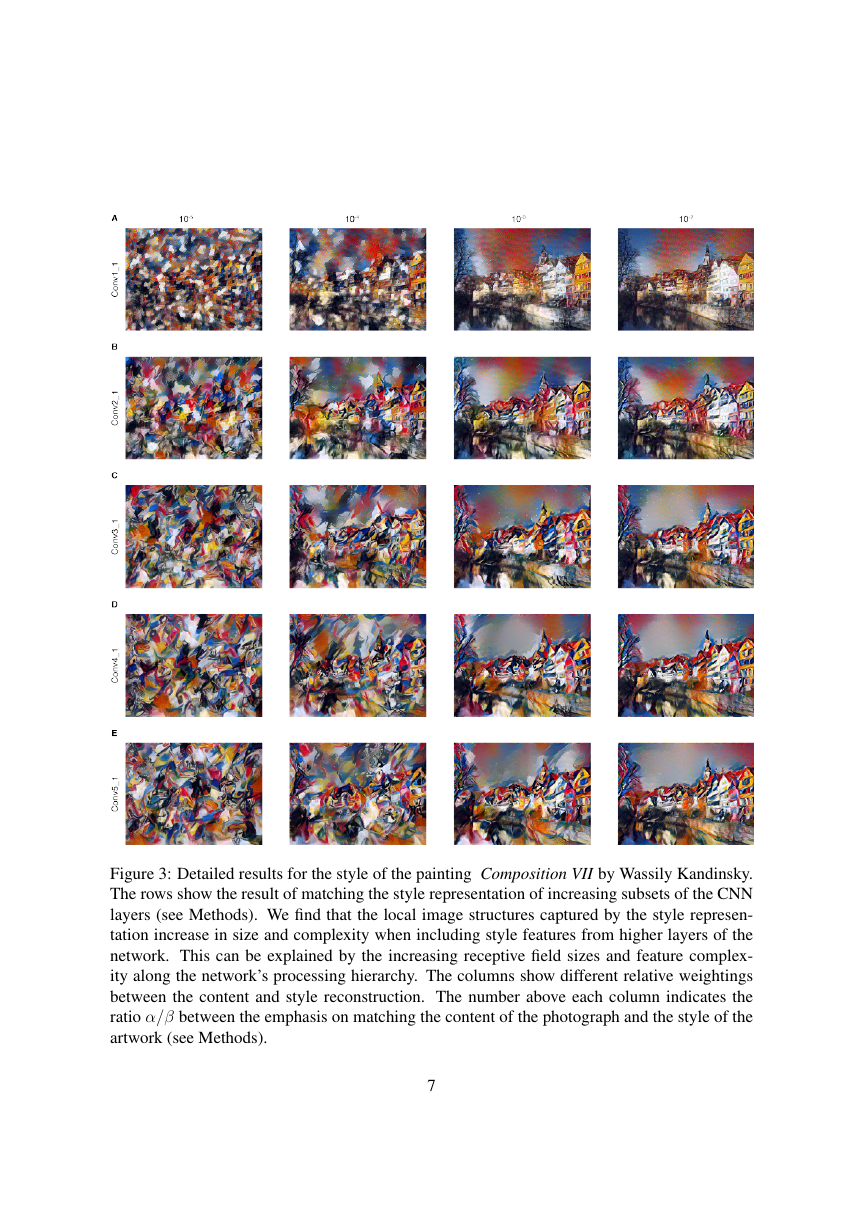

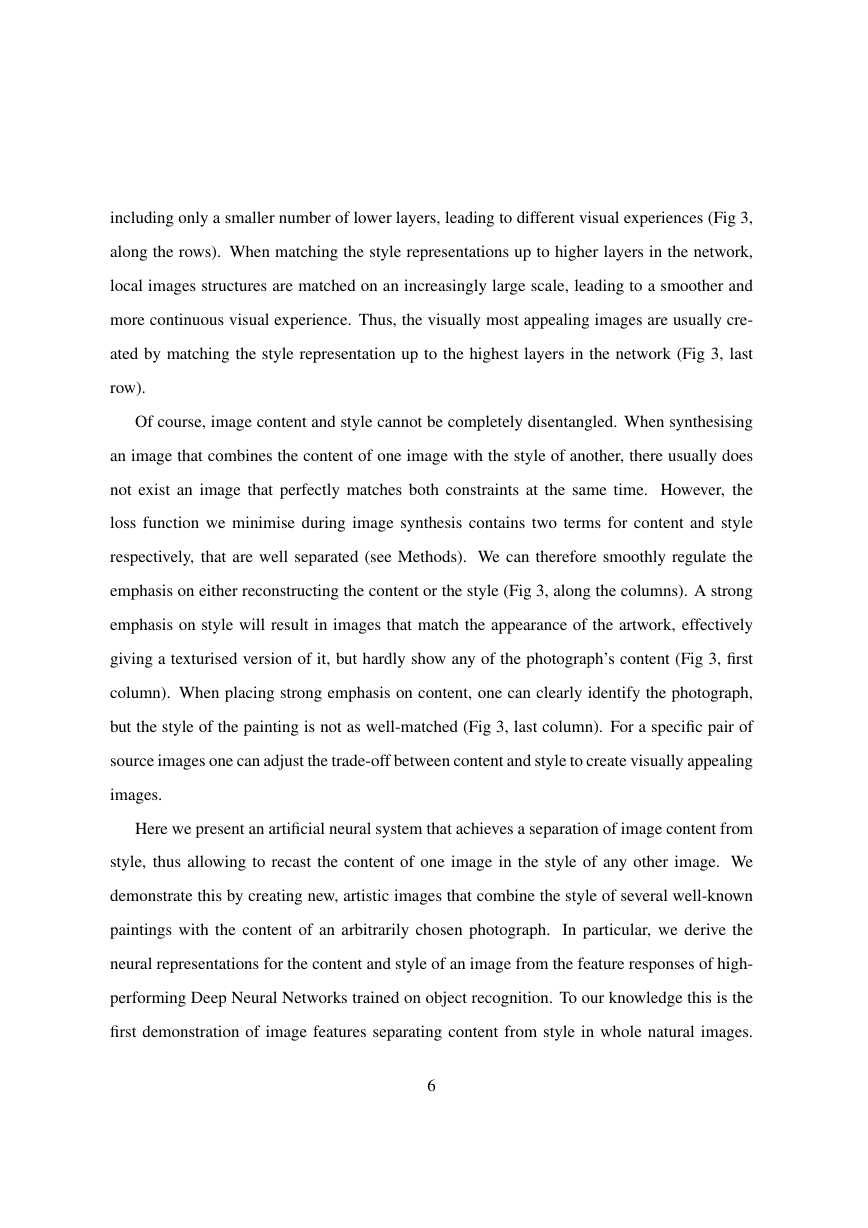

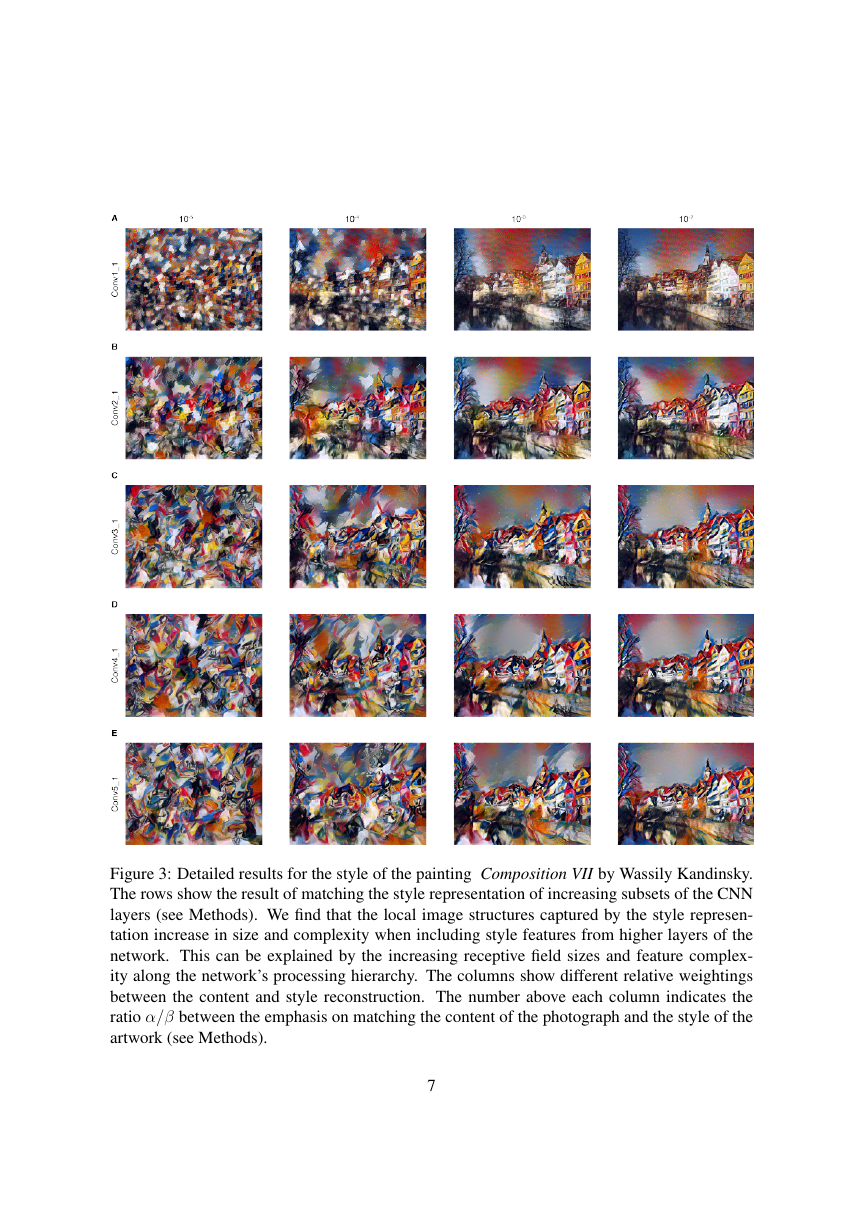

including only a smaller number of lower layers, leading to different visual experiences (Fig 3,

along the rows). When matching the style representations up to higher layers in the network,

local images structures are matched on an increasingly large scale, leading to a smoother and

more continuous visual experience. Thus, the visually most appealing images are usually cre-

ated by matching the style representation up to the highest layers in the network (Fig 3, last

row).
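The increasing subsets of layers can be expressed as a set of per-layer weights. The minimal sketch below assumes the five subsets listed in the Fig 1 caption (that the rows of Fig 3 use the same five is an assumption) and equal weights within each subset, which is our choice, not a detail stated in this section. `style_weights_up_to` is a hypothetical helper.

```python
from typing import Dict, List

# Style layers in the order listed in the Fig 1 caption.
STYLE_LAYERS: List[str] = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]


def style_weights_up_to(k: int, layers: List[str] = STYLE_LAYERS) -> Dict[str, float]:
    """Equal (assumed) weights over the first k layers; deeper layers are left out entirely."""
    if not 1 <= k <= len(layers):
        raise ValueError(f"k must be between 1 and {len(layers)}, got {k}")
    return {name: 1.0 / k for name in layers[:k]}


# One weighting per subset: from 'conv1_1' alone up to all five layers.
rows = [style_weights_up_to(k) for k in range(1, len(STYLE_LAYERS) + 1)]
```

Adding deeper layers to the dictionary is what lets larger, more complex local structures enter the style match.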

Of course, image content and style cannot be completely disentangled. When synthesising an image that combines the content of one image with the style of another, there usually does not exist an image that perfectly matches both constraints at the same time. However, the loss function we minimise during image synthesis contains two well-separated terms, one for content and one for style (see Methods). We can therefore smoothly regulate the emphasis on reconstructing either the content or the style (Fig 3, along the columns). A strong emphasis on style results in images that match the appearance of the artwork, effectively giving a texturised version of it, but hardly show any of the photograph's content (Fig 3, first column). When strong emphasis is placed on content, one can clearly identify the photograph, but the style of the painting is not as well matched (Fig 3, last column). For a specific pair of source images, one can adjust the trade-off between content and style to create visually appealing images.
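The content/style trade-off can be illustrated on a deliberately tiny toy that is not the paper's procedure: there is no CNN, and instead of pixels a single 2x3 "feature matrix" is updated directly. The sketch assumes a squared-error content term, a Gram-matrix style term normalised by 1/(4 N^2 M^2), and plain gradient descent with a fixed step size; all helper names and numbers are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # N filters x M positions; stands in for one layer's features


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def losses(f: Matrix, p: Matrix, a: Matrix) -> Tuple[float, float]:
    """(content loss vs. target features p, style loss vs. target Gram matrix a)."""
    n, m = len(f), len(f[0])
    g = matmul(f, transpose(f))
    content = 0.5 * sum((f[i][k] - p[i][k]) ** 2 for i in range(n) for k in range(m))
    style = sum((g[i][j] - a[i][j]) ** 2
                for i in range(n) for j in range(n)) / (4.0 * n * n * m * m)
    return content, style


def synthesise(f0: Matrix, p: Matrix, a: Matrix, alpha: float, beta: float,
               lr: float = 0.01, steps: int = 200) -> Matrix:
    """Gradient descent on alpha * content + beta * style, applied to the features directly."""
    n, m = len(f0), len(f0[0])
    f = [row[:] for row in f0]
    for _ in range(steps):
        g = matmul(f, transpose(f))
        diff = [[g[i][j] - a[i][j] for j in range(n)] for i in range(n)]
        style_grad = matmul(diff, f)  # (G - A) F; valid because G - A is symmetric
        scale = beta / (n * n * m * m)
        f = [[f[i][k] - lr * (alpha * (f[i][k] - p[i][k]) + scale * style_grad[i][k])
              for k in range(m)] for i in range(n)]
    return f


photo = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.5]]      # target content features
art = [[1.0, 0.0, 0.5], [0.2, 1.0, 0.0]]        # features of the artwork
art_gram = matmul(art, transpose(art))          # target style statistics
start = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]    # arbitrary initial features

content_only = synthesise(start, photo, art_gram, alpha=1.0, beta=0.0, lr=0.1, steps=500)
style_only = synthesise(start, photo, art_gram, alpha=0.0, beta=100.0)
mixed = synthesise(start, photo, art_gram, alpha=1.0, beta=100.0)
```

Setting β = 0 reduces the problem to content reconstruction and α = 0 to pure style (texture) matching; intermediate values trade one against the other, which is the knob varied across the columns of Fig 3.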

Here we present an artificial neural system that achieves a separation of image content from style, thus allowing us to recast the content of one image in the style of any other image. We demonstrate this by creating new, artistic images that combine the style of several well-known paintings with the content of an arbitrarily chosen photograph. In particular, we derive the neural representations for the content and style of an image from the feature responses of high-performing Deep Neural Networks trained on object recognition. To our knowledge, this is the first demonstration of image features separating content from style in whole natural images.


Figure 3: Detailed results for the style of the painting Composition VII by Wassily Kandinsky. The rows show the result of matching the style representation of increasing subsets of the CNN layers (see Methods). We find that the local image structures captured by the style representation increase in size and complexity when including style features from higher layers of the network. This can be explained by the increasing receptive field sizes and feature complexity along the network's processing hierarchy. The columns show different relative weightings between the content and style reconstruction. The number above each column indicates the ratio α/β between the emphasis on matching the content of the photograph and the style of the artwork (see Methods).


Previous work on separating content from style was evaluated on sensory inputs of much lesser complexity, such as characters in different handwriting or images of faces or small figures in different poses.12,13

In our demonstration, we render a given photograph in the style of a range of well-known artworks. This problem is usually approached in a branch of computer vision called non-photorealistic rendering (for a recent review see14). Conceptually most closely related are methods using texture transfer to achieve artistic style transfer.15–19 However, these previous approaches mainly rely on non-parametric techniques to directly manipulate the pixel representation of an image. In contrast, by using Deep Neural Networks trained on object recognition, we carry out manipulations in feature spaces that explicitly represent the high-level content of an image.

Features from Deep Neural Networks trained on object recognition have previously been used for style recognition in order to classify artworks according to the period in which they were created.20 There, classifiers are trained on top of the raw network activations, which we call content representations. We conjecture that a transformation into a stationary feature space such as our style representation might achieve even better performance in style classification.
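Part of what makes the style representation attractive as classifier input is its fixed size: it depends on the number of filters per layer, not on the image size or on where things appear. The minimal sketch below turns it into a fixed-length feature vector. It assumes Gram matrices averaged over spatial positions (the division by M is our assumption, so that images of different sizes are comparable) and keeps only the upper triangle because the matrix is symmetric. The classifier itself is not shown; `style_vector` and the feature values are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]  # N filters x M spatial positions for one layer


def style_vector(layers: Dict[str, Matrix]) -> List[float]:
    """Concatenate per-layer Gram matrices (upper triangle only), averaged over positions."""
    vector: List[float] = []
    for name in sorted(layers):  # a fixed (alphabetical) layer order keeps vectors comparable
        f = layers[name]
        n, m = len(f), len(f[0])
        for i in range(n):
            for j in range(i, n):  # the Gram matrix is symmetric, so j >= i suffices
                vector.append(sum(a * b for a, b in zip(f[i], f[j])) / m)
    return vector


small = {"conv1_1": [[1.0, 0.0], [0.0, 1.0]]}                       # 2 positions
large = {"conv1_1": [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]}   # same pattern, 4 positions
```

Both inputs yield the same three-element vector even though one covers twice as many positions, so differently sized artworks map into one feature space.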

In general, our method of synthesising images that mix content and style from different sources provides a new, fascinating tool to study the perception and neural representation of art, style and content-independent image appearance in general. We can design novel stimuli that introduce two independent, perceptually meaningful sources of variation: the appearance and the content of an image. We envision that this will be useful for a wide range of experimental studies concerning visual perception, ranging from psychophysics over functional imaging to even electrophysiological neural recordings. In fact, our work offers an algorithmic understanding of how neural representations can independently capture the content of an image and the style in which it is presented. Importantly, the mathematical form of our style representa-
