Cover

Half-title

Title

Copyright

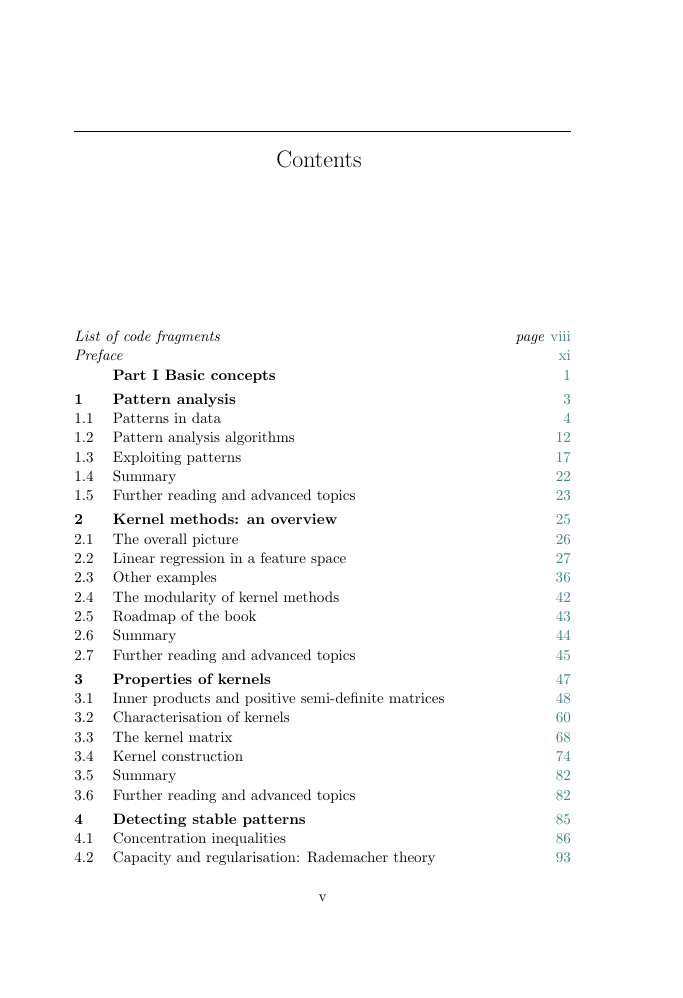

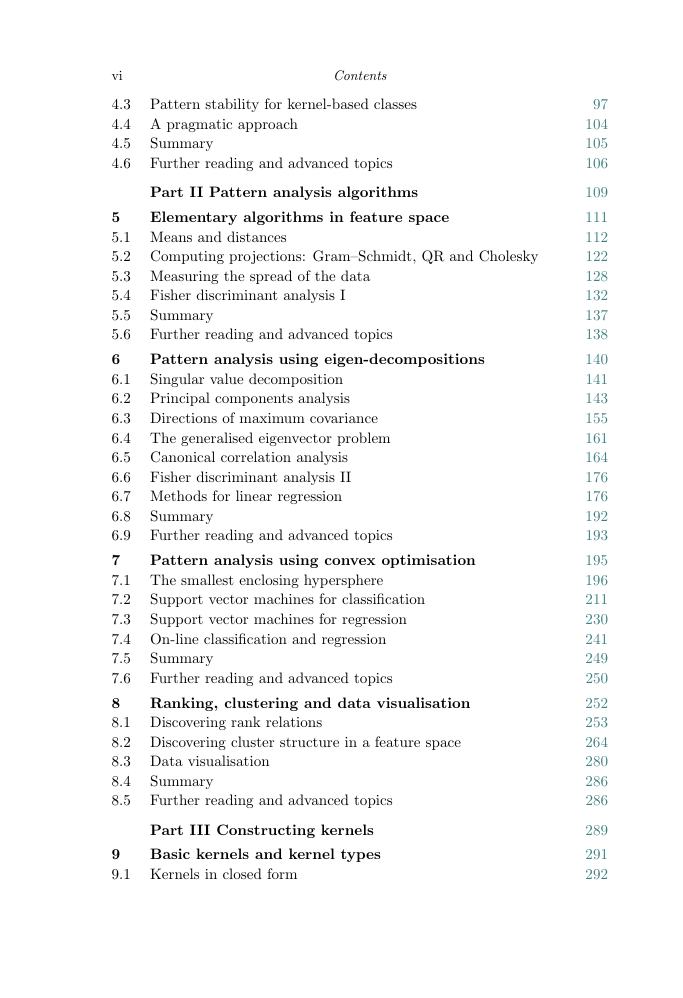

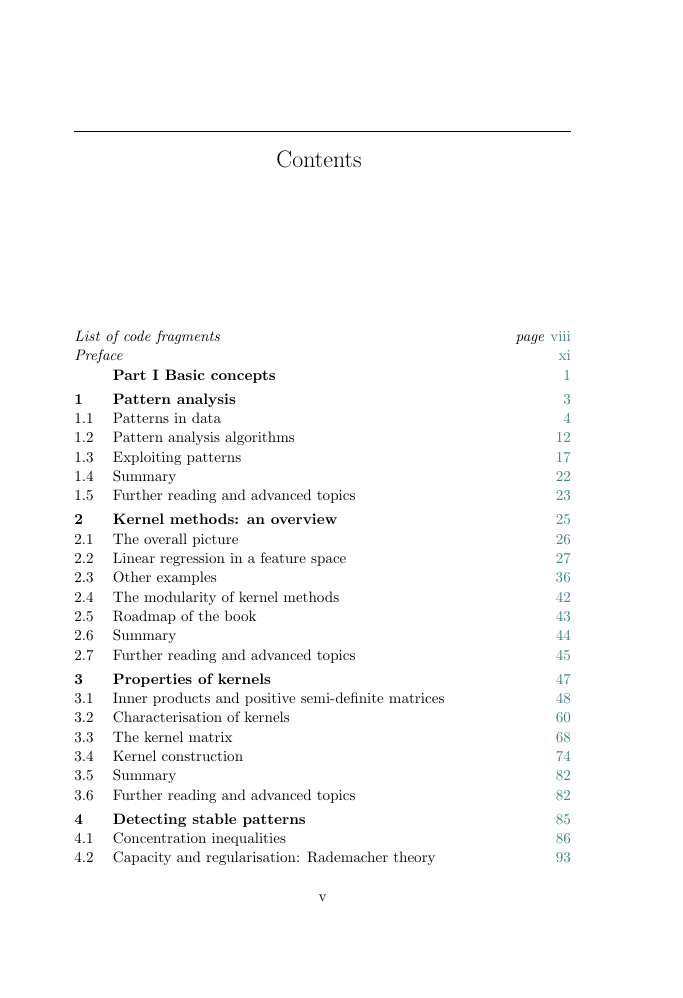

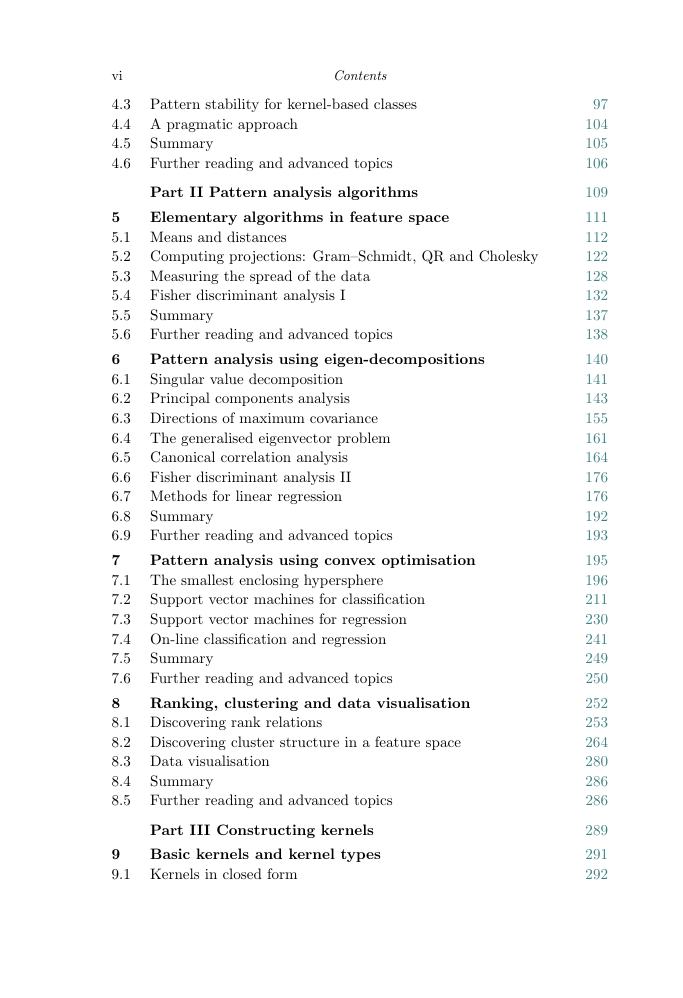

Contents

Code fragments

Preface

Part I Basic concepts

1 Pattern analysis

1.1 Patterns in data

1.1.1 Data

1.1.2 Patterns

1.2 Pattern analysis algorithms

1.2.1 Statistical stability of patterns

1.2.2 Detecting patterns by recoding

1.3 Exploiting patterns

1.3.1 The overall strategy

1.3.2 Common pattern analysis tasks

1.4 Summary

1.5 Further reading and advanced topics

2 Kernel methods: an overview

2.1 The overall picture

2.2 Linear regression in a feature space

2.2.1 Primal linear regression

2.2.2 Ridge regression: primal and dual

2.2.3 Kernel-defined nonlinear feature mappings

2.3 Other examples

2.3.1 Algorithms

2.3.2 Kernels

2.4 The modularity of kernel methods

2.5 Roadmap of the book

2.6 Summary

2.7 Further reading and advanced topics

3 Properties of kernels

3.1 Inner products and positive semi-definite matrices

3.1.1 Hilbert spaces

3.1.2 Gram matrix

3.2 Characterisation of kernels

3.3 The kernel matrix

3.4 Kernel construction

3.4.1 Operations on kernel functions

3.4.2 Operations on kernel matrices

3.5 Summary

3.6 Further reading and advanced topics

4 Detecting stable patterns

4.1 Concentration inequalities

4.2 Capacity and regularisation: Rademacher theory

4.3 Pattern stability for kernel-based classes

4.4 A pragmatic approach

4.5 Summary

4.6 Further reading and advanced topics

Part II Pattern analysis algorithms

5 Elementary algorithms in feature space

5.1 Means and distances

5.1.1 A simple algorithm for novelty-detection

5.1.2 A simple algorithm for classification

5.2 Computing projections: Gram–Schmidt, QR and Cholesky

5.3 Measuring the spread of the data

5.4 Fisher discriminant analysis I

5.5 Summary

5.6 Further reading and advanced topics

6 Pattern analysis using eigen-decompositions

6.1 Singular value decomposition

6.2 Principal components analysis

6.2.1 Kernel principal components analysis

6.2.2 Stability of principal components analysis

6.3 Directions of maximum covariance

6.4 The generalised eigenvector problem

6.5 Canonical correlation analysis

6.6 Fisher discriminant analysis II

6.7 Methods for linear regression

6.7.1 Partial least squares

6.7.2 Kernel partial least squares

6.8 Summary

6.9 Further reading and advanced topics

7 Pattern analysis using convex optimisation

7.1 The smallest enclosing hypersphere

7.1.1 The smallest hypersphere containing a set of points

7.1.2 Stability of novelty-detection

7.1.3 Hyperspheres containing most of the points

7.2 Support vector machines for classification

7.2.1 The maximal margin classifier

7.2.2 Soft margin classifiers

7.3 Support vector machines for regression

7.3.1 Stability of regression

7.3.2 Ridge regression

7.3.3 ε-insensitive regression

7.4 On-line classification and regression

7.5 Summary

7.6 Further reading and advanced topics

8 Ranking, clustering and data visualisation

8.1 Discovering rank relations

8.1.1 Batch ranking

8.1.2 On-line ranking

8.2 Discovering cluster structure in a feature space

8.2.1 Measuring cluster quality

8.2.2 Greedy solution: k-means

8.2.3 Relaxed solution: spectral methods

8.3 Data visualisation

8.4 Summary

8.5 Further reading and advanced topics

Part III Constructing kernels

9 Basic kernels and kernel types

9.1 Kernels in closed form

9.2 ANOVA kernels

9.3 Kernels from graphs

9.4 Diffusion kernels on graph nodes

9.5 Kernels on sets

9.6 Kernels on real numbers

9.7 Randomised kernels

9.8 Other kernel types

9.8.1 Kernels from successive embeddings

9.8.2 Kernels over general structures

9.8.3 Kernels from generative information

9.9 Summary

9.10 Further reading and advanced topics

10 Kernels for text

10.1 From bag of words to semantic space

10.1.1 Representing text

10.1.2 Semantic issues

10.2 Vector space kernels

10.2.1 Designing semantic kernels

10.2.2 Designing the proximity matrix

10.3 Summary

10.4 Further reading and advanced topics

11 Kernels for structured data: strings, trees, etc.

11.1 Comparing strings and sequences

11.2 Spectrum kernels

11.3 All-subsequences kernels

11.4 Fixed length subsequences kernels

11.5 Gap-weighted subsequences kernels

11.5.1 Naive implementation

11.5.2 Efficient implementation

11.5.3 Variations on the theme

11.6 Beyond dynamic programming: trie-based kernels

11.6.1 Trie computation of the p-spectrum kernels

11.6.2 Trie-based mismatch kernels

11.6.3 Trie-based restricted gap-weighted kernels

11.7 Kernels for structured data

11.7.1 Comparing trees

11.7.2 Structured data: a framework

11.8 Summary

11.9 Further reading and advanced topics

12 Kernels from generative models

12.1 P-kernels

12.1.1 Conditional-independence (CI) and marginalisation

12.1.2 Representing multivariate distributions

12.1.3 Fixed length strings generated by a hidden binomial model

12.1.4 Fixed length strings generated by a hidden markov model

12.1.5 Pair hidden Markov model kernels

12.1.6 Hidden tree model kernels

12.2 Fisher kernels

12.2.1 From probability to geometry

12.2.2 Fisher kernels for hidden Markov models

12.3 Summary

12.4 Further reading and advanced topics

Appendix A Proofs omitted from the main text

A.1 Proof of McDiarmid’s theorem

A.2 Stability of principal components analysis

A.3 Proofs of diffusion kernels

Appendix B Notational conventions

B.1 List of symbols

B.2 Notation for Tables

Appendix C List of pattern analysis methods

C.1 Pattern analysis computations

C.2 Pattern analysis algorithms

Appendix D List of kernels

D.1 Kernel definitions and computations

D.2 Kernel algorithms

References

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc