High Dynamic Range Video

Karol Myszkowski, Rafał Mantiuk, Grzegorz Krawczyk


Contents

1 Introduction

1.1 Low vs. High Dynamic Range Imaging

1.2 Device- and Scene-referred Image Representations

1.3 HDR Revolution

1.4 Organization of the Book

1.4.1 Why HDR Video?

1.4.2 Chapter Overview

2 Representation of an HDR Image

2.1 Light

2.2 Color

2.3 Dynamic Range

3 HDR Image and Video Acquisition

3.1 Capture Techniques Capable of HDR

3.1.1 Temporal Exposure Change

3.1.2 Spatial Exposure Change

3.1.3 Multiple Sensors with Beam Splitters

3.1.4 Solid State Sensors

3.2 Photometric Calibration of HDR Cameras

3.2.1 Camera Response to Light

3.2.2 Mathematical Framework for Response Estimation

3.2.3 Procedure for Photometric Calibration

3.2.4 Example Calibration of HDR Video Cameras

3.2.5 Quality of Luminance Measurement

3.2.6 Alternative Response Estimation Methods

3.2.7 Discussion

4 HDR Image Quality

4.1 Visual Metric Classification

4.2 A Visual Difference Predictor for HDR Images

4.2.1 Implementation

5 HDR Image, Video and Texture Compression

5.1 HDR Pixel Formats and Color Spaces

5.1.1 Minifloat: 16-bit Floating Point Numbers

5.1.2 RGBE: Common Exponent

5.1.3 LogLuv: Logarithmic encoding

5.1.4 RGB Scale: low-complexity RGBE coding

5.1.5 LogYuv: low-complexity LogLuv

5.1.6 JND steps: Perceptually uniform encoding

5.2 High Fidelity Image Formats

5.2.1 Radiance’s HDR Format

5.2.2 OpenEXR

5.3 High Fidelity Video Formats

5.3.1 Digital Motion Picture Production

5.3.2 Digital Cinema

5.3.3 MPEG for High-quality Content

5.3.4 HDR Extension of MPEG-4

5.4 Backward Compatible Compression

5.4.1 JPEG HDR

5.4.2 Wavelet Compander

5.4.3 Backward Compatible HDR MPEG

5.4.4 Scalable High Dynamic Range Video Coding from the JVT

5.5 High Dynamic Range Texture Compression

5.6 Conclusions

6 Tone Reproduction

6.1 Tone Mapping Operators

6.1.1 Luminance Domain Operators

6.1.2 Local Adaptation

6.1.3 Prevention of Halo Artifacts

6.1.4 Segmentation Based Operators

6.1.5 Contrast Domain Operators

6.2 Tone Mapping Studies with Human Subjects

6.3 Objective Evaluation of Tone Mapping

6.3.1 Contrast Distortion in Tone Mapping

6.3.2 Analysis of Tone Mapping Algorithms

6.4 Temporal Aspects of Tone Reproduction

6.5 Conclusions

7 HDR Display Devices

7.1 HDR Display Requirements

7.2 Dual-modulation Displays

7.3 Laser Projection Systems

7.4 Conclusions

8 LDR2HDR: Recovering Dynamic Range in Legacy Content

8.1 Bit-depth Expansion and Decontouring Techniques

8.2 Reversing Tone Mapping Curve

8.3 Single Image-based Camera Response Approximation

8.4 Recovering Clipped Pixels

8.5 Handling Video On-the-fly

8.6 Exploiting Image Capturing Artifacts for Upgrading Dynamic Range

8.7 Conclusions

9 HDRI in Computer Graphics

9.1 Computer Graphics as the Source of HDR Images and Video

9.2 HDR Images and Video as the Input Data for Computer Graphics

9.2.1 HDR Video-based Lighting

9.2.2 HDR Imaging in Reflectance Measurements

9.3 Conclusions

10 Software

10.1 pfstools

10.2 pfscalibration

10.3 pfstmo

10.4 HDR Visible Differences Predictor

Chapter 1

Introduction

1.1 Low vs. High Dynamic Range Imaging

Most existing digital images and video capture only a fraction of the visual information visible to the human eye, and their quality is insufficient for reproduction on future generations of display devices. The limiting factor is not resolution, since most consumer digital cameras can take images with more pixels than most displays can show. The problem is the limited color gamut, and the even more limited dynamic range (contrast), captured by cameras and stored by most image and video formats. To emphasize these limitations, traditional imaging technology is often called low dynamic range imaging, or simply LDR.

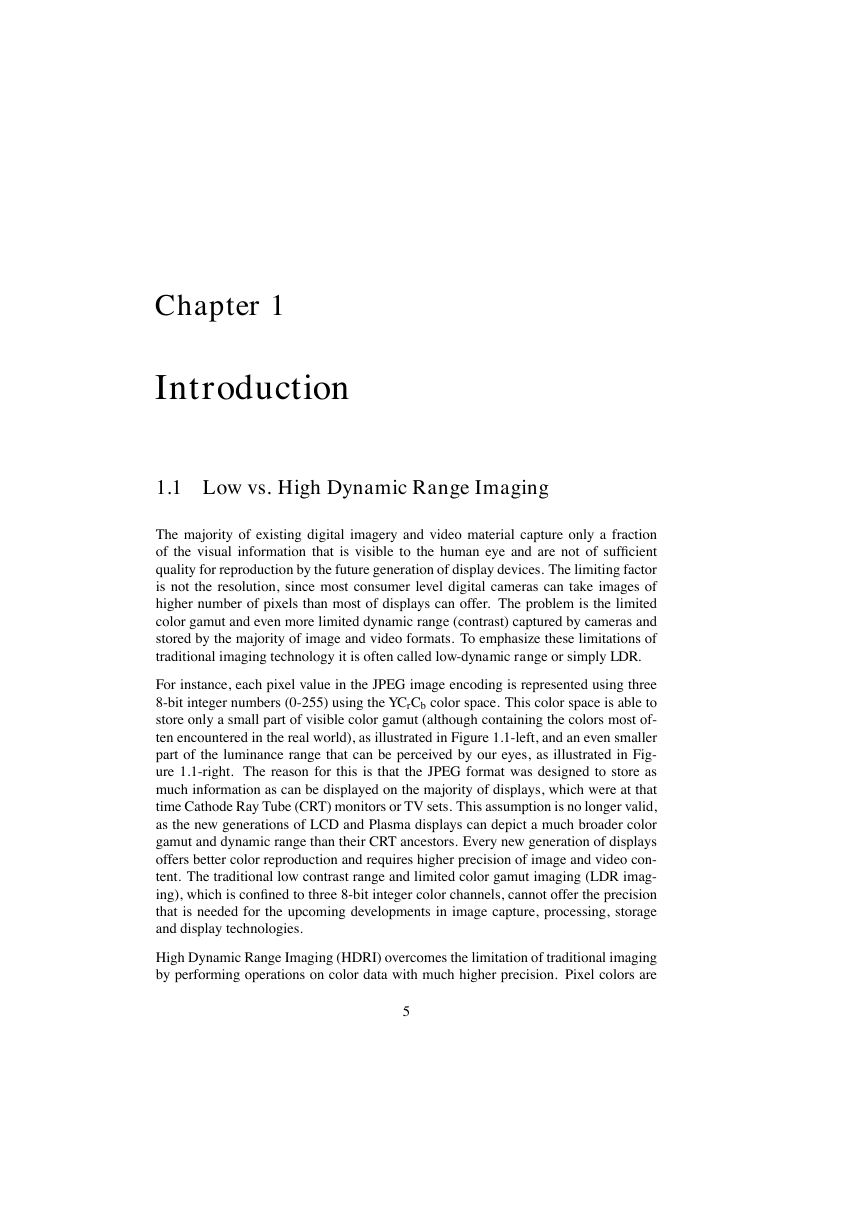

For instance, each pixel in JPEG encoding is represented by three 8-bit integers (0-255) in the YCbCr color space. This color space can store only a small part of the visible color gamut (although it contains the colors most often encountered in the real world), as illustrated in Figure 1.1-left, and an even smaller part of the luminance range that our eyes can perceive, as illustrated in Figure 1.1-right. The reason is that the JPEG format was designed to store as much information as could be shown on the majority of displays, which at that time were Cathode Ray Tube (CRT) monitors or TV sets. This assumption is no longer valid, as new generations of LCD and plasma displays can depict a much broader color gamut and dynamic range than their CRT ancestors. Every new generation of displays offers better color reproduction and requires higher precision of image and video content. Traditional imaging with a low contrast range and a limited color gamut (LDR imaging), confined to three 8-bit integer color channels, cannot offer the precision needed for upcoming developments in image capture, processing, storage, and display technologies.
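As a minimal sketch of what three 8-bit channels imply, the function below converts gamma-encoded R'G'B' values to 8-bit YCbCr using the full-range matrix of the JFIF specification, then rounds and clamps each channel to 0-255. The function name is ours, and the over-range input in the usage lines is hypothetical: an 8-bit source can never contain it, which is exactly the point, since any scene value brighter than the encoding's white ends up with the same code.

```python
def rgb_to_ycbcr_8bit(r, g, b):
    """Convert R'G'B' values (nominally 0-255) to an 8-bit YCbCr triple.

    Uses the full-range JFIF coefficients; results are rounded and
    clamped to the integer range 0-255 that a JPEG file can store.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    # Anything outside 0-255 is lost at this step.
    return tuple(min(255, max(0, round(c))) for c in (y, cb, cr))


white = rgb_to_ycbcr_8bit(255, 255, 255)
twice_as_bright = rgb_to_ycbcr_8bit(510, 510, 510)  # hypothetical over-range input
print(white, twice_as_bright)  # (255, 128, 128) (255, 128, 128)
```

A surface twice as bright as the encoding's white produces exactly the same stored triple, so the difference between them cannot be recovered from the file.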

High Dynamic Range Imaging (HDRI) overcomes this limitation of traditional imaging by performing operations on color data with much higher precision. Pixel colors in HDR images are specified as a triple of floating-point values (usually 32 bits per color channel), providing accuracy that exceeds the capabilities of the human visual system in any viewing conditions. Thanks to this inherent colorimetric precision, HDRI can represent all colors found in the real world that the human eye can perceive.

[Figure 1.1, right panel: a luminance axis in cd/m² spanning 10⁻⁶ to 10¹⁰, marking a moonless sky (3·10⁻⁵ cd/m²), the full moon (6·10³ cd/m²), and the sun (2·10⁹ cd/m²), together with the luminance ranges of a CRT display (1-100 cd/m²) and an LCD display [2006] (0.5-500 cd/m²).]

Figure 1.1: Left: the standard color gamut frequently used in traditional imaging (CCIR-705), compared to the full visible color gamut. Right: real-world luminance values compared with the range of luminance that can be displayed on CRT and LCD monitors. Most digital content is stored in a format that at most preserves the dynamic range of typical displays.
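The luminance values in Figure 1.1 can be put into numbers with a short sketch that expresses a dynamic range both in orders of magnitude (the base-10 logarithm of the luminance ratio) and in photographic stops (the base-2 logarithm). The rows for 8-bit integers and 32-bit floats are our own illustration: they consider only the ratio between the largest and the smallest representable nonzero value, and ignore quantization step size, gamma encoding, and noise.

```python
import math
import struct


def dynamic_range(l_min, l_max):
    """Return the ratio l_max / l_min as (orders of magnitude, photographic stops)."""
    ratio = l_max / l_min
    return math.log10(ratio), math.log2(ratio)


def float32_from_bits(bits):
    """Reinterpret a 32-bit unsigned integer as an IEEE 754 single-precision float."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Luminance values (cd/m^2) read from Figure 1.1.
ranges = {
    "scene (moonless sky to sun)": dynamic_range(3e-5, 2e9),
    "CRT display": dynamic_range(1.0, 100.0),
    "LCD display [2006]": dynamic_range(0.5, 500.0),
    # Illustrative assumption: smallest nonzero vs. largest linear 8-bit code.
    "8-bit integer code": dynamic_range(1.0, 255.0),
    # Smallest normal vs. largest finite 32-bit float.
    "32-bit float": dynamic_range(float32_from_bits(0x00800000),
                                  float32_from_bits(0x7F7FFFFF)),
}

for name, (orders, stops) in ranges.items():
    print(f"{name:28s} {orders:5.1f} orders of magnitude ({stops:6.1f} stops)")
```

The scene spans close to 14 orders of magnitude, far more than the 2 to 3 covered by the displays or the roughly 2.4 of a linear 8-bit code, while the exponent of a 32-bit float leaves ample headroom.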

HDRI not only provides higher precision but also enables the synthesis, storage, and visualization of a range of perceptual cues that cannot be achieved with traditional imaging. Most LDR imaging standards and color spaces were developed to match office or display illumination conditions. When we view scenes or images in such conditions, our visual system operates in a state that mixes daylight and dim-light vision, known as mesopic vision. When we view outdoor scenes, we use daylight color perception, known as photopic vision. This distinction matters for digital imaging because the two types of vision perform differently and lead to different color perception. HDRI can represent images whose luminance range fully covers both photopic and mesopic vision, which makes it possible to distinguish between them. One of the differences between mesopic and photopic vision is the impression of colorfulness: we tend to perceive objects as more colorful when they are brightly illuminated, a phenomenon known as the Hunt effect. To render this enhanced colorfulness properly, digital images must preserve the actual luminance level of the original scene, which is not possible with traditional imaging.

Real-world scenes are not only brighter and more colorful than their digital reproductions, but also contain much higher contrast, both locally between neighboring objects and globally between distant objects. The eye has evolved to cope with such high contrast, and its presence in a scene evokes important perceptual cues. Traditional imaging, unlike HDRI, cannot represent such high-contrast scenes. Similarly, traditional images can hardly represent common visual phenomena such as self-luminous surfaces (the sun, shining lamps) and bright specular highlights. They also do not contain enough information to reproduce visual glare (the brightening of areas surrounding shining objects) or the short-term dazzle caused by a sudden increase in scene brightness (e.g., when stepping into sunlight after staying indoors). To faithfully represent, store, and then reproduce all these effects, the original scene must be stored and processed using high-fidelity HDR techniques.

1.2 Device- and Scene-referred Image Representations

To accommodate all of the requirements discussed above, HDRI needs a common data format that enables efficient transfer and processing along the way from HDR acquisition to HDR display devices. Here again, fundamental differences arise between the image formats used in traditional imaging and those used in HDRI; we address these differences in this section.

Commonly used LDR image formats (JPEG, PNG, TIFF, etc.) contain data tailored to particular imaging devices, such as cameras and CRT or LCD displays. For example, the same JPEG image shown on two different LCD displays may look significantly different because of dissimilar image processing, color filters, gamma correction, and so on. Such a representation relates only vaguely to the actual photometric properties of the scene it depicts and depends instead on the display device. These formats can therefore be considered device-referred (also known as output-referred), since they are tightly coupled with the capabilities and characteristics of a particular imaging device.
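The device dependence can be illustrated with a minimal sketch that decodes the same 8-bit code value on two displays whose luminance ranges are taken from Figure 1.1. The sketch assumes a simplified gain-gamma-offset display model with a gamma of 2.2; both the model parameters and the function name are illustrative assumptions, and real displays apply their own additional processing.

```python
def display_luminance(code, peak, black, gamma=2.2, bits=8):
    """Luminance (cd/m^2) a display emits for an integer code value.

    Assumed gain-gamma-offset model: L = (peak - black) * v**gamma + black,
    where v is the code value normalized to [0, 1].
    """
    v = code / (2 ** bits - 1)
    return (peak - black) * v ** gamma + black


# Display ranges from Figure 1.1 (cd/m^2); gamma 2.2 is an assumed typical value.
crt = dict(peak=100.0, black=1.0)
lcd = dict(peak=500.0, black=0.5)

for code in (0, 64, 128, 255):
    print(f"code {code:3d}: CRT {display_luminance(code, **crt):6.1f} cd/m^2, "
          f"LCD {display_luminance(code, **lcd):6.1f} cd/m^2")
```

The stored code values are identical in both cases, yet the emitted luminance differs by a factor of about five; nothing in the file says which of these luminances the original scene actually had.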

ICC color profiles can be used to convert visual data from one device-referred format to another. Such profiles define the colorimetric properties of the device for which the image is intended. Problems arise if the two devices have different color gamuts or dynamic ranges, in which case conversion from one format to another usually involves the loss of some visual information. Algorithms for the best reproduction of LDR images on output media with a different color gamut have been thoroughly studied [1], and a CIE technical committee (CIE Division 8: TC8-03) was established to choose the best algorithm. So far, however, the committee has not been able to select a single algorithm that gives reliable results in all cases. The problem is even more difficult when an image captured with an HDR camera is converted to the color space of a low dynamic range monitor (see the multitude of tone reproduction algorithms discussed in Chapter 6). Clearly, ICC profiles cannot easily be used to facilitate the interchange of data between LDR and HDR devices.
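The information loss mentioned above can be seen in a small sketch that squeezes four scene luminances into an 8-bit display range in two ways: hard clipping at the display peak, and the simple global compressive curve L/(1+L), which is the basic curve of Reinhard et al.'s photographic tone reproduction operator and is used here only as one example of a tone curve. The luminances of the dim interior, bright wall, and lamp are made-up illustrative values; the sun's value comes from Figure 1.1. The function names and the `scale` parameter are hypothetical.

```python
def clip_to_display(luminance, peak):
    """Relative display value in [0, 1]: luminance above the display peak is clipped."""
    return min(luminance / peak, 1.0)


def compress_to_display(luminance, scale):
    """Relative display value in [0, 1): the curve L / (1 + L) applied to luminance / scale."""
    l = luminance / scale
    return l / (1.0 + l)


def to_8bit(value):
    """Quantize a relative display value in [0, 1] to an 8-bit code."""
    return round(value * 255)


# Hypothetical scene luminances in cd/m^2: dim interior, bright wall, lamp, sun.
scene = [10.0, 1_000.0, 100_000.0, 2e9]

clipped = [to_8bit(clip_to_display(l, peak=100.0)) for l in scene]
compressed = [to_8bit(compress_to_display(l, scale=100.0)) for l in scene]

print("clipped:   ", clipped, "->", len(set(clipped)), "distinct codes")
print("compressed:", compressed, "->", len(set(compressed)), "distinct codes")
```

Both mappings lose information, only in different places: clipping merges everything above the display peak, while the compressive curve keeps more of the scene apart but still merges the brightest values once the result is quantized to 8 bits. Deciding how to distribute this loss is what the tone reproduction algorithms of Chapter 6 address.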

A scene-referred representation of images offers a much simpler solution to this problem. A scene-referred image encodes the actual photometric characteristics of the scene it depicts. Conversion from such a common representation, which directly corresponds to physical luminance or spectral radiance values, into a format suitable for a particular device is the responsibility of that device. This should guarantee the best possible
