A Survey on Contextual Embeddings

Qi Liu‡, Matt J. Kusner†∗, Phil Blunsom‡⋄,

‡University of Oxford ⋄DeepMind

†University College London ∗The Alan Turing Institute

‡{firstname.lastname}@cs.ox.ac.uk

†m.kusner@ucl.ac.uk

arXiv:2003.07278v1 [cs.CL] 16 Mar 2020

Abstract

Contextual embeddings, such as ELMo and

BERT, move beyond global word represen-

tations like Word2Vec and achieve ground-

breaking performance on a wide range of natu-

ral language processing tasks. Contextual em-

beddings assign each word a representation

based on its context, thereby capturing uses

of words across varied contexts and encod-

ing knowledge that transfers across languages.

In this survey, we review existing contextual

embedding models, cross-lingual polyglot pre-

training, the application of contextual embed-

dings in downstream tasks, model compres-

sion, and model analyses.

1 Introduction

Distributional word representations (Turian et al.,

2010; Mikolov et al., 2013; Pennington et al.,

2014) trained in an unsupervised manner on

large-scale corpora are widely used in modern

natural language processing systems. However,

these approaches only obtain a single global rep-

resentation for each word, ignoring their context.

Different from traditional word representations,

contextual embeddings move beyond word-level

semantics in that each token is associated with a

representation that is a function of the entire input

sequence. These context-dependent representa-

tions can capture many syntactic and semantic

properties of words under diverse linguistic contexts.

Previous work (Peters et al., 2018;

Devlin et al., 2018; Yang et al., 2019; Raffel et al.,

2019) has shown that contextual embeddings pre-

trained on large-scale unlabelled corpora achieve

state-of-the-art performance on a wide range of

natural language processing tasks, such as text

classification, question answering and text sum-

marization. Further analyses (Liu et al., 2019a;

Hewitt and Liang,

2019; Hewitt and Manning,

2019; Tenney et al., 2019a) demonstrate that

contextual embeddings are capable of learning

useful and transferable representations across

languages.

The rest of the survey is organized as follows.

In Section 2, we define the concept of contextual

embeddings. In Section 3, we introduce existing

methods for obtaining contextual embeddings. In

Section 4, we present the pre-training methods of

contextual embeddings on multi-lingual corpora.

In Section 5, we describe methods for applying

pre-trained contextual embeddings in downstream

tasks. In Section 6, we detail model compression

methods.

In Section 7, we survey analyses that

have aimed to identify the linguistic knowledge

learned by contextual embeddings. We conclude

the survey by highlighting some challenges for fu-

ture research in Section 8.

2 Token Embeddings

Consider a text corpus that is represented as a sequence $S$ of tokens, $(t_1, t_2, \ldots, t_N)$. Distributed representations of words (Harris, 1954; Bengio et al., 2003) associate each token $t_i$ with a dense feature vector $h_{t_i}$. Traditional word embedding techniques aim to learn a global word embedding matrix $E \in \mathbb{R}^{V \times d}$, where $V$ is the vocabulary size and $d$ is the number of dimensions. Specifically, each row $e_i$ of $E$ corresponds to the global embedding of word type $i$ in the vocabulary $V$. Well-known models for learning word embeddings include Word2vec (Mikolov et al., 2013) and GloVe (Pennington et al., 2014). On the other hand, methods that learn contextual embeddings associate each token $t_i$ with a representation that is a function of the entire input sequence $S$, i.e. $h_{t_i} = f(e_{t_1}, e_{t_2}, \ldots, e_{t_N})$, where each input token $t_j$ is usually mapped to its non-contextualized representation $e_{t_j}$ first, before applying an aggregation function $f$. These context-dependent representations are better suited to capture sequence-level semantics (e.g. polysemy) than non-contextual word embeddings. There are many model architectures for $f$, which we review here. We begin by describing pre-training methods for learning contextual embeddings that can be used in downstream tasks.

3 Pre-training Methods for Contextual Embeddings

In large part, pre-training contextual embeddings

can be divided into either unsupervised methods

(e.g. language modelling and its variants) or super-

vised methods (e.g. machine translation and natu-

ral language inference).

3.1 Unsupervised Pre-training via Language Modeling

The prototypical way to learn distributed token

embeddings is via language modelling. A lan-

guage model is a probability distribution over a

sequence of tokens. Given a sequence of N to-

kens, (t1, t2, ..., tN ), a language model factorizes

the probability of the sequence as:

$$p(t_1, t_2, \ldots, t_N) = \prod_{i=1}^{N} p(t_i \mid t_1, t_2, \ldots, t_{i-1}). \tag{1}$$

Language modelling uses maximum likelihood

estimation (MLE), often penalized with regular-

ization terms, to estimate model parameters. A

left-to-right language model takes the left con-

text, t1, t2, ..., ti−1, of ti

into account for esti-

mating the conditional probability.

Language

models are usually trained using large-scale un-

labelled corpora. The conditional probabilities

are most commonly learned using neural networks

(Bengio et al., 2003), and the learned represen-

tations have been proven to be transferable to

downstream natural language understanding tasks

(Dai and Le, 2015; Ramachandran et al., 2016).
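As a minimal sketch of the factorization in Equation 1, the snippet below sums the conditional log-probabilities of a sequence. The `cond_prob` callable and the `uniform_model` toy distribution are hypothetical stand-ins introduced here for illustration; in practice the conditionals would come from a trained neural language model.

```python
import math

def sequence_log_prob(tokens, cond_prob):
    """Return log p(t_1..t_N) as the sum of log p(t_i | t_1..t_{i-1})."""
    total = 0.0
    for i, token in enumerate(tokens):
        left_context = tokens[:i]  # t_1 .. t_{i-1}
        total += math.log(cond_prob(token, left_context))
    return total

def uniform_model(token, left_context, vocab_size=4):
    """Hypothetical toy model: every token is equally likely, whatever the context."""
    return 1.0 / vocab_size

log_p = sequence_log_prob(["I", "am", "happy"], uniform_model)
```

Under the toy uniform model with a vocabulary of four types, a three-token sequence receives log-probability 3 · log(1/4); maximum likelihood training would adjust the model to raise this quantity on the training corpus.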

Precursor Models. Dai and Le (2015) is the first

work we are aware of that uses language modelling

together with a sequence autoencoder to improve

sequence learning with recurrent networks. Thus,

it can be thought of as a precursor to modern con-

textual embedding methods. Pre-trained on the

datasets IMDB, Rotten Tomatoes, 20 Newsgroups,

and DBpedia, the model is then fine-tuned on senti-

ment analysis and text classification tasks, achiev-

ing strong performance compared to randomly-

initialized models.

Ramachandran et al.

(2016) extends Dai and

Le (2015) by proposing a pre-training method to

improve the accuracy of sequence to sequence

(seq2seq) models. The encoder and decoder of the

seq2seq model are initialized with the pre-trained

weights of two language models. These language

models are separately trained on either the News

Crawl English or German corpora for machine

translation, while both are initialized with the lan-

guage model trained with the English Gigaword

corpus for abstractive summarization. These pre-

trained models are fine-tuned on the WMT En-

glish → German task and the CNN/Daily Mail

corpus, respectively, achieving better results over

baselines without pre-training.

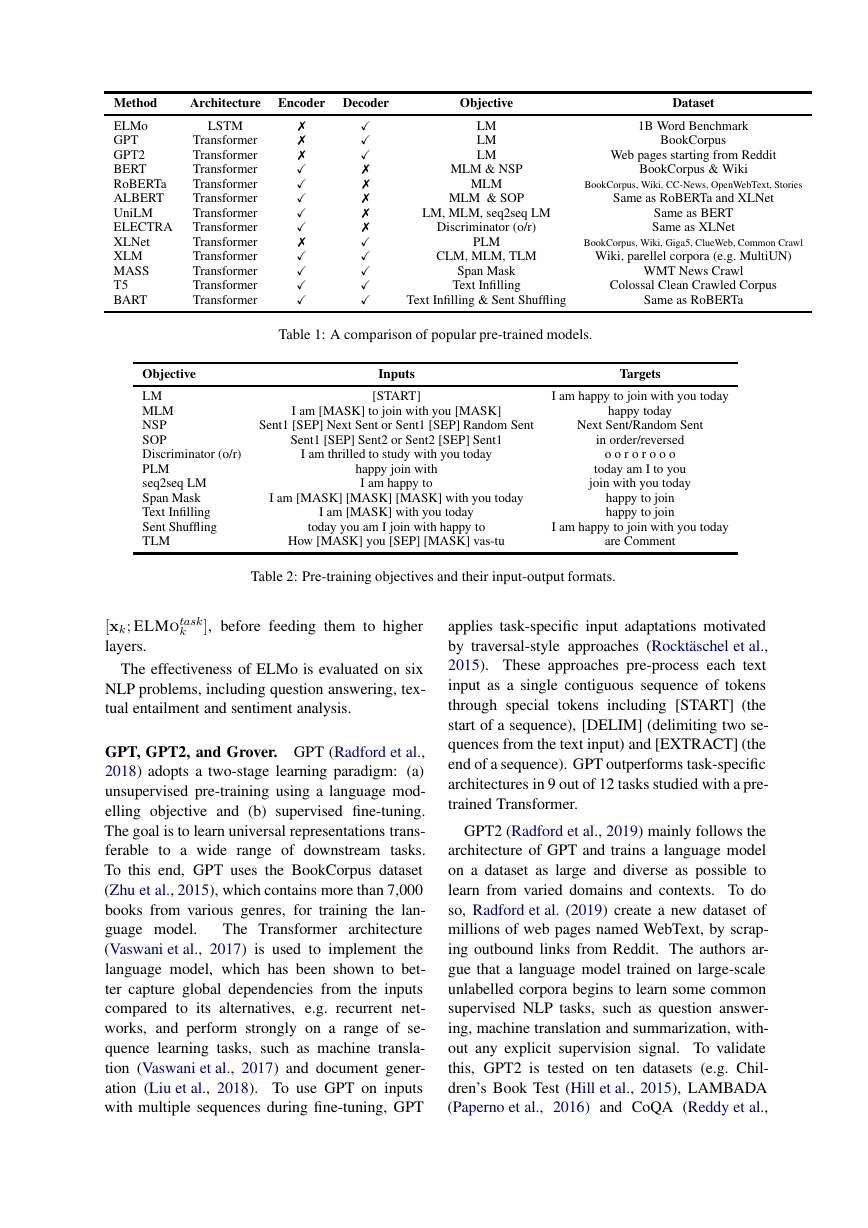

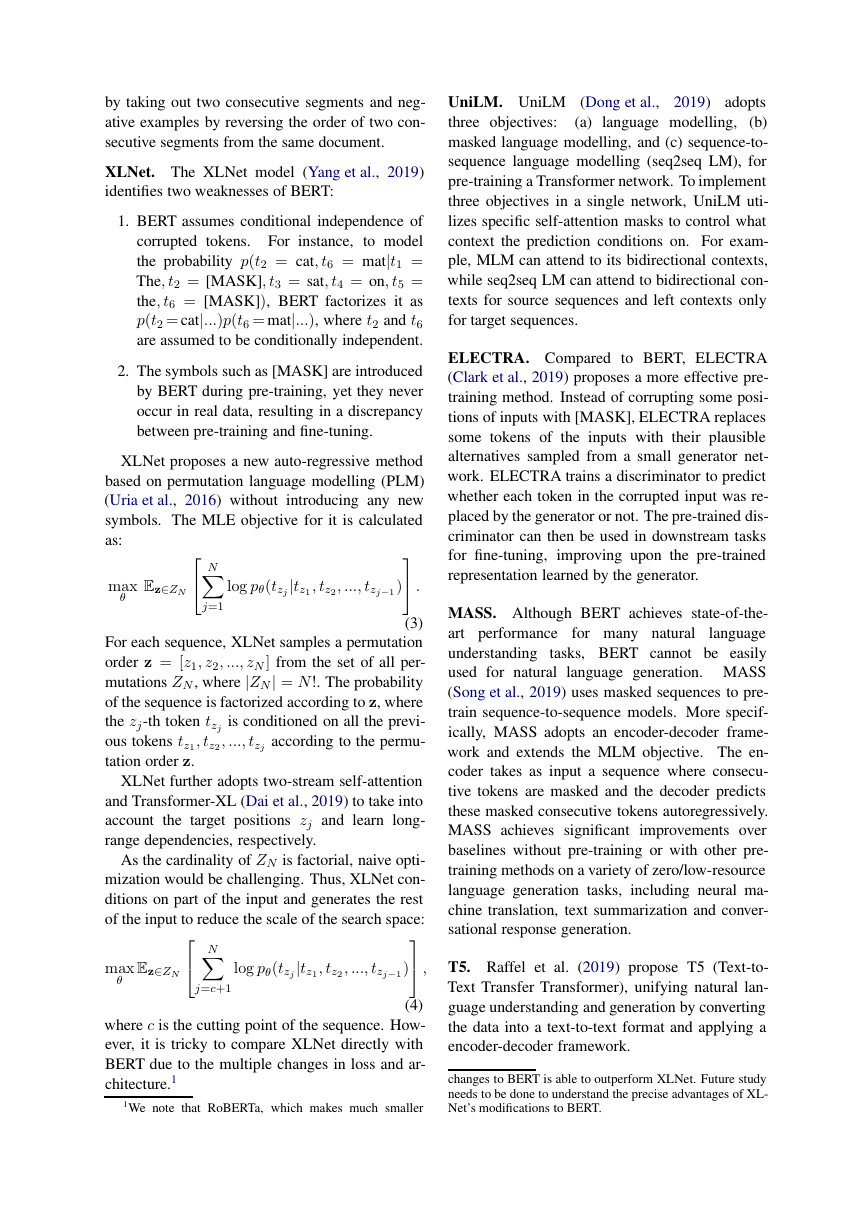

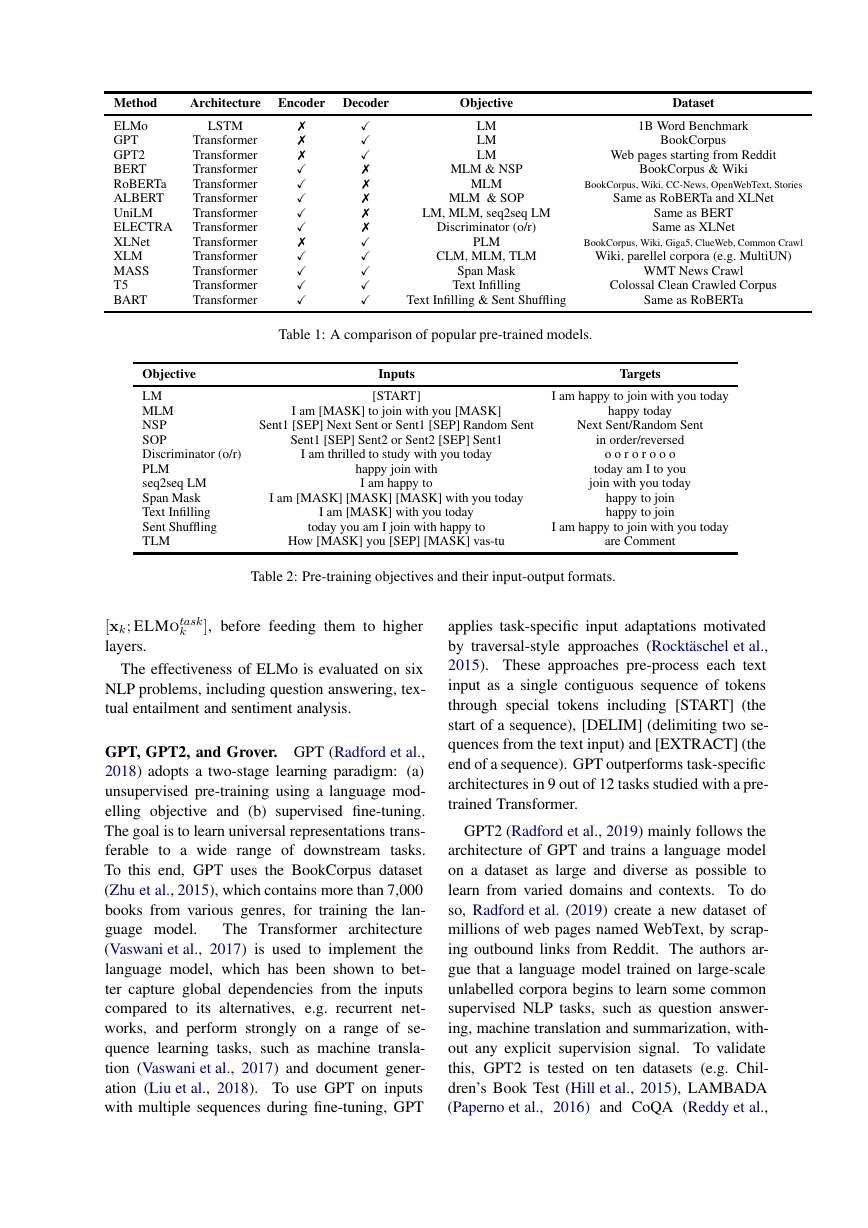

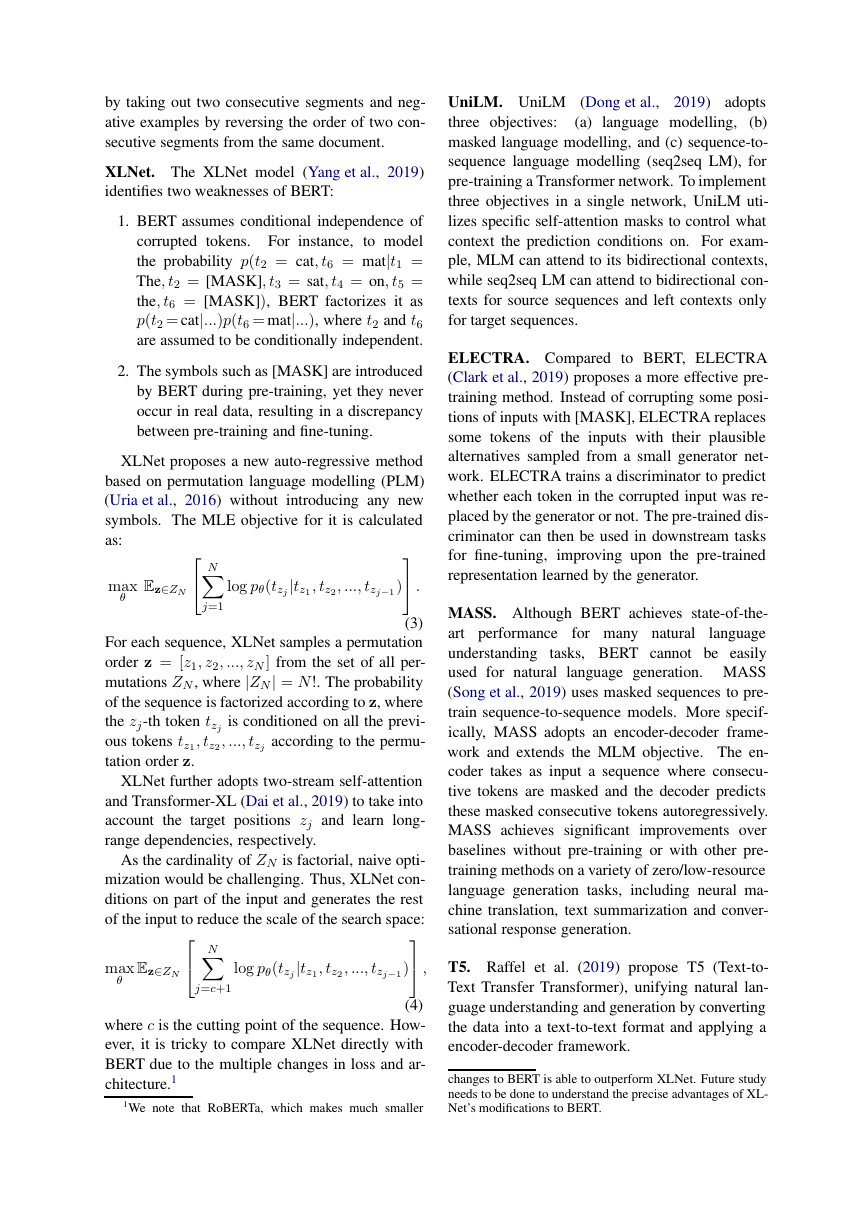

The work in the following sections improves

over Dai and Le (2015) and Ramachandran et al.

(2016) with new architectures (e.g. Transformer),

larger datasets, and new pre-training objectives. A

summary of the models and the pre-training objectives is shown in Tables 1 and 2.

ELMo. The ELMo model (Peters et al., 2018)

generalizes traditional word embeddings by ex-

tracting context-dependent representations from a

bidirectional language model. A forward L-layer

LSTM and a backward L-layer LSTM are applied

to encode the left and right contexts, respectively.

At each layer j, the contextualized representations

are the concatenation of the left-to-right and right-

to-left representations, obtaining N hidden repre-

sentations, (h1,j , h2,j , ..., hN,j ), for a sequence of

length N .

To use ELMo in downstream tasks, the (L + 1)-

layer representations (including the global word

embedding) for each token k are aggregated as:

$$\mathrm{ELMo}_k^{\mathrm{task}} = \gamma^{\mathrm{task}} \sum_{j=0}^{L} s_j^{\mathrm{task}} h_{k,j}, \tag{2}$$

where $s^{\mathrm{task}}$ are layer-wise weights normalized by the softmax, used to linearly combine the $(L + 1)$-layer representations of the token $k$, and $\gamma^{\mathrm{task}}$ is a task-specific constant.
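The aggregation in Equation 2 can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names `softmax` and `elmo_combine` are hypothetical, vectors are plain lists, and the raw (pre-softmax) layer weights are passed in explicitly rather than learned.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of raw scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]

def elmo_combine(layer_vectors, raw_weights, gamma):
    """Combine the (L + 1) layer vectors of one token as in Eq. 2.

    layer_vectors: list of L + 1 vectors h_{k,0..L} (same dimension each)
    raw_weights:   list of L + 1 unnormalized layer scores (softmax gives s_j)
    gamma:         task-specific scalar
    """
    s = softmax(raw_weights)
    dim = len(layer_vectors[0])
    return [
        gamma * sum(s[j] * layer_vectors[j][d] for j in range(len(layer_vectors)))
        for d in range(dim)
    ]

# Three layers (j = 0, 1, 2) of a 2-dimensional toy representation.
combined = elmo_combine([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0], gamma=3.0)
```

With equal raw weights the softmax assigns 1/3 to each layer, so the result is simply the scaled average of the layer vectors.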

Given a pre-trained ELMo, it is straightforward

to incorporate it into a task-specific architecture

for improving the performance. As most super-

vised models use global word representations xk

in their lowest layers, these representations can

be concatenated with their corresponding context-dependent representations $\mathrm{ELMo}_k^{\mathrm{task}}$, obtaining

| Method | Architecture | Encoder | Decoder | Objective | Dataset |
|---|---|---|---|---|---|
| ELMo | LSTM | ✗ | ✓ | LM | 1B Word Benchmark |
| GPT | Transformer | ✗ | ✓ | LM | BookCorpus |
| GPT2 | Transformer | ✗ | ✓ | LM | Web pages starting from Reddit |
| BERT | Transformer | ✓ | ✗ | MLM & NSP | BookCorpus & Wiki |
| RoBERTa | Transformer | ✓ | ✗ | MLM | BookCorpus, Wiki, CC-News, OpenWebText, Stories |
| ALBERT | Transformer | ✓ | ✗ | MLM & SOP | Same as RoBERTa and XLNet |
| UniLM | Transformer | ✓ | ✗ | LM, MLM, seq2seq LM | Same as BERT |
| ELECTRA | Transformer | ✓ | ✗ | Discriminator (o/r) | Same as XLNet |
| XLNet | Transformer | ✗ | ✓ | PLM | BookCorpus, Wiki, Giga5, ClueWeb, Common Crawl |
| XLM | Transformer | ✓ | ✓ | CLM, MLM, TLM | Wiki, parallel corpora (e.g. MultiUN) |
| MASS | Transformer | ✓ | ✓ | Span Mask | WMT News Crawl |
| T5 | Transformer | ✓ | ✓ | Text Infilling | Colossal Clean Crawled Corpus |
| BART | Transformer | ✓ | ✓ | Text Infilling & Sent Shuffling | Same as RoBERTa |

Table 1: A comparison of popular pre-trained models.

| Objective | Inputs | Targets |
|---|---|---|
| LM | [START] | I am happy to join with you today |
| MLM | I am [MASK] to join with you [MASK] | happy today |
| NSP | Sent1 [SEP] Next Sent or Sent1 [SEP] Random Sent | Next Sent/Random Sent |
| SOP | Sent1 [SEP] Sent2 or Sent2 [SEP] Sent1 | in order/reversed |
| Discriminator (o/r) | I am thrilled to study with you today | o o r o r o o o |
| PLM | happy join with | today am I to you |
| seq2seq LM | I am happy to | join with you today |
| Span Mask | I am [MASK] [MASK] [MASK] with you today | happy to join |
| Text Infilling | I am [MASK] with you today | happy to join |
| Sent Shuffling | today you am I join with happy to | I am happy to join with you today |
| TLM | How [MASK] you [SEP] [MASK] vas-tu | are Comment |

Table 2: Pre-training objectives and their input-output formats.

$[x_k; \mathrm{ELMo}_k^{\mathrm{task}}]$, before feeding them to higher layers.

The effectiveness of ELMo is evaluated on six

NLP problems, including question answering, tex-

tual entailment and sentiment analysis.

GPT, GPT2, and Grover. GPT (Radford et al.,

2018) adopts a two-stage learning paradigm: (a)

unsupervised pre-training using a language mod-

elling objective and (b) supervised fine-tuning.

The goal is to learn universal representations trans-

ferable to a wide range of downstream tasks.

To this end, GPT uses the BookCorpus dataset

(Zhu et al., 2015), which contains more than 7,000

books from various genres, for training the lan-

guage model.

The Transformer architecture

(Vaswani et al., 2017) is used to implement the

language model, which has been shown to bet-

ter capture global dependencies from the inputs

compared to its alternatives, e.g. recurrent net-

works, and perform strongly on a range of se-

quence learning tasks, such as machine transla-

tion (Vaswani et al., 2017) and document gener-

ation (Liu et al., 2018). To use GPT on inputs

with multiple sequences during fine-tuning, GPT

applies task-specific input adaptations motivated

by traversal-style approaches (Rocktäschel et al.,

2015). These approaches pre-process each text

input as a single contiguous sequence of tokens

through special tokens including [START] (the

start of a sequence), [DELIM] (delimiting two se-

quences from the text input) and [EXTRACT] (the

end of a sequence). GPT outperforms task-specific

architectures in 9 out of 12 tasks studied with a pre-

trained Transformer.

GPT2 (Radford et al., 2019) mainly follows the

architecture of GPT and trains a language model

on a dataset as large and diverse as possible to

learn from varied domains and contexts. To do

so, Radford et al. (2019) create a new dataset of

millions of web pages named WebText, by scrap-

ing outbound links from Reddit. The authors ar-

gue that a language model trained on large-scale

unlabelled corpora begins to learn some common

supervised NLP tasks, such as question answer-

ing, machine translation and summarization, with-

out any explicit supervision signal. To validate

this, GPT2 is tested on ten datasets (e.g. Chil-

dren’s Book Test (Hill et al., 2015), LAMBADA

(Paperno et al., 2016) and CoQA (Reddy et al.,


2019)) in a zero-shot setting. GPT2 performs

strongly on some tasks. For instance, when con-

ditioned on a document and questions, GPT2

reaches an F1-score of 55 on the CoQA dataset

without using any labelled training data. This

matches or outperforms the performance of 3 out

of 4 baseline systems. As GPT2 divides texts into

bytes and uses BPE (Sennrich et al., 2016) to build

up its vocabulary (instead of using characters or

words, as in previous work), it is unclear if the im-

proved performance comes from the model or the

new input representation.

Grover (Zellers et al., 2019) creates a news

dataset, RealNews, from Common Crawl and pre-

trains a language model for generating realistic-

looking fake news that is conditioned on meta-

data including domains, dates, authors and head-

lines. They further study discriminators that can

be used to detect fake news. The best defense

against Grover turns out to be Grover itself, which

sheds light on the importance of releasing trained

models for detecting fake news.

BERT. ELMo (Peters et al., 2018) concatenates

representations

from the forward and back-

ward LSTMs without considering the interac-

tions between the left and right contexts. GPT

(Radford et al., 2018) and GPT2 (Radford et al.,

2019) use a left-to-right decoder, where every token can only attend to its left context. These architectures are sub-optimal for token-level and sentence-level tasks, e.g. named entity recognition and sentiment analysis, as it is crucial to incorporate contexts from

both directions.

BERT proposes a masked language modelling

(MLM) objective, where some of the tokens of an

input sequence are randomly masked, and the ob-

jective is to predict these masked positions taking

the corrupted sequence as input. BERT applies

a Transformer encoder to attend to bi-directional

contexts during pre-training.

In addition, BERT

uses a next-sentence-prediction (NSP) objective.

Given two input sentences, NSP predicts whether

the second sentence is the actual next sentence of

the first sentence. The NSP objective aims to improve performance on tasks that require reasoning over sentence pairs, such as question answering and natural language inference.
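The MLM corruption step described above can be sketched as follows. This is a simplified illustration, not BERT's exact recipe: the helper name `mask_tokens` is hypothetical, the default rate of 0.15 follows the masking rate reported in the BERT paper, and every selected token is replaced by [MASK] (the original recipe additionally keeps or randomly replaces a fraction of the selected tokens).

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, targets) for masked language modelling.

    targets maps each masked position to the original token that the
    model must predict from the corrupted sequence.
    """
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(MASK)   # hide the token from the encoder input
            targets[i] = token       # remember what should be predicted
        else:
            corrupted.append(token)
    return corrupted, targets

sentence = "I am happy to join with you today".split()
corrupted, targets = mask_tokens(sentence, mask_prob=0.5, seed=1)
```

The loss is computed only at the positions stored in `targets`; all other positions serve as bidirectional context.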

Similar to GPT, BERT uses special tokens to

obtain a single contiguous sequence for each in-

put sequence. Specifically, the first token is al-

ways a special classification token [CLS], and sen-

tence pairs are separated using a special token

[SEP]. BERT adopts a pre-training followed by

fine-tuning scheme. The final hidden state of

[CLS] is used for sentence-level tasks and the final

hidden state of each token is used for token-level

tasks. BERT obtains new state-of-the-art results

on eleven natural language processing tasks, e.g.

improving the GLUE (Wang et al., 2018) score to

80.5%.

Similar to GPT2,

it is unclear exactly why

BERT improves over prior work as it uses differ-

ent objectives, datasets (Wikipedia and BookCor-

pus) and architectures compared to previous meth-

ods. For partial insight on this, we refer the read-

ers to (Raffel et al., 2019) for a controlled compar-

ison between unidirectional and bidirectional mod-

els, traditional language modelling and masked

language modelling using the same datasets.

BERT variants. Recent work further studies

and improves the objective and architecture of

BERT.

Instead of randomly masking tokens, ERNIE

(Zhang et al., 2019) incorporates knowledge mask-

ing strategies, including entity-level masking and

phrase-level masking.

SpanBERT (Joshi et al.,

2019) generalizes this idea to mask random spans,

without referring to external knowledge. Struct-

BERT (Wang et al., 2019b) proposes a word struc-

tural objective that randomly permutes the order

of 3-grams for reconstruction and a sentence struc-

tural objective that predicts the order of two con-

secutive segments.

RoBERTa (Liu et al., 2019c) makes a few

changes to the released BERT model and achieves

substantial improvements. The changes include:

(1) Training the model longer with larger batches

and more data; (2) Removing the NSP objective;

(3) Training on longer sequences; (4) Dynami-

cally changing the masked positions during pre-

training.

ALBERT (Lan et al., 2019) proposes

two

parameter-reduction techniques (factorized em-

bedding parameterization and cross-layer param-

eter sharing) to lower memory consumption and

speed up training. Furthermore, ALBERT argues

that the NSP objective lacks difficulty: because the negative examples are created by pairing segments from different documents, NSP mixes topic prediction and coherence prediction into a single task.

ALBERT instead uses a sentence-order prediction

(SOP) objective. SOP obtains positive examples


by taking out two consecutive segments and neg-

ative examples by reversing the order of two con-

secutive segments from the same document.
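A rough sketch of how SOP training pairs could be built from one document is shown below. The function name `make_sop_example` and the 50/50 split between positive and negative examples are assumptions made for illustration; the text above only specifies that positives are consecutive segments in order and negatives are the same segments reversed.

```python
import random

def make_sop_example(segments, rng):
    """Build one sentence-order-prediction example from a single document.

    Returns ((segment_a, segment_b), label) with label 1 for the original
    order and label 0 for the reversed order.
    """
    i = rng.randrange(len(segments) - 1)      # pick two consecutive segments
    first, second = segments[i], segments[i + 1]
    if rng.random() < 0.5:                    # assumed 50/50 positive/negative split
        return (first, second), 1             # in order
    return (second, first), 0                 # reversed

document = ["seg0", "seg1", "seg2", "seg3"]
pair, label = make_sop_example(document, random.Random(0))
```

Because both segments always come from the same document, the classifier cannot rely on topic cues and has to model coherence, which is the motivation given for replacing NSP.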

XLNet. The XLNet model (Yang et al., 2019)

identifies two weaknesses of BERT:

1. BERT assumes conditional independence of corrupted tokens. For instance, to model the probability $p(t_2 = \text{cat}, t_6 = \text{mat} \mid t_1 = \text{The}, t_2 = \text{[MASK]}, t_3 = \text{sat}, t_4 = \text{on}, t_5 = \text{the}, t_6 = \text{[MASK]})$, BERT factorizes it as $p(t_2 = \text{cat} \mid \ldots)\, p(t_6 = \text{mat} \mid \ldots)$, where $t_2$ and $t_6$ are assumed to be conditionally independent.

2. The symbols such as [MASK] are introduced

by BERT during pre-training, yet they never

occur in real data, resulting in a discrepancy

between pre-training and fine-tuning.

XLNet proposes a new auto-regressive method

based on permutation language modelling (PLM)

(Uria et al., 2016) without introducing any new

symbols. The MLE objective for it is calculated

as:

$$\max_{\theta} \; \mathbb{E}_{z \in Z_N} \left[ \sum_{j=1}^{N} \log p_\theta(t_{z_j} \mid t_{z_1}, t_{z_2}, \ldots, t_{z_{j-1}}) \right]. \tag{3}$$

For each sequence, XLNet samples a permutation order $z = [z_1, z_2, \ldots, z_N]$ from the set of all permutations $Z_N$, where $|Z_N| = N!$. The probability of the sequence is factorized according to $z$, where the $z_j$-th token $t_{z_j}$ is conditioned on all the previous tokens $t_{z_1}, t_{z_2}, \ldots, t_{z_{j-1}}$ according to the permutation order $z$.
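To make the permutation factorization concrete, the sketch below samples one order and lists, for each step, which token is predicted and which tokens it may condition on. The function name `permutation_factorization` is hypothetical, positions are 0-indexed for convenience, and the sketch only enumerates the conditioning sets; it does not implement XLNet's attention mechanism.

```python
import random

def permutation_factorization(tokens, rng):
    """Sample a permutation z and return (z, steps).

    Each step is (position, target_token, context_tokens), where the
    context holds the tokens that precede the target in the order z.
    """
    n = len(tokens)
    z = list(range(n))
    rng.shuffle(z)                                 # one sample from the N! orders
    steps = []
    for j in range(n):
        target = tokens[z[j]]
        context = [tokens[k] for k in z[:j]]       # t_{z_1}, ..., t_{z_{j-1}}
        steps.append((z[j], target, context))
    return z, steps

tokens = ["The", "cat", "sat", "on", "the", "mat"]
z, steps = permutation_factorization(tokens, random.Random(0))
```

The original token order of the input is unchanged; only the order in which the factors of the likelihood are taken differs, so a token can be predicted from context on both its left and its right.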

XLNet further adopts two-stream self-attention

and Transformer-XL (Dai et al., 2019) to take into

account the target positions zj and learn long-

range dependencies, respectively.

As the cardinality of ZN is factorial, naive opti-

mization would be challenging. Thus, XLNet con-

ditions on part of the input and generates the rest

of the input to reduce the scale of the search space:

$$\max_{\theta} \; \mathbb{E}_{z \in Z_N} \left[ \sum_{j=c+1}^{N} \log p_\theta(t_{z_j} \mid t_{z_1}, t_{z_2}, \ldots, t_{z_{j-1}}) \right], \tag{4}$$

where $c$ is the cutting point of the sequence. However, it is tricky to compare XLNet directly with BERT due to the multiple changes in loss and architecture.1

1 We note that RoBERTa, which makes much smaller changes to BERT, is able to outperform XLNet. Future study needs to be done to understand the precise advantages of XLNet’s modifications to BERT.

UniLM. UniLM (Dong et al., 2019) adopts three objectives: (a) language modelling, (b) masked language modelling, and (c) sequence-to-sequence language modelling (seq2seq LM), for pre-training a Transformer network. To implement three objectives in a single network, UniLM utilizes specific self-attention masks to control what context the prediction conditions on. For example, MLM can attend to its bidirectional contexts, while seq2seq LM can attend to bidirectional contexts for source sequences and left contexts only for target sequences.

ELECTRA. Compared to BERT, ELECTRA (Clark et al., 2019) proposes a more effective pre-training method. Instead of corrupting some positions of inputs with [MASK], ELECTRA replaces some tokens of the inputs with their plausible alternatives sampled from a small generator network. ELECTRA trains a discriminator to predict whether each token in the corrupted input was replaced by the generator or not. The pre-trained discriminator can then be used in downstream tasks for fine-tuning, improving upon the pre-trained representation learned by the generator.

MASS. Although BERT achieves state-of-the-art performance for many natural language understanding tasks, BERT cannot be easily used for natural language generation. MASS (Song et al., 2019) uses masked sequences to pre-train sequence-to-sequence models. More specifically, MASS adopts an encoder-decoder framework and extends the MLM objective. The encoder takes as input a sequence where consecutive tokens are masked and the decoder predicts these masked consecutive tokens autoregressively. MASS achieves significant improvements over baselines without pre-training or with other pre-training methods on a variety of zero/low-resource language generation tasks, including neural machine translation, text summarization and conversational response generation.

T5. Raffel et al. (2019) propose T5 (Text-to-Text Transfer Transformer), unifying natural language understanding and generation by converting the data into a text-to-text format and applying an encoder-decoder framework.

T5 introduces a new pre-training dataset, Colos-

sal Clean Crawled Corpus by cleaning the web

pages from Common Crawl. T5 also system-

atically compares previous methods in terms of

pre-training objectives, architectures, pre-training

datasets, and transfer approaches. T5 adopts a

text infilling objective (where spans of text are re-

placed with a single mask token), longer training,

multi-task pre-training on GLUE or SuperGLUE,

fine-tuning on each individual GLUE and Super-

GLUE tasks, and beam search.

For fine-tuning, to convert the input data into a

text-to-text framework, T5 utilizes the token vo-

cabulary of the decoder as the prediction labels.

For example, the tokens “entailment”, “contradic-

tion”, and “neutral” are used as the labels for nat-

ural language inference tasks. For the regression

task (e.g. STS-B (Cer et al., 2017)), T5 simply

rounds the scores to the nearest multiple of 0.2

and converts the results to literal string represen-

tations (e.g. 2.57 is converted to the string “2.6”).

T5 also adds a task-specific prefix to each input se-

quence to specify its task. For instance, T5 adds

the prefix “translate English to German” to each

input sequence like “That is good.” for English-to-

German translation datasets.
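The text-to-text conversion described above can be illustrated with two small helpers. Both function names are hypothetical, and the colon used to separate the prefix from the input is an assumption based on the examples shown in the T5 paper; the rounding follows the nearest-multiple-of-0.2 rule stated in the text.

```python
def to_text_to_text(prefix, text):
    """Prepend a task-specific prefix so the task is encoded in the input string."""
    return f"{prefix}: {text}"

def stsb_label(score):
    """Turn a regression score into a string label on a 0.2 grid.

    Note: exact ties are resolved by Python's built-in round().
    """
    rounded = round(score * 5) / 5      # nearest multiple of 0.2
    return f"{rounded:.1f}"

example_input = to_text_to_text("translate English to German", "That is good.")
example_label = stsb_label(2.57)
```

Here `example_label` is the string "2.6", matching the example in the text, and the model is trained to generate that string token by token like any other target sequence.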

BART. The BART model (Lewis et al., 2019) introduces additional noising functions beyond MLM for pre-training sequence-to-sequence models. First, the input sequence is corrupted using an arbitrary noising function. Then, the corrupted input is reconstructed by a Transformer network trained using teacher forcing (Williams and Zipser, 1989). BART evaluates a

wide variety of noising functions, including token

masking, token deletion, text infilling, document

rotation, and sentence shuffling (randomly shuf-

fling the word order of a sentence). The best per-

formance is achieved by using both sentence shuf-

fling and text infilling. BART matches the perfor-

mance of RoBERTa on GLUE and SQuAD and

achieves state-of-the-art performance on a variety

of text generation tasks.
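The difference between the Span Mask and Text Infilling rows of Table 2 is easy to miss, so the sketch below reproduces both on the table's example sentence. The function names and the explicit `start`/`length` arguments are hypothetical; how spans are sampled in the actual models is not covered here.

```python
MASK = "[MASK]"

def span_mask(tokens, start, length):
    """MASS-style corruption: every token in the span becomes its own [MASK]."""
    corrupted = tokens[:start] + [MASK] * length + tokens[start + length:]
    return corrupted, tokens[start:start + length]

def text_infill(tokens, start, length):
    """Text infilling: the whole span collapses into a single [MASK]."""
    corrupted = tokens[:start] + [MASK] + tokens[start + length:]
    return corrupted, tokens[start:start + length]

sentence = "I am happy to join with you today".split()
masked, masked_target = span_mask(sentence, start=2, length=3)
infilled, infill_target = text_infill(sentence, start=2, length=3)
```

Both variants share the target "happy to join", but with text infilling the model must also infer how many tokens are missing, since the single mask hides the span length.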

3.2 Supervised Objectives

Pre-training on the ImageNet dataset (which has

supervision about the objects in images) before

fine-tuning on downstream tasks has become

the de facto standard in the computer vision

community. Motivated by the success of su-

pervised pre-training in computer vision, some

work (Conneau et al., 2017; McCann et al., 2017;

Subramanian et al., 2018) utilizes data-rich tasks

in NLP to learn transferable representations.

CoVe (McCann et al., 2017) shows that the rep-

resentations learned from machine translation are

transferable to downstream tasks. CoVe uses a

deep LSTM encoder from a sequence-to-sequence

model trained for machine translation to obtain

contextual embeddings. Empirical results show

that augmenting non-contextualized word repre-

sentations (Mikolov et al., 2013; Pennington et al.,

2014) with CoVe embeddings improves perfor-

mance over a wide variety of common NLP tasks,

such as sentiment analysis, question classifica-

tion, entailment, and question answering.

InferSent (Conneau et al., 2017) obtains contextualized representations from a pre-trained natural language inference model on SNLI. Subramanian et al. (2018) use multi-task learning to pre-train

a sequence-to-sequence model for obtaining gen-

eral representations, where the tasks include skip-

thought (Kiros et al., 2015), machine translation,

constituency parsing, and natural language infer-

ence.

4 Cross-lingual Polyglot Pre-training for Contextual Embeddings

Cross-lingual polyglot pre-training aims to learn joint multi-lingual representations, enabling

knowledge transfer from data-rich languages like

English to data-scarce languages like Romanian.

Based on whether joint

training and a shared

vocabulary are used, we divide previous work into

three categories.

Joint training & shared vocabulary. Artetxe

and Schwenk (2019) use a BiLSTM encoder-

decoder framework with a shared BPE vocabulary

for 93 languages. The framework is pre-trained

using parallel corpora, such as Europarl and

Tanzil. The contextual embeddings from the en-

coder are used to train classifiers using English

corpora for downstream tasks. As the embedding

space and the encoder are shared, the resultant

classifiers can be transferred to any of the 93 lan-

guages without further modification. Experiments

show that these classifiers achieve competitive per-

formance on cross-lingual natural language infer-

ence, cross-lingual document classification, and

parallel corpus mining.

Rosita (Mulcaire et al., 2019) pre-trains a lan-

guage model using text from different languages,


showing the benefits of polyglot learning on low-

resource languages.

Recently,

the authors of BERT developed a

multi-lingual BERT2 which is pre-trained using

the Wikipedia dump with more than 100 lan-

guages.

XLM (Lample and Conneau, 2019) uses three pre-training methods for learning cross-lingual language models: (1) Causal language modelling, where the model is trained to predict $p(t_i \mid t_1, t_2, \ldots, t_{i-1})$, (2) Masked language modelling, and (3) Translation language modelling

(TLM). Parallel corpora are used, and tokens in

both source and target sequences are masked for

learning cross-lingual association. XLM performs

strongly on cross-lingual classification, unsuper-

vised machine translation, and supervised ma-

chine translation. XLM-R (Conneau et al., 2019)

scales up XLM by training a Transformer-based

masked language model on one hundred lan-

guages, using more than two terabytes of filtered

CommonCrawl data. XLM-R shows that large-

scale multi-lingual pre-training leads to signifi-

cant performance gains for a wide range of cross-

lingual transfer tasks.

Joint training & separate vocabularies. Wu et al. (2019) study the emergence of cross-lingual structures in pre-trained multi-lingual language models. It is found that cross-lingual transfer is

possible even when there is no shared vocabulary

across the monolingual corpora, and there are uni-

versal latent symmetries in the embedding spaces

of different languages.

Separate training & separate vocabularies.

Artetxe et al. (2019) use a four-step method for

obtaining multi-lingual embeddings. Suppose we

have the monolingual sequences of two languages

L1 and L2: (1) Pre-training BERT with the vo-

cabulary of L1 using L1’s monolingual data. (2)

Replacing the vocabulary of L1 with the vocab-

ulary of L2 and training new vocabulary embed-

dings, while freezing the other parameters, using

L2’s monolingual data. (3) Fine-tuning the BERT

model for a downstream task using labeled data in

L1, while freezing L1’s vocabulary embeddings.

(4) Replacing the fine-tuned BERT with L2’s vo-

cabulary embeddings for zero-shot transfer tasks.

2 https://github.com/google-research/bert/blob/master/multilingual.md

5 Downstream Learning

Once learned, contextual embeddings have demon-

strated impressive performance when used down-

stream on various learning problems. Here we de-

scribe the ways in which contextual embeddings

are used downstream, the ways in which one can

avoid forgetting information in the embeddings

during downstream learning, and how they can be

specialized to multiple learning tasks.

5.1 Ways to Use Contextual Embeddings Downstream

There are three main ways to use pre-trained

contextual embeddings in downstream tasks: (1)

Feature-based methods, (2) Fine-tuning methods,

and (3) Adapter methods.

Feature-based. One example of a feature-based method is the one used by ELMo (Peters et al., 2018).

Specifically, as shown in equation 2, ELMo

freezes the weights of the pre-trained contextual

embedding model and forms a linear combina-

tion of its internal representations. The linearly-

combined representations are then used as fea-

tures for task-specific architectures. The benefit of

feature-based models is that they can use state-of-

the-art handcrafted architectures for specific tasks.

Fine-tuning. Fine-tuning works as follows:

starting with the weights of the pre-trained contex-

tual embedding model, fine-tuning makes small

adjustments to them in order to specialize them to

a specific downstream task. One stream of work

applies minimal changes to pre-trained models to

take full advantage of their parameters. The most

straightforward way is adding linear layers on

top of the pre-trained models (Devlin et al., 2018;

Lan et al., 2019). Another method (Radford et al.,

2019; Raffel et al., 2019) uses universal data

formats without introducing new parameters for

downstream tasks.

To apply pre-trained models to structurally different tasks, where task-specific architectures are used, as much of the model is initialized with pre-trained weights as possible. For instance, XLM (Lample and Conneau, 2019) applies two pre-trained monolingual language models to initialize the encoder and the decoder for machine translation, respectively, leaving only cross-attention weights randomly initialized.

Adapters. Adapters (Rebuffi et al., 2017; Stickland and Murray, 2019) are small modules added between layers of pre-trained models to

be trained in a multi-task learning setting. The

parameters of the pre-trained model are fixed

while tuning these adapter modules. Compared

to previous work that fine-tunes a separate

pre-trained model for each task, a model with

shared adapters for all tasks often requires fewer

parameters.
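The parameter saving claimed above comes down to simple arithmetic, sketched below. All numbers are hypothetical placeholders chosen for illustration (a 110M-parameter base model, 1M adapter parameters, 8 tasks), and the two helper names are not from any library.

```python
def params_separate_finetuning(base_params, num_tasks):
    """One fully fine-tuned copy of the pre-trained model per task."""
    return base_params * num_tasks

def params_with_adapters(base_params, adapter_params, num_tasks, shared=False):
    """One frozen pre-trained model plus adapter modules.

    shared=True counts a single adapter set used by all tasks;
    shared=False counts one adapter set per task.
    """
    adapter_sets = 1 if shared else num_tasks
    return base_params + adapter_params * adapter_sets

full = params_separate_finetuning(110_000_000, num_tasks=8)
per_task = params_with_adapters(110_000_000, 1_000_000, num_tasks=8)
shared = params_with_adapters(110_000_000, 1_000_000, num_tasks=8, shared=True)
```

Under these assumed sizes, separate fine-tuning stores 880M parameters, per-task adapters 118M, and a single shared adapter set 111M, because the frozen base model is stored only once.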

5.2 Countering Catastrophic Forgetting

Learning on downstream tasks is prone to overwriting the information from pre-trained models, a problem widely known as catastrophic forgetting (McCloskey and Cohen, 1989;

d’Autume et al., 2019). Previous work combats

this by (1) Freezing layers, (2) Using adaptive

learning rates, and (3) Regularization.

Freezing layers. Motivated by layer-wise train-

ing of neural networks (Hinton et al., 2006), train-

ing certain layers while freezing others can poten-

tially reduce forgetting during fine-tuning. Differ-

ent layer-wise tuning schedules have been studied.

Long et al. (2015) freeze all layers except the top

layer. Felbo et al. (2017) use “chain-thaw”, which

sequentially unfreezes and fine-tunes a layer at a

time. Howard and Ruder (2018) gradually un-

freeze all layers one by one from top to bottom.

Chronopoulou et al. (2019) apply a three-stage fine-tuning schedule: (a) randomly-initialized parameters are updated for n epochs, (b) the pre-trained parameters (except word embeddings) are then fine-tuned, and (c) finally, all parameters are fine-tuned.
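To make the top-to-bottom schedule concrete, the following sketch returns the set of trainable layers at a given epoch under gradual unfreezing. Unfreezing exactly one additional layer per epoch is an assumption made for illustration, and the function name is hypothetical.

```python
def gradual_unfreeze(num_layers, epoch):
    """Return indices of trainable layers at `epoch` (0-based).

    Layer 0 is the bottom layer and layer num_layers - 1 is the top layer.
    One more layer is unfrozen per epoch, starting from the top.
    """
    num_trainable = min(num_layers, epoch + 1)
    return list(range(num_layers - num_trainable, num_layers))

# Schedule for a hypothetical 4-layer model over 5 epochs.
schedule = [gradual_unfreeze(4, e) for e in range(5)]
```

Freezing all layers except the top layer corresponds to keeping the `epoch = 0` set for the whole run.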

Adaptive learning rates. Another way to mitigate catastrophic forgetting is to use adaptive learning rates. Since the lower layers of pre-trained models are believed to capture general language knowledge (Tenney et al., 2019a), Howard and Ruder (2018) use lower learning rates for lower layers when fine-tuning.
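A simple way to realize layer-dependent learning rates is to divide the top layer's rate by a constant factor for each layer below it. The geometric form, the function name, and the values `top_lr` and `decay` below are assumptions for illustration rather than settings taken from the cited work.

```python
def layerwise_learning_rates(num_layers, top_lr, decay):
    """Learning rate for layer l (0 = bottom): top_lr / decay ** (num_layers - 1 - l)."""
    return [top_lr / decay ** (num_layers - 1 - l) for l in range(num_layers)]

# Hypothetical 4-layer model: each lower layer gets half the rate of the one above.
rates = layerwise_learning_rates(num_layers=4, top_lr=1e-3, decay=2.0)
```

The bottom layer, assumed to hold the most general knowledge, receives the smallest updates.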

Regularization. Regularization limits the fine-

tuned parameters to be close to the pre-trained pa-

rameters. Wiese et al.

(2017) minimize the Eu-

clidean distance between the fine-tuned parame-

ters and pre-trained parameters. Kirkpatrick et al.

(2017) use the Fisher information matrix to pro-

tect the weights that are identified as essential for

pre-trained models.
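Both regularizers can be sketched as penalty terms added to the task loss. The snippet assumes a squared Euclidean distance and a diagonal Fisher approximation; the parameter vectors, Fisher values, and the weight `lam` are hypothetical.

```python
def l2_penalty(theta, theta_pre):
    """Squared Euclidean distance between fine-tuned and pre-trained parameters."""
    return sum((t - p) ** 2 for t, p in zip(theta, theta_pre))

def fisher_penalty(theta, theta_pre, fisher):
    """Fisher-weighted distance: parameters with large Fisher values are held closer."""
    return sum(f * (t - p) ** 2 for f, t, p in zip(fisher, theta, theta_pre))

theta_pre = [1.0, -2.0, 0.5]  # hypothetical pre-trained parameters
theta = [1.5, -2.0, 0.0]      # hypothetical parameters during fine-tuning
fisher = [4.0, 1.0, 0.1]      # hypothetical diagonal Fisher estimates

lam = 0.1         # assumed regularization strength
task_loss = 0.7   # placeholder for the downstream task loss

loss_l2 = task_loss + lam * l2_penalty(theta, theta_pre)
loss_fisher = task_loss + lam * fisher_penalty(theta, theta_pre, fisher)
```

In this example the first and third parameters move by the same amount, but the Fisher-weighted penalty charges far more for the first one because its Fisher value is larger.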

5.3 Multi-task Fine-tuning

Multi-task learning on downstream tasks (Liu et al., 2019b; Wang et al., 2019a; Jozefowicz et al., 2016) obtains general representations across tasks and achieves strong performance on each individual task.

MT-DNN (Liu et al., 2019b) fine-tunes BERT on all the GLUE tasks, improving the GLUE benchmark score to 82.7%. MT-DNN also demonstrates

that the representations from multi-task learning

obtain better performance on domain adaptation

compared to BERT.

Wang et al.

(2019a) investigate further, non-

GLUE tasks, such as skip-thought and Reddit re-

sponse generation, for multi-task learning.

T5 (Raffel et al., 2019) studies various settings

of multi-task learning and finds that using multi-

task learning before fine-tuning on each task per-

forms the best.

6 Model Compression

As many pre-trained language models have a prohibitive memory footprint and latency, it is a challenging task to deploy them in resource-constrained environments. To address this,

model compression (Cheng et al., 2017), which

has gained popularity in recent years for shrinking

large neural networks, has been investigated for

compressing contextual embedding models. Work

on compressing language models utilizes (1) Low-

rank approximation, (2) Knowledge distillation,

and (3) Weight quantization, to make them usable

in embedded systems and edge devices.

Low-rank approximation. Methods that learn low-rank approximations seek to compress the

full-rank model weight matrices into low-rank

matrices, thereby reducing the effective number

of model parameters. As the embedding matri-

ces usually account for a large portion of model

parameters (e.g. 21% for $\text{BERT}_{\text{Base}}$), ALBERT (Lan et al., 2019) approximates the embedding matrix $E \in \mathbb{R}^{V \times d}$ as the product of two smaller matrices, $E_1 \in \mathbb{R}^{V \times d'}$ and $E_2 \in \mathbb{R}^{d' \times d}$, where $d' \ll d$.
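The saving from this factorization can be checked by counting parameters: the full matrix has V·d entries, while the two factors together have V·d′ + d′·d. The sizes below are hypothetical round numbers chosen for illustration, and the tiny matrices only demonstrate how a single embedding row is recovered.

```python
def embedding_params(V, d):
    """Parameter count of a full V x d embedding matrix."""
    return V * d

def factorized_embedding_params(V, d, d_prime):
    """Parameter count of E1 (V x d') plus E2 (d' x d)."""
    return V * d_prime + d_prime * d

def lookup(E1, E2, token_id):
    """Embedding of one token under the factorization: row token_id of E1, times E2."""
    row = E1[token_id]
    return [sum(row[k] * E2[k][j] for k in range(len(row)))
            for j in range(len(E2[0]))]

V, d, d_prime = 30000, 768, 128  # hypothetical sizes with d' much smaller than d
full = embedding_params(V, d)
factorized = factorized_embedding_params(V, d, d_prime)

# Tiny example with V = 3, d' = 2, d = 4.
E1 = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
E2 = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
vec = lookup(E1, E2, 1)
```

Under these assumed sizes the factorized form uses roughly one sixth of the parameters of the full embedding matrix.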

Knowledge distillation. A method called ‘knowledge distillation’ was proposed by Hinton et al. (2015), where the ‘knowledge’ encoded

in a teacher network is transferred to a student

network. Hinton et al. (2015) use the soft target

probabilities, output by the teacher network, to
