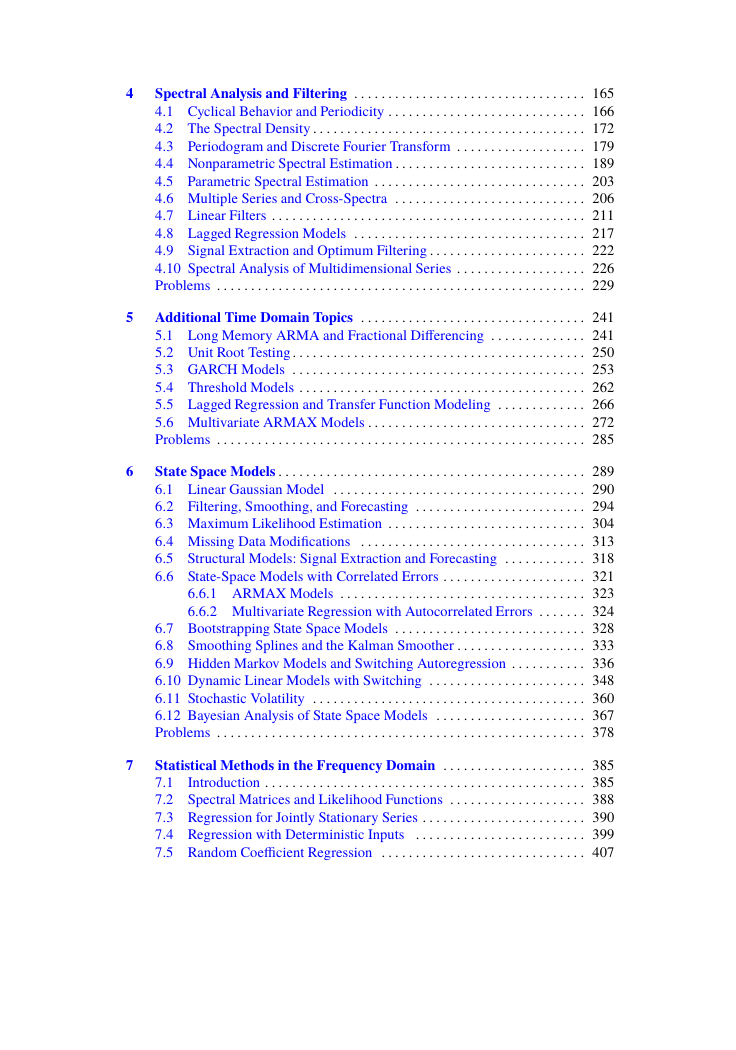

Contents

1 Characteristics of Time Series

1.1 The Nature of Time Series Data

1.2 Time Series Statistical Models

1.3 Measures of Dependence

1.4 Stationary Time Series

1.5 Estimation of Correlation

1.6 Vector-Valued and Multidimensional Series

Problems

2 Time Series Regression & Exploratory Data Analysis

2.1 Classical Regression in the Time Series Context

2.2 Exploratory Data Analysis

2.3 Smoothing in the Time Series Context

Problems

3 ARIMA Models

3.1 Autoregressive Moving Average Models

3.2 Difference Equations

3.3 Autocorrelation and Partial Autocorrelation

3.4 Forecasting

3.5 Estimation

3.6 Integrated Models for Nonstationary Data

3.7 Building ARIMA Models

3.8 Regression with Autocorrelated Errors

3.9 Multiplicative Seasonal ARIMA Models

Problems

4 Spectral Analysis & Filtering

4.1 Cyclical Behavior and Periodicity

4.2 The Spectral Density

4.3 Periodogram and Discrete Fourier Transform

4.4 Nonparametric Spectral Estimation

4.5 Parametric Spectral Estimation

4.6 Multiple Series and Cross-Spectra

4.7 Linear Filters

4.8 Lagged Regression Models

4.9 Signal Extraction and Optimum Filtering

4.10 Spectral Analysis of Multidimensional Series

Problems

5 Additional Time Domain Topics

5.1 Long Memory ARMA and Fractional Differencing

5.2 Unit Root Testing

5.3 GARCH Models

5.4 Threshold Models

5.5 Lagged Regression and Transfer Function Modeling

5.6 Multivariate ARMAX Models

Problems

6 State Space Models

6.1 Linear Gaussian Model

6.2 Filtering, Smoothing, and Forecasting

6.3 Maximum Likelihood Estimation

6.4 Missing Data Modifications

6.5 Structural Models: Signal Extraction and Forecasting

6.6 State-Space Models with Correlated Errors

6.6.1 ARMAX Models

6.6.2 Multivariate Regression with Autocorrelated Errors

6.7 Bootstrapping State Space Models

6.8 Smoothing Splines and the Kalman Smoother

6.9 Hidden Markov Models and Switching Autoregression

6.10 Dynamic Linear Models with Switching

6.11 Stochastic Volatility

6.12 Bayesian Analysis of State Space Models

Problems

7 Statistical Methods in Frequency Domain

7.1 Introduction

7.2 Spectral Matrices and Likelihood Functions

7.3 Regression for Jointly Stationary Series

7.4 Regression with Deterministic Inputs

7.5 Random Coefficient Regression

7.6 Analysis of Designed Experiments

7.7 Discriminant and Cluster Analysis

7.8 Principal Components and Factor Analysis

7.9 The Spectral Envelope

Problems

Large Sample Theory

Convergence Modes

Central Limit Theorems

Mean & Autocorrelation Functions

Time Domain Theory

Hilbert Spaces & Projection Theorem

Causal Conditions for ARMA Models

Large Sample Distribution of AR Conditional Least Squares Estimators

The Wold Decomposition

Spectral Domain Theory

Spectral Representation Theorems

Large Sample Distribution of Smoothed Periodogram

Complex Multivariate Normal Distribution

Integration

Spectral Analysis as Principal Component Analysis

Parametric Spectral Estimation

R Supplement

First Things First

astsa

Start

Time Series Primer

References

Index
