Home Trees Indices Help

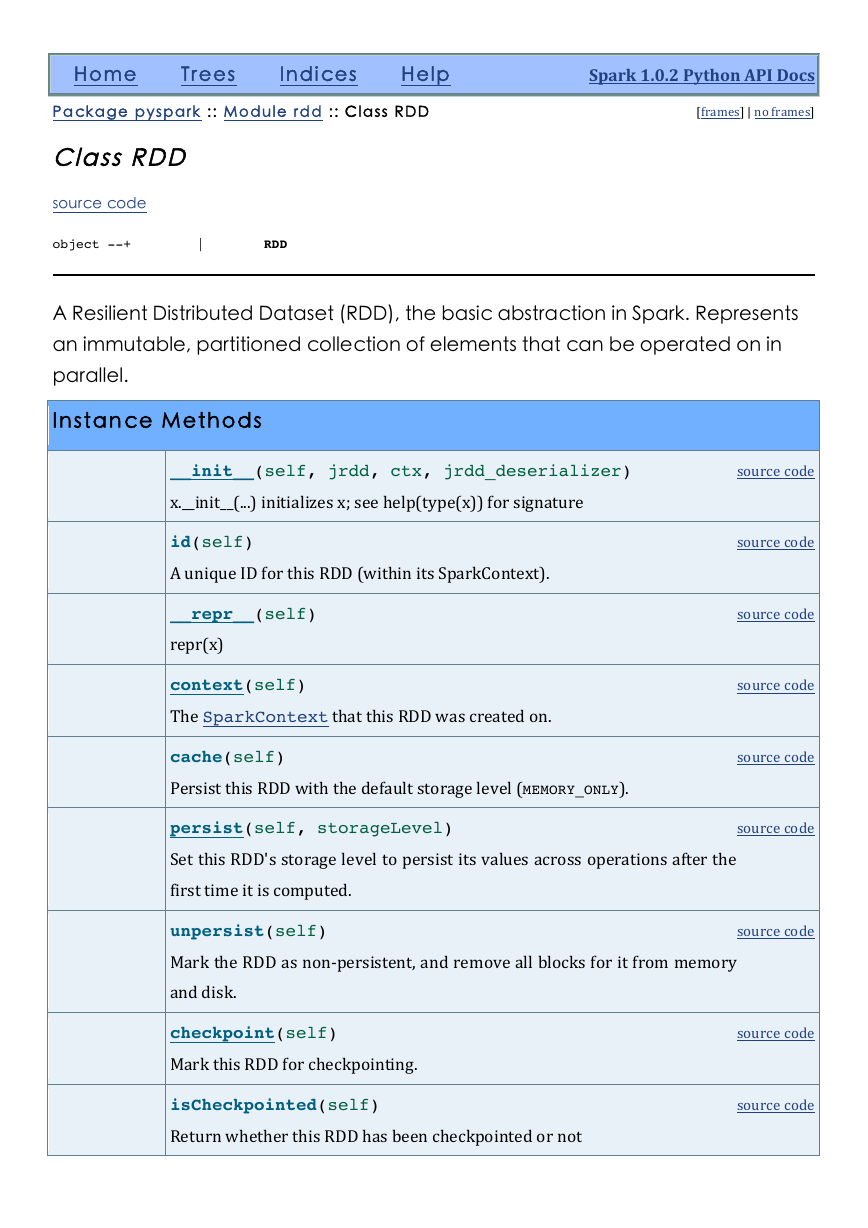

Package pyspark :: M odule rdd :: Class RDD

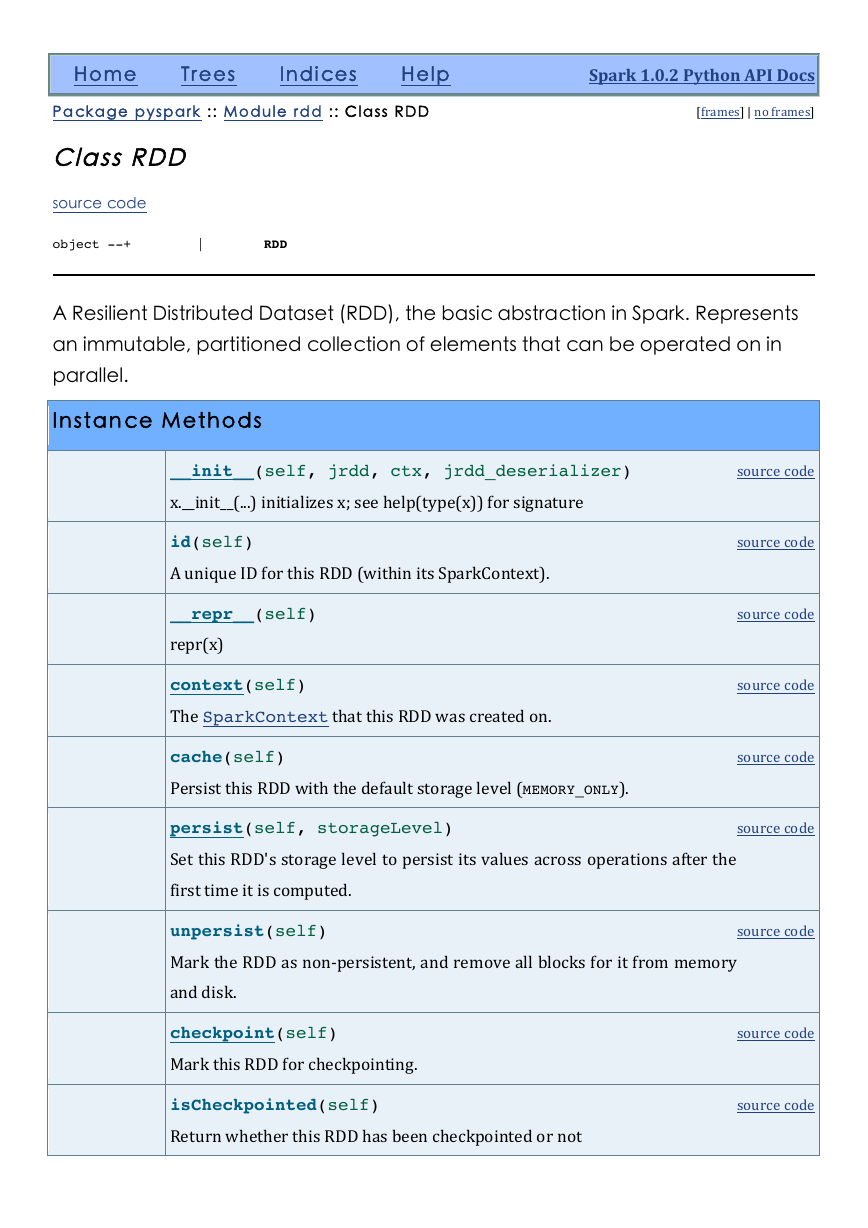

Class RDD

Spark 1.0.2 Python API Docs

Class hierarchy: object -> RDD

A Resilient Distributed Dataset (RDD), the basic abstraction in Spark. Represents an immutable, partitioned collection of elements that can be operated on in parallel.

Instance Methods

__init__(self, jrdd, ctx, jrdd_deserializer)

x.__init__(...) initializes x; see help(type(x)) for signature

id(self)

A unique ID for this RDD (within its SparkContext).

__repr__(self)

repr(x)

context(self)

The SparkContext that this RDD was created on.

cache(self)

Persist this RDD with the default storage level (MEMORY_ONLY).

persist(self, storageLevel)

Set this RDD's storage level to persist its values across operations after the first time it is computed.

unpersist(self)

Mark the RDD as non-persistent, and remove all blocks for it from memory and disk.

checkpoint(self)

Mark this RDD for checkpointing.

isCheckpointed(self)

Return whether this RDD has been checkpointed or not.

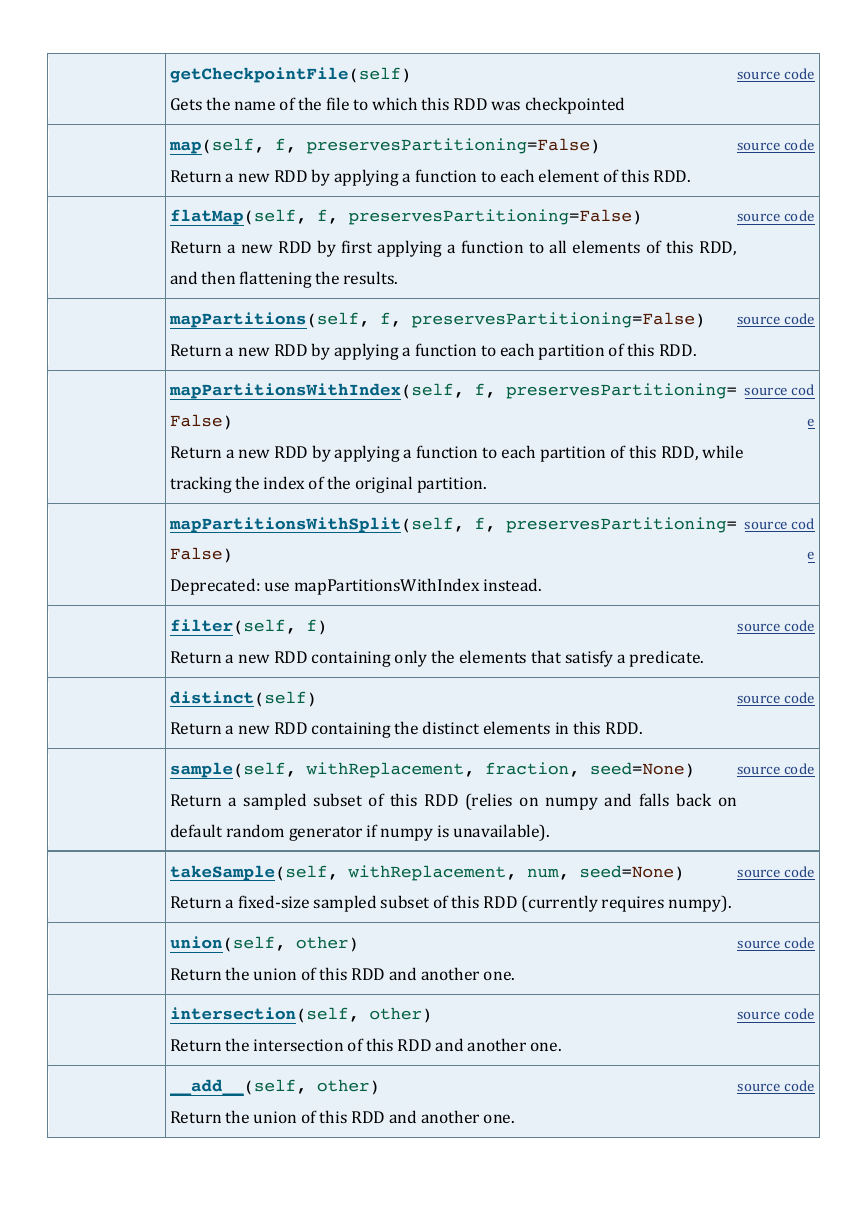

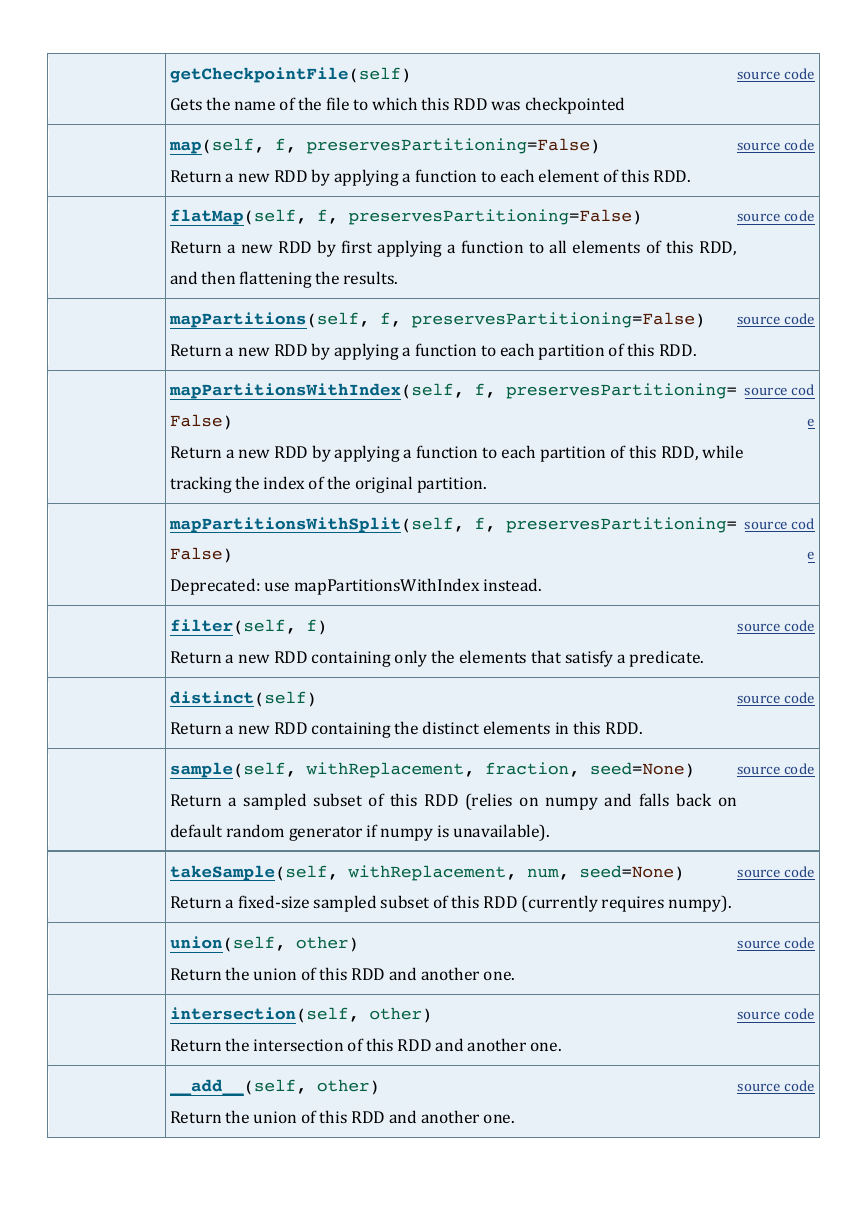

mapPartitionsWithSplit(self, f, preservesPartitioning=

mapPartitionsWithIndex(self, f, preservesPartitioning=

getCheckpointFile(self)

Gets

the

name

of

the

file

to

which

this

RDD

was

checkpointed

map(self, f, preservesPartitioning=False)

Return

a

new

RDD

by

applying

a

function

to

each

element

of

this

RDD.

flatMap(self, f, preservesPartitioning=False)

Return

a

new

RDD

by

first

applying

a

function

to

all

elements

of

this

RDD,

and

then

flattening

the

results.

mapPartitions(self, f, preservesPartitioning=False)

Return

a

new

RDD

by

applying

a

function

to

each

partition

of

this

RDD.

False)

Return

a

new

RDD

by

applying

a

function

to

each

partition

of

this

RDD,

while

tracking

the

index

of

the

original

partition.

False)

Deprecated:

use

mapPartitionsWithIndex

instead.

filter(self, f)

Return

a

new

RDD

containing

only

the

elements

that

satisfy

a

predicate.

distinct(self)

Return

a

new

RDD

containing

the

distinct

elements

in

this

RDD.

sample(self, withReplacement, fraction, seed=None)

Return

a

sampled

subset

of

this

RDD

(relies

on

numpy

and

falls

back

on

default

random

generator

if

numpy

is

unavailable).

takeSample(self, withReplacement, num, seed=None)

Return

a

fixed-‐size

sampled

subset

of

this

RDD

(currently

requires

numpy).

union(self, other)

Return

the

union

of

this

RDD

and

another

one.

intersection(self, other)

Return

the

intersection

of

this

RDD

and

another

one.

__add__(self, other)

Return

the

union

of

this

RDD

and

another

one.

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

source

code

�

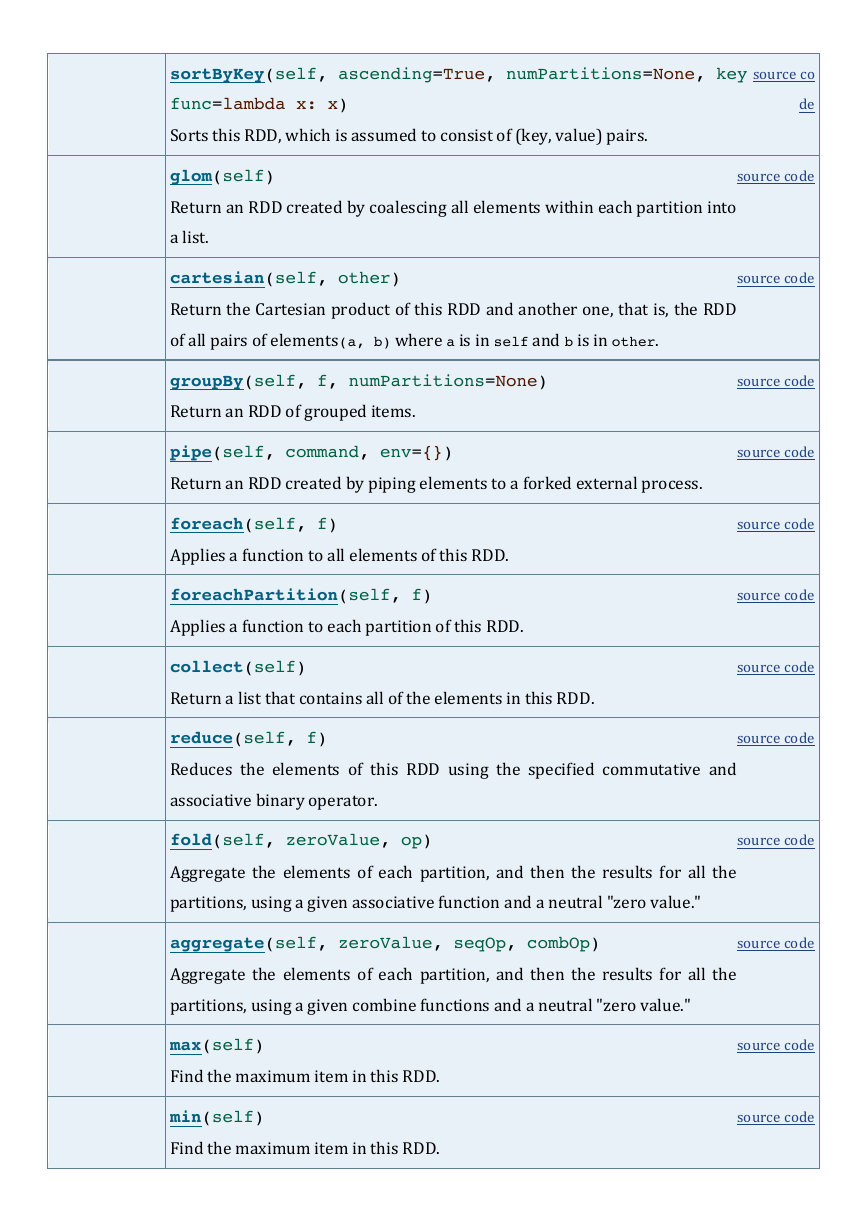

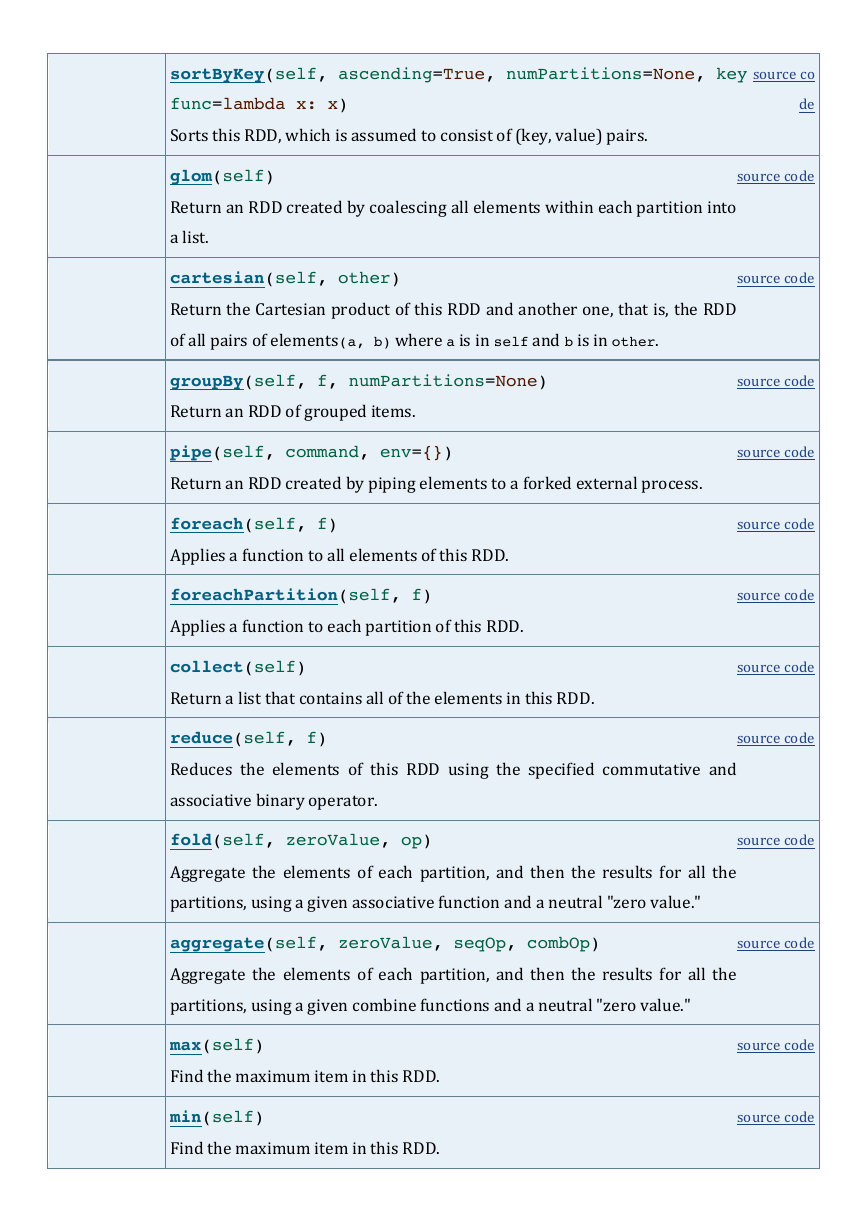

sortByKey(self, ascending=True, numPartitions=None, key

func=lambda x: x)

Sorts

this

RDD,

which

is

assumed

to

consist

of

(key,

value)

pairs.

glom(self)

Return

an

RDD

created

by

coalescing

all

elements

within

each

partition

into

a

list.

cartesian(self, other)

Return

the

Cartesian

product

of

this

RDD

and

another

one,

that

is,

the

RDD

of

all

pairs

of

elements(a, b)

where

a

is

in

self

and

b

is

in

other.

groupBy(self, f, numPartitions=None)

Return

an

RDD

of

grouped

items.

pipe(self, command, env={})

Return

an

RDD

created

by

piping

elements

to

a

forked

external

process.

foreach(self, f)

Applies

a

function

to

all

elements

of

this

RDD.

foreachPartition(self, f)

Applies

a

function

to

each

partition

of

this

RDD.

collect(self)

Return

a

list

that

contains

all

of

the

elements

in

this

RDD.

reduce(self, f)

Reduces

the

elements

of

this

RDD

using

the

specified

commutative

and

associative

binary

operator.

fold(self, zeroValue, op)

Aggregate

the

elements

of

each

partition,

and

then

the

results

for

all

the

partitions,

using

a

given

associative

function

and

a

neutral

"zero

value."

aggregate(self, zeroValue, seqOp, combOp)

Aggregate

the

elements

of

each

partition,

and

then

the

results

for

all

the

partitions,

using

a

given

combine

functions

and

a

neutral

"zero

value."
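The two-level aggregation described for fold and aggregate (first within each partition, then across the per-partition results) can be modeled in plain Python. This is a minimal sketch rather than Spark code: partitions are represented as ordinary lists, and the helper names `fold_partitions` and `aggregate_partitions` are hypothetical. It also illustrates why the zero value must be neutral: it is applied once per partition and once more when the partial results are merged.

```python
from functools import reduce


def fold_partitions(partitions, zero_value, op):
    """Plain-Python model of fold: fold each partition, then fold the partial results."""
    per_partition = [reduce(op, part, zero_value) for part in partitions]
    return reduce(op, per_partition, zero_value)


def aggregate_partitions(partitions, zero_value, seq_op, comb_op):
    """Plain-Python model of aggregate: seq_op inside a partition, comb_op across partitions."""
    per_partition = [reduce(seq_op, part, zero_value) for part in partitions]
    return reduce(comb_op, per_partition, zero_value)


# Three hypothetical partitions holding the elements 1..5.
partitions = [[1, 2], [3, 4], [5]]

# A neutral zero value (0 for addition) gives the plain sum.
total = fold_partitions(partitions, 0, lambda a, b: a + b)

# A non-neutral zero value is added once per partition and once in the final merge.
skewed = fold_partitions(partitions, 1, lambda a, b: a + b)

# aggregate can build a result of a different type, here a (sum, count) pair.
sum_count = aggregate_partitions(
    partitions,
    (0, 0),
    lambda acc, x: (acc[0] + x, acc[1] + 1),
    lambda a, b: (a[0] + b[0], a[1] + b[1]),
)

print(total, skewed, sum_count)
```

With three partitions, the non-neutral zero value 1 is counted four times in this model, which is why the second result differs from the true sum.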

max(self)

Find the maximum item in this RDD.

min(self)

Find the minimum item in this RDD.

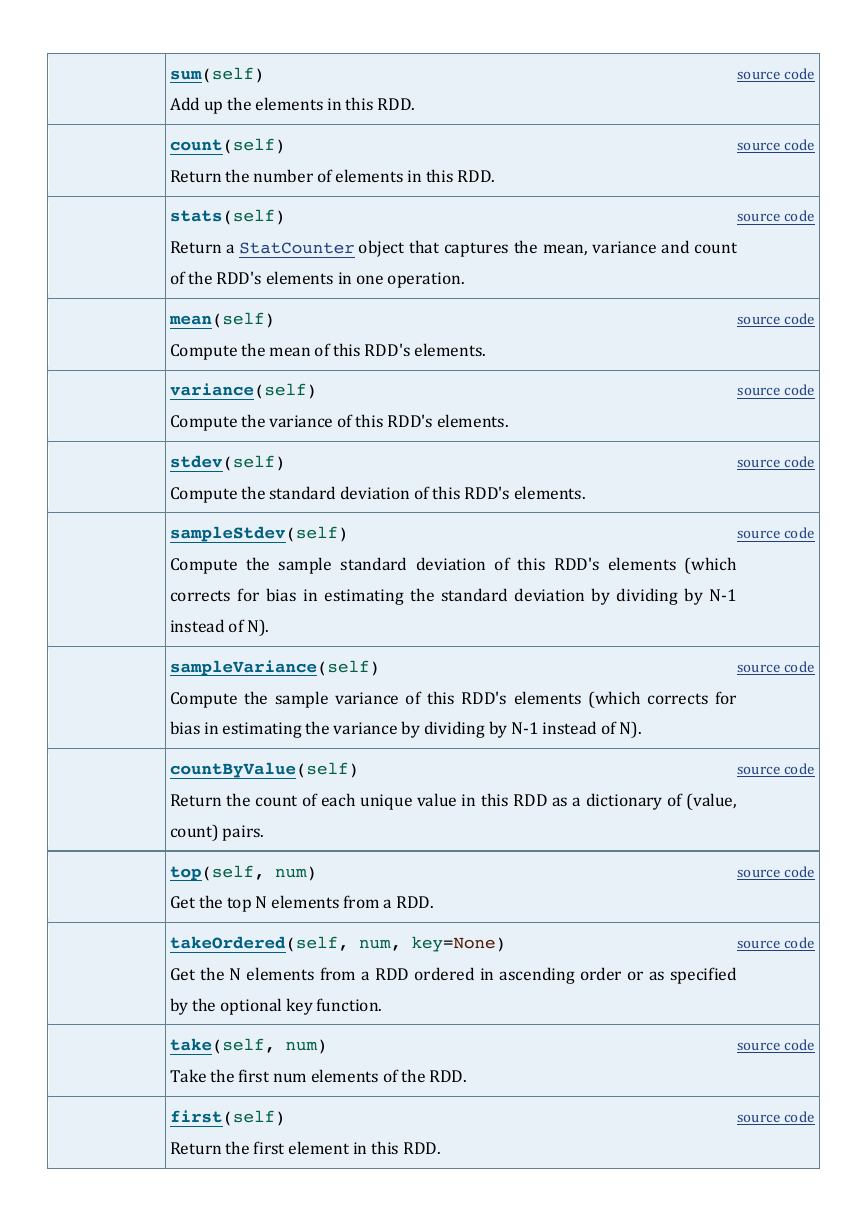

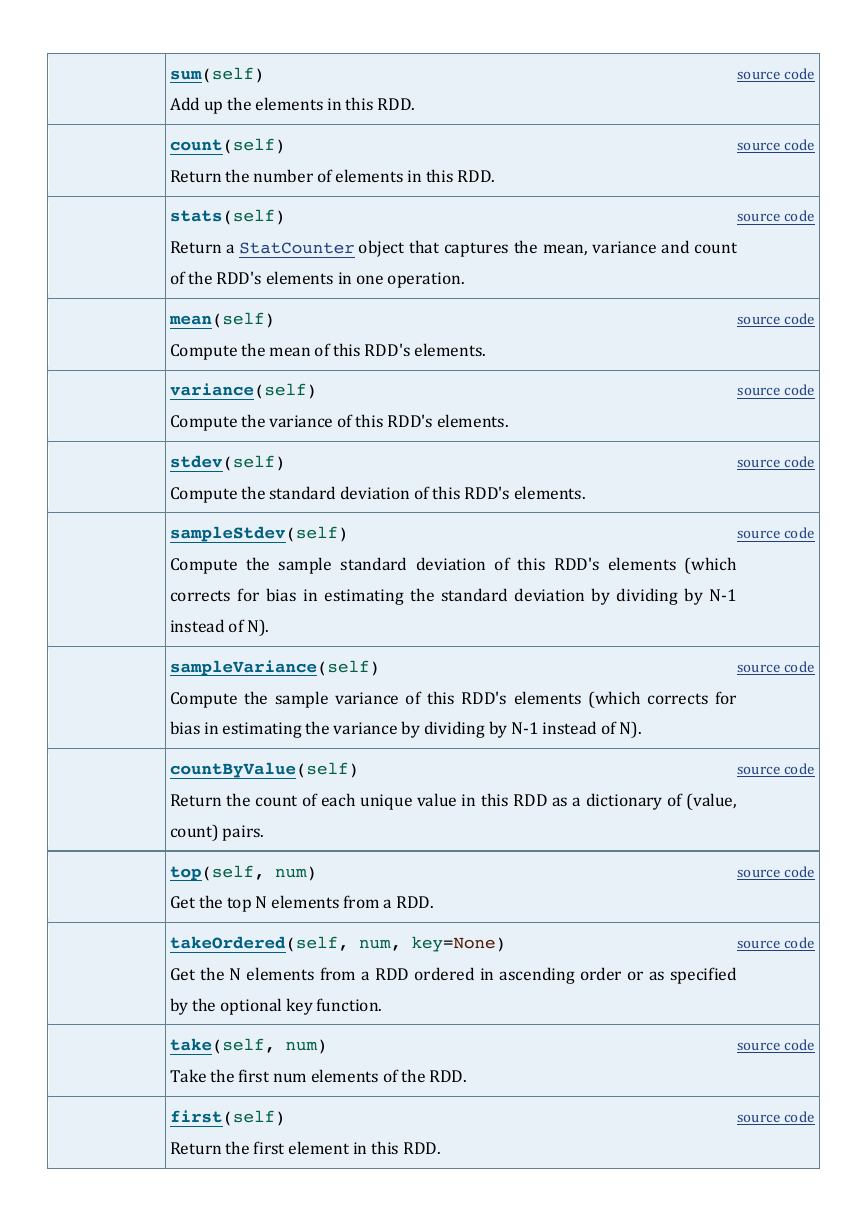

sum(self)

Add

up

the

elements

in

this

RDD.

count(self)

Return

the

number

of

elements

in

this

RDD.

stats(self)

Return

a

StatCounter

object

that

captures

the

mean,

variance

and

count

of

the

RDD's

elements

in

one

operation.

mean(self)

Compute

the

mean

of

this

RDD's

elements.

variance(self)

Compute

the

variance

of

this

RDD's

elements.

stdev(self)

Compute

the

standard

deviation

of

this

RDD's

elements.

sampleStdev(self)

Compute

the

sample

standard

deviation

of

this

RDD's

elements

(which

corrects

for

bias

in

estimating

the

standard

deviation

by

dividing

by

N-‐1

instead

of

N).

sampleVariance(self)

Compute

the

sample

variance

of

this

RDD's

elements

(which

corrects

for

bias

in

estimating

the

variance

by

dividing

by

N-‐1

instead

of

N).
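The difference between dividing by N and dividing by N-1 can be checked on a small list. The sketch below is plain Python, not Spark code, and the function names are hypothetical. It assumes, as the descriptions above imply, that variance and stdev divide by N while the sample variants divide by N-1.

```python
import math


def population_variance(values):
    """Mean squared deviation from the mean, dividing by N."""
    n = len(values)
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / n


def sample_variance(values):
    """Same sum of squared deviations, but dividing by N-1."""
    n = len(values)
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / (n - 1)


data = [1.0, 2.0, 3.0, 4.0]

pop_var = population_variance(data)    # squared deviations sum to 5.0, divided by 4
samp_var = sample_variance(data)       # the same 5.0, divided by 3
pop_std = math.sqrt(pop_var)
samp_std = math.sqrt(samp_var)

print(pop_var, samp_var, pop_std, samp_std)
```

The sample estimates are always at least as large as the population ones, and the gap shrinks as N grows.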

countByValue(self)

Return the count of each unique value in this RDD as a dictionary of (value, count) pairs.

top(self, num)

Get the top N elements from an RDD.

takeOrdered(self, num, key=None)

Get the N elements from an RDD ordered in ascending order or as specified by the optional key function.
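The ordering behaviour described for top and takeOrdered resembles what Python's `heapq` module does on a local list. The following is only a local analogy under that assumption, not the Spark implementation: `heapq.nlargest` stands in for top, and `heapq.nsmallest` with an optional `key` stands in for takeOrdered.

```python
import heapq

data = [10, 1, 2, 9, 3, 4, 5, 6, 7]

# Analogue of takeOrdered(3): the three smallest elements, ascending.
smallest = heapq.nsmallest(3, data)

# Analogue of takeOrdered(3, key=lambda x: -x): order by the key instead of the value.
by_key = heapq.nsmallest(3, data, key=lambda x: -x)

# Analogue of top(3): the three largest elements, largest first.
largest = heapq.nlargest(3, data)

print(smallest, by_key, largest)
```

Negating the value in the key function reverses the ordering, so in this local model the keyed call and the top analogue return the same elements.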

take(self, num)

Take the first num elements of the RDD.

first(self)

Return the first element in this RDD.

saveAsTextFile(self, path)

Save this RDD as a text file, using string representations of elements.

collectAsMap(self)

Return the key-value pairs in this RDD to the master as a dictionary.

keys(self)

Return an RDD with the keys of each tuple.

values(self)

Return an RDD with the values of each tuple.

reduceByKey(self, func, numPartitions=None)

Merge the values for each key using an associative reduce function.

reduceByKeyLocally(self, func)

Merge the values for each key using an associative reduce function, but return the results immediately to the master as a dictionary.

countByKey(self)

Count the number of elements for each key, and return the result to the master as a dictionary.

join(self, other, numPartitions=None)

Return an RDD containing all pairs of elements with matching keys in self and other.

leftOuterJoin(self, other, numPartitions=None)

Perform a left outer join of self and other.

rightOuterJoin(self, other, numPartitions=None)

Perform a right outer join of self and other.
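The three join variants differ only in how they treat keys that are missing on one side. The sketch below models them over plain Python lists of (key, value) pairs. It is an illustration rather than Spark code: the helper names are hypothetical, and the use of `None` for the missing side of an outer join is stated here as an assumption about the result shape.

```python
from collections import defaultdict


def group_values(pairs):
    """Collect the values of each key into a list."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def inner_join(left, right):
    """Emit (k, (v, w)) for every pairing of values that share the key k."""
    right_values = group_values(right)
    return [(k, (v, w)) for k, v in left for w in right_values.get(k, [])]


def left_outer_join(left, right):
    """Keep every left pair; a key absent from right is paired with None."""
    right_values = group_values(right)
    return [(k, (v, w)) for k, v in left for w in right_values.get(k, [None])]


def right_outer_join(left, right):
    """Keep every right pair; a key absent from left is paired with None."""
    left_values = group_values(left)
    return [(k, (v, w)) for k, w in right for v in left_values.get(k, [None])]


x = [("a", 1), ("b", 4)]
y = [("a", 2), ("a", 3), ("c", 5)]

inner = inner_join(x, y)
left = left_outer_join(x, y)
right = right_outer_join(x, y)

print(inner)
print(left)
print(right)
```

Key "b" appears only in `x` and key "c" only in `y`, so each survives in exactly one of the two outer joins and in neither the inner join.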

source

code

e_hash)

Return

a

copy

of

the

RDD

partitioned

using

the

specified

partitioner.

source

iners, numPartitions=None)

code

Generic

function

to

combine

the

elements

for

each

key

using

a

custom

set

of

aggregation

functions.

foldByKey(self, zeroValue, func, numPartitions=None)

Merge

the

values

for

each

key

using

an

associative

function

"func"

and

a

source

code

combineByKey(self, createCombiner, mergeValue, mergeComb

partitionBy(self, numPartitions, partitionFunc=portabl

�

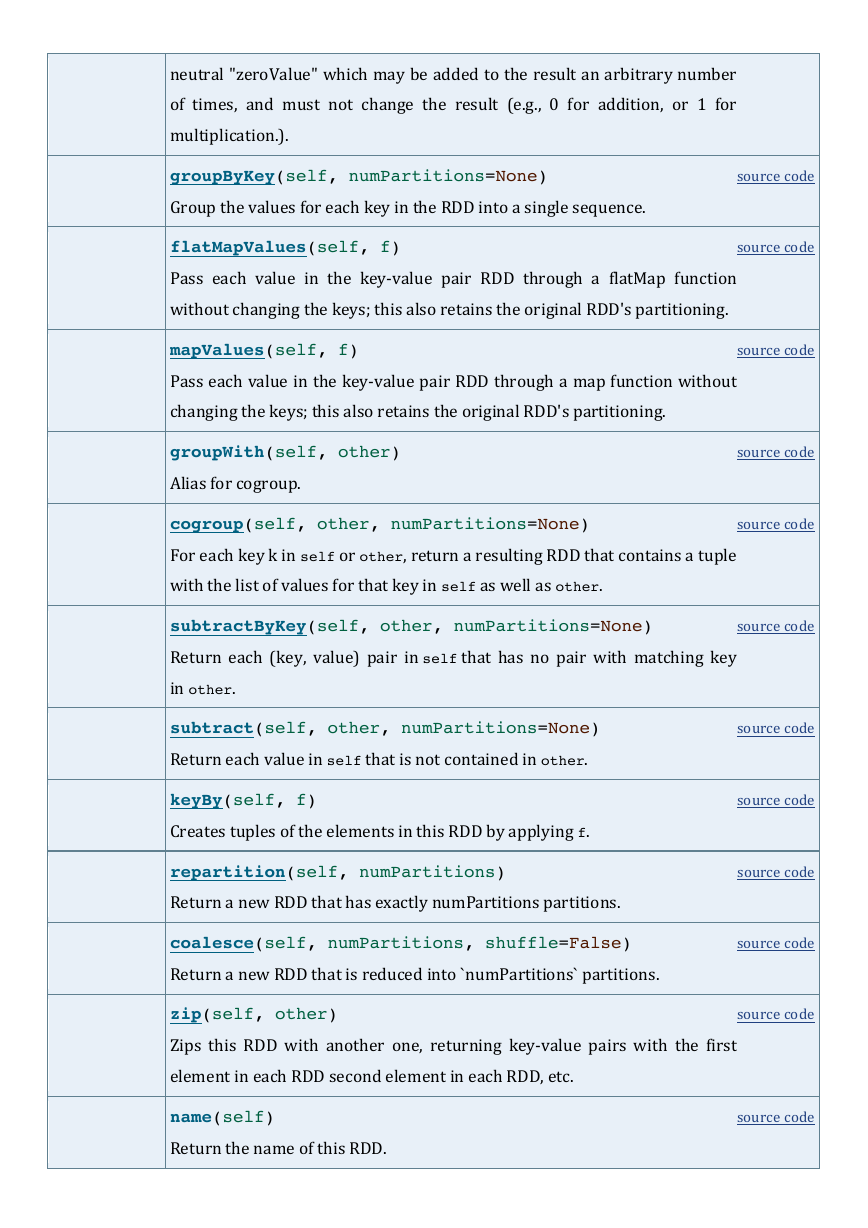

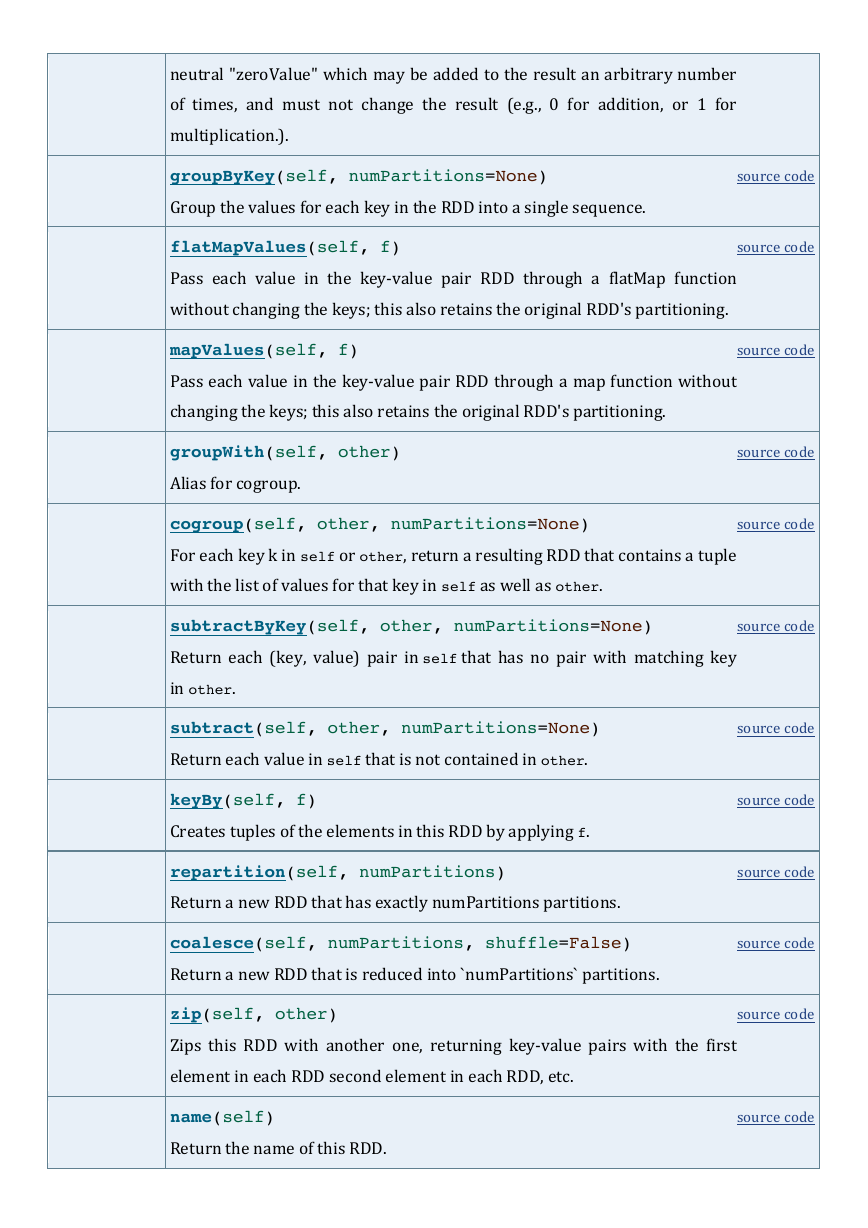

neutral

"zeroValue"

which

may

be

added

to

the

result

an

arbitrary

number

of

times,

and

must

not

change

the

result

(e.g.,

0

for

addition,

or

1

for

multiplication.).
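The roles of the three functions passed to combineByKey can be illustrated with a plain-Python model. This is a sketch under stated assumptions, not the Spark implementation: partitions are ordinary lists, the helper `combine_by_key` is hypothetical, and the result is returned as a dictionary rather than an RDD. It computes a per-key (sum, count) pair and derives averages from it.

```python
def combine_by_key(partitions, create_combiner, merge_value, merge_combiners):
    """Model of combineByKey: combine within each partition, then merge across partitions."""
    per_partition = []
    for part in partitions:
        combiners = {}
        for key, value in part:
            if key in combiners:
                # Key already seen in this partition: fold the value into its combiner.
                combiners[key] = merge_value(combiners[key], value)
            else:
                # First value for this key in this partition: start a new combiner.
                combiners[key] = create_combiner(value)
        per_partition.append(combiners)

    merged = {}
    for combiners in per_partition:
        for key, combiner in combiners.items():
            if key in merged:
                merged[key] = merge_combiners(merged[key], combiner)
            else:
                merged[key] = combiner
    return merged


# Two hypothetical partitions of (key, value) pairs.
partitions = [[("a", 1), ("b", 2), ("a", 3)], [("a", 5), ("b", 4)]]

sum_count = combine_by_key(
    partitions,
    lambda v: (v, 1),                          # createCombiner
    lambda c, v: (c[0] + v, c[1] + 1),         # mergeValue
    lambda c1, c2: (c1[0] + c2[0], c1[1] + c2[1]),  # mergeCombiners
)
averages = {key: total / count for key, (total, count) in sum_count.items()}

print(sum_count, averages)
```

The combiner type, here a (sum, count) tuple, can differ from the value type, which is what distinguishes this operation from a plain per-key reduce.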

groupByKey(self, numPartitions=None)

Group the values for each key in the RDD into a single sequence.

flatMapValues(self, f)

Pass each value in the key-value pair RDD through a flatMap function without changing the keys; this also retains the original RDD's partitioning.

mapValues(self, f)

Pass each value in the key-value pair RDD through a map function without changing the keys; this also retains the original RDD's partitioning.

groupWith(self, other)

Alias for cogroup.

cogroup(self, other, numPartitions=None)

For each key k in self or other, return a resulting RDD that contains a tuple with the list of values for that key in self as well as other.
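The shape of a cogroup result is easier to see on a small example. The following plain-Python sketch models it over lists of (key, value) pairs; the function name `cogroup_pairs` is hypothetical, and sorting the keys is only done here to make the output deterministic.

```python
def cogroup_pairs(left, right):
    """For every key in either input, pair the list of left values with the list of right values."""
    keys = sorted({k for k, _ in left} | {k for k, _ in right})
    return [
        (
            key,
            (
                [v for k, v in left if k == key],
                [w for k, w in right if k == key],
            ),
        )
        for key in keys
    ]


x = [("a", 1), ("b", 4)]
y = [("a", 2)]

grouped = cogroup_pairs(x, y)
print(grouped)
```

A key present on only one side still appears in the output, paired with an empty list for the other side.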

subtractByKey(self, other, numPartitions=None)

Return each (key, value) pair in self that has no pair with matching key in other.

subtract(self, other, numPartitions=None)

Return each value in self that is not contained in other.

keyBy(self, f)

Creates tuples of the elements in this RDD by applying f.

repartition(self, numPartitions)

Return a new RDD that has exactly numPartitions partitions.

coalesce(self, numPartitions, shuffle=False)

Return a new RDD that is reduced into `numPartitions` partitions.

zip(self, other)

Zips this RDD with another one, returning key-value pairs made of the first element in each RDD, the second element in each RDD, etc.

name(self)

Return the name of this RDD.

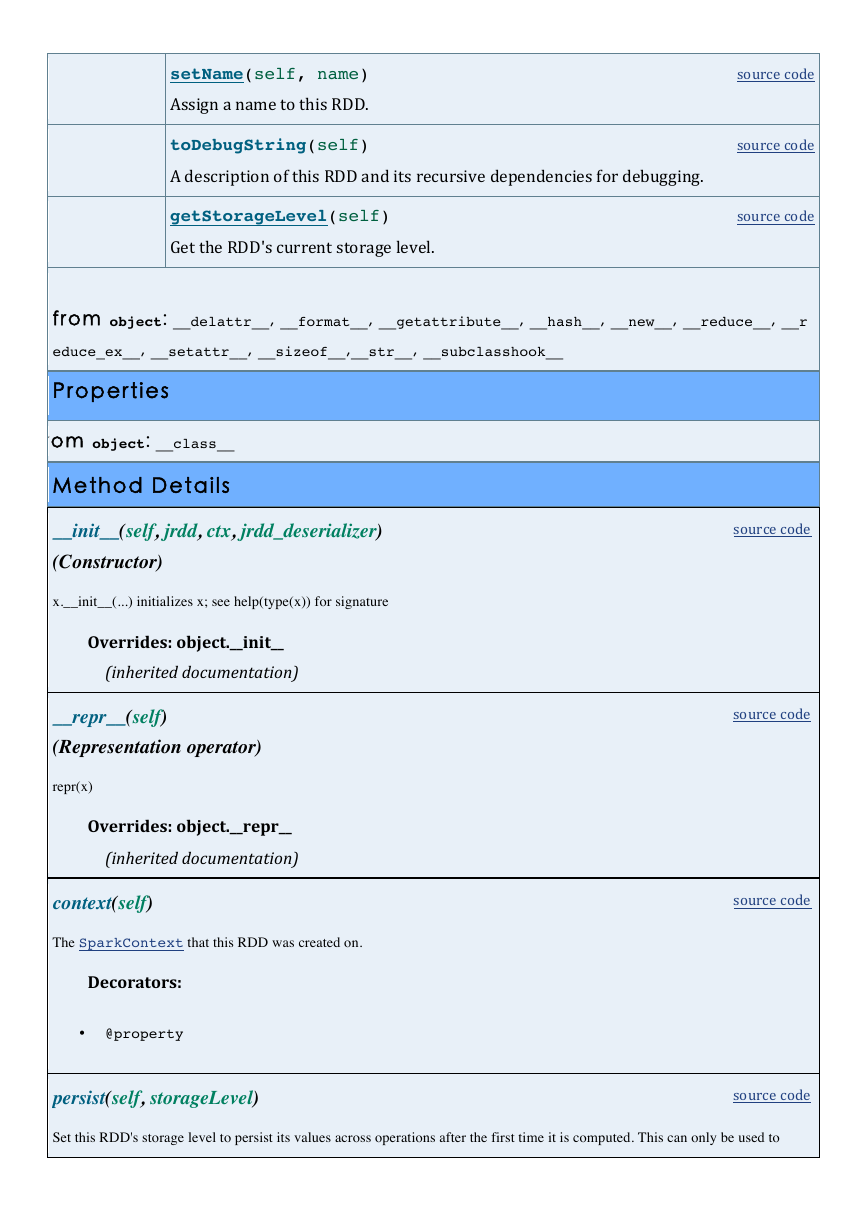

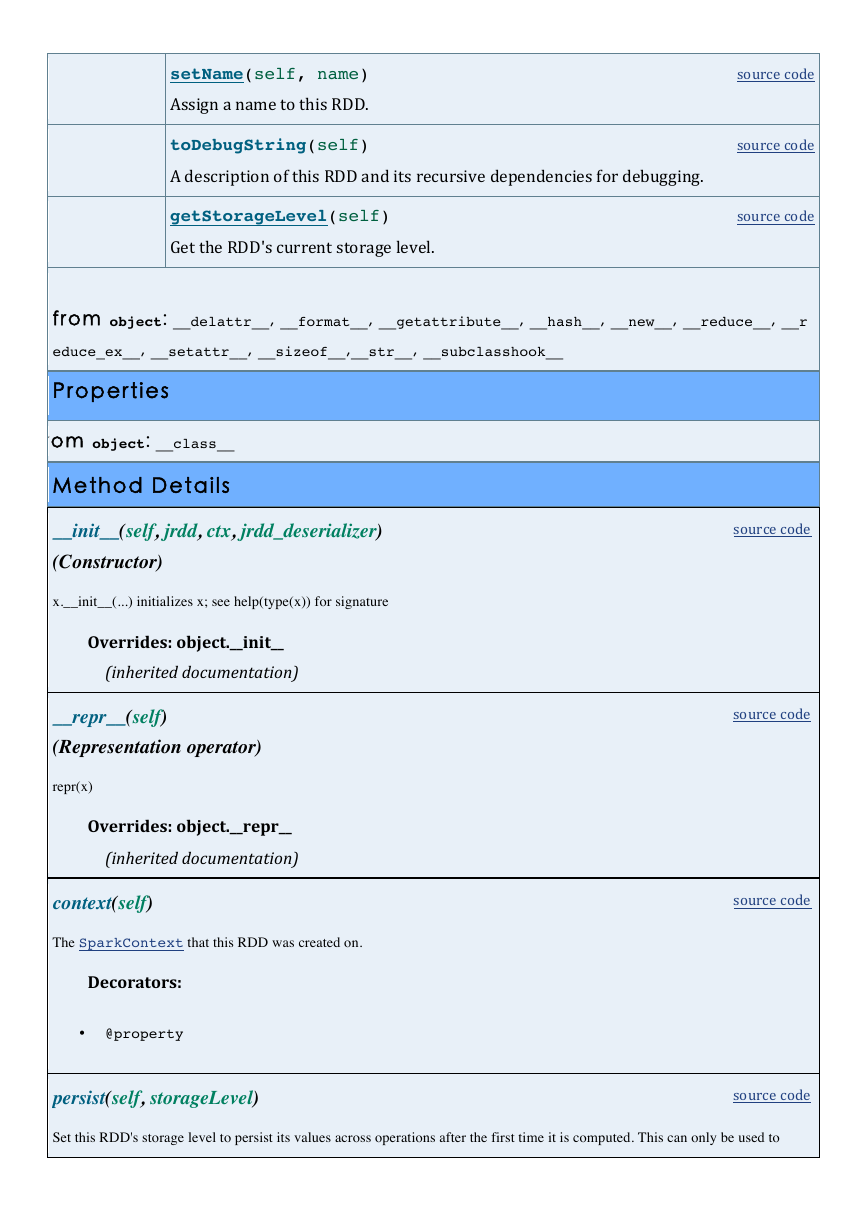

setName(self, name)

Assign

a

name

to

this

RDD.

toDebugString(self)

A

description

of

this

RDD

and

its

recursive

dependencies

for

debugging.

getStorageLevel(self)

Get

the

RDD's

current

storage

level.

source

code

source

code

source

code

Inherited from object: __delattr__, __format__, __getattribute__, __hash__, __new__, __reduce__, __reduce_ex__, __setattr__, __sizeof__, __str__, __subclasshook__

Properties

Inherited from object: __class__

Method Details

__init__(self, jrdd, ctx, jrdd_deserializer)

(Constructor)

x.__init__(...) initializes x; see help(type(x)) for signature

Overrides: object.__init__ (inherited documentation)

__repr__(self)

(Representation operator)

repr(x)

Overrides: object.__repr__ (inherited documentation)

context(self)

The SparkContext that this RDD was created on.

Decorators:

• @property

persist(self, storageLevel)

Set this RDD's storage level to persist its values across operations after the first time it is computed. This can only be used to

source

code

source

code

source

code

source

code

�

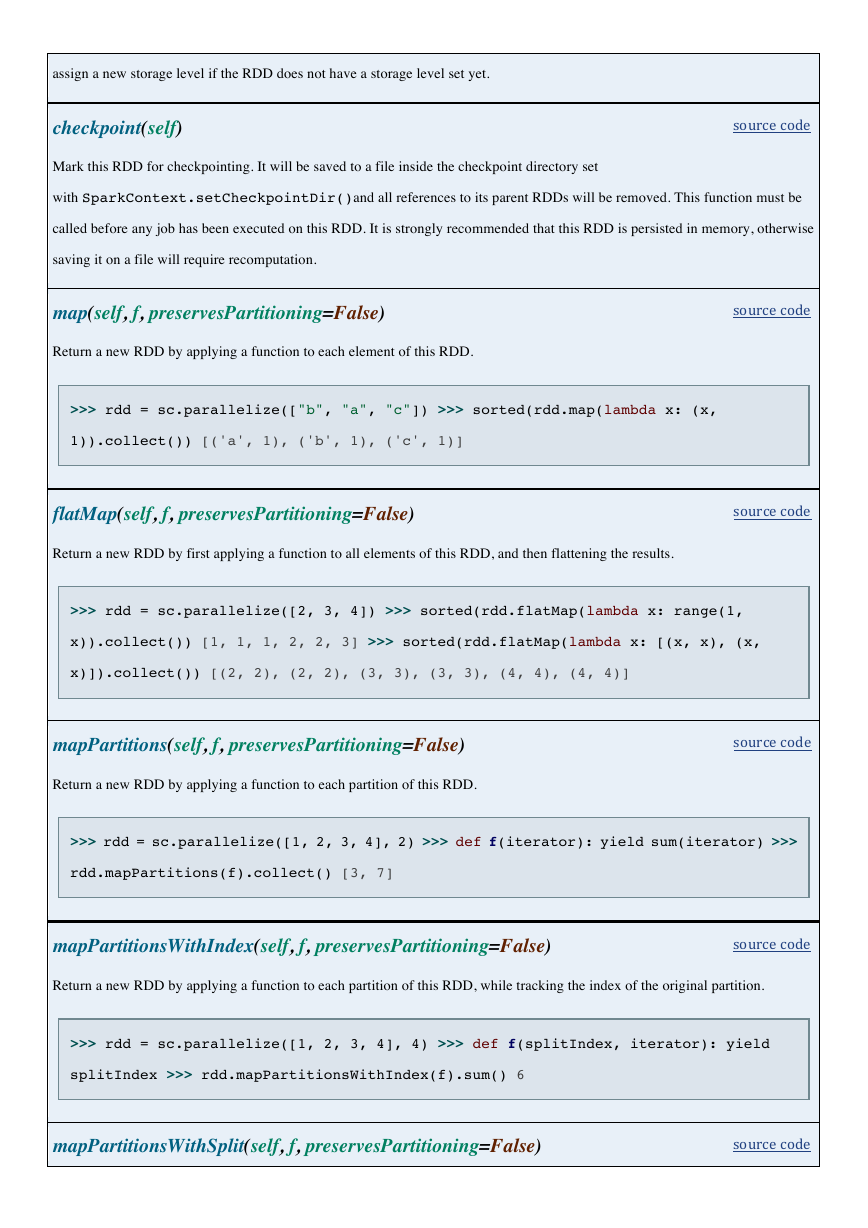

assign a new storage level if the RDD does not have a storage level set yet.

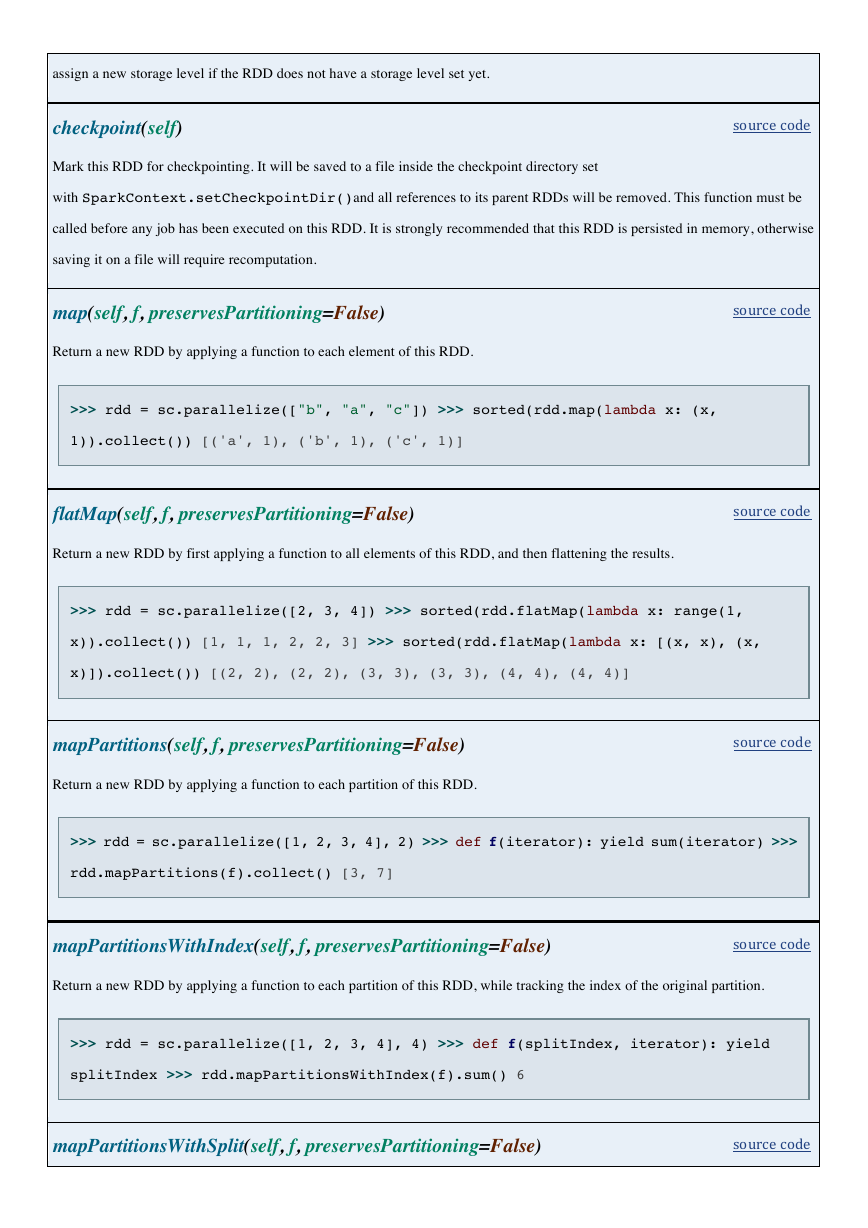

checkpoint(self)

Mark this RDD for checkpointing. It will be saved to a file inside the checkpoint directory set with SparkContext.setCheckpointDir() and all references to its parent RDDs will be removed. This function must be called before any job has been executed on this RDD. It is strongly recommended that this RDD is persisted in memory, otherwise saving it on a file will require recomputation.

map(self, f, preservesPartitioning=False)

Return a new RDD by applying a function to each element of this RDD.

>>> rdd = sc.parallelize(["b", "a", "c"])
>>> sorted(rdd.map(lambda x: (x, 1)).collect())
[('a', 1), ('b', 1), ('c', 1)]

flatMap(self, f, preservesPartitioning=False)

Return a new RDD by first applying a function to all elements of this RDD, and then flattening the results.

>>> rdd = sc.parallelize([2, 3, 4])
>>> sorted(rdd.flatMap(lambda x: range(1, x)).collect())
[1, 1, 1, 2, 2, 3]
>>> sorted(rdd.flatMap(lambda x: [(x, x), (x, x)]).collect())
[(2, 2), (2, 2), (3, 3), (3, 3), (4, 4), (4, 4)]

mapPartitions(self, f, preservesPartitioning=False)

Return a new RDD by applying a function to each partition of this RDD.

>>> rdd = sc.parallelize([1, 2, 3, 4], 2)
>>> def f(iterator): yield sum(iterator)
>>> rdd.mapPartitions(f).collect()
[3, 7]

mapPartitionsWithIndex(self, f, preservesPartitioning=False)

Return a new RDD by applying a function to each partition of this RDD, while tracking the index of the original partition.

>>> rdd = sc.parallelize([1, 2, 3, 4], 4)
>>> def f(splitIndex, iterator): yield splitIndex
>>> rdd.mapPartitionsWithIndex(f).sum()
6

mapPartitionsWithSplit(self, f, preservesPartitioning=False)
