IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 33, NO. 12, DECEMBER 2011


Single Image Haze Removal Using Dark Channel Prior

Kaiming He, Jian Sun, and Xiaoou Tang, Fellow, IEEE

Abstract—In this paper, we propose a simple but effective image prior, the dark channel prior, to remove haze from a single input image. The dark channel prior is a kind of statistics of outdoor haze-free images. It is based on a key observation: most local patches in outdoor haze-free images contain some pixels whose intensity is very low in at least one color channel. Using this prior with the haze imaging model, we can directly estimate the thickness of the haze and recover a high-quality haze-free image. Results on a variety of hazy images demonstrate the power of the proposed prior. Moreover, a high-quality depth map can also be obtained as a byproduct of haze removal.

Index Terms—Dehaze, defog, image restoration, depth estimation.


1 INTRODUCTION

Haze removal1 (or dehazing)

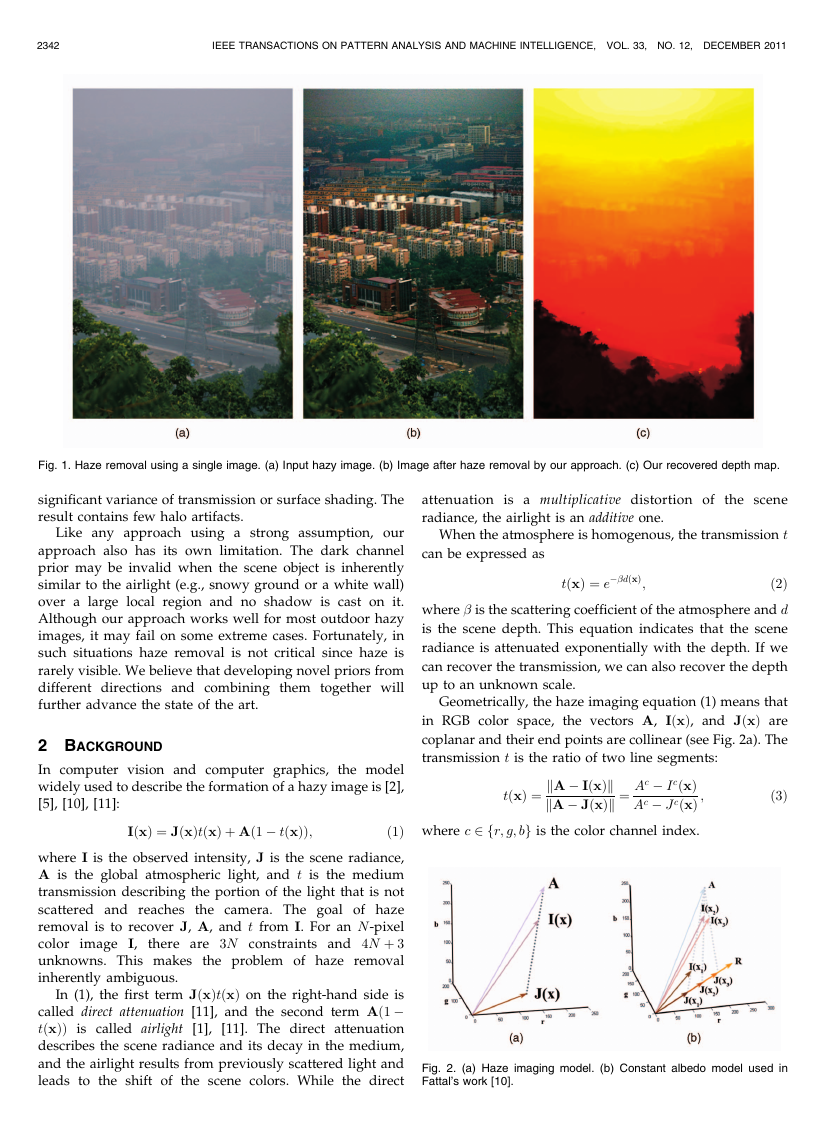

IMAGES of outdoor scenes are usually degraded by the

turbid medium (e.g., particles and water droplets) in

the atmosphere. Haze, fog, and smoke are such phenomena

due to atmospheric absorption and scattering. The irradiance

received by the camera from the scene point is attenuated

along the line of sight. Furthermore, the incoming light is

blended with the airlight [1]—ambient light reflected into the

line of sight by atmospheric particles. The degraded images

lose contrast and color fidelity, as shown in Fig. 1a. Since the

amount of scattering depends on the distance of the scene

points from the camera, the degradation is spatially variant.

is highly desired in

consumer/computational photography and computer

vision applications. First, removing haze can significantly

increase the visibility of the scene and correct the color shift

caused by the airlight. In general, the haze-free image is

more visually pleasing. Second, most computer vision

algorithms, from low-level

image analysis to high-level

object recognition, usually assume that the input image

(after radiometric calibration) is the scene radiance. The

performance of many vision algorithms (e.g.,

feature

detection, filtering, and photometric analysis) will inevita-

bly suffer from the biased and low-contrast scene radiance.

Last, haze removal can provide depth information and

benefit many vision algorithms and advanced image

1. Haze, fog, and smoke differ mainly in the material, size, shape, and

concentration of the atmospheric particles. See [2] for more details. In this

paper, we do not distinguish these similar phenomena and use the term

haze removal for simplicity.

. K. He and X. Tang are with the Department of Information Engineering,

The Chinese University of Hong Kong, Shatin, N.T., Hong Kong, China.

E-mail: {hkm007, xtang}@ie.cuhk.edu.hk.

. J. Sun is with Microsoft Research Asia, F5, #49, Zhichun Road, Haldian

District, Beijing, China. E-mail: jiansun@microsoft.com.

Manuscript received 17 Dec. 2009; revised 24 June 2010; accepted 26 June

2010; published online 31 Aug. 2010.

Recommended for acceptance by S.B. Kang.

For information on obtaining reprints of this article, please send e-mail to:

tpami@computer.org, and reference IEEECS Log Number

TPAMISI-2009-12-0832.

Digital Object Identifier no. 10.1109/TPAMI.2010.168.

editing. Haze or fog can be a useful depth clue for scene

understanding. A bad hazy image can be put to good use.

However, haze removal is a challenging problem because the haze depends on the unknown depth. The problem is underconstrained if the input is only a single hazy image. Therefore, many methods have been proposed that use multiple images or additional information. Polarization-based methods [3], [4] remove the haze effect using two or more images taken with different degrees of polarization. In [5], [6], [7], more constraints are obtained from multiple images of the same scene under different weather conditions. Depth-based methods [8], [9] require some depth information from user inputs or known 3D models.

Recently, single image haze removal has made significant progress [10], [11]. The success of these methods lies in using stronger priors or assumptions. Tan [11] observes that a haze-free image must have higher contrast than the input hazy image, and he removes haze by maximizing the local contrast of the restored image. The results are visually compelling but may not be physically valid. Fattal [10] estimates the albedo of the scene and the medium transmission under the assumption that the transmission and the surface shading are locally uncorrelated. This approach is physically sound and can produce impressive results. However, it cannot handle heavily hazy images well and may fail in cases where the assumption is broken.

In this paper, we propose a novel prior, the dark channel prior, for single image haze removal. The dark channel prior is based on the statistics of outdoor haze-free images. We find that, in most of the local regions that do not cover the sky, some pixels (called dark pixels) very often have very low intensity in at least one color (RGB) channel. In hazy images, the intensity of these dark pixels in that channel is mainly contributed by the airlight. Therefore, these dark pixels can directly provide an accurate estimation of the haze transmission. Combining a haze imaging model and a soft matting interpolation method, we can recover a high-quality haze-free image and produce a good depth map.

Our approach is physically valid and is able to handle

distant objects in heavily hazy images. We do not rely on

0162-8828/11/$26.00 ß 2011 IEEE

Published by the IEEE Computer Society

�

2342

IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 33, NO. 12, DECEMBER 2011

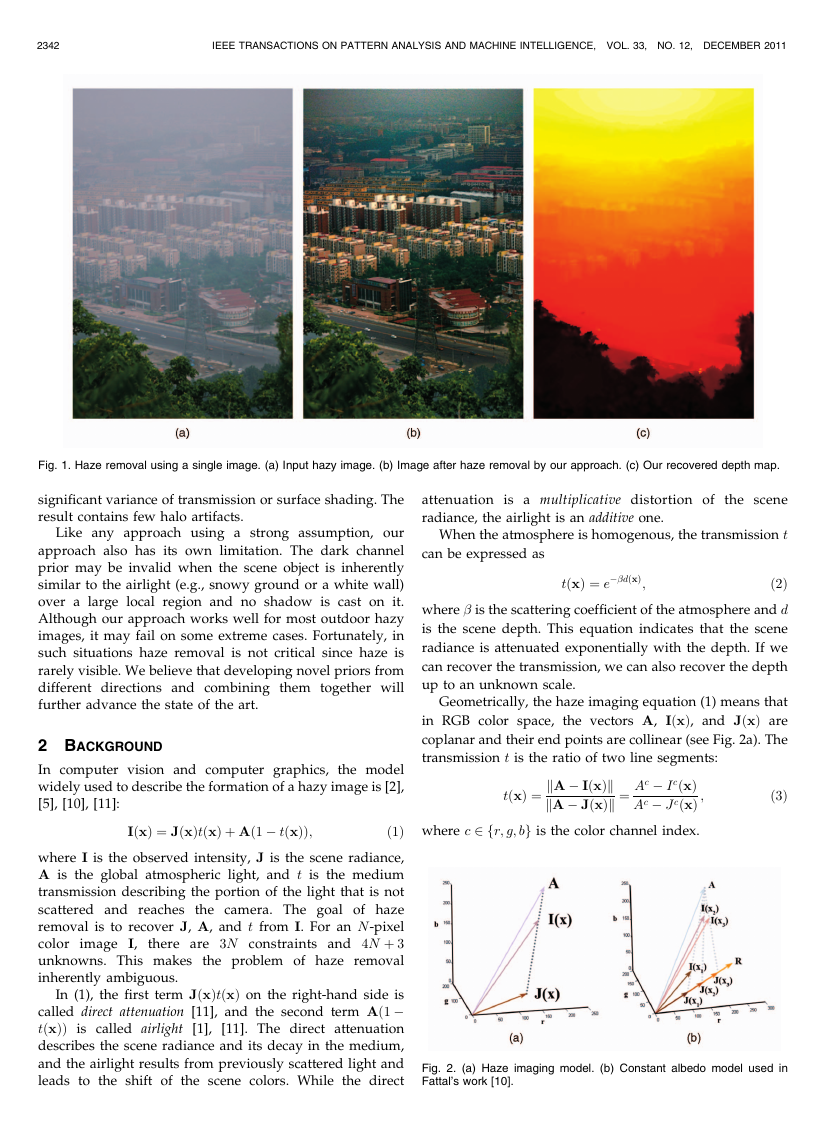

Fig. 1. Haze removal using a single image. (a) Input hazy image. (b) Image after haze removal by our approach. (c) Our recovered depth map.

significant variance of transmission or surface shading. The

result contains few halo artifacts.

Like any approach using a strong assumption, our approach also has its own limitations. The dark channel prior may be invalid when the scene object is inherently similar to the airlight (e.g., snowy ground or a white wall) over a large local region and no shadow is cast on it. Although our approach works well for most outdoor hazy images, it may fail in some extreme cases. Fortunately, in such situations haze removal is not critical since haze is rarely visible. We believe that developing novel priors from different directions and combining them together will further advance the state of the art.

2 BACKGROUND

In computer vision and computer graphics, the model widely used to describe the formation of a hazy image is [2], [5], [10], [11]:

$$I(x) = J(x)\,t(x) + A\,(1 - t(x)), \tag{1}$$

where I is the observed intensity, J is the scene radiance, A is the global atmospheric light, and t is the medium transmission describing the portion of the light that is not scattered and reaches the camera. The goal of haze removal is to recover J, A, and t from I. For an N-pixel color image I, there are 3N constraints (one per pixel and color channel) and 4N + 3 unknowns (3N values of J, N values of t, and the three components of A). This makes the problem of haze removal inherently ambiguous.

In (1), the first term $J(x)t(x)$ on the right-hand side is called direct attenuation [11], and the second term $A(1 - t(x))$ is called airlight [1], [11]. The direct attenuation describes the scene radiance and its decay in the medium, and the airlight results from previously scattered light and leads to the shift of the scene colors. While the direct attenuation is a multiplicative distortion of the scene radiance, the airlight is an additive one.

When the atmosphere is homogeneous, the transmission t can be expressed as

$$t(x) = e^{-\beta d(x)}, \tag{2}$$

where β is the scattering coefficient of the atmosphere and d is the scene depth. This equation indicates that the scene radiance is attenuated exponentially with the depth. If we can recover the transmission, we can also recover the depth up to an unknown scale.
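As a small illustration of (2), the NumPy sketch below maps depth to transmission and back. The depth values and β = 0.1 are arbitrary assumptions, and the inversion only yields the product βd(x), which is exactly the "depth up to an unknown scale" mentioned above.

```python
import numpy as np

def transmission_from_depth(depth, beta):
    """Eq. (2): t(x) = exp(-beta * d(x)) in a homogeneous atmosphere."""
    return np.exp(-beta * np.asarray(depth, dtype=float))

def scaled_depth_from_transmission(t, t_min=1e-6):
    """Invert Eq. (2): -ln t(x) = beta * d(x), i.e. depth up to the unknown scale beta.

    t is clipped to [t_min, 1] so that t -> 0 (e.g. sky) gives a large finite value.
    """
    return -np.log(np.clip(t, t_min, 1.0))

depth = np.array([[1.0, 5.0], [10.0, 50.0]])   # assumed depths (arbitrary units)
t = transmission_from_depth(depth, beta=0.1)   # assumed scattering coefficient
recovered = scaled_depth_from_transmission(t)
print(np.allclose(recovered, 0.1 * depth))     # True: only beta * d is recoverable
```

The clipping threshold `t_min` is a hypothetical safeguard, not part of the paper; without it, a transmission of exactly zero would map to infinite depth.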

Geometrically, the haze imaging equation (1) means that in RGB color space, the vectors A, I(x), and J(x) are coplanar and their end points are collinear (see Fig. 2a). The transmission t is the ratio of two line segments:

$$t(x) = \frac{\|A - I(x)\|}{\|A - J(x)\|} = \frac{A^c - I^c(x)}{A^c - J^c(x)}, \tag{3}$$

where $c \in \{r, g, b\}$ is the color channel index.
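Rearranging (1) gives $A - I(x) = t(x)\,(A - J(x))$, which is why both forms of (3) return t. A single-pixel check, with arbitrarily chosen values for A, J, and t (assumptions, in [0, 1]); the channel-wise form additionally needs $A^c \neq J^c$:

```python
import numpy as np

A = np.array([0.90, 0.92, 0.95])   # assumed atmospheric light (RGB in [0, 1])
J = np.array([0.20, 0.45, 0.10])   # assumed scene radiance at one pixel
t = 0.4                            # assumed transmission at that pixel

I = J * t + A * (1.0 - t)          # Eq. (1) at a single pixel

t_from_lengths = np.linalg.norm(A - I) / np.linalg.norm(A - J)   # ratio of segment lengths
t_per_channel = (A - I) / (A - J)                                # channel-wise form of Eq. (3)
```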

Fig. 2. (a) Haze imaging model. (b) Constant albedo model used in Fattal's work [10].


Fig. 3. Calculation of a dark channel. (a) An arbitrary image J. (b) For each pixel, we calculate the minimum of its (r, g, b) values. (c) A minimum filter is performed on (b). This is the dark channel of J. The image size is 800 × 551, and the patch size of Ω is 15 × 15.

Based on this model, Tan's method [11] focuses on enhancing the visibility of the image. For a patch with uniform transmission t, the visibility (sum of gradient magnitudes) of the input image is reduced by the haze since t < 1:

$$\sum_x \|\nabla I(x)\| = t \sum_x \|\nabla J(x)\| < \sum_x \|\nabla J(x)\|. \tag{4}$$
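Relation (4) holds because the airlight term is constant over a patch with uniform t and has zero gradient. The sketch below checks it numerically on a random grayscale patch; the patch, A = 0.9, t = 0.6, and the finite-difference gradient (`np.gradient`) are all illustrative assumptions.

```python
import numpy as np

def visibility(img):
    """Sum over pixels of the gradient magnitude (finite differences), as in Eq. (4)."""
    gy, gx = np.gradient(img)
    return np.sum(np.hypot(gx, gy))

rng = np.random.default_rng(0)
J = rng.random((32, 32))     # assumed grayscale haze-free patch
A, t = 0.9, 0.6              # assumed atmospheric light and uniform transmission
I = J * t + A * (1.0 - t)    # Eq. (1) with t constant over the patch

ratio = visibility(I) / visibility(J)   # constant airlight has zero gradient, so this is t
```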

The transmission t in a local patch is estimated by maximizing the visibility of the patch under the constraint that the intensity of J(x) is less than the intensity of A. An MRF model is used to further regularize the result. This approach is able to greatly unveil details and structures from hazy images. However, the output images tend to have larger saturation values because this method focuses solely on the enhancement of the visibility and does not intend to physically recover the scene radiance. Besides, the result may contain halo effects near the depth discontinuities.

In [10], Fattal proposes an approach based on Independent Component Analysis (ICA). First, the albedo of a local patch is assumed to be a constant vector R. Thus, all vectors J(x) in the patch have the same direction R, as shown in Fig. 2b. Second, by assuming that the statistics of the surface shading $\|J(x)\|$ and the transmission t(x) are independent in the patch, the direction of R is estimated by ICA. Finally, an MRF model guided by the input color image is applied to extrapolate the solution to the whole image. This approach is physics-based and can produce a natural haze-free image together with a good depth map. But, due to the statistical independence assumption, this approach requires that the independent components vary significantly. Any lack of variation or low signal-to-noise ratio (often in dense haze regions) will make the statistics unreliable. Moreover, as the statistics are based on color information, they are invalid for gray-scale images, and it is difficult to handle dense haze that is colorless.

In the next section, we present a new prior, the dark channel prior, to estimate the transmission directly from an outdoor hazy image.

3 DARK CHANNEL PRIOR

The dark channel prior is based on the following observation on outdoor haze-free images: in most of the nonsky patches, at least one color channel has some pixels whose intensity is very low and close to zero. Equivalently, the minimum intensity in such a patch is close to zero.

To formally describe this observation, we first define the concept of a dark channel. For an arbitrary image J, its dark channel $J^{dark}$ is given by

$$J^{dark}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{r,g,b\}} J^c(y) \right), \tag{5}$$

where $J^c$ is a color channel of J and $\Omega(x)$ is a local patch centered at x. A dark channel is the outcome of two minimum operators: $\min_{c \in \{r,g,b\}}$ is performed on each pixel (Fig. 3b), and $\min_{y \in \Omega(x)}$ is a minimum filter (Fig. 3c). The minimum operators are commutative.
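Definition (5) translates directly into two array reductions. The sketch below is a minimal NumPy version, assuming an H × W × 3 float image, an odd patch size, and edge padding at the borders (the border handling is our assumption; for a minimum filter it is equivalent to shrinking the window at the image edge):

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def min_filter(channel, patch):
    """Minimum over the square patch Omega(x) centered at each pixel (odd patch size).

    Edge padding is an assumption; for a minimum filter it equals clipping the
    window at the image border.
    """
    if patch % 2 == 0:
        raise ValueError("patch size must be odd so that Omega(x) is centered at x")
    r = patch // 2
    padded = np.pad(channel, r, mode="edge")
    # View of shape (H, W, patch, patch); reduce over the two window axes.
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))

def dark_channel(img, patch=15):
    """Eq. (5) for an H x W x 3 float image."""
    per_pixel_min = img.min(axis=2)          # min over c in {r, g, b} (Fig. 3b)
    return min_filter(per_pixel_min, patch)  # min over y in Omega(x) (Fig. 3c)
```

Because the two minima commute, filtering each channel first and then taking the per-pixel minimum gives the same result. This version does O(patch²) work per pixel; Section 5 instead uses van Herk's algorithm [20], whose cost is linear in the image size.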

Using the concept of a dark channel, our observation says that if J is an outdoor haze-free image, except for the sky region, the intensity of J's dark channel is low and tends to be zero:

$$J^{dark} \rightarrow 0. \tag{6}$$

We call this observation the dark channel prior.

The low intensity in the dark channel is mainly due to three factors: a) shadows, e.g., the shadows of cars, buildings, and the inside of windows in cityscape images, or the shadows of leaves, trees, and rocks in landscape images; b) colorful objects or surfaces, e.g., any object with low reflectance in any color channel (for example, green grass/tree/plant, red or yellow flower/leaf, and blue water surface) will result in low values in the dark channel; c) dark objects or surfaces, e.g., dark tree trunks and stones. As natural outdoor images are usually colorful and full of shadows, the dark channels of these images are really dark!

To verify how good the dark channel prior is, we collect an outdoor image set from Flickr.com and several other image search engines using the 150 most popular tags annotated by Flickr users. Since haze usually occurs in outdoor landscape and cityscape scenes, we manually pick out the haze-free landscape and cityscape images from the data set. Besides, we only focus on daytime images. Among them, we randomly select 5,000 images and manually cut out the sky regions. The images are resized so that the maximum of width and height is 500 pixels, and their dark channels are computed using a patch size of 15 × 15. Fig. 4 shows several outdoor images and the corresponding dark channels.
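The statistics reported for Fig. 5 reduce to a pooled histogram and a few fractions. A minimal sketch, assuming the dark channels are already computed as 8-bit arrays; the two tiny arrays in the usage line are placeholders, not the paper's 5,000-image data set:

```python
import numpy as np

def dark_channel_statistics(dark_channels):
    """Summarize a collection of 8-bit dark channels (values 0..255).

    Returns the pooled histogram with 16-level bins (Fig. 5a), its cumulative
    distribution (Fig. 5b), the per-image average intensities (Fig. 5c), and
    the fractions of pixels that are exactly 0 and below 25.
    """
    pooled = np.concatenate([np.asarray(d).ravel() for d in dark_channels])
    hist, _ = np.histogram(pooled, bins=16, range=(0, 256))   # bins of 16 levels each
    cdf = np.cumsum(hist) / pooled.size
    averages = np.array([np.mean(d) for d in dark_channels])
    return {
        "hist": hist,
        "cdf": cdf,
        "averages": averages,
        "frac_zero": np.mean(pooled == 0),
        "frac_below_25": np.mean(pooled < 25),
    }

# Usage with placeholder data (not the paper's data set):
stats = dark_channel_statistics([np.zeros((4, 4), np.uint8), np.full((4, 4), 30, np.uint8)])
```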


Fig. 4. (a) Example images in our haze-free image database. (b) The corresponding dark channels. (c) A hazy image and its dark channel.

Fig. 5a is the intensity histogram over all 5,000 dark channels and Fig. 5b is the corresponding cumulative distribution. We can see that about 75 percent of the pixels in the dark channels have zero values, and the intensity of 90 percent of the pixels is below 25. This statistic gives very strong support to our dark channel prior. We also compute the average intensity of each dark channel and plot the corresponding histogram in Fig. 5c. Again, most dark channels have very low average intensity, showing that only a small portion of outdoor haze-free images deviate from our prior.

Due to the additive airlight, a hazy image is brighter than its haze-free version where the transmission t is low. So, the dark channel of a hazy image will have higher intensity in regions with denser haze (see the right-hand side of Fig. 4). Visually, the intensity of the dark channel is a rough approximation of the thickness of the haze. In the next section, we will use this property to estimate the transmission and the atmospheric light.

Note that we neglect the sky regions because the dark channel of a haze-free image may have high intensity there. Fortunately, we can gracefully handle the sky regions by using the haze imaging model (1) and our prior together. It is not necessary to cut out the sky regions explicitly. We discuss this issue in Section 4.1.

Our dark channel prior is partially inspired by the well-known dark-object subtraction technique [12] widely used in multispectral remote sensing systems. In [12], spatially homogeneous haze is removed by subtracting a constant value corresponding to the darkest object in the scene. We generalize this idea and propose a novel prior for natural image dehazing.
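For comparison, the basic idea described for [12] fits in a few lines. This is a sketch under two assumptions: the darkest object in each band truly has zero radiance, and the darkest pixel value is used directly as the offset (practical remote-sensing variants may choose the offset differently).

```python
import numpy as np

def dark_object_subtraction(img):
    """Remove spatially homogeneous haze by subtracting each band's darkest value.

    img: H x W x B array (B spectral bands). Returns the corrected image and the
    per-band offsets. One constant per band: no per-pixel transmission is modeled.
    """
    img = np.asarray(img, dtype=float)
    offsets = img.reshape(-1, img.shape[-1]).min(axis=0)   # darkest value in each band
    return img - offsets, offsets
```

This treats haze as a single additive constant per band with no multiplicative attenuation, whereas the dark channel prior estimates a spatially varying airlight, and with it the transmission, patch by patch.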

Fig. 5. Statistics of the dark channels. (a) Histogram of the intensity of the pixels in all of the 5,000 dark channels (each bin stands for 16 intensity levels). (b) Cumulative distribution. (c) Histogram of the average intensity of each dark channel.


4 HAZE REMOVAL USING DARK CHANNEL PRIOR

4.1 Estimating the Transmission

We assume that the atmospheric light A is given. An automatic method to estimate A is proposed in Section 4.3. We first normalize the haze imaging equation (1) by A:

$$\frac{I^c(x)}{A^c} = t(x)\,\frac{J^c(x)}{A^c} + 1 - t(x). \tag{7}$$

Note that we normalize each color channel independently.

We further assume that the transmission in a local patch $\Omega(x)$ is constant. We denote this transmission as $\tilde{t}(x)$. Then, we calculate the dark channel on both sides of (7). Equivalently, we put the minimum operators on both sides:

$$\min_{y \in \Omega(x)} \left( \min_c \frac{I^c(y)}{A^c} \right) = \tilde{t}(x) \min_{y \in \Omega(x)} \left( \min_c \frac{J^c(y)}{A^c} \right) + 1 - \tilde{t}(x). \tag{8}$$

Since $\tilde{t}(x)$ is a nonnegative constant in the patch, it can be moved outside the min operators.

As the scene radiance J is a haze-free image, the dark channel of J is close to zero due to the dark channel prior:

$$J^{dark}(x) = \min_{y \in \Omega(x)} \left( \min_c J^c(y) \right) = 0. \tag{9}$$

As $A^c$ is always positive, this leads to

$$\min_{y \in \Omega(x)} \left( \min_c \frac{J^c(y)}{A^c} \right) = 0. \tag{10}$$

Putting (10) into (8), we can eliminate the multiplicative term and estimate the transmission $\tilde{t}$ simply by

$$\tilde{t}(x) = 1 - \min_{y \in \Omega(x)} \left( \min_c \frac{I^c(y)}{A^c} \right). \tag{11}$$

In fact, $\min_{y \in \Omega(x)} \left( \min_c \frac{I^c(y)}{A^c} \right)$ is the dark channel of the normalized hazy image $\frac{I^c(y)}{A^c}$. It directly provides the estimation of the transmission.

As we mentioned before, the dark channel prior is not a good prior for the sky regions. Fortunately, the color of the sky in a hazy image I is usually very similar to the atmospheric light A. So, in the sky region, we have

$$\min_{y \in \Omega(x)} \left( \min_c \frac{I^c(y)}{A^c} \right) \rightarrow 1,$$

and (11) gives $\tilde{t}(x) \rightarrow 0$. Since the sky is infinitely distant and its transmission is indeed close to zero (see (2)), (11) gracefully handles both sky and nonsky regions. We do not need to separate the sky regions beforehand.

In practice, even on clear days the atmosphere is not absolutely free of any particle. So the haze still exists when we look at distant objects. Moreover, the presence of haze is a fundamental cue for humans to perceive depth [13], [14]. This phenomenon is called aerial perspective. If we remove the haze thoroughly, the image may seem unnatural and we may lose the feeling of depth. So, we can optionally keep a very small amount of haze for the distant objects by introducing a constant parameter ω (0 < ω ≤ 1) into (11):

$$\tilde{t}(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_c \frac{I^c(y)}{A^c} \right). \tag{12}$$

The nice property of this modification is that we adaptively keep more haze for the distant objects. The value of ω is application-dependent. We fix it to 0.95 for all results reported in this paper.
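Equations (11) and (12) amount to a dark channel computed on the per-channel normalized image. A minimal NumPy sketch, assuming A is already known, an odd patch size, and edge padding at the borders (our assumption); ω = 1 reproduces (11):

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def min_filter(channel, patch):
    """Square minimum filter over Omega(x); edge padding is an assumption."""
    r = patch // 2
    padded = np.pad(channel, r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))

def estimate_transmission(img, A, omega=0.95, patch=15):
    """Eq. (12); omega = 1 gives Eq. (11).

    img: H x W x 3 hazy image; A: length-3 atmospheric light (assumed known).
    """
    normalized = img / np.asarray(A, dtype=float)       # I^c / A^c per channel, as in Eq. (7)
    dark = min_filter(normalized.min(axis=2), patch)    # dark channel of the normalized image
    return 1.0 - omega * dark
```

If a region's color equals A (the sky case above), the normalized dark channel is 1 and the estimate becomes 1 − ω, i.e., exactly 0 for ω = 1 and a small positive value for ω = 0.95.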

In the derivation of (11), the dark channel prior is essential for eliminating the multiplicative term (direct transmission) in the haze imaging model (1). Only the additive term (airlight) is left. This strategy is completely different from previous single image haze removal methods [10], [11], which rely heavily on the multiplicative term. These methods are driven by the fact that the multiplicative term changes the image contrast [11] and the color variance [10]. On the contrary, we notice that the additive term changes the intensity of the local dark pixels. With the help of the dark channel prior, the multiplicative term is discarded and the additive term is sufficient to estimate the transmission. We can further generalize (1) by

$$I(x) = J(x)\,t_1(x) + A\,(1 - t_2(x)), \tag{13}$$

where $t_1$ and $t_2$ are not necessarily the same. Using the method for deriving (11), we can estimate $t_2$ and thus separate the additive term. The problem is reduced to a multiplicative form ($J(x)t_1$), and other constraints or priors can be used to further disentangle this term. In the literature of human vision research [15], the additive term is called a veiling luminance, and (13) can be used to describe a scene seen through a veil or glare of highlights.

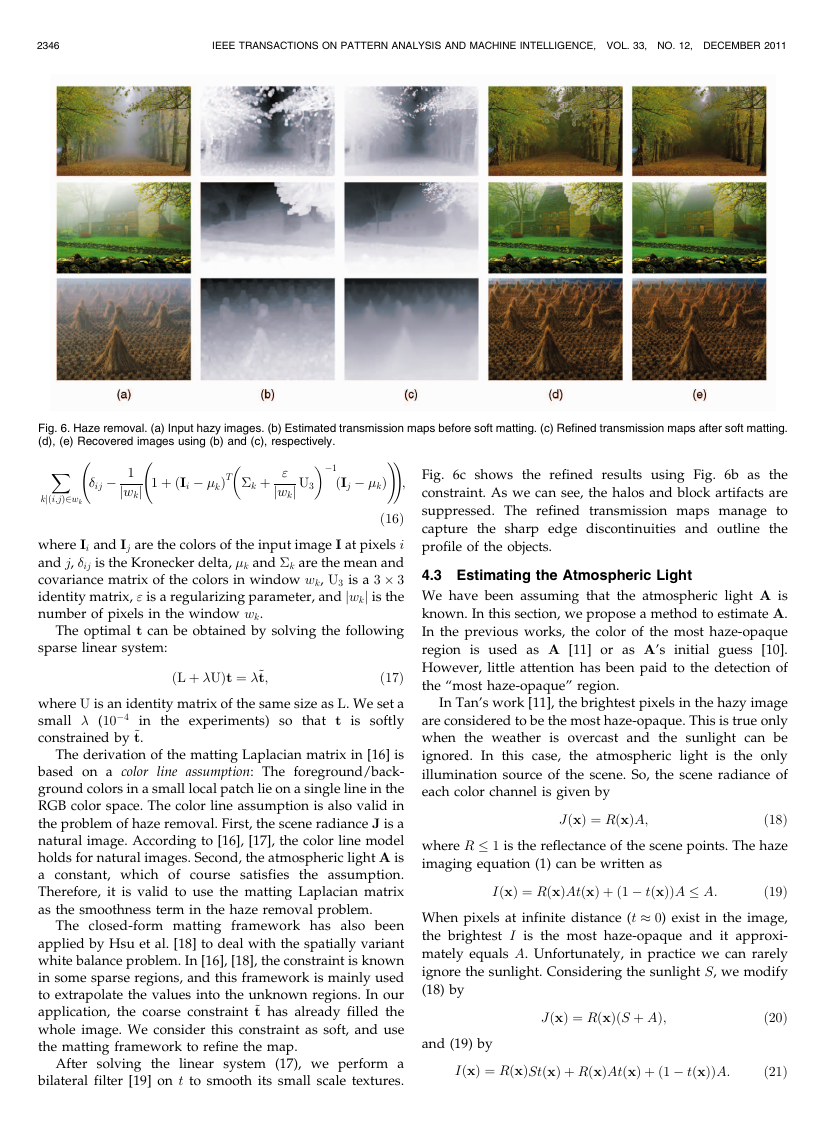

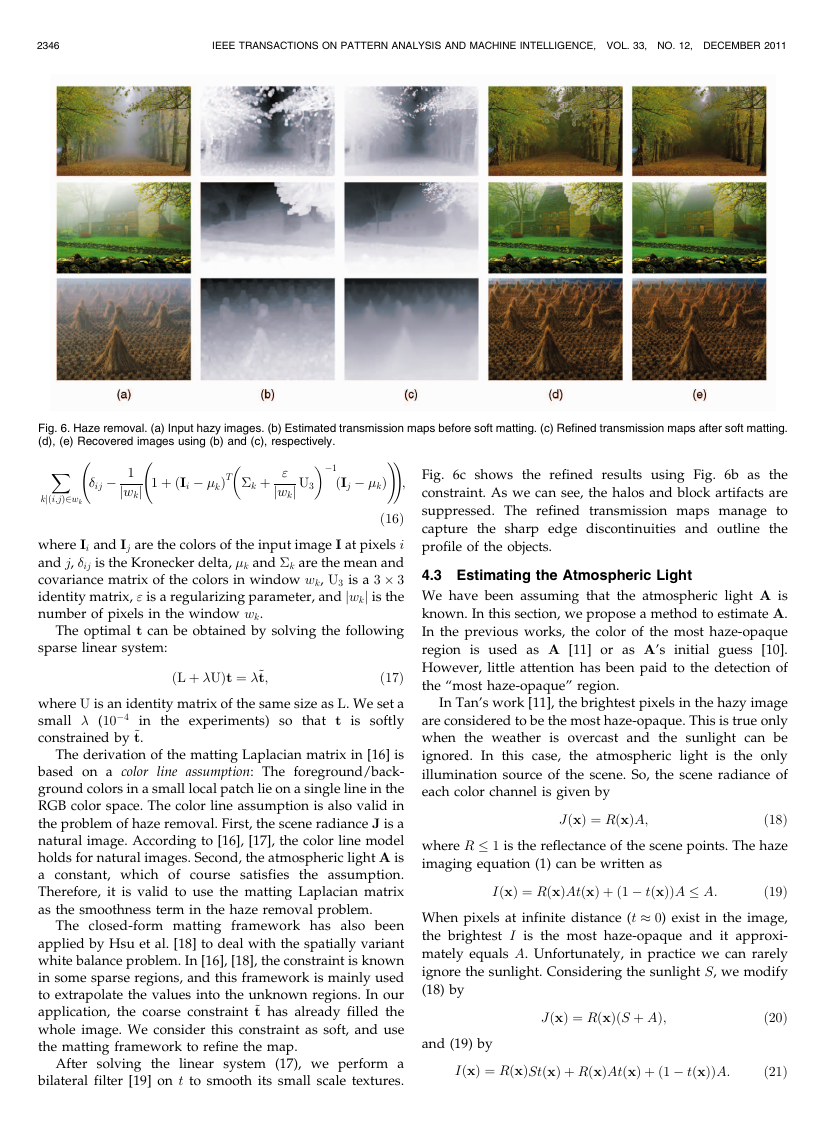

Fig. 6b shows the estimated transmission maps using (12). Fig. 6d shows the corresponding recovered images. As we can see, the dark channel prior is effective in recovering the vivid colors and unveiling low-contrast objects. The transmission maps are reasonable. The main problems are some halos and block artifacts. This is because the transmission is not always constant in a patch. In the next section, we propose a soft matting method to refine the transmission maps.

4.2 Soft Matting

We notice that the haze imaging equation (1) has a form similar to the image matting equation:

$$I = F\alpha + B(1 - \alpha), \tag{14}$$

where F and B are foreground and background colors, respectively, and α is the foreground opacity. A transmission map in the haze imaging equation is exactly an alpha map. Therefore, we can apply a closed-form framework of matting [16] to refine the transmission.

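Denote the refined transmission map by t(x). Rewriting t(x) and $\tilde{t}(x)$ in their vector forms as $\mathbf{t}$ and $\tilde{\mathbf{t}}$, we minimize the following cost function:

$$E(\mathbf{t}) = \mathbf{t}^T L \mathbf{t} + \lambda (\mathbf{t} - \tilde{\mathbf{t}})^T (\mathbf{t} - \tilde{\mathbf{t}}). \tag{15}$$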

Here, the first term is a smoothness term and the second term is a data term with a weight λ. The matrix L is called the matting Laplacian matrix [16]. Its (i, j) element is defined as

$$L_{ij} = \sum_{k \mid (i,j) \in w_k} \left( \delta_{ij} - \frac{1}{|w_k|} \left( 1 + (I_i - \mu_k)^T \left( \Sigma_k + \frac{\varepsilon}{|w_k|} U_3 \right)^{-1} (I_j - \mu_k) \right) \right), \tag{16}$$

where $I_i$ and $I_j$ are the colors of the input image I at pixels i and j, $\delta_{ij}$ is the Kronecker delta, $\mu_k$ and $\Sigma_k$ are the mean and covariance matrix of the colors in window $w_k$, $U_3$ is a 3 × 3 identity matrix, ε is a regularizing parameter, and $|w_k|$ is the number of pixels in the window $w_k$.

The optimal t can be obtained by solving the following sparse linear system:

$$(L + \lambda U)\,\mathbf{t} = \lambda \tilde{\mathbf{t}}, \tag{17}$$

where U is an identity matrix of the same size as L. We set a small λ ($10^{-4}$ in the experiments) so that t is softly constrained by $\tilde{t}$.

The derivation of the matting Laplacian matrix in [16] is based on a color line assumption: the foreground/background colors in a small local patch lie on a single line in the RGB color space. The color line assumption is also valid in the problem of haze removal. First, the scene radiance J is a natural image. According to [16], [17], the color line model holds for natural images. Second, the atmospheric light A is a constant, which of course satisfies the assumption. Therefore, it is valid to use the matting Laplacian matrix as the smoothness term in the haze removal problem.

The closed-form matting framework has also been applied by Hsu et al. [18] to deal with the spatially variant white balance problem. In [16], [18], the constraint is known in some sparse regions, and this framework is mainly used to extrapolate the values into the unknown regions. In our application, the coarse constraint $\tilde{t}$ has already filled the whole image. We consider this constraint as soft, and use the matting framework to refine the map.

After solving the linear system (17), we perform a bilateral filter [19] on t to smooth its small scale textures.

Fig. 6. Haze removal. (a) Input hazy images. (b) Estimated transmission maps before soft matting. (c) Refined transmission maps after soft matting. (d), (e) Recovered images using (b) and (c), respectively.

Fig. 6c shows the refined results using Fig. 6b as the constraint. As we can see, the halos and block artifacts are suppressed. The refined transmission maps manage to capture the sharp edge discontinuities and outline the profile of the objects.

4.3 Estimating the Atmospheric Light

We have been assuming that the atmospheric light A is known. In this section, we propose a method to estimate A. In the previous works, the color of the most haze-opaque region is used as A [11] or as A's initial guess [10]. However, little attention has been paid to the detection of the “most haze-opaque” region.

In Tan's work [11], the brightest pixels in the hazy image are considered to be the most haze-opaque. This is true only when the weather is overcast and the sunlight can be ignored. In this case, the atmospheric light is the only illumination source of the scene. So, the scene radiance of each color channel is given by

$$J(x) = R(x)A, \tag{18}$$

where R ≤ 1 is the reflectance of the scene points. The haze imaging equation (1) can be written as

$$I(x) = R(x)A\,t(x) + (1 - t(x))A \le A. \tag{19}$$

When pixels at infinite distance (t ≈ 0) exist in the image, the brightest I is the most haze-opaque and it approximately equals A. Unfortunately, in practice we can rarely ignore the sunlight. Considering the sunlight S, we modify (18) by

$$J(x) = R(x)(S + A), \tag{20}$$

and (19) by

$$I(x) = R(x)S\,t(x) + R(x)A\,t(x) + (1 - t(x))A. \tag{21}$$


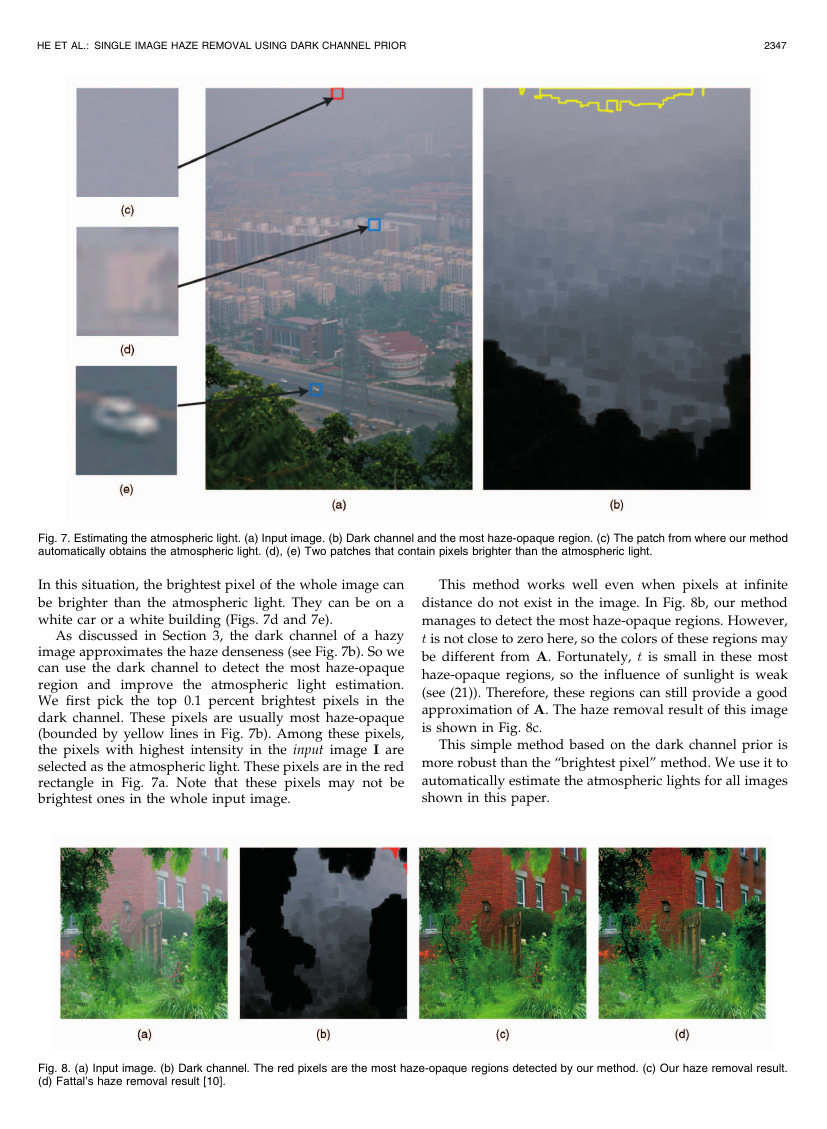

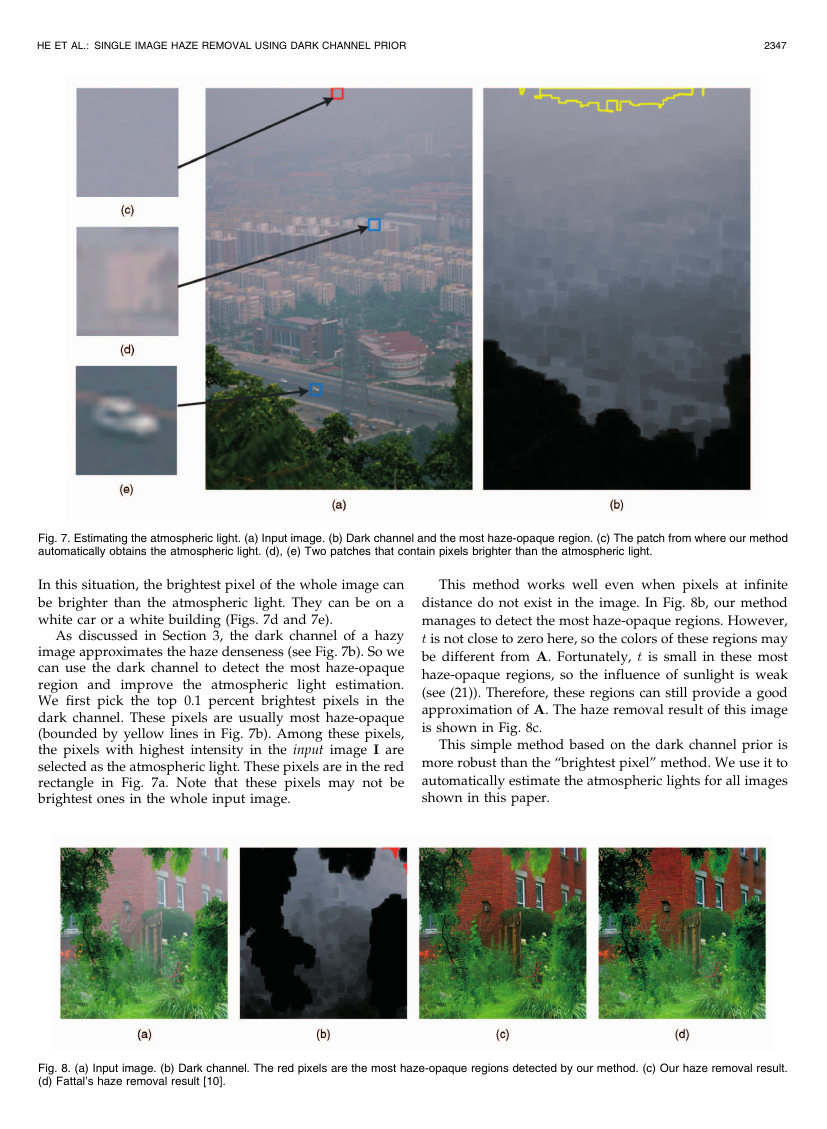

Fig. 7. Estimating the atmospheric light. (a) Input image. (b) Dark channel and the most haze-opaque region. (c) The patch from where our method automatically obtains the atmospheric light. (d), (e) Two patches that contain pixels brighter than the atmospheric light.

In this situation, the brightest pixel of the whole image can be brighter than the atmospheric light. Such pixels can lie on a white car or a white building (Figs. 7d and 7e).

As discussed in Section 3, the dark channel of a hazy image approximates the haze density (see Fig. 7b). So we can use the dark channel to detect the most haze-opaque region and improve the atmospheric light estimation.

We first pick the top 0.1 percent brightest pixels in the dark channel. These pixels are usually the most haze-opaque (bounded by yellow lines in Fig. 7b). Among these pixels, the pixels with the highest intensity in the input image I are selected as the atmospheric light. These pixels are in the red rectangle in Fig. 7a. Note that these pixels may not be the brightest ones in the whole input image.
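The two-step selection can be sketched in NumPy as follows. Several details are our assumptions rather than the paper's: "intensity" is taken as the mean of R, G, and B; a single pixel is returned rather than an average over several; and borders are handled by edge padding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, patch=15):
    """Eq. (5) with edge padding (an assumption) and an odd patch size."""
    r = patch // 2
    per_pixel_min = np.pad(img.min(axis=2), r, mode="edge")
    return sliding_window_view(per_pixel_min, (patch, patch)).min(axis=(-2, -1))

def estimate_atmospheric_light(img, patch=15, top_fraction=0.001):
    """Sketch of the Section 4.3 heuristic for A.

    1. Take the top 0.1 percent brightest pixels of the dark channel.
    2. Among them, return the input color with the highest intensity
       (intensity = mean of R, G, B here, which is an assumption).
    """
    h, w, _ = img.shape
    dark = dark_channel(img, patch).ravel()
    n_top = max(1, int(round(h * w * top_fraction)))
    candidates = np.argpartition(dark, -n_top)[-n_top:]   # most haze-opaque pixels
    colors = img.reshape(-1, 3)[candidates]
    return colors[np.argmax(colors.mean(axis=1))]
```

On a toy image with a haze-colored band and a small white object, the white object has the highest raw intensity but a low dark channel (its neighborhood contains dark pixels), so this preselection skips it, which mirrors the situation in Figs. 7d and 7e.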

This method works well even when pixels at infinite distance do not exist in the image. In Fig. 8b, our method manages to detect the most haze-opaque regions. However, t is not close to zero here, so the colors of these regions may be different from A. Fortunately, t is small in these most haze-opaque regions, so the influence of sunlight is weak (see (21)). Therefore, these regions can still provide a good approximation of A. The haze removal result of this image is shown in Fig. 8c.

This simple method based on the dark channel prior is more robust than the “brightest pixel” method. We use it to automatically estimate the atmospheric lights for all images shown in this paper.

Fig. 8. (a) Input image. (b) Dark channel. The red pixels are the most haze-opaque regions detected by our method. (c) Our haze removal result. (d) Fattal's haze removal result [10].


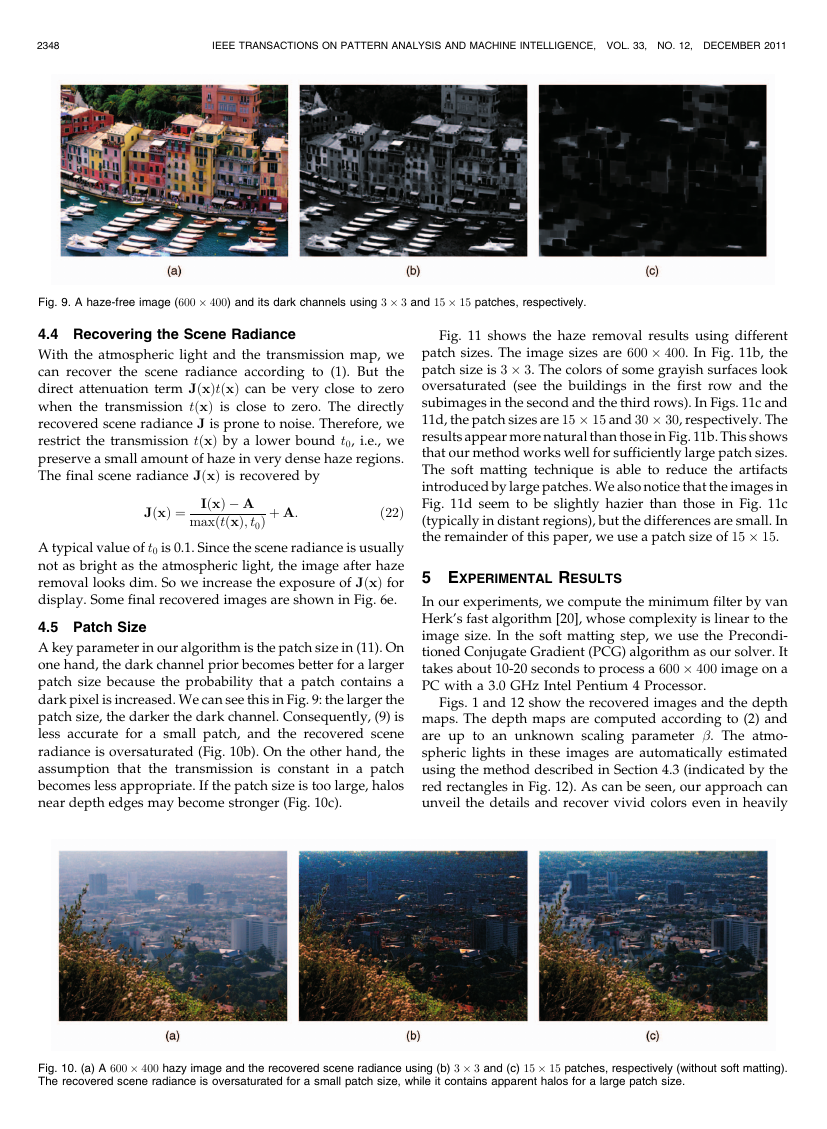

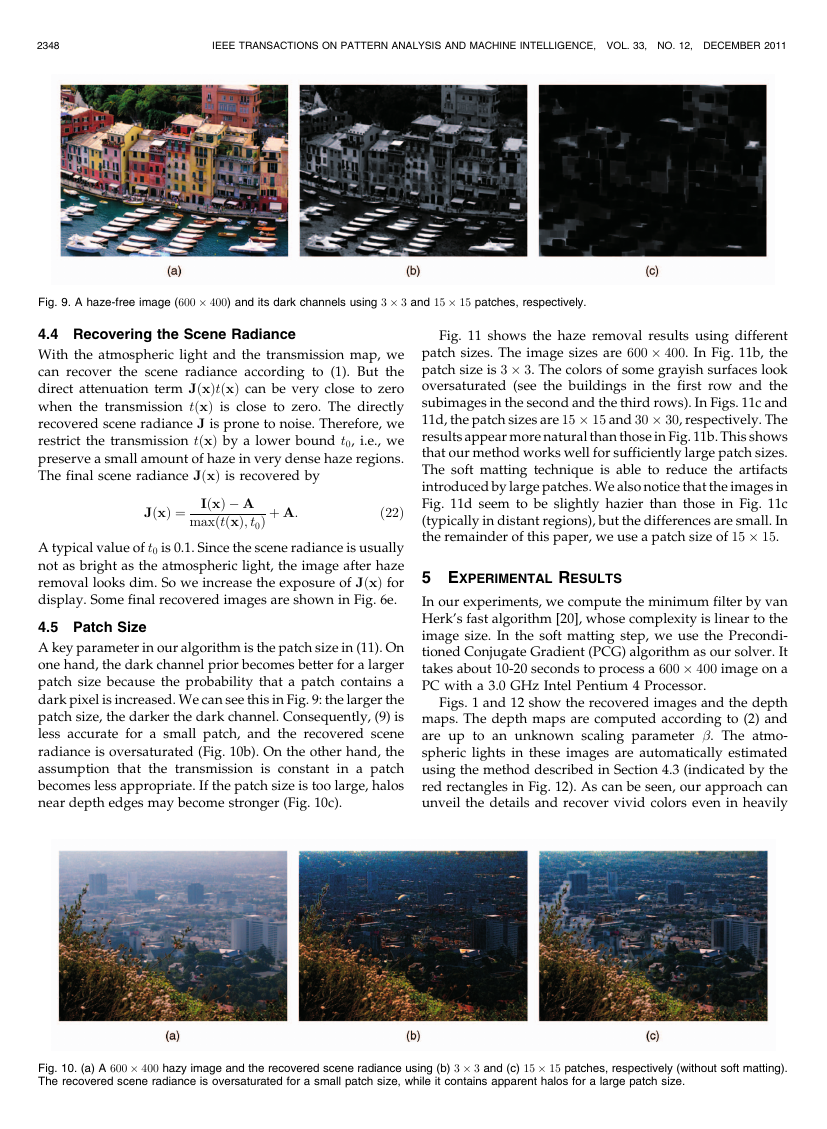

Fig. 9. A haze-free image (600 × 400) and its dark channels using 3 × 3 and 15 × 15 patches, respectively.

4.4 Recovering the Scene Radiance

With the atmospheric light and the transmission map, we can recover the scene radiance according to (1). But the direct attenuation term J(x)t(x) can be very close to zero when the transmission t(x) is close to zero. The directly recovered scene radiance J is prone to noise. Therefore, we restrict the transmission t(x) by a lower bound $t_0$, i.e., we preserve a small amount of haze in very dense haze regions. The final scene radiance J(x) is recovered by

$$J(x) = \frac{I(x) - A}{\max(t(x), t_0)} + A. \tag{22}$$

A typical value of $t_0$ is 0.1. Since the scene radiance is usually not as bright as the atmospheric light, the image after haze removal looks dim. So we increase the exposure of J(x) for display. Some final recovered images are shown in Fig. 6e.
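Equation (22) is a per-pixel inversion of (1) with the transmission clamped from below. A minimal sketch, assuming the transmission map and A have already been estimated; output clipping and the exposure increase for display are deliberately left out, since the text does not specify them:

```python
import numpy as np

def recover_radiance(img, t, A, t0=0.1):
    """Eq. (22): J = (I - A) / max(t, t0) + A, applied per color channel.

    img: H x W x 3 hazy image; t: H x W transmission; A: length-3 atmospheric light.
    The result is not clipped or re-exposed; that is left to the caller.
    """
    A = np.asarray(A, dtype=float)
    t_clamped = np.maximum(t, t0)[..., None]   # lower bound t0, broadcast over channels
    return (img - A) / t_clamped + A
```

Wherever t ≥ t0 this exactly undoes (1); where t < t0 the division uses t0, which amplifies noise less and leaves some haze in very dense regions.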

4.5 Patch Size

A key parameter in our algorithm is the patch size in (11). On one hand, the dark channel prior becomes better for a larger patch size because the probability that a patch contains a dark pixel is increased. We can see this in Fig. 9: the larger the patch size, the darker the dark channel. Consequently, (9) is less accurate for a small patch, and the recovered scene radiance is oversaturated (Fig. 10b). On the other hand, for a larger patch the assumption that the transmission is constant in the patch becomes less appropriate. If the patch size is too large, halos near depth edges may become stronger (Fig. 10c).
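The first half of this trade-off can be checked numerically: a larger window contains the smaller one, so the dark channel is pointwise non-increasing in the patch size. Random data stands in for a real image here (an assumption), and the check says nothing about the halo side of the trade-off.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, patch):
    """Eq. (5) with edge padding (an assumption) and an odd patch size."""
    r = patch // 2
    per_pixel_min = np.pad(img.min(axis=2), r, mode="edge")
    return sliding_window_view(per_pixel_min, (patch, patch)).min(axis=(-2, -1))

rng = np.random.default_rng(0)
img = rng.random((60, 90, 3))   # stand-in for a haze-free image (assumption)

# Nested windows: each pixel's dark value can only drop as the patch grows.
means = {p: dark_channel(img, p).mean() for p in (3, 7, 15)}
```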

Fig. 11 shows the haze removal results using different patch sizes. The image sizes are 600 × 400. In Fig. 11b, the patch size is 3 × 3. The colors of some grayish surfaces look oversaturated (see the buildings in the first row and the subimages in the second and the third rows). In Figs. 11c and 11d, the patch sizes are 15 × 15 and 30 × 30, respectively. The results appear more natural than those in Fig. 11b. This shows that our method works well for sufficiently large patch sizes. The soft matting technique is able to reduce the artifacts introduced by large patches. We also notice that the images in Fig. 11d seem to be slightly hazier than those in Fig. 11c (typically in distant regions), but the differences are small. In the remainder of this paper, we use a patch size of 15 × 15.

5 EXPERIMENTAL RESULTS

In our experiments, we compute the minimum filter by van Herk's fast algorithm [20], whose complexity is linear in the image size. In the soft matting step, we use the Preconditioned Conjugate Gradient (PCG) algorithm as our solver. It takes about 10-20 seconds to process a 600 × 400 image on a PC with a 3.0 GHz Intel Pentium 4 processor.
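The soft matting step amounts to building L from (16) and solving (17) with PCG. The sketch below is a minimal dense NumPy version and relies on several assumptions: 3 × 3 windows; ε = 10⁻⁴ (the text does not give ε); a Jacobi (diagonal) preconditioner (the text does not name the preconditioner); and tiny images, since L is stored densely. It also omits the bilateral filter. A practical implementation would store L as a sparse matrix.

```python
import numpy as np

def matting_laplacian(img, eps=1e-4, r=1):
    """Dense matting Laplacian of Eq. (16) with (2r+1) x (2r+1) windows.

    Dense storage is O(N^2) in memory, so this is only for tiny images.
    """
    h, w, _ = img.shape
    n, size = h * w, (2 * r + 1) ** 2
    index = np.arange(n).reshape(h, w)
    L = np.zeros((n, n))
    for y in range(r, h - r):
        for x in range(r, w - r):
            idx = index[y - r:y + r + 1, x - r:x + r + 1].ravel()
            win = img[y - r:y + r + 1, x - r:x + r + 1].reshape(size, 3)
            d = win - win.mean(axis=0)                       # I_i - mu_k
            cov = d.T @ d / size                             # Sigma_k
            inv = np.linalg.inv(cov + (eps / size) * np.eye(3))
            L[np.ix_(idx, idx)] += np.eye(size) - (1.0 + d @ inv @ d.T) / size
    return L

def pcg(M, b, tol=1e-8, max_iter=5000):
    """Conjugate gradient with a Jacobi preconditioner; M must be symmetric positive definite."""
    m_inv = 1.0 / np.diag(M)
    x = np.zeros_like(b)
    res = b - M @ x
    z = m_inv * res
    p = z.copy()
    rz = res @ z
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.linalg.norm(res) <= tol * b_norm:
            break
        Mp = M @ p
        alpha = rz / (p @ Mp)
        x += alpha * p
        res -= alpha * Mp
        z = m_inv * res
        rz_new = res @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x

def refine_transmission(img, t_coarse, lam=1e-4, eps=1e-4):
    """Solve (L + lam U) t = lam t_coarse, i.e. Eq. (17), for an H x W map."""
    h, w = t_coarse.shape
    M = matting_laplacian(img, eps) + lam * np.eye(h * w)
    return pcg(M, lam * t_coarse.ravel()).reshape(h, w)
```

Because every window block of L is positive semidefinite and λ > 0, the system matrix is symmetric positive definite, which is what conjugate gradient requires; the rows of L also sum to zero, so a constant map is left unpenalized by the smoothness term.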

Figs. 1 and 12 show the recovered images and the depth maps. The depth maps are computed according to (2) and are up to an unknown scaling parameter β. The atmospheric lights in these images are automatically estimated using the method described in Section 4.3 (indicated by the red rectangles in Fig. 12). As can be seen, our approach can unveil the details and recover vivid colors even in heavily

Fig. 10. (a) A 600 × 400 hazy image and the recovered scene radiance using (b) 3 × 3 and (c) 15 × 15 patches, respectively (without soft matting). The recovered scene radiance is oversaturated for a small patch size, while it contains apparent halos for a large patch size.
