1262

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 20, NO. 5, MAY 2011

A Linear Programming Approach for Optimal

Contrast-Tone Mapping

Xiaolin Wu, Fellow, IEEE

Abstract—This paper proposes a novel algorithmic approach of

image enhancement via optimal contrast-tone mapping. In a fun-

damental departure from the current practice of histogram equal-

ization for contrast enhancement, the proposed approach maxi-

mizes expected contrast gain subject to an upper limit on tone dis-

tortion and optionally to other constraints that suppress artifacts.

The underlying contrast-tone optimization problem can be solved

efficiently by linear programming. This new constrained optimiza-

tion approach for image enhancement is general, and the user can

add and fine tune the constraints to achieve desired visual effects.

Experimental results demonstrate clearly superior performance of

the new approach over histogram equalization and its variants.

Index Terms—Contrast enhancement, dynamic range, his-

togram equalization, linear programming, tone reproduction.

I. INTRODUCTION

I N MOST image and video applications it is human viewers

that make the ultimate judgement of visual quality. They

typically associate high image contrast with good image quality.

Indeed, a noticeable progress in image display and generation

(both acquisition and synthetic rendering) technologies is the

increase of dynamic range and associated image enhancement

techniques [1].

The contrast of a raw image can be far less than ideal, due to

various causes such as poor illumination conditions, low quality

inexpensive imaging sensors, user operation errors, media de-

terioration (e.g., old faded prints and films), etc. For improved

human interpretation of image semantics and higher perceptual

quality, contrast enhancement is often performed and it has been

an active research topic since early days of digital image pro-

cessing, consumer electronics and computer vision.

Contrast enhancement

techniques can be classified into

two approaches: context-sensitive (point-wise operators) and

context-free (point operators). In context-sensitive approach

the contrast is defined in terms of the rate of change in intensity

between neighboring pixels. The contrast is increased by di-

rectly altering the local waveform on a pixel by pixel basis. For

Manuscript received April 05, 2010; revised July 21, 2010; accepted

September 19, 2010. Date of publication November 15, 2010; date of current

version April 15, 2011. This work was supported by the Natural Sciences and

Engineering Research Council of Canada, and by Natural Science Foundation

of China Grant 60932006 and the 111 Project B07022. The associate editor

coordinating the review of this manuscript and approving it for publication was

Dr. Xuelong Li.

The author

is with Department of Electrical and Computer Engi-

neering, McMaster University, Hamilton, ON L8S 4K1, Canada (e-mail:

xwu@ece.mcmaster.ca).

Color versions of one or more of the figures in this paper are available online

at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TIP.2010.2092438

instance, edge enhancement and high-boost filtering belong to

the context-sensitive approach. Although intuitively appealing,

the context-sensitive techniques are prone to artifacts such as

ringing and magnified noises, and they cannot preserve the rank

consistency of the altered intensity levels. The context-free

contrast enhancement approach, on the other hand, does not

adjust the local waveform on a pixel by pixel basis. Instead, the

class of context-free contrast enhancement techniques adopt a

statistical approach. They manipulate the histogram of the input

image to separate the gray levels of higher probability further

apart from the neighboring gray levels. In other words, the

context-free techniques aim to increase the average difference

between any two altered input gray levels. Compared with its

context-sensitive counterpart, the context-free approach does

not suffer from the ringing artifacts and it can preserve the

relative ordering of altered gray levels.

Despite more than half a century of research on contrast en-

hancement, most published techniques are largely ad hoc. Due

to the lack of a rigorous analytical approach to contrast en-

hancement, histogram equalization seems to be a folklore syn-

onym for contrast enhancement in the literature and in textbooks

of image processing and computer vision. The justification of

histogram equalization as a contrast enhancement technique is

heuristic, catering to an intuition. Low contrast corresponds to a

biased histogram and, thus, can be rectified by reallocating un-

derused dynamic range of the output device to more probable

pixel values. Although this intuition is backed up by empirical

observations in many cases, the relationship between histogram

and contrast has not been precisely quantified.

No mathematical basis exists for the uniformity or near

uniformity of the processed histogram to be an objective of

contrast enhancement in general sense. On the contrary, his-

togram equalization can be detrimental to image interpretation

if carried out mechanically without care. In lack of proper

constraints histogram equalization can over shoot the gradient

amplitude in some narrow intensity range(s) and flatten subtle

smooth shades in other ranges. It can bring unacceptable distor-

tions to image statistics such as average intensity, energy, and

covariances, generating unnatural and incoherent 2-D wave-

forms. To alleviate these shortcomings, a number of different

techniques were proposed to modify the histogram equalization

algorithm [2]–[7]. This line of investigations was initiated by

Pisano et al. in their work of contrast-limited adaptive histogram

equalization (CLAHE) [8]. Somewhat ironically, these authors

had to limit contrast while pursuing contrast enhancement.

Recently, Arici et al. proposed to generate an intermediate

histogram in between the original input histogram and the

uniform histogram and then performs histogram equalization

1057-7149/$26.00 © 2010 IEEE

�

WU: A LINEAR PROGRAMMING APPROACH FOR OPTIMAL CONTRAST-TONE MAPPING

1263

of

. The in-between histogram is computed by minimizing a

. The authors showed

weighted distance

that undesirable side effects of histogramequalization can be

. This

suppressed via choosing the Lagrangian multiplier

latest paper also gave a good synopses of existing contrast

enhancement techniques. We refer the reader to [9] for a survey

of previous works, instead of reparaphrasing them here.

Compared with the aforementioned works on histogram-

based contrast enhancement techniques, this paper presents a

more rigorous study of the problem. We reexamine contrast en-

hancement in a new perspective of optimal allocation of output

dynamic range constrained by tune continuity. This brings about

a more principled approach of image enhancement. Our critique

of the current practice is that directly manipulating histograms

for contrast enhancement was ill conceived. The histogram is an

unwieldy, obscure proxy for contrast. The wide use of histogram

equalization as a means of context-free contrast enhancement is

apparently due to the lack of a proper mathematical formulation

of the problem. To fill this void we define an expected (con-

text-free) contrast gain of a transfer function. This relative mea-

sure of contrast takes on its base value of one if the input image

is left unchanged (i.e., identity transfer function), and increases

if a skewed histogram is made more uniform. However, percep-

tual image quality is more than the single aspect of high contrast.

If the output dynamic range is less than that of the human visual

system, which is the case for most display and printing tech-

nologies, context-free contrast enhancement will inevitably dis-

tort subtle tones. To balance between tone subtlety and contrast

enhancement we introduce a counter measure of tone distortion.

Based upon the said measures of contrast gain and tone distor-

tion, we formulate the problem of optimal contrast-tone map-

ping (OCTM) that aims to achieve high contrast and subtle tone

reproduction at the same time, and propose a linear program-

ming strategy to solve the underlying constrained optimization

problem. In the OCTM formulation, the optimal transfer func-

tion for images of uniform histogram is the identify function.

Although an image of uniform histogram cannot be further en-

hanced, histogram equalization does not produce OCTM so-

lutions in general for arbitrary input histograms. Instead, the

proposed linear programming-based OCTM algorithm can op-

timize the transfer function such that sharp contrast and subtle

tone are best balanced according to application requirements

and user preferences. The OCTM technique offers a greater

and more precise control of visual effects than existing tech-

niques of contrast enhancement. Common side effects of con-

trast enhancement, such as contours, shift of average intensity,

over exaggerated gradient, etc., can be effectively suppressed

by imposing appropriate constraints in the linear programming

framework.

In addition, in the OCTM framework,

input gray levels can

be mapped to an arbitrary number L of output gray levels, al-

lowing L to be equal, less or greater than . The OCTM tech-

nique is, therefore, suited to output conventional images on high

dynamic range displays or high dynamic range images on con-

ventional displays, with perceptual quality optimized for de-

vice characteristics and image contents. As such, OCTM can be

useful tool in high dynamic range imaging. Moreover, OCTM

can be unified with Gamma correction.

Analogously to global and local histogram equalization,

OCTM can be performed based upon either global or local sta-

tistics. However, in order to make our technical developments

in what follows concrete and focused, we will only discuss the

problem of contrast enhancement over an entire image instead

of adapting to local statistics of different subimages. All the

results and observations can be readily extended to locally

adaptive contrast enhancement.

The remainder of the paper is organized as follows. In the

next section, we introduce some new definitions related to the

intuitive notions of contrast and tone, and propose the OCTM

approach of image enhancement. In Section III, we develop

a linear programming algorithm to solve the OCTM problem.

In Section IV, we discuss how to fine tune output images ac-

cording to application requirements or users’ preferences within

the proposed linear programming framework. Experimental re-

sults are reported in Section V, and they demonstrate the ver-

satility and superior visual quality of the new contrast enhance-

ment technique.

II. CONTRAST AND TONE

Consider a gray scale image of bits with a histogram of

.

nonzero entries,

. We

be the probability of gray level

Let

define the expected context-free contrast of

by

By the definition, the maximum contrast

is achieved by a binary black-and-white image

(1)

and it

; the minimum contrast

constant. As long as the histogram of

i.e.,

the intensity distribution

when the image is a

is full without holes,

regardless

. Likewise, if

, then

.

Contrast enhancement is to increase the difference between

two adjacent gray levels and it is achieved by a remapping of

input gray levels to output gray levels. Such a remapping is also

gray levels by

necessary when reproducing a digital image of

a device of L gray levels,

L. This process is an integer-to-

integer transfer function

L

(2)

In order not to violate physical and psychovisual common sense,

should be monotonically nondecreasing

the transfer function

does not reverse the order of intensities.1 In other

such that

and, hence, any

words, we must have

transfer function

has the form

if

L

L

(3)

1This restriction may be relaxed in locally adaptive contrast enhancement.

But in each locality the monotonicity should still be imposed.

�

1264

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 20, NO. 5, MAY 2011

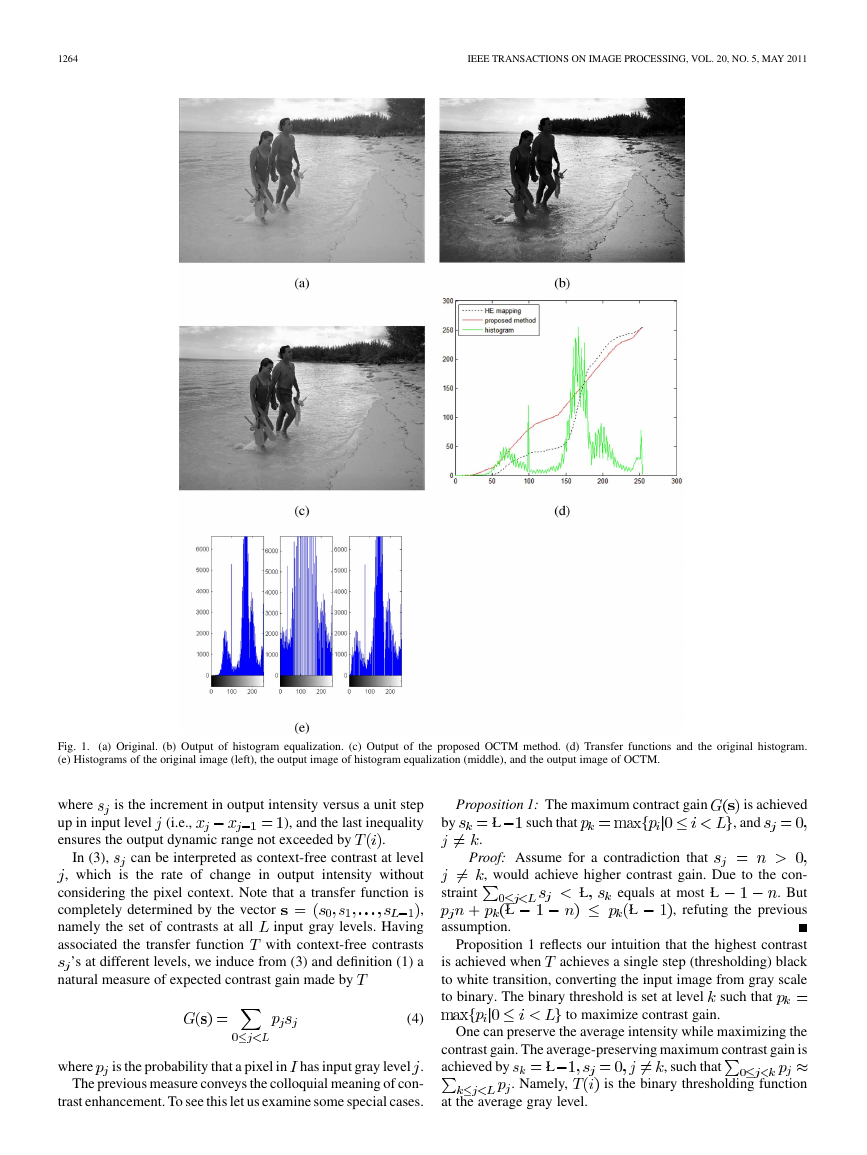

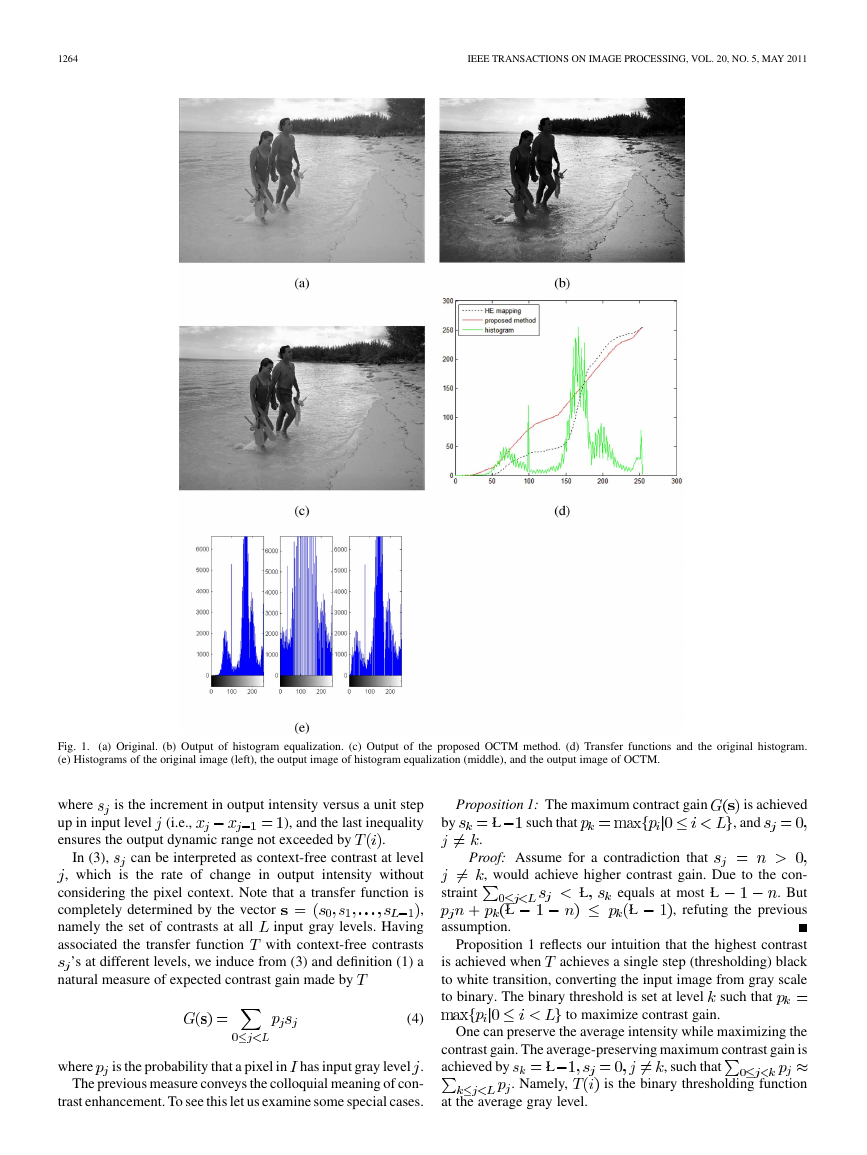

Fig. 1.

(e) Histograms of the original image (left), the output image of histogram equalization (middle), and the output image of OCTM.

(a) Original. (b) Output of histogram equalization. (c) Output of the proposed OCTM method. (d) Transfer functions and the original histogram.

Proposition 1: The maximum contract gain

is achieved

by

L

such that

, and

.

.

where

up in input level

ensures the output dynamic range not exceeded by

is the increment in output intensity versus a unit step

), and the last inequality

(i.e.,

can be interpreted as context-free contrast at level

In (3),

, which is the rate of change in output intensity without

considering the pixel context. Note that a transfer function is

,

completely determined by the vector

input gray levels. Having

namely the set of contrasts at all

with context-free contrasts

associated the transfer function

’s at different levels, we induce from (3) and definition (1) a

natural measure of expected contrast gain made by

(4)

where

is the probability that a pixel in has input gray level

.

The previous measure conveys the colloquial meaning of con-

trast enhancement. To see this let us examine some special cases.

Proof: Assume for a contradiction that

, would achieve higher contrast gain. Due to the con-

. But

, refuting the previous

equals at most L

L

L

straint

L

assumption.

Proposition 1 reflects our intuition that the highest contrast

achieves a single step (thresholding) black

is achieved when

to white transition, converting the input image from gray scale

to binary. The binary threshold is set at level

such that

to maximize contrast gain.

One can preserve the average intensity while maximizing the

contrast gain. The average-preserving maximum contrast gain is

achieved by

, such that

L

. Namely,

is the binary thresholding function

at the average gray level.

�

WU: A LINEAR PROGRAMMING APPROACH FOR OPTIMAL CONTRAST-TONE MAPPING

1265

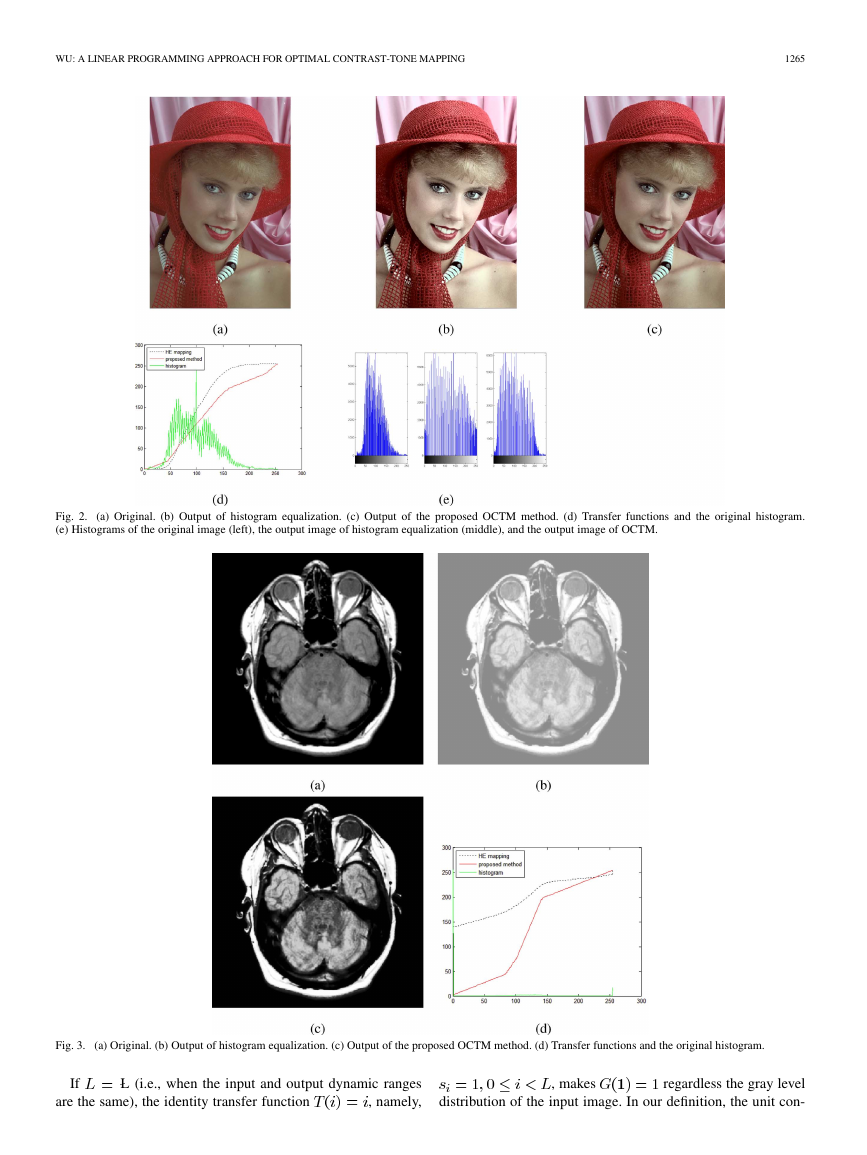

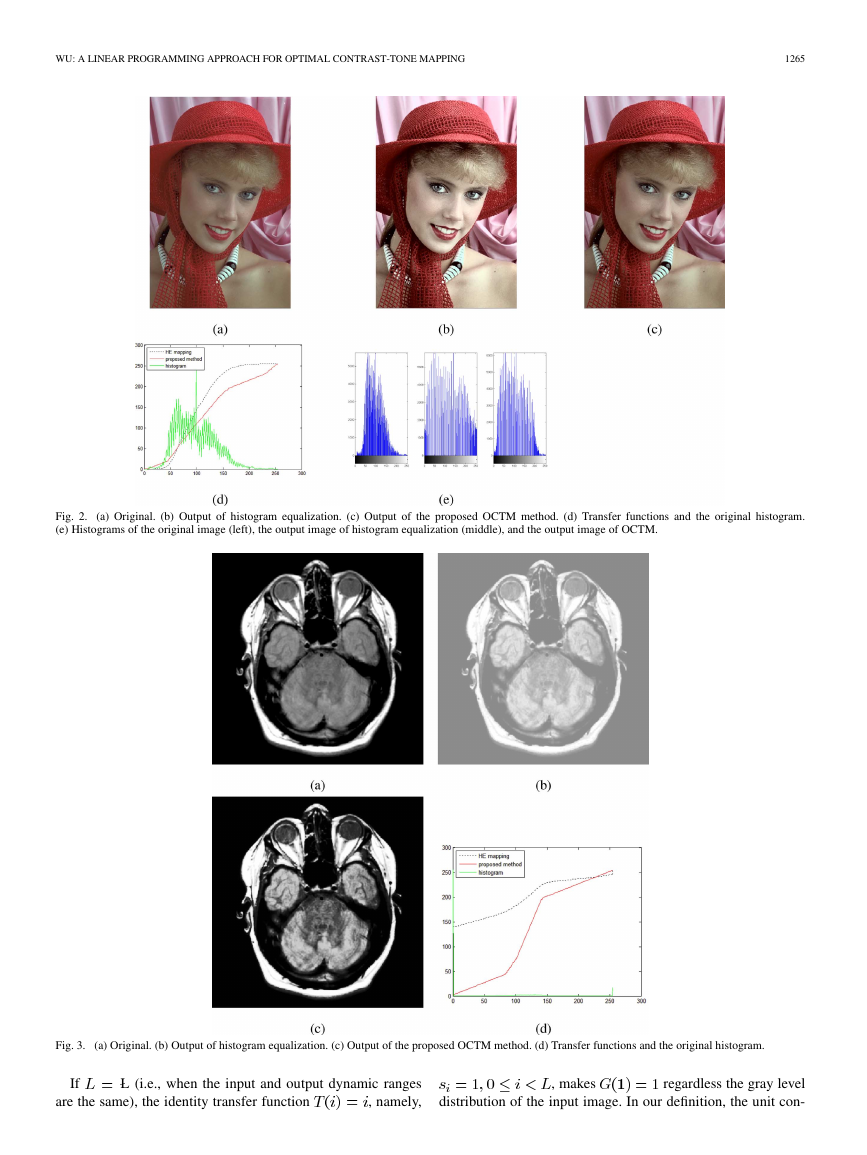

Fig. 2.

(e) Histograms of the original image (left), the output image of histogram equalization (middle), and the output image of OCTM.

(a) Original. (b) Output of histogram equalization. (c) Output of the proposed OCTM method. (d) Transfer functions and the original histogram.

Fig. 3.

(a) Original. (b) Output of histogram equalization. (c) Output of the proposed OCTM method. (d) Transfer functions and the original histogram.

If

are the same), the identity transfer function

L (i.e., when the input and output dynamic ranges

, namely,

regardless the gray level

distribution of the input image. In our definition, the unit con-

, makes

�

1266

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 20, NO. 5, MAY 2011

(a) Original image before Gamma correction. (b) After Gamma correction. (c) Gamma correction followed by histogram equalization. (d) Joint Gamma

Fig. 4.

correction and contrast-tone optimization by the proposed OCTM method.

Fig. 5. Comparison of different methods on image Pollen. (a) Original image. (b) HE. (c) CLAHE. (d) OCTM.

trast gain means a neutral contrast level without any enhance-

ment. The notion of neutral contrast can be generalized to the

the tone scale. In general,

cases when

the transfer function

L. We call

L

L

(5)

or equivalently

neutral contrast

.

, corresponds to the state of

High contrast by itself does not equate high image quality.

Another important aspect of image fidelity is the tone conti-

would

nuity. A single-minded approach of maximizing

likely produce over-exaggerated, unnatural visual effects, as re-

�

WU: A LINEAR PROGRAMMING APPROACH FOR OPTIMAL CONTRAST-TONE MAPPING

1267

Fig. 6. Comparison of different methods on image Rocks. (a) Original image. (b) HE. (c) CLAHE. (d) OCTM.

degenerates a con-

vealed by Proposition 1. The resulting

tinuous-tone image to a binary image. This maximizes the con-

trast of a particular gray level but completely ignores accurate

tone reproduction. We begin our discussions on the tradeoff be-

tween contrast and tone by stating the following simple and yet

informative observation.

Proposition 2: The

is

achieved if and only if

, or

.

As stated previously, the simple linear transfer function, i.e.,

doing nothing in the traditional sense of contrast enhancement,

of

actually maximizes the minimum of context-free contrasts

, and the neutral contrast gain largest

different levels

is possible when satisfying this maxmin criterion.

In terms of visual effects, the reproduction of continuous

tones demands the transfer function to meet the maxmin crite-

rion of proposition 2. The collapse of distinct gray levels into

one tends to create contours or banding artifacts. In this con-

sideration, we define the tone distortion of a transfer function

by

(6)

In the definition we account for the fact that the transfer function

is not a one-to-one mapping in general. The smaller the

tone distortion

.

It is immediate from the definition that the smallest achievable

tone distortion is

the smoother the tone reproduced by

However, since the dynamic range Lof the output device is fi-

nite, the two visual quality criteria of high contrast and tone

continuity are in mutual conflict. Therefore, the mitigation of

such an inherent conflict is a critical issue in designing contrast

enhancement algorithms, which is seemingly overlooked in the

existing literature on the subject.

Following the previous discussions, the problem of contrast

enhancement manifests itself as the following optimization

problem

(7)

The OCTM objective function (7) aims for sharpness of high

frequency details and tone subtlety of smooth shades at the same

time, using the Lagrangian multiplier

to regulate the relative

importance of the two mutually conflicting fidelity metrics.

Interestingly, the OCTM solution of (7) is

if the input

is uniform. It is easy to verify that

histogram of an image

for all

but

when

for

. In other words, no other transfer

functions can make any contrast gain over the identity transfer

), and at the same time the identity

function

transfer function achieves the minimum tone distortion

(or

. This concludes that an image of uniform his-

togram cannot be further enhanced in OCTM, lending a support

for histogram equalization as a contrast enhancement technique.

For a general input histogram, however, the transfer function of

histogram equalization is not necessarily the OCTM solution,

as we will appreciate in the following sections.

�

1268

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 20, NO. 5, MAY 2011

Fig. 7. Comparison of different methods on image Tree. (a) Original image. (b) HE. (c) CLAHE. (d) OCTM.

III. CONTRAST-TONE OPTIMIZATION BY

LINEAR PROGRAMMING

control of undesired side effects of contrast enhancement is re-

alized by the use of constraints when maximizing contrast gain

and tone distortion

To motivate the development of an algorithm for solving (7),

it is useful to view contrast enhancement as an optimal resource

allocation problem with constraint. The resource is the output dy-

namic range and the constraint is tone distortion. The achievable

contrast gain

are physically con-

fined by the output dynamic range L of the output device. In (4)

the optimization variables

represent an alloca-

tion of L available output intensity levels, each competing for a

larger piece of dynamic range. While contrast enhancement nec-

essarily invokes a competition for dynamic range (an insufficient

resource), a highly skewed allocation of L output levels to input

levels can deprive some input gray levels of necessary represen-

tations, incurring tone distortion. This causes unwanted side ef-

fects, such as flattened subtle shades, unnatural contour bands,

shifted average intensity, and etc. Such artifacts were noticed by

other researchers as drawbacks of the original histogram equal-

ization algorithm, and they proposed a number of ad hoc. tech-

niques to alleviate these artifacts by reshaping the original his-

togram prior to the equalization process. In OCTM, however, the

.

Since the tone distortion function

is not linear in , di-

rectly solving (7) is difficult. Instead, we rewrite (7) as the fol-

lowing constrained optimization problem:

subject to

L

(8)

In (8), constraint (a) is to confine the output intensity level to the

available dynamic range; constraints (b) ensure that the transfer

function

be monotonically nondecreasing; constraints (c)

specify the maximum tone distortion allowed, where

is an

upper bound

. The objective function and all the con-

straints are linear in . The choice of

depends upon user’s re-

�

WU: A LINEAR PROGRAMMING APPROACH FOR OPTIMAL CONTRAST-TONE MAPPING

1269

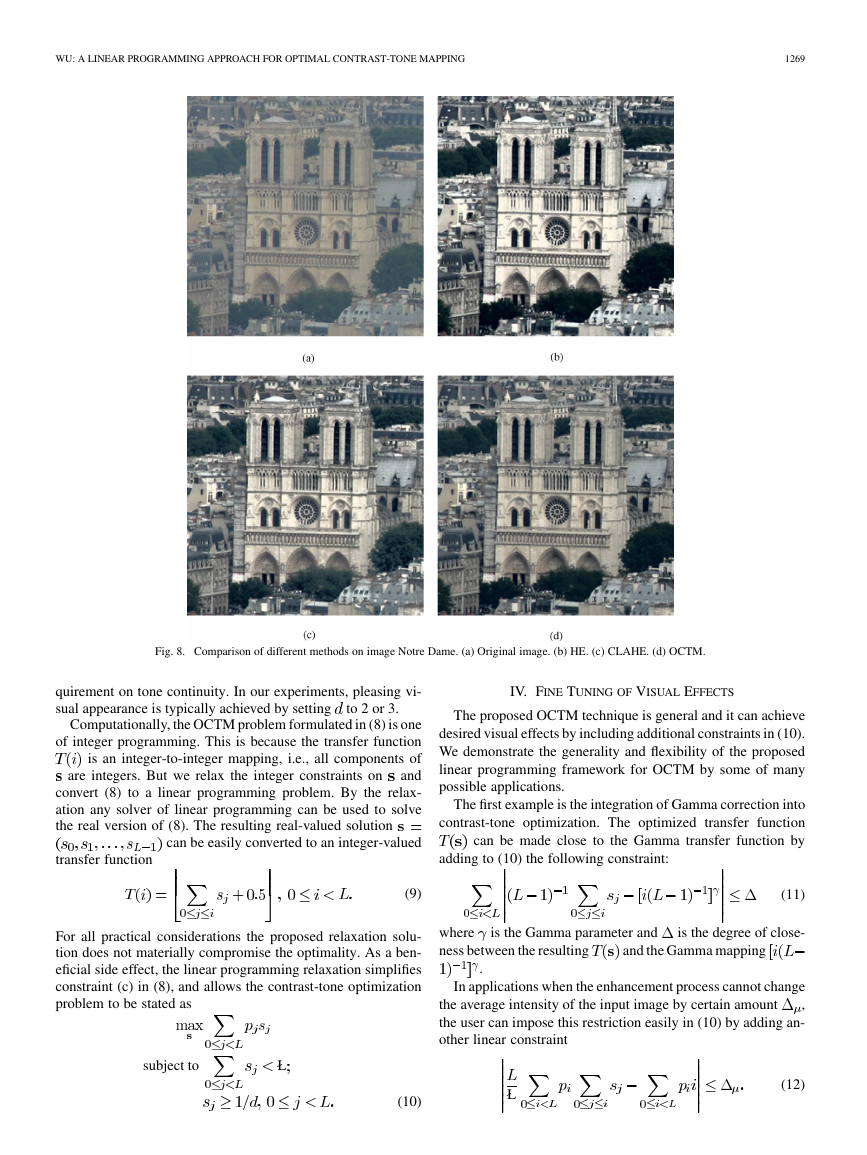

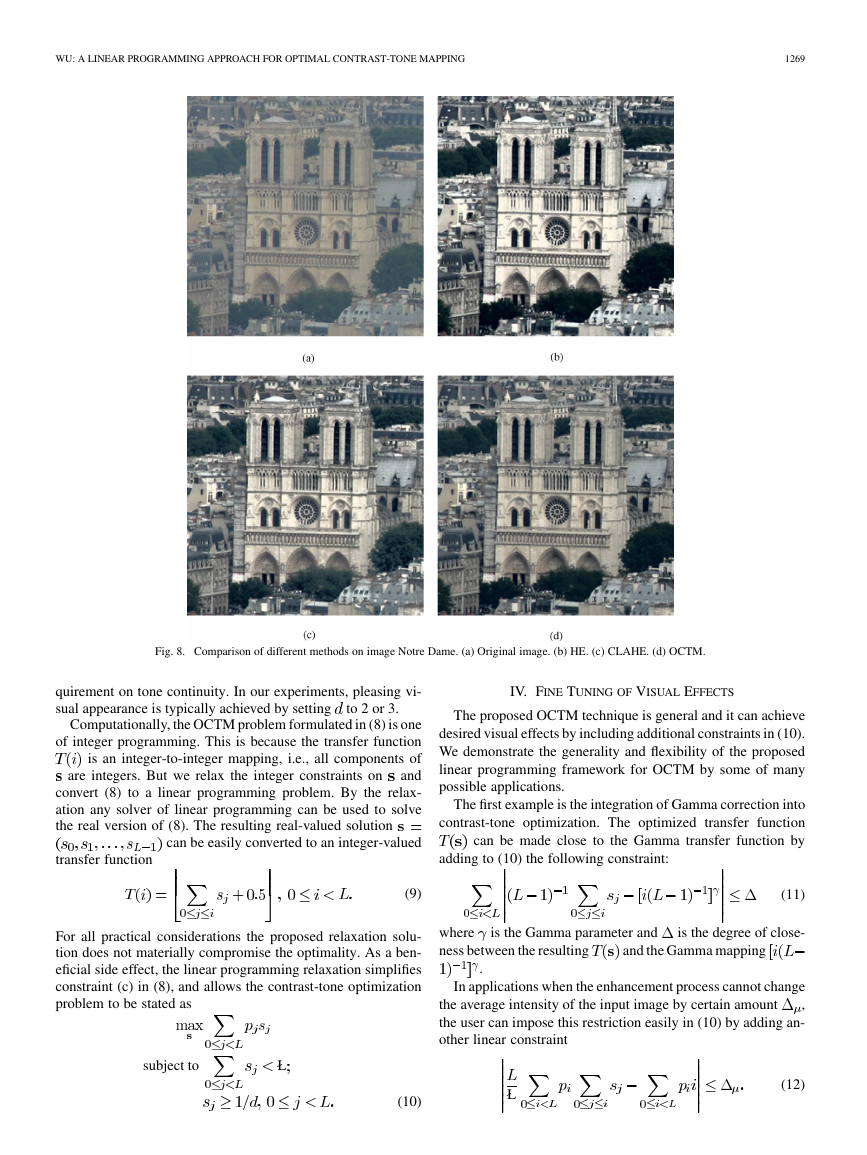

Fig. 8. Comparison of different methods on image Notre Dame. (a) Original image. (b) HE. (c) CLAHE. (d) OCTM.

quirement on tone continuity. In our experiments, pleasing vi-

sual appearance is typically achieved by setting

to 2 or 3.

Computationally, the OCTM problem formulated in (8) is one

of integer programming. This is because the transfer function

is an integer-to-integer mapping, i.e., all components of

and

convert (8) to a linear programming problem. By the relax-

ation any solver of linear programming can be used to solve

the real version of (8). The resulting real-valued solution

are integers. But we relax the integer constraints on

can be easily converted to an integer-valued

transfer function

IV. FINE TUNING OF VISUAL EFFECTS

The proposed OCTM technique is general and it can achieve

desired visual effects by including additional constraints in (10).

We demonstrate the generality and flexibility of the proposed

linear programming framework for OCTM by some of many

possible applications.

The first example is the integration of Gamma correction into

contrast-tone optimization. The optimized transfer function

can be made close to the Gamma transfer function by

adding to (10) the following constraint:

(9)

For all practical considerations the proposed relaxation solu-

tion does not materially compromise the optimality. As a ben-

eficial side effect, the linear programming relaxation simplifies

constraint (c) in (8), and allows the contrast-tone optimization

problem to be stated as

is the Gamma parameter and

where

ness between the resulting

(11)

is the degree of close-

and the Gamma mapping

.

In applications when the enhancement process cannot change

the average intensity of the input image by certain amount

,

the user can impose this restriction easily in (10) by adding an-

other linear constraint

subject to

L

(10)

L

(12)

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc