1 Introduction

1.1 Motivation

1.2 Eye Gaze and Human Communication

1.3 Eye Gaze for Computer Input

1.4 Methods and Approach

1.5 Thesis Outline

1.6 Contributions

2 Overview and Related Work

2.1 Definition of Eye Tracking

2.2 History of Eye Tracking

2.3 Application Domains for Eye Tracking

2.4 Technological Basics of Eye Tracking

2.4.1 Methods of Eye Tracking

2.4.2 Video-Based Eye Tracking

2.4.3 The Corneal Reflection Method

2.5 Available Video-Based Eye Tracker Systems

2.5.1 Types of Video-Based Eye Trackers

2.5.2 Low-Cost Open Source Eye Trackers for HCI

2.5.3 Commercial Eye Trackers for HCI

2.5.4 Criteria for the Quality of an Eye Tracker

2.6 The ERICA Eye Tracker

2.6.1 Specifications

2.6.2 Geometry of the Experimental Setup

2.6.3 The ERICA-API

2.7 Related Work on Interaction by Gaze

2.8 Current Challenges

3 The Eye and its Movements

3.1 Anatomy and Movements of the Eye

3.2 Accuracy, Calibration and Anatomy

3.3 Statistics on Saccades and Fixations

3.3.1 The Data

3.3.2 Saccade Lengths

3.3.3 Saccade Speed

3.3.4 Fixation Times

3.3.5 Summary of Statistics

3.4 Speed and Accuracy

3.4.1 Eye Speed Models

3.4.2 Fitts’ Law

3.4.3 The Debate on Fitts’ Law for Eye Movements

3.4.4 The Screen Key Experiment

3.4.5 The Circle Experiment

3.4.6 Ballistic or Feedback-Controlled Saccades

3.4.7 Conclusions on Fitts’ Law for the Eyes

4 Eye Gaze as Pointing Device

4.1 Overview on Pointing Devices

4.1.1 Properties of Pointing Devices

4.1.2 Problems with Traditional Pointing Devices

4.1.3 Problems with Eye Gaze as Pointing Device

4.2 Related Work for Eye Gaze as Pointing Device

4.3 MAGIC Pointing with a Touch-Sensitive Mouse Device

4.4 User Studies with the Touch-Sensitive Mouse Device

4.4.1 First User Study – Testing the Concept

4.4.2 Second User Study – Learning and Hand-Eye Coordination

4.4.3 Third User Study – Raw and Fine Positioning

4.5 A Deeper Understanding of MAGIC Touch

4.6 Summary on the Results for Eye Gaze as Pointing Device

4.7 Conclusions on Eye Gaze as Pointing Device

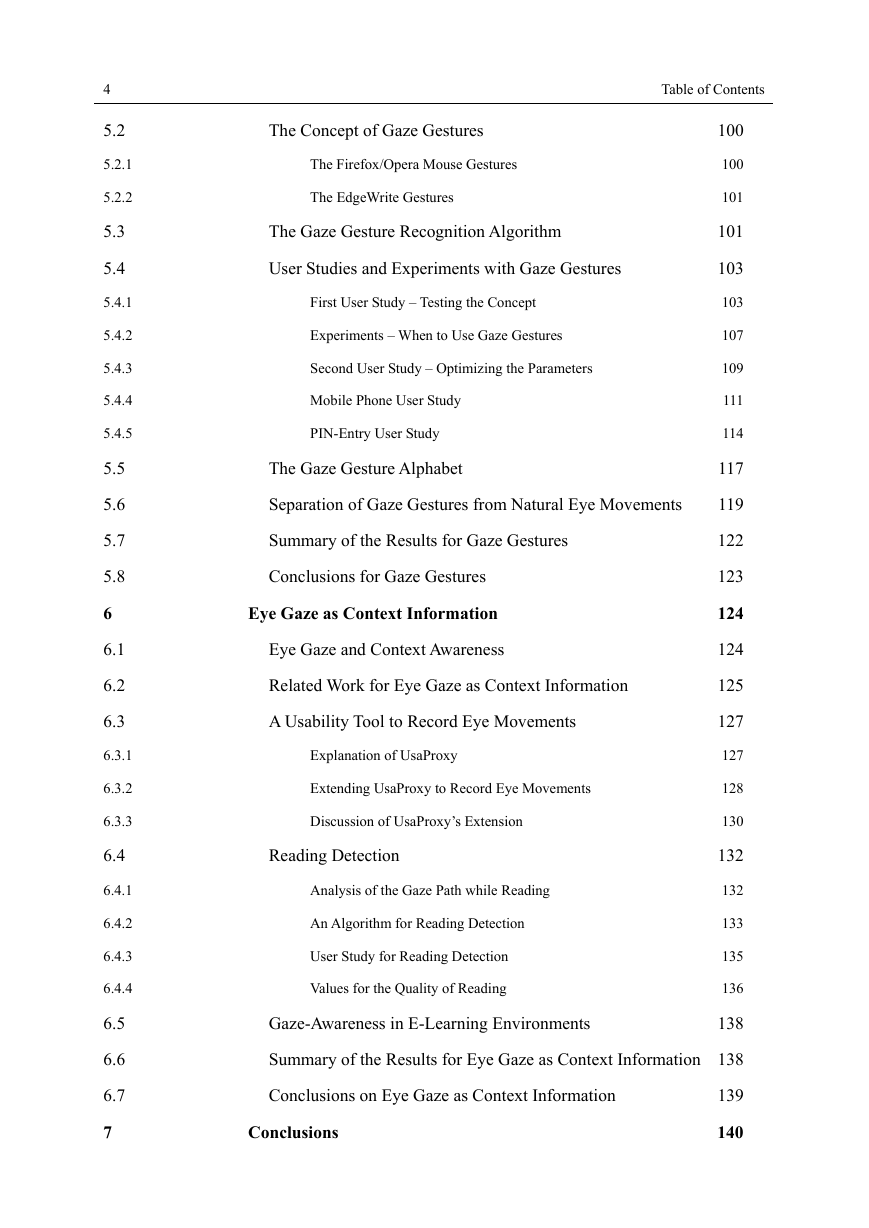

5 Gaze Gestures

5.1 Related Work on Gaze Gestures

5.2 The Concept of Gaze Gestures

5.2.1 The Firefox/Opera Mouse Gestures

5.2.2 The EdgeWrite Gestures

5.3 The Gaze Gesture Recognition Algorithm

5.4 User Studies and Experiments with Gaze Gestures

5.4.1 First User Study – Testing the Concept

5.4.2 Experiments – When to Use Gaze Gestures

5.4.3 Second User Study – Optimizing the Parameters

5.4.4 Mobile Phone User Study

5.4.5 PIN-Entry User Study

5.5 The Gaze Gesture Alphabet

5.6 Separation of Gaze Gestures from Natural Eye Movements

5.7 Summary of the Results for Gaze Gestures

5.8 Conclusions for Gaze Gestures

6 Eye Gaze as Context Information

6.1 Eye Gaze and Context Awareness

6.2 Related Work for Eye Gaze as Context Information

6.3 A Usability Tool to Record Eye Movements

6.3.1 Explanation of UsaProxy

6.3.2 Extending UsaProxy to Record Eye Movements

6.3.3 Discussion of UsaProxy’s Extension

6.4 Reading Detection

6.4.1 Analysis of the Gaze Path while Reading

6.4.2 An Algorithm for Reading Detection

6.4.3 User Study for Reading Detection

6.4.4 Values for the Quality of Reading

6.5 Gaze-Awareness in E-Learning Environments

6.6 Summary of the Results for Eye Gaze as Context Information

6.7 Conclusions on Eye Gaze as Context Information

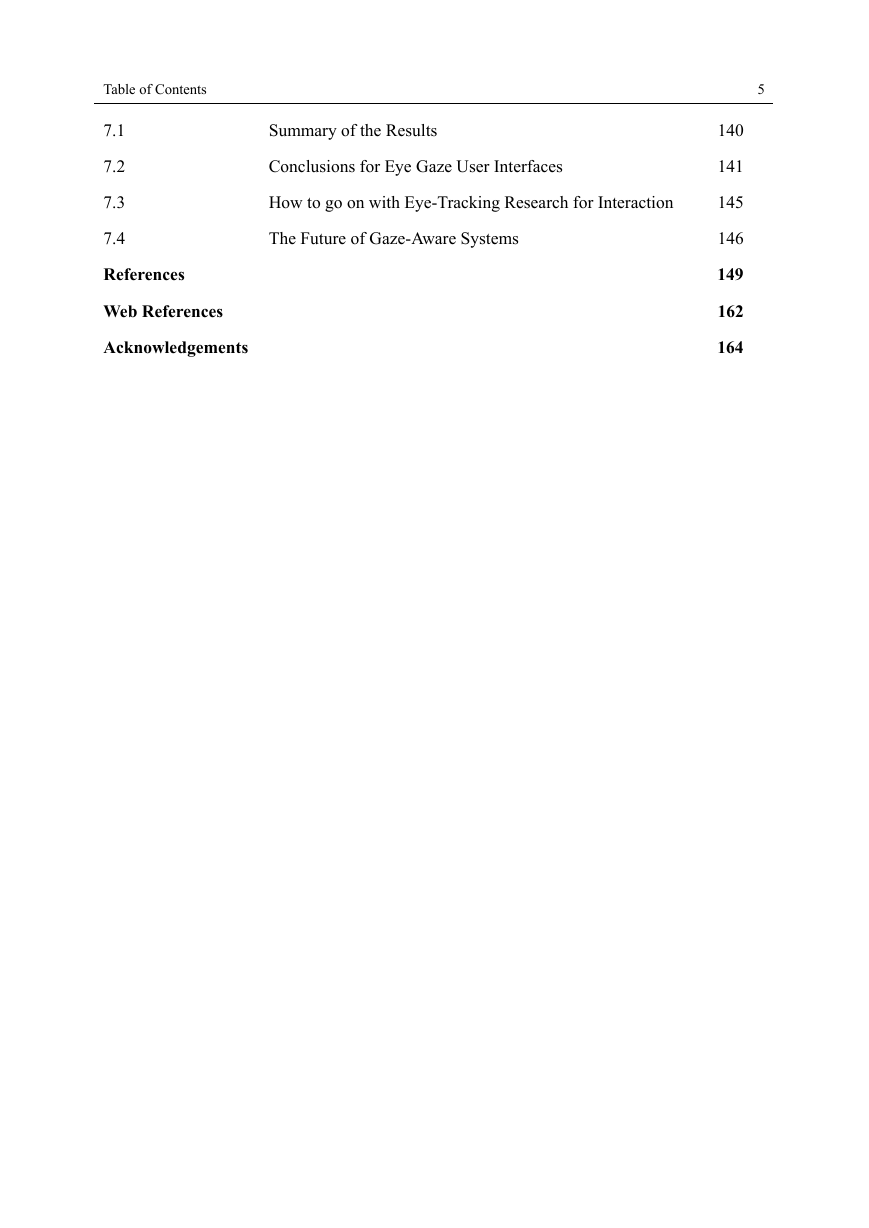

7 Conclusions

7.1 Summary of the Results

7.2 Conclusions for Eye Gaze User Interfaces

7.3 How to go on with Eye-Tracking Research for Interaction

7.4 The Future of Gaze-Aware Systems

References

Web References

Acknowledgements