i

Essentials of Stochastic Processes

Rick Durrett

Solutions manual for the 2nd Edition, December, 2011

Copyright 2011, All rights reserved.

Note: Due to the way the solutions manual was produced (including the

entire text but only printing the solutions) the page numbering is somewhat

strange.

�

ii

�

1.12. EXERCISES

1.12 Exercises

65

Understanding the definitions

1.1. A fair coin is tossed repeatedly with results Y0, Y1, Y2, . . . that are 0 or 1

with probability 1/2 each. For n ≥ 1 let Xn = Yn + Yn−1 be the number of 1’s

in the (n − 1)th and nth tosses. Is Xn a Markov chain?

Ans. No. We first argue this intuitively: when Xn = 1 the last two results may

be 0, 1 or 1, 0. In the first case we may jump only to 2 or 1, while in the second

case we may only jump to 0 or 1. Thus it is not enough to know just current

state. To get a formal contradiction we note that X1 = 2, X2 = 1 implies that

Y0 = Y1 = 1, Y2 = 0 so

P (X3 = 2|X2 = 1, X1 = 2) = 0 < P (X3 = 2|X2 = 1)

5ballE 1.2. Five white balls and five black balls are distributed in two urns in such a

way that each urn contains five balls. At each step we draw one ball from each

urn and exchange them. Let Xn be the number of white balls in the left urn at

time n. Compute the transition probability for Xn.

Ans.

0

0

1

1

0

1 1/25 8/25

4/25

2

3

4

5

0

0

0

0

0

0

0

16/25

12/25

9/25

2

0

0

0

3

0

0

0

9/25

12/25

16/25

4

0

0

0

1

5

0

0

0

0

0

4/25

8/25

1/25

dicemod6 1.3. We repeated roll two four sided dice with numbers 1, 2, 3, and 4 on them.

Let Yk be the sum on the kth roll, Sn = Y1 + ··· + Yn be the total of the first

n rolls, and Xn = Sn (mod 6). Find the transition probability for Xn.

Ans.

0

1

0 3/16 2/16

1 4/16 3/16

2 3/16 4/16

3 2/16 3/16

4 2/16 2/16

5 2/16 2/16

2

2/16

2/16

3/16

4/16

3/16

2/16

3

2/16

2/16

2/16

3/16

4/16

3/16

4

3/16

2/16

2/16

2/16

3/16

4/16

5

4/16

3/16

2/16

2/16

2/16

3/16

1.4. The 1990 census showed that 36% of the households in the District of

Columbia were homeowners while the remainder were renters. During the next

decade 6% of the homeowners became renters and 12% of the renters became

homeowners. What percentage were homeowners in 2000? in 2010?

Ans. 0.4152, 0.4604

1.5. Consider a gambler’s ruin chain with N = 4. That is, if 1 ≤ i ≤ 3,

p(i, i + 1) = 0.4, and p(i, i − 1) = 0.6, but the endpoints are absorbing states:

p(0, 0) = 1 and p(4, 4) = 1 Compute p3(1, 4) and p3(1, 0).

�

66

Ans.

(a) to go from 1 to 4 in three steps we must go 1,2,3,4 so p3(1, 4) =

(.4)3 = .064. (b) to go from 1 to 0 in three steps we may go 1,2,1,0 or 1,0,0,0

so p3(1, 0) = (.4)(.6)2 + .6 = .744

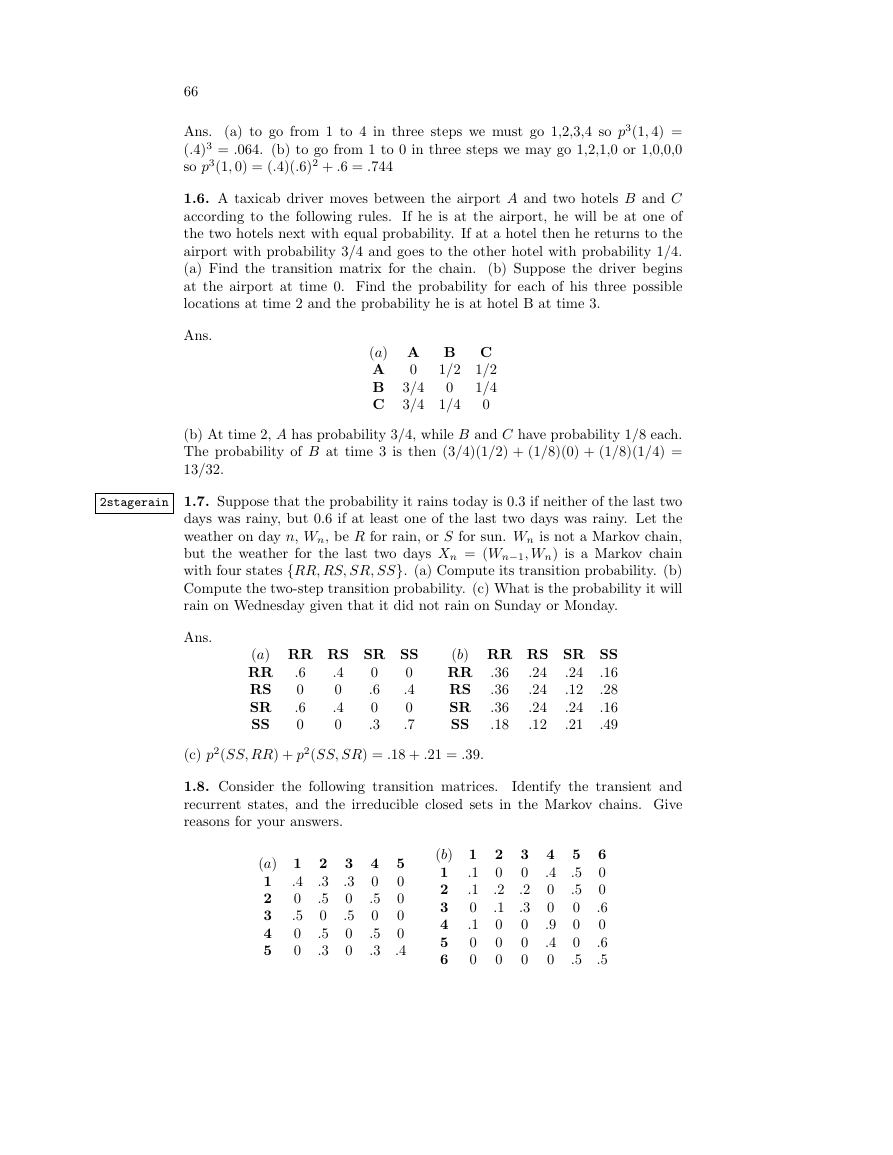

1.6. A taxicab driver moves between the airport A and two hotels B and C

according to the following rules. If he is at the airport, he will be at one of

the two hotels next with equal probability. If at a hotel then he returns to the

airport with probability 3/4 and goes to the other hotel with probability 1/4.

(a) Find the transition matrix for the chain.

(b) Suppose the driver begins

at the airport at time 0. Find the probability for each of his three possible

locations at time 2 and the probability he is at hotel B at time 3.

Ans.

(a) A B

1/2

A 0

0

B 3/4

C 3/4

1/4

C

1/2

1/4

0

(b) At time 2, A has probability 3/4, while B and C have probability 1/8 each.

The probability of B at time 3 is then (3/4)(1/2) + (1/8)(0) + (1/8)(1/4) =

13/32.

2stagerain 1.7. Suppose that the probability it rains today is 0.3 if neither of the last two

days was rainy, but 0.6 if at least one of the last two days was rainy. Let the

weather on day n, Wn, be R for rain, or S for sun. Wn is not a Markov chain,

but the weather for the last two days Xn = (Wn−1, Wn) is a Markov chain

with four states {RR, RS, SR, SS}. (a) Compute its transition probability. (b)

Compute the two-step transition probability. (c) What is the probability it will

rain on Wednesday given that it did not rain on Sunday or Monday.

Ans.

(a) RR RS SR SS

0

RR .6

.4

0

RS

SR .6

0

.7

0

SS

.4

0

.4

0

0

.6

0

.3

(b) RR RS SR SS

.16

RR .36

.28

.36

RS

SR .36

.16

.49

.18

SS

.24

.12

.24

.21

.24

.24

.24

.12

(c) p2(SS, RR) + p2(SS, SR) = .18 + .21 = .39.

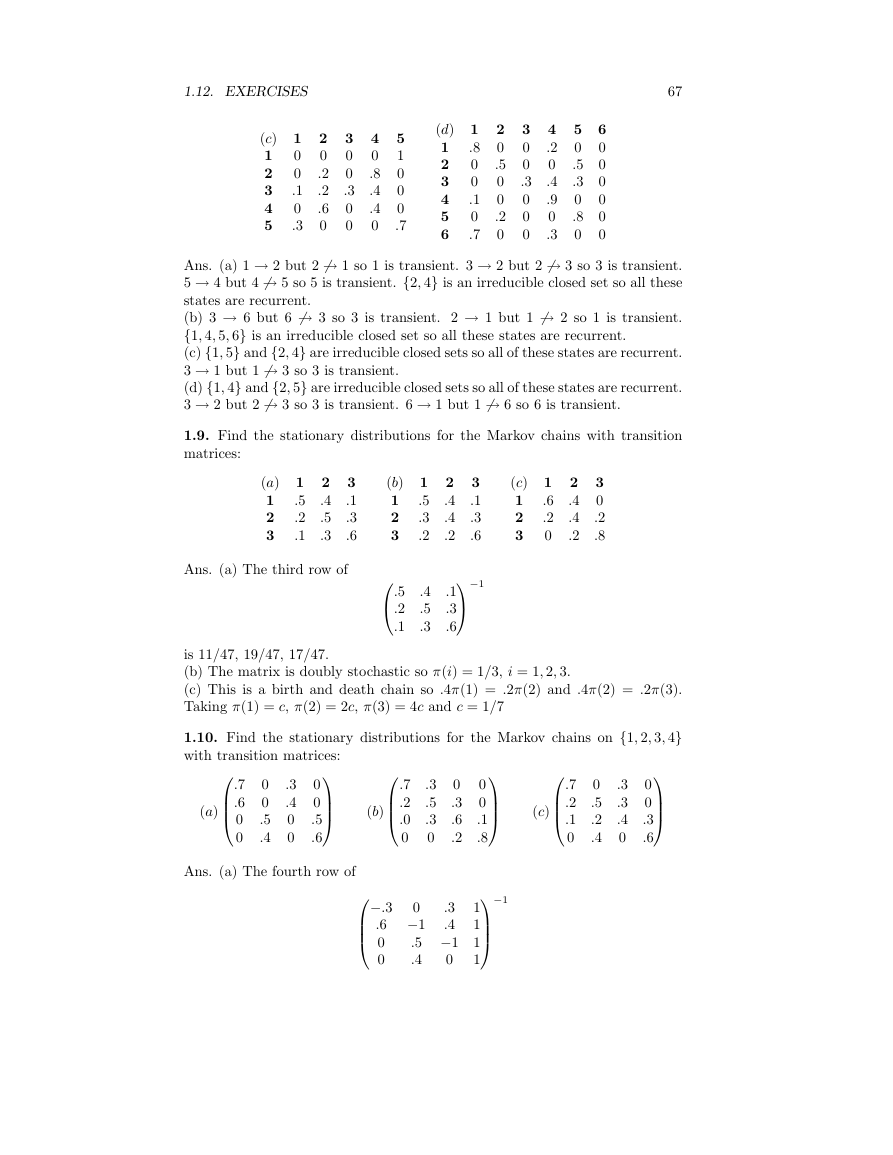

1.8. Consider the following transition matrices.

Identify the transient and

recurrent states, and the irreducible closed sets in the Markov chains. Give

reasons for your answers.

(a) 1

.4

1

0

2

.5

3

0

4

0

5

2

.3

.5

0

.5

.3

3

.3

0

.5

0

0

4

0

.5

0

.5

.3

5

0

0

0

0

.4

(b) 1

.1

1

.1

2

0

3

.1

4

0

5

0

6

2

0

.2

.1

0

0

0

3

0

.2

.3

0

0

0

4

.4

0

0

.9

.4

0

5

.5

.5

0

0

0

.5

6

0

0

.6

0

.6

.5

�

1.12. EXERCISES

67

(c) 1

0

1

0

2

.1

3

0

4

.3

5

2

0

.2

.2

.6

0

3

0

0

.3

0

0

4

0

.8

.4

.4

0

5

1

0

0

0

.7

(d) 1

.8

1

0

2

0

3

.1

4

0

5

.7

6

2

0

.5

0

0

.2

0

3

0

0

.3

0

0

0

4

.2

0

.4

.9

0

.3

5 6

0

0

0

.5

.3

0

0

0

0

.8

0

0

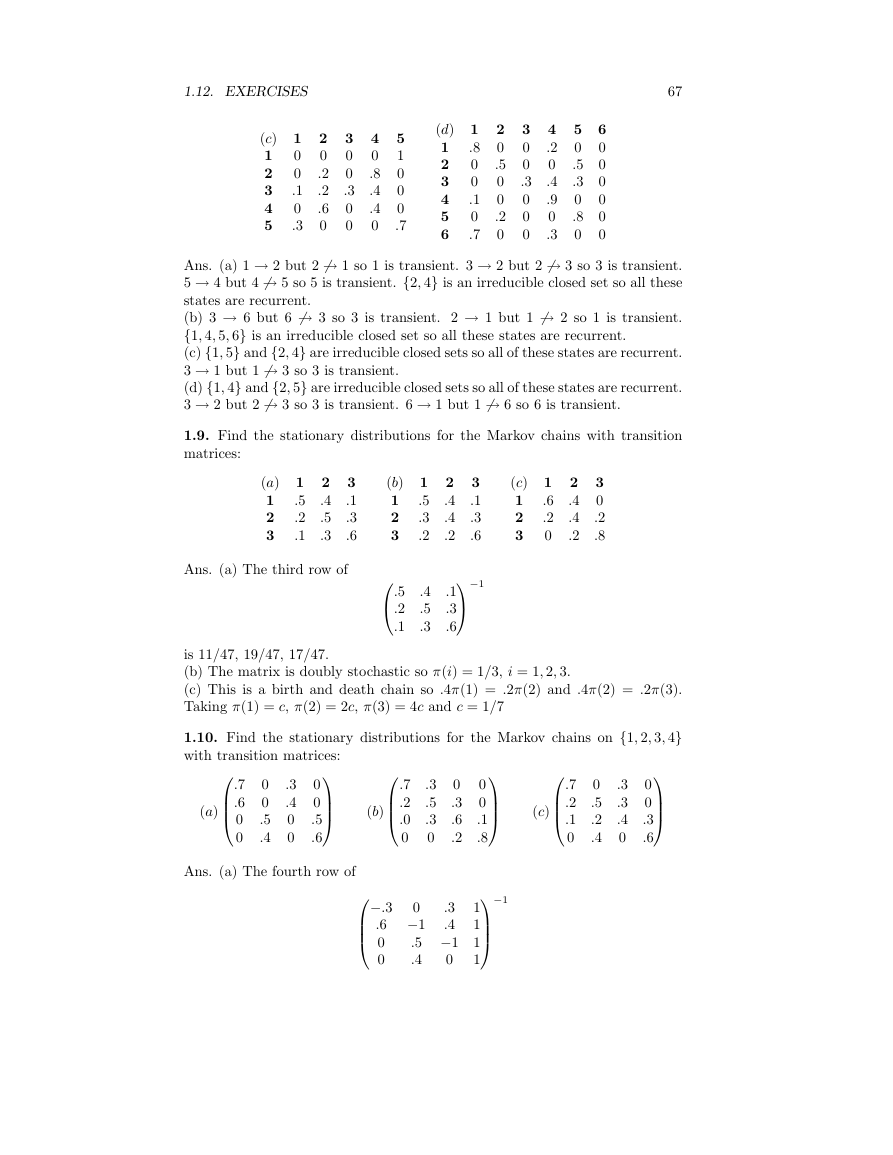

Ans. (a) 1 → 2 but 2 6→ 1 so 1 is transient. 3 → 2 but 2 6→ 3 so 3 is transient.

5 → 4 but 4 6→ 5 so 5 is transient. {2, 4} is an irreducible closed set so all these

states are recurrent.

(b) 3 → 6 but 6 6→ 3 so 3 is transient. 2 → 1 but 1 6→ 2 so 1 is transient.

{1, 4, 5, 6} is an irreducible closed set so all these states are recurrent.

(c) {1, 5} and {2, 4} are irreducible closed sets so all of these states are recurrent.

3 → 1 but 1 6→ 3 so 3 is transient.

(d) {1, 4} and {2, 5} are irreducible closed sets so all of these states are recurrent.

3 → 2 but 2 6→ 3 so 3 is transient. 6 → 1 but 1 6→ 6 so 6 is transient.

1.9. Find the stationary distributions for the Markov chains with transition

matrices:

(a) 1

.5

1

.2

2

.1

3

2

.4

.5

.3

3

.1

.3

.6

Ans. (a) The third row of

(b) 1

.5

1

.3

2

.2

3

.5

.2

.1

.4

.5

.3

(c) 1

.6

1

.2

2

0

3

2

.4

.4

.2

3

0

.2

.8

3

.1

.3

.6

−1

2

.4

.4

.2

.1

.3

.6

is 11/47, 19/47, 17/47.

(b) The matrix is doubly stochastic so π(i) = 1/3, i = 1, 2, 3.

(c) This is a birth and death chain so .4π(1) = .2π(2) and .4π(2) = .2π(3).

Taking π(1) = c, π(2) = 2c, π(3) = 4c and c = 1/7

1.10. Find the stationary distributions for the Markov chains on {1, 2, 3, 4}

with transition matrices:

.7

.2

.1

0

(c)

0

.5

.2

.4

.3

.3

.4

0

0

0

.3

.6

(b)

(a)

.6

0

0

0

0

.5

.6

.3

.4

0

0

0

0

.5

.4

.7

.7

Ans. (a) The fourth row of−.3

0

0

.1

.8

1

1

1

1

−1

.3

.5

.3

0

0

.3

.6

.2

.2

.0

0

.3

0

.6 −1

.4

.5 −1

0

.4

0

0

�

68

is 8/21, 4/21, 4/21, 5/21.

(b) This is a birth and death chain so .3π(1) = .2π(2), .3π(2) = .3π(3), and

.1π(3) = .2π(4). Taking π(1) = c, π(2) = 3c/2, π(3) = 3c/2, π(4) = 3c/4 and

c = 4/19, making the stationary distribution 4/19, 6/19, 6/19, 3/19.

(c) The matrix is doubly stochastic so π(i) = 1/4, i = 1, 2, 3, 4.

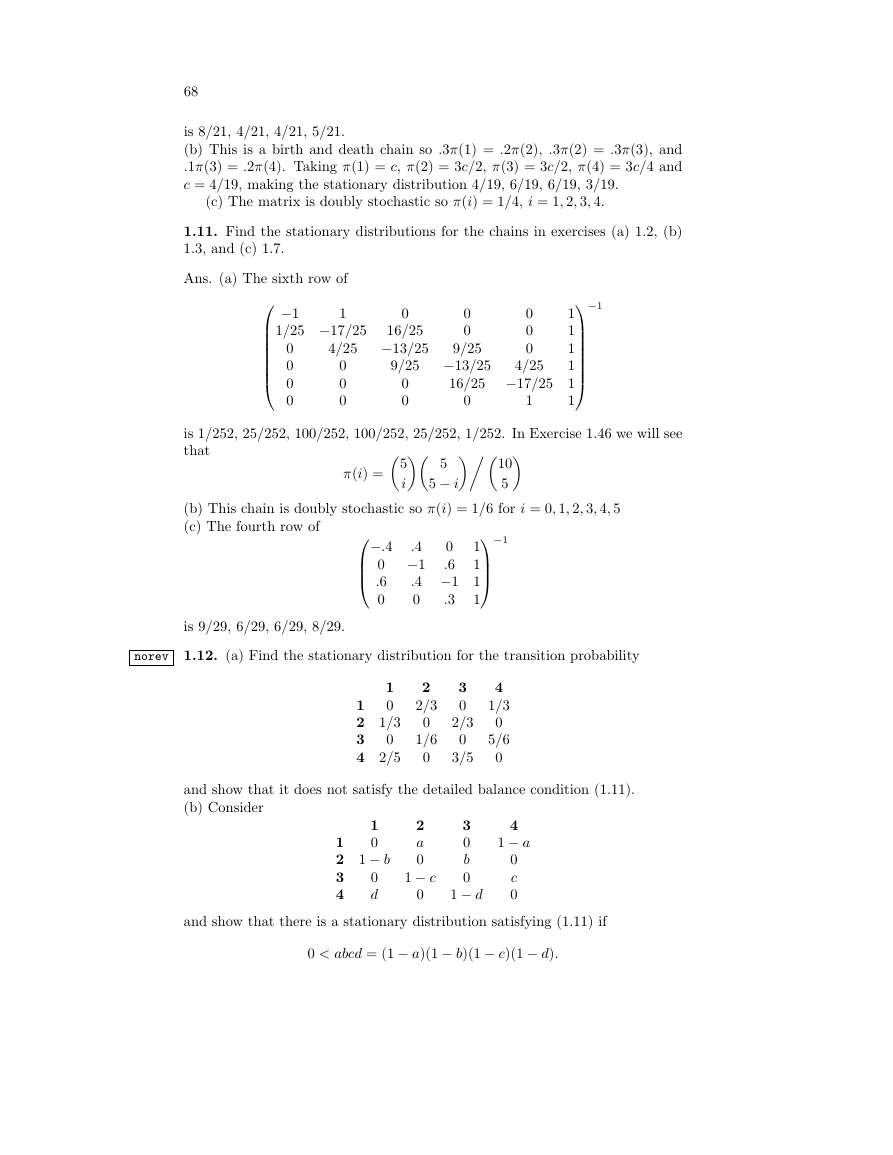

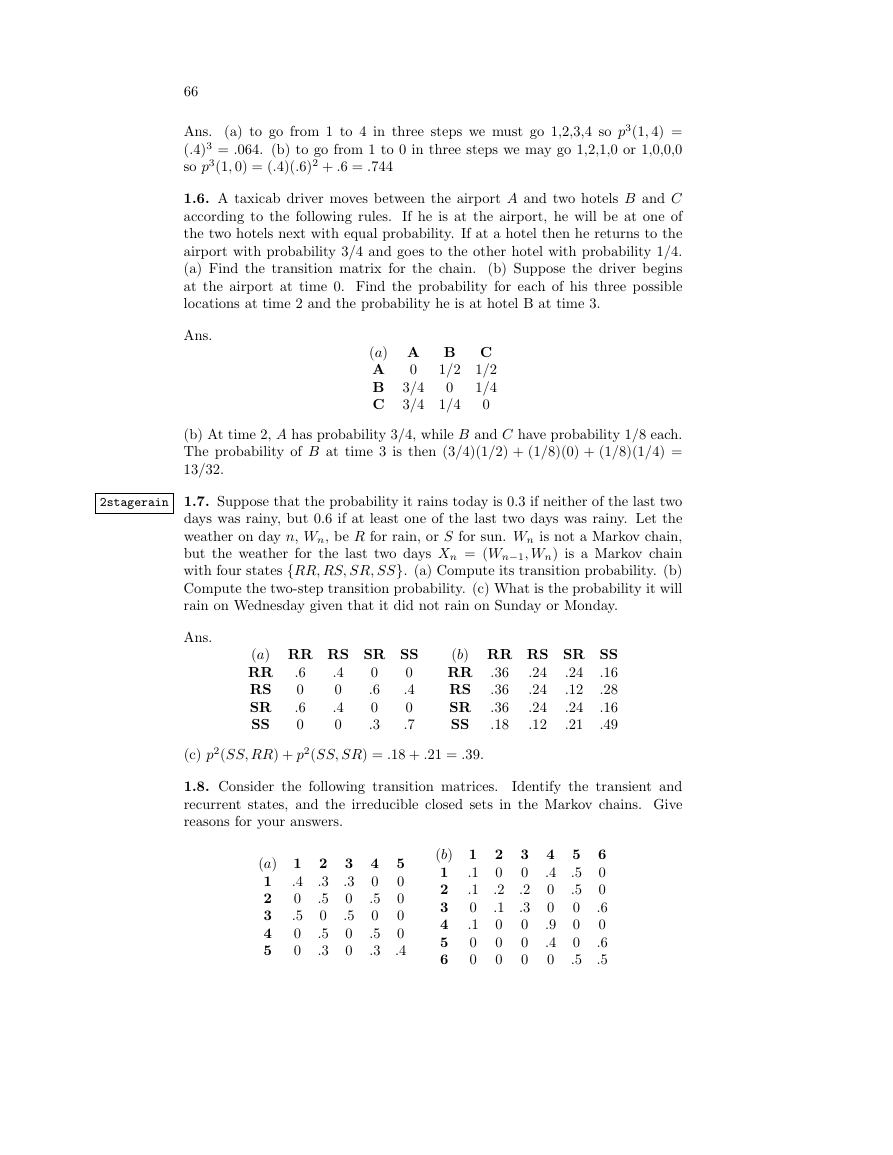

1.11. Find the stationary distributions for the chains in exercises (a) 1.2, (b)

1.3, and (c) 1.7.

Ans. (a) The sixth row of

1

−1

1/25 −17/25

0

4/25

0

0

0

0

0

0

16/25

−13/25

9/25

0

0

0

0

0

0

9/25

−13/25

4/25

16/25 −17/25

0

0

0

1

−1

1

1

1

1

1

1

is 1/252, 25/252, 100/252, 100/252, 25/252, 1/252. In Exercise 1.46 we will see

that

(b) This chain is doubly stochastic so π(i) = 1/6 for i = 0, 1, 2, 3, 4, 5

(c) The fourth row of

π(i) =

i

5

−.4

0

.4

0 −1

.6

.4 −1

.6

0

.3

0

5

5 − i

5

10

−1

1

1

1

1

is 9/29, 6/29, 6/29, 8/29.

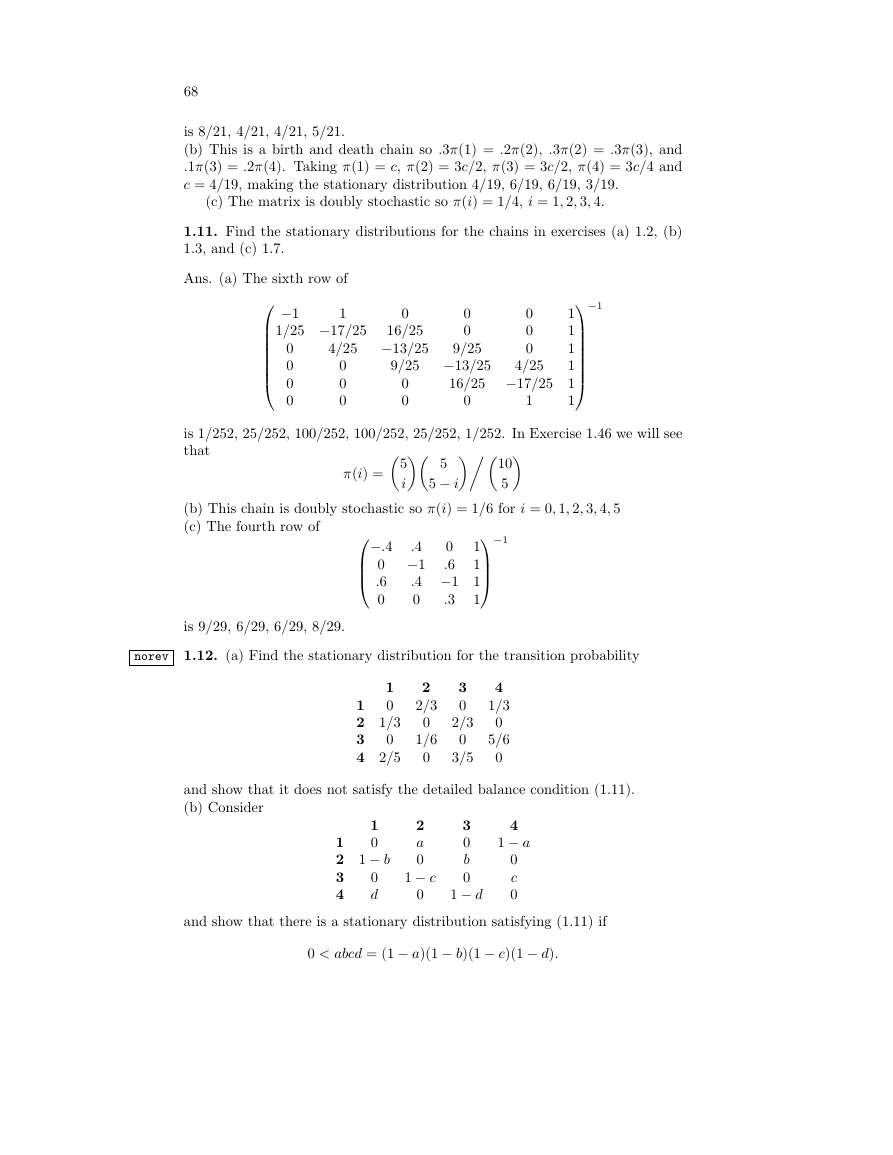

norev 1.12. (a) Find the stationary distribution for the transition probability

1

0

1

2 1/3

0

3

4 2/5

2

2/3

0

1/6

0

3

0

2/3

0

3/5

4

1/3

0

5/6

0

and show that it does not satisfy the detailed balance condition (1.11).

(b) Consider

1

0

1

2 1 − b

0

3

4

d

2

a

0

1 − c

0

3

0

b

0

1 − d

4

1 − a

0

c

0

and show that there is a stationary distribution satisfying (1.11) if

0 < abcd = (1 − a)(1 − b)(1 − c)(1 − d).

�

1.12. EXERCISES

69

Ans. (a) The fourth row of−1

0

2/3

1/3 −1

2/3

1/6 −1

0

0

3/5

2/5

−1

1

1

1

1

is 35/186, 33/186, 58/186, 60/186. To satisfy (1.11) we must have (2/3)π(1) =

1/3)π(2).

(b) If (1.11) holds

π(2) = π(1)a/(1 − b)

π(4) = π(1)abc/(1 − b)(1 − c)(1 − d)

π(3) = π(1)ab/(1 − b)(1 − c)

π(1) = abcd/(1 − b)(1 − c)(1 − d)(1 − a)

1.13. Consider the Markov chain with transition matrix:

1

0

1

0

2

3 0.8

4 0.4

2

0

0

0.2

0.6

3

0.1

0.6

0

0

4

0.9

0.4

0

0

(b) Find the stationary distributions of p and all of the

(a) Compute p2.

stationary distributions of p2. (c) Find the limit of p2n(x, x) as n → ∞.

Ans.

(a)

1

2

3

4

−1

1

.44

.64

0

0

2

.56

.36

0

0

0.1

0

0 −1

0.6

0.2 −1

0.8

0.6

0

0.4

4

0

0

.8

.6

−1

3

0

0

.2

.4

1

1

1

1

(b) The fourth row of

is 8/30, 7/30, 5/30, 10/30. p2 has two irreducible closed sets. By the formula

for the stationary distribution of the two state chain π1 = (8/15, 7/15, 0, 0)

and π2 = (0, 0, 1/3, 2/3) are stationary distributions. Since πp = π is linear, if

θ ∈ [0, 1] then θπ1 + (1 − θ)π2 is a stationary distribution.

(c) 8/15,7/15,1/3/2/3.

1.14. Do the following Markov chains converge to equilibrium?

(a) 1

0

1

0

2

.3

3

1

4

2

0

0

.7

0

3

1

.5

0

0

4

0

.5

0

0

(b)

1

2

3

4

1

0

0

1

1/3

2

1

0

0

0

3

.0

0

0

2/3

4

0

1

0

0

�

70

(c) 1

0

1

0

2

0

3

1

4

0

5

.2

6

2

.5

0

0

0

1

0

3

.5

0

0

0

0

0

4

0

1

.4

0

0

0

5

0

0

0

0

0

.8

6

0

0

.6

0

0

0

(a) No. The chain moves from {1, 2} to {3, 4} and then back, so all

Ans.

states have period 2.

(b) Yes. The chain is irreducible. Starting from 4 you can return to it in 3 or

4 steps so it and all the other states have period 1.

(c) No. The chain moves from {1, 5} to {2, 3} to {4, 6} and then back to {1, 5}

so all states have period 3.

1.15. Find limn→∞ pn(i, j) for

p =

1

1

1

0

2

3 1/8

0

4

5 1/3

2

0

2/3

1/4

1/6

0

3

0

0

5/8

0

1/3

4

0

1/3

0

5/6

0

5

0

0

0

0

1/3

You are supposed to do this and the next problem by solving equations. How-

ever you can check your answers by using your calculator to find FRAC(p100).

Ans. 1 is an absorbing state. {2, 4} is a closed irreducible set with stationary

distribution 1/3, 2/3. When we leave 3 we go to 1 with probability 1/3 and enter

{2, 4} with probability 2/3. When we leave 5 we go to 1 or 3 with probability

1/2 each, so we get absorbed at 1 with probability 1/2 + (1/2)(1/3) = 2/3 and

in {2, 4} with probability 1/3. Thus the limit is

p =

1

1

1

0

2

3 1/3

0

4

5 2/3

2

0

1/3

2/9

1/3

1/9

3

0

0

0

0

0

4

0

2/3

4/9

2/3

2/9

5

0

0

0

0

0

1.16. If we rearrange the matrix for the seven state chain in Example 1.14 we

get

2

.2

0

0

0

0

0

0

3

.3

.5

0

0

0

0

0

1

.1

0

.7

.6

0

0

0

5

0

.2

.3

.4

0

0

0

4

.4

.3

0

0

.5

0

1

6

0

0

0

0

.5

.2

0

7

0

0

0

0

0

.8

0

2

3

1

5

4

6

7

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc