NONNEGATIVE MATRIX AND TENSOR FACTORIZATIONS: APPLICATIONS TO EXPLORATORY MULTI-WAY DATA ANALYSIS AND BLIND SOURCE SEPARATION

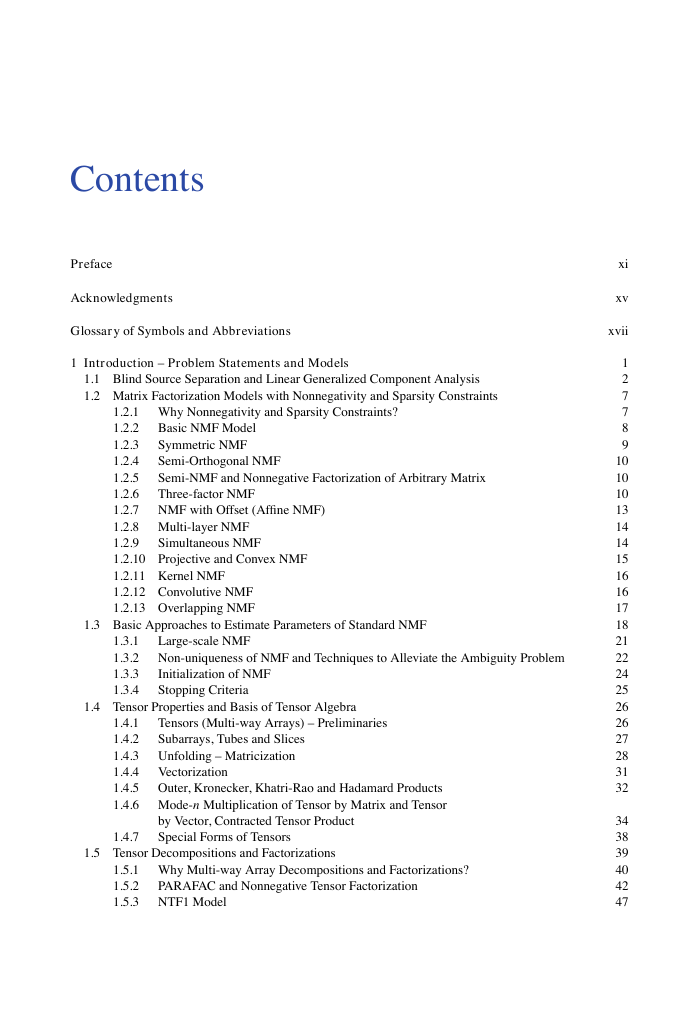

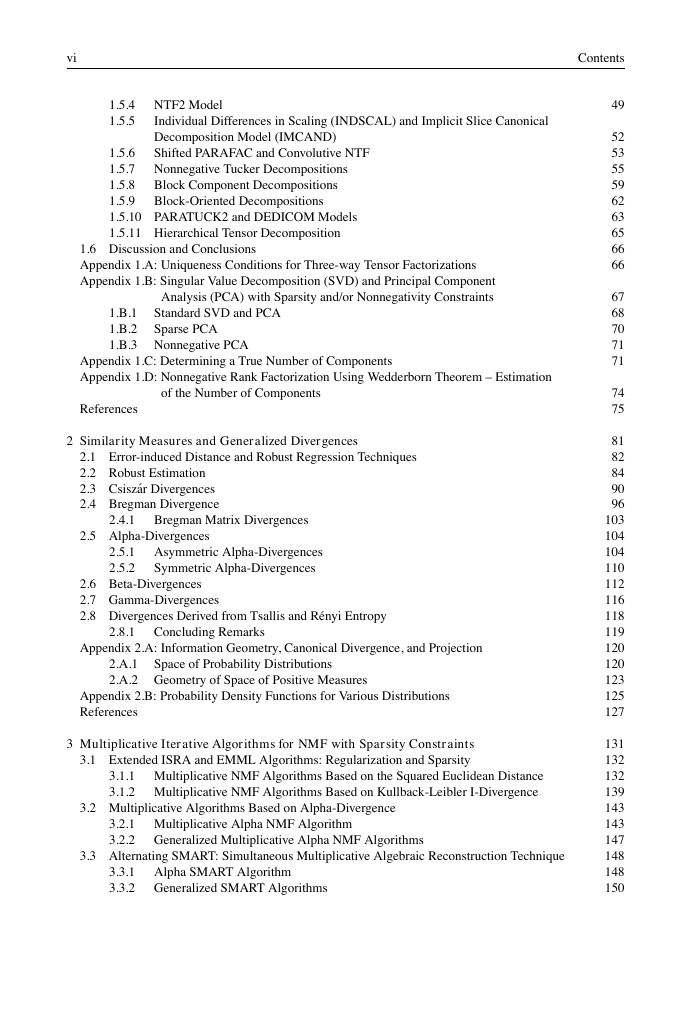

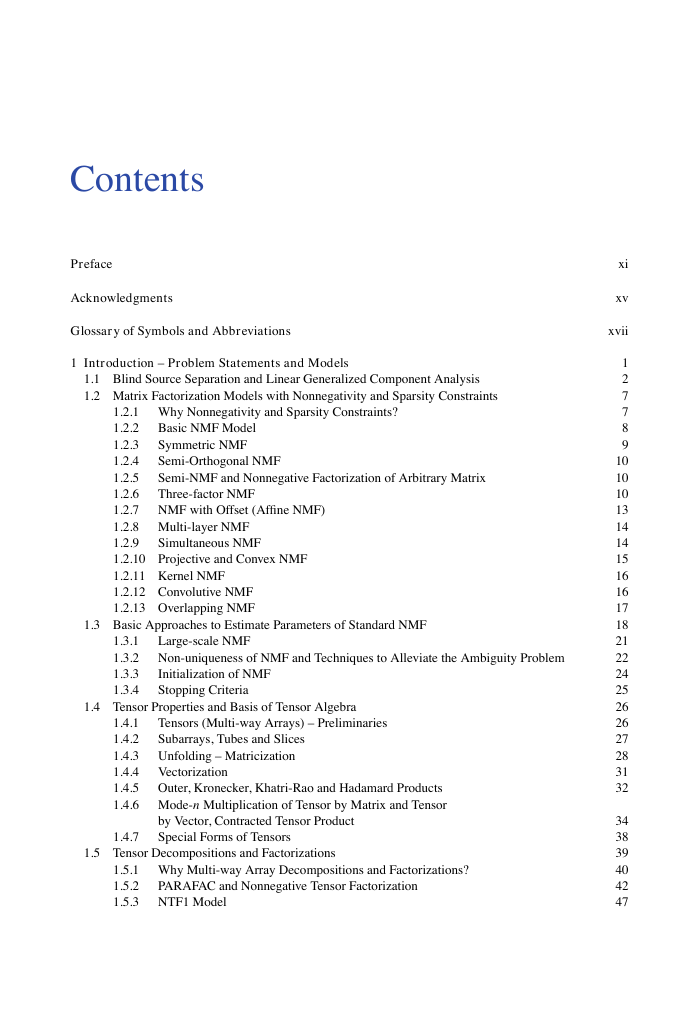

Contents

Preface

Acknowledgments

Glossary of Symbols and Abbreviations

1 Introduction – Problem Statements and Models

1.1 Blind Source Separation and Linear Generalized Component Analysis

1.2 Matrix Factorization Models with Nonnegativity and Sparsity Constraints

1.2.1 Why Nonnegativity and Sparsity Constraints?

1.2.2 Basic NMF Model

1.2.3 Symmetric NMF

1.2.4 Semi-Orthogonal NMF

1.2.5 Semi-NMF and Nonnegative Factorization of Arbitrary Matrix

1.2.6 Three-factor NMF

1.2.7 NMF with Offset (Affine NMF)

1.2.8 Multi-layer NMF

1.2.9 Simultaneous NMF

1.2.10 Projective and Convex NMF

1.2.11 Kernel NMF

1.2.12 Convolutive NMF

1.2.13 Overlapping NMF

1.3 Basic Approaches to Estimate Parameters of Standard NMF

1.3.1 Large-scale NMF

1.3.2 Non-uniqueness of NMF and Techniques to Alleviate the Ambiguity Problem

1.3.3 Initialization of NMF

1.3.4 Stopping Criteria

1.4 Tensor Properties and Basis of Tensor Algebra

1.4.1 Tensors (Multi-way Arrays) – Preliminaries

1.4.2 Subarrays, Tubes and Slices

1.4.3 Unfolding – Matricization

1.4.4 Vectorization

1.4.5 Outer, Kronecker, Khatri-Rao and Hadamard Products

1.4.6 Mode-n Multiplication of Tensor by Matrix and Tensor by Vector, Contracted Tensor Product

1.4.7 Special Forms of Tensors

1.5 Tensor Decompositions and Factorizations

1.5.1 Why Multi-way Array Decompositions and Factorizations?

1.5.2 PARAFAC and Nonnegative Tensor Factorization

1.5.3 NTF1 Model

1.5.4 NTF2 Model

1.5.5 Individual Differences in Scaling (INDSCAL) and Implicit Slice Canonical Decomposition Model (IMCAND)

1.5.6 Shifted PARAFAC and Convolutive NTF

1.5.7 Nonnegative Tucker Decompositions

1.5.8 Block Component Decompositions

1.5.9 Block-Oriented Decompositions

1.5.10 PARATUCK2 and DEDICOM Models

1.5.11 Hierarchical Tensor Decomposition

1.6 Discussion and Conclusions

Appendix 1.A: Uniqueness Conditions for Three-way Tensor Factorizations

Appendix 1.B: Singular Value Decomposition (SVD) and Principal Component Analysis (PCA) with Sparsity and/or Nonnegativity Constraints

1.B.1 Standard SVD and PCA

1.B.2 Sparse PCA

1.B.3 Nonnegative PCA

Appendix 1.C: Determining a True Number of Components

Appendix 1.D: Nonnegative Rank Factorization Using Wedderborn Theorem – Estimation of the Number of Components

References

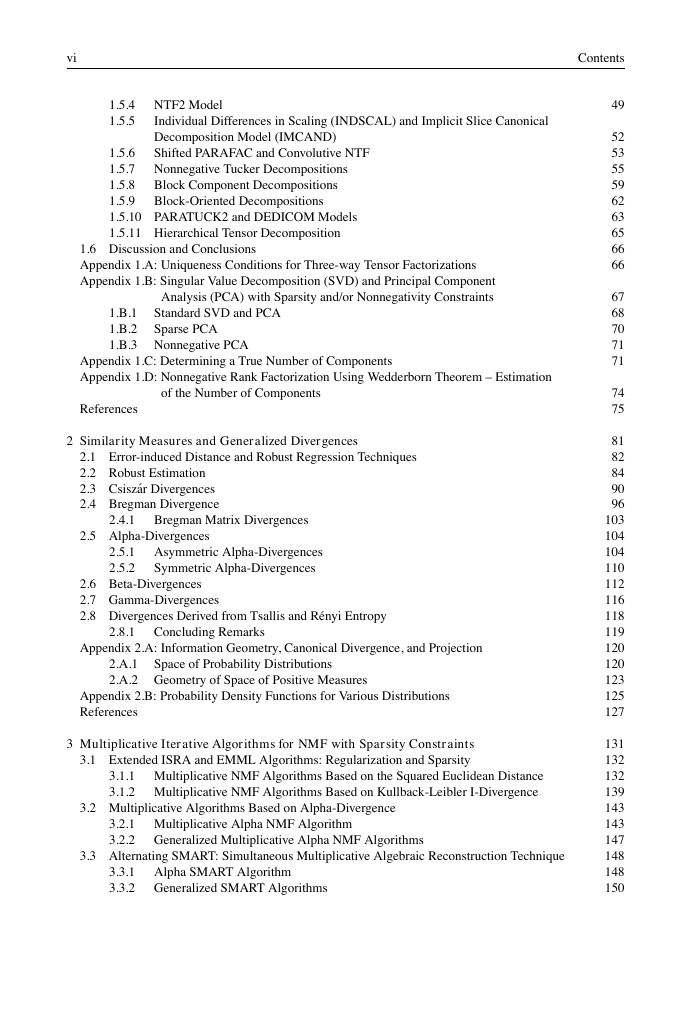

2 Similarity Measures and Generalized Divergences

2.1 Error-induced Distance and Robust Regression Techniques

2.2 Robust Estimation

2.3 Csiszár Divergences

2.4 Bregman Divergence

2.4.1 Bregman Matrix Divergences

2.5 Alpha-Divergences

2.5.1 Asymmetric Alpha-Divergences

2.5.2 Symmetric Alpha-Divergences

2.6 Beta-Divergences

2.7 Gamma-Divergences

2.8 Divergences Derived from Tsallis and Rényi Entropy

2.8.1 Concluding Remarks

Appendix 2.A: Information Geometry, Canonical Divergence, and Projection

2.A.1 Space of Probability Distributions

2.A.2 Geometry of Space of Positive Measures

Appendix 2.B: Probability Density Functions for Various Distributions

References

3 Multiplicative Iterative Algorithms for NMF with Sparsity Constraints

3.1 Extended ISRA and EMML Algorithms: Regularization and Sparsity

3.1.1 Multiplicative NMF Algorithms Based on the Squared Euclidean Distance

3.1.2 Multiplicative NMF Algorithms Based on Kullback-Leibler I-Divergence

3.2 Multiplicative Algorithms Based on Alpha-Divergence

3.2.1 Multiplicative Alpha NMF Algorithm

3.2.2 Generalized Multiplicative Alpha NMF Algorithms

3.3 Alternating SMART: Simultaneous Multiplicative Algebraic Reconstruction Technique

3.3.1 Alpha SMART Algorithm

3.3.2 Generalized SMART Algorithms

3.4 Multiplicative NMF Algorithms Based on Beta-Divergence

3.4.1 Multiplicative Beta NMF Algorithm

3.4.2 Multiplicative Algorithm Based on the Itakura-Saito Distance

3.4.3 Generalized Multiplicative Beta Algorithm for NMF

3.5 Algorithms for Semi-orthogonal NMF and Orthogonal Three-Factor NMF

3.6 Multiplicative Algorithms for Affine NMF

3.7 Multiplicative Algorithms for Convolutive NMF

3.7.1 Multiplicative Algorithm for Convolutive NMF Based on Alpha-Divergence

3.7.2 Multiplicative Algorithm for Convolutive NMF Based on Beta-Divergence

3.7.3 Efficient Implementation of CNMF Algorithm

3.8 Simulation Examples for Standard NMF

3.9 Examples for Affine NMF

3.10 Music Analysis and Decomposition Using Convolutive NMF

3.11 Discussion and Conclusions

Appendix 3.A: Fast Algorithms for Large-scale Data

3.A.1 Random Block-wise Processing Approach – Large-scale NMF

3.A.2 Multi-layer Procedure

3.A.3 Parallel Processing

Appendix 3.B: Performance Evaluation

3.B.1 Signal-to-Interference-Ratio - SIR

3.B.2 Peak Signal-to-Noise-Ratio (PSNR)

Appendix 3.C: Convergence Analysis of the Multiplicative Alpha NMF Algorithm

Appendix 3.D: MATLAB Implementation of the Multiplicative NMF Algorithms

3.D.1 Alpha Algorithm

3.D.2 SMART Algorithm

3.D.3 ISRA Algorithm for NMF

Appendix 3.E: Additional MATLAB Functions

3.E.1 Multi-layer NMF

3.E.2 MC Analysis with Distributed Computing Tool

References

4 Alternating Least Squares and Related Algorithms for NMF and SCA Problems

4.1 Standard ALS Algorithm

4.1.1 Multiple Linear Regression – Vectorized Version of ALS Update Formulas

4.1.2 Weighted ALS

4.2 Methods for Improving Performance and Convergence Speed of ALS Algorithms

4.2.1 ALS Algorithm for Very Large-scale NMF

4.2.2 ALS Algorithm with Line-Search

4.2.3 Acceleration of ALS Algorithm via Simple Regularization

4.3 ALS Algorithm with Flexible and Generalized Regularization Terms

4.3.1 ALS with Tikhonov Type Regularization Terms

4.3.2 ALS Algorithms with Sparsity Control and Decorrelation

4.4 Combined Generalized Regularized ALS Algorithms

4.5 Wang-Hancewicz Modified ALS Algorithm

4.6 Implementation of Regularized ALS Algorithms for NMF

4.7 HALS Algorithm and its Extensions

4.7.1 Projected Gradient Local Hierarchical Alternating Least Squares (HALS) Algorithm

4.7.2 Extensions and Implementations of the HALS Algorithm

4.7.3 Fast HALS NMF Algorithm for Large-scale Problems

4.7.4 HALS NMF Algorithm with Sparsity, Smoothness and Uncorrelatedness Constraints

4.7.5 HALS Algorithm for Sparse Component Analysis and Flexible Component Analysis

4.7.6 Simplified HALS Algorithm for Distributed and Multi-task Compressed Sensing

4.7.7 Generalized HALS-CS Algorithm

4.7.8 Generalized HALS Algorithms Using Alpha-Divergence

4.7.9 Generalized HALS Algorithms Using Beta-Divergence

4.8 Simulation Results

4.8.1 Underdetermined Blind Source Separation Examples

4.8.2 NMF with Sparseness, Orthogonality and Smoothness Constraints

4.8.3 Simulations for Large-scale NMF

4.8.4 Illustrative Examples for Compressed Sensing

4.9 Discussion and Conclusions

Appendix 4.A: MATLAB Source Code for ALS Algorithm

Appendix 4.B: MATLAB Source Code for Regularized ALS Algorithms

Appendix 4.C: MATLAB Source Code for Mixed ALS-HALS Algorithms

Appendix 4.D: MATLAB Source Code for HALS CS Algorithm

Appendix 4.E: Additional MATLAB Functions

References

5 Projected Gradient Algorithms

5.1 Oblique Projected Landweber (OPL) Method

5.2 Lin’s Projected Gradient (LPG) Algorithm with Armijo Rule

5.3 Barzilai-Borwein Gradient Projection for Sparse Reconstruction (GPSR-BB)

5.4 Projected Sequential Subspace Optimization (PSESOP)

5.5 Interior Point Gradient (IPG) Algorithm

5.6 Interior Point Newton (IPN) Algorithm

5.7 Regularized Minimal Residual Norm Steepest Descent Algorithm (RMRNSD)

5.8 Sequential Coordinate-Wise Algorithm (SCWA)

5.9 Simulations

5.10 Discussions

Appendix 5.A: Stopping Criteria

Appendix 5.B: MATLAB Source Code for Lin’s PG Algorithm

References

6 Quasi-Newton Algorithms for Nonnegative Matrix Factorization

6.1 Projected Quasi-Newton Optimization

6.1.1 Projected Quasi-Newton for Frobenius Norm

6.1.2 Projected Quasi-Newton for Alpha-Divergence

6.1.3 Projected Quasi-Newton for Beta-Divergence

6.1.4 Practical Implementation

6.2 Gradient Projection Conjugate Gradient

6.3 FNMA algorithm

6.4 NMF with Quadratic Programming

6.4.1 Nonlinear Programming

6.4.2 Quadratic Programming

6.4.3 Trust-region Subproblem

6.4.4 Updates for A

6.5 Hybrid Updates

6.6 Numerical Results

6.7 Discussions

Appendix 6.A: Gradient and Hessian of Cost Functions

Appendix 6.B: MATLAB Source Codes

References

7 Multi-Way Array (Tensor) Factorizations and Decompositions

7.1 Learning Rules for the Extended Three-way NTF1 Problem

7.1.1 Basic Approaches for the Extended NTF1 Model

7.1.2 ALS Algorithms for NTF1

7.1.3 Multiplicative Alpha and Beta Algorithms for the NTF1 Model

7.1.4 Multi-layer NTF1 Strategy

7.2 Algorithms for Three-way Standard and Super Symmetric Nonnegative Tensor Factorization

7.2.1 Multiplicative NTF Algorithms Based on Alpha- and Beta-Divergences

7.2.2 Simple Alternative Approaches for NTF and SSNTF

7.3 Nonnegative Tensor Factorizations for Higher-Order Arrays

7.3.1 Alpha NTF Algorithm

7.3.2 Beta NTF Algorithm

7.3.3 Fast HALS NTF Algorithm Using Squared Euclidean Distance

7.3.4 Generalized HALS NTF Algorithms Using Alpha- and Beta-Divergences

7.3.5 Tensor Factorization with Additional Constraints

7.4 Algorithms for Nonnegative and Semi-Nonnegative Tucker Decompositions

7.4.1 Higher Order SVD (HOSVD) and Higher Order Orthogonal Iteration (HOOI) Algorithms

7.4.2 ALS Algorithm for Nonnegative Tucker Decomposition

7.4.3 HOSVD, HOOI and ALS Algorithms as Initialization Tools for Nonnegative Tensor Decomposition

7.4.4 Multiplicative Alpha Algorithms for Nonnegative Tucker Decomposition

7.4.5 Beta NTD Algorithm

7.4.6 Local ALS Algorithms for Nonnegative TUCKER Decompositions

7.4.7 Semi-Nonnegative Tucker Decomposition

7.5 Nonnegative Block-Oriented Decomposition

7.5.1 Multiplicative Algorithms for NBOD

7.6 Multi-level Nonnegative Tensor Decomposition - High Accuracy Compression and Approximation

7.7 Simulations and Illustrative Examples

7.7.1 Experiments for Nonnegative Tensor Factorizations

7.7.2 Experiments for Nonnegative Tucker Decomposition

7.7.3 Experiments for Nonnegative Block-Oriented Decomposition

7.7.4 Multi-Way Analysis of High Density Array EEG – Classification of Event Related Potentials

7.7.5 Application of Tensor Decomposition in Brain Computer Interface – Classification of Motor Imagery Tasks

7.7.6 Image and Video Applications

7.8 Discussion and Conclusions

Appendix 7.A: Evaluation of Interactions and Relationships Among Hidden Components for NTD Model

Appendix 7.B: Computation of a Reference Tensor

Appendix 7.C: Trilinear and Direct Trilinear Decompositions for Efficient Initialization

Appendix 7.D: MATLAB Source Code for Alpha NTD Algorithm

Appendix 7.E: MATLAB Source Code for Beta NTD Algorithm

Appendix 7.F: MATLAB Source Code for HALS NTD Algorithm

Appendix 7.G: MATLAB Source Code for ALS NTF1 Algorithm

Appendix 7.H: MATLAB Source Code for ISRA BOD Algorithm

Appendix 7.I: Additional MATLAB functions

References

8 Selected Applications

8.1 Clustering

8.1.1 Semi-Binary NMF

8.1.2 NMF vs. Spectral Clustering

8.1.3 Clustering with Convex NMF

8.1.4 Application of NMF to Text Mining

8.1.5 Email Surveillance

8.2 Classification

8.2.1 Musical Instrument Classification

8.2.2 Image Classification

8.3 Spectroscopy

8.3.1 Raman Spectroscopy

8.3.2 Fluorescence Spectroscopy

8.3.3 Hyperspectral Imaging

8.3.4 Chemical Shift Imaging

8.4 Application of NMF for Analyzing Microarray Data

8.4.1 Gene Expression Classification

8.4.2 Analysis of Time Course Microarray Data

References

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc