1 Introduction

1.1 About this manual

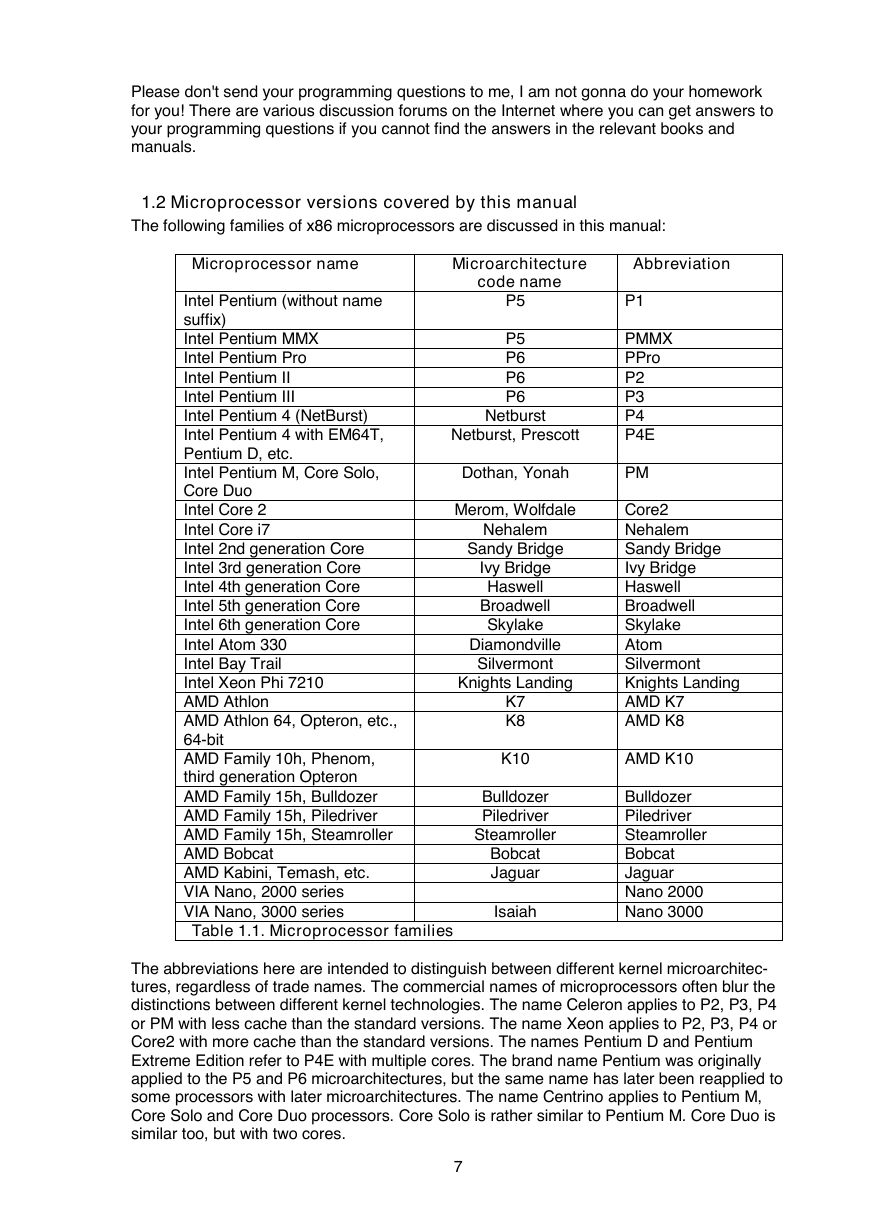

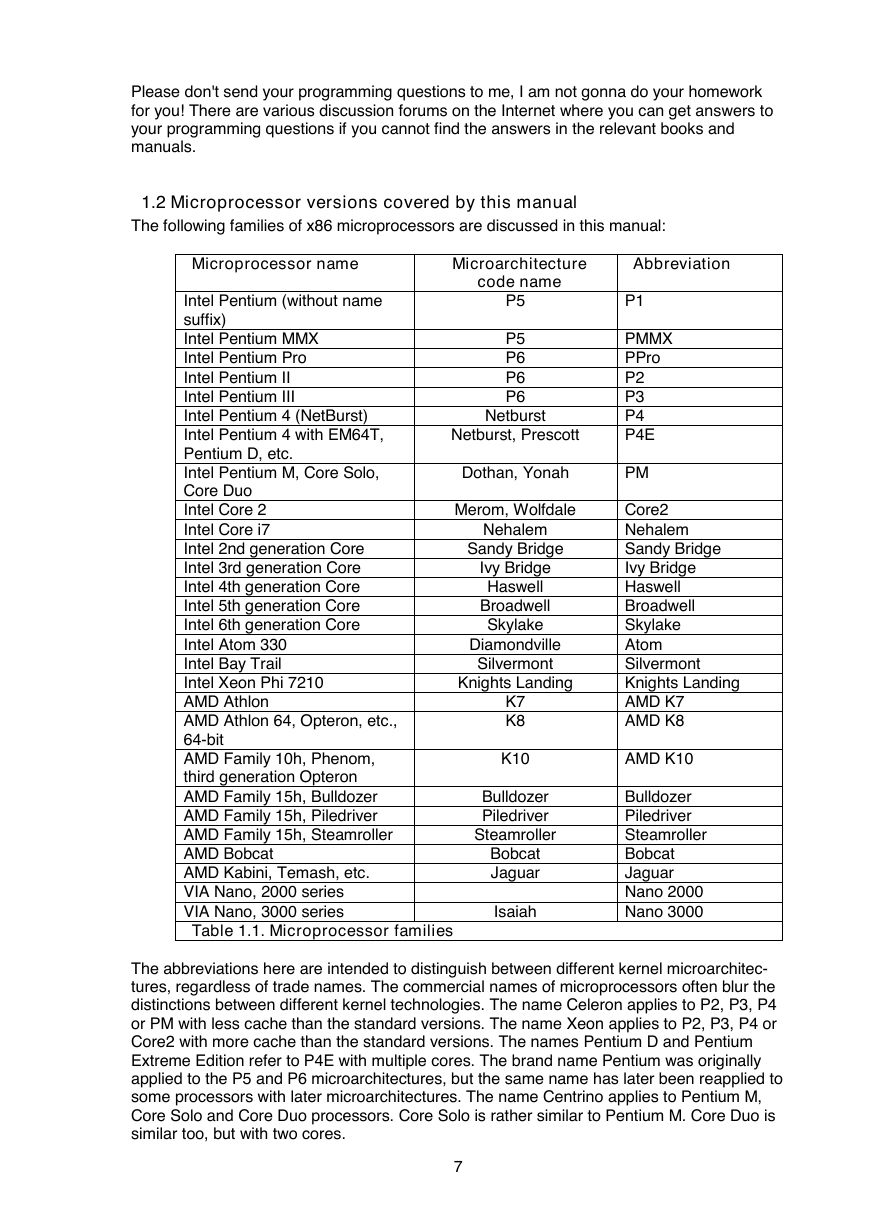

1.2 Microprocessor versions covered by this manual

2 Out-of-order execution (all processors except P1 and PMMX)

2.1 Instructions are split into µops

2.2 Register renaming

3 Branch prediction (all processors)

3.1 Prediction methods for conditional jumps

Saturating counter

Two-level adaptive predictor with local history tables

Two-level adaptive predictor with global history table

The agree predictor

Loop counter

Indirect jump prediction

Subroutine return prediction

Hybrid predictors

Future branch prediction methods

3.2 Branch prediction in P1

BTB is looking ahead (P1)

Consecutive branches

3.3 Branch prediction in PMMX, PPro, P2, and P3

BTB organization

Misprediction penalty

Pattern recognition for conditional jumps

Tight loops (PMMX)

Indirect jumps and calls (PMMX, PPro, P2 and P3)

JECXZ and LOOP (PMMX)

3.4 Branch prediction in P4 and P4E

Pattern recognition for conditional jumps in P4

Alternating branches

Pattern recognition for conditional jumps in P4E

3.5 Branch prediction in PM and Core2

Misprediction penalty

Pattern recognition for conditional jumps

Pattern recognition for indirect jumps and calls

BTB organization

3.6 Branch prediction in Intel Nehalem

Misprediction penalty

Pattern recognition for conditional jumps

Pattern recognition for indirect jumps and calls

BTB organization

Prediction of function returns

3.7 Branch prediction in Intel Sandy Bridge and Ivy Bridge

Misprediction penalty

Pattern recognition for conditional jumps

Pattern recognition for indirect jumps and calls

BTB organization

Prediction of function returns

3.8 Branch prediction in Intel Haswell, Broadwell and Skylake

Misprediction penalty

Pattern recognition for conditional jumps

Pattern recognition for indirect jumps and calls

BTB organization

Prediction of function returns

3.9 Branch prediction in Intel Atom, Silvermont and Knights Landing

Misprediction penalty

Prediction of indirect branches

Return stack buffer

3.10 Branch prediction in VIA Nano

3.11 Branch prediction in AMD K8 and K10

BTB organization

Misprediction penalty

Pattern recognition for conditional jumps

Prediction of indirect branches

Return stack buffer

Literature:

3.12 Branch prediction in AMD Bulldozer, Piledriver and Steamroller

Misprediction penalty

Return stack buffer

Literature:

3.13 Branch prediction in AMD Bobcat and Jaguar

BTB organization

Misprediction penalty

Pattern recognition for conditional jumps

Prediction of indirect branches

Return stack buffer

Literature:

3.14 Indirect jumps on older processors

3.15 Returns (all processors except P1)

3.16 Static prediction

Static prediction in P1 and PMMX

Static prediction in PPro, P2, P3, P4, P4E

Static prediction in PM and Core2

Static prediction in AMD

3.17 Close jumps

Close jumps on PMMX

Chained jumps on PPro, P2 and P3

Chained jumps on P4, P4E and PM

Chained jumps on AMD

4 Pentium 1 and Pentium MMX pipeline

4.1 Pairing integer instructions

Perfect pairing

Imperfect pairing

4.2 Address generation interlock

4.3 Splitting complex instructions into simpler ones

4.4 Prefixes

4.5 Scheduling floating point code

5 Pentium 4 (NetBurst) pipeline

5.1 Data cache

5.2 Trace cache

Economizing trace cache use on P4

Trace cache use on P4E

Trace cache delivery rate

Branches in the trace cache

Guidelines for improving trace cache performance

5.3 Instruction decoding

5.4 Execution units

5.5 Do the floating point and MMX units run at half speed?

Hypothesis 1

Hypothesis 2

Hypothesis 3

Hypothesis 4

5.6 Transfer of data between execution units

Explanation A

Explanation B

Explanation C

5.7 Retirement

5.8 Partial registers and partial flags

5.9 Store forwarding stalls

5.10 Memory intermediates in dependency chains

Transferring parameters to procedures

Transferring data between floating point and other registers

Literature

5.11 Breaking dependency chains

5.12 Choosing the optimal instructions

INC and DEC

8-bit and 16-bit integers

Memory stores

Shifts and rotates

Integer multiplication

LEA

Register-to-register moves with FP, mmx and xmm registers

5.13 Bottlenecks in P4 and P4E

Memory access

Execution latency

Execution unit throughput

Port throughput

Trace cache delivery

Trace cache size

µop retirement

Instruction decoding

Branch prediction

Replaying of µops

6 Pentium Pro, II and III pipeline

6.1 The pipeline in PPro, P2 and P3

6.2 Instruction fetch

6.3 Instruction decoding

Instruction length decoding

The 4-1-1 rule

IFETCH block boundaries

Instruction prefixes

6.4 Register renaming

6.5 ROB read

6.6 Out of order execution

6.7 Retirement

6.8 Partial register stalls

Partial flags stalls

Flags stalls after shifts and rotates

6.9 Store forwarding stalls

6.10 Bottlenecks in PPro, P2, P3

7 Pentium M pipeline

7.1 The pipeline in PM

7.2 The pipeline in Core Solo and Duo

7.3 Instruction fetch

7.4 Instruction decoding

7.5 Loop buffer

7.6 Micro-op fusion

7.7 Stack engine

7.8 Register renaming

7.9 Register read stalls

7.10 Execution units

7.11 Execution units that are connected to both port 0 and 1

7.12 Retirement

7.13 Partial register access

Partial flags stall

7.14 Store forwarding stalls

7.15 Bottlenecks in PM

Memory access

Instruction fetch and decode

Micro-operation fusion

Register read stalls

Execution ports

Execution latencies and dependency chains

Partial register access

Branch prediction

Retirement

8 Core 2 and Nehalem pipeline

8.1 Pipeline

8.2 Instruction fetch and predecoding

Loopback buffer

Length-changing prefixes

8.3 Instruction decoding

8.4 Micro-op fusion

8.5 Macro-op fusion

8.6 Stack engine

8.7 Register renaming

8.8 Register read stalls

8.9 Execution units

Data bypass delays on Core2

Data bypass delays on Nehalem

Mixing µops with different latencies

8.10 Retirement

8.11 Partial register access

Partial access to general purpose registers

Partial flags stall

Partial access to XMM registers

8.12 Store forwarding stalls

8.13 Cache and memory access

Cache bank conflicts

Misaligned memory accesses

8.14 Breaking dependency chains

8.15 Multithreading in Nehalem

8.16 Bottlenecks in Core2 and Nehalem

Instruction fetch and predecoding

Instruction decoding

Register read stalls

Execution ports and execution units

Execution latency and dependency chains

Partial register access

Retirement

Branch prediction

Memory access

Literature

9 Sandy Bridge and Ivy Bridge pipeline

9.1 Pipeline

9.2 Instruction fetch and decoding

9.3 µop cache

9.4 Loopback buffer

9.5 Micro-op fusion

9.6 Macro-op fusion

9.7 Stack engine

9.8 Register allocation and renaming

Special cases of independence

Instructions that need no execution unit

Elimination of move instructions

9.9 Register read stalls

9.10 Execution units

Read and write bandwidth

Data bypass delays

Mixing µops with different latencies

256-bit vectors

Underflow and subnormals

9.11 Partial register access

Partial flags stall

Partial access to vector registers

9.12 Transitions between VEX and non-VEX modes

9.13 Cache and memory access

Cache bank conflicts

Misaligned memory accesses

Prefetch instructions

9.14 Store forwarding stalls

9.15 Multithreading

9.16 Bottlenecks in Sandy Bridge and Ivy Bridge

Instruction fetch and predecoding

µop cache

Register read stalls

Execution ports and execution units

Execution latency and dependency chains

Partial register access

Retirement

Branch prediction

Memory access

Multithreading

Literature

10 Haswell and Broadwell pipeline

10.1 Pipeline

10.2 Instruction fetch and decoding

10.3 µop cache

10.4 Loopback buffer

10.5 Micro-op fusion

10.6 Macro-op fusion

10.7 Stack engine

10.8 Register allocation and renaming

Special cases of independence

Instructions that need no execution unit

Elimination of move instructions

10.9 Execution units

Fused multiply and add

How many input dependencies can a µop have?

Read and write bandwidth

Data bypass delays

256-bit vectors

Mixing µops with different latencies

Underflow and subnormals

10.10 Partial register access

Partial flags access

Partial access to vector registers

10.11 Cache and memory access

Cache bank conflicts

Misaligned memory accesses

10.12 Store forwarding stalls

10.13 Multithreading

10.14 Bottlenecks in Haswell and Broadwell

Instruction fetch and predecoding

µop cache

Execution ports and execution units

Floating point addition has lower throughput than multiplication

Execution latency and dependency chains

Branch prediction

Memory access

Multithreading

Literature

11 Skylake pipeline

11.1 Pipeline

11.2 Instruction fetch and decoding

11.3 µop cache

11.4 Loopback buffer

11.5 Micro-op fusion

11.6 Macro-op fusion

11.7 Stack engine

11.8 Register allocation and renaming

Special cases of independence

Instructions that need no execution unit

Elimination of move instructions

11.9 Execution units

Fused multiply and add

How many input dependencies can a µop have?

Read and write bandwidth

Data bypass delays

256-bit vectors

Warm-up period for 256-bit vector operations

Underflow and subnormals

11.10 Partial register access

Partial flags access

Partial access to vector registers

11.11 Cache and memory access

Cache bank conflicts

11.12 Store forwarding stalls

11.13 Multithreading

11.14 Bottlenecks in Skylake

Instruction fetch and predecoding

µop cache

Execution ports and execution units

Execution latency and dependency chains

Branch prediction

Memory access

Multithreading

Literature

12 Intel Atom pipeline

12.1 Instruction fetch

12.2 Instruction decoding

12.3 Execution units

12.4 Instruction pairing

12.5 X87 floating point instructions

12.6 Instruction latencies

12.7 Memory access

12.8 Branches and loops

12.9 Multithreading

12.10 Bottlenecks in Atom

13 Intel Silvermont pipeline

13.1 Pipeline

13.2 Instruction fetch and decoding

13.3 Loop buffer

13.4 Macro-op fusion

13.5 Register allocation and out of order execution

13.6 Special cases of independence

13.7 Execution units

Read and write bandwidth

Data bypass delays

Underflow and subnormals

13.8 Partial register access

13.9 Cache and memory access

13.10 Store forwarding

13.11 Multithreading

13.12 Bottlenecks in Silvermont

Instruction fetch and decoding

Execution ports and execution units

Out of order execution

Branch prediction

Memory access

Multithreading

Literature

14 Intel Knights Corner pipeline

Literature

15 Intel Knights Landing pipeline

15.1 Pipeline

15.2 Instruction fetch and decoding

15.3 Loop buffer

15.4 Execution units

Latencies of the f.p./vector unit

Mask operations

Data bypass delays

Underflow and subnormals

Mathematical functions

15.5 Partial register access

15.6 Partial access to vector registers and VEX / non-VEX transitions

15.7 Special cases of independence

15.8 Cache and memory access

Read and write bandwidth

15.9 Store forwarding

15.10 Multithreading

15.11 Bottlenecks in Knights Landing

Instruction fetch and decoding

Microcode

Execution ports and execution units

Out of order execution

Branch prediction

Memory access

Multithreading

Literature

16 VIA Nano pipeline

16.1 Performance monitor counters

16.2 Instruction fetch

16.3 Instruction decoding

16.4 Instruction fusion

16.5 Out of order system

16.6 Execution ports

16.7 Latencies between execution units

Latencies between integer and floating point type XMM instructions

16.8 Partial registers and partial flags

16.9 Breaking dependence

16.10 Memory access

16.11 Branches and loops

16.12 VIA specific instructions

16.13 Bottlenecks in Nano

17 AMD K8 and K10 pipeline

17.1 The pipeline in AMD K8 and K10 processors

17.2 Instruction fetch

17.3 Predecoding and instruction length decoding

17.4 Single, double and vector path instructions

17.5 Stack engine

17.6 Integer execution pipes

17.7 Floating point execution pipes

17.8 Mixing instructions with different latency

17.9 64 bit versus 128 bit instructions

17.10 Data delay between differently typed instructions

17.11 Partial register access

17.12 Partial flag access

17.13 Store forwarding stalls

17.14 Loops

17.15 Cache

Level-2 cache

Level-3 cache

17.16 Bottlenecks in AMD K8 and K10

Instruction fetch

Out-of-order scheduling

Execution units

Mixed latencies

Dependency chains

Jumps and branches

Retirement

18 AMD Bulldozer, Piledriver and Steamroller pipeline

18.1 The pipeline in AMD Bulldozer, Piledriver and Steamroller

18.2 Instruction fetch

18.3 Instruction decoding

18.4 Loop buffer

18.5 Instruction fusion

18.6 Stack engine

18.7 Out-of-order schedulers

18.8 Integer execution pipes

18.9 Floating point execution pipes

Subnormal operands

Fused multiply and add

18.10 AVX instructions

18.11 Data delay between different execution domains

18.12 Instructions that use no execution units

18.13 Partial register access

18.14 Partial flag access

18.15 Dependency-breaking instructions

18.16 Branches and loops

18.17 Cache and memory access

18.18 Store forwarding stalls

18.19 Bottlenecks in AMD Bulldozer, Piledriver and Steamroller

Power saving

Shared resources

Instruction fetch

Instruction decoding

Out-of-order scheduling

Execution units

256-bit memory writes

Mixed latencies

Dependency chains

Jumps and branches

Memory and cache access

Retirement

18.20 Literature

19 AMD Bobcat and Jaguar pipeline

19.1 The pipeline in AMD Bobcat and Jaguar

19.2 Instruction fetch

19.3 Instruction decoding

19.4 Single, double and complex instructions

19.5 Integer execution pipes

19.6 Floating point execution pipes

19.7 Mixing instructions with different latency

19.8 Dependency-breaking instructions

19.9 Data delay between differently typed instructions

19.10 Partial register access

19.11 Cache

19.12 Store forwarding stalls

19.13 Bottlenecks in Bobcat and Jaguar

19.14 Literature

20 Comparison of microarchitectures

20.1 The AMD K8 and K10 kernel

20.2 The AMD Bulldozer, Piledriver and Steamroller kernel

20.3 The Pentium 4 kernel

20.4 The Pentium M kernel

20.5 Intel Core 2 and Nehalem microarchitecture

20.6 Intel Sandy Bridge and later microarchitectures

21 Comparison of low power microarchitectures

21.1 Intel Atom microarchitecture

21.2 VIA Nano microarchitecture

21.3 AMD Bobcat microarchitecture

21.4 Conclusion

22 Future trends

23 Literature

24 Copyright notice
