ISO/IEC JTC 1/SC 29/WG 1 N 2412
Date: September 1, 2001

ISO/IEC JTC 1/SC 29/WG 1 (ITU-T SG8)
Coding of Still Pictures
JBIG: Joint Bi-level Image Experts Group
JPEG: Joint Photographic Experts Group

TITLE: The JPEG-2000 Still Image Compression Standard
SOURCE: Michael D. Adams, Dept. of Electrical and Computer Engineering, University of British Columbia, Vancouver, BC, Canada V6T 1Z4; E-mail: mdadams@ece.ubc.ca
PROJECT: JPEG 2000
STATUS:
REQUESTED ACTION:
DISTRIBUTION: Public

Contact:
ISO/IEC JTC1/SC29/WG1 Convener, Dr. Daniel Lee
Yahoo!, 3420 Central Expressway, Santa Clara, California 95051, USA
Tel: +1 408 992 7051, Fax: +1 253 830 0372, E-mail: dlee@yahoo-inc.com

The JPEG-2000 Still Image Compression Standard∗

(Last Revised: September 1, 2001)

Michael D. Adams
Dept. of Elec. and Comp. Engineering, University of British Columbia
Vancouver, BC, Canada V6T 1Z4
mdadams@ieee.org, http://www.ece.ubc.ca/~mdadams

Abstract—JPEG 2000, a new international standard for still image compression, is discussed at length. A high-level introduction to the JPEG-2000 standard is given, followed by a detailed technical description of the JPEG-2000 Part-1 codec.

Keywords—JPEG 2000, still image compression/coding, standards.

I. INTRODUCTION

Digital imagery is pervasive in our world today. Consequently, standards for the efficient representation and interchange of digital images are essential. To date, some of the most successful still image compression standards have resulted from the ongoing work of the Joint Photographic Experts Group (JPEG). This group operates under the auspices of Joint Technical Committee 1, Subcommittee 29, Working Group 1 (JTC 1/SC 29/WG 1), a collaborative effort between the International Organization for Standardization (ISO) and the International Telecommunication Union Standardization Sector (ITU-T). Both the JPEG [1, 2] and JPEG-LS [3] standards were born from the work of the JPEG committee. For the last few years, the JPEG committee has been working towards the establishment of a new standard known as JPEG 2000 (i.e., ISO/IEC 15444). These efforts are now bearing fruit, as JPEG-2000 Part 1 (i.e., ISO/IEC 15444-1 [4]) has recently been approved as a new international standard.

In this paper, we provide a detailed technical description of the JPEG-2000 Part-1 codec, in addition to a brief overview of the JPEG-2000 standard. This exposition is intended to serve as a reader-friendly starting point for those interested in learning about JPEG 2000. Although many details are included in our presentation, some details are necessarily omitted. The reader should, therefore, refer to the standard [4] before attempting an implementation. The JPEG-2000 codec realization in the JasPer software [5–7] may also serve as a practical guide for implementors. (See Appendix A for more information about JasPer.)

The remainder of this paper is structured as follows. Section II begins with an overview of the JPEG-2000 standard. This is followed, in Section III, by a detailed description of the JPEG-2000 Part-1 codec. Finally, we conclude with some closing remarks in Section IV. Throughout our presentation, a basic understanding of image coding is assumed.

∗This document is a revised version of the JPEG-2000 tutorial that I wrote, which appeared in the JPEG working group document WG1N1734. The original tutorial contained numerous inaccuracies, some of which were introduced by changes in the evolving draft standard while others were due to typographical errors. Hopefully, most of these inaccuracies have been corrected in this revised document. In any case, this document will probably continue to evolve over time. Subsequent versions of this document will be made available from my home page (the URL for which is provided with my contact information).

II. JPEG 2000

The JPEG-2000 standard supports lossy and lossless compression of single-component (e.g., grayscale) and multi-component (e.g., color) imagery. In addition to this basic compression functionality, however, numerous other features are provided, including: 1) progressive recovery of an image by fidelity or resolution; 2) region of interest coding, whereby different parts of an image can be coded with differing fidelity; 3) random access to particular regions of an image without needing to decode the entire code stream; 4) a flexible file format with provisions for specifying opacity information and image sequences; and 5) good error resilience. Due to its excellent coding performance and many attractive features, JPEG 2000 has a very large potential application base. Some possible application areas include: image archiving, Internet, web browsing, document imaging, digital photography, medical imaging, remote sensing, and desktop publishing.

A. Why JPEG 2000?

Work on the JPEG-2000 standard commenced with an initial call for contributions [8] in March 1997. The purpose of having a new standard was twofold. First, it would address a number of weaknesses in the existing JPEG standard. Second, it would provide a number of new features not available in the JPEG standard. The preceding points led to several key objectives for the new standard, namely that it should: 1) allow efficient lossy and lossless compression within a single unified coding framework, 2) provide superior image quality, both objectively and subjectively, at low bit rates, 3) support additional features such as region of interest coding and a more flexible file format, and 4) avoid excessive computational and memory complexity. Undoubtedly, much of the success of the original JPEG standard can be attributed to its royalty-free nature. Consequently, considerable effort has been made to ensure that a minimally-compliant JPEG-2000 codec can be implemented free of royalties.¹

B. Structure of the Standard

The JPEG-2000 standard consists of numerous parts, several of which are listed in Table I. For convenience, we will refer to the codec defined in Part 1 of the standard as the baseline codec. The baseline codec is simply the core (or minimal functionality) coding system for the JPEG-2000 standard. Parts 2 and 3 describe extensions to the baseline codec that are useful for certain specific applications such as intraframe-style video compression. In this paper, we will, for the most part, limit our discussion to the baseline codec. Some of the extensions proposed for inclusion in Part 2 will be discussed briefly. Unless otherwise indicated, our exposition considers only the baseline system.

¹Whether these efforts ultimately prove successful remains to be seen, however, as there are still some unresolved intellectual property issues at the time of this writing.

For the most part, the JPEG-2000 standard is written from the point of view of the decoder. That is, the decoder is defined quite precisely with many details being normative in nature (i.e., required for compliance), while many parts of the encoder are less rigidly specified. Obviously, implementors must make a very clear distinction between normative and informative clauses in the standard. For the purposes of our discussion, however, we will only make such distinctions when absolutely necessary.

III. JPEG-2000 CODEC

Having briefly introduced the JPEG-2000 standard, we are now in a position to begin examining the JPEG-2000 codec in detail. The codec is based on wavelet/subband coding techniques [9, 10]. It handles both lossy and lossless compression using the same transform-based framework, and draws heavily on ideas from the embedded block coding with optimized truncation (EBCOT) scheme [11, 12]. In order to facilitate both lossy and lossless coding in an efficient manner, reversible integer-to-integer [13, 14] and nonreversible real-to-real transforms are employed. To code transform data, the codec makes use of bit-plane coding techniques. For entropy coding, a context-based adaptive binary arithmetic coder [15] is used; more specifically, the MQ coder from the JBIG2 standard [16]. Two levels of syntax are employed to represent the coded image: a code stream syntax and a file format syntax. The code stream syntax is similar in spirit to that used in the JPEG standard.

The remainder of Section III is structured as follows. First, Sections III-A to III-C discuss the source image model and how an image is internally represented by the codec. Next, Section III-D examines the basic structure of the codec. This is followed, in Sections III-E to III-M, by a detailed explanation of the coding engine itself. Next, Sections III-N and III-O explain the syntax used to represent a coded image. Finally, Section III-P briefly describes some extensions proposed for inclusion in Part 2 of the standard.

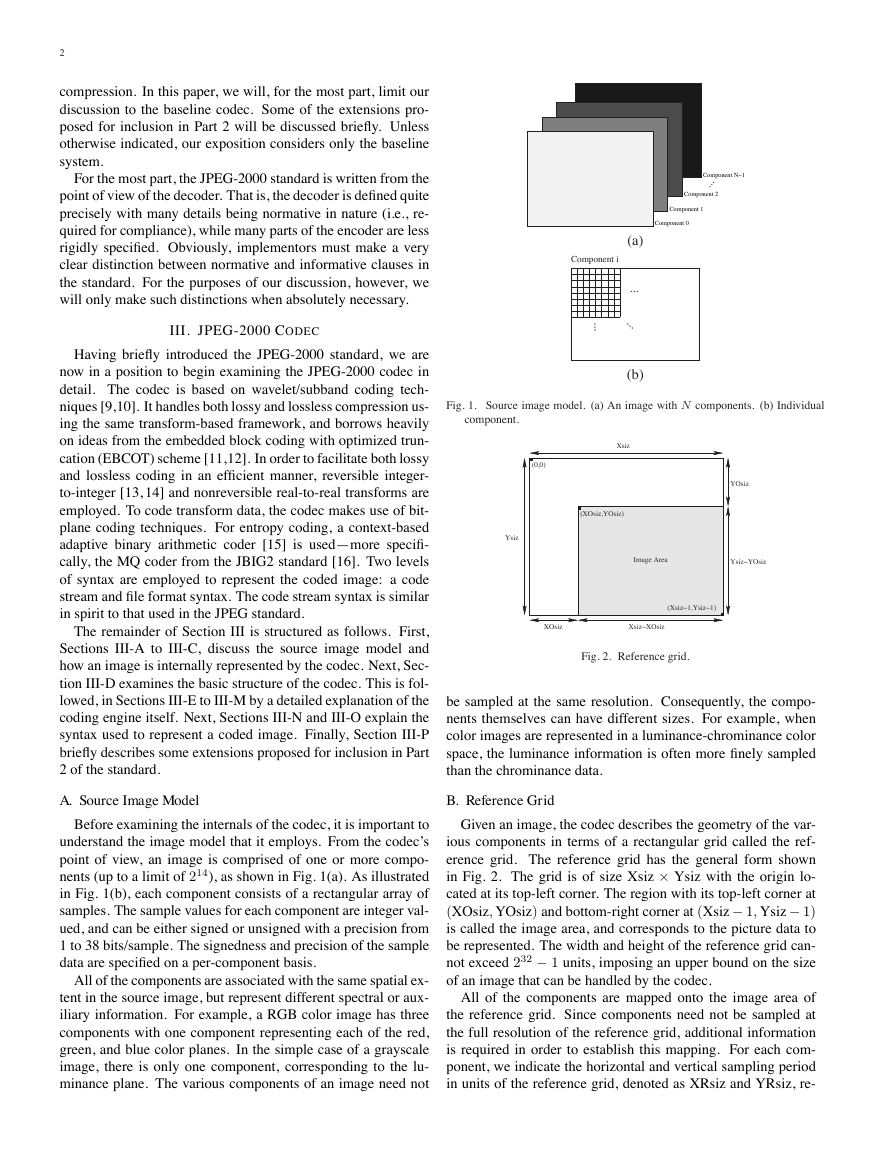

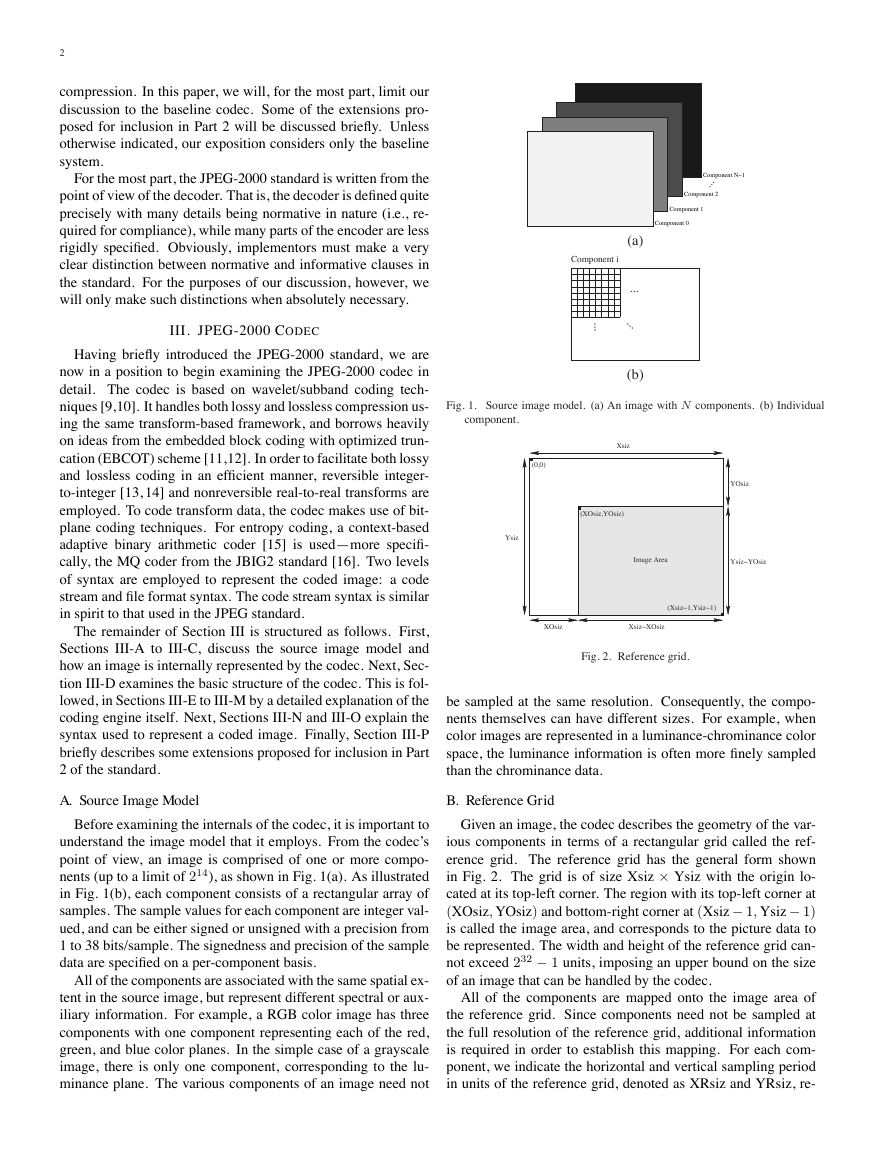

(a)

(b)

Fig. 1. Source image model. (a) An image with N components. (b) Individual

component.

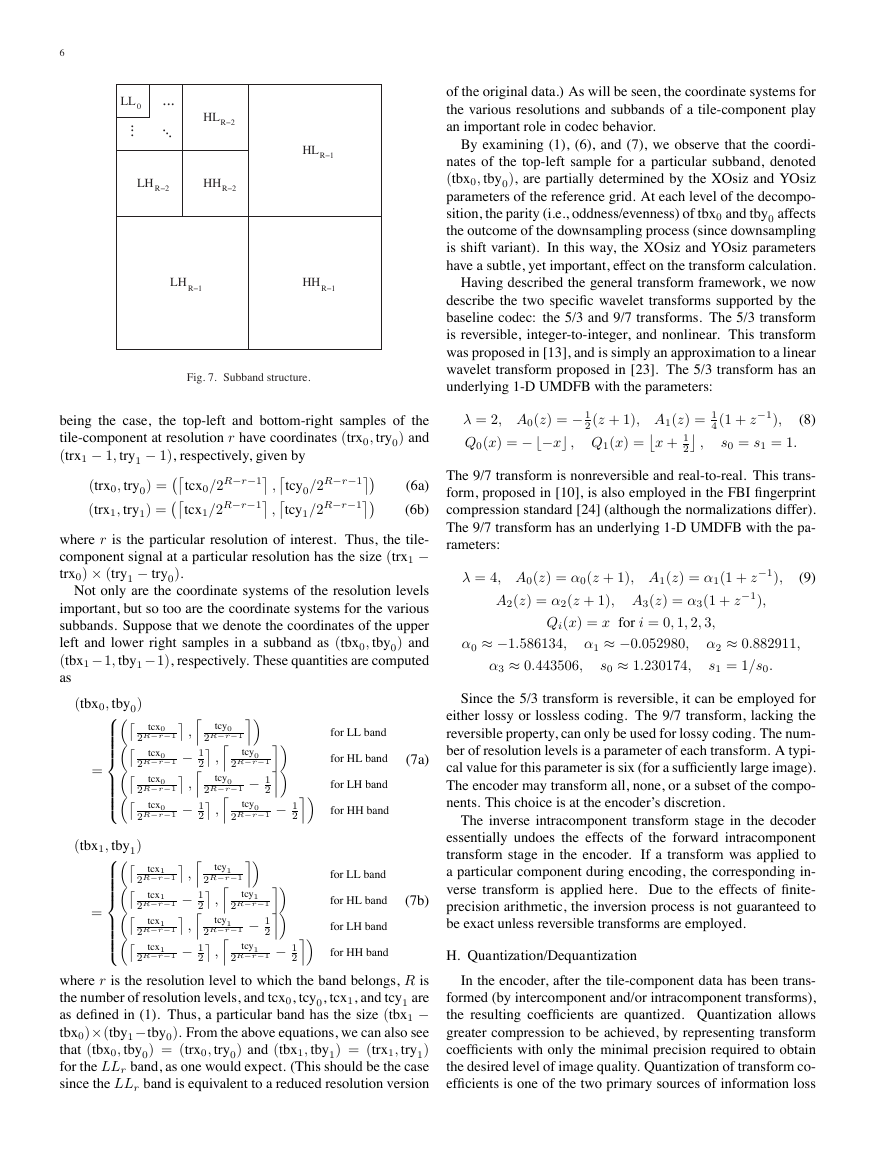

Fig. 2. Reference grid.

be sampled at the same resolution. Consequently, the compo-

nents themselves can have different sizes. For example, when

color images are represented in a luminance-chrominance color

space, the luminance information is often more finely sampled

than the chrominance data.

A. Source Image Model

B. Reference Grid

Before examining the internals of the codec, it is important to

understand the image model that it employs. From the codec’s

point of view, an image is comprised of one or more compo-

nents (up to a limit of 214), as shown in Fig. 1(a). As illustrated

in Fig. 1(b), each component consists of a rectangular array of

samples. The sample values for each component are integer val-

ued, and can be either signed or unsigned with a precision from

1 to 38 bits/sample. The signedness and precision of the sample

data are specified on a per-component basis.

All of the components are associated with the same spatial ex-

tent in the source image, but represent different spectral or aux-

iliary information. For example, a RGB color image has three

components with one component representing each of the red,

green, and blue color planes. In the simple case of a grayscale

image, there is only one component, corresponding to the lu-

minance plane. The various components of an image need not

Given an image, the codec describes the geometry of the var-

ious components in terms of a rectangular grid called the ref-

erence grid. The reference grid has the general form shown

in Fig. 2. The grid is of size Xsiz × Ysiz with the origin lo-

cated at its top-left corner. The region with its top-left corner at

(XOsiz, YOsiz) and bottom-right corner at (Xsiz − 1, Ysiz − 1)

is called the image area, and corresponds to the picture data to

be represented. The width and height of the reference grid can-

not exceed 232 − 1 units, imposing an upper bound on the size

of an image that can be handled by the codec.

All of the components are mapped onto the image area of the reference grid. Since components need not be sampled at the full resolution of the reference grid, additional information is required in order to establish this mapping. For each component, we indicate the horizontal and vertical sampling period in units of the reference grid, denoted as XRsiz and YRsiz, respectively. These two parameters uniquely specify a (rectangular) sampling grid consisting of all points whose horizontal and vertical positions are integer multiples of XRsiz and YRsiz, respectively. All such points that fall within the image area constitute samples of the component in question. Thus, in terms of its own coordinate system, a component will have the size

$$\left(\left\lceil \frac{\mathrm{Xsiz}}{\mathrm{XRsiz}} \right\rceil - \left\lceil \frac{\mathrm{XOsiz}}{\mathrm{XRsiz}} \right\rceil\right) \times \left(\left\lceil \frac{\mathrm{Ysiz}}{\mathrm{YRsiz}} \right\rceil - \left\lceil \frac{\mathrm{YOsiz}}{\mathrm{YRsiz}} \right\rceil\right)$$

and its top-left sample will correspond to the point

$$\left(\left\lceil \frac{\mathrm{XOsiz}}{\mathrm{XRsiz}} \right\rceil, \left\lceil \frac{\mathrm{YOsiz}}{\mathrm{YRsiz}} \right\rceil\right).$$

Note that the reference grid also imposes a particular alignment of samples from the various components relative to one another.

TABLE I
PARTS OF THE STANDARD

| Part | Title | Purpose |
|------|-------|---------|
| 1 | Core coding system | Specifies the core (or minimum functionality) codec for the JPEG-2000 family of standards. |
| 2 | Extensions | Specifies additional functionalities that are useful in some applications but need not be supported by all codecs. |
| 3 | Motion JPEG-2000 | Specifies extensions to JPEG-2000 for intraframe-style video compression. |
| 4 | Conformance testing | Specifies the procedure to be employed for compliance testing. |
| 5 | Reference software | Provides sample software implementations of the standard to serve as a guide for implementors. |

From the diagram, the size of the image area is (Xsiz − XOsiz) × (Ysiz − YOsiz). For a given image, many combinations of the Xsiz, Ysiz, XOsiz, and YOsiz parameters can be chosen to obtain an image area with the same size. Thus, one might wonder why the XOsiz and YOsiz parameters are not fixed at zero while the Xsiz and Ysiz parameters are set to the size of the image. As it turns out, there are subtle implications to changing the XOsiz and YOsiz parameters (while keeping the size of the image area constant). Such changes affect codec behavior in several important ways, as will be described later. This behavior allows a number of basic operations to be performed efficiently on coded images, such as cropping, horizontal/vertical flipping, and rotation by an integer multiple of 90 degrees.
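The component size and top-left position given above can be checked numerically. The Python sketch below is illustrative only: the function names are hypothetical, and exact integer ceiling division is used (rather than floating point) so that results stay correct for grid sizes up to $2^{32} - 1$. A brute-force count of the sampling-grid points inside the image area is included as a cross-check.

```python
def ceil_div(a: int, b: int) -> int:
    """Exact ceil(a / b) for integers with b > 0 (no floating point)."""
    return -(-a // b)


def component_geometry(xsiz, ysiz, xosiz, yosiz, xrsiz, yrsiz):
    """Return ((x0, y0), (width, height)) of one component in its own coordinates.

    The component's samples are the reference-grid points that are integer
    multiples of (XRsiz, YRsiz) and lie in the image area
    [XOsiz, Xsiz) x [YOsiz, Ysiz).
    """
    x0, y0 = ceil_div(xosiz, xrsiz), ceil_div(yosiz, yrsiz)
    x1, y1 = ceil_div(xsiz, xrsiz), ceil_div(ysiz, yrsiz)
    return (x0, y0), (x1 - x0, y1 - y0)


def count_grid_points(size, offset, period):
    """Brute force: number of multiples of `period` in [offset, size)."""
    return sum(1 for v in range(offset, size) if v % period == 0)


# A horizontally 2x-subsampled component on a 10 x 7 grid with XOsiz = 1.
print(component_geometry(10, 7, 1, 0, 2, 1))   # ((1, 0), (4, 7))
```

In this example the component picks up reference-grid columns 2, 4, 6, and 8, i.e., four samples whose component coordinates start at 1, which is exactly what the ceiling expressions give.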

Fig. 3. Tiling on the reference grid.

C. Tiling

In some situations, an image may be quite large in comparison to the amount of memory available to the codec. Consequently, it is not always feasible to code the entire image as a single atomic unit. To solve this problem, the codec allows an image to be broken into smaller pieces, each of which is independently coded. More specifically, an image is partitioned into one or more disjoint rectangular regions called tiles. As shown in Fig. 3, this partitioning is performed with respect to the reference grid by overlaying the reference grid with a rectangular tiling grid having horizontal and vertical spacings of XTsiz and YTsiz, respectively. The origin of the tiling grid is aligned with the point (XTOsiz, YTOsiz). Tiles have a nominal size of XTsiz × YTsiz, but those bordering on the edges of the image area may have a size which differs from the nominal size. The tiles are numbered in raster scan order (starting at zero).

By mapping the position of each tile from the reference grid to the coordinate systems of the individual components, a partitioning of the components themselves is obtained. For example, suppose that a tile has an upper left corner and lower right corner with coordinates (tx0, ty0) and (tx1 − 1, ty1 − 1), respectively. Then, in the coordinate space of a particular component, the tile would have an upper left corner and lower right corner with coordinates (tcx0, tcy0) and (tcx1 − 1, tcy1 − 1), respectively, where

$$(\mathit{tcx}_0, \mathit{tcy}_0) = \left(\left\lceil \frac{\mathit{tx}_0}{\mathrm{XRsiz}} \right\rceil, \left\lceil \frac{\mathit{ty}_0}{\mathrm{YRsiz}} \right\rceil\right) \tag{1a}$$

$$(\mathit{tcx}_1, \mathit{tcy}_1) = \left(\left\lceil \frac{\mathit{tx}_1}{\mathrm{XRsiz}} \right\rceil, \left\lceil \frac{\mathit{ty}_1}{\mathrm{YRsiz}} \right\rceil\right). \tag{1b}$$

These equations correspond to the illustration in Fig. 4. The portion of a component that corresponds to a single tile is referred to as a tile-component. Although the tiling grid is regular with respect to the reference grid, it is important to note that the grid may not necessarily be regular with respect to the coordinate systems of the components.

Fig. 4. Tile-component coordinate system.
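The tiling rules and (1) can be exercised with a small Python sketch. The function names are hypothetical, and one detail is our assumption rather than something stated above: each nominal XTsiz × YTsiz cell of the tiling grid is taken to be clipped to the image area, which is how border tiles end up smaller than nominal. The second example illustrates the closing remark above: tiles of equal width on the reference grid can map to unequal widths in a subsampled component.

```python
def ceil_div(a: int, b: int) -> int:
    """Exact ceil(a / b) for integers with b > 0."""
    return -(-a // b)


def tile_rects(xsiz, ysiz, xosiz, yosiz, xtsiz, ytsiz, xtosiz, ytosiz):
    """Return [(tx0, ty0, tx1, ty1), ...] for all tiles in raster-scan order.

    Assumption: each cell of the tiling grid is clipped to the image area
    [XOsiz, Xsiz) x [YOsiz, Ysiz). The list index is the tile number.
    """
    num_x = ceil_div(xsiz - xtosiz, xtsiz)
    num_y = ceil_div(ysiz - ytosiz, ytsiz)
    rects = []
    for q in range(num_y):          # tile row
        for p in range(num_x):      # tile column
            rects.append((
                max(xtosiz + p * xtsiz, xosiz),
                max(ytosiz + q * ytsiz, yosiz),
                min(xtosiz + (p + 1) * xtsiz, xsiz),
                min(ytosiz + (q + 1) * ytsiz, ysiz),
            ))
    return rects


def tile_component_rect(rect, xrsiz, yrsiz):
    """Apply (1): map a tile's reference-grid bounds to component coordinates."""
    tx0, ty0, tx1, ty1 = rect
    return (ceil_div(tx0, xrsiz), ceil_div(ty0, yrsiz),
            ceil_div(tx1, xrsiz), ceil_div(ty1, yrsiz))


# Border tiles are clipped: 10 x 6 grid, image area starts at XOsiz = 1, 4 x 4 tiles.
tiles = tile_rects(10, 6, 1, 0, 4, 4, 0, 0)
print(len(tiles), tiles[0], tiles[-1])   # 6 (1, 0, 4, 4) (8, 4, 10, 6)

# Four 3-wide tiles on the reference grid, component subsampled by XRsiz = 2.
row = tile_rects(12, 4, 0, 0, 3, 4, 0, 0)
print([tile_component_rect(t, 2, 1)[2] - tile_component_rect(t, 2, 1)[0] for t in row])
# [2, 1, 2, 1]
```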


D. Codec Structure

The general structure of the codec is shown in Fig. 5 with the form of the encoder given by Fig. 5(a) and the decoder given by Fig. 5(b). From these diagrams, the key processes associated with the codec can be identified: 1) preprocessing/postprocessing, 2) intercomponent transform, 3) intracomponent transform, 4) quantization/dequantization, 5) tier-1 coding, 6) tier-2 coding, and 7) rate control. The decoder structure essentially mirrors that of the encoder. That is, with the exception of rate control, there is a one-to-one correspondence between functional blocks in the encoder and decoder. Each functional block in the decoder either exactly or approximately inverts the effects of its corresponding block in the encoder. Since tiles are coded independently of one another, the input image is (conceptually, at least) processed one tile at a time. In the sections that follow, each of the above processes is examined in more detail.

E. Preprocessing/Postprocessing

The codec expects its input sample data to have a nominal dynamic range that is approximately centered about zero. The preprocessing stage of the encoder simply ensures that this expectation is met. Suppose that a particular component has P bits/sample. The samples may be either signed or unsigned, leading to a nominal dynamic range of $[-2^{P-1}, 2^{P-1} - 1]$ or $[0, 2^{P} - 1]$, respectively. If the sample values are unsigned, the nominal dynamic range is clearly not centered about zero. Thus, the nominal dynamic range of the samples is adjusted by subtracting a bias of $2^{P-1}$ from each of the sample values. If the sample values for a component are signed, the nominal dynamic range is already centered about zero, and no processing is required. By ensuring that the nominal dynamic range is centered about zero, a number of simplifying assumptions could be made in the design of the codec (e.g., with respect to context modeling, numerical overflow, etc.).

The postprocessing stage of the decoder essentially undoes the effects of preprocessing in the encoder. If the sample values for a component are unsigned, the original nominal dynamic range is restored. Lastly, in the case of lossy coding, clipping is performed to ensure that the sample values do not exceed the allowable range.
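Preprocessing and postprocessing thus amount to a DC level shift plus, on the decoder side, clipping. The following is a minimal Python sketch, assuming samples arrive as plain lists of numbers; the function names are hypothetical, and rounding real-valued reconstructions to the nearest integer before clipping is our own choice, not something the text specifies.

```python
def forward_level_shift(samples, precision, signed):
    """Encoder preprocessing: center unsigned P-bit samples about zero."""
    if signed:
        return list(samples)                 # already centered; nothing to do
    bias = 1 << (precision - 1)              # 2**(P-1)
    return [s - bias for s in samples]


def inverse_level_shift(samples, precision, signed):
    """Decoder postprocessing: restore the nominal range, then clip to it.

    Rounding to the nearest integer is an assumption of this sketch; it only
    matters when lossy decoding produces non-integer values.
    """
    if signed:
        lo, hi = -(1 << (precision - 1)), (1 << (precision - 1)) - 1
        restored = samples
    else:
        lo, hi = 0, (1 << precision) - 1
        bias = 1 << (precision - 1)
        restored = [s + bias for s in samples]
    return [min(max(round(s), lo), hi) for s in restored]


print(forward_level_shift([0, 128, 255], 8, signed=False))       # [-128, 0, 127]
print(inverse_level_shift([-130.2, 0, 140.7], 8, signed=False))  # [0, 128, 255]
```

The second call shows why clipping matters after lossy decoding: reconstructions that overshoot the nominal range by a few units are pulled back to 0 and 255.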

F. Intercomponent Transform

In the encoder, the preprocessing stage is followed by the forward intercomponent transform stage. Here, an intercomponent transform can be applied to the tile-component data. Such a transform operates on all of the components together, and serves to reduce the correlation between components, leading to improved coding efficiency.

Only two intercomponent transforms are defined in the baseline JPEG-2000 codec: the irreversible color transform (ICT) and the reversible color transform (RCT). The ICT is nonreversible and real-to-real in nature, while the RCT is reversible and integer-to-integer. Both of these transforms essentially map image data from the RGB to the YCbCr color space. The transforms are defined to operate on the first three components of an image, with the assumption that components 0, 1, and 2 correspond to the red, green, and blue color planes. Due to the nature of these transforms, the components on which they operate must be sampled at the same resolution (i.e., have the same size). As a consequence of the above facts, the ICT and RCT can only be employed when the image being coded has at least three components, and the first three components are sampled at the same resolution. The ICT may only be used in the case of lossy coding, while the RCT can be used in either the lossy or lossless case. Even if a transform can be legally employed, it is not necessary to do so. That is, the decision to use a multicomponent transform is left at the discretion of the encoder. After the intercomponent transform stage in the encoder, data from each component is treated independently.

The ICT is nothing more than the classic RGB to YCbCr color space transform. The forward transform is defined as

$$\begin{bmatrix} V_0(x,y) \\ V_1(x,y) \\ V_2(x,y) \end{bmatrix} = \begin{bmatrix} 0.299 & 0.587 & 0.114 \\ -0.16875 & -0.33126 & 0.5 \\ 0.5 & -0.41869 & -0.08131 \end{bmatrix} \begin{bmatrix} U_0(x,y) \\ U_1(x,y) \\ U_2(x,y) \end{bmatrix} \tag{2}$$

where U0(x, y), U1(x, y), and U2(x, y) are the input components corresponding to the red, green, and blue color planes, respectively, and V0(x, y), V1(x, y), and V2(x, y) are the output components corresponding to the Y, Cb, and Cr planes, respectively. The inverse transform can be shown to be

$$\begin{bmatrix} U_0(x,y) \\ U_1(x,y) \\ U_2(x,y) \end{bmatrix} = \begin{bmatrix} 1 & 0 & 1.402 \\ 1 & -0.34413 & -0.71414 \\ 1 & 1.772 & 0 \end{bmatrix} \begin{bmatrix} V_0(x,y) \\ V_1(x,y) \\ V_2(x,y) \end{bmatrix} \tag{3}$$
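Equations (2) and (3) translate directly into code. The Python sketch below (function and constant names are hypothetical) applies both matrices to a single pixel. Because the ICT is real-to-real and its coefficients are given to only five decimal places, the round trip is exact only up to a small error, which the example checks against a tolerance rather than for equality.

```python
FORWARD_ICT = (
    (0.299, 0.587, 0.114),          # V0 = Y
    (-0.16875, -0.33126, 0.5),      # V1 = Cb
    (0.5, -0.41869, -0.08131),      # V2 = Cr
)
INVERSE_ICT = (
    (1.0, 0.0, 1.402),              # U0 = R = Y + 1.402 Cr
    (1.0, -0.34413, -0.71414),      # U1 = G
    (1.0, 1.772, 0.0),              # U2 = B = Y + 1.772 Cb
)


def mat3_apply(m, v):
    """Multiply a 3x3 matrix (tuple of rows) by a length-3 vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def ict_forward(rgb):
    """(R, G, B) -> (Y, Cb, Cr) per equation (2)."""
    return mat3_apply(FORWARD_ICT, rgb)


def ict_inverse(ycbcr):
    """(Y, Cb, Cr) -> (R, G, B) per equation (3)."""
    return mat3_apply(INVERSE_ICT, ycbcr)


pixel = (100, -20, 37)              # level-shifted R, G, B samples
back = ict_inverse(ict_forward(pixel))
print(max(abs(a - b) for a, b in zip(pixel, back)) < 0.01)   # True
```

A neutral gray input (equal R, G, and B) maps to Y equal to that value and Cb, Cr close to zero, since each chroma row of (2) sums to (approximately) zero.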

The RCT is simply a reversible integer-to-integer approximation to the ICT (similar to that proposed in [14]). The forward transform is given by

$$V_0(x,y) = \left\lfloor \tfrac{1}{4}\left(U_0(x,y) + 2U_1(x,y) + U_2(x,y)\right) \right\rfloor \tag{4a}$$

$$V_1(x,y) = U_2(x,y) - U_1(x,y) \tag{4b}$$

$$V_2(x,y) = U_0(x,y) - U_1(x,y) \tag{4c}$$

where U0(x, y), U1(x, y), U2(x, y), V0(x, y), V1(x, y), and V2(x, y) are defined as above. The inverse transform can be shown to be

$$U_1(x,y) = V_0(x,y) - \left\lfloor \tfrac{1}{4}\left(V_1(x,y) + V_2(x,y)\right) \right\rfloor \tag{5a}$$

$$U_0(x,y) = V_2(x,y) + U_1(x,y) \tag{5b}$$

$$U_2(x,y) = V_1(x,y) + U_1(x,y) \tag{5c}$$
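Because (4) and (5) involve only integer additions and floor divisions by four, the exact invertibility of the RCT can be confirmed exhaustively over a small range of inputs. In the Python sketch below (function names hypothetical), the floor-division operator `//` rounds toward negative infinity, matching the floor in the equations even for the negative values produced by the level shift.

```python
def rct_forward(r: int, g: int, b: int):
    """Forward RCT, (4a)-(4c). Python's // floors toward -infinity, as required."""
    y = (r + 2 * g + b) // 4
    return y, b - g, r - g          # (V0, V1, V2)


def rct_inverse(v0: int, v1: int, v2: int):
    """Inverse RCT, (5a)-(5c)."""
    g = v0 - (v1 + v2) // 4
    return v2 + g, g, v1 + g        # (U0, U1, U2)


print(rct_forward(10, 20, 30))      # (20, 10, -10)

# Exhaustive check on a small cube of signed (level-shifted) samples.
span = range(-16, 16)
assert all(rct_inverse(*rct_forward(r, g, b)) == (r, g, b)
           for r in span for g in span for b in span)
```

The inversion works because U0 + 2U1 + U2 = (V1 + V2) + 4U1, so the floor in (4a) equals U1 plus the floor in (5a). Truncating division (such as C's `/` on negative integers) would not match the floor in (4a) and (5a), and can break exact reversibility when the sums are negative.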

The inverse intercomponent transform stage in the decoder essentially undoes the effects of the forward intercomponent transform stage in the encoder. If a multicomponent transform was applied during encoding, its inverse is applied here. Unless the transform is reversible, however, the inversion may only be approximate due to the effects of finite-precision arithmetic.

G. Intracomponent Transform

Following the intercomponent transform stage in the encoder

is the intracomponent transform stage. In this stage, transforms

that operate on individual components can be applied. The par-

ticular type of operator employed for this purpose is the wavelet

transform. Through the application of the wavelet transform,

a component is split into numerous frequency bands (i.e., sub-

bands). Due to the statistical properties of these subband signals,

(4a)

(4b)

(4c)

(5a)

(5b)

(5c)

�

5

h+

-

y0[n]

-

s0

6

Qλ−1

6

Aλ−1(z)

- -

6

-

-

?

Aλ−2(z)

?

Qλ−2

?

h+

h+

-

+

−

6

Q1

- -

?

A0(z)

?

Q0

+ −

?-

h+

6

A1(z)

- -6

···

···

···

···

(a)

···

-

···

···

···

(b)

y1[n]

-

s1

h+

↑ 2

-

x[n]

-

6

z−1

6

↑ 2

-

Fig. 6. Lifting realization of 1-D 2-channel PR UMDFB. (a) Analysis side. (b)

Synthesis side.

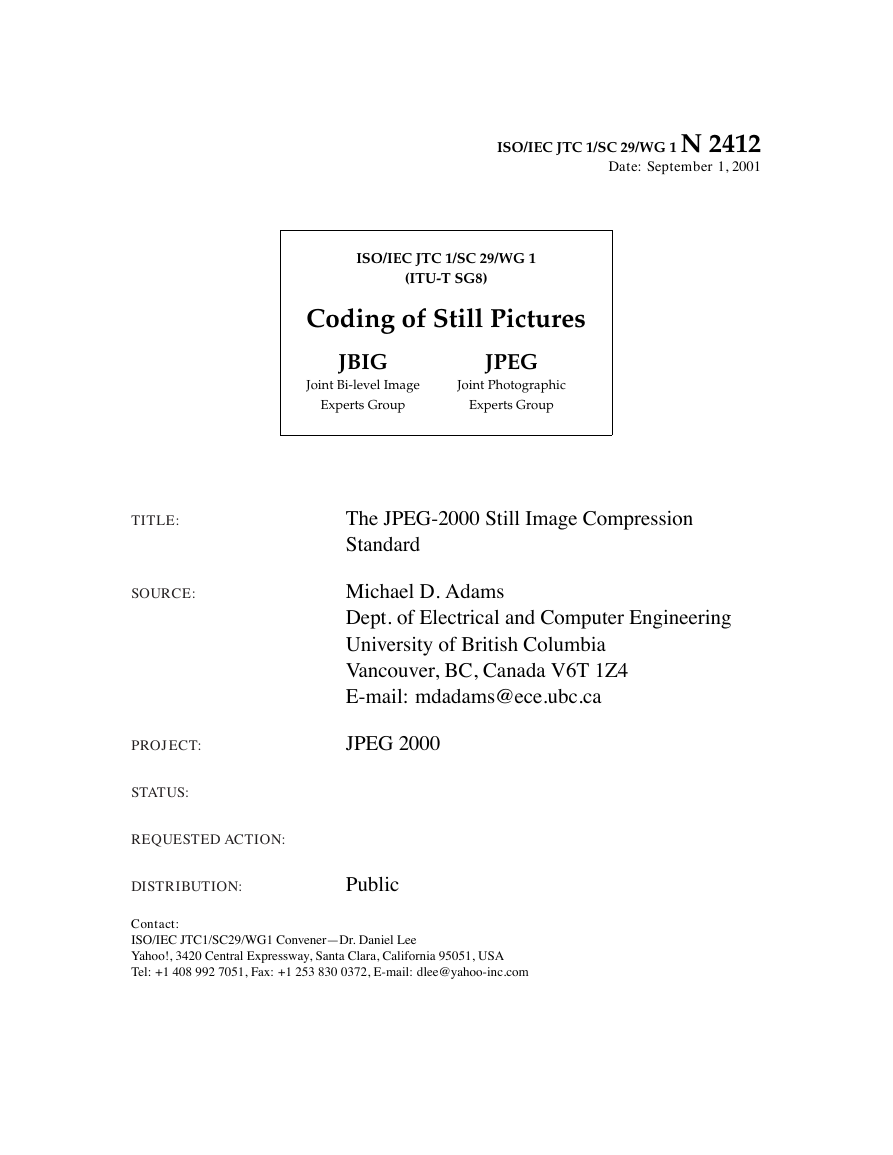

wavelet decomposition is associated with R resolution levels,

numbered from 0 to R − 1, with 0 and R − 1 corresponding

to the coarsest and finest resolutions, respectively. Each sub-

band of the decomposition is identified by its orientation (e.g.,

LL, LH, HL, HH) and its corresponding resolution level (e.g.,

0, 1, . . . , R − 1). The input tile-component signal is consid-

ered to be the LLR−1 band. At each resolution level (except

the lowest) the LL band is further decomposed. For example,

the LLR−1 band is decomposed to yield the LLR−2, LHR−2,

HLR−2, and HHR−2 bands. Then, at the next level, the LLR−2

band is decomposed, and so on. This process repeats until the

LL0 band is obtained, and results in the subband structure illus-

trated in Fig. 7. In the degenerate case where no transform is

applied, R = 1, and we effectively have only one subband (i.e.,

the LL0 band).

As described above, the wavelet decomposition can be as-

sociated with data at R different resolutions. Suppose that the

top-left and bottom-right samples of a tile-component have co-

ordinates (tcx0, tcy0) and (tcx1−1, tcy1−1), respectively. This

Fig. 5. Codec structure. The structure of the (a) encoder and (b) decoder.

(a)

(b)

↓ 2

x[n]

- -

?

z

?

-

↓ 2

h+

-

6

Q1

6

A1(z)

--

6

--

?

A0(z)

?

Q0

?

h+

h+

-

y0[n]

-

s−1

0

y1[n]

-

s−1

1

-

+

−

6

Qλ−1

6

Aλ−1(z)

-

6

- -

?

Aλ−2(z)

?

Qλ−2

−

?-

h+

-

+

the transformed data can usually be coded more efficiently than

the original untransformed data.

Both reversible integer-to-integer [13, 17–19] and nonre-

versible real-to-real wavelet transforms are employed by the

baseline codec. The basic building block for such transforms

is the 1-D 2-channel perfect-reconstruction (PR) uniformly-

maximally-decimated (UMD) filter bank (FB) which has the

general form shown in Fig. 6. Here, we focus on the lifting

realization of the UMDFB [20, 21], as it can be used to imple-

ment the reversible integer-to-integer and nonreversible real-to-

real wavelet transforms employed by the baseline codec. In fact,

for this reason, it is likely that this realization strategy will be

employed by many codec implementations. The analysis side

of the UMDFB, depicted in Fig. 6(a), is associated with the for-

ward transform, while the synthesis side, depicted in Fig. 6(b),

is associated with the inverse transform.

In the diagram, the

{Ai(z)}λ−1

i=0 denote filter transfer

functions, quantization operators, and (scalar) gains, respec-

tively. To obtain integer-to-integer mappings, the {Qi(x)}λ−1

i=0

are selected such that they always yield integer values, and the

{si}1

i=0 are chosen as integers. For real-to-real mappings, the

{Qi(x)}λ−1

i=0 are simply chosen as the identity, and the {si}1

i=0

are selected from the real numbers. To facilitate filtering at sig-

nal boundaries, symmetric extension [22] is employed. Since

an image is a 2-D signal, clearly we need a 2-D UMDFB. By

applying the 1-D UMDFB in both the horizontal and vertical di-

rections, a 2-D UMDFB is effectively obtained. The wavelet

transform is then calculated by recursively applying the 2-D

UMDFB to the lowpass subband signal obtained at each level

in the decomposition.

i=0 , {Qi(x)}λ−1

i=0 , and {si}1

Suppose that a (R − 1)-level wavelet transform is to be em-

ployed. To compute the forward transform, we apply the anal-

ysis side of the 2-D UMDFB to the tile-component data in an

iterative manner, resulting in a number of subband signals be-

ing produced. Each application of the analysis side of the 2-D

UMDFB yields four subbands: 1) horizontally and vertically

lowpass (LL), 2) horizontally lowpass and vertically highpass

(LH), 3) horizontally highpass and vertically lowpass (HL), and

4) horizontally and vertically highpass (HH). A (R − 1)-level

PreprocessingForwardIntercomponentTransformForwardIntracomponentTransformQuantizationTier−1EncoderTier−2EncoderCodedImageRate ControlOriginalImageTier−2DecoderTier−1DecoderInverseIntracomponentTransformPostprocessingDequantizationInverseIntercomponentTransformCodedImageReconstructedImage�

6

of the original data.) As will be seen, the coordinate systems for

the various resolutions and subbands of a tile-component play

an important role in codec behavior.

By examining (1), (6), and (7), we observe that the coordi-

nates of the top-left sample for a particular subband, denoted

(tbx0, tby0), are partially determined by the XOsiz and YOsiz

parameters of the reference grid. At each level of the decompo-

sition, the parity (i.e., oddness/evenness) of tbx0 and tby0 affects

the outcome of the downsampling process (since downsampling

is shift variant). In this way, the XOsiz and YOsiz parameters

have a subtle, yet important, effect on the transform calculation.

Having described the general transform framework, we now

describe the two specific wavelet transforms supported by the

baseline codec: the 5/3 and 9/7 transforms. The 5/3 transform

is reversible, integer-to-integer, and nonlinear. This transform

was proposed in [13], and is simply an approximation to a linear

wavelet transform proposed in [23]. The 5/3 transform has an

underlying 1-D UMDFB with the parameters:

λ = 2, A0(z) = − 1

Q0(x) = −−x , Q1(x) =

2(z + 1), A1(z) = 1

x + 1

2

,

4(1 + z−1),

s0 = s1 = 1.

(8)

The 9/7 transform is nonreversible and real-to-real. This trans-

form, proposed in [10], is also employed in the FBI fingerprint

compression standard [24] (although the normalizations differ).

The 9/7 transform has an underlying 1-D UMDFB with the pa-

rameters:

λ = 4, A0(z) = α0(z + 1), A1(z) = α1(1 + z−1),

A2(z) = α2(z + 1), A3(z) = α3(1 + z−1),

(9)

Qi(x) = x for i = 0, 1, 2, 3,

α0 ≈ −1.586134, α1 ≈ −0.052980, α2 ≈ 0.882911,

α3 ≈ 0.443506,

s0 ≈ 1.230174,

s1 = 1/s0.

Fig. 7. Subband structure.

being the case, the top-left and bottom-right samples of the

tcy0/2R−r−1

tile-component at resolution r have coordinates (trx0, try0) and

(trx1 − 1, try1 − 1), respectively, given by

tcy1/2R−r−1

tcx0/2R−r−1

tcx1/2R−r−1

(trx0, try0) =

(trx1, try1) =

(6a)

(6b)

,

,

where r is the particular resolution of interest. Thus, the tile-

component signal at a particular resolution has the size (trx1 −

trx0) × (try1 − try0).

Not only are the coordinate systems of the resolution levels

important, but so too are the coordinate systems for the various

subbands. Suppose that we denote the coordinates of the upper

left and lower right samples in a subband as (tbx0, tby0) and

(tbx1−1, tby1−1), respectively. These quantities are computed

as

(tbx0, tby0)

,

,

2

tcx0

tcx0

tcx0

tcx0

tcx1

tcx1

tcx1

tcx1

2R−r−1

2R−r−1 − 1

2R−r−1

2R−r−1 − 1

2R−r−1

2R−r−1 − 1

2R−r−1

2R−r−1 − 1

2

2

2

,

,

=

=

(tbx1, tby1)

,

2

tcy0

tcy0

tcy0

,

tcy0

2R−r−1

2R−r−1

2R−r−1 − 1

2R−r−1 − 1

2R−r−1 − 1

2R−r−1

2R−r−1 − 1

2R−r−1

,

tcy1

tcy1

tcy1

tcy1

2

2

2

,

for LL band

for HL band

(7a)

for LH band

for HH band

for LL band

for HL band

(7b)

for LH band

for HH band

Since the 5/3 transform is reversible, it can be employed for

either lossy or lossless coding. The 9/7 transform, lacking the

reversible property, can only be used for lossy coding. The num-

ber of resolution levels is a parameter of each transform. A typi-

cal value for this parameter is six (for a sufficiently large image).

The encoder may transform all, none, or a subset of the compo-

nents. This choice is at the encoder’s discretion.

The inverse intracomponent transform stage in the decoder

essentially undoes the effects of the forward intracomponent

transform stage in the encoder. If a transform was applied to

a particular component during encoding, the corresponding in-

verse transform is applied here. Due to the effects of finite-

precision arithmetic, the inversion process is not guaranteed to

be exact unless reversible transforms are employed.

H. Quantization/Dequantization

where r is the resolution level to which the band belongs, R is

the number of resolution levels, and tcx0, tcy0, tcx1, and tcy1 are

as defined in (1). Thus, a particular band has the size (tbx1 −

tbx0)×(tby1−tby0). From the above equations, we can also see

that (tbx0, tby0) = (trx0, try0) and (tbx1, tby1) = (trx1, try1)

for the LLr band, as one would expect. (This should be the case

since the LLr band is equivalent to a reduced resolution version

In the encoder, after the tile-component data has been transformed (by intercomponent and/or intracomponent transforms), the resulting coefficients are quantized. Quantization allows greater compression to be achieved by representing transform coefficients with only the minimal precision required to obtain the desired level of image quality. Quantization of transform coefficients is one of the two primary sources of information loss in the coding path (the other source being the discarding of coding pass data, as will be described later).

Transform coefficients are quantized using scalar quantiza-

tion with a deadzone. A different quantizer is employed for the

coefficients of each subband, and each quantizer has only one

parameter, its step size. Mathematically, the quantization pro-

cess is defined as

V (x, y) = |U(x, y)| /∆ sgn U(x, y)

(10)

where ∆ is the quantizer step size, U(x, y) is the input sub-

band signal, and V (x, y) denotes the output quantizer indices

for the subband. Since this equation is specified in an infor-

mative clause of the standard, encoders need not use this precise

formula. This said, however, it is likely that many encoders will,

in fact, use the above equation.
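A minimal Python sketch of the deadzone quantizer in (10) follows. The function name `quantize` and the use of plain floats for coefficients are assumptions made for illustration. Because any input with |U(x, y)| < ∆ maps to index zero, the zero bin is twice as wide as the others, which is the "deadzone".

```python
import math


def quantize(u: float, step: float) -> int:
    """Deadzone scalar quantizer of Eq. (10): V = floor(|U| / step) * sgn(U).

    Hypothetical helper; one step size is used for all coefficients of a subband.
    """
    if step <= 0:
        raise ValueError("step size must be positive")
    magnitude = math.floor(abs(u) / step)
    # Apply the sign of u; an index of zero stays zero either way.
    return magnitude if u >= 0 else -magnitude


# A toy "subband" quantized with step size 2.
subband = [7.9, -7.9, 1.5, -1.5, 0.0, 4.0]
print([quantize(u, 2.0) for u in subband])  # [3, -3, 0, 0, 0, 2]
```

With a step size of one and integer-valued coefficients, as in integer mode, `quantize(u, 1.0)` simply returns `u`, which is why quantization is effectively bypassed in that case.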

The baseline codec has two distinct modes of operation, re-

ferred to herein as integer mode and real mode. In integer mode,

all transforms employed are integer-to-integer in nature (e.g.,

RCT, 5/3 WT). In real mode, real-to-real transforms are em-

ployed (e.g., ICT, 9/7 WT). In integer mode, the quantizer step

sizes are always fixed at one, effectively bypassing quantization

and forcing the quantizer indices and transform coefficients to

be one and the same. In this case, lossy coding is still possible,

but rate control is achieved by another mechanism (to be dis-

cussed later). In the case of real mode (which implies lossy cod-

ing), the quantizer step sizes are chosen in conjunction with rate

control. Numerous strategies are possible for the selection of the

quantizer step sizes, as will be discussed later in Section III-L.

As one might expect, the quantizer step sizes used by the en-

coder are conveyed to the decoder via the code stream. In pass-

ing, we note that the step sizes specified in the code stream are

relative and not absolute quantities. That is, the quantizer step

size for each band is specified relative to the nominal dynamic

range of the subband signal.
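To make "relative" concrete, the sketch below assumes the representation we understand ISO/IEC 15444-1 (Annex E) to use: a 5-bit exponent εb and an 11-bit mantissa μb, with ∆b = 2^(Rb − εb) (1 + μb / 2^11), where Rb is the nominal dynamic range of subband b. This formula is not given in the text above, and the helper names `encode_step` and `decode_step` are hypothetical; treat this as an illustration, not a normative implementation.

```python
import math

MANTISSA_BITS = 11  # assumed width of the mu_b field
EXPONENT_MAX = 31   # assumed 5-bit epsilon_b field


def encode_step(step: float, dyn_range: int) -> tuple[int, int]:
    """Split an absolute step size into (epsilon, mu) relative to R_b = dyn_range."""
    if step <= 0:
        raise ValueError("step size must be positive")
    frac, exp2 = math.frexp(step)            # step = frac * 2**exp2, 0.5 <= frac < 1
    exponent = exp2 - 1                       # step = (2 * frac) * 2**exponent
    mu = round((2.0 * frac - 1.0) * (1 << MANTISSA_BITS))
    if mu == 1 << MANTISSA_BITS:              # rounding overflowed the mantissa
        mu, exponent = 0, exponent + 1
    epsilon = dyn_range - exponent
    if not 0 <= epsilon <= EXPONENT_MAX:
        raise ValueError("step size not representable for this dynamic range")
    return epsilon, mu


def decode_step(epsilon: int, mu: int, dyn_range: int) -> float:
    """Recover Delta_b = 2**(R_b - epsilon_b) * (1 + mu_b / 2**11)."""
    return 2.0 ** (dyn_range - epsilon) * (1.0 + mu / (1 << MANTISSA_BITS))


eps, mu = encode_step(0.75, 9)
print(eps, mu, decode_step(eps, mu, 9))  # 10 1024 0.75
```

Since the mantissa has only 11 bits, an arbitrary step size is reproduced only approximately (to a relative error of roughly 2^-12), whereas step sizes that are exactly representable, such as 0.75 above, round-trip without error.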

In the decoder, the dequantization stage tries to undo the ef-

fects of quantization. Unless all of the quantizer step sizes are

less than or equal to one, the quantization process will normally

result in some information loss, and this inversion process is

only approximate. The quantized transform coefficient values

are obtained from the quantizer indices. Mathematically, the de-

quantization process is defined as

$$U(x, y) = \bigl(V(x, y) + r \operatorname{sgn} V(x, y)\bigr)\,\Delta \qquad (11)$$

where ∆ is the quantizer step size, r is a bias parameter, V (x, y)

are the input quantizer indices for the subband, and U(x, y) is

the reconstructed subband signal. Although the value of r is

not normatively specified in the standard, it is likely that many

decoders will use the value of one half.
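The reconstruction rule in (11) can be sketched as follows, assuming the commonly used bias r = 1/2. The function name `dequantize` is hypothetical. With r = 1/2, a nonzero index is reconstructed at the midpoint of its quantizer interval, while index zero is always reconstructed as zero.

```python
def dequantize(v: int, step: float, r: float = 0.5) -> float:
    """Reconstruction of Eq. (11): U = (V + r * sgn(V)) * step.

    Hypothetical helper; r is the (non-normative) reconstruction bias.
    """
    if v == 0:
        return 0.0
    sign = 1 if v > 0 else -1
    return (v + r * sign) * step


print([dequantize(v, 2.0) for v in (3, -3, 0, 2)])  # [7.0, -7.0, 0.0, 5.0]
```

For example, index 3 with ∆ = 2 covers inputs in [6, 8) and is reconstructed as 7. Hence the error is at most ∆/2 for nonzero indices but can approach ∆ inside the deadzone.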

I. Tier-1 Coding

After quantization is performed in the encoder, tier-1 coding

takes place. This is the first of two coding stages. The quantizer

indices for each subband are partitioned into code blocks. Code

blocks are rectangular in shape, and their nominal size is a free

parameter of the coding process, subject to certain constraints,

most notably: 1) the nominal width and height of a code block must be an integer power of two, and 2) the product of the nominal width and height cannot exceed 4096.

Fig. 8. Partitioning of subband into code blocks.
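The two constraints above translate directly into a validity check. In the sketch below, the function name is hypothetical. The extra 4 to 1024 range check on each dimension reflects our reading of ISO/IEC 15444-1 and is not stated in the text.

```python
def is_valid_code_block_size(width: int, height: int) -> bool:
    """Check nominal code block dimensions (hypothetical helper).

    From the text: both dimensions are powers of two and width * height <= 4096.
    Assumed from the standard: each dimension lies in 4..1024.
    """
    def is_pow2(n: int) -> bool:
        return n > 0 and (n & (n - 1)) == 0

    return (
        is_pow2(width)
        and is_pow2(height)
        and 4 <= width <= 1024
        and 4 <= height <= 1024
        and width * height <= 4096
    )


print(is_valid_code_block_size(64, 64))   # True  (xcb = ycb = 6)
print(is_valid_code_block_size(128, 64))  # False (product is 8192)
print(is_valid_code_block_size(48, 64))   # False (48 is not a power of two)
print(is_valid_code_block_size(1024, 4))  # True
```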

Suppose that the nominal code block size is tentatively chosen to be $2^{xcb} \times 2^{ycb}$. In tier-2 coding, yet to be discussed, code blocks are grouped into what are called precincts. Since code blocks are not permitted to cross precinct boundaries, a reduction in the nominal code block size may be required if the precinct size is sufficiently small. Suppose that the nominal code block size after any such adjustment is $2^{xcb'} \times 2^{ycb'}$, where $xcb' \le xcb$ and $ycb' \le ycb$. The subband is partitioned into code blocks by overlaying the subband with a rectangular grid having horizontal and vertical spacings of $2^{xcb'}$ and $2^{ycb'}$, respectively, as shown in Fig. 8. The origin of this grid is anchored at (0, 0) in the coordinate system of the subband. A typical choice for the nominal code block size is 64 × 64 (i.e., xcb = 6 and ycb = 6).
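The partitioning can be sketched as follows. We assume half-open sample ranges [tbx0, tbx1) × [tby0, tby1) and take the adjusted exponents xcb' and ycb' as given. The function name is hypothetical. Because the grid is anchored at (0, 0) rather than at (tbx0, tby0), code blocks along the subband edges may be smaller than nominal.

```python
def partition_into_code_blocks(tbx0, tby0, tbx1, tby1, xcb_p, ycb_p):
    """Return code blocks as half-open (x0, y0, x1, y1) tuples in row-major order.

    Hypothetical helper: the grid has spacings 2**xcb_p and 2**ycb_p and is
    anchored at (0, 0) in subband coordinates; blocks are clipped to the subband.
    """
    w, h = 1 << xcb_p, 1 << ycb_p
    blocks = []
    # Start from the grid line at or before the subband's top-left sample.
    for gy in range((tby0 // h) * h, tby1, h):
        for gx in range((tbx0 // w) * w, tbx1, w):
            blocks.append((max(gx, tbx0), max(gy, tby0),
                           min(gx + w, tbx1), min(gy + h, tby1)))
    return blocks


# Same subband width (17 samples) with two different values of tbx0.
for start in (3, 0):
    blocks = partition_into_code_blocks(start, 2, start + 17, 13, 3, 3)
    print([x1 - x0 for (x0, _, x1, _) in blocks[:3]])
# [5, 8, 4]
# [8, 8, 1]
```

The two runs show the effect described next: shifting (tbx0, tby0), for example through a different choice of XOsiz, moves the code block boundaries relative to the subband samples even though the subband size is unchanged.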

Let us, again, denote the coordinates of the top-left sample

in a subband as (tbx0, tby0). As explained in Section III-G,

the quantity (tbx0, tby0) is partially determined by the refer-

ence grid parameters XOsiz and YOsiz.

In turn, the quantity

(tbx0, tby0) affects the position of code block boundaries within

a subband. In this way, the XOsiz and YOsiz parameters have

an important effect on the behavior of the tier-1 coding process

(i.e., they affect the location of code block boundaries).

After a subband has been partitioned into code blocks, each

of the code blocks is independently coded. The coding is per-

formed using the bit-plane coder described later in Section III-J.

For each code block, an embedded code consisting of numerous coding passes is produced. The output of the tier-1 encoding

process is, therefore, a collection of coding passes for the vari-

ous code blocks.

On the decoder side, the bit-plane coding passes for the var-

ious code blocks are input to the tier-1 decoder, these passes

are decoded, and the resulting data is assembled into subbands.

In this way, we obtain the reconstructed quantizer indices for each subband.
