N a t u r a l l a N g u a g e P r o c e s s iN g

Question Answering

over Knowledge Bases

Kang Liu, Jun Zhao, Shizhu He, and Yuanzhe Zhang, Institute of Automation,

Chinese Academy of Sciences

Deep Web search is on the cusp of a profound change, from simple docu-

ment retrieval to natural language question answering (QA).1 Ultimately,

search needs to precisely understand the meanings of users’ natural language

questions, extract useful facts from all information on the Web, and select

appropriate answers. To fulfill this aim, aca-

demics and industry researchers have put

forth more efforts in knowledge bases (KBs),

where information is organized in a net struc-

ture and semantic relations can be effectively

reflected. Semantic items in the text, including

entities, classes, and their semantic relations,

can be extracted from the raw data—an-

swers corresponding to users’ questions can

be grasped through direct matching in the KB.

Several KBs have been constructed and

published, such as DBpedia,2 Freebase,3

and YAGO.4 These KBs usually have com-

plex structures and are highly heteroge-

neous—accessing them is a big obstacle for

the task of question answering over KBs.

Although structured query languages (such

as SPARQL) have been designed and pro-

vided for visiting these structured data, only

a few experts and developers know how to

use them. In contrast, common users usually

raise questions in natural language forms.

Therefore, determining how to translate

natural language questions into structured

language-based queries is the core goal of

question answering over KBs, which has at-

tracted a lot of attention lately.5–10 For ex-

ample, with respect to the question, “Which

software has been developed by organiza-

tions founded in California?,” the aim is to

automatically convert this utterance into a

SPARQL query that contains the following

subject-property-object (SPO) triple format:

SELECT DISTINCT ?uri

WHERE{

?uri rdf:type dbo:Software.

?uri dbo:developer ?x1.

?x1 rdf:type dbo:Company.

?x1 dbo:foundationPlace

dbr:California.}

The key of such translation is to under-

stand the meaning of the question. The

dominant methods usually convert a natu-

ral language question into a complete and

formal meaning representation (FMR) first,

such as logical form. Based on FMR, the

structured query is then smoothly generated.

However, completing this aim isn’t trivial.

Four questions should be addressed:

• How do we represent the meaning of

questions grounded to a specific KB? This

meaning representation should reflect the

corresponding concepts in the KB and or-

ganize them according to their semantic re-

lations in the question. The representation

Previous research on

question answering

over knowledge bases

has focused on a

constrained domain,

but with the increase

in existing knowledge

bases, understanding

and translating it is

challenging.

26

1541-1672/15/$31.00 © 2015 IEEE

Published by the IEEE Computer Society

IEEE INTELLIGENT SYSTEMS

�

should be unambiguous and adapted

to the machine for automated infer-

ence and processing.

• How do we convert natural language

questions to the predefined formal

meanings? Text is usually ambigu-

ous, which makes this conversion

very difficult. Different formal mean-

ings can be generated from the same

text under different contexts, so effi-

cient disambiguation models or rules

should be constructed.

• How do we scale the QA to a large-

scale, open domain KB? With the in-

crease of Web data, the sizes of KBs

inflate quickly. A KB could contain

billions of entities, millions of rela-

tions, and thousands of domains,

which makes the textual ambiguity

more serious and also makes tradi-

tional rule-based or labeled data-

dependent methods infeasible.

• How do we answer questions over

multiple, interlinked KBs? In many sce-

narios, answering a question requires

synthesizing multiple KBs. The con-

tents from different KBs can make

up each other, although their struc-

tures are heterogeneous. Many

unknown alignments exist among

entities, classes, and relations from

various KBs. However, discovering

such alignments and performing

question conversion over multiple

heterogeneous KBs isn’t easy.

This article gives a rough sketch of

question answering over KBs, looking

at traditional approaches and attempt-

ing to answer these four questions.

Formal Meaning

Representation

Representing the meaning of a text

is an intractable philosophical chal-

lenge.11 According to different perspec-

tives, communities, and people, there

isn’t a harmonious scheme related to

this topic. With a practical perspec-

tive on developing question answering

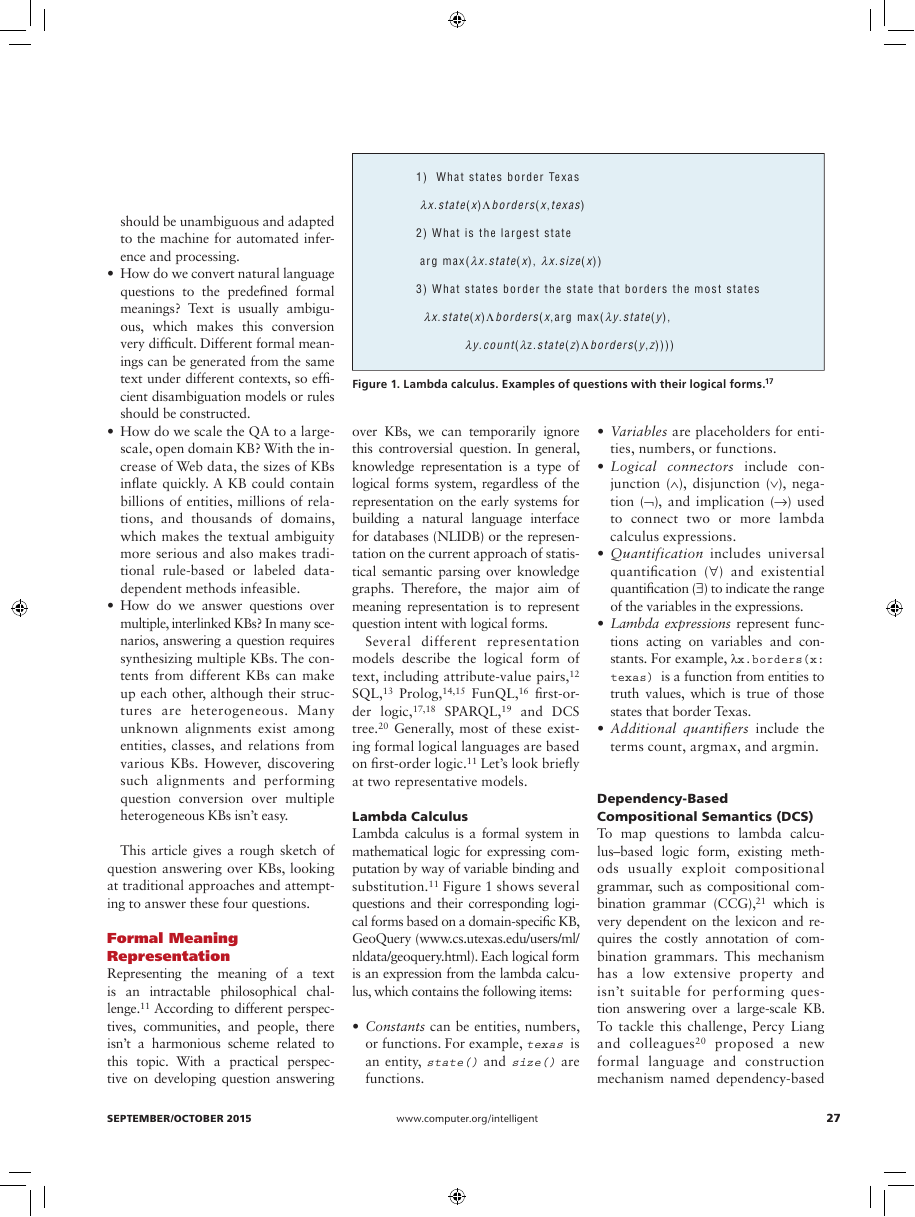

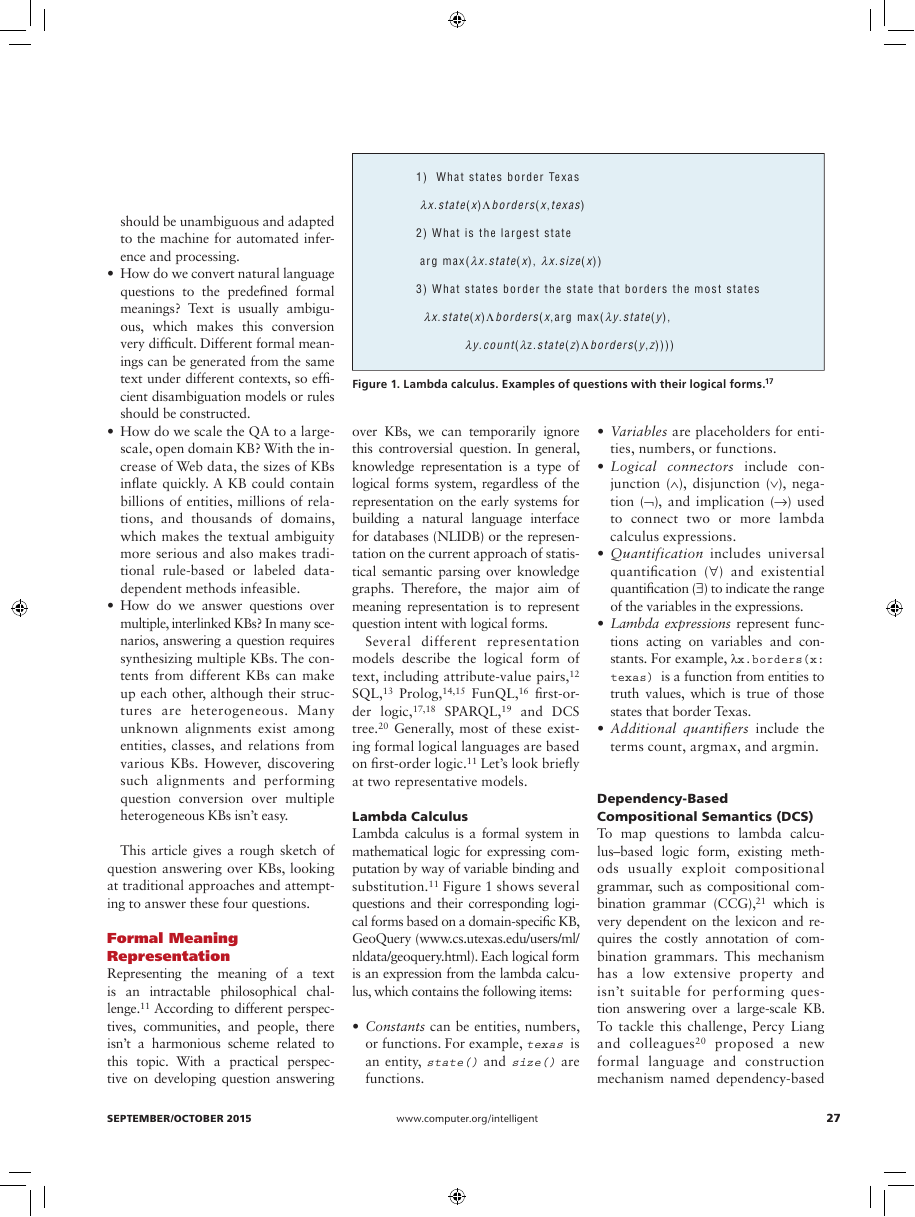

1) What states border Te xas

�x.state( x)Λborders( x,texas)

2) What is the largest state

arg max( �x.state (x), �x.size( x))

3) What states border the state that borders the most states

�x.state( x)Λborders( x,arg max(� y.state( y),

�y.count( �z.state( z)Λborders( y,z))))

Figure 1. Lambda calculus. Examples of questions with their logical forms.17

over KBs, we can temporarily ignore

this controversial question. In general,

knowledge representation is a type of

logical forms system, regardless of the

representation on the early systems for

building a natural language interface

for databases (NLIDB) or the represen-

tation on the current approach of statis-

tical semantic parsing over knowledge

graphs. Therefore, the major aim of

meaning representation is to represent

question intent with logical forms.

Several different representation

models describe the logical form of

text, including attribute-value pairs,12

SQL,13 Prolog,14,15 FunQL,16 first-or-

der logic,17,18 SPARQL,19 and DCS

tree.20 Generally, most of these exist-

ing formal logical languages are based

on first-order logic.11 Let’s look briefly

at two representative models.

Lambda calculus

Lambda calculus is a formal system in

mathematical logic for expressing com-

putation by way of variable binding and

substitution.11 Figure 1 shows several

questions and their corresponding logi-

cal forms based on a domain-specific KB,

GeoQuery (www.cs.utexas.edu/users/ml/

nldata/geoquery.html). Each logical form

is an expression from the lambda calcu-

lus, which contains the following items:

• Constants can be entities, numbers,

or functions. For example, texas is

an entity, state() and size() are

functions.

• Variables are placeholders for enti-

ties, numbers, or functions.

• Logical connectors include con-

junction (∧), disjunction (∨), nega-

tion (¬), and implication (→) used

to connect two or more lambda

calculus expressions.

• Quantification includes universal

quantification (∀) and existential

quantification (∃) to indicate the range

of the variables in the expressions.

• Lambda expressions represent func-

tions acting on variables and con-

stants. For example, λx.borders(x:

texas) is a function from entities to

truth values, which is true of those

states that border Texas.

• Additional quantifiers include the

terms count, argmax, and argmin.

Dependency-based

compositional Semantics (DcS)

To map questions to lambda calcu-

lus–based logic form, existing meth-

ods usually exploit compositional

grammar, such as compositional com-

bination grammar (CCG),21 which is

very dependent on the lexicon and re-

quires the costly annotation of com-

bination grammars. This mechanism

has a low extensive property and

isn’t suitable for performing ques-

tion answering over a large-scale KB.

To tackle this challenge, Percy Liang

and colleagues20 proposed a new

formal language and construction

mechanism named dependency-based

SEpTEMbEr/ocTobEr 2015

www.computer.org/intelligent

27

�

Example: major city in california

z = city;11:

major

city

;11:

loc;21:

CA

1

1

major

1

1

loc

2

1

CA

Figure 2. Dependency-based

compositional semantics tree.20 The

right leaf node CA indicates the entity

California in the knowledge base, and

node loc corresponds to a binary

predicate loc(x,y). The relation 2_1

between loc and CA denotes the

relation between these two predicates,

where the second component of

loc(x,y) (y) must equal the first

component of CA (itself).

compositional semantics (DCS). In DCS,

logical forms are tree structures, called

DCS tree, which are parallel to syntac-

tic dependency trees, which makes them

convenient for parsing and learning.

Moreover, a DCS tree or subtree en-

codes a constraint satisfaction problem

that can efficiently obtain a DCS tree’s

denotation. In addition, DCS provides a

uniform way to deal with scope varia-

tion problem through mark-execute

construction, which addresses the prob-

lem by marking a node low in the tree

with a mark relation (one of E, Q, C),

and invoking it with an execute relation

(Ei) in a higher node.

The DCS formal language’s syntax

is built based on two components:

• Predicates are just symbols and

contain null (meaning empty set),

domain-independent predicates (<,

>, avg, and =), and domain-specific

predicates (the semantic symbol in

the KB, including, entities, classes,

and semantic relations).

• Relations define the relation between

adjacent two tree nodes, which con-

tain several operations, such as

joint (j_i), extract (E), aggregate

(S), quantify (Q), execute (Xi),

and compare (C).

Figure 2 gives an example of a DCS

tree, which is a directed, rooted tree. The

denotation of a DCS tree is the set of fea-

sible values for the root node, so in this

figure, the right leaf node CA indicates

the entity California in the KB, and node

loc corresponds to a binary predicate

loc(x,y). The relation 2_1 between

loc and CA denotes the relation between

these two predicates, where the sec-

ond component of loc(x,y) (y) must

equal the first component of CA (itself).

How to represent a text’s meaning

is a continuing issue for the various ar-

guments about meaning. Current pro-

posals include powerful representation

models that cover meaning from re-

stricted language12 to full language.17,18

Most researchers tend to ignore the dis-

agreements in meaning representation

models and attempt to build practical

systems,20 such as question answering

over KBs.19

Semantic Construction

Mechanism

Aimed at aforementioned semantic

representation, it’s important to exploit

semantic compositional mechanisms

for translating natural language ques-

tions to structured representations.

Based on Frege’s principle of compo-

sitionality in formal semantics,22 ex-

isting approaches usually represent

an utterance’s meaning by composing

the meaning of its parts. In this man-

ner of semantic composition, the domi-

nant paradigm can be divided into two

groups: grammar- and learning-based

approaches.

Grammar-based Approaches

Several grammars are designed for

translation, such as shift-reduce deri-

vations,14 synchronous grammars,23-25

hybrid trees,26 CCGs,17,27,28 and context

free grammars (CFG).29 In this article, we

primarily focus on the most typical one,

CCG, which has an efficient linguistic

parsing formalism, has a transparent

interface between the surface syntax and

underlying semantic representation, and

can be used to model a wide range of

language phenomena. CCG’s core is is

the lexicon, Λ, which encodes all gram-

matical information. Specifically, the en-

tries in Λ consist of word, syntactic, and

semantic categories. The format of each

entry is w := s : l, which indicates a word

or phrase w with a syntactic type s and

its corresponding logical form l. Syntac-

tic categories s can be atomic categories

(NP or S) or complex categories of the

form A/B, where both A and B can be

an atomic or complex category. Logical

forms l are lambda calculus expressions

constructed using predicates from a tar-

geted KB. For example,

Utah := NP : utah

Idaho := NP : idaho

Borders := (S\NP)/NP : lx.ly.borders

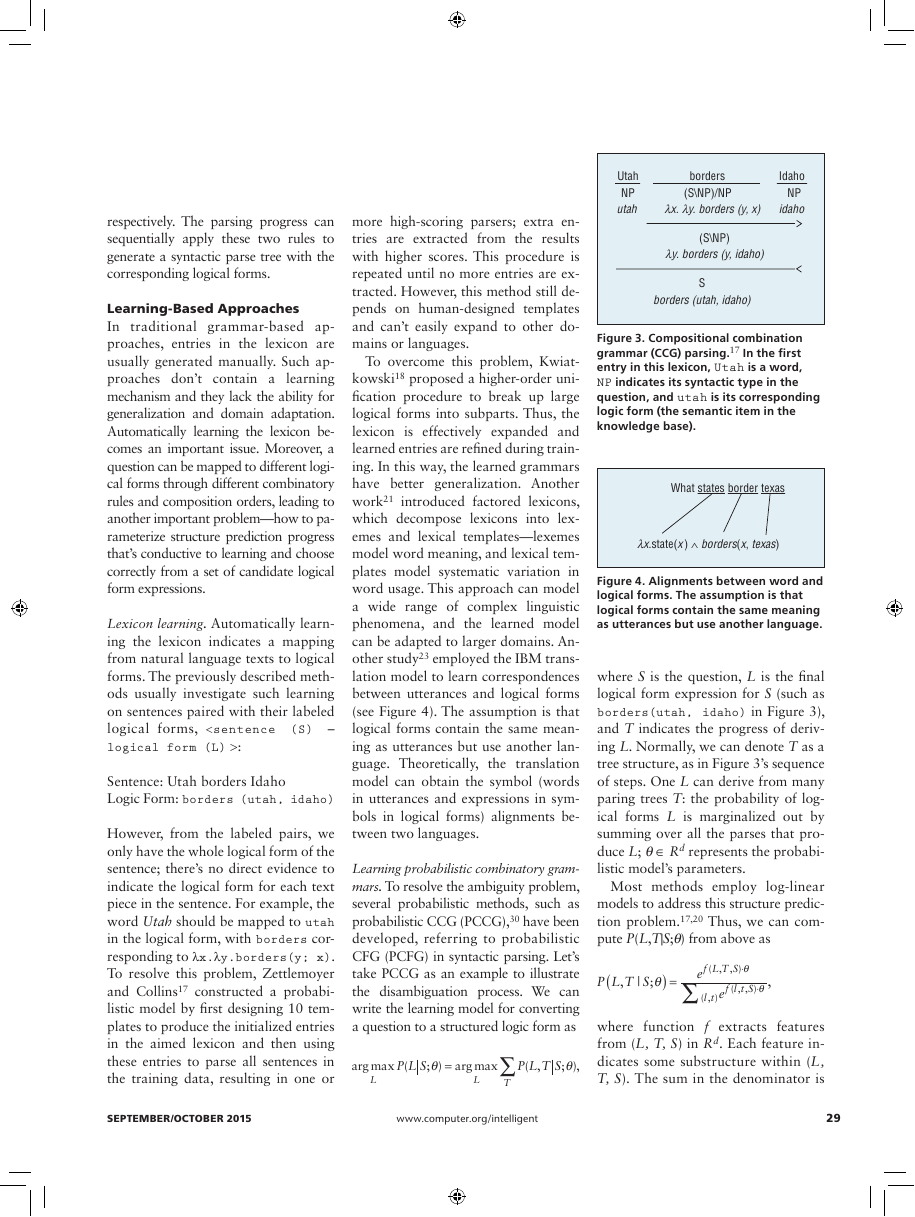

(y; x)

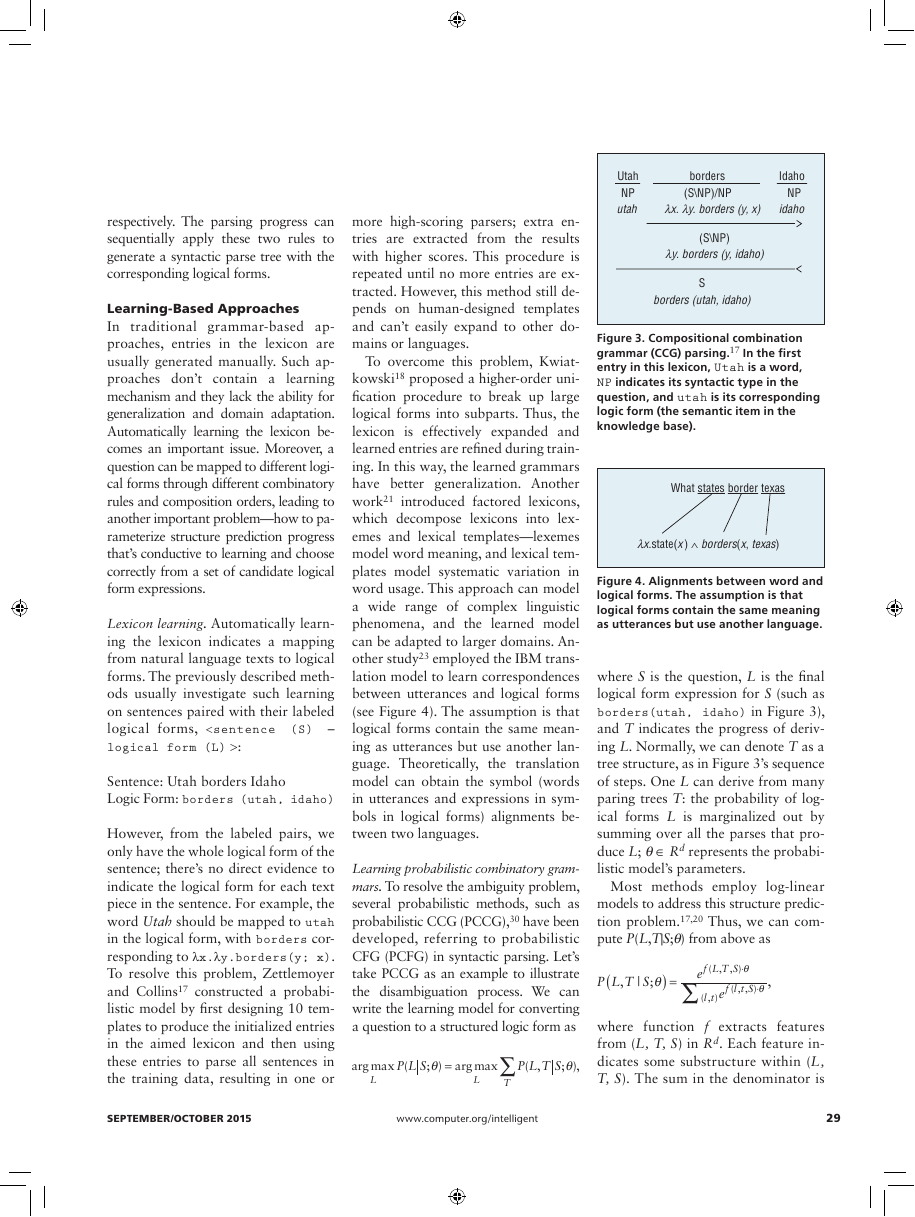

In the first entry in this lexicon,

Utah is a word, NP indicates its syn-

tactic type in the question, and utah

is its corresponding logic form (the

semantic item in the KB).

In addition to the lexicon, CCGs

have a set of combinatory rules that

describe how adjacent syntactic utter-

ances can be recursively integrated. The

logical form combination mechanism is

also indicated. The whole combination

is bottom-up, along with the sentence’s

syntactic structure; Figure 3 provides

an example:

X/Y : f Y : g ⇒ X : f(g) (>)

Y : g X/Y : f ⇒ X : f(g) (<)

According to these rules, the text with

complex category X/Y (X\Y) behaves

like a function that accepts an argument

of category Y on its right (left) and re-

turns a value of category X, where (>)

and (<) are rules of a functional ap-

plication that denotes the forward

and backward combination direction,

28

www.computer.org/intelligent

IEEE INTELLIGENT SYSTEMS

Natural laNguage ProcessiNg�

respectively. The parsing progress can

sequentially apply these two rules to

generate a syntactic parse tree with the

corresponding logical forms.

Learning-based Approaches

In traditional grammar-based ap-

proaches, entries in the lexicon are

usually generated manually. Such ap-

proaches don’t contain a learning

mechanism and they lack the ability for

generalization and domain adaptation.

Automatically learning the lexicon be-

comes an important issue. Moreover, a

question can be mapped to different logi-

cal forms through different combinatory

rules and composition orders, leading to

another important problem—how to pa-

rameterize structure prediction progress

that’s conductive to learning and choose

correctly from a set of candidate logical

form expressions.

Lexicon learning. Automatically learn-

ing the lexicon indicates a mapping

from natural language texts to logical

forms. The previously described meth-

ods usually investigate such learning

on sentences paired with their labeled

logical forms, :

Sentence: Utah borders Idaho

Logic Form: borders (utah, idaho)

However, from the labeled pairs, we

only have the whole logical form of the

sentence; there’s no direct evidence to

indicate the logical form for each text

piece in the sentence. For example, the

word Utah should be mapped to utah

in the logical form, with borders cor-

responding to λx.λy.borders(y; x).

To resolve this problem, Zettlemoyer

and Collins17 constructed a probabi-

listic model by first designing 10 tem-

plates to produce the initialized entries

in the aimed lexicon and then using

these entries to parse all sentences in

the training data, resulting in one or

more high-scoring parsers; extra en-

tries are extracted from the results

with higher scores. This procedure is

repeated until no more entries are ex-

tracted. However, this method still de-

pends on human-designed templates

and can’t easily expand to other do-

mains or languages.

To overcome this problem, Kwiat-

kowski18 proposed a higher-order uni-

fication procedure to break up large

logical forms into subparts. Thus, the

lexicon is effectively expanded and

learned entries are refined during train-

ing. In this way, the learned grammars

have better generalization. Another

work21 introduced factored lexicons,

which decompose lexicons into lex-

emes and lexical templates—lexemes

model word meaning, and lexical tem-

plates model systematic variation in

word usage. This approach can model

a wide range of complex linguistic

phenomena, and the learned model

can be adapted to larger domains. An-

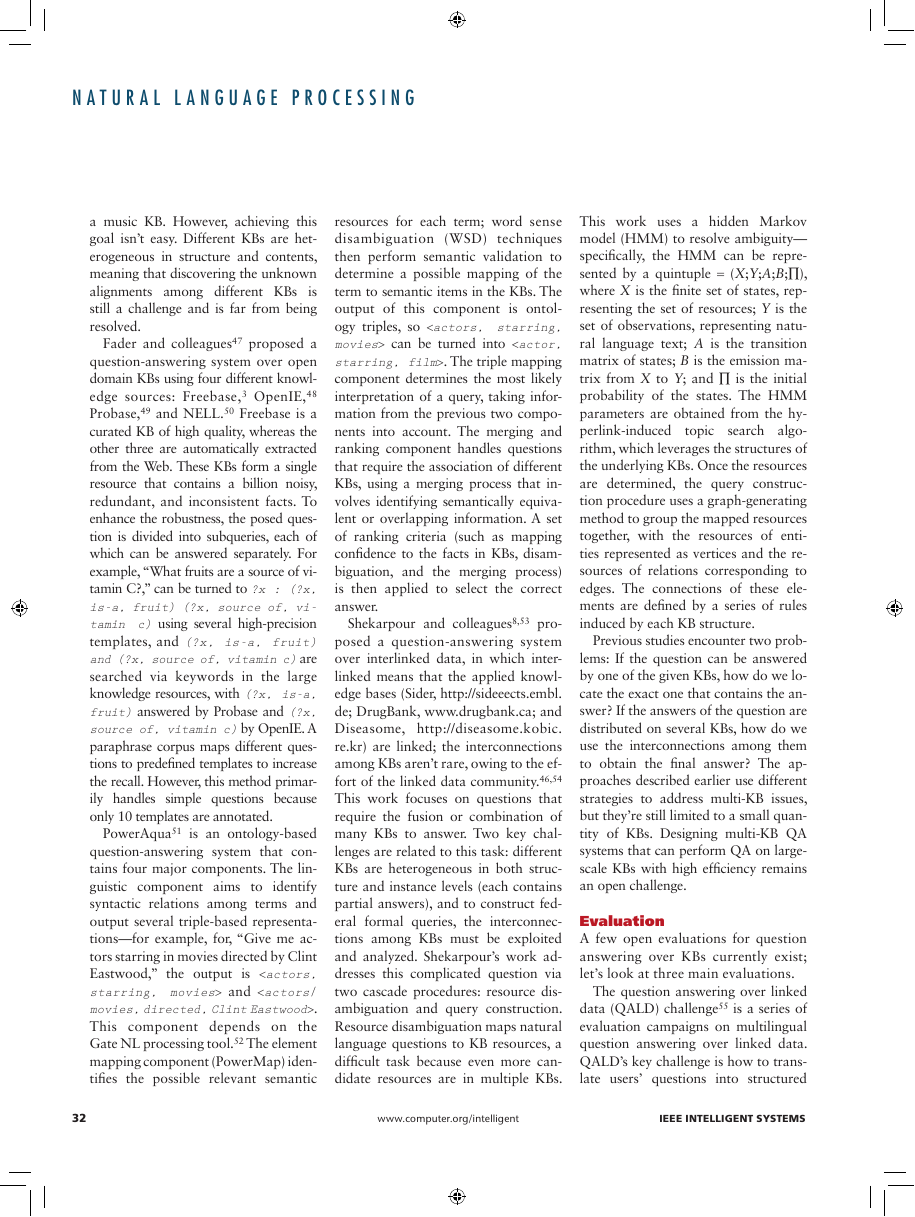

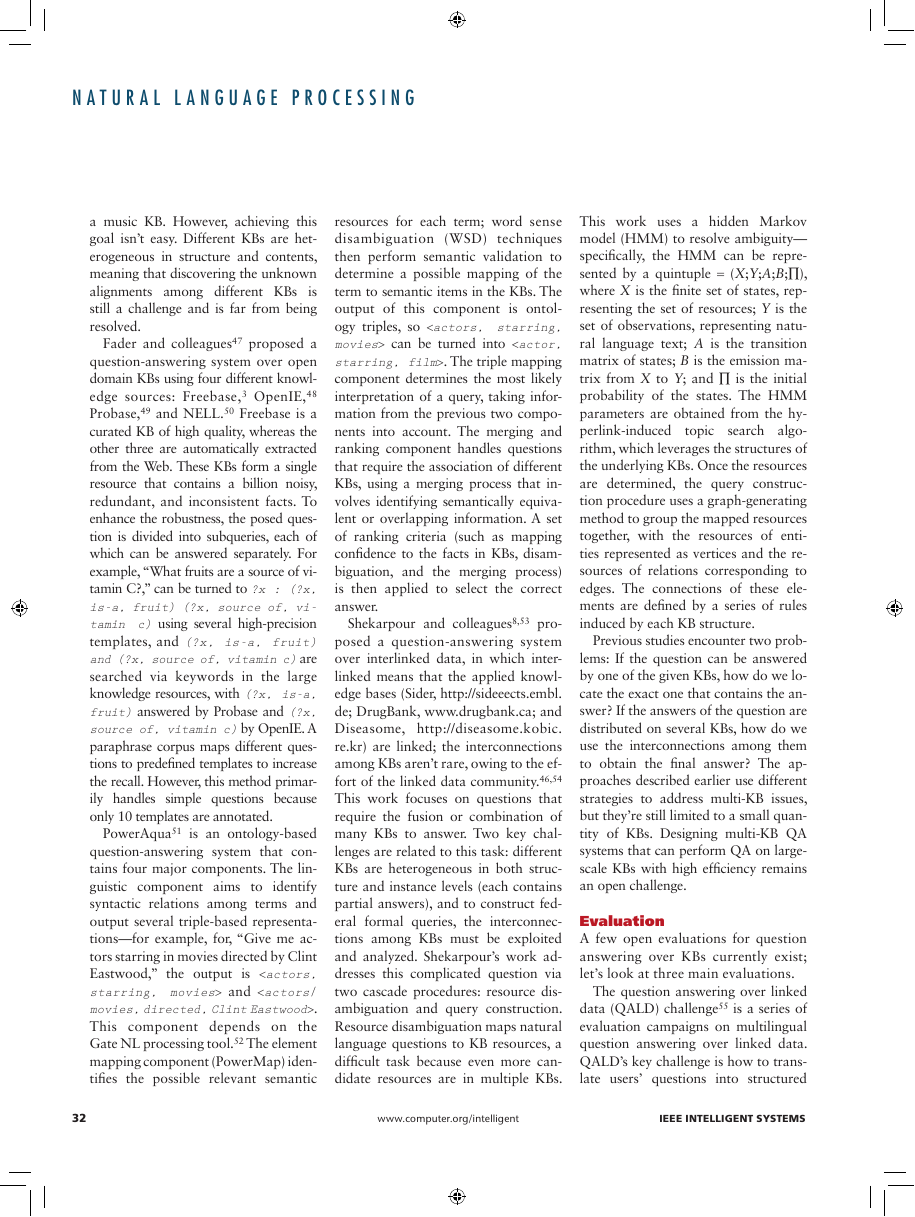

other study23 employed the IBM trans-

lation model to learn correspondences

between utterances and logical forms

(see Figure 4). The assumption is that

logical forms contain the same mean-

ing as utterances but use another lan-

guage. Theoretically, the translation

model can obtain the symbol (words

in utterances and expressions in sym-

bols in logical forms) alignments be-

tween two languages.

Learning probabilistic combinatory gram-

mars. To resolve the ambiguity problem,

several probabilistic methods, such as

probabilistic CCG (PCCG),30 have been

developed, referring to probabilistic

CFG (PCFG) in syntactic parsing. Let’s

take PCCG as an example to illustrate

the disambiguation process. We can

write the learning model for converting

a question to a structured logic form as

arg max (

P L S

L

θ

; )

=

arg max

L

∑

T

P L T S

( ,

θ

; ),

Utah

NP

utah

Idaho

NP

idaho

borders

(S\NP)/NP

�x. �y. borders (y, x)

(S\NP)

�y. borders (y, idaho)

S

borders (utah, idaho)

Figure 3. Compositional combination

grammar (CCG) parsing.17 In the first

entry in this lexicon, Utah is a word,

NP indicates its syntactic type in the

question, and utah is its corresponding

logic form (the semantic item in the

knowledge base).

What states border texas

�x.state(x ) ࢳ borders(x, texas)

Figure 4. Alignments between word and

logical forms. The assumption is that

logical forms contain the same meaning

as utterances but use another language.

where S is the question, L is the final

logical form expression for S (such as

borders(utah, idaho) in Figure 3),

and T indicates the progress of deriv-

ing L. Normally, we can denote T as a

tree structure, as in Figure 3’s sequence

of steps. One L can derive from many

paring trees T: the probability of log-

ical forms L is marginalized out by

summing over all the parses that pro-

duce L; q ∈ Rd represents the probabi-

listic model’s parameters.

Most methods employ log-linear

models to address this structure predic-

tion problem.17,20 Thus, we can com-

pute P(L,T|S;q) from above as

(

P L T S

,

|

) =

θ

;

e

∑

θ

⋅

, )

(

f L T S

,

,

θ

⋅

( , , )

f l t S

e

( , )

l t

where function f extracts features

from (L, T, S) in Rd. Each feature in-

dicates some substructure within (L,

T, S). The sum in the denominator is

SEpTEMbEr/ocTobEr 2015

www.computer.org/intelligent

29

�

over all valid results for S under ei-

ther model (lexicon or grammar).

Based on the training set’s log like-

lihood, we can obtain the best pa-

rameters through an optimization

algorithm (such as SGD, AdaGrad,

AdaDelta, or L-BFGS). Beam-search

and dynamic programming are pop-

ular technologies used for improving

efficiency in semantic parsing. In ad-

dition, several other learning models

exist, such as integer linear program-

ming (ILP),15 the generative model,26

the Markov logic network (MLN),31

and representation learning.32 (Be-

cause of space limitations, this article

omits a detailed description of these.)

Based on Frege’s principle of compo-

sitionality in formal semantics, we can

construct the meaning of a full sen-

tence from its parts. The core issues are

how to obtain the basic formal mean-

ing units, construct semantics from its

pars, and resolve the ambiguity prob-

lems in these two processes.

Question Answering over

Large-Scale Knowledge

Bases

The approaches mentioned earlier are

usually exploited in a limited domain

based on a small-sized KB. They

can’t efficiently fit large-scale occa-

sions—the traditional lexicons used

in these approaches are in a narrow

domain, and the number of items (en-

tities, classes, and relations) in large-

scale KBs is much greater, making it

difficult to learn an effective lexicon

with high coverage. Meanwhile, the

grammars or templates used in the

combination process are also lim-

ited because the questions are usually

more complex in this scenario. More-

over, the manually annotated data re-

quired in the traditional approaches

for training, such as PCCG, are ex-

pensive to acquire for large-scale

KBs. Many efforts attempt to address

these problems.

Lexicon Extension

As mentioned, the lexicons used in tra-

ditional approaches17,18,21,33,34 are usu-

ally effective in limited domains and

smaller KBs. Hence, expanding the lexi-

cons is an important task for construct-

ing a question-answering system over

large-scale KBs.

Cai and Yates35 provided a compo-

nent named Matcher to automatically

find a textual relation rT for a relation

in a KB denoted as rD. For each rD, the

algorithm first identifies its candidate

matches, turning to a Web search engine

to issue queries for database relation rD.

Using snippets of the results, the top 500

word types are chosen as candidates, de-

noted by C(rD). These candidates can

be noisy, so four simple patterns are ap-

plied to filter the noise. Next, point-wise

mutual information (PMI)36 measures

the relatedness between rD and rT. Fi-

nally, a regression model combines the

filtered results and PMI value together

to select the correct candidates.

Berant and colleagues37 proposed a

lexicon construction method to map tex-

tual relations to more than 19,000 predi-

cates in a KB, adopting two strategies for

this challenge. First, the method aligns

a large text corpus to Freebase,35 and,

considering the neighboring predicates,

a bridge operation is made to produce

more difficult alignments. For exam-

ple, in, “What government does Chile

have?,” the predicate is expressed with

the light verb have. Suppose the phrases

Chile and government are parsed as

Chile and Type.FormOfGovernment,

respectively. Using the bridging predi-

cate GovernmentTypeOf, the two parsed

phrases are connected together:

Type.FormOfGovernment ┌┐

GovernmentTypeOf.Chile

Grammar Extension

As mentioned earlier, traditional ap-

proaches usually design specific gram-

mars or templates to construct semantic

representation.6,7 Nevertheless, cover-

age of this type of method is restricted,

especially in large-scale question an-

swering, where questions are more di-

verse in expression. These methods are

also difficult to transfer to various do-

mains and languages.

To settle this problem, He19 pro-

posed a pattern-learning mechanism

to automatically learn new grammar

rules and patterns, designing many

metapatterns instead of specific ones.

These metapatterns are formulated as

first-order logic formulas in MLNs,38

where specific generated patterns are

generated by the training data. The

weights of matching rules are learned

in the training process, so we can eas-

ily select the most effective patterns

for formal query construction.

Supervision reduction

Traditional approaches require manually

annotated logical forms for every ques-

tion as training data. However, this type

of data is expensive to acquire, making it

infeasible for large-scale usage.

One solution is to use weak supervi-

sion data rather than complete, labeled

logical forms. Clarke and colleagues39

took advantage of binary feedback

signals from the external world; this

feedback informs only whether the gen-

erated meaning representation is cor-

rect, and it can be given by nonexperts,

thereby dramatically alleviating the an-

notation burden.

Liang20 used question–answer pairs

rather than question–logic form pairs

as supervision data, utilizing latent

logical forms as intermediate vari-

ables. Although the human effort is

reduced, the learning process be-

comes challenging. As a novel mean-

ing representation, DCS could tackle

this problem: it provides an intuitive

way to model semantics and is expres-

sive for representing complex natural

language questions. Specifically, DCS

trees are recursively constructed from

30

www.computer.org/intelligent

IEEE INTELLIGENT SYSTEMS

Natural laNguage ProcessiNg�

the bottom up. For each span (i..j) in

the question, they build a set of trees

Ci:j(x) corresponding to the final logi-

cal form of span (1..n). Each set of

DCS trees Ci:j(x) is constructed recur-

sively by combining the trees of its sub-

spans Ci:k(x) and Ck′:j(x) for each pair

of split points k, k′. (We must ensure

k < k′ and the words between k and

k′ are ignored.) The construction mech-

anism refers to the Cocke–Younger–

Kasami (CYK) algorithm widely used

in syntactic parsing. (DCS also needs

CFG-like grammars to guide the com-

posite process.)

Fader and colleagues40 proposed the

Paralex system, which merely uses a

paraphrase corpus as supervision. This

approach attempts open domain single-

relation questions, which automatically

induce lexical structures—specifically, it

learns lexical equivalences for relation,

entity, and question templates. Para-

phrase data can be regarded as weaker

supervision, even compared with the

question–answer pairs used elsewhere.20

Berant and Liang41 also used a para-

phrase corpus.

Recently, neural networks have been

applied in question answering over

KBs, with the core idea being to proj-

ect questions and answers (or meaning

representations) to embeddings in the

same low-dimensional space. Conse-

quently, the task of question answering

can be converted to a problem of simi-

larity computation between the embed-

dings of the questions and answers in

this space. Such methods are suitable

for large-scale usage because the em-

beddings are free from domain restric-

tion and require weak supervision.

Bordes and colleagues42 used an ap-

proach that jointly learns representa-

tions of words, entities, and semantic

items in KBs (WordNet43). This work

focuses on utterances that can be rep-

resented by a single relation form,

that is, a relation (subject, direct

object). An utterance is first analyzed

by a semantic role labeler; for exam-

ple, “A musical score accompanies a

television program,” is converted to

accompany_VB (_musical_JJ _score_

NN, _television_program_NN). The

key is to transform these phrases to

meaning representations: _musical_

JJ to _musical_JJ_1 and _score_NN

to _socre_NN_2 (1 in _musical_JJ_1

and 2 in _socre_NN_2 indicate the

sense of the synset in WordNet).

This approach adopts semantic

matching energy ∈ to address the prob-

lem, which uses triples (lhs, rel, rhs) in

the KB as supervision data. Briefly, ∈ is

calculated as follows. First, lhs, rel, and

rhs are mapped to their embeddings,

Elhs, Erel, and Erhs. Then, Elhs and Erel

are associated with Erel, respectively:

Elhs(rel) = gleft(Elhs, Erl), and Erhs(rel)

= gright(Erhs,Erel). Finally, energy ∈ is

computed from Elhs(rel) and Erhs(rel):

∈ (x) = h(Elhs(rel),Erhs(rel)). The train-

ing object is to minimize the energy of

the observed triples in the KB. In this

way, if one of the elements of a tri-

ple is missing, we can predict the cor-

rect element using the above semantic

matching energy. Thus, mapping natu-

ral language phrases to items in a KB

is reduced to finding the lowest energy.

In this manner, KBs themselves become

supervision data.

Yih and colleagues44 presented a

method that takes advantage of a con-

volutional neural networks (CNNs)-

based semantic model (CNNSM) to

build two parsers, one for entity map-

ping and the other for relation map-

ping. The core idea is that the relation

pattern expressed in natural language

and the relation in the KB can be pro-

jected to the same low-dimensional

space through CNNs. The label of en-

tity in the KB is the same as the phrase

of entity in the question. CNNSM

scores relational triples in the KB by

using CNN-provided measures for

each question and selects the top scor-

ing relational triple as the final answer.

Bordes and colleagues45 proposed

a more straightforward technique: in-

stead of mapping entity mentions and

relation patterns to corresponding en-

tities and predicates in the KB, their

work directly maps the question to

a triple tuple in the KB. The natural

language questions and the triples are

represented by low-dimensional em-

beddings, and the similarities between

them are easy and efficient to compute.

A paraphrase corpus is also used for

multitask training, with a goal of ensur-

ing that the similarities between similar

utterances are high. The supervision

data are also question-answer pairs,

but these pairs are automatically gener-

ated by a few patterns, further reducing

the requirement of human effort.

Question Answering over

Multiple Knowledge bases

Another trend is extending question

answering to multiple KBs (multi-KB

QA). With the emergence of linked

data,46 the number of KBs is growing

rapidly, as is the demand for multi-KB

QA. One application scenario is that,

given multiple KBs, the answer for the

posed question is contained in one of

them. This scenario is typical because

many KBs focus on a constrained do-

main—for example, when the question

is about music, a music domain KB

should be located; when the question

is about a movie, a movie domain KB

should be used instead. A second ap-

plication scenario, in which the answer

can’t be derived unless we combine

multiple KBs, is more complicated—

in other words, the clues for the an-

swer are spread across different KBs.

For example, given a question, “What

is the newest album of the actress who

played Raikes in Battleship?,” using a

single KB isn’t enough. First, the movie

KB needs to be accessed to acquire the

answer for, “the actress who played

Raikes in Battleship,” or Rihanna; “the

newest album of Rihanna” comes from

SEpTEMbEr/ocTobEr 2015

www.computer.org/intelligent

31

�

a music KB. However, achieving this

goal isn’t easy. Different KBs are het-

erogeneous in structure and contents,

meaning that discovering the unknown

alignments among different KBs is

still a challenge and is far from being

resolved.

Fader and colleagues47 proposed a

question-answering system over open

domain KBs using four different knowl-

edge sources: Freebase,3 OpenIE,48

Probase,49 and NELL.50 Freebase is a

curated KB of high quality, whereas the

other three are automatically extracted

from the Web. These KBs form a single

resource that contains a billion noisy,

redundant, and inconsistent facts. To

enhance the robustness, the posed ques-

tion is divided into subqueries, each of

which can be answered separately. For

example, “What fruits are a source of vi-

tamin C?,” can be turned to ?x : (?x,

is-a, fruit) (?x, source of, vi-

tamin c) using several high-precision

templates, and (?x, is-a, fruit)

and (?x, source of, vitamin c) are

searched via keywords in the large

knowledge resources, with (?x, is-a,

fruit) answered by Probase and (?x,

source of, vitamin c) by OpenIE. A

paraphrase corpus maps different ques-

tions to predefined templates to increase

the recall. However, this method primar-

ily handles simple questions because

only 10 templates are annotated.

PowerAqua51 is an ontology-based

question-answering system that con-

tains four major components. The lin-

guistic component aims to identify

syntactic relations among terms and

output several triple-based representa-

tions—for example, for, “Give me ac-

tors starring in movies directed by Clint

Eastwood,” the output is

and .

This component depends on the

Gate NL processing tool.52 The element

mapping component (PowerMap) iden-

tifies the possible relevant semantic

resources for each term; word sense

disambiguation (WSD) techniques

then perform semantic validation to

determine a possible mapping of the

term to semantic items in the KBs. The

output of this component is ontol-

ogy triples, so can be turned into . The triple mapping

component determines the most likely

interpretation of a query, taking infor-

mation from the previous two compo-

nents into account. The merging and

ranking component handles questions

that require the association of different

KBs, using a merging process that in-

volves identifying semantically equiva-

lent or overlapping information. A set

of ranking criteria (such as mapping

confidence to the facts in KBs, disam-

biguation, and the merging process)

is then applied to select the correct

answer.

Shekarpour and colleagues8,53 pro-

posed a question-answering system

over interlinked data, in which inter-

linked means that the applied knowl-

edge bases (Sider, http://sideeects.embl.

de; DrugBank, www.drugbank.ca; and

Diseasome, http://diseasome.kobic.

re.kr) are linked; the interconnections

among KBs aren’t rare, owing to the ef-

fort of the linked data community.46,54

This work focuses on questions that

require the fusion or combination of

many KBs to answer. Two key chal-

lenges are related to this task: different

KBs are heterogeneous in both struc-

ture and instance levels (each contains

partial answers), and to construct fed-

eral formal queries, the interconnec-

tions among KBs must be exploited

and analyzed. Shekarpour’s work ad-

dresses this complicated question via

two cascade procedures: resource dis-

ambiguation and query construction.

Resource disambiguation maps natural

language questions to KB resources, a

difficult task because even more can-

didate resources are in multiple KBs.

This work uses a hidden Markov

model (HMM) to resolve ambiguity—

specifically, the HMM can be repre-

sented by a quintuple = (X;Y;A;B;∏),

where X is the finite set of states, rep-

resenting the set of resources; Y is the

set of observations, representing natu-

ral language text; A is the transition

matrix of states; B is the emission ma-

trix from X to Y; and ∏ is the initial

probability of the states. The HMM

parameters are obtained from the hy-

perlink-induced

topic search algo-

rithm, which leverages the structures of

the underlying KBs. Once the resources

are determined, the query construc-

tion procedure uses a graph-generating

method to group the mapped resources

together, with the resources of enti-

ties represented as vertices and the re-

sources of relations corresponding to

edges. The connections of these ele-

ments are defined by a series of rules

induced by each KB structure.

Previous studies encounter two prob-

lems: If the question can be answered

by one of the given KBs, how do we lo-

cate the exact one that contains the an-

swer? If the answers of the question are

distributed on several KBs, how do we

use the interconnections among them

to obtain the final answer? The ap-

proaches described earlier use different

strategies to address multi-KB issues,

but they’re still limited to a small quan-

tity of KBs. Designing multi-KB QA

systems that can perform QA on large-

scale KBs with high efficiency remains

an open challenge.

Evaluation

A few open evaluations for question

answering over KBs currently exist;

let’s look at three main evaluations.

The question answering over linked

data (QALD) challenge55 is a series of

evaluation campaigns on multilingual

question answering over linked data.

QALD’s key challenge is how to trans-

late users’ questions into structured

32

www.computer.org/intelligent

IEEE INTELLIGENT SYSTEMS

Natural laNguage ProcessiNg�forms.56 Participating systems must

return either the correct answers or a

SPARQL query that retrieves the an-

swers. Four QALD sessions have been

held since 2011. The latest, QALD-4,

had three subtasks as follows:

• multilingual question answering

(given a natural language question,

retrieve the correct answers or con-

struct SPARQL queries based on a

given RDF repository [DBpedia]);

• biomedical question answering over

interlinked data (given three inter-

linked biomedical domain KBs, an-

swer the posed questions in natural

language8,53); and

• hybrid question answering (answer

questions using both a structured

knowledge base [DBpedia] and free

text [DBpedia abstracts]).

The linked data track of INEX

(https://inex.mmci.uni-saarland.de/

tracks/lod) aims to combine retrieval

techniques over textual and struc-

tured data. Thus, it decreases the gap

between keyword searches in infor-

mation retrieval and the reasoning

technique for the Semantic Web.

Biomedical Semantic Indexing and

Question Answering (BioASQ; www.

bioasq.org) organizes challenges on

biomedical topics. Large-scale online

biomedical semantic indexing requires

participants to build semantic indexes

of article abstracts. BioASQ requires

participants to give relevant concepts,

articles, snippets, RDF triples, and ex-

act answers, which involve informa-

tion retrieval, QA, summarization, and

other techniques.

Question answering over KBs is

a complex and challenge task.

It comprises many natural language

processing techniques, such as syntac-

tic parsing, entity linking, entity rec-

ognition, and information retrieval. In

the future, we think the following is-

sues for question answering over KBs

need to be further investigated: jointly

modeling KB alignment and question

parsing over interlinked KBs, finding

the implicit relations in questions, re-

ducing the supervision in training pro-

cess or adopting unsupervised learning

for question parsing, and extending

the QA to other languages, such as

Chinese.

Acknowledgments

This work was sponsored by the National

Basic Research Program of China (no.

2014CB340503) and the National Natural

Science Foundation of China (no. 61272332

and no. 61202329).

References

1. O. Etzioni, “Search Needs a Shake-Up,” Na-

ture, vol. 476, no. 7358, 2011, pp. 25–26.

2. J. Lehmann et al., “DBpedia: A Large-

Scale, Multilingual Knowledge Base Ex-

tracted from Wikipedia,” Semantic Webs,

vol. 7, no. 3, 2009, pp. 154–165.

3. K. Bollacker et al., “Freebase: A Col-

laboratively Created Graph Database for

Structuring Human Knowledge,” Proc.

2008 ACM SIGMOD Int’l Conf. Man-

agement of Data, 2008, pp. 1247–1250.

4. F.M. Suchanek, G. Kasneci, and G.

Weikum, “YAGO: A Core of Semantic

Knowledge,” Proc. 16th Int’l Conf.

World Wide Web, 2007, pp. 697–706.

5. A. Freitas and E. Curry, “Natural

Language Queries over Heterogeneous

Linked Data Graphs: A Distributional-

Compositional Semantics Approach,”

Proc. 18th Int’l Conf. Intelligent User

Interfaces, 2014, pp. 279–288.

8. S. Shekarpour, A.-C. Ngonga Ngomo,

and S. Auer, “Question Answering on

Interlinked Data,” Proc. 22nd Int’l Conf.

World Wide Web, 2013, pp. 1145–1156.

9. M. Yahya et al., “Robust Question An-

swering over the Web of Linked Data,”

Proc. 22nd ACM Int’l Conf. Information

and Knowledge Management, 2013,

pp. 1107–1116.

10. J. Bao et al., “Knowledge-Based Question

Answering as Machine Translation,”

Proc. 52nd Ann. Meeting Assoc. Compu-

tational Linguistics, 2014, pp. 967–976.

11. R. Brachman and H. Levesque, Knowl-

edge Representation and Reasoning,

Elsevier, 2004.

12. B.F. Green Jr. et al., “Baseball: An Auto-

matic Question-Answerer,” Proc. Western

Joint IRE-AIEE-ACM Computer Conf.,

1961, pp. 219–224.

13. I. Androutsopoulos, G. Ritchie, and P.

Thanisch, “Masque/SQL: An Efficient

and Portable Natural Language Query

Interface for Relational Databases,” tech.

paper, Dept. of AI, Univ. of Edinburgh,

1993.

14. J.M. Zelle and R.J. Mooney, “Learning

to Parse Database Queries Using Induc-

tive Logic Programming,” Proc. Nat’l

Conf. Artificial Intelligence, 1996,

pp. 1050–1055.

15. L.R. Tang and R.J. Mooney, “Using

Multiple Clause Constructors in Induc-

tive Logic Programming for Semantic

Parsing,” Machine Learning, Springer,

2001, pp. 466–477.

16. R.J. Kate et al., “Learning to Transform

Natural to Formal Languages,” Proc.

20th Nat’l Conf. Artificial Intelligence,

2005, pp. 1062–1068.

6. M. Yahya et al., “Natural Language

17. L.S. Zettlemoyer and M. Collins, “Learn-

Questions for the Web of Data,” Proc.

2012 Joint Conf. Empirical Methods in

Natural Language Processing and Com-

putational Natural Language Learning,

2012, pp. 379–390.

ing to Map Sentences to Logical Form:

Structured Classification with Probabi-

listic Categorial Grammars,” Proc. 21st

Conf. Uncertainty in Artificial Intelli-

gence, 2005, pp. 658–666.

7. C. Unger et al., “Template-Based Ques-

tion Answering over RDF Data,” Proc.

21st Int’l Conf. World Wide Web, 2012,

pp. 639–648.

18. T. Kwiatkowski et al., “Inducing Proba-

bilistic CCG Grammars from Logical

Form with Higher-Order Unification,”

Proc. 2010 Conf. Empirical Methods in

SEpTEMbEr/ocTobEr 2015

www.computer.org/intelligent

33

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc