Abstract

Declaration

Copyright Statement

Acknowledgements

List of Acronyms

List of Symbols

Introduction

Motivation and Aims

Thesis Statement and Hypotheses

Contributions

Papers and Workshops

Papers

Workshops

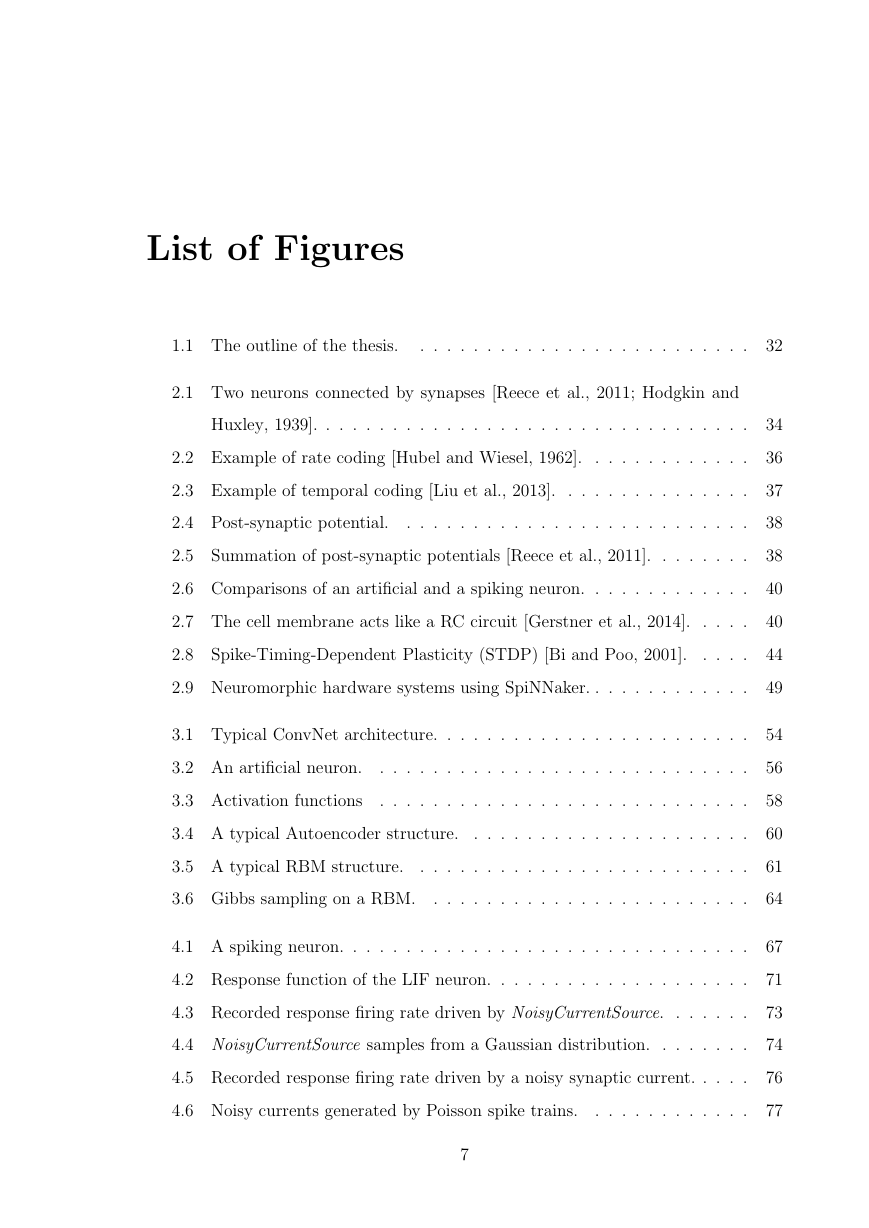

Thesis Structure

Summary

Spiking Neural Networks (SNNs)

Biological Neural Components

Neuron

Neuronal Signals

Signal Transmission

Modelling Spiking Neurons

Neural Dynamics

Neuron Models

Synapse Model

Synaptic Plasticity

Simulating Networks of Spiking Neurons

Software Simulators

Neuromorphic Hardware

Neuromorphic Sensory and Processing Systems

Summary

Deep Learning

Brief Overview

Classical Models

Combined Approaches

Convolutional Networks

Network Architecture

Backpropagation

Activation Function and Vanishing Gradient

Autoencoders (AEs)

Structure

Training

Restricted Boltzmann Machines (RBMs)

Energy-based Model

Objective Function

Contrastive Divergence

Summary

Off-line SNN Training

Introduction

Related Work

Siegert: Modelling the Response Function

Biological Background

Mismatch of The Siegert Function to Practice

Noisy Softplus (NSP)

Generalised Off-line SNN Training

Mapping NSP to Concrete Physical Units

Parametric Activation Functions (PAFs)

Training Method

Fine Tuning

Results

Experiment Description

Single Neuronal Activity

Learning Performance

Recognition Performance

Power Consumption

Summary

On-line SNN Training with SRM

Introduction

Related Work

Spike-based Rate Multiplication (SRM)

Training Deep SNNs

Experimental Setup

AEs

Noisy RBMs

Spiking AEs

Spiking RBMs

Problem of Spike Correlations

Solution 1 (S1): Longer STDP Window

Solution 2 (S2): Noisy Threshold

Solution 3 (S3): Teaching Signal

Combined Solutions (S4)

Case Study

Experimental Setup

Trained Weights

Classification Accuracy

Reconstruction

Summary

Benchmarking Neuromorphic Vision

Introduction

Related Work

NE Dataset

Guiding Principles

The Dataset: NE15-MNIST

Data Description

Performance Evaluation

Model-level Evaluation

Hardware-level Evaluation

Results

Training

Testing

Evaluation

Summary

Conclusion and Future Work

Confirming Research Hypotheses

Future Work

Off-line SNN Training

On-line Biologically-plausible Learning

Evaluation on Neuromorphic Vision

Closing Remarks

Detailed Derivation Process of Equations

Detailed Experimental Results
