Foundations and Trends R in Machine Learning

Vol. 8, No. 3-4 (2015) 231–358

c 2015 S. Bubeck

DOI: 10.1561/2200000050

Convex Optimization: Algorithms and

Complexity

Sébastien Bubeck

Theory Group, Microsoft Research

sebubeck@microsoft.com

5

1

0

2

v

o

N

6

1

]

.

C

O

h

t

a

m

[

2

v

0

8

9

4

.

5

0

4

1

:

v

i

X

r

a

�

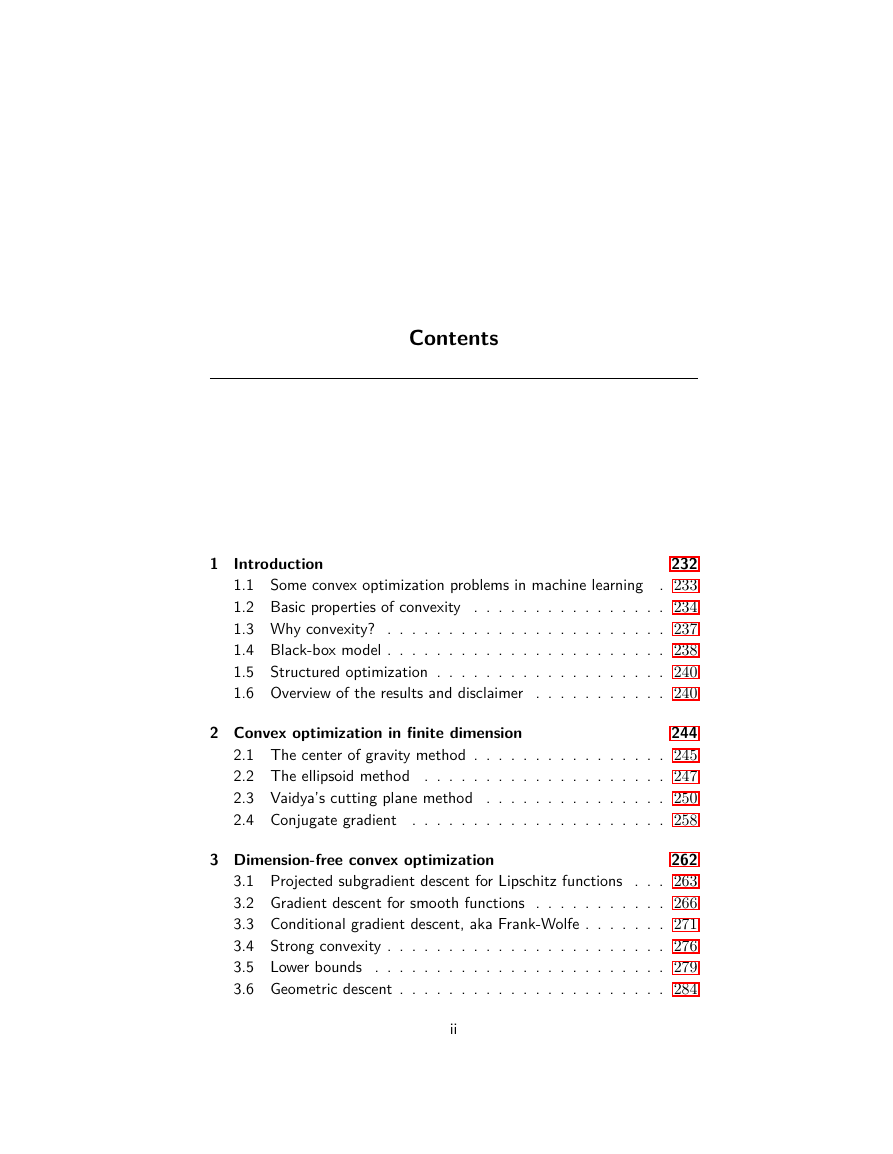

Contents

1 Introduction

232

1.1 Some convex optimization problems in machine learning . 233

1.2 Basic properties of convexity . . . . . . . . . . . . . . . . 234

1.3 Why convexity? . . . . . . . . . . . . . . . . . . . . . . . 237

1.4 Black-box model . . . . . . . . . . . . . . . . . . . . . . . 238

1.5 Structured optimization . . . . . . . . . . . . . . . . . . . 240

1.6 Overview of the results and disclaimer

. . . . . . . . . . . 240

2 Convex optimization in finite dimension

244

2.1 The center of gravity method . . . . . . . . . . . . . . . . 245

2.2 The ellipsoid method . . . . . . . . . . . . . . . . . . . . 247

2.3 Vaidya’s cutting plane method . . . . . . . . . . . . . . . 250

2.4 Conjugate gradient

. . . . . . . . . . . . . . . . . . . . . 258

3 Dimension-free convex optimization

262

3.1 Projected subgradient descent for Lipschitz functions . . . 263

3.2 Gradient descent for smooth functions . . . . . . . . . . . 266

3.3 Conditional gradient descent, aka Frank-Wolfe . . . . . . . 271

3.4 Strong convexity . . . . . . . . . . . . . . . . . . . . . . . 276

3.5 Lower bounds

. . . . . . . . . . . . . . . . . . . . . . . . 279

3.6 Geometric descent . . . . . . . . . . . . . . . . . . . . . . 284

ii

�

iii

3.7 Nesterov’s accelerated gradient descent

. . . . . . . . . . 289

4 Almost dimension-free convex optimization in non-Euclidean

spaces

296

4.1 Mirror maps . . . . . . . . . . . . . . . . . . . . . . . . . 298

4.2 Mirror descent . . . . . . . . . . . . . . . . . . . . . . . . 299

4.3 Standard setups for mirror descent . . . . . . . . . . . . . 301

4.4 Lazy mirror descent, aka Nesterov’s dual averaging . . . . 303

4.5 Mirror prox . . . . . . . . . . . . . . . . . . . . . . . . . . 305

4.6 The vector field point of view on MD, DA, and MP . . . . 307

5 Beyond the black-box model

309

5.1 Sum of a smooth and a simple non-smooth term . . . . . 310

5.2 Smooth saddle-point representation of a non-smooth function312

5.3

Interior point methods . . . . . . . . . . . . . . . . . . . . 318

6 Convex optimization and randomness

329

6.1 Non-smooth stochastic optimization . . . . . . . . . . . . 330

6.2 Smooth stochastic optimization and mini-batch SGD . . . 332

6.3 Sum of smooth and strongly convex functions . . . . . . . 334

6.4 Random coordinate descent . . . . . . . . . . . . . . . . . 338

6.5 Acceleration by randomization for saddle points . . . . . . 342

6.6 Convex relaxation and randomized rounding . . . . . . . . 343

6.7 Random walk based methods . . . . . . . . . . . . . . . . 347

Acknowledgements

References

349

351

�

Abstract

This monograph presents the main complexity theorems in convex op-

timization and their corresponding algorithms. Starting from the fun-

damental theory of black-box optimization, the material progresses to-

wards recent advances in structural optimization and stochastic op-

timization. Our presentation of black-box optimization, strongly in-

fluenced by Nesterov’s seminal book and Nemirovski’s lecture notes,

includes the analysis of cutting plane methods, as well as (acceler-

ated) gradient descent schemes. We also pay special attention to non-

Euclidean settings (relevant algorithms include Frank-Wolfe, mirror

descent, and dual averaging) and discuss their relevance in machine

learning. We provide a gentle introduction to structural optimization

with FISTA (to optimize a sum of a smooth and a simple non-smooth

term), saddle-point mirror prox (Nemirovski’s alternative to Nesterov’s

smoothing), and a concise description of interior point methods. In

stochastic optimization we discuss stochastic gradient descent, mini-

batches, random coordinate descent, and sublinear algorithms. We also

briefly touch upon convex relaxation of combinatorial problems and the

use of randomness to round solutions, as well as random walks based

methods.

S. Bubeck. Convex Optimization: Algorithms and Complexity. Foundations and

Trends R in Machine Learning, vol. 8, no. 3-4, pp. 231–358, 2015.

DOI: 10.1561/2200000050.

�

1

Introduction

The central objects of our study are convex functions and convex sets

in Rn.

Definition 1.1 (Convex sets and convex functions). A set X ⊂ Rn is

said to be convex if it contains all of its segments, that is

∀(x, y, γ) ∈ X × X × [0, 1], (1 − γ)x + γy ∈ X .

A function f : X → R is said to be convex if it always lies below its

chords, that is

∀(x, y, γ) ∈ X × X × [0, 1], f((1 − γ)x + γy) ≤ (1 − γ)f(x) + γf(y).

We are interested in algorithms that take as input a convex set X

and a convex function f and output an approximate minimum of f

over X . We write compactly the problem of finding the minimum of f

over X as

min. f(x)

s.t. x ∈ X .

In the following we will make more precise how the set of constraints X

and the objective function f are specified to the algorithm. Before that

232

�

1.1. Some convex optimization problems in machine learning

233

we proceed to give a few important examples of convex optimization

problems in machine learning.

1.1 Some convex optimization problems in machine learning

Many fundamental convex optimization problems in machine learning

take the following form:

mX

i=1

fi(x) + λR(x),

min.

x∈Rn

(1.1)

where the functions f1, . . . , fm,R are convex and λ ≥ 0 is a fixed

parameter. The interpretation is that fi(x) represents the cost of using

x on the ith element of some data set, and R(x) is a regularization term

which enforces some “simplicity” in x. We discuss now major instances

of (1.1). In all cases one has a data set of the form (wi, yi) ∈ Rn×Y, i =

1, . . . , m and the cost function fi depends only on the pair (wi, yi). We

refer to Hastie et al. [2001], Schölkopf and Smola [2002], Shalev-Shwartz

and Ben-David [2014] for more details on the origin of these important

problems. The mere objective of this section is to expose the reader to a

few concrete convex optimization problems which are routinely solved.

In classification one has Y = {−1, 1}. Taking fi(x) = max(0, 1 −

yix>wi) (the so-called hinge loss) and R(x) = kxk2

2 one obtains the

SVM problem. On the other hand taking fi(x) = log(1+exp(−yix>wi))

(the logistic loss) and again R(x) = kxk2

2 one obtains the (regularized)

logistic regression problem.

In regression one has Y = R. Taking fi(x) = (x>wi − yi)2 and

R(x) = 0 one obtains the vanilla least-squares problem which can be

rewritten in vector notation as

min.

x∈Rn

kW x − Y k2

2,

where W ∈ Rm×n is the matrix with w>

i on the ith row and Y =

(y1, . . . , yn)>. With R(x) = kxk2

2 one obtains the ridge regression prob-

lem, while with R(x) = kxk1 this is the LASSO problem Tibshirani

[1996].

Our last two examples are of a slightly different flavor. In particular

the design variable x is now best viewed as a matrix, and thus we

�

234

Introduction

denote it by a capital letter X. The sparse inverse covariance estimation

problem can be written as follows, given some empirical covariance

matrix Y ,

min. Tr(XY ) − logdet(X) + λkXk1

s.t. X ∈ Rn×n, X> = X, X 0.

Intuitively the above problem is simply a regularized maximum likeli-

hood estimator (under a Gaussian assumption).

Finally we introduce the convex version of the matrix completion

problem. Here our data set consists of observations of some of the

entries of an unknown matrix Y , and we want to “complete" the unob-

served entries of Y in such a way that the resulting matrix is “simple"

(in the sense that it has low rank). After some massaging (see Can-

dès and Recht [2009]) the (convex) matrix completion problem can be

formulated as follows:

min. Tr(X)

s.t. X ∈ Rn×n, X> = X, X 0, Xi,j = Yi,j for (i, j) ∈ Ω,

where Ω ⊂ [n]2 and (Yi,j)(i,j)∈Ω are given.

1.2 Basic properties of convexity

A basic result about convex sets that we shall use extensively is the

Separation Theorem.

Theorem 1.1 (Separation Theorem). Let X ⊂ Rn be a closed convex

set, and x0 ∈ Rn \ X . Then, there exists w ∈ Rn and t ∈ R such that

w>x0 < t, and ∀x ∈ X , w>x ≥ t.

Note that if X is not closed then one can only guarantee that

w>x0 ≤ w>x,∀x ∈ X (and w 6= 0). This immediately implies the Sup-

porting Hyperplane Theorem (∂X denotes the boundary of X , that is

the closure without the interior):

Theorem 1.2 (Supporting Hyperplane Theorem). Let X ⊂ Rn be a con-

vex set, and x0 ∈ ∂X . Then, there exists w ∈ Rn, w 6= 0 such that

∀x ∈ X , w>x ≥ w>x0.

�

1.2. Basic properties of convexity

235

We introduce now the key notion of subgradients.

Definition 1.2 (Subgradients). Let X ⊂ Rn, and f : X → R. Then

g ∈ Rn is a subgradient of f at x ∈ X if for any y ∈ X one has

f(x) − f(y) ≤ g>(x − y).

The set of subgradients of f at x is denoted ∂f(x).

To put it differently, for any x ∈ X and g ∈ ∂f(x), f is above the

linear function y 7→ f(x)+g>(y−x). The next result shows (essentially)

that a convex functions always admit subgradients.

Proposition 1.1 (Existence of subgradients). Let X ⊂ Rn be convex,

and f : X → R. If ∀x ∈ X , ∂f(x) 6= ∅ then f is convex. Conversely

if f is convex then for any x ∈ int(X ), ∂f(x) 6= ∅. Furthermore if f is

convex and differentiable at x then ∇f(x) ∈ ∂f(x).

Before going to the proof we recall the definition of the epigraph of

a function f : X → R:

epi(f) = {(x, t) ∈ X × R : t ≥ f(x)}.

It is obvious that a function is convex if and only if its epigraph is a

convex set.

Proof. The first claim is almost trivial: let g ∈ ∂f((1− γ)x + γy), then

by definition one has

f((1 − γ)x + γy) ≤ f(x) + γg>(y − x),

f((1 − γ)x + γy) ≤ f(y) + (1 − γ)g>(x − y),

which clearly shows that f is convex by adding the two (appropriately

rescaled) inequalities.

Now let us prove that a convex function f has subgradients in the

interior of X . We build a subgradient by using a supporting hyperplane

to the epigraph of the function. Let x ∈ X . Then clearly (x, f(x)) ∈

∂epi(f), and epi(f) is a convex set. Thus by using the Supporting

Hyperplane Theorem, there exists (a, b) ∈ Rn × R such that

a>x + bf(x) ≥ a>y + bt,∀(y, t) ∈ epi(f).

(1.2)

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc