Title page

Copyright

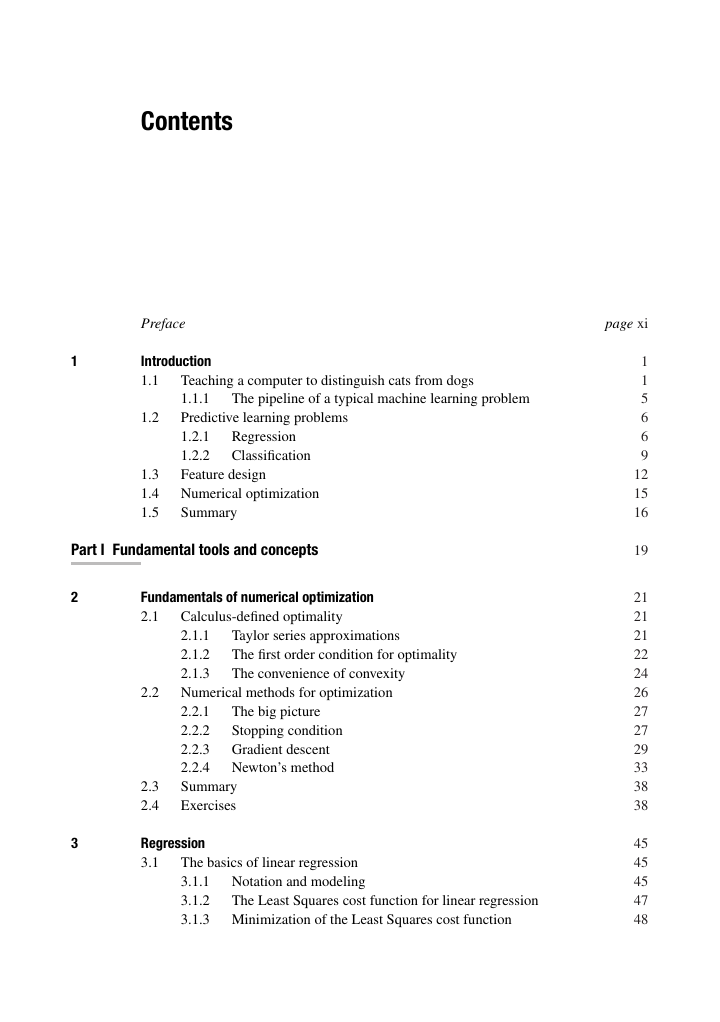

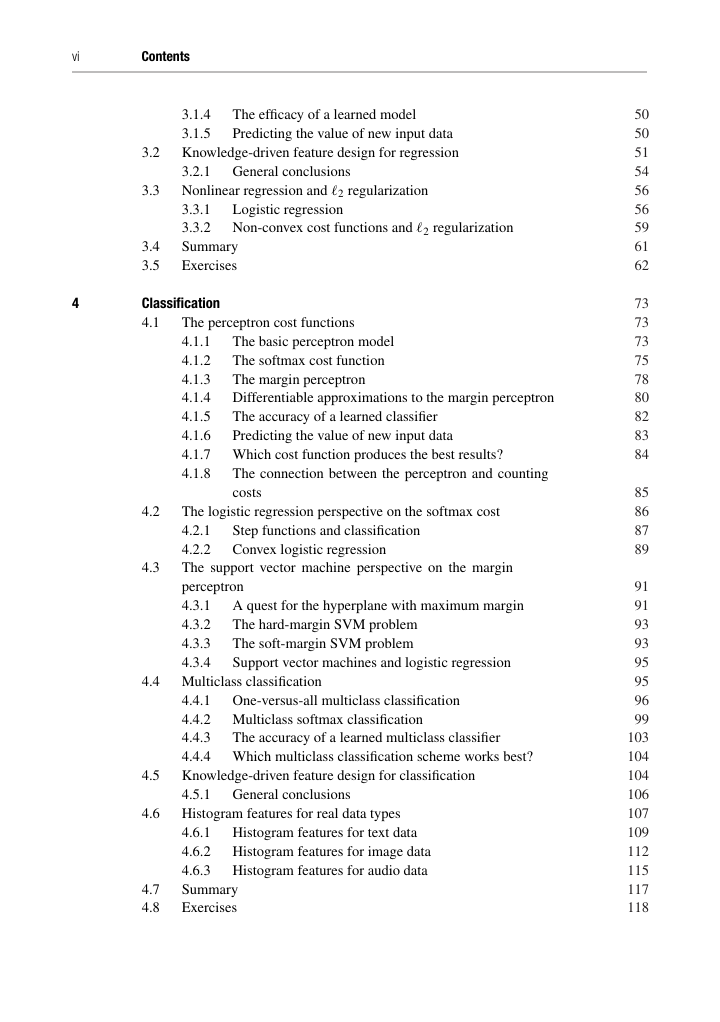

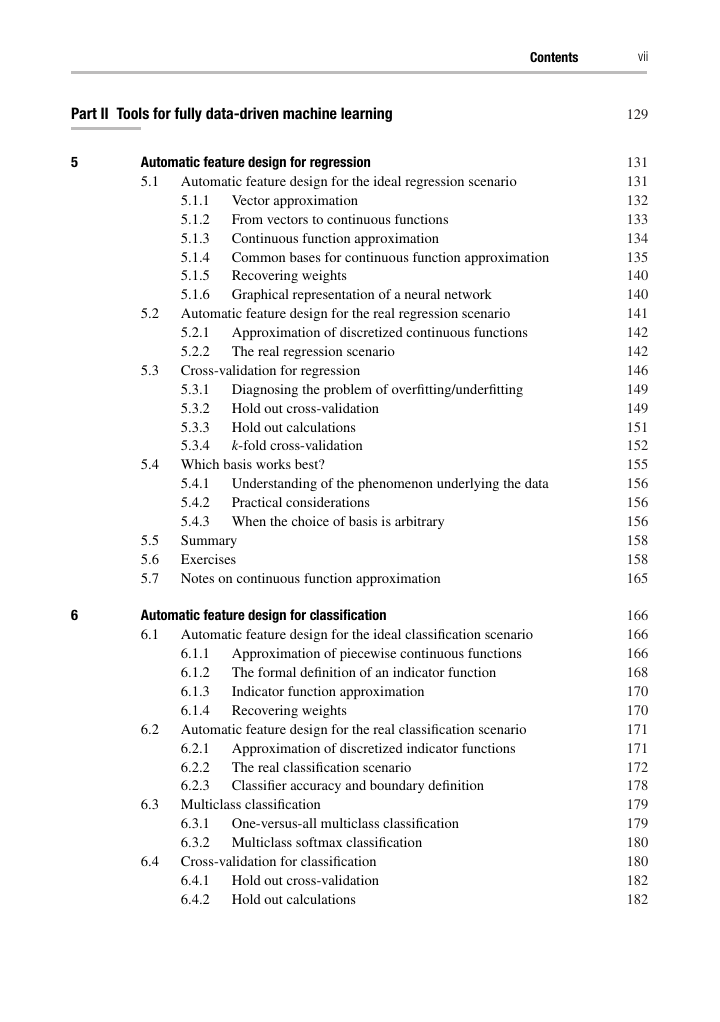

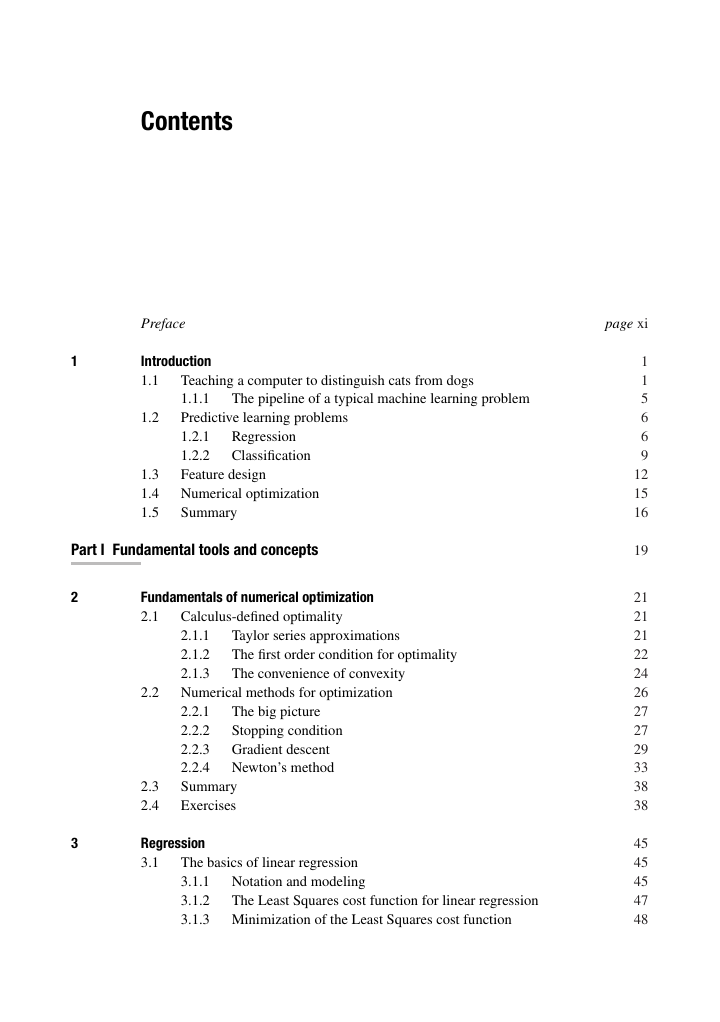

Table of contents

Preface

1 Introduction

1.1 Teaching a computer to distinguish cats from dogs

1.1.1 The pipeline of a typical machine learning problem

1.2 Predictive learning problems

1.2.1 Regression

1.2.2 Classification

1.3 Feature design

1.4 Numerical optimization

1.5 Summary

Part I: Fundamental tools and concepts

Overview of Part I

2 Fundamentals of numerical optimization

2.1 Calculus-defined optimality

2.1.1 Taylor series approximations

2.1.2 The first order condition for optimality

2.1.3 The convenience of convexity

2.2 Numerical methods for optimization

2.2.1 The big picture

2.2.2 Stopping condition

2.2.3 Gradient descent

2.2.4 Newton’s method

2.3 Summary

2.4 Exercises

3 Regression

3.1 The basics of linear regression

3.1.1 Notation and modeling

3.1.2 The Least Squares cost function for linear regression

3.1.3 Minimization of the Least Squares cost function

3.1.4 The efficacy of a learned model

3.1.5 Predicting the value of new input data

3.2 Knowledge-driven feature design for regression

3.2.1 General conclusions

3.3 Nonlinear regression and �l2 regularization

3.3.1 Logistic regression

3.3.2 Non-convex cost functions and �l2 regularization

3.4 Summary

3.5 Exercises

4 Classification

4.1 The perceptron cost functions

4.1.1 The basic perceptron model

4.1.2 The softmax cost function

4.1.3 The margin perceptron

4.1.4 Differentiable approximations to the margin perceptron

4.1.5 The accuracy of a learned classifier

4.1.6 Predicting the value of new input data

4.1.7 Which cost function produces the best results?

4.1.8 The connection between the perceptron and counting costs

4.2 The logistic regression perspective on the softmax cost

4.2.1 Step functions and classification

4.2.2 Convex logistic regression

4.3 The support vector machine perspective on the margin perceptron

4.3.1 A quest for the hyperplane with maximum margin

4.3.2 The hard-margin SVM problem

4.3.3 The soft-margin SVM problem

4.3.4 Support vector machines and logistic regression

4.4 Multiclass classification

4.4.1 One-versus-all multiclass classification

4.4.2 Multiclass softmax classification

4.4.3 The accuracy of a learned multiclass classifier

4.4.4 Which multiclass classification scheme works best?

4.5 Knowledge-driven feature design for classification

4.5.1 General conclusions

4.6 Histogram features for real data types

4.6.1 Histogram features for text data

4.6.2 Histogram features for image data

4.6.3 Histogram features for audio data

4.7 Summary

4.8 Exercises

Part II: Tools for fully data-driven machine learning

Overview of Part II

5 Automatic feature design for regression

5.1 Automatic feature design for the ideal regression scenario

5.1.1 Vector approximation

5.1.2 From vectors to continuous functions

5.1.3 Continuous function approximation

5.1.4 Common bases for continuous function approximation

5.1.5 Recovering weights

5.1.6 Graphical representation of a neural network

5.2 Automatic feature design for the real regression scenario

5.2.1 Approximation of discretized continuous functions

5.2.2 The real regression scenario

5.3 Cross-validation for regression

5.3.1 Diagnosing the problem of overfitting/underfitting

5.3.2 Hold out cross-validation

5.3.3 Hold out calculations

5.3.4 k-fold cross-validation

5.4 Which basis works best?

5.4.1 Understanding of the phenomenon underlying the data

5.4.2 Practical considerations

5.4.3 When the choice of basis is arbitrary

5.5 Summary

5.6 Exercises

6 Automatic feature design for classification

6.1 Automatic feature design for the ideal classification scenario

6.1.1 Approximation of piecewise continuous functions

6.1.2 The formal definition of an indicator function

6.1.3 Indicator function approximation

6.1.4 Recovering weights

6.2 Automatic feature design for the real classification scenario

6.2.1 Approximation of discretized indicator functions

6.2.2 The real classification scenario

6.2.3 Classifier accuracy and boundary definition

6.3 Multiclass classification

6.3.1 One-versus-all multiclass classification

6.3.2 Multiclass softmax classification

6.4 Cross-validation for classification

6.4.1 Hold out cross-validation

6.4.2 Hold out calculations

6.4.3 k-fold cross-validation

6.4.4 k-fold cross-validation for one-versus-all multiclass classification

6.5 Which basis works best?

6.6 Summary

6.7 Exercises

7 Kernels, backpropagation, and regularized cross-validation

7.1 Fixed feature kernels

7.1.1 The fundamental theorem of linear algebra

7.1.2 Kernelizing cost functions

7.1.3 The value of kernelization

7.1.4 Examples of kernels

7.1.5 Kernels as similarity matrices

7.2 The backpropagation algorithm

7.2.1 Computing the gradient of a two layer network cost function

7.2.2 Three layer neural network gradient calculations

7.2.3 Gradient descent with momentum

7.3 Cross-validation via �l2 regularization

7.3.1 �l2 regularization and cross-validation

7.3.2 Regularized k-fold cross-validation for regression

7.3.3 Regularized cross-validation for classification

7.4 Summary

7.5 Further kernel calculations

7.5.1 Kernelizing various cost functions

7.5.2 Fourier kernel calculations – scalar input

7.5.3 Fourier kernel calculations – vector input

Part III: Methods for large scale machine learning

Overview of Part III

8 Advanced gradient schemes

8.1 Fixed step length rules for gradient descent

8.1.1 Gradient descent and simple quadratic surrogates

8.1.2 Functions with bounded curvature and optimally conservative step length rules

8.1.3 How to use the conservative fixed step length rule

8.2 Adaptive step length rules for gradient descent

8.2.1 Adaptive step length rule via backtracking line search

8.2.2 How to use the adaptive step length rule

8.3 Stochastic gradient descent

8.3.1 Decomposing the gradient

8.3.2 The stochastic gradient descent iteration

8.3.3 The value of stochastic gradient descent

8.3.4 Step length rules for stochastic gradient descent

8.3.5 How to use the stochastic gradient method in practice

8.4 Convergence proofs for gradient descent schemes

8.4.1 Convergence of gradient descent with Lipschitz constant fixed step length

8.4.2 Convergence of gradient descent with backtracking line search

8.4.3 Convergence of the stochastic gradient method

8.4.4 Convergence rate of gradient descent for convex functions with fixed step length

8.5 Calculation of computable Lipschitz constants

8.6 Summary

8.7 Exercises

9 Dimension reduction techniques

9.1 Techniques for data dimension reduction

9.1.1 Random subsampling

9.1.2 K-means clustering

9.1.3 Optimization of the K-means problem

9.2 Principal component analysis

9.3 Recommender systems

9.3.1 Matrix completion setup

9.3.2 Optimization of the matrix completion model

9.4 Summary

9.5 Exercises

Part IV: Appendices

A: Basic vector and matrix operations

B: Basics of vector calculus

C: Fundamental matrix factorizations and the pseudo-inverse

D: Convex geometry

References

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc