Apache Hadoop 2.7.3 + Spark 2.0

Cluster Setup Steps

余辉

Version V1

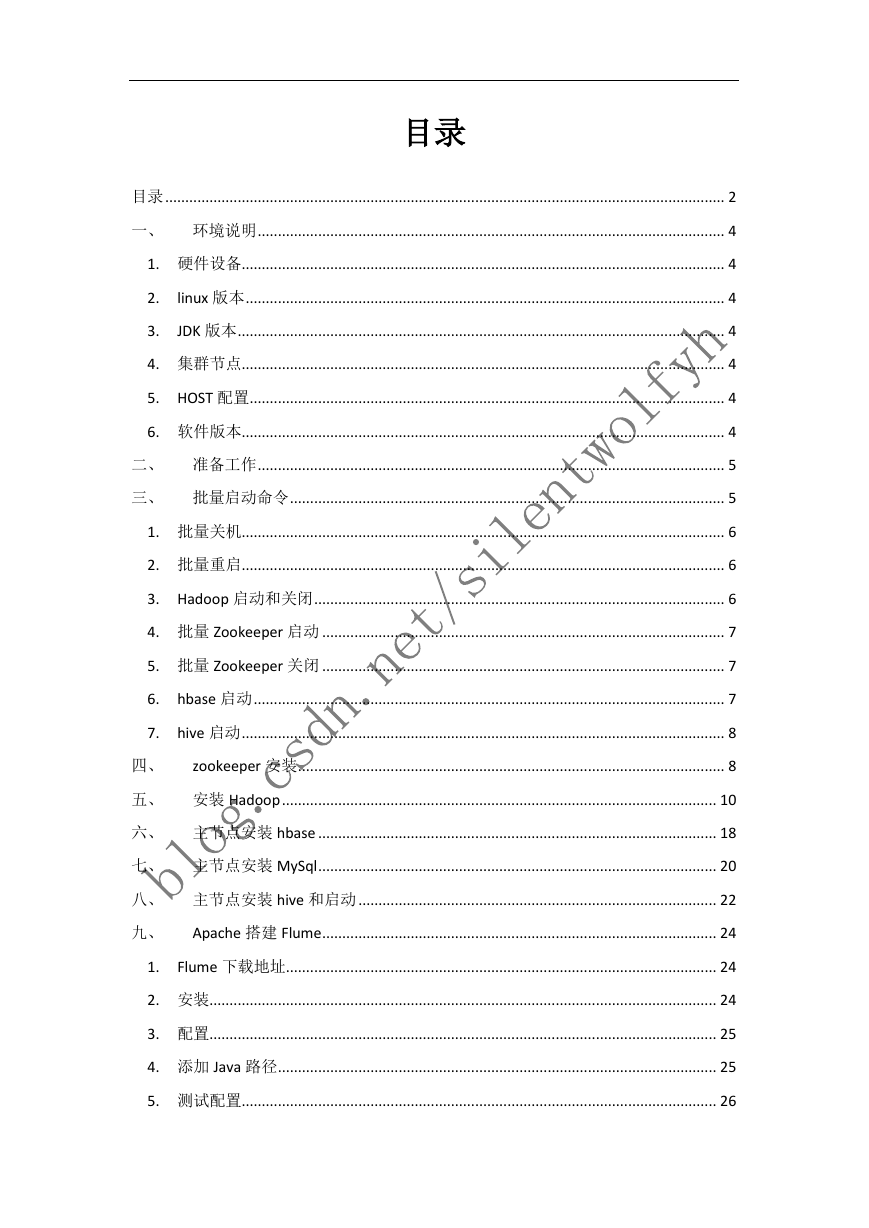

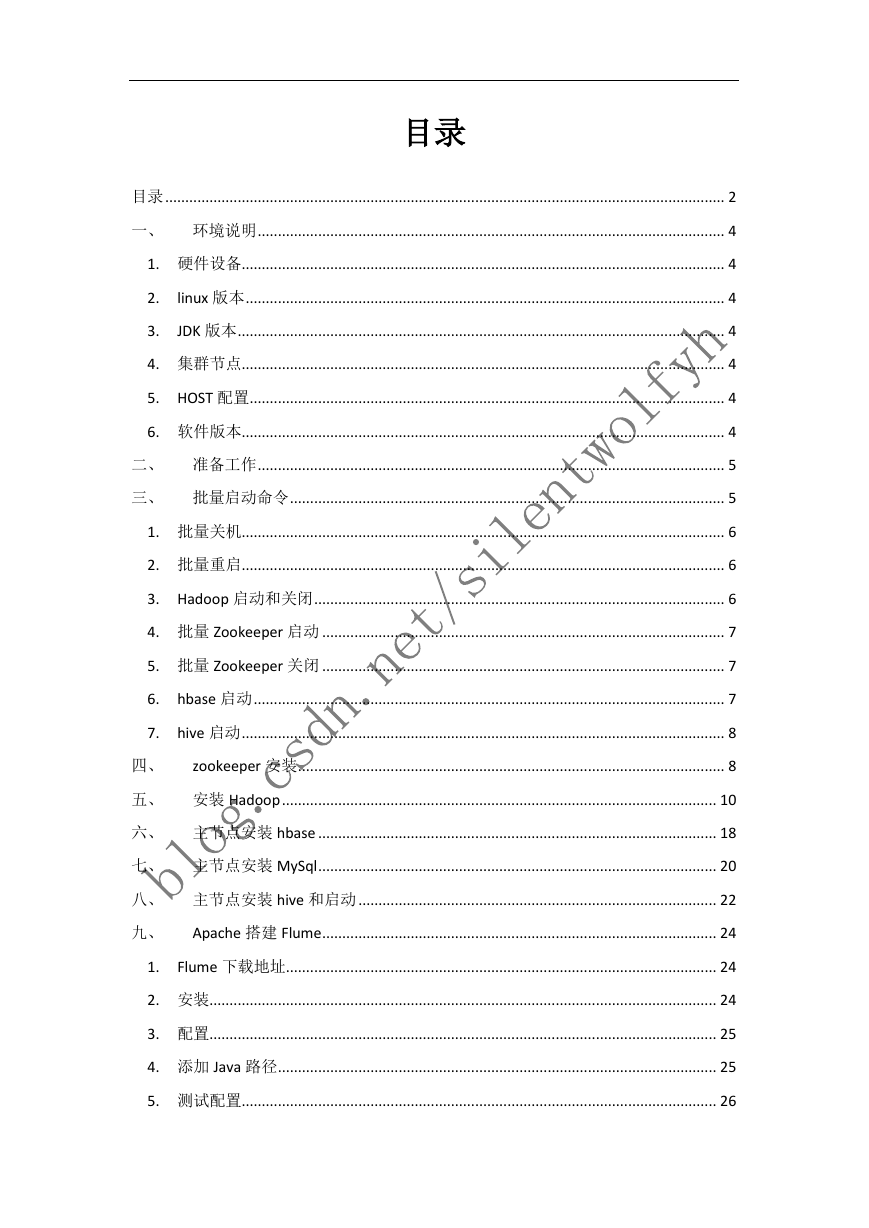

Contents

I. Environment

1. Hardware

2. Linux version

3. JDK version

4. Cluster nodes

5. HOST configuration

6. Software versions

II. Preparation

III. Batch start commands

1. Batch shutdown

2. Batch reboot

3. Starting and stopping Hadoop

4. Batch ZooKeeper start

5. Batch ZooKeeper stop

6. Starting HBase

7. Starting Hive

IV. Installing ZooKeeper

V. Installing Hadoop

VI. Installing HBase on the master node

VII. Installing MySQL on the master node

VIII. Installing and starting Hive on the master node

IX. Setting up Apache Flume

1. Flume download address

2. Installation

3. Configuration

4. Adding the Java path

5. Testing the configuration

X. Installing and using Kafka

1. Download and extract

2. Installation and configuration

3. Startup

4. Testing

XI. Installing Scala

XII. Installing Spark

XIII. Startup order and process overview

1) Process overview

2) Startup order

3) Shutdown order

4) Checking

5) Hadoop startup commands

XIV. Error collection

1. Error: MySQL

2. Error: HBase

3. Error: HBase

4. Error: HBase

5. Error: HBase connecting to the cluster

6. Error: HDFS connecting to the cluster

7. Error: NameNode

8. Error: Hive01

9. Error: Hive02

10. Error: Hive03

11. Error: Hive04

I. Environment

1. Hardware

A single physical machine with 16 GB of RAM is required.

2. Linux version

[root@hadoop11 app]# cat /etc/issue

CentOS release 6.7 (Final)

Kernel \r on an \m

3. JDK version

[root@hadoop11 app]# java -version

java version "1.8.0_77"

Java(TM) SE Runtime Environment (build 1.8.0_77-b03)

Java HotSpot(TM) 64-Bit Server VM (build 25.77-b03, mixed mode)

4. Cluster nodes

Three nodes: hadoop11 (Master), hadoop12 (Slave), hadoop13 (Slave)

5. HOST configuration (/etc/hosts)

192.168.200.11 hadoop11

192.168.200.12 hadoop12

192.168.200.13 hadoop13
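The mapping above has to be present on all three nodes. A minimal sketch that adds it, assuming the target is /etc/hosts and the script is run as root; the function name `add_cluster_hosts` and its optional file argument are hypothetical. It appends only the lines that are missing, so running it twice changes nothing.

```shell
#!/bin/bash
# Hypothetical helper: append the cluster's IP-to-hostname mappings to a hosts
# file (default /etc/hosts), skipping any line that is already present verbatim.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in \
    "192.168.200.11 hadoop11" \
    "192.168.200.12 hadoop12" \
    "192.168.200.13 hadoop13"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Run it as root on each node with `add_cluster_hosts`; passing a scratch file instead, for example `add_cluster_hosts /tmp/hosts.test`, shows what would be written without touching /etc/hosts.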

6. Software versions

jdk-8u77-linux-x64.tar.gz

zookeeper-3.4.8.tar.gz

hadoop-2.7.3.tar.gz

hbase-1.2.6-bin.tar

hive-0.12.0-bin.tar

apache-flume-1.6.0-bin.tar

kafka_2.10-0.8.1.1.tar.gz

II. Preparation

1. Install the Java JDK

[root@hadoop11 app]# java -version

java version "1.8.0_77"

Java(TM) SE Runtime Environment (build 1.8.0_77-b03)

Java HotSpot(TM) 64-Bit Server VM (build 25.77-b03, mixed mode)

2. Set up passwordless SSH

3. Download the Hadoop release

4. Put all software under the /app directory

[root@hadoop11 app]# ls

flume1.6

hadoop-2.7.3

hbase-1.2.6

hive-0.12.0

jdk1.8.0_77

kafka2.10

zookeeper-3.4.8
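For step 2 (passwordless SSH) above, a minimal sketch of the usual approach with the standard OpenSSH tools `ssh-keygen` and `ssh-copy-id`. It assumes root on hadoop11 is the account that drives the cluster, as in the batch scripts that follow; the function name `setup_passwordless_ssh` is hypothetical.

```shell
#!/bin/bash
# Sketch: create an RSA key pair for the current user once (empty passphrase),
# then install the public key on every node so ssh no longer prompts.
setup_passwordless_ssh() {
  local key="${HOME}/.ssh/id_rsa"
  if [ ! -f "$key" ]; then
    mkdir -p "${HOME}/.ssh"
    chmod 700 "${HOME}/.ssh"
    ssh-keygen -t rsa -N "" -f "$key"
  fi
  local host
  for host in "$@"; do
    # Asks for root's password once per host, then appends the public key
    # to that host's ~/.ssh/authorized_keys.
    ssh-copy-id -i "${key}.pub" "root@${host}"
  done
}
```

Run it on hadoop11 as `setup_passwordless_ssh hadoop11 hadoop12 hadoop13`, then check that `ssh root@hadoop12 hostname` returns without a password prompt. hadoop11 is included on purpose, because the batch scripts below also ssh into the master itself.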

III. Batch start commands

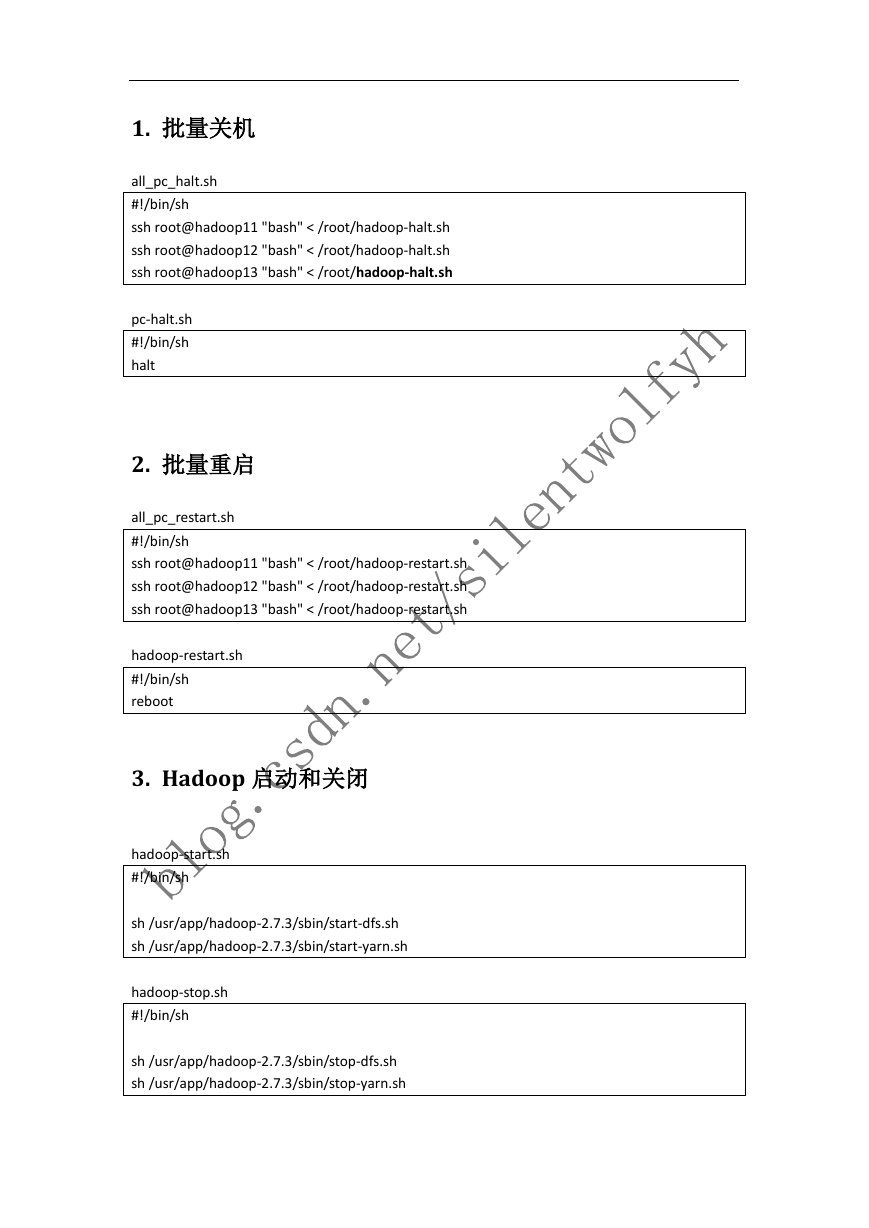

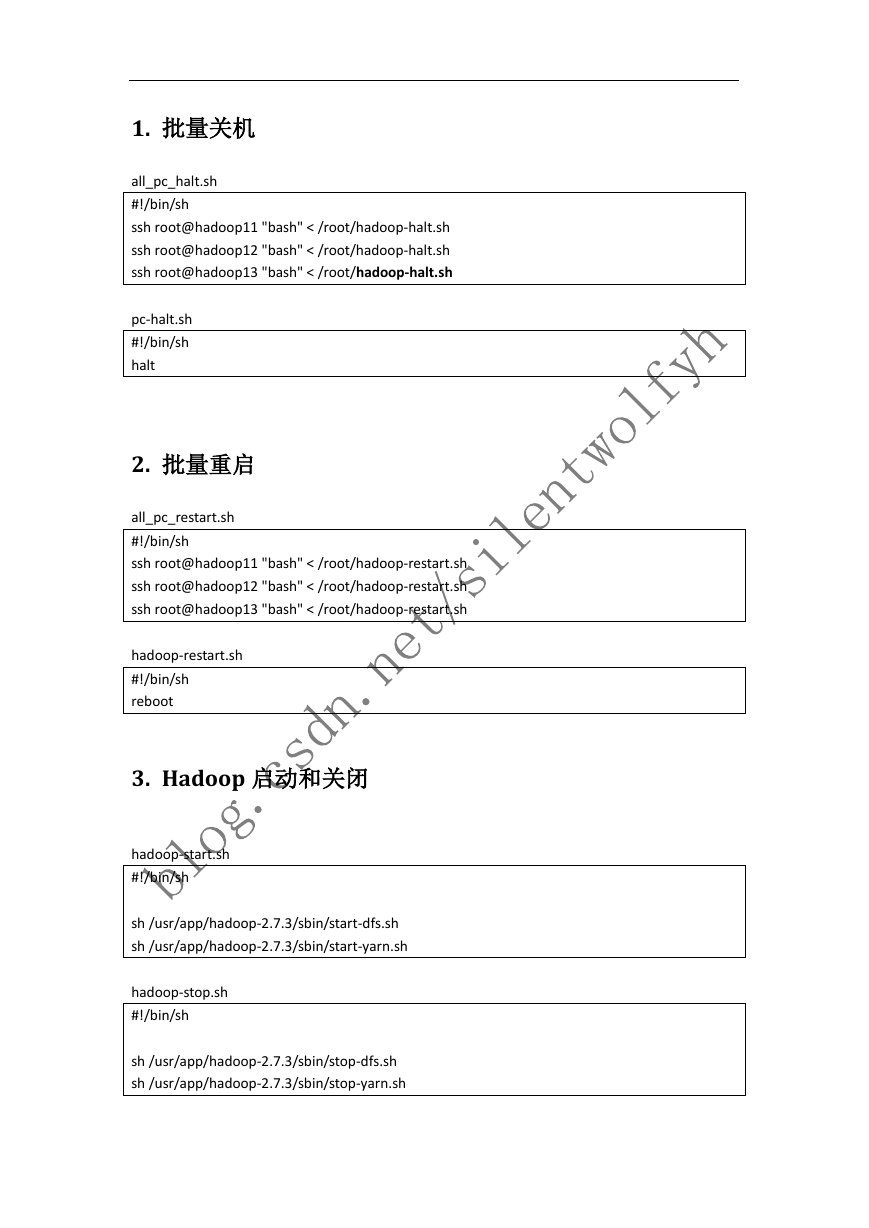

1. Batch shutdown

all_pc_halt.sh

#!/bin/sh

ssh root@hadoop11 "bash" < /root/hadoop-halt.sh

ssh root@hadoop12 "bash" < /root/hadoop-halt.sh

ssh root@hadoop13 "bash" < /root/hadoop-halt.sh

hadoop-halt.sh

#!/bin/sh

halt
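The three ssh lines in all_pc_halt.sh differ only in the host name, and the same pattern recurs in the reboot and ZooKeeper wrappers of this section, so they can be collapsed into a loop. This is a sketch; the function name `run_on_all` and the `CLUSTER_HOSTS` variable are hypothetical. Like the original, it pipes a local script into `bash` on each remote host over ssh.

```shell
#!/bin/bash
# Hypothetical list of cluster nodes; override by setting CLUSTER_HOSTS.
CLUSTER_HOSTS="${CLUSTER_HOSTS:-hadoop11 hadoop12 hadoop13}"

# run_on_all SCRIPT: run a local script as root on every node, one at a time.
run_on_all() {
  local script="$1" host
  if [ ! -r "$script" ]; then
    echo "run_on_all: cannot read $script" >&2
    return 1
  fi
  for host in $CLUSTER_HOSTS; do   # unquoted on purpose: split on spaces
    echo "== $host"
    ssh "root@${host}" "bash" < "$script"
  done
}
```

Usage: `run_on_all /root/zookeeper-start.sh`. For halt and reboot it is safer to list the machine running the loop last (for example `CLUSTER_HOSTS="hadoop12 hadoop13 hadoop11" run_on_all /root/hadoop-restart.sh`). Once that machine starts going down, the loop may never reach the remaining hosts.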

2. Batch reboot

all_pc_restart.sh

#!/bin/sh

ssh root@hadoop11 "bash" < /root/hadoop-restart.sh

ssh root@hadoop12 "bash" < /root/hadoop-restart.sh

ssh root@hadoop13 "bash" < /root/hadoop-restart.sh

hadoop-restart.sh

#!/bin/sh

reboot

3. Starting and stopping Hadoop

hadoop-start.sh

#!/bin/sh

sh /usr/app/hadoop-2.7.3/sbin/start-dfs.sh

sh /usr/app/hadoop-2.7.3/sbin/start-yarn.sh

hadoop-stop.sh

#!/bin/sh

sh /usr/app/hadoop-2.7.3/sbin/stop-dfs.sh

sh /usr/app/hadoop-2.7.3/sbin/stop-yarn.sh
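After hadoop-start.sh, a quick way to confirm that the daemons came up is to run `jps` on each node, which prints one `<pid> <MainClassName>` line per Java process. The sketch below uses a hypothetical function, `check_daemons`, that reads that output on stdin and reports any expected name that is missing. The exact set of names depends on the slaves and secondary NameNode configuration, which is not shown here. With HDFS and YARN started from hadoop11, one would typically expect NameNode, SecondaryNameNode and ResourceManager on hadoop11, and DataNode and NodeManager on the slaves.

```shell
#!/bin/bash
# check_daemons NAME...: read `jps` output on stdin, print each expected
# process name that is absent, and return non-zero if any are missing.
check_daemons() {
  local running missing=0 name
  # jps prints "<pid> <MainClassName>"; keep only the class names.
  running=$(awk '{print $2}')
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on hadoop11: `jps | check_daemons NameNode SecondaryNameNode ResourceManager`; on hadoop12 and hadoop13: `jps | check_daemons DataNode NodeManager`.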


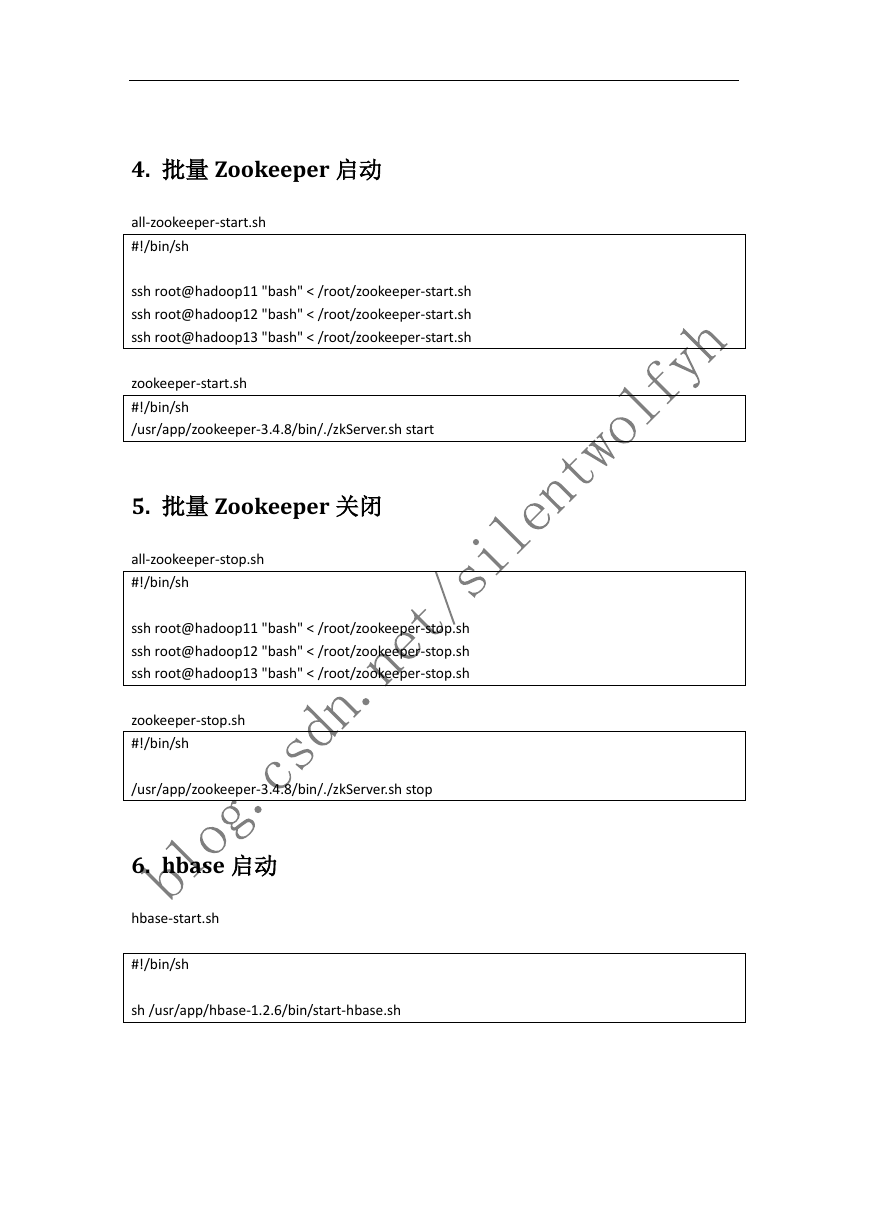

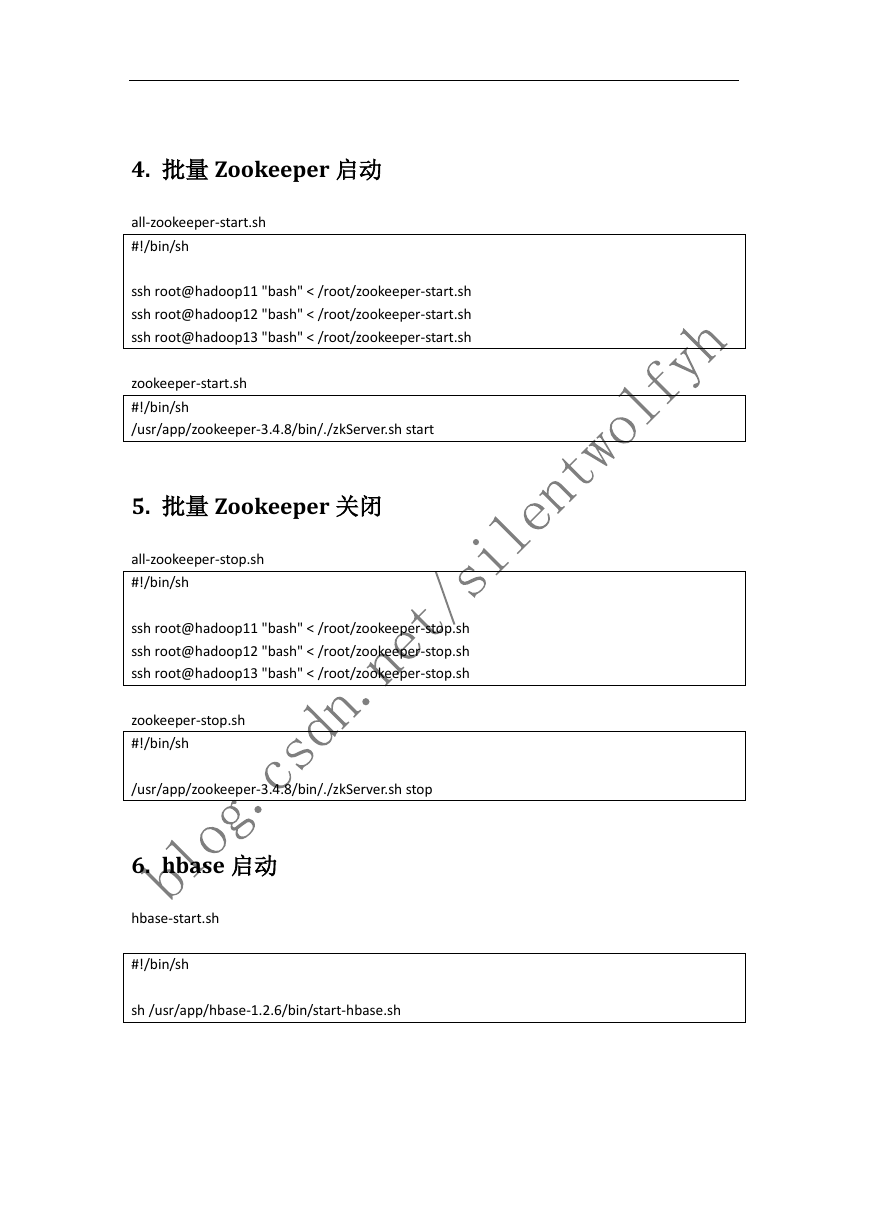

4. Batch ZooKeeper start

all-zookeeper-start.sh

#!/bin/sh

ssh root@hadoop11 "bash" < /root/zookeeper-start.sh

ssh root@hadoop12 "bash" < /root/zookeeper-start.sh

ssh root@hadoop13 "bash" < /root/zookeeper-start.sh

zookeeper-start.sh

#!/bin/sh

/usr/app/zookeeper-3.4.8/bin/zkServer.sh start

5. Batch ZooKeeper stop

all-zookeeper-stop.sh

#!/bin/sh

ssh root@hadoop11 "bash" < /root/zookeeper-stop.sh

ssh root@hadoop12 "bash" < /root/zookeeper-stop.sh

ssh root@hadoop13 "bash" < /root/zookeeper-stop.sh

zookeeper-stop.sh

#!/bin/sh

/usr/app/zookeeper-3.4.8/bin/zkServer.sh stop
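Once all three servers have been started, `zkServer.sh status` on each node should report one leader and two followers in its `Mode:` line. The following is a sketch that collects that line from every node over ssh, assuming the same install path on all nodes. The function name `zk_status_all` and the `ZK_HOME` variable are hypothetical.

```shell
#!/bin/bash
ZK_HOME="${ZK_HOME:-/usr/app/zookeeper-3.4.8}"

# zk_status_all HOST...: print "<host>: <mode>" for each ZooKeeper node.
zk_status_all() {
  local host mode
  for host in "$@"; do
    # zkServer.sh status prints e.g. "Mode: follower"; keep only the mode.
    mode=$(ssh "root@${host}" "${ZK_HOME}/bin/zkServer.sh status" 2>&1 \
             | awk -F': ' '/^Mode:/ {print $2}')
    echo "${host}: ${mode:-not running or unreachable}"
  done
}
```

Usage: `zk_status_all hadoop11 hadoop12 hadoop13`. Exactly one node should show `leader`.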

6. Starting HBase

hbase-start.sh

#!/bin/sh

sh /usr/app/hbase-1.2.6/bin/start-hbase.sh


7. Starting Hive

hive-start.sh

#!/bin/sh

sh /usr/app/hive-0.12.0/bin/hive

IV. Installing ZooKeeper

1. Upload the ZooKeeper (zk) package

[root@hadoop11 app]# ls

hadoop-2.7.3.tar.gz jdk1.8.0_77 zookeeper-3.4.8.tar.gz

2. Extract

tar -zxvf zookeeper-3.4.8.tar.gz -C /usr/app/

3. Configure (on one node first)

3.1 Create a zoo.cfg configuration file

cd zookeeper-3.4.8/conf/

cp -r zoo_sample.cfg zoo.cfg

3.2 Edit the configuration file (zoo.cfg)

Create the /usr/app/zookeeper-3.4.8/data directory:

mkdir /usr/app/zookeeper-3.4.8/data

In zoo.cfg, set dataDir (the directory where the snapshot is stored):

dataDir=/usr/app/zookeeper-3.4.8/data

Append the following lines at the end of the file:

server.1=hadoop11:2888:3888

server.2=hadoop12:2888:3888

server.3=hadoop13:2888:3888
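Steps 3.1 and 3.2 can also be applied non-interactively. The sketch below assumes GNU `sed` (as on CentOS) and that zoo_sample.cfg contains a `dataDir=` line, which the 3.4.x sample does (`dataDir=/tmp/zookeeper`). The function name `configure_zoo_cfg` is hypothetical. In each `server.N=host:2888:3888` entry, 2888 is the port followers use to connect to the leader and 3888 is used for leader election.

```shell
#!/bin/bash
# configure_zoo_cfg ZK_HOME: create conf/zoo.cfg from zoo_sample.cfg, point
# dataDir at $ZK_HOME/data and append the three server.N entries only once.
configure_zoo_cfg() {
  local zk_home="$1"
  local cfg="${zk_home}/conf/zoo.cfg"
  mkdir -p "${zk_home}/data"
  [ -f "$cfg" ] || cp "${zk_home}/conf/zoo_sample.cfg" "$cfg"
  # Replace the sample's dataDir line in place (GNU sed syntax).
  sed -i "s|^dataDir=.*|dataDir=${zk_home}/data|" "$cfg"
  # server.N=host:2888:3888 -> 2888: follower-to-leader, 3888: leader election
  local n=1 host
  for host in hadoop11 hadoop12 hadoop13; do
    grep -q "^server\.${n}=" "$cfg" || echo "server.${n}=${host}:2888:3888" >> "$cfg"
    n=$((n + 1))
  done
}
```

Usage: `configure_zoo_cfg /usr/app/zookeeper-3.4.8`. Re-running it leaves the existing server entries untouched.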

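3.3 In the dataDir (/usr/app/zookeeper-3.4.8/data), create a file named myid containing the N from that node's server.N entry (for example, on server.2, i.e. hadoop12, the file contains 2). On hadoop11 (server.1):

echo "1" > /usr/app/zookeeper-3.4.8/data/myid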
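Because the myid value differs per node, it is easy to leave the wrong value in place when the configured directory is copied to hadoop12 and hadoop13. The sketch below derives the value from zoo.cfg instead. It assumes each node's short `hostname` matches the name used in its server.N line. `write_myid` is a hypothetical helper.

```shell
#!/bin/bash
# write_myid ZOO_CFG DATA_DIR [HOST]: find the server.N line whose host part is
# HOST (default: this machine's hostname) and write N to DATA_DIR/myid.
write_myid() {
  local cfg="$1" data_dir="$2" host="${3:-$(hostname)}" id
  # server.N=host:2888:3888 -> split on "." "=" ":" so $2 is N and $3 is host
  id=$(awk -F'[.=:]' -v h="$host" '/^server\./ && $3 == h {print $2}' "$cfg")
  if [ -z "$id" ]; then
    echo "write_myid: no server.N entry for $host in $cfg" >&2
    return 1
  fi
  echo "$id" > "${data_dir}/myid"
}
```

Usage on each node: `write_myid /usr/app/zookeeper-3.4.8/conf/zoo.cfg /usr/app/zookeeper-3.4.8/data`. The host is matched as a plain field, so a fully qualified hostname would not match.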
