Ten Key Ideas for

Reinforcement Learning and Optimal Control

Dimitri P. Bertsekas

Department of Electrical Engineering and Computer Science

Massachusetts Institute of Technology

and

School of Computing, Informatics, and Decision Systems Engineering

Arizona State University

August 2019

Bertsekas (M.I.T.)

Reinforcement Learning

1 / 82

�

Reinforcement Learning (RL): A Happy Union of AI and

Decision/Control/Dynamic Programming (DP) Ideas

Historical highlights

Exact DP, optimal control (Bellman, Shannon, 1950s ...)

First impressive successes: Backgammon programs (Tesauro, 1992, 1996)

Algorithmic progress, analysis, applications, first books (mid 90s ...)

Machine Learning, BIG Data, Robotics, Deep Neural Networks (mid 2000s ...)

Bertsekas (M.I.T.)

Reinforcement Learning

2 / 82

Decision/Control/DPPrinciple of OptimalityMarkov Decision ProblemsPOMDP Policy IterationValue IterationAI/RLLearning through ExperienceSimulation,Model-Free Methods Feature-Based RepresentationsA*/Games/HeuristicsComplementary IdeasLate 80s-Early 90s�

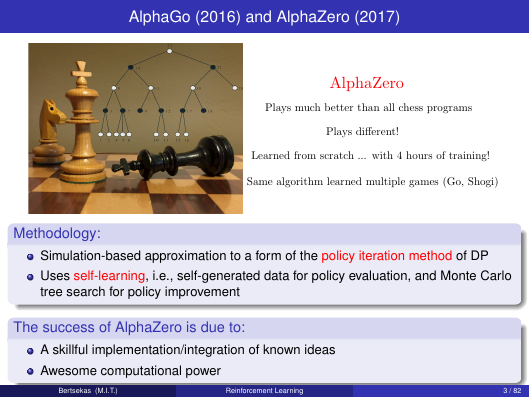

AlphaGo (2016) and AlphaZero (2017)

Methodology:

Simulation-based approximation to a form of the policy iteration method of DP

Uses self-learning, i.e., self-generated data for policy evaluation, and Monte Carlo

tree search for policy improvement

The success of AlphaZero is due to:

A skillful implementation/integration of known ideas

Awesome computational power

Bertsekas (M.I.T.)

Reinforcement Learning

3 / 82

AlphaZero(Google-DeepMind)Playsdifferent!Learnedfromscratch...with4hoursoftraining!PlaysmuchbetterthanallchessprogramsSamealgorithmlearnedmultiplegames(Go,Shogi)�

Approximate DP/RL Methodology is now Ambitious and Universal

Exact DP applies (in principle) to a very broad range of optimization problems

Deterministic <—-> Stochastic

Combinatorial optimization <—-> Optimal control w/ infinite state/control spaces

One decision maker <—-> Two player games

... BUT is plagued by the curse of dimensionality and need for a math model

Approximate DP/RL overcomes the difficulties of exact DP by:

Approximation (use neural nets and other architectures to reduce dimension)

Simulation (use a computer model in place of a math model)

State of the art:

Broadly applicable methodology: Can address broad range of challenging

problems. Deterministic-stochastic-dynamic, discrete-continuous, games, etc

There are no methods that are guaranteed to work for all or even most problems

There are enough methods to try with a reasonable chance of success for most

types of optimization problems

Role of the theory: Guide the art, delineate the sound ideas

Bertsekas (M.I.T.)

Reinforcement Learning

4 / 82

�

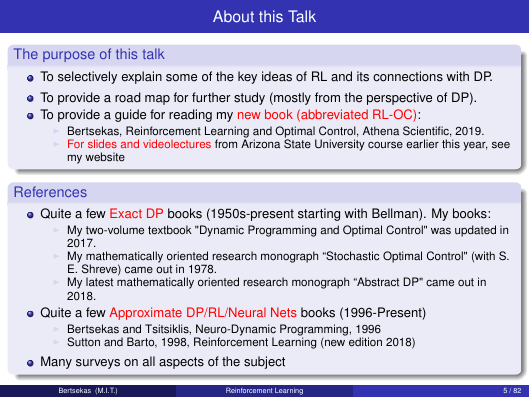

About this Talk

The purpose of this talk

To selectively explain some of the key ideas of RL and its connections with DP.

To provide a road map for further study (mostly from the perspective of DP).

To provide a guide for reading my new book (abbreviated RL-OC):

Bertsekas, Reinforcement Learning and Optimal Control, Athena Scientific, 2019.

For slides and videolectures from Arizona State University course earlier this year, see

my website

References

Quite a few Exact DP books (1950s-present starting with Bellman). My books:

2017.

My two-volume textbook "Dynamic Programming and Optimal Control" was updated in

My mathematically oriented research monograph “Stochastic Optimal Control" (with S.

My latest mathematically oriented research monograph “Abstract DP" came out in

E. Shreve) came out in 1978.

2018.

Quite a few Approximate DP/RL/Neural Nets books (1996-Present)

Bertsekas and Tsitsiklis, Neuro-Dynamic Programming, 1996

Sutton and Barto, 1998, Reinforcement Learning (new edition 2018)

Many surveys on all aspects of the subject

Bertsekas (M.I.T.)

Reinforcement Learning

5 / 82

�

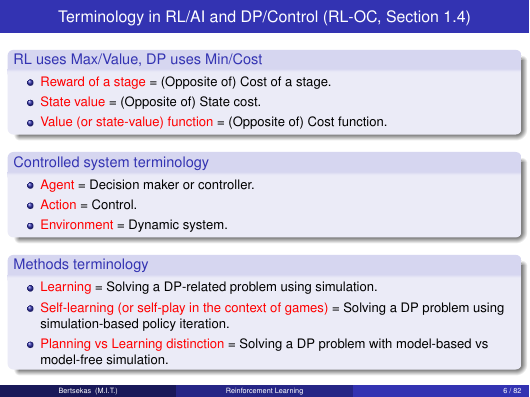

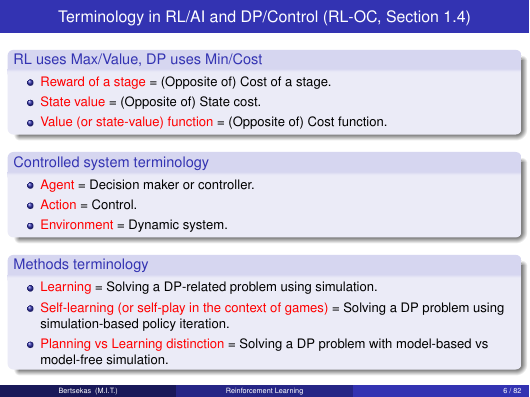

Terminology in RL/AI and DP/Control (RL-OC, Section 1.4)

RL uses Max/Value, DP uses Min/Cost

Reward of a stage = (Opposite of) Cost of a stage.

State value = (Opposite of) State cost.

Value (or state-value) function = (Opposite of) Cost function.

Controlled system terminology

Agent = Decision maker or controller.

Action = Control.

Environment = Dynamic system.

Methods terminology

Learning = Solving a DP-related problem using simulation.

Self-learning (or self-play in the context of games) = Solving a DP problem using

simulation-based policy iteration.

Planning vs Learning distinction = Solving a DP problem with model-based vs

model-free simulation.

Bertsekas (M.I.T.)

Reinforcement Learning

6 / 82

�

AN OUTLINE OF THE SUBJECT - TEN KEY IDEAS

1 Principle of Optimality

2 Approximation in Value Space

3 Approximation in Policy Space

4 Model-Free Methods and Simulation

5 Policy Improvement, Rollout, and Self-Learning

6 Approximate Policy Improvement, Adaptive Simulation, and Q-Learning

7 Features, Approximation Architectures, and Deep Neural Nets

8

Incremental and Stochastic Gradient Optimization

9 Direct Policy Optimization: A More General Approach

10 Gradient and Random Search Methods for Direct Policy Optimization

Bertsekas (M.I.T.)

Reinforcement Learning

7 / 82

�

1. PRINCIPLE OF OPTIMALITY

(RL-OC, Chapter 1)

Bertsekas (M.I.T.)

Reinforcement Learning

9 / 82

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc