Self-Supervised Learning

Andrew Zisserman

July 2019

Slides from: Carl Doersch, Ishan Misra, Andrew Owens, AJ Piergiovanni, Carl Vondrick, Richard Zhang


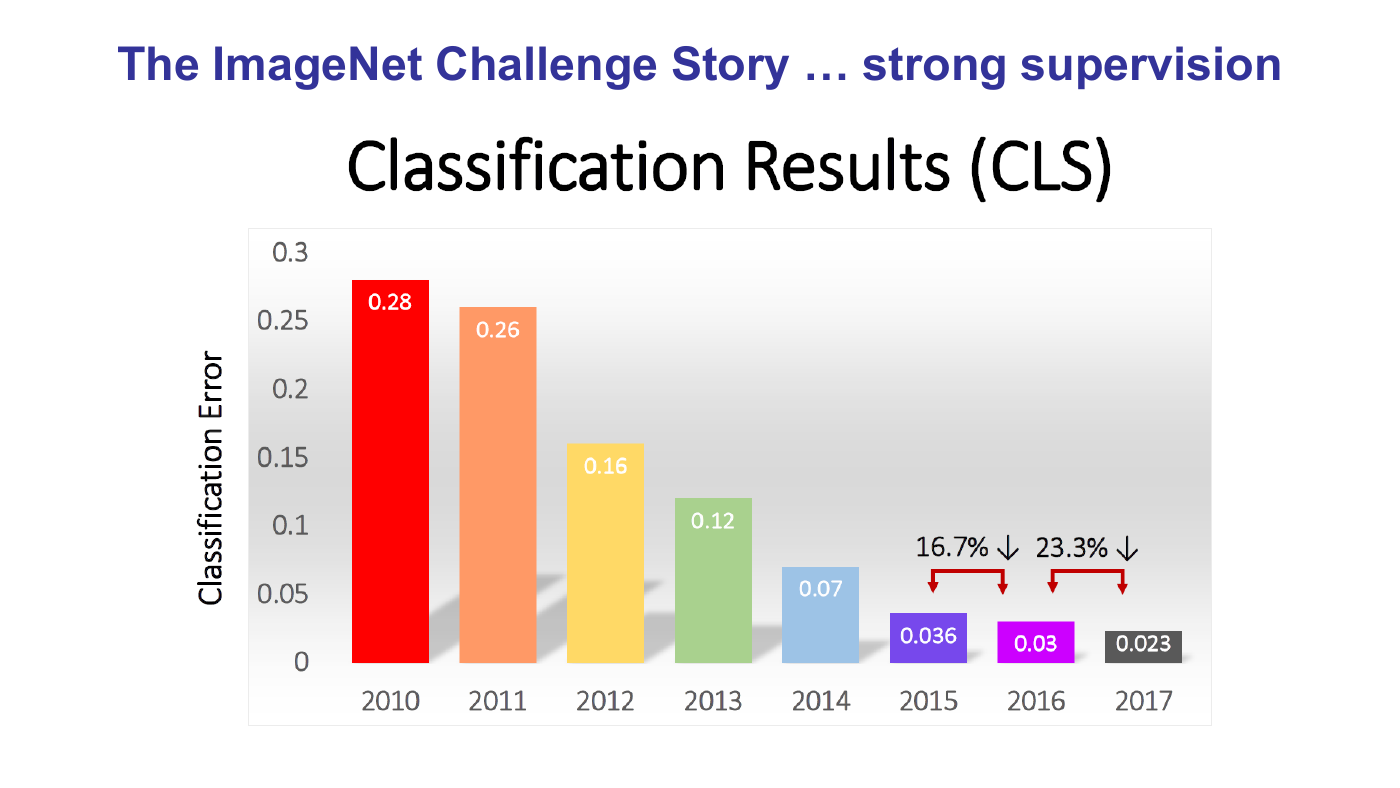

The ImageNet Challenge Story …

1000 categories

• Training: 1000 images for each category

• Testing: 100k images


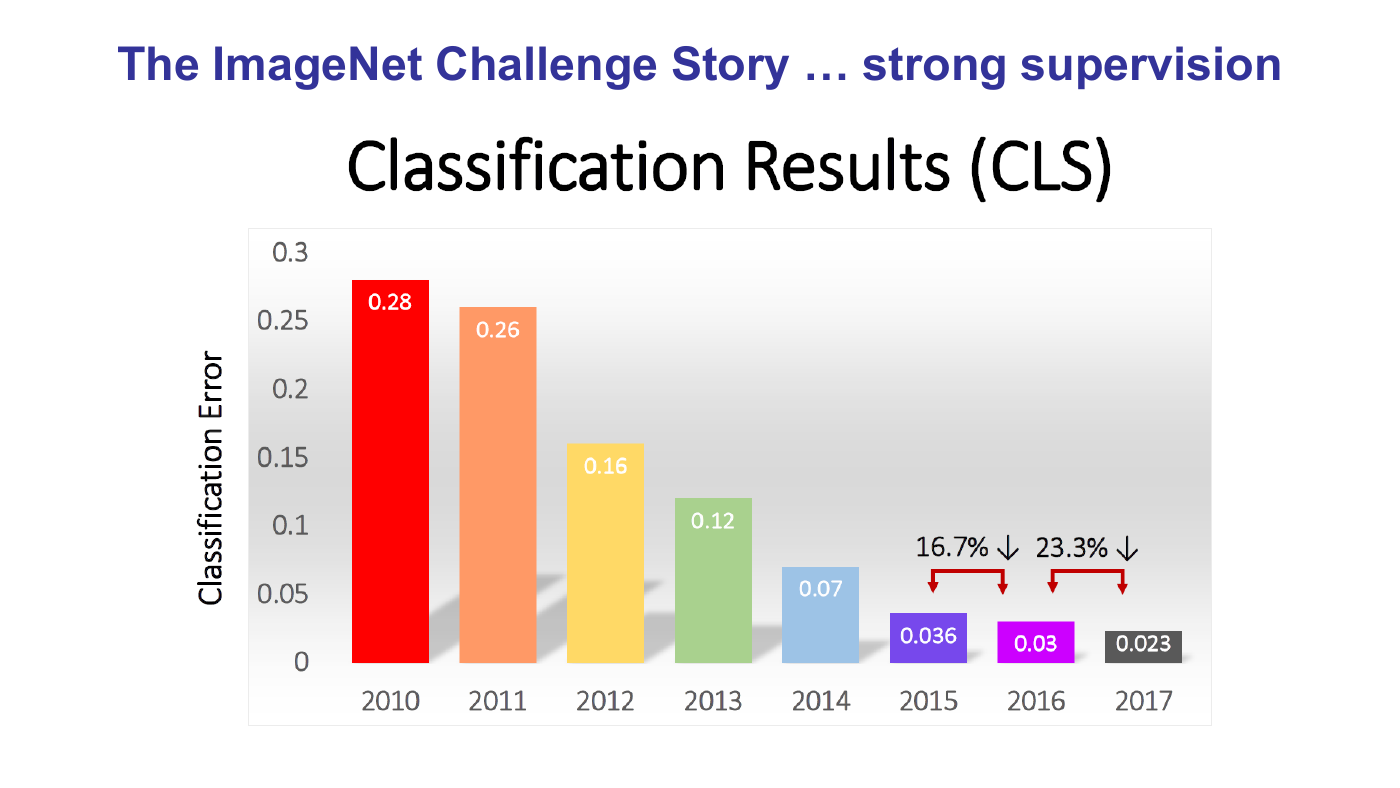

The ImageNet Challenge Story … strong supervision


The ImageNet Challenge Story … outcomes

Strong supervision:

• Features from networks trained on ImageNet can be used for other visual tasks, e.g. detection, segmentation, action recognition, fine-grained visual classification

• To some extent, any visual task can be solved now by:

1. Construct a large-scale dataset labelled for that task

2. Specify a training loss and neural network architecture

3. Train the network and deploy

• Are there alternatives to strong supervision for training? Self-Supervised learning ….


Why Self-Supervision?

1. Expense of producing a new dataset for each new task

2. Some areas are supervision-starved, e.g. medical data, where it is hard to obtain annotation

3. Untapped availability of vast numbers of unlabelled images/videos

– Facebook: one billion images uploaded per day

– 300 hours of video are uploaded to YouTube every minute

4. How infants may learn …


Self-Supervised Learning

The Scientist in the Crib: What Early Learning Tells Us About the Mind

by Alison Gopnik, Andrew N. Meltzoff and Patricia K. Kuhl

The Development of Embodied Cognition: Six Lessons from Babies

by Linda Smith and Michael Gasser


What is Self-Supervision?

• A form of unsupervised learning where the data provides the supervision

• In general, withhold some part of the data, and task the network with predicting it

• The task defines a proxy loss, and the network is forced to learn what we really care about, e.g. a semantic representation, in order to solve it

• In recent work we might also choose tasks that we care about ….
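The withhold-and-predict idea above can be sketched in a few lines. This is only an illustration, not a method from the slides: the function name `make_pretext_example`, the `hidden_fraction` parameter, and the choice to withhold one contiguous chunk are all assumptions made for the example.

```python
import random


def make_pretext_example(sample, hidden_fraction=0.25, rng=random):
    """Split one unlabelled sample into (visible_input, target, start).

    `sample` is any sequence of values (pixels, audio frames, tokens).
    A contiguous chunk is withheld and replaced by None placeholders;
    a network would be trained to predict the withheld chunk from the
    rest. No human annotation is involved. (Hypothetical helper.)
    """
    n_hidden = max(1, int(len(sample) * hidden_fraction))
    start = rng.randrange(len(sample) - n_hidden + 1)
    target = list(sample[start:start + n_hidden])
    visible = (list(sample[:start])
               + [None] * n_hidden
               + list(sample[start + n_hidden:]))
    return visible, target, start


if __name__ == "__main__":
    visible, target, start = make_pretext_example(list(range(20)))
    print("input :", visible)
    print("target:", target)
```

The supervision signal (`target`) comes entirely from the data itself, which is the defining property of the approach.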


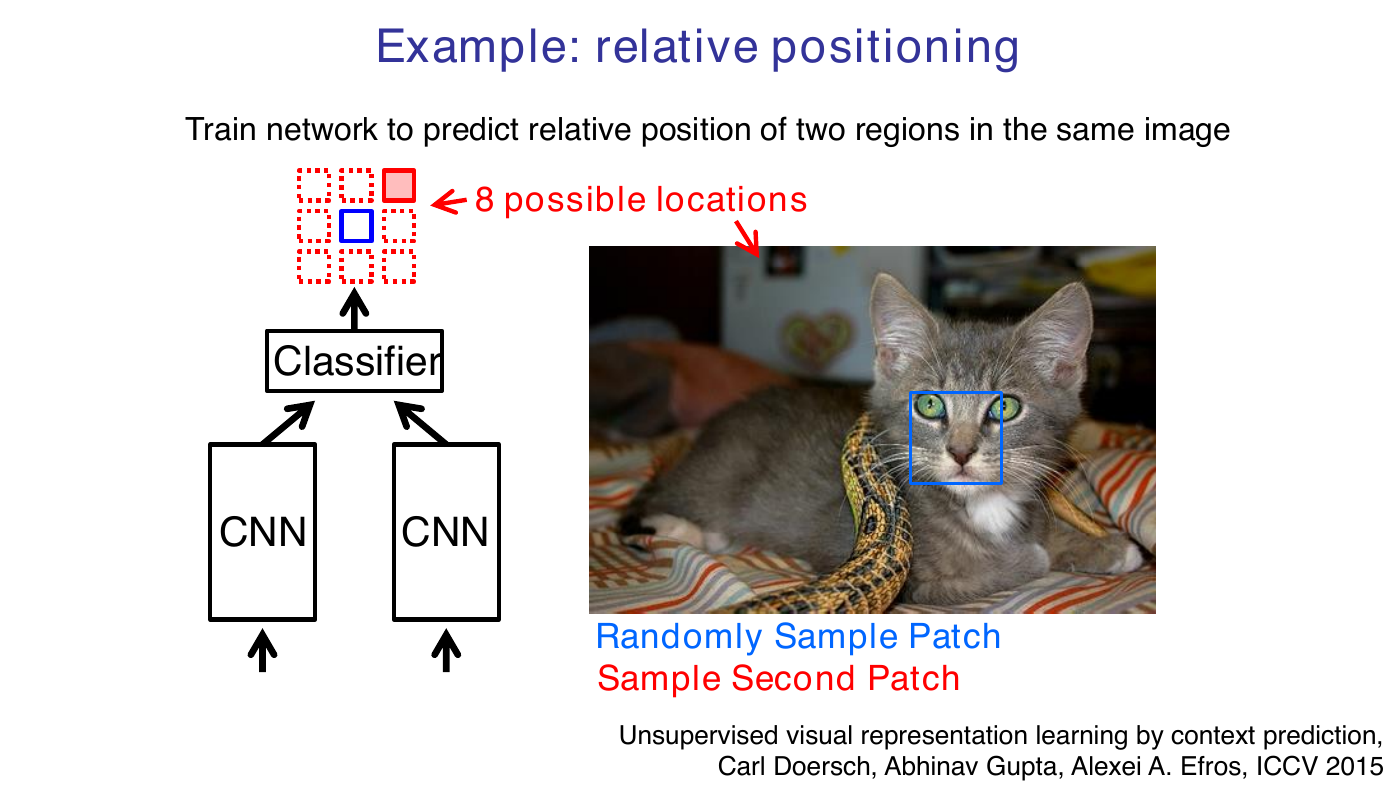

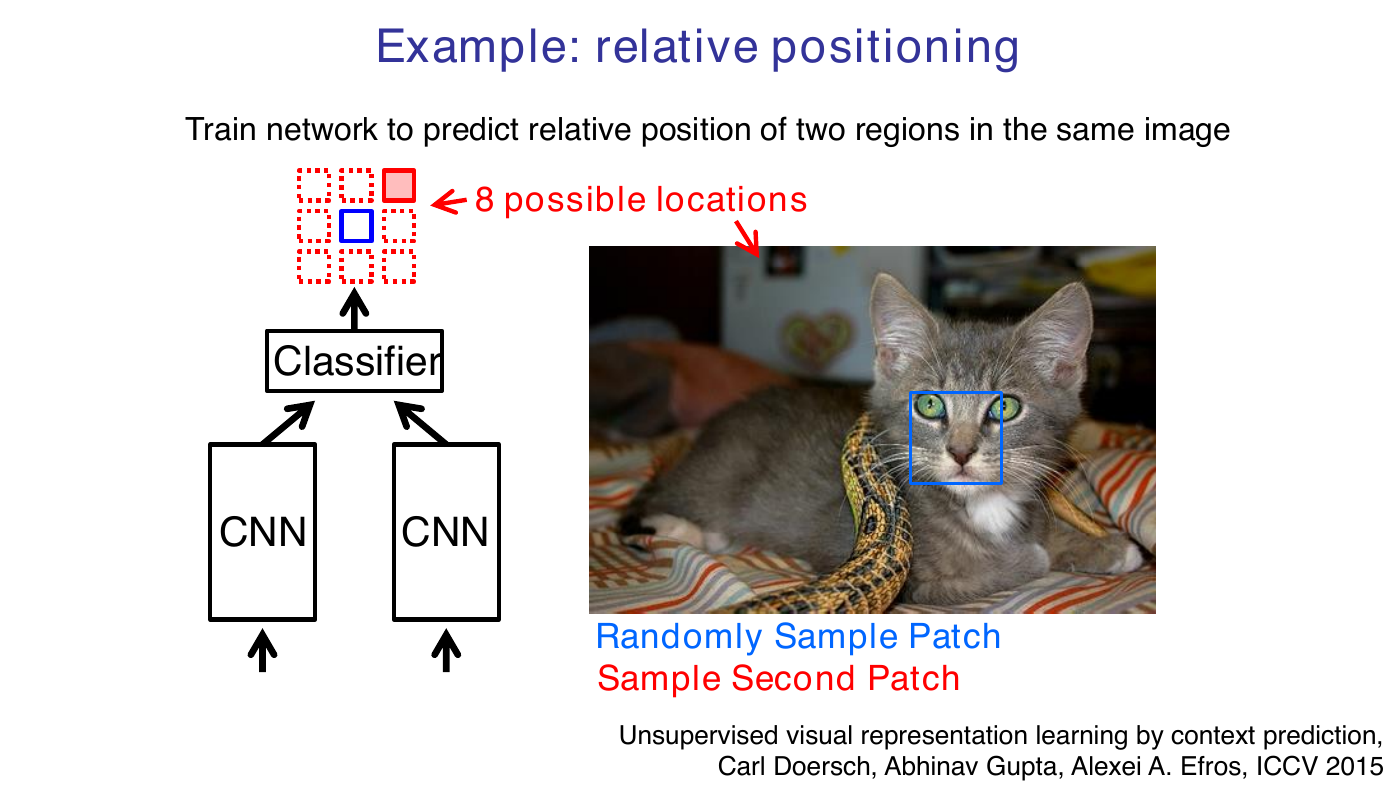

Example: relative positioning

Train network to predict relative position of two regions in the same image

[Figure: randomly sample a patch, then sample a second patch from one of 8 possible locations; each patch passes through a CNN, and a classifier predicts the location.]

Unsupervised visual representation learning by context prediction, Carl Doersch, Abhinav Gupta, Alexei A. Efros, ICCV 2015
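A minimal sketch of how training pairs for the relative-position task might be generated. It covers only the data sampling, not the CNN or classifier. The names `sample_patch_pair`, `crop`, and `NEIGHBOUR_OFFSETS`, the patch size, and the `gap` between patches are assumptions made for this illustration; the slide itself only specifies two patches from the same image and 8 possible locations.

```python
import random

# (row, col) offsets of the 8 cells around the centre of a 3x3 grid.
# The index into this list is the classification label (0..7).
NEIGHBOUR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
                     (0, -1),           (0, 1),
                     (1, -1),  (1, 0),  (1, 1)]


def sample_patch_pair(height, width, patch=8, gap=2, rng=random):
    """Return (first_box, second_box, label) for one training example.

    Each box is (top, left, size). The label says which of the 8
    positions the second patch occupies relative to the first.
    Requires height and width of at least 3 * patch + 2 * gap.
    """
    step = patch + gap  # distance between patch origins (assumed layout)
    # Place the first patch so that all 8 neighbours fit in the image.
    top = rng.randint(step, height - step - patch)
    left = rng.randint(step, width - step - patch)
    label = rng.randrange(len(NEIGHBOUR_OFFSETS))
    d_row, d_col = NEIGHBOUR_OFFSETS[label]
    second = (top + d_row * step, left + d_col * step, patch)
    return (top, left, patch), second, label


def crop(image, box):
    """Cut a square patch out of an image stored as a list of rows."""
    top, left, size = box
    return [row[left:left + size] for row in image[top:top + size]]


if __name__ == "__main__":
    image = [[r * 64 + c for c in range(64)] for r in range(64)]
    first, second, label = sample_patch_pair(64, 64)
    print(first, second, label)
```

Each pair of cropped patches would be fed to the two CNN branches, with `label` as the target of an 8-way classification loss.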

