i

i

1

i

i

GPOPS − II: A MATLAB Software for Solving Multiple-Phase Optimal

Control Problems Using hp-Adaptive Gaussian Quadrature

Collocation Methods and Sparse Nonlinear Programming

MICHAEL A. PATTERSON and ANIL V. RAO, University of Florida

A general-purpose MATLAB software program called GPOPS − II is described for solving multiple-phase

optimal control problems using variable-order Gaussian quadrature collocation methods. The software em-

ploys a Legendre-Gauss-Radau quadrature orthogonal collocation method where the continuous-time opti-

mal control problem is transcribed to a large sparse nonlinear programming problem (NLP). An adaptive

mesh refinement method is implemented that determines the number of mesh intervals and the degree of

the approximating polynomial within each mesh interval to achieve a specified accuracy. The software can

be interfaced with either quasi-Newton (first derivative) or Newton (second derivative) NLP solvers, and

all derivatives required by the NLP solver are approximated using sparse finite-differencing of the optimal

control problem functions. The key components of the software are described in detail and the utility of the

software is demonstrated on five optimal control problems of varying complexity. The software described in

this article provides researchers a useful platform upon which to solve a wide variety of complex constrained

optimal control problems.

Categories and Subject Descriptors: G.1.4 [Numerical Analysis]: Quadrature and Numerical

Differentiation

General Terms: Algorithms, Design, Verification

Additional Key Words and Phrases: Optimal control, direct collocation, Gaussian quadrature, hp–adaptive

methods, numerical methods, MATLAB, scientific computation, applied mathematics

ACM Reference Format:

Patterson, M. A. and Rao, A. V. 2012. GPOPS-II: A MATLAB software for solving multiple-phase optimal

control problems using hp–adaptive Gaussian quadrature collocation methods and sparse nonlinear pro-

gramming. ACM Trans. Math. Softw. 41, 1, Article 1 (October 2014), 37 pages.

DOI:http://dx.doi.org/10.1145/2558904

1. INTRODUCTION

Optimal control problems arise in a wide variety of subjects including virtually all

branches of engineering, economics, and medicine. Because optimal control applica-

tions have increased in complexity in recent years, over the past two decades the

subject of optimal control has transitioned from theory to computation. In particular,

computational optimal control has become a science in and of itself, resulting in a vari-

ety of numerical methods and corresponding software implementations of these meth-

ods. The vast majority of software implementations of optimal control today are those

that involve the direct transcription of a continuous-time optimal control problem

The authors gratefully acknowledge partial support for this research from the U.S. Office of Naval Research

(ONR) under Grant N00014-11-1-0068 and from the U.S. Defense Advanced Research Projects Agency

(DARPA) Under Contract HR0011-12-C-0011. The views expressed are those of the authors and do not

reflect the official policy or position of the Department of Defense or the U.S. Government.

Author’s addresses: M. A. Patterson and A. V. Rao, Department of Mechanical and Aerospace Engineering,

P.O. Box 116250, University of Florida, Gainesville, FL 32611-6250; e-mail: {mpatterson,anilvrao}@ufl.edu.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted

without fee provided that copies are not made or distributed for profit or commercial advantage and that

copies bear this notice and the full citation on the first page. Copyrights for components of this work owned

by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or repub-

lish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request

permissions from permissions@acm.org.

© 2014 ACM 0098-3500/2014/10-ART1 $15.00

DOI:http://dx.doi.org/10.1145/2558904

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

1:2

M. A. Patterson and A. V. Rao

to a nonlinear programming problem (NLP), and the NLP is solved using well-

established techniques. Examples of well-known software for solving optimal control

problems include SOCS [Betts 1998], DIRCOL [von Stryk 2000], GESOP [Jansch et al.

1994], OTIS [Vlases et al. 1990], MISER [Goh and Teo 1988], PSOPT [Becerra 2009],

GPOPS [Rao et al. 2010], ICLOCS [Falugi et al. 2010], JModelica [Åkesson et al. 2010],

ACADO [Houska et al. 2011], and Sparse Optimization Suite (SOS) [Betts 2013].

Over the past two decades, direct collocation methods have become popular in

the numerical solution of nonlinear optimal control problems. In a direct collocation

method, the state and control are discretized at a set of appropriately chosen points

in the time interval of interest. The continuous-time optimal control problem is then

transcribed to a finite-dimensional NLP. The resulting NLP is then solved using well-

known software such as SNOPT [Gill et al. 2002], IPOPT [Wächter and Biegler 2006;

Biegler and Zavala 2008], and KNITRO [Byrd et al. 2006]. Originally, direct collo-

cation methods were developed as h methods (e.g., Euler or Runge-Kutta methods)

where the time interval is divided into a mesh and the state is approximated using

the same fixed-degree polynomial in each mesh interval. Convergence in an h method

is then achieved by increasing the number and placement of the mesh points [Betts

2010; Jain and Tsiotras 2008; Zhao and Tsiotras 2011]. More recently, a great deal

of research as been done in the class of direct Gaussian quadrature orthogonal collo-

cation methods [Benson et al. 2006; Darby et al. 2011a; Elnagar and Razzaghi 1998;

Elnagar et al. 1995; Garg et al. 2010, 2011a, 2011b; Gong et al. 2008b; Huntington

and Rao 2008; Huntington et al. 2007; Kameswaran and Biegler 2008; Patterson and

Rao 2012; Rao et al. 2010]. In a Gaussian quadrature collocation method, the state is

typically approximated using a Lagrange polynomial where the support points of the

Lagrange polynomial are chosen to be points associated with a Gaussian quadrature.

Originally, Gaussian quadrature collocation methods were implemented as p methods

using a single interval. Convergence of the p method was then achieved by increasing

the degree of the polynomial approximation. For problems whose solutions are smooth

and well behaved, a p Gaussian quadrature collocation method has a simple structure

and converges at an exponential rate [Canuto et al. 1988; Fornberg 1998; Trefethen

2000]. The most well-developed p Gaussian quadrature methods are those that employ

either Legendre-Gauss (LG) points [Benson et al. 2006; Rao et al. 2010], Legendre-

Gauss-Radau (LGR) points [Garg et al. 2010, 2011a; Kameswaran and Biegler 2008],

or Legendre-Gauss-Lobatto (LGL) points [Elnagar et al. 1995].

In this article we describe a new optimal control software called GPOPS − II that em-

ploys hp-adaptive Gaussian quadrature collocation methods. An hp-adaptive method

is a hybrid between a p method and an h method in that both the number of mesh

intervals and the degree of the approximating polynomial within each mesh interval

can be varied in order to achieve a specified accuracy in the numerical approxima-

tion of the solution to the continuous-time optimal control problem. As a result, in

an hp-adaptive method, it is possible to take advantage of the exponential conver-

gence of a global Gaussian quadrature method in regions where the solution is smooth

and introduce mesh points only near potential discontinuities or in regions where the

solution changes rapidly. Originally, hp methods were developed as finite-element

methods for solving partial differential equations [Babuska et al. 1986; Babuska and

Rheinboldt 1979, 1981, 1982]. In the past few years, the problem of developing hp

methods for solving optimal control problems has been of interest [Darby et al. 2011b;

2011c]. The work of Darby et al. [2011b, 2011c] provides examples of the benefits of

using an hp-adaptive method over either a p method or an h method. This recent

research has shown that convergence using hp methods can be achieved with a

significantly smaller finite-dimensional approximation than would be required when

using either an h or a p method.

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

GPOPS − II: A MATLAB Software for Solving Multiple-Phase Optimal Control Problems

1:3

It is noted that previously the software GPOPS was published in Rao et al. [2010].

While GPOPS is similar to GPOPS − II in that both software programs implement

Gaussian quadrature collocation, GPOPS − II is a fundamentally different software

program from GPOPS. First, GPOPS employs p (global) collocation in each phase of

the optimal control problem. It is known that p collocation schemes are limited in that

they have difficulty solving problems whose solutions change rapidly in certain re-

gions or are discontinuous. Moreover, p methods become computationally intractable

as the degree of the approximating polynomial becomes very large. GPOPS − II, how-

ever, employs hp-adaptive mesh refinement where the polynomial degree, number of

mesh intervals, and width of each mesh interval can be varied. The hp-adaptive meth-

ods allow for placement of collocation points in regions of the solution where additional

information is needed to capture key features of the optimal solution. Next, GPOPS is

limited in that it can be used with only quasi-Newton (first derivative) NLP solvers and

derivative approximations were performed on high dimensional NLP functions. On the

other hand, GPOPS − II implements sparse derivative approximations by approximat-

ing derivatives of the optimal control functions and inserting these derivatives into the

appropriate locations in the NLP derivative functions. Moreover, GPOPS − II imple-

ments approximations to both first and second derivatives. Consequently, GPOPS − II

utilizes in an efficient manner the full capabilities of a much wider range of NLP

solvers (e.g., full Newton NLP solvers such as IPOPT [Biegler and Zavala 2008] and

KNITRO [Byrd et al. 2006]) and, as a result, is capable of solving a much wider range

of optimal control problems as compared with GPOPS.

The objective of this article is to provide researchers with a novel efficient general-

purpose optimal control software that is capable of solving a wide variety of complex

constrained continuous-time optimal control problems. In particular, the software de-

scribed in this article employs a differential and implicit integral form of the multiple-

interval version of the Legendre-Gauss-Radau (LGR) collocation method [Garg et al.

2010, 2011a, 2011b; Patterson and Rao 2012]. The LGR collocation method is chosen

for use in the software because it provides highly accurate state, control, and costate

approximations while maintaining a relatively low-dimensional approximation of the

continuous-time problem. The key components of the software are then described, and

the software is demonstrated on five examples from the open literature that have been

studied extensively and whose solutions are known. Each of these examples demon-

strates different capabilities of the software. The first example is the hypersensitive

optimal control problem from Rao and Mease [2000] and demonstrates the ability of

the software to accurately solve a problem whose optimal solution changes rapidly in

particular regions of the solution. The second example is the reusable launch vehicle

entry problem taken from Betts [2010] and demonstrates the ability of GPOPS − II to

compute an accurate solution using a relatively coarse mesh. The third example is a

space station attitude control problem taken from Pietz [2003] and Betts [2010] and

demonstrates the ability of the software to generate accurate solutions to a problem

whose solution is not intuitive. The fourth example is a chemical kinetic batch reactor

problem taken from Leineweber [1998] and Betts [2010] and shows the ability of the

software to handle a multiple-phase optimal control problem that is poorly scaled. The

fifth example is a launch vehicle ascent problem taken from Benson [2004], Rao et al.

[2010], and Betts [2010] that again demonstrates the ability of the software to solve

a multiple-phase optimal control problem. In order to validate the results, the solu-

tions obtained using GPOPS − II are compared against the solutions obtained using

the software Sparse Optimization Suite (SOS) [Betts 2013] where SOS is based on the

collection of algorithms developed in Betts [2010].

This article is organized as follows: In Section 2, we describe a general multiple-

phase optimal control problem. In Section 3, we describe the Radau collocation method

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

1:4

M. A. Patterson and A. V. Rao

that is used as the basis of GPOPS − II. In Section 4, we describe the key components of

GPOPS − II. In Section 5, we show the results obtained using the software on the five

aforementioned examples. In Section 6, we provide a discussion of the results obtained

using the software. In Section 7, we discuss possible limitations of the software. Finally,

in Section 8, we provide conclusions on our work.

2. GENERAL MULTIPLE-PHASE OPTIMAL CONTROL PROBLEMS

The general multiple-phase optimal control problem that can be solved by GPOPS − II

is given as follows. First, let p ∈ [ 1, . . . , P] be the phase number where P as the total

number of phases. The optimal control problem is to determine the state, y(p)(t) ∈ Rn(p)

y ,

control, u(p)(t) ∈ Rn(p)

∈ R, phase terminus

∈ R, in all phases p ∈ [ 1, . . . , P], along with the static parameters s ∈ Rns,

times, t(p)

that minimize the objective functional

J = φ

e(1), . . . , e(P), s

u , integrals, q(p) ∈ Rn(p)

q , start times, t(p)

0

,

f

subject to the dynamic constraints

˙y(p) = a(p)(y(p), u(p), t(p), s),

(p = 1, . . . , P),

e(1), . . . , e(P), s

≤ bmax,

the event constraints

bmin ≤ b

the inequality path constraints

≤ c(p)(y(p), u(p), t(p), s) ≤ c(p)

max,

(p = 1, . . . , P),

c(p)

min

the static parameter constraints

smin ≤ s ≤ smax,

and the integral constraints

where

q(p)

min

t(p)

0

y(p)

≤ q(p) ≤ q(p)

max,

t(p)

f

, t(p)

0 , y(p)

e(p) =

(p = 1, . . . , P),

, t(p)

f

, q(p)

, (p = 1, . . . , P),

and the integrals in each phase are defined as

=

q(p)

i

g(p)

i

(y(p), u(p), t(p), s)dt,

(i = 1, . . . n(p)

q ), (p = 1, . . . , P).

t(p)

f

t(p)

0

(1)

(2)

(3)

(4)

(5)

(6)

(7)

(8)

It is important to note that the event constraints of Eq. (3) can contain any functions

that relate information at the start and/or terminus of any phase (including relation-

ships that include both static parameters and integrals) and that the phases them-

selves need not be sequential. It is noted that the approach to linking phases is based

on well-known formulations in the literature such as those given in Betts [1990, 2010].

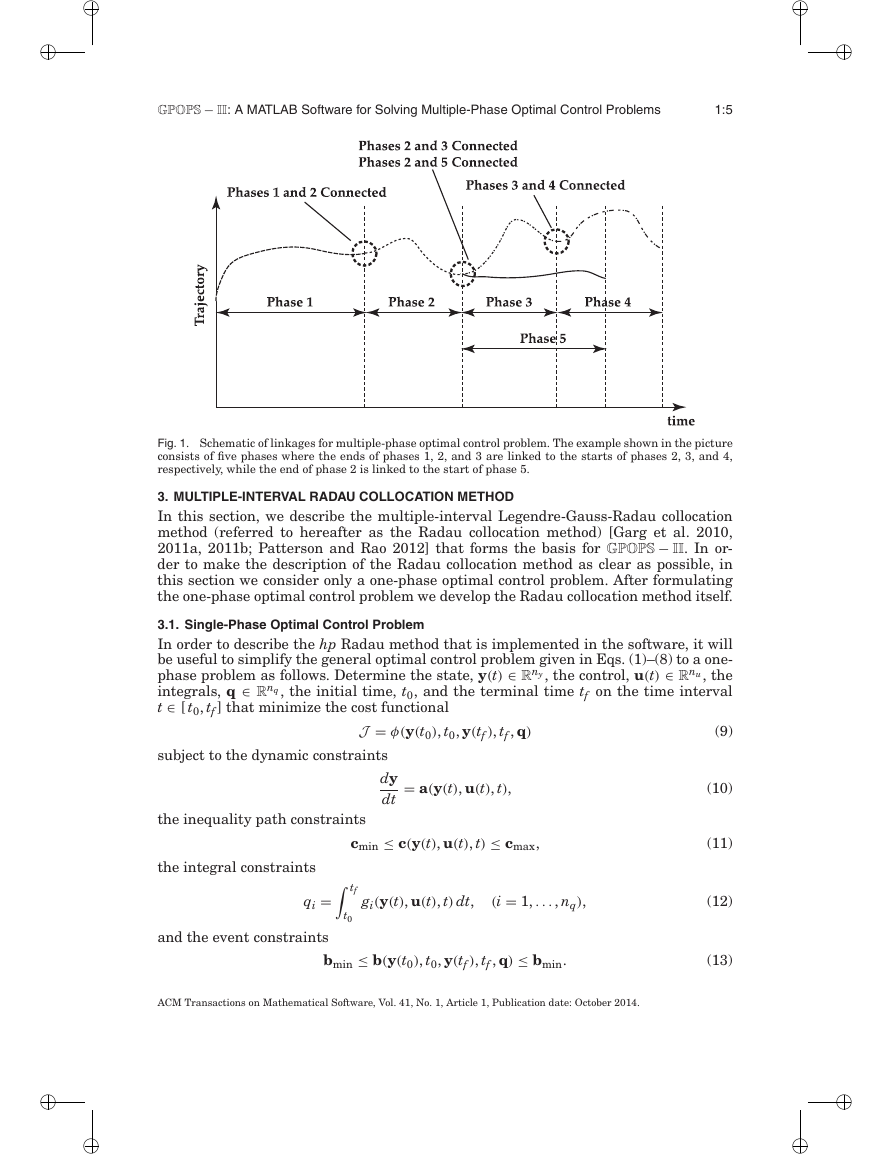

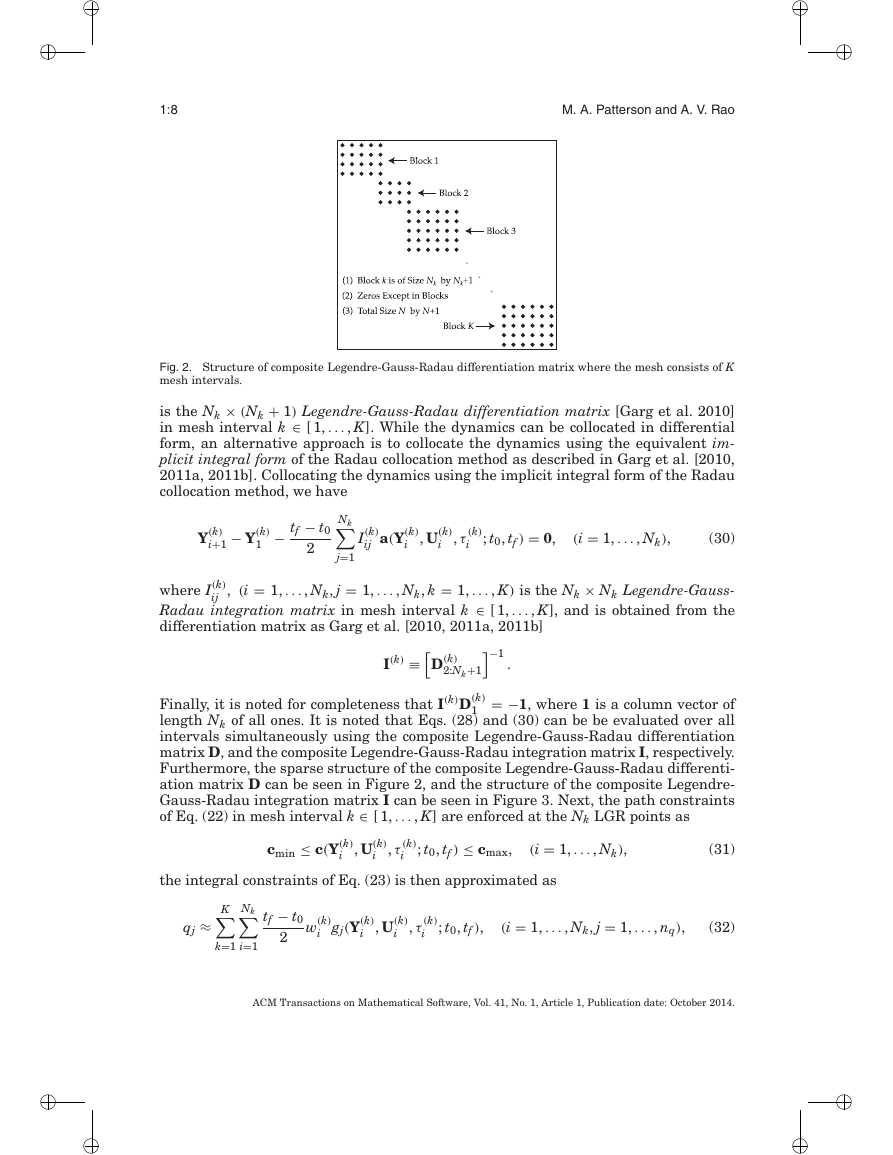

A schematic of how phases can potentially be linked is given in Figure 1.

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

GPOPS − II: A MATLAB Software for Solving Multiple-Phase Optimal Control Problems

1:5

Fig. 1. Schematic of linkages for multiple-phase optimal control problem. The example shown in the picture

consists of five phases where the ends of phases 1, 2, and 3 are linked to the starts of phases 2, 3, and 4,

respectively, while the end of phase 2 is linked to the start of phase 5.

3. MULTIPLE-INTERVAL RADAU COLLOCATION METHOD

In this section, we describe the multiple-interval Legendre-Gauss-Radau collocation

method (referred to hereafter as the Radau collocation method) [Garg et al. 2010,

2011a, 2011b; Patterson and Rao 2012] that forms the basis for GPOPS − II. In or-

der to make the description of the Radau collocation method as clear as possible, in

this section we consider only a one-phase optimal control problem. After formulating

the one-phase optimal control problem we develop the Radau collocation method itself.

3.1. Single-Phase Optimal Control Problem

In order to describe the hp Radau method that is implemented in the software, it will

be useful to simplify the general optimal control problem given in Eqs. (1)–(8) to a one-

phase problem as follows. Determine the state, y(t) ∈ Rny, the control, u(t) ∈ Rnu, the

integrals, q ∈ Rnq, the initial time, t0, and the terminal time tf on the time interval

t ∈ [ t0, tf ] that minimize the cost functional

J = φ (y(t0), t0, y(tf ), tf , q)

subject to the dynamic constraints

= a(y(t), u(t), t),

dy

dt

the inequality path constraints

the integral constraints

qi =

and the event constraints

t0

cmin ≤ c(y(t), u(t), t) ≤ cmax,

tf

gi(y(t), u(t), t) dt,

(i = 1, . . . , nq),

bmin ≤ b(y(t0), t0, y(tf ), tf , q) ≤ bmin.

(9)

(10)

(11)

(12)

(13)

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

1:6

M. A. Patterson and A. V. Rao

The functions φ, q, a, c and b are defined by the following mappings:

φ : Rny × R × Rny × R × Rnq → R,

q : Rny × Rnu × R

→ Rnq,

a : Rny × Rnu × R

→ Rny,

c : Rny × Rnu × R

→ Rnc,

b : Rny × R × Rny × R × Rnq → Rnb,

where we remind the reader that all vector functions of time are treated as row vectors.

In order to employ the hp Radau collocation method used in GPOPS − II, the con-

tinuous optimal control problem of Eqs. (9)–(13) is modified as follows. First, let

τ ∈ [−1,+1] be a new independent variable. The variable t is then defined in terms of

τ as

t = tf − t0

τ + tf + t0

2

2

.

(14)

The optimal control problem of Eqs. (9)–(13) is then defined in terms of the variable

τ as follows. Determine the state, y(τ ) ∈ Rny, the control u(τ ) ∈ Rnu, the integral

q ∈ Rnq, the initial time, t0, and the terminal time tf on the time interval τ ∈ [−1,+1]

that minimize the cost functional

2

J = φ (y(−1), t0, y(+1), tf , q)

= tf − t0

a(y(τ ), u(τ ), τ; t0, tf ),

dy

dτ

cmin ≤ c(y(τ ), u(τ ), τ; t0, tf ) ≤ cmax,

+1

−1

gi(y(τ ), u(τ ), τ; t0, tf ) dτ,

(i = 1, . . . , nq),

subject to the dynamic constraints

the inequality path constraints

the integral constraints

qi = tf − t0

2

and the event constraints

(15)

(16)

(17)

(18)

(20)

(21)

(22)

bmin ≤ b(y(−1), t0, y(+1), tf , q) ≤ bmin.

(19)

Suppose now that the interval τ ∈ [−1,+1] is divided into a mesh consisting of K

mesh intervals [ Tk−1, Tk] , k = 1, . . . , K, where (T0, . . . , TK ) are the mesh points. The

mesh points have the property that −1 = T0 < T1 < T2 < ··· < TK = Tf = +1.

Next, let y(k)(τ ) and u(k)(τ ) be the state and control in mesh interval k. The optimal

control problem of Eqs. (15)–(19) can then written as follows. First, the cost functional

of Eq. (15) can be written as

J = φ (y(1)(−1), t0, y(K)(+1), tf , q),

Next, the dynamic constraints of Eq. (16) in mesh interval k can be written as

(k = 1, . . . , K).

a(y(k)(τ (k)), u(k)(τ (k)), τ (k); t0, tf ),

= tf − t0

dy(k)(τ (k))

dτ (k)

2

Furthermore, the path constraints of (17) in mesh interval k are given as

(k = 1, . . . , K).

cmin ≤ c(y(k)(τ (k)), u(k)(τ (k)), τ (k); t0, tf ) ≤ cmax,

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

GPOPS − II: A MATLAB Software for Solving Multiple-Phase Optimal Control Problems

1:7

the integral constraints of (18) are given as

qj = tf − t0

2

Tk

Tk−1

K

k=1

gj(y(k)(τ (k)), u(k)(τ (k)), τ (k); t0, tf ) dτ,

(j = 1, . . . , nq, k = 1 . . . , K).

(23)

Finally, the event constraints of Eq. (19) are given as

bmin ≤ b(y(1)(−1), t0, y(K)(+1), tf , q) ≤ bmax.

(24)

Because the state must be continuous at each interior mesh point, it is required

that the condition y(k)(Tk) = y(k+1)(Tk) be satisfied at the interior mesh points

(T1, . . . , TK−1).

3.2. Approximation of the Optimal Control Problem via Radau Collocation Method

The method utilized in the software is an implementation of the aforementioned Radau

quadrature orthogonal collocation method [Garg et al. 2010, 2011a, 2011b; Patterson

and Rao 2012]. In the Radau collocation method, the state of the continuous-time op-

timal control problem is approximated in each mesh interval k ∈ [ 1, . . . , K] as

y(k)(τ ) ≈ Y(k)(τ ) = Nk+1

Y(k)

j (k)

j

(τ ),

(k)

j

j=1

(τ ) = Nk+1

l=1

l=j

τ − τ (k)

− τ (k)

τ (k)

j

l

l

,

(25)

j

where τ ∈ [−1,+1], (k)

(τ ), j = 1, . . . , Nk + 1, is a basis of Lagrange polynomials,

(τ (k)

1 , . . . , τ (k)

) are the Legendre-Gauss-Radau [Abramowitz and Stegun 1965] (LGR)

Nk

collocation points in mesh interval k defined on the subinterval τ (k) ∈ [ Tk−1, Tk), and

= Tk is a noncollocated point. Differentiating Y(k)(τ ) in Eq. (25) with respect to

τ (k)

Nk+1

τ, we obtain

dY(k)(τ )

dτ

= Nk+1

j=1

Y(k)

j

(τ )

d(k)

j

dτ

.

(26)

(27)

The cost functional of Eq. (20) is then shown as

1 , t0, Y(K)

J = φ (Y(1)

NK+1, tf , q),

is the approximation of y(T0 = −1), and Y(K)

where Y(1)

NK+1 is the approximation of

y(TK = +1). Collocating the dynamics of Eq. (21) at the Nk LGR points using Eq. (26),

1

we have

where U(k)

in mesh interval k ∈ [ 1, . . . , K], and t(k)

i

2

i

i

i

a(Y(k)

, U(k)

, τ (k)

D(k)

ij Y(k)

j

− tf − t0

; t0, tf ) = 0,

Nk+1

j=1

, i = 1, . . . , Nk, are the approximations of the control at the Nk LGR points

⎡

⎣d(k)

j

dτ

i are obtained from τ (k)

j = 1, . . . , Nk + 1,

k using Eq. (14) and

(i = 1, . . . , Nk).

(i = 1, . . . , Nk,

k = 1, . . . , K),

⎤

⎦

(28)

(29)

(τ )

,

=

D(k)

ij

(k)

τ

i

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

i

i

i

i

1:8

M. A. Patterson and A. V. Rao

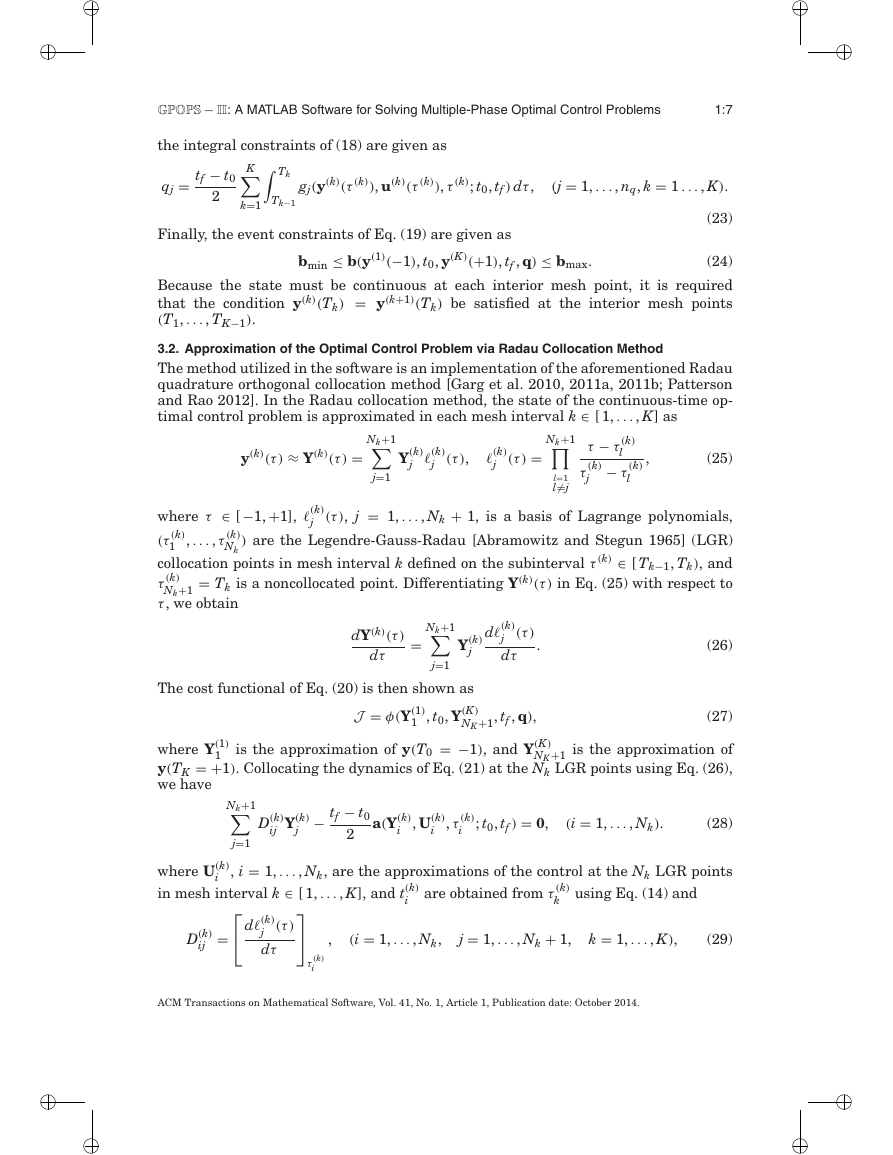

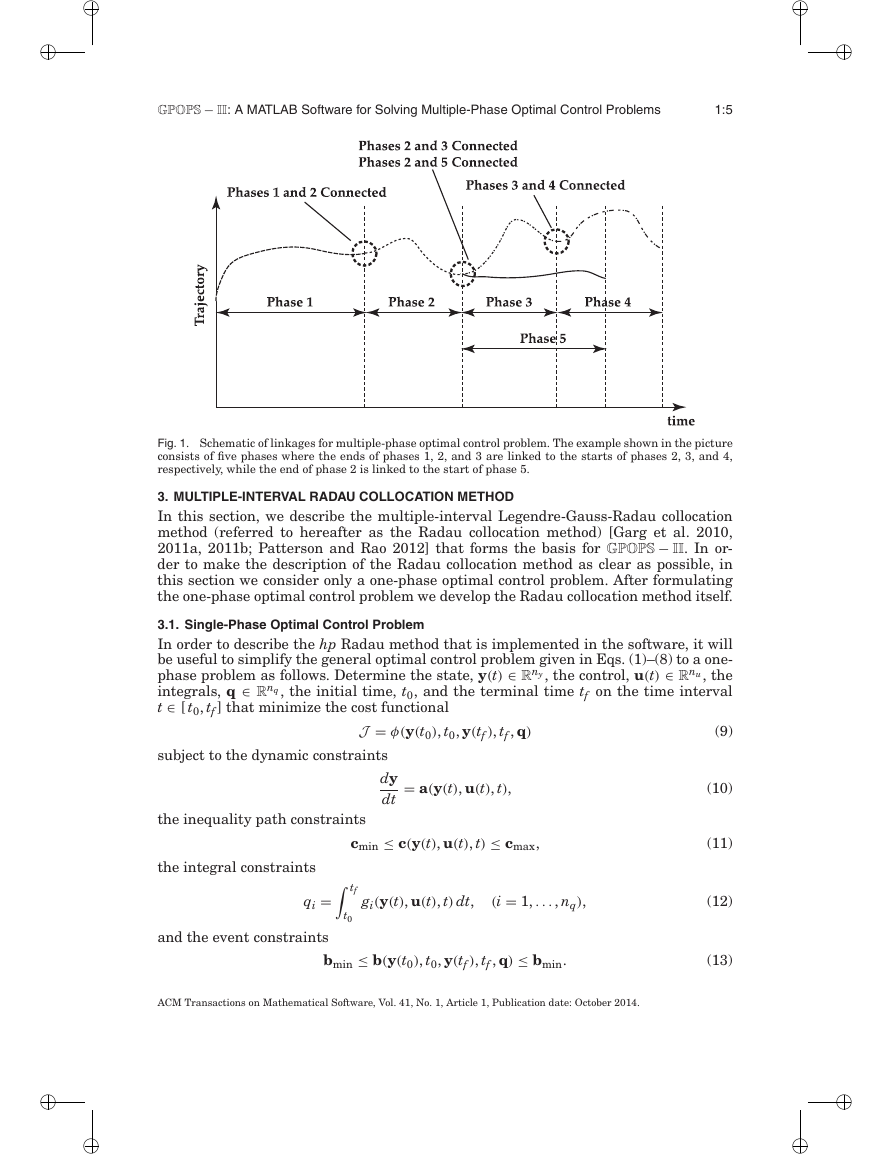

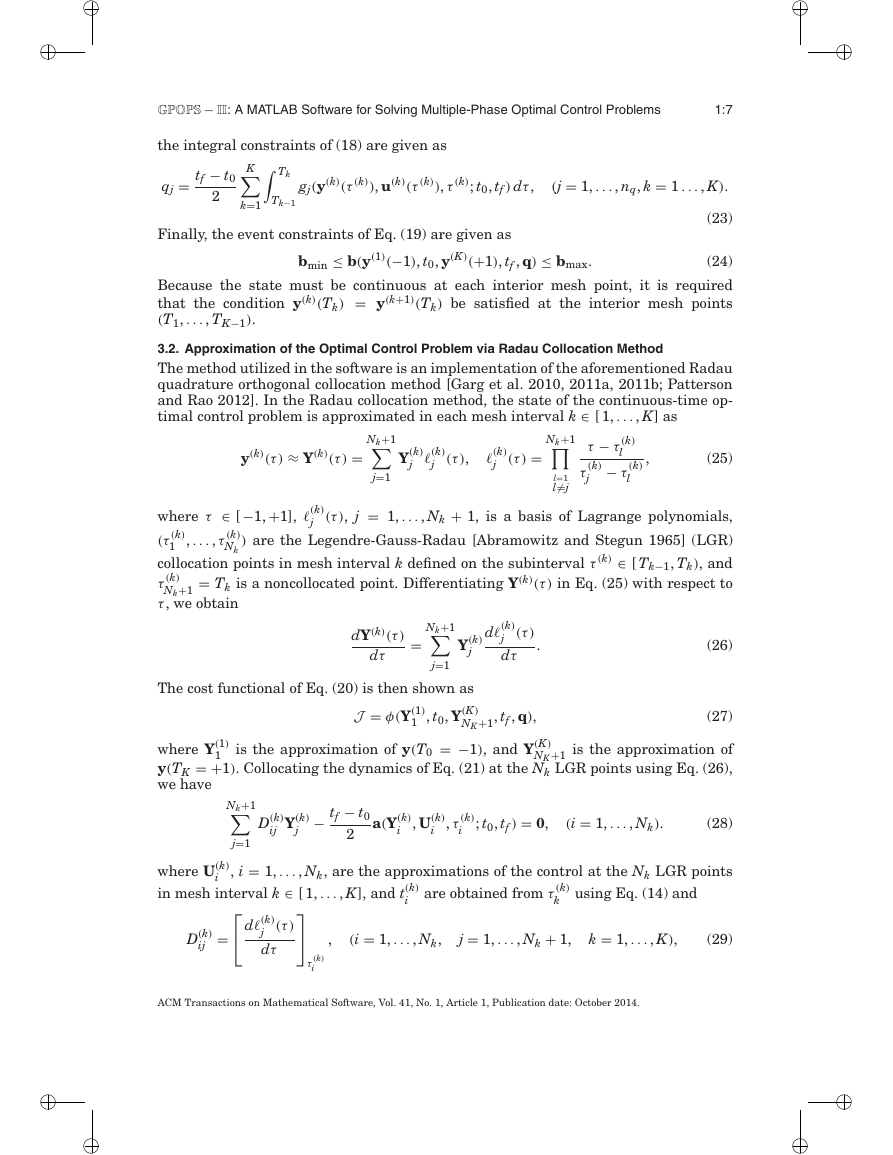

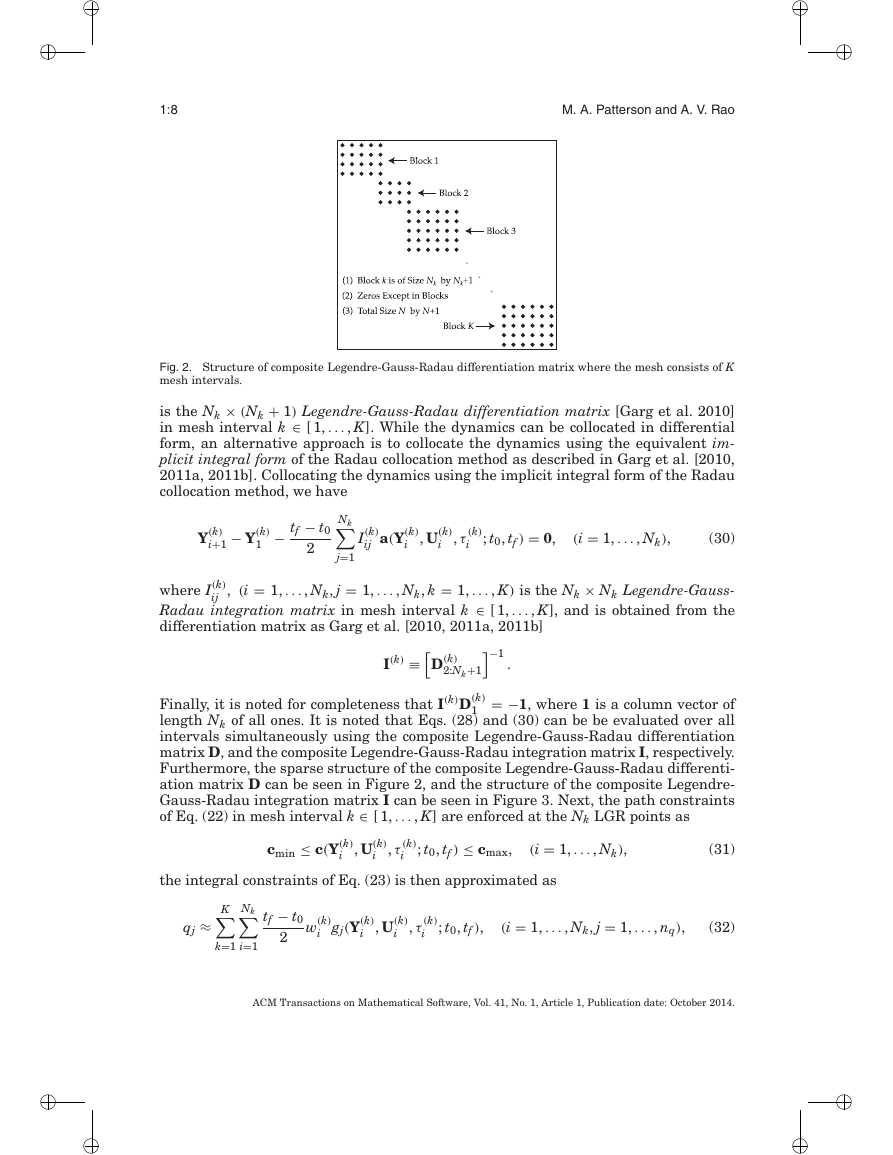

Fig. 2. Structure of composite Legendre-Gauss-Radau differentiation matrix where the mesh consists of K

mesh intervals.

is the Nk × (Nk + 1) Legendre-Gauss-Radau differentiation matrix [Garg et al. 2010]

in mesh interval k ∈ [ 1, . . . , K]. While the dynamics can be collocated in differential

form, an alternative approach is to collocate the dynamics using the equivalent im-

plicit integral form of the Radau collocation method as described in Garg et al. [2010,

2011a, 2011b]. Collocating the dynamics using the implicit integral form of the Radau

collocation method, we have

Nk

j=1

(i = 1, . . . , Nk),

; t0, tf ) = 0,

− tf − t0

I(k)

ij a(Y(k)

i

, U(k)

, τ (k)

− Y(k)

1

Y(k)

i+1

(30)

2

i

i

−1

I(k) ≡

, (i = 1, . . . , Nk, j = 1, . . . , Nk, k = 1, . . . , K) is the Nk × Nk Legendre-Gauss-

where I(k)

Radau integration matrix in mesh interval k ∈ [ 1, . . . , K], and is obtained from the

ij

differentiation matrix as Garg et al. [2010, 2011a, 2011b]

D(k)

2:Nk+1

= −1, where 1 is a column vector of

Finally, it is noted for completeness that I(k)D(k)

1

length Nk of all ones. It is noted that Eqs. (28) and (30) can be be evaluated over all

intervals simultaneously using the composite Legendre-Gauss-Radau differentiation

matrix D, and the composite Legendre-Gauss-Radau integration matrix I, respectively.

Furthermore, the sparse structure of the composite Legendre-Gauss-Radau differenti-

ation matrix D can be seen in Figure 2, and the structure of the composite Legendre-

Gauss-Radau integration matrix I can be seen in Figure 3. Next, the path constraints

of Eq. (22) in mesh interval k ∈ [ 1, . . . , K] are enforced at the Nk LGR points as

.

cmin ≤ c(Y(k)

i

, U(k)

i

, τ (k)

i

; t0, tf ) ≤ cmax,

(i = 1, . . . , Nk),

(31)

the integral constraints of Eq. (23) is then approximated as

qj ≈ K

k=1

Nk

i=1

tf − t0

2

i gj(Y(k)

w(k)

i

, U(k)

i

, τ (k)

i

; t0, tf ),

(i = 1, . . . , Nk, j = 1, . . . , nq),

(32)

ACM Transactions on Mathematical Software, Vol. 41, No. 1, Article 1, Publication date: October 2014.

i

i

i

i

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc