Communication in the Presence of Noise

CLAUDE E. SHANNON, MEMBER, IRE

Classic Paper

A method is developed for representing any communication system geometrically. Messages and the corresponding signals are points in two “function spaces,” and the modulation process is a mapping of one space into the other. Using this representation, a number of results in communication theory are deduced concerning expansion and compression of bandwidth and the threshold effect. Formulas are found for the maximum rate of transmission of binary digits over a system when the signal is perturbed by various types of noise. Some of the properties of “ideal” systems which transmit at this maximum rate are discussed. The equivalent number of binary digits per second for certain information sources is calculated.

I. INTRODUCTION

A general communications system is shown schematically in Fig. 1. It consists essentially of five elements.

1) An Information Source: The source selects one message from a set of possible messages to be transmitted to the receiving terminal. The message may be of various types; for example, a sequence of letters or numbers, as in telegraphy or teletype, or a continuous function of time $f(t)$, as in radio or telephony.

2) The Transmitter: This operates on the message in

some way and produces a signal suitable for transmission

to the receiving point over the channel. In telephony, this

operation consists of merely changing sound pressure into

a proportional electrical current. In telegraphy, we have

an encoding operation which produces a sequence of dots,

dashes, and spaces corresponding to the letters of the

message. To take a more complex example, in the case of

multiplex PCM telephony the different speech functions

must be sampled, compressed, quantized and encoded, and

finally interleaved properly to construct the signal.

3) The Channel: This is merely the medium used to

transmit the signal from the transmitting to the receiving

point. It may be a pair of wires, a coaxial cable, a band

of radio frequencies, etc. During transmission, or at the

receiving terminal, the signal may be perturbed by noise

or distortion. Noise and distortion may be differentiated on the basis that distortion is a fixed operation applied to the signal, while noise involves statistical and unpredictable perturbations. Distortion can, in principle, be corrected by applying the inverse operation, while a perturbation due to noise cannot always be removed, since the signal does not always undergo the same change during transmission.

Fig. 1. General communications system.

This paper is reprinted from the PROCEEDINGS OF THE IRE, vol. 37, no. 1, pp. 10–21, Jan. 1949.

4) The Receiver: This operates on the received signal

and attempts to reproduce, from it, the original message.

Ordinarily it will perform approximately the mathematical

inverse of the operations of the transmitter, although they

may differ somewhat with best design in order to combat

noise.

5) The Destination: This is the person or thing for whom

the message is intended.

Following Nyquist1 and Hartley,2 it is convenient to use a logarithmic measure of information. If a device has $n$ possible positions it can, by definition, store $\log_b n$ units of information. The choice of the base $b$ amounts to a choice of unit, since $\log_b n = \log_b c \, \log_c n$. We will use the base 2 and call the resulting units binary digits or bits. A group of $m$ relays or flip-flop circuits has $2^m$ possible sets of positions, and can therefore store $\log_2 2^m = m$ bits.

If it is possible to distinguish reliably $M$ different signal functions of duration $T$ on a channel, we can say that the channel can transmit $\log_2 M$ bits in time $T$. The rate of transmission is then $(\log_2 M)/T$. More precisely, the channel capacity may be defined as

$$C = \lim_{T \to \infty} \frac{\log_2 M}{T}. \tag{1}$$

A precise meaning will be given later to the requirement of reliable resolution of the $M$ signals.

1 H. Nyquist, “Certain factors affecting telegraph speed,” Bell Syst. Tech. J., vol. 3, p. 324, Apr. 1924.

2 R. V. L. Hartley, “The transmission of information,” Bell Syst. Tech. J., vol. 3, pp. 535–564, July 1928.

Publisher Item Identifier S 0018-9219(98)01299-7.

0018–9219/98$10.00 © 1998 IEEE

PROCEEDINGS OF THE IEEE, VOL. 86, NO. 2, FEBRUARY 1998
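The logarithmic measure described in the Introduction can be sketched in a few lines of Python. This is only an illustration: the function names `stored_information`, `relay_bank_bits`, and `transmission_rate` are hypothetical and do not come from the paper.

```python
import math


def stored_information(positions: int, base: float = 2.0) -> float:
    """Information stored by a device with `positions` states, in units set by `base`."""
    if positions < 1:
        raise ValueError("positions must be at least 1")
    return math.log(positions, base)


def relay_bank_bits(relays: int) -> float:
    """A group of m two-state relays has 2**m joint positions, hence stores m bits."""
    return stored_information(2 ** relays, base=2.0)


def transmission_rate(distinguishable_signals: int, duration_s: float) -> float:
    """Rate log2(M)/T in bits per second for M distinguishable signals of duration T."""
    return math.log2(distinguishable_signals) / duration_s


if __name__ == "__main__":
    print(stored_information(8))          # base-2 units (bits)
    print(relay_bank_bits(10))            # ten relays
    print(transmission_rate(1024, 2.0))   # bits per second
```

Changing `base` only rescales the result by a constant factor, which is the sense in which the choice of base is a choice of unit.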

II. THE SAMPLING THEOREM

Let us suppose that the channel has a certain bandwidth $W$ in cps starting at zero frequency, and that we are allowed to use this channel for a certain period of time $T$. Without any further restrictions this would mean that we can use as signal functions any functions of time whose spectra lie entirely within the band $W$, and whose time functions lie within the interval $T$. Although it is not possible to fulfill both of these conditions exactly, it is possible to keep the spectrum within the band $W$, and to have the time function very small outside the interval $T$. Can we describe in a more useful way the functions which satisfy these conditions? One answer is the following.

Theorem 1: If a function $f(t)$ contains no frequencies higher than $W$ cps, it is completely determined by giving its ordinates at a series of points spaced $1/(2W)$ seconds apart.

This is a fact which is common knowledge in the communication art. The intuitive justification is that, if $f(t)$ contains no frequencies higher than $W$, it cannot change to a substantially new value in a time less than one-half cycle of the highest frequency, that is, $1/(2W)$. A mathematical proof showing that this is not only approximately, but exactly, true can be given as follows. Let $F(\omega)$ be the spectrum of $f(t)$. Then

$$f(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega \tag{2}$$

$$\phantom{f(t)} = \frac{1}{2\pi} \int_{-2\pi W}^{+2\pi W} F(\omega)\, e^{i\omega t}\, d\omega \tag{3}$$

since $F(\omega)$ is assumed zero outside the band $W$. If we let

$$t = \frac{n}{2W} \tag{4}$$

where $n$ is any positive or negative integer, we obtain

$$f\!\left(\frac{n}{2W}\right) = \frac{1}{2\pi} \int_{-2\pi W}^{+2\pi W} F(\omega)\, e^{i\omega n/(2W)}\, d\omega. \tag{5}$$

On the left are the values of $f(t)$ at the sampling points. The integral on the right will be recognized as essentially the $n$th coefficient in a Fourier-series expansion of the function $F(\omega)$, taking the interval $-W$ to $+W$ as a fundamental period. This means that the values of the samples $f(n/(2W))$ determine the Fourier coefficients in the series expansion of $F(\omega)$. Thus they determine $F(\omega)$, since $F(\omega)$ is zero for frequencies greater than $W$, and for lower frequencies $F(\omega)$ is determined if its Fourier coefficients are determined. But $F(\omega)$ determines the original function $f(t)$ completely, since a function is determined if its spectrum is known. Therefore the original samples determine the function $f(t)$ completely. There is one and only one function whose spectrum is limited to a band $W$, and which passes through given values at sampling points separated $1/(2W)$ seconds apart. The function can be simply reconstructed from the samples by using a pulse of the type

$$\frac{\sin 2\pi W t}{2\pi W t}. \tag{6}$$

This function is unity at $t = 0$ and zero at $t = n/(2W)$, $n = \pm 1, \pm 2, \ldots$, i.e., at all other sample points. Furthermore, its spectrum is constant in the band $W$ and zero outside. At each sample point a pulse of this type is placed whose amplitude is adjusted to equal that of the sample. The sum of these pulses is the required function, since it satisfies the conditions on the spectrum and passes through the sampled values.

Mathematically, this process can be described as follows. Let $x_n$ be the $n$th sample. Then the function $f(t)$ is represented by

$$f(t) = \sum_{n=-\infty}^{\infty} x_n \frac{\sin \pi(2Wt - n)}{\pi(2Wt - n)}. \tag{7}$$
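The reconstruction sum in (7) can be tried numerically. The following is a minimal sketch, assuming a finite set of nonzero samples stored in a dictionary keyed by the sample index $n$; the names `sinc_pulse` and `reconstruct` are hypothetical and chosen only for this illustration.

```python
import math


def sinc_pulse(u: float) -> float:
    """Elementary pulse sin(pi*u)/(pi*u), with u = 2*W*t - n as in the sum (7)."""
    if u == 0.0:
        return 1.0
    return math.sin(math.pi * u) / (math.pi * u)


def reconstruct(samples: dict, bandwidth_hz: float, t: float) -> float:
    """Evaluate the sum of weighted pulses at time t.

    `samples` maps the integer index n to the sample x_n taken at t = n/(2W);
    indices that are absent are treated as zero samples.
    """
    return sum(
        x_n * sinc_pulse(2.0 * bandwidth_hz * t - n)
        for n, x_n in samples.items()
    )


if __name__ == "__main__":
    W = 4.0  # bandwidth in cps, so samples are 1/8 second apart
    samples = {0: 1.0, 1: -0.5, 2: 0.25}
    for n in samples:
        print(n, reconstruct(samples, W, n / (2.0 * W)))
```

At each sampling instant every pulse but one vanishes, so the sum passes through the given sample values; between sampling instants all pulses contribute.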

A similar result is true if the band $W$ does not start at zero frequency but at some higher value, and can be proved by a linear translation (corresponding physically to single-sideband modulation) of the zero-frequency case. In this case the elementary pulse is obtained from $\sin x / x$ by single-sideband modulation.

If the function is limited to the time interval $T$ and the samples are spaced $1/(2W)$ seconds apart, there will be a total of $2TW$ samples in the interval. All samples outside will be substantially zero. To be more precise, we can define a function to be limited to the time interval $T$ if, and only if, all the samples outside this interval are exactly zero. Then we can say that any function limited to the bandwidth $W$ and the time interval $T$ can be specified by giving $2TW$ numbers.

Theorem 1 has been given previously in other forms by mathematicians3 but in spite of its evident importance seems not to have appeared explicitly in the literature of communication theory. Nyquist,4, 5 however, and more recently Gabor,6 have pointed out that approximately $2TW$ numbers are sufficient, basing their arguments on a Fourier series expansion of the function over the time interval $T$. This gives $TW$ sine and $(TW + 1)$ cosine terms up to frequency $W$. The slight discrepancy is due to the fact that the functions obtained in this way will not be strictly limited to the band $W$ but, because of the sudden starting and stopping of the sine and cosine components, contain some frequency content outside the band. Nyquist pointed out the fundamental importance of the time interval $1/(2W)$ seconds in connection with telegraphy, and we will call this the Nyquist interval corresponding to the band $W$.

3 J. M. Whittaker, Interpolatory Function Theory, Cambridge Tracts

in Mathematics and Mathematical Physics, no. 33. Cambridge, U.K.:

Cambridge Univ. Press, ch. IV, 1935.

4 H. Nyquist, “Certain topics in telegraph transmission theory,” AIEE

Trans., p. 617, Apr. 1928.

5 W. R. Bennett, “Time division multiplex systems,” Bell Syst. Tech.

J., vol. 20, p. 199, Apr. 1941, where a result similar to Theorem 1 is

established, but on a steady-state basis.

6 D. Gabor, “Theory of communication,” J. Inst. Elect. Eng. (London),

vol. 93, pt. 3, no. 26, p. 429, 1946.


The $2TW$ numbers used to specify the function need not

be the equally spaced samples used above. For example,

the samples can be unevenly spaced, although, if there is

considerable bunching, the samples must be known very

accurately to give a good reconstruction of the function. The

reconstruction process is also more involved with unequal

spacing. One can further show that the value of the function

and its derivative at every other sample point are sufficient.

The value and first and second derivatives at every third

sample point give a still different set of parameters which

uniquely determine the function. Generally speaking, any

set of $2TW$ independent numbers associated with the

function can be used to describe it.

III. GEOMETRICAL REPRESENTATION OF THE SIGNALS

A set of three numbers $x_1$, $x_2$, $x_3$, regardless of their source, can always be thought of as coordinates of a point in three-dimensional space. Similarly, the $2TW$ evenly spaced samples of a signal can be thought of as coordinates of a point in a space of $2TW$ dimensions. Each particular selection of these numbers corresponds to a particular point in this space. Thus there is exactly one point corresponding to each signal in the band $W$ and with duration $T$.

The number of dimensions $2TW$ will be, in general, very high. A 5-Mc television signal lasting for an hour would be represented by a point in a space with $2 \times 5 \times 10^6 \times 60^2 = 3.6 \times 10^{10}$ dimensions. Needless to say, such a space cannot be visualized. It is possible, however, to study analytically the properties of $n$-dimensional space. To a considerable

extent, these properties are a simple generalization of the

properties of two- and three-dimensional space, and can

often be arrived at by inductive reasoning from these cases.
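The dimension count for the television example can be checked directly. This is a trivial sketch; the helper name `signal_space_dimensions` is hypothetical.

```python
def signal_space_dimensions(bandwidth_hz: float, duration_s: float) -> float:
    """Number of coordinates 2*T*W needed for a signal of bandwidth W and duration T."""
    return 2.0 * duration_s * bandwidth_hz


if __name__ == "__main__":
    # 5-Mc bandwidth, one hour = 60**2 seconds
    print(signal_space_dimensions(5e6, 60.0 ** 2))
```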

The advantage of this geometrical representation of the

signals is that we can use the vocabulary and the results of

geometry in the communication problem. Essentially, we

have replaced a complex entity (say, a television signal) in

a simple environment [the signal requires only a plane for its representation as $f(t)$] by a simple entity (a point) in a complex environment ($2TW$-dimensional space).

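If we imagine the $2TW$ coordinate axes to be at right angles to each other, then distances in the space have a simple interpretation. The distance from the origin to a point is analogous to the two- and three-dimensional cases

$$d = \sqrt{\sum_{n=1}^{2TW} x_n^2} \tag{8}$$

where $x_n$ is the $n$th sample. Now, since

$$f(t) = \sum_{n=1}^{2TW} x_n \frac{\sin \pi(2Wt - n)}{\pi(2Wt - n)} \tag{9}$$

we have

$$\int_{-\infty}^{\infty} f(t)^2\, dt = \frac{1}{2W} \sum_{n=1}^{2TW} x_n^2 \tag{10}$$

using the fact that

$$\int_{-\infty}^{\infty} \frac{\sin \pi(2Wt - m)}{\pi(2Wt - m)} \, \frac{\sin \pi(2Wt - n)}{\pi(2Wt - n)}\, dt = \begin{cases} 0, & m \neq n \\ \dfrac{1}{2W}, & m = n. \end{cases} \tag{11}$$

Hence, the square of the distance to a point is $2W$ times the energy (more precisely, the energy into a unit resistance) of the corresponding signal

$$d^2 = 2WE = 2WTP \tag{12}$$

where $P$ is the average power over the time $T$. Similarly, the distance between two points is $\sqrt{2WT}$ times the rms discrepancy between the two corresponding signals.

If we consider only signals whose average power is less than $P$, these will correspond to points within a sphere of radius

$$r = \sqrt{2WTP}. \tag{13}$$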
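The step from the pulse expansion (9) to the energy relation (10) and the distance relation (12) can be written out as follows. This is a worked expansion of the argument above rather than additional material; the second summation index $m$ and the symbol $E$ for the signal energy are used only to spell out the double sum.

```latex
\begin{aligned}
E = \int_{-\infty}^{\infty} f(t)^2 \, dt
  &= \sum_{m=1}^{2TW} \sum_{n=1}^{2TW} x_m x_n
     \int_{-\infty}^{\infty}
     \frac{\sin \pi(2Wt - m)}{\pi(2Wt - m)} \,
     \frac{\sin \pi(2Wt - n)}{\pi(2Wt - n)} \, dt \\
  &= \frac{1}{2W} \sum_{n=1}^{2TW} x_n^2
     \qquad \text{(only the terms with } m = n \text{ survive, by (11))} \\
d^2 = \sum_{n=1}^{2TW} x_n^2 &= 2WE = 2WTP
     \qquad \text{(since } E = TP \text{ for average power } P \text{ over time } T\text{)}.
\end{aligned}
```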

If noise is added to the signal in transmission, it means

that the point corresponding to the signal has been moved a

certain distance in the space proportional to the rms value of

the noise. Thus noise produces a small region of uncertainty

about each point in the space. A fixed distortion in the

channel corresponds to a warping of the space, so that each

point is moved, but in a definite fixed way.

In ordinary three-dimensional space it

is possible to

set up many different coordinate systems. This is also

possible in the signal space of $2TW$ dimensions that we

are considering. A different coordinate system corresponds

to a different way of describing the same signal function.

The various ways of specifying a function given above are

special cases of this. One other way of particular importance

in communication is in terms of frequency components.

The function $f(t)$ can be expanded as a sum of sines and

cosines of frequencies $1/T$ apart, and the coefficients used

as a different set of coordinates. It can be shown that these

coordinates are all perpendicular to each other and are

obtained by what is essentially a rotation of the original

coordinate system.
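The statement that frequency coordinates amount to a rotation of the sample coordinates has a simple discrete analogue, sketched below. It is an assumption-laden illustration rather than the construction in the text: it uses a unitary discrete Fourier transform with complex exponentials in place of the sine and cosine series over the interval $T$, and the names `unitary_dft`, `length`, and `distance` are hypothetical. A rotation preserves lengths and distances, which is what the sketch checks.

```python
import cmath
import math


def unitary_dft(samples):
    """Discrete Fourier transform scaled by 1/sqrt(n), so that it preserves lengths."""
    n = len(samples)
    scale = 1.0 / math.sqrt(n)
    return [
        scale * sum(samples[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
        for m in range(n)
    ]


def length(vector):
    """Euclidean length of a real or complex coordinate vector."""
    return math.sqrt(sum(abs(c) ** 2 for c in vector))


def distance(a, b):
    """Euclidean distance between two coordinate vectors of equal size."""
    return length([p - q for p, q in zip(a, b)])


if __name__ == "__main__":
    x = [1.0, -2.0, 0.5, 3.0, 0.0, -1.5, 2.0, 0.25]
    y = [0.5, 0.5, -1.0, 2.0, 1.0, 0.0, -0.75, 1.25]
    print(length(x), length(unitary_dft(x)))
    print(distance(x, y), distance(unitary_dft(x), unitary_dft(y)))
```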

Passing a signal through an ideal filter corresponds to

projecting the corresponding point onto a certain region in

the space. In fact, in the frequency-coordinate system those

components lying in the pass band of the filter are retained

and those outside are eliminated, so that the projection is

on one of the coordinate lines, planes, or hyperplanes. Any

filter performs a linear operation on the vectors of the space,

producing a new vector linearly related to the old one.

IV. GEOMETRICAL REPRESENTATION OF MESSAGES

We have associated a space of

dimensions with the

set of possible signals. In a similar way one can associate

a space with the set of possible messages. Suppose we are

considering a speech system and that the messages consist

SHANNON: COMMUNICATION IN THE PRESENCE OF NOISE

449

�

decreased. A similar effect can occur through probability

considerations. Certain messages may be possible, but so

improbable relative to the others that we can, in a certain

sense, neglect them. In a television image, for example,

successive frames are likely to be very nearly identical.

There is a fair probability of a particular picture element

having the same light intensity in successive frames. If

this is analyzed mathematically, it results in an effective

reduction of dimensionality of the message space when

is large.

We will not go further into these two effects at present,

but let us suppose that, when they are taken into account, the

resulting message space has a dimensionality

, which will,

of course, be less than or equal to

. In many cases,

even though the effects are present, their utilization involves

too much complication in the way of equipment. The

system is then designed on the basis that all functions are

different and that there are no limitations on the information

source. In this case, the message space is considered to have

the full

dimensions.

V. GEOMETRICAL REPRESENTATION OF THE

TRANSMITTER AND RECEIVER

We now consider the function of the transmitter from

this geometrical standpoint. The input to the transmitter is

a message; that is, one point in the message space. Its output

is a signal—one point in the signal space. Whatever form

of encoding or modulation is performed, the transmitter

must establish some correspondence between the points in

the two spaces. Every point in the message space must

correspond to a point in the signal space, and no two

messages can correspond to the same signal. If they did,

there would be no way to determine at the receiver which

of the two messages was intended. The geometrical name

for such a correspondence is a mapping. The transmitter

maps the message space into the signal space.

In a similar way, the receiver maps the signal space back

into the message space. Here, however, it is possible to

have more than one point mapped into the same point.

This means that several different signals are demodulated

or decoded into the same message. In AM, for example,

the phase of the carrier is lost in demodulation. Different

signals which differ only in the phase of the carrier are

demodulated into the same message. In FM the shape of

the signal wave above the limiting value of the limiter

does not affect the recovered message. In PCM considerable

distortion of the received pulses is possible, with no effect

on the output of the receiver.

We have so far established a correspondence between a

communication system and certain geometrical ideas. The

correspondence is summarized in Table 1.

VI. MAPPING CONSIDERATIONS

It is possible to draw certain conclusions of a general

nature regarding modulation methods from the geometrical

picture alone. Mathematically, the simplest types of map-

pings are those in which the two spaces have the same

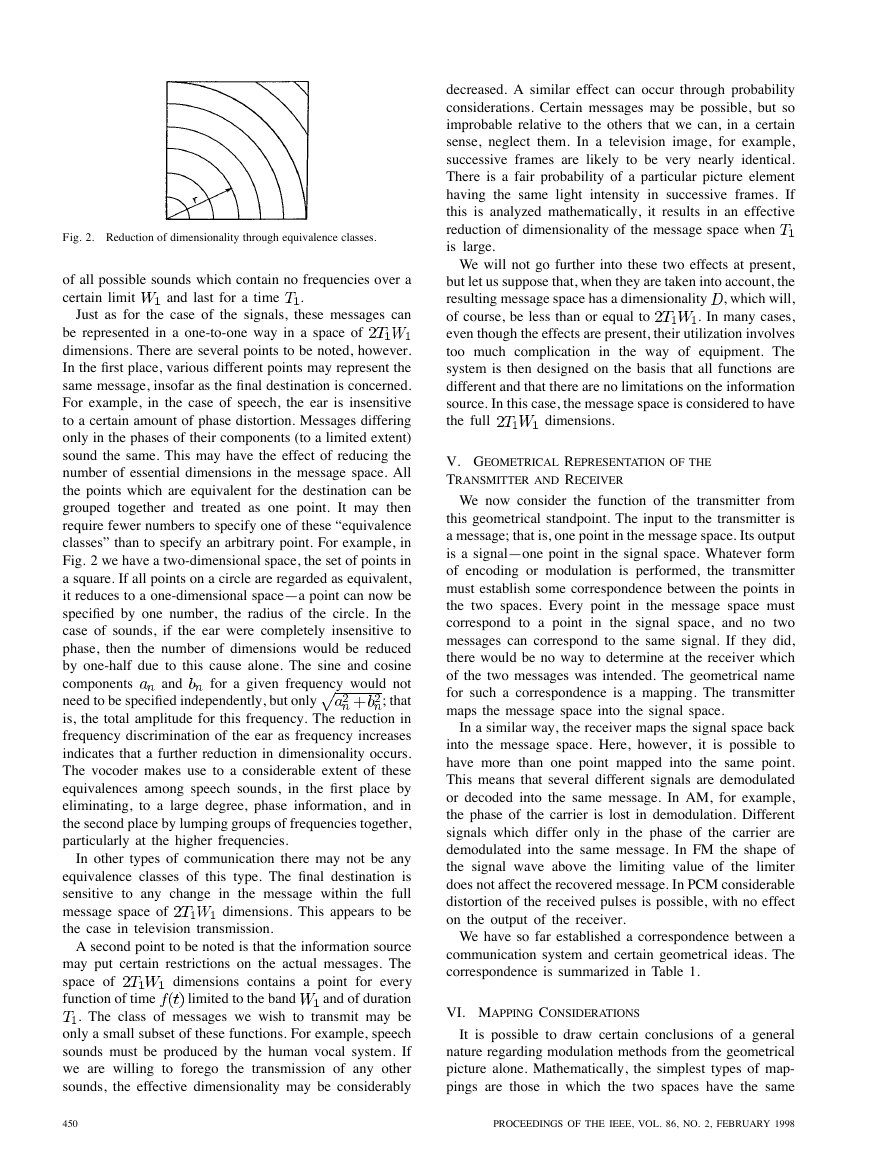

Fig. 2. Reduction of dimensionality through equivalence classes.

of all possible sounds which contain no frequencies over a

certain limit

and last for a time

.

Just as for the case of the signals, these messages can

be represented in a one-to-one way in a space of

dimensions. There are several points to be noted, however.

In the first place, various different points may represent the

same message, insofar as the final destination is concerned.

For example, in the case of speech, the ear is insensitive

to a certain amount of phase distortion. Messages differing

only in the phases of their components (to a limited extent)

sound the same. This may have the effect of reducing the

number of essential dimensions in the message space. All

the points which are equivalent for the destination can be

grouped together and treated as one point. It may then

require fewer numbers to specify one of these “equivalence

classes” than to specify an arbitrary point. For example, in

Fig. 2 we have a two-dimensional space, the set of points in

a square. If all points on a circle are regarded as equivalent,

it reduces to a one-dimensional space—a point can now be

specified by one number, the radius of the circle. In the

case of sounds, if the ear were completely insensitive to

phase, then the number of dimensions would be reduced

by one-half due to this cause alone. The sine and cosine

for a given frequency would not

components

need to be specified independently, but only

; that

is, the total amplitude for this frequency. The reduction in

frequency discrimination of the ear as frequency increases

indicates that a further reduction in dimensionality occurs.

The vocoder makes use to a considerable extent of these

equivalences among speech sounds, in the first place by

eliminating, to a large degree, phase information, and in

the second place by lumping groups of frequencies together,

particularly at the higher frequencies.

and

In other types of communication there may not be any

equivalence classes of this type. The final destination is

sensitive to any change in the message within the full

dimensions. This appears to be

message space of

the case in television transmission.

A second point to be noted is that the information source

may put certain restrictions on the actual messages. The

dimensions contains a point for every

space of

and of duration

function of time

. The class of messages we wish to transmit may be

only a small subset of these functions. For example, speech

sounds must be produced by the human vocal system. If

we are willing to forego the transmission of any other

sounds, the effective dimensionality may be considerably

limited to the band

450

PROCEEDINGS OF THE IEEE, VOL. 86, NO. 2, FEBRUARY 1998

�

Table 1

Fig. 3. Mapping similar to frequency modulation.

number of dimensions. Single-sideband amplitude modula-

tion is an example of this type and an especially simple one,

since the coordinates in the signal space are proportional

to the corresponding coordinates in the message space. In

double-sideband transmission the signal space has twice the

number of coordinates, but they occur in pairs with equal

values. If there were only one dimension in the message

space and two in the signal space, it would correspond to

mapping a line onto a square so that the point $x$ on the line is represented by $(x, x)$ in the square. Thus no significant use is made of the extra dimensions. All the messages go into a subspace having only $2T_1W_1$ dimensions.

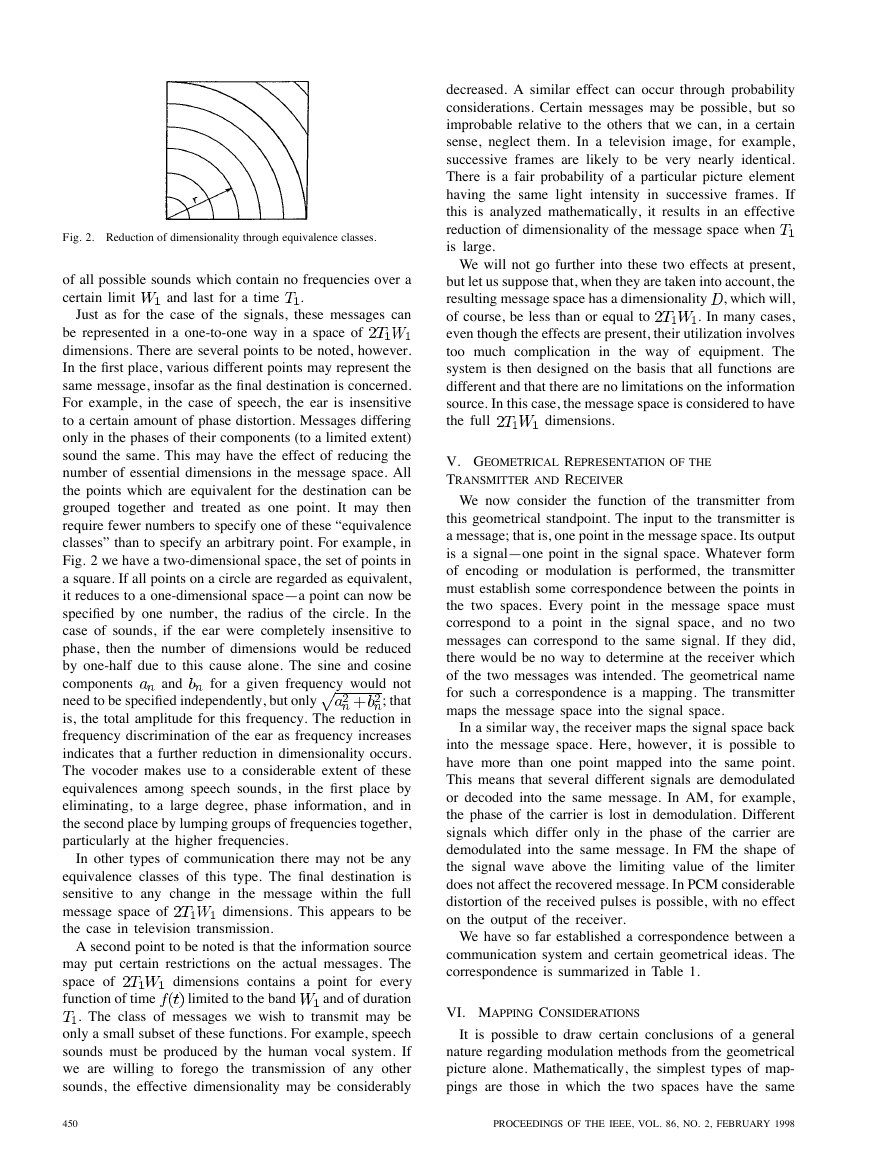

In frequency modulation the mapping is more involved.

The signal space has a much larger dimensionality than

the message space. The type of mapping can be suggested

by Fig. 3, where a line is mapped into a three-dimensional

space. The line starts at unit distance from the origin on the

first coordinate axis, stays at this distance from the origin

on a circle to the next coordinate axis, and then goes to

the third. It can be seen that the line is lengthened in this

mapping in proportion to the total number of coordinates.

It is not, however, nearly as long as it could be if it wound

back and forth through the space, filling up the internal

volume of the sphere it traverses.

This expansion of the line is related to the improved

signal-to-noise ratio obtainable with increased bandwidth.

Since the noise produces a small region of uncertainty about

each point, the effect of this on the recovered message will

be less if the map is in a large scale. To obtain as large a scale as possible requires that the line wander back and forth through the higher dimensional region as indicated in Fig. 4, where we have mapped a line into a square. It will be noticed that when this is done the effect of noise is small relative to the length of the line, provided the noise is less than a certain critical value. At this value it becomes uncertain at the receiver as to which portion of the line contains the message. This holds generally, and it shows that any system which attempts to use the capacities of a wider band to the full extent possible will suffer from a threshold effect when there is noise. If the noise is small, very little distortion will occur, but at some critical noise amplitude the message will become very badly distorted. This effect is well known in PCM.

Fig. 4. Efficient mapping of a line into a square.
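The threshold effect can be illustrated with a toy mapping in the spirit of Fig. 4. The sketch below is not a reconstruction of the figure: it assumes a line folded back and forth into `rows` horizontal strips of the unit square, and the names `encode`, `decode`, and `message_error` are hypothetical. Noise along a strip is scaled down by the number of strips, noise across strips has no effect while it stays below half the strip spacing, and beyond that value the decoder lands on a neighboring strip and the recovered message jumps.

```python
def encode(message: float, rows: int):
    """Map a message in [0, 1) onto a line folded back and forth across the unit square."""
    position = message * rows
    row = min(int(position), rows - 1)
    along = position - row
    # Alternate rows run in opposite directions so the folded line stays connected.
    x = along if row % 2 == 0 else 1.0 - along
    y = (row + 0.5) / rows
    return x, y


def decode(x: float, y: float, rows: int) -> float:
    """Pick the strip containing the received point and read the message off that strip."""
    row = min(max(int(y * rows), 0), rows - 1)
    x = min(max(x, 0.0), 1.0)
    along = x if row % 2 == 0 else 1.0 - x
    return (row + along) / rows


def message_error(message: float, rows: int, noise_x: float, noise_y: float) -> float:
    """Absolute error in the recovered message for a given perturbation of the signal point."""
    x, y = encode(message, rows)
    return abs(decode(x + noise_x, y + noise_y, rows) - message)


if __name__ == "__main__":
    rows = 10  # strip spacing 0.1, so the critical cross-strip noise is 0.05
    for noise_y in (0.02, 0.04, 0.06):
        print(noise_y, message_error(0.225, rows, 0.0, noise_y))
```

Increasing `rows` lengthens the folded line and reduces the effect of small noise, but it also lowers the critical noise value at which neighboring strips are confused.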

Suppose, on the other hand, we wish to reduce dimensionality, i.e., to compress bandwidth or time or both. That is, we wish to send messages of band $W_1$ and duration $T_1$ over a channel with $TW < T_1W_1$. It has already been indicated that the effective dimensionality $D$ of the message space may be less than $2T_1W_1$ due to the properties of the source and of the destination. Hence we certainly need no more than $D$ dimensions in the signal space for a good mapping. To make this saving it is necessary, of course, to isolate the effective coordinates in the message space, and to send these only. The reduced bandwidth transmission of speech by the vocoder is a case of this kind.

The question arises, however, as to whether further reduction is possible. In our geometrical analogy, is it possible to map a space of high dimensionality onto one of lower dimensionality? The answer is that it is possible, with certain reservations. For example, the points of a square can be described by their two coordinates which could be written in decimal notation

$$x = .a_1 a_2 a_3 \cdots \qquad y = .b_1 b_2 b_3 \cdots \tag{14}$$

From these two numbers we can construct one number by taking digits alternately from $x$ and $y$:

$$z = .a_1 b_1 a_2 b_2 a_3 b_3 \cdots \tag{15}$$

A knowledge of $x$ and $y$ determines $z$, and $z$ determines both $x$ and $y$. Thus there is a one-to-one correspondence between the points of a square and the points of a line.
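The digit-interleaving construction of (14) and (15) can be sketched with finite digit strings. This is an illustration under stated assumptions: the coordinates are given as equal-length strings of decimal digits after the decimal point, and the names `interleave` and `deinterleave` are hypothetical.

```python
def interleave(x_digits: str, y_digits: str) -> str:
    """Build the digits of z by taking digits alternately from x and y."""
    if len(x_digits) != len(y_digits):
        raise ValueError("both coordinates need the same number of digits")
    return "".join(a + b for a, b in zip(x_digits, y_digits))


def deinterleave(z_digits: str):
    """Recover the digits of x (even positions) and y (odd positions) from z."""
    return z_digits[0::2], z_digits[1::2]


if __name__ == "__main__":
    z = interleave("141", "718")   # x = .141, y = .718
    print("z = ." + z)
    print(deinterleave(z))
```

A small change in a leading digit of `x` or `y` produces a small change in `z`, but the map from `z` back to the pair is far from smooth, which is the kind of discontinuity discussed in the text that follows.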


This type of mapping, due to the mathematician Cantor,

can easily be extended as far as we wish in the direction of

reducing dimensionality. A space of $n$ dimensions can be

mapped in a one-to-one way into a space of one dimension.

Physically, this means that the frequency-time product can

be reduced as far as we wish when there is no noise, with

exact recovery of the original messages.

In a less exact sense, a mapping of the type shown in

Fig. 4 maps a square into a line, provided we are not too

particular about recovering exactly the starting point, but

are satisfied with a nearby one. The sensitivity we noticed

before when increasing dimensionality now takes a different

form. In such a mapping, to reduce $TW$, there will be a

certain threshold effect when we perturb the message. As

we change the message a small amount, the corresponding

signal will change a small amount, until some critical

value is reached. At this point the signal will undergo a

considerable change. In topology it is shown7 that it is

not possible to map a region of higher dimension into a

region of lower dimension continuously. It is the necessary

discontinuity which produces the threshold effects we have

been describing for communication systems.

This discussion is relevant to the well-known “Hartley

law,” which states that “an upper limit

to the amount

of information which may be transmitted is set by the

sum for the various available lines of the product of the

line-frequency range of each by the time during which

it is available for use.” There is a sense in which this

statement is true, and another sense in which it is false.

It

is not possible to map the message space into the

signal space in a one-to-one, continuous manner (this is

known mathematically as a topological mapping) unless

the two spaces have the same dimensionality; i.e., unless $D = 2TW$. Hence, if we limit the transmitter and receiver to continuous one-to-one operations, there is a lower bound to the product $TW$ in the channel. This lower bound is determined, not by the product $W_1T_1$ of message bandwidth and time, but by the number of essential dimensions $D$, as

indicated in Section IV. There is, however, no good reason

for limiting the transmitter and receiver to topological

mappings. In fact, PCM and similar modulation systems

are highly discontinuous and come very close to the type

of mapping given by (14) and (15). It is desirable, then, to

find limits for what can be done with no restrictions on the

type of transmitter and receiver operations. These limits,

which will be derived in the following sections, depend on

the amount and nature of the noise in the channel, and on

the transmitter power, as well as on the bandwidth-time

product.

It is evident that any system, either to compress $TW$, or

to expand it and make full use of the additional volume,

must be highly nonlinear in character and fairly complex

because of the peculiar nature of the mappings involved.

VII. THE CAPACITY OF A CHANNEL IN THE

PRESENCE OF WHITE THERMAL NOISE

It is not difficult to set up certain quantitative relations that must hold when we change the product $TW$. Let us assume, for the present, that the noise in the system is a white thermal noise band limited to the band $W$, and that it is added to the transmitted signal to produce the received signal. A white thermal noise has the property that each sample is perturbed independently of all the others, and the distribution of each amplitude is Gaussian with standard deviation $\sigma = \sqrt{N}$, where $N$ is the average noise power. How many different signals can be distinguished at the receiving point in spite of the perturbations due to noise? A crude estimate can be obtained as follows. If the signal has a power $P$, then the perturbed signal will have a power $P + N$. The number of amplitudes that can be reasonably well distinguished is

$$K \sqrt{\frac{P + N}{N}} \tag{16}$$

where $K$ is a small constant in the neighborhood of unity depending on how the phrase “reasonably well” is interpreted. If we require very good separation, $K$ will be small, while toleration of occasional errors allows $K$ to be larger. Since in time $T$ there are $2TW$ independent amplitudes, the total number of reasonably distinct signals is

$$M = \left[ K \sqrt{\frac{P + N}{N}} \right]^{2TW}. \tag{17}$$

The number of bits that can be sent in this time is $\log_2 M$, and the rate of transmission is

$$\frac{\log_2 M}{T} = W \log_2 K^2 \frac{P + N}{N} \quad \text{(bits per second)}. \tag{18}$$
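The passage from the count of distinct signals in (17) to the rate in (18) is a single application of the rules for logarithms. Written out, with the symbols $K$, $P$, $N$, $T$, and $W$ as used in the crude estimate above:

```latex
\frac{\log_2 M}{T}
  = \frac{2TW}{T} \log_2 \left( K \sqrt{\frac{P + N}{N}} \right)
  = 2W \cdot \frac{1}{2} \log_2 \left( K^2 \, \frac{P + N}{N} \right)
  = W \log_2 K^2 \frac{P + N}{N}.
```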

The difficulty with this argument, apart from its general

approximate character,

lies in the tacit assumption that

for two signals to be distinguishable they must differ at

some sampling point by more than the expected noise.

The argument presupposes that PCM, or something very

similar to PCM, is the best method of encoding binary

digits into signals. Actually, two signals can be reliably

distinguished if they differ by only a small amount, provided

this difference is sustained over a long period of

time. Each sample of the received signal then gives a small

amount of statistical information concerning the transmitted

signal; in combination, these statistical indications result in

near certainty. This possibility allows an improvement of

about 8 dB in power over (18) with a reasonable definition

of reliable resolution of signals, as will appear later. We

will now make use of the geometrical representation to

determine the exact capacity of a noisy channel.

7 W. Hurewicz and H. Wallman, Dimension Theory. Princeton, NJ: Princeton Univ. Press, 1941.

Theorem 2: Let $P$ be the average transmitter power, and suppose the noise is white thermal noise of power $N$ in the band $W$. By sufficiently complicated encoding systems it is possible to transmit binary digits at a rate

$$C = W \log_2 \frac{P + N}{N} \tag{19}$$

with as small a frequency of errors as desired. It is not possible by any encoding method to send at a higher rate and have an arbitrarily low frequency of errors.
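The capacity formula of Theorem 2 is easy to evaluate. The sketch below is only a calculator for (19); the function name `channel_capacity` and the example figures (a 3000-cps band with signal power three times the noise power) are assumptions made for illustration.

```python
import math


def channel_capacity(bandwidth_hz: float, signal_power: float, noise_power: float) -> float:
    """Capacity W * log2((P + N) / N) in bits per second for white thermal noise."""
    if bandwidth_hz <= 0 or noise_power <= 0 or signal_power < 0:
        raise ValueError("bandwidth and noise power must be positive, signal power non-negative")
    return bandwidth_hz * math.log2((signal_power + noise_power) / noise_power)


if __name__ == "__main__":
    print(channel_capacity(3000.0, 3.0, 1.0))   # P/N = 3
    print(channel_capacity(6000.0, 3.0, 1.0))   # doubling the band doubles the capacity
```

Capacity grows linearly with bandwidth but only logarithmically with the power ratio.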

This shows that the rate $W \log_2 (P + N)/N$ measures in a sharply defined way the capacity of the channel for transmitting information. It is a rather surprising result, since one would expect that reducing the frequency of errors would require reducing the rate of transmission, and that the rate must approach zero as the error frequency does. Actually, we can send at the rate $C$ but reduce errors by using more involved encoding and longer delays at the transmitter and receiver. The transmitter will take long sequences of binary digits and represent this entire sequence by a particular signal function of long duration. The delay is required because the transmitter must wait for the full sequence before the signal is determined. Similarly, the receiver must wait for the full signal function before decoding into binary digits.

We now prove Theorem 2. In the geometrical representation each signal point is surrounded by a small region of uncertainty due to noise. With white thermal noise, the perturbations of the different samples (or coordinates) are all Gaussian and independent. Thus the probability of a perturbation having coordinates $x_1, x_2, \ldots, x_{2TW}$ (these are the differences between the original and received signal coordinates) is the product of the individual probabilities for the different coordinates

$$\prod_{n=1}^{2TW} \frac{1}{\sqrt{2\pi N}} \exp\left(-\frac{x_n^2}{2N}\right) = \frac{1}{(2\pi N)^{TW}} \exp\left(-\frac{1}{2N} \sum_{n=1}^{2TW} x_n^2\right).$$

Since this depends only on $\sum_{n=1}^{2TW} x_n^2$, the probability of a given perturbation depends only on the distance from the original signal and not on the direction. In other words, the region of uncertainty is spherical in nature. Although the limits of this region are not sharply defined for a small number of dimensions ($2TW$), the limits become more and more definite as the dimensionality increases. This is because the square of the distance a signal is perturbed is equal to $2TW$ times the average noise power during the time $T$. As $T$ increases, this average noise power must approach $N$. Thus, for large $T$, the perturbation will almost certainly be to some point near the surface of a sphere of radius $\sqrt{2TWN}$ centered at the original signal point. More precisely, by taking $T$ sufficiently large we can insure (with probability as near to one as we wish) that the perturbation will lie within a sphere of radius $\sqrt{2TW(N + \epsilon)}$ where $\epsilon$ is arbitrarily small. The noise regions can therefore be thought of roughly as sharply defined billiard balls, when $2TW$ is very large. The received signals have an average power $P + N$, and in the same sense must almost all lie on the surface of a sphere of radius $\sqrt{2TW(P + N)}$. How many different transmitted signals can be found which will be distinguishable? Certainly not more than the volume of the sphere of radius $\sqrt{2TW(P + N)}$ divided by the volume of a sphere of radius $\sqrt{2TWN}$, since overlap of the noise spheres results in confusion as to the message at the receiving point. The volume of an $n$-dimensional sphere8 of radius $r$ is

$$V = \frac{\pi^{n/2}}{\Gamma\!\left(\frac{n}{2} + 1\right)}\, r^n. \tag{20}$$

Hence, an upper limit for the number

signals is

of distinguishable

Consequently, the channel capacity is bounded by

This proves the last statement in the theorem.

log

(21)

(22)

log
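The "billiard ball" picture used in this part of the argument can be checked numerically. The sketch below is a simulation under stated assumptions rather than part of the proof: it draws independent Gaussian noise coordinates of variance $N$ and measures the squared length of the noise vector divided by the number of coordinates, which the argument says should settle near $N$ as the dimension grows. The names `normalized_noise_energy` and `energy_spread`, the seed, and the trial counts are all illustrative choices; `num_coords` plays the role of $2TW$.

```python
import math
import random
from typing import Tuple


def normalized_noise_energy(num_coords: int, noise_power: float, rng: random.Random) -> float:
    """Draw one noise vector and return its squared length divided by num_coords."""
    sigma = math.sqrt(noise_power)
    total = 0.0
    for _ in range(num_coords):
        sample = rng.gauss(0.0, sigma)
        total += sample * sample
    return total / num_coords


def energy_spread(num_coords: int, noise_power: float, trials: int, seed: int = 0) -> Tuple[float, float]:
    """Return (mean, standard deviation) of the normalized energy over many draws."""
    rng = random.Random(seed)
    values = [normalized_noise_energy(num_coords, noise_power, rng) for _ in range(trials)]
    mean = sum(values) / trials
    variance = sum((v - mean) ** 2 for v in values) / trials
    return mean, math.sqrt(variance)


if __name__ == "__main__":
    # num_coords stands in for 2TW; the noise power N is set to 1.
    for num_coords in (4, 64, 1024):
        mean, std = energy_spread(num_coords, noise_power=1.0, trials=300)
        print(num_coords, round(mean, 3), round(std, 3))
```

The mean stays close to $N$ at every dimension, while the spread shrinks roughly as $\sqrt{2/n}$ for $n$ coordinates. That shrinking spread is the sense in which the noise sphere of radius $\sqrt{2TWN}$ becomes sharply defined.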

To prove the first part of the theorem, we must show that there exists a
system of encoding which transmits $W \log_2 \frac{P+N}{N}$ binary digits
per second with a frequency of errors less than $\epsilon$ when
$\epsilon$ is arbitrarily small. The system to be considered operates as
follows. A long sequence of, say, $m$ binary digits is taken in at the
transmitter. There are $2^m$ such sequences, and each corresponds to a
particular signal function of duration $T$. Thus there are $M = 2^m$
different signal functions. When the sequence of $m$ is completed, the
transmitter starts sending the corresponding signal. At the receiver a
perturbed signal is received. The receiver compares this signal with each
of the $M$ possible transmitted signals and selects the one which is
nearest the perturbed signal (in the sense of rms error) as the one
actually sent. The receiver then constructs, as its output, the
corresponding sequence of binary digits. There will be, therefore, an
overall delay of $2T$ seconds.

To insure a frequency of errors less than $\epsilon$, the $M$ signal
functions must be reasonably well separated from each other. In fact, we
must choose them in such a way that, when a perturbed signal is received,
the nearest signal point (in the geometrical representation) is, with
probability greater than $1-\epsilon$, the actual original signal.

It turns out, rather surprisingly, that it is possible to choose our $M$
signal functions at random from the points inside the sphere of radius
$\sqrt{2TWP}$, and achieve the most that is possible. Physically, this
corresponds very nearly to using $M$ different samples of band-limited
white noise with power $P$ as signal functions.
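The encoding system just described (random signal points, with the receiver picking the nearest one) can be sketched as a small simulation. This is a toy illustration, not the construction used in the proof. It assumes that Gaussian coordinates of variance $P$ are an acceptable stand-in for points chosen at random inside the sphere of radius $\sqrt{2TWP}$, in line with the remark about samples of white noise. The function names, the block size of 16 coordinates, the 6 binary digits per block, and the seed are all hypothetical choices.

```python
import math
import random
from typing import List


def random_codebook(num_signals: int, num_coords: int, power: float,
                    rng: random.Random) -> List[List[float]]:
    """Draw signal points with independent Gaussian coordinates of variance `power`."""
    sigma = math.sqrt(power)
    return [[rng.gauss(0.0, sigma) for _ in range(num_coords)]
            for _ in range(num_signals)]


def nearest_signal(received: List[float], codebook: List[List[float]]) -> int:
    """Return the index of the codebook point closest to `received`."""
    def squared_distance(point: List[float]) -> float:
        return sum((r - c) ** 2 for r, c in zip(received, point))
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i]))


def error_frequency(m_bits: int, num_coords: int, power: float,
                    noise_power: float, trials: int, seed: int = 0) -> float:
    """Estimate how often nearest-point decoding picks the wrong signal."""
    rng = random.Random(seed)
    codebook = random_codebook(2 ** m_bits, num_coords, power, rng)
    noise_sigma = math.sqrt(noise_power)
    errors = 0
    for _ in range(trials):
        sent = rng.randrange(len(codebook))
        # Perturb every coordinate of the transmitted point with Gaussian noise.
        received = [c + rng.gauss(0.0, noise_sigma) for c in codebook[sent]]
        if nearest_signal(received, codebook) != sent:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    # 6 binary digits per block of 16 coordinates (16 stands in for 2TW).
    print("P/N = 10 :", error_frequency(6, 16, 10.0, 1.0, 200))
    print("P/N = 0.1:", error_frequency(6, 16, 0.1, 1.0, 200))
```

With $2TW = 16$ and $P/N = 10$, the quantity $TW \log_2 (1 + P/N)$ is about 27.7 digits per block, so 6 digits per block is well below the limit and errors should be rare. With $P/N = 0.1$ the same quantity is about 1.1 digits per block, so 6 digits per block is far above the limit and most blocks should be decoded wrongly.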

A particular selection of

points in the sphere corre-

sponds to a particular encoding system. The general scheme

of the proof is to consider all such selections, and to show

that the frequency of errors averaged over all the particular

selections is less than . This will show that there are

8 D. M. Y. Sommerville, An Introduction to the Geometry of N

Dimensions. New York: Dutton, 1929, p. 135.

SHANNON: COMMUNICATION IN THE PRESENCE OF NOISE

453

�

frequency of errors will be less than . This will be true if

Now

positive. Consequently, (25) will be true if

is always greater than

when

is

(25)

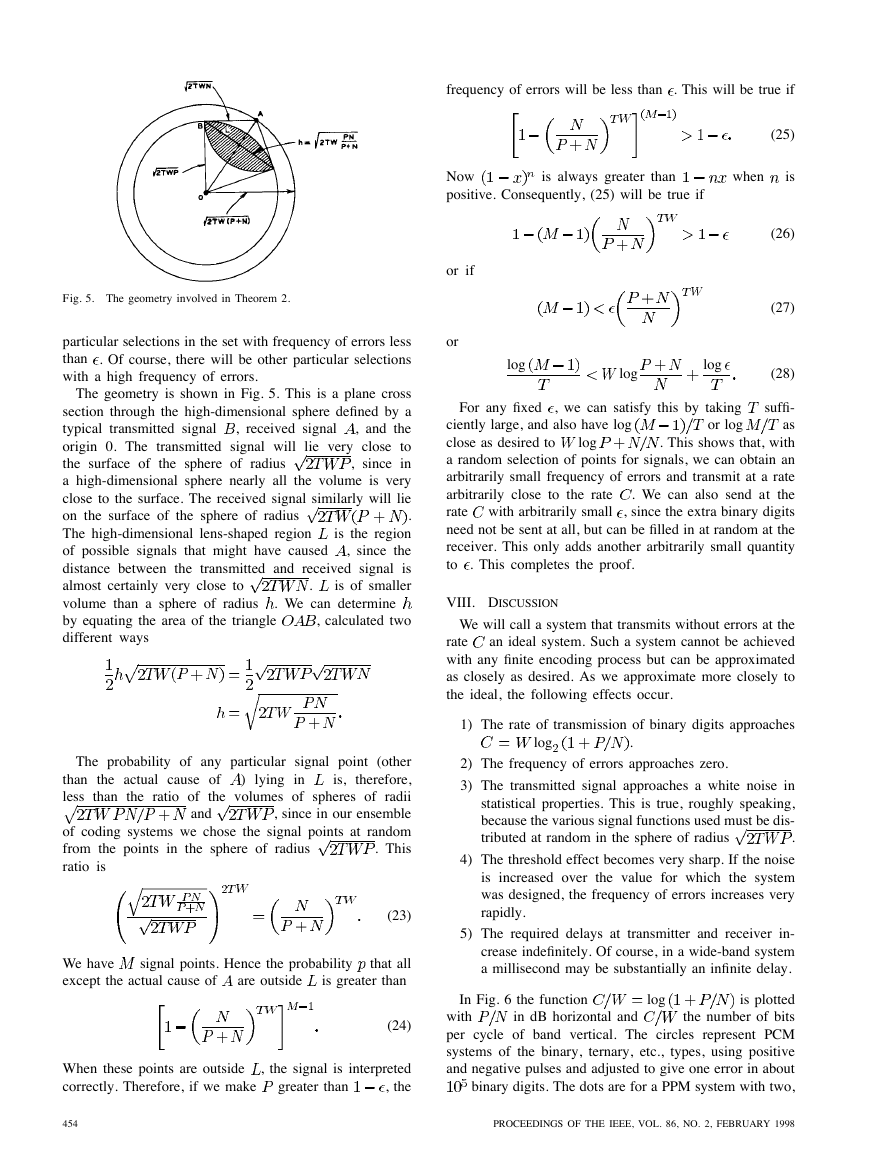

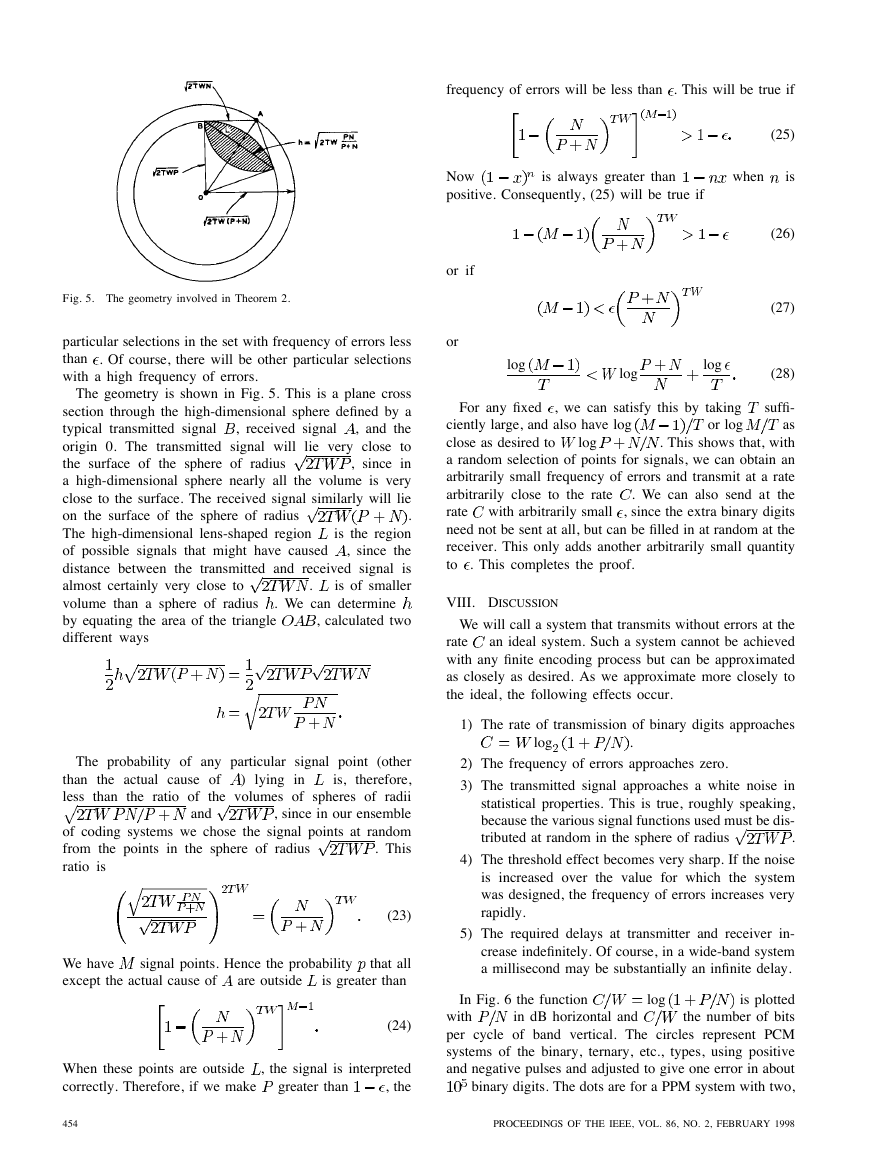

Fig. 5. The geometry involved in Theorem 2.

particular selections in the set with frequency of errors less

than . Of course, there will be other particular selections

with a high frequency of errors.

or if

or

log

log

log

(26)

(27)

(28)

, received signal

The geometry is shown in Fig. 5. This is a plane cross

section through the high-dimensional sphere defined by a

typical transmitted signal

, and the

lie very close to

origin 0. The transmitted signal will

the surface of the sphere of radius

, since in

a high-dimensional sphere nearly all the volume is very

close to the surface. The received signal similarly will lie

on the surface of the sphere of radius

.

The high-dimensional lens-shaped region

is the region

, since the

of possible signals that might have caused

distance between the transmitted and received signal is

almost certainly very close to

is of smaller

volume than a sphere of radius

by equating the area of the triangle

different ways

. We can determine

, calculated two

.

) lying in

The probability of any particular signal point (other

therefore,

than the actual cause of

less than the ratio of the volumes of spheres of radii

, since in our ensemble

of coding systems we chose the signal points at random

. This

from the points in the sphere of radius

ratio is

and

is,

(23)

We have

except the actual cause of

signal points. Hence the probability

are outside

that all

is greater than

When these points are outside

correctly. Therefore, if we make

, the signal is interpreted

, the

greater than

(24)

log

For any fixed , we can satisfy this by taking

or log

suffi-

as

ciently large, and also have log

close as desired to

. This shows that, with

a random selection of points for signals, we can obtain an

arbitrarily small frequency of errors and transmit at a rate

arbitrarily close to the rate

. We can also send at the

rate with arbitrarily small

, since the extra binary digits

need not be sent at all, but can be filled in at random at the

receiver. This only adds another arbitrarily small quantity

to . This completes the proof.
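The way the delay $T$ trades against the error frequency in the closing step can be made concrete. The sketch below assumes the bound on the error frequency from the proof, $(M-1)\left(\frac{N}{P+N}\right)^{TW}$, with $M = 2^{RT}$ for a rate $R$ below $C$. Replacing $M-1$ by $M$ gives the slightly looser but simpler bound $2^{T(R-C)}$. The function names and example figures are illustrative assumptions, and the sketch ignores the requirement that $M$ be an integer.

```python
import math


def capacity(bandwidth_hz: float, signal_power: float, noise_power: float) -> float:
    """C = W * log2((P + N) / N), in binary digits per second."""
    return bandwidth_hz * math.log2((signal_power + noise_power) / noise_power)


def log2_error_bound(rate: float, duration_s: float, bandwidth_hz: float,
                     signal_power: float, noise_power: float) -> float:
    """Return log2 of M * (N / (P + N))**(T * W) with M = 2**(R * T).

    This equals T * (R - C). It is slightly looser than the bound with
    M - 1 in place of M, and is negative whenever R < C.
    """
    c = capacity(bandwidth_hz, signal_power, noise_power)
    return duration_s * (rate - c)


def sufficient_duration(rate: float, epsilon: float, bandwidth_hz: float,
                        signal_power: float, noise_power: float) -> float:
    """Return a duration T for which 2**(T * (R - C)) equals epsilon (needs R < C)."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("epsilon must lie strictly between 0 and 1")
    c = capacity(bandwidth_hz, signal_power, noise_power)
    if rate >= c:
        raise ValueError("the bound is only useful for rates below C")
    return math.log2(1.0 / epsilon) / (c - rate)


if __name__ == "__main__":
    # Hypothetical figures: W = 3000 Hz, P/N = 1000, R = 25000 digits per second.
    t = sufficient_duration(25000.0, 1e-6, 3000.0, 1000.0, 1.0)
    print("sufficient T in seconds:", t)
```

Because the exponent is $T(R-C)$, the duration needed for a given $\epsilon$ grows without limit as $R$ approaches $C$, which matches the statement that the rate can come arbitrarily close to $C$ only at the cost of longer delays.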

VIII. DISCUSSION

We will call a system that transmits without errors at the rate $C$ an
ideal system. Such a system cannot be achieved with any finite encoding
process but can be approximated as closely as desired. As we approximate
more closely to the ideal, the following effects occur.

1) The rate of transmission of binary digits approaches
$C = W \log_2 \left(1 + \frac{P}{N}\right)$.

2) The frequency of errors approaches zero.

3) The transmitted signal approaches a white noise in statistical
properties. This is true, roughly speaking, because the various signal
functions used must be distributed at random in the sphere of radius
$\sqrt{2TWP}$.

4) The threshold effect becomes very sharp. If the noise is increased
over the value for which the system was designed, the frequency of errors
increases very rapidly.

5) The required delays at transmitter and receiver increase indefinitely.
Of course, in a wide-band system a millisecond may be substantially an
infinite delay.
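The limiting rate in item 1 is often easier to read per cycle of bandwidth. As a small sketch, the helpers below convert between a signal-to-noise ratio in decibels and the ideal number of binary digits per cycle, $C/W = \log_2 (1 + P/N)$. The function names are hypothetical, and the decibel conversion $10 \log_{10} (P/N)$ is the usual power-ratio convention, assumed here.

```python
import math


def bits_per_cycle(snr_db: float) -> float:
    """Return C/W = log2(1 + P/N) for a signal-to-noise ratio given in dB."""
    snr = 10.0 ** (snr_db / 10.0)
    return math.log2(1.0 + snr)


def required_snr_db(bits: float) -> float:
    """Return the P/N in dB at which the ideal system reaches `bits` per cycle."""
    if bits <= 0:
        raise ValueError("bits per cycle must be positive")
    return 10.0 * math.log10(2.0 ** bits - 1.0)


if __name__ == "__main__":
    for bits in (1.0, 2.0, 4.0, 8.0):
        print(bits, "bits per cycle needs about", round(required_snr_db(bits), 2), "dB")
```

At high signal-to-noise ratios each additional binary digit per cycle costs close to 3 dB, since $2^{b} - 1$ roughly doubles with each unit increase in $b$.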

In Fig. 6 the function $\frac{C}{W} = \log_2 \left(1 + \frac{P}{N}\right)$
is plotted with $\frac{P}{N}$ in dB horizontal and $\frac{C}{W}$ the
number of bits per cycle of band vertical. The circles represent PCM

systems of the binary, ternary, etc., types, using positive and negative
pulses and adjusted to give one error in about $10^5$ binary digits. The
dots are for a PPM system with two,

