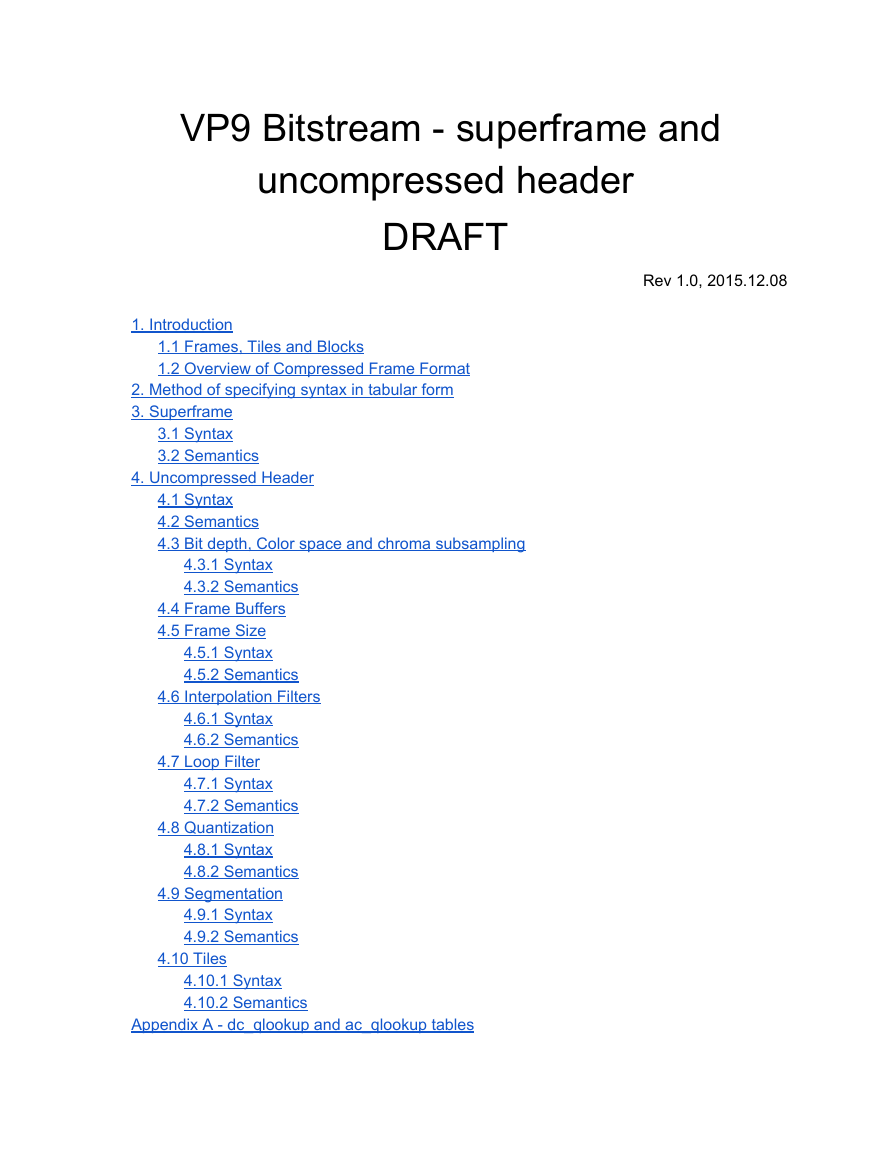

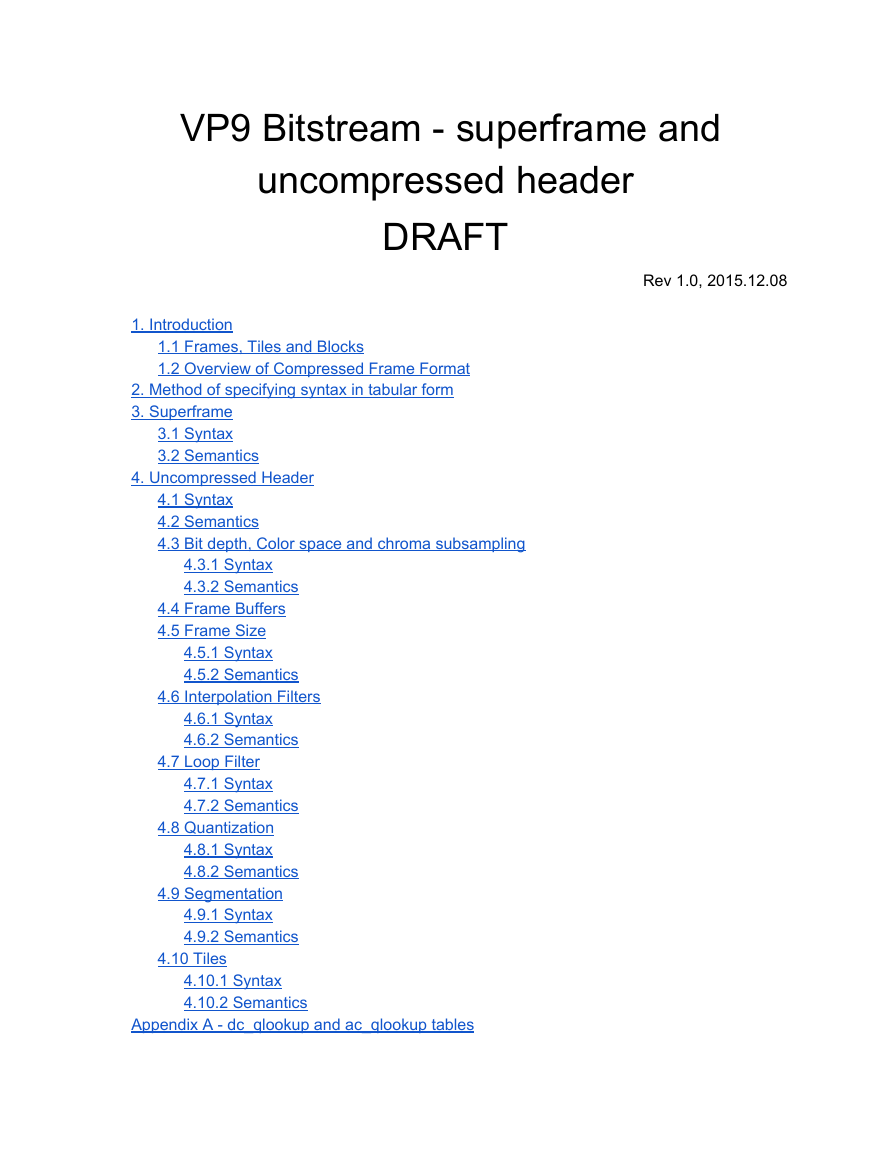

VP9 Bitstream superframe and uncompressed header

DRAFT

Rev 1.0, 2015.12.08

1. Introduction

1.1 Frames, Tiles and Blocks

1.2 Overview of Compressed Frame Format

2. Method of specifying syntax in tabular form

3. Superframe

3.1 Syntax

3.2 Semantics

4. Uncompressed Header

4.1 Syntax

4.2 Semantics

4.3 Bit depth, Color space and chroma subsampling

4.3.1 Syntax

4.3.2 Semantics

4.4 Frame Buffers

4.5 Frame Size

4.5.1 Syntax

4.5.2 Semantics

4.6 Interpolation Filters

4.6.1 Syntax

4.6.2 Semantics

4.7 Loop Filter

4.7.1 Syntax

4.7.2 Semantics

4.8 Quantization

4.8.1 Syntax

4.8.2 Semantics

4.9 Segmentation

4.9.1 Syntax

4.9.2 Semantics

4.10 Tiles

4.10.1 Syntax

4.10.2 Semantics

Appendix A dc_qlookup and ac_qlookup tables


1. Introduction

This document describes part of the VP9 bitstream format. It covers the high-level format of frames and superframes, as well as the full format of the frame uncompressed header and the superframe index.

1.1 Frames, Tiles and Blocks

VP9 is based on decomposition of video image frames into rectangular pixel blocks of different sizes, prediction of such blocks using previously reconstructed blocks, and transform coding of the residual signal.

There are only two frame types in VP9. Intra frames, or key frames, are decoded without reference to any other frame in a sequence. Inter frames are encoded with reference to previously encoded frames or reference buffers.

Video image blocks are grouped in tiles. Based on the layout of the tiles within image frames, tiles can be categorized into column tiles and row tiles. Column tiles are partitioned from image frames vertically into columns, and row tiles are partitioned from image frames horizontally into rows. Column tiles are independently coded and decodable units of the video frame. Each column tile can be decoded completely independently. Row tiles are interdependent. There must be at least one tile per frame. Tiles are then broken into 64x64 superblocks that are coded in raster order within the video frame.
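
As a rough illustration of the 64x64 superblock decomposition, the sketch below counts the superblock columns and rows needed to cover a frame. The function name `superblock_grid` is hypothetical, and the sketch assumes that a partial superblock at the right or bottom edge counts as a full one. It does not model tile boundaries.

```python
from typing import Tuple

SUPERBLOCK_SIZE = 64  # superblocks are 64x64 pixels


def superblock_grid(width: int, height: int) -> Tuple[int, int]:
    """Return (columns, rows) of 64x64 superblocks covering a frame.

    Assumption: edge superblocks that are only partly inside the
    frame are still counted, so both divisions round up.
    """
    cols = (width + SUPERBLOCK_SIZE - 1) // SUPERBLOCK_SIZE
    rows = (height + SUPERBLOCK_SIZE - 1) // SUPERBLOCK_SIZE
    return cols, rows


cols, rows = superblock_grid(1920, 1080)  # 30 columns, 17 rows
```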

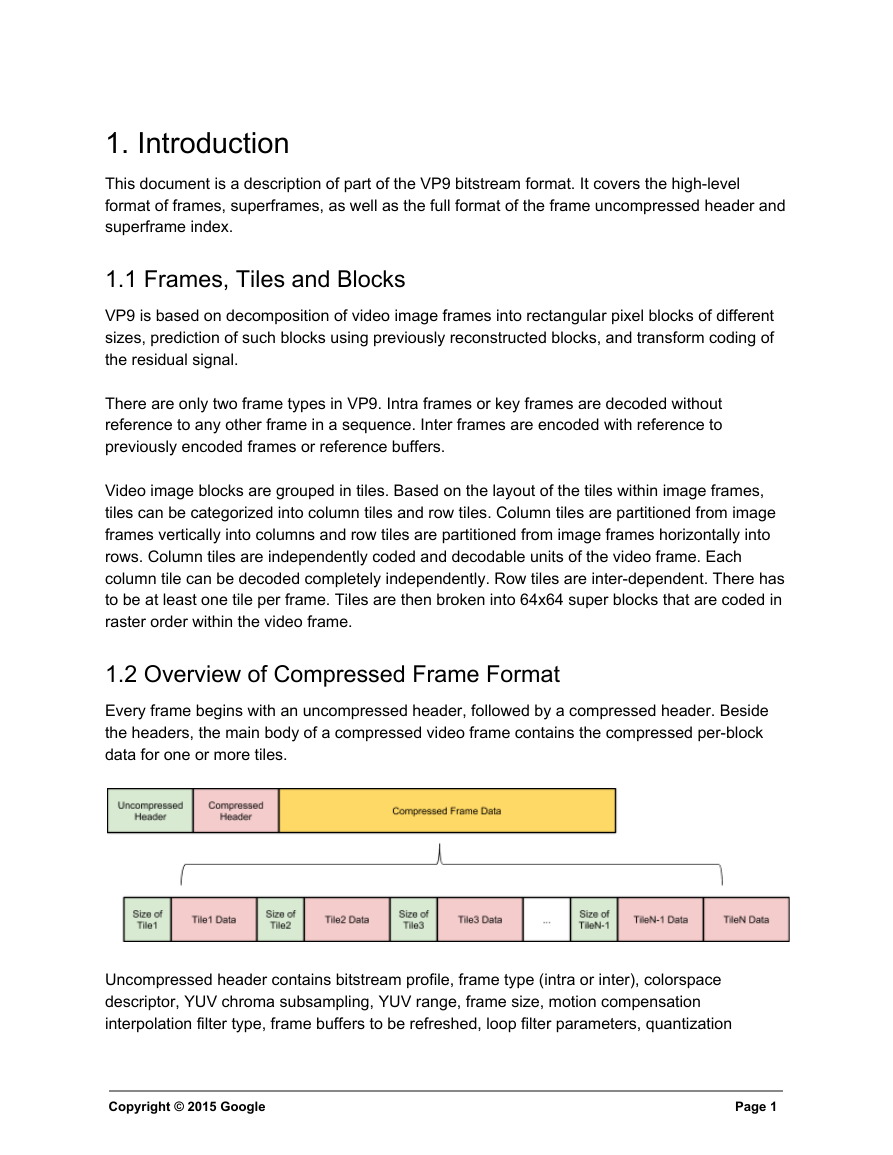

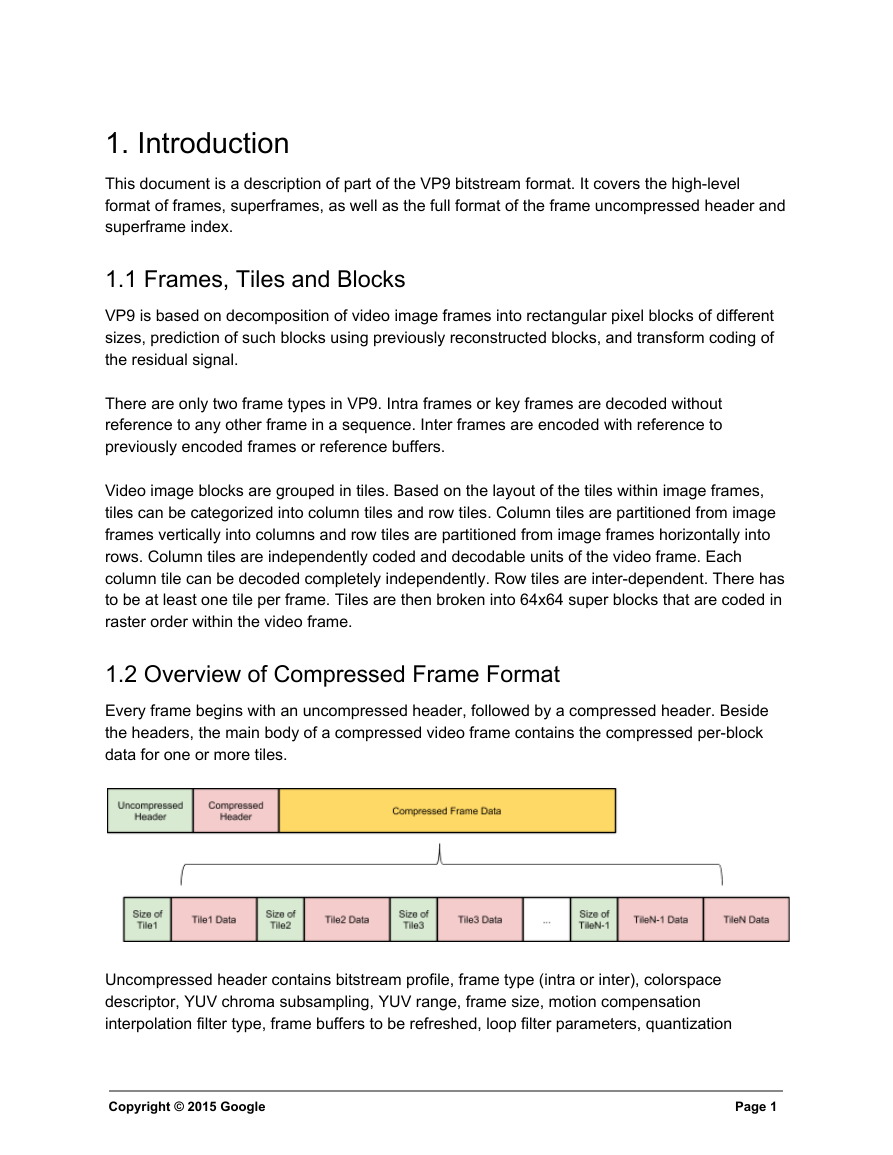

1.2 Overview of Compressed Frame Format

Every frame begins with an uncompressed header, followed by a compressed header. Besides the headers, the main body of a compressed video frame contains the compressed per-block data for one or more tiles.

The uncompressed header contains the bitstream profile, frame type (intra or inter), colorspace descriptor, YUV chroma subsampling, YUV range, frame size, motion compensation interpolation filter type, frame buffers to be refreshed, loop filter parameters, quantization parameters, segmentation and tiling information. There is also frame context information and binary flags indicating the use of error resilient mode, parallel decoding mode, high precision motion vector mode, and intra only mode. See section 4 for details.

Copyright © 2015 Google

The compressed header contains the frame transform mode, probabilities to decode the transform size for each block within the frame, probabilities to decode transform coefficients, probabilities to decode modes and motion vectors, and so on.

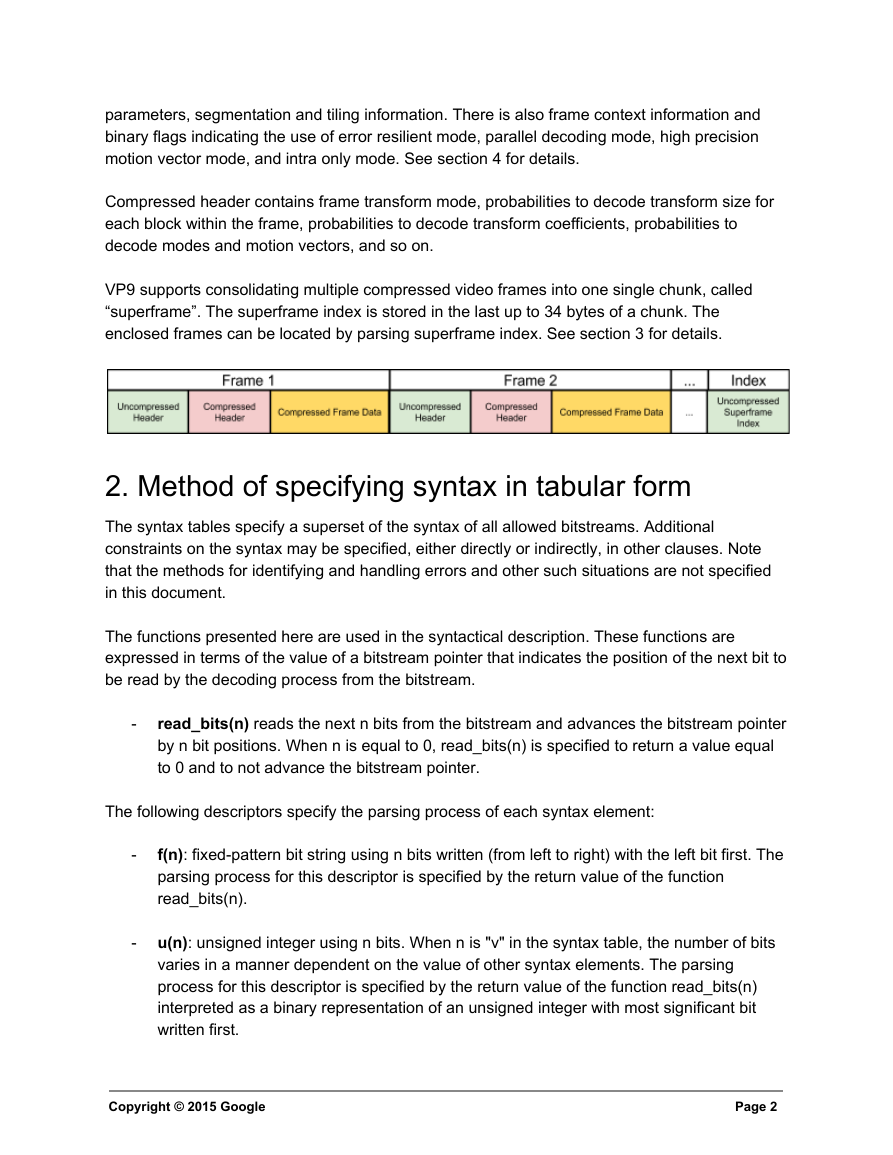

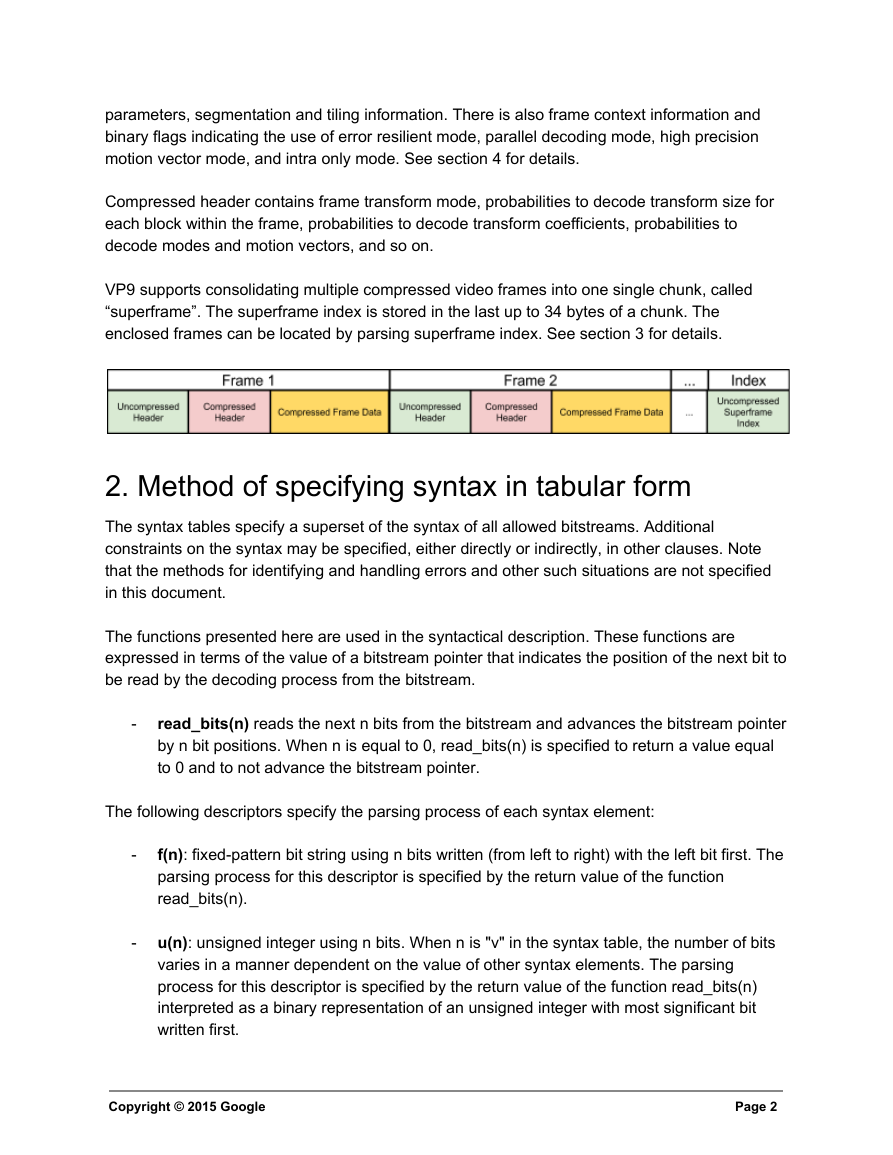

VP9 supports consolidating multiple compressed video frames into a single chunk, called a “superframe”. The superframe index is stored in up to the last 34 bytes of the chunk. The enclosed frames can be located by parsing the superframe index. See section 3 for details.

2. Method of specifying syntax in tabular form

The syntax tables specify a superset of the syntax of all allowed bitstreams. Additional constraints on the syntax may be specified, either directly or indirectly, in other clauses. Note that the methods for identifying and handling errors and other such situations are not specified in this document.

The functions presented here are used in the syntactical description. These functions are expressed in terms of the value of a bitstream pointer that indicates the position of the next bit to be read by the decoding process from the bitstream.

read_bits(n) reads the next n bits from the bitstream and advances the bitstream pointer by n bit positions. When n is equal to 0, read_bits(n) is specified to return a value equal to 0 and to not advance the bitstream pointer.

The following descriptors specify the parsing process of each syntax element:

f(n): fixed-pattern bit string using n bits written (from left to right) with the left bit first. The parsing process for this descriptor is specified by the return value of the function read_bits(n).

u(n): unsigned integer using n bits. When n is "v" in the syntax table, the number of bits varies in a manner dependent on the value of other syntax elements. The parsing process for this descriptor is specified by the return value of the function read_bits(n) interpreted as a binary representation of an unsigned integer with most significant bit written first.
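
The behaviour of read_bits(n) and the u(n) descriptor can be sketched as a small bit reader. The class name `BitReader` is hypothetical, and the sketch assumes that bits within each byte are consumed from the most significant bit down. Raising `EOFError` at the end of the data is also an assumption, since this document does not specify error handling.

```python
class BitReader:
    """Minimal MSB-first bit reader over a bytes object (illustrative only)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # bitstream pointer, counted in bits from the start

    def read_bits(self, n: int) -> int:
        """Read n bits, most significant bit first, and advance the pointer."""
        if self.pos + n > len(self.data) * 8:
            # Assumption: error handling is not defined by the document.
            raise EOFError("read past end of bitstream")
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            bit = (byte >> (7 - (self.pos & 7))) & 1
            value = (value << 1) | bit
            self.pos += 1
        return value  # for n == 0 this is 0 and the pointer is unchanged


reader = BitReader(bytes([0b10100110]))
marker = reader.read_bits(2)  # top two bits: 0b10
rest = reader.read_bits(6)    # remaining six bits: 0b100110
```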

Copyright © 2015 Google

Page 2

�

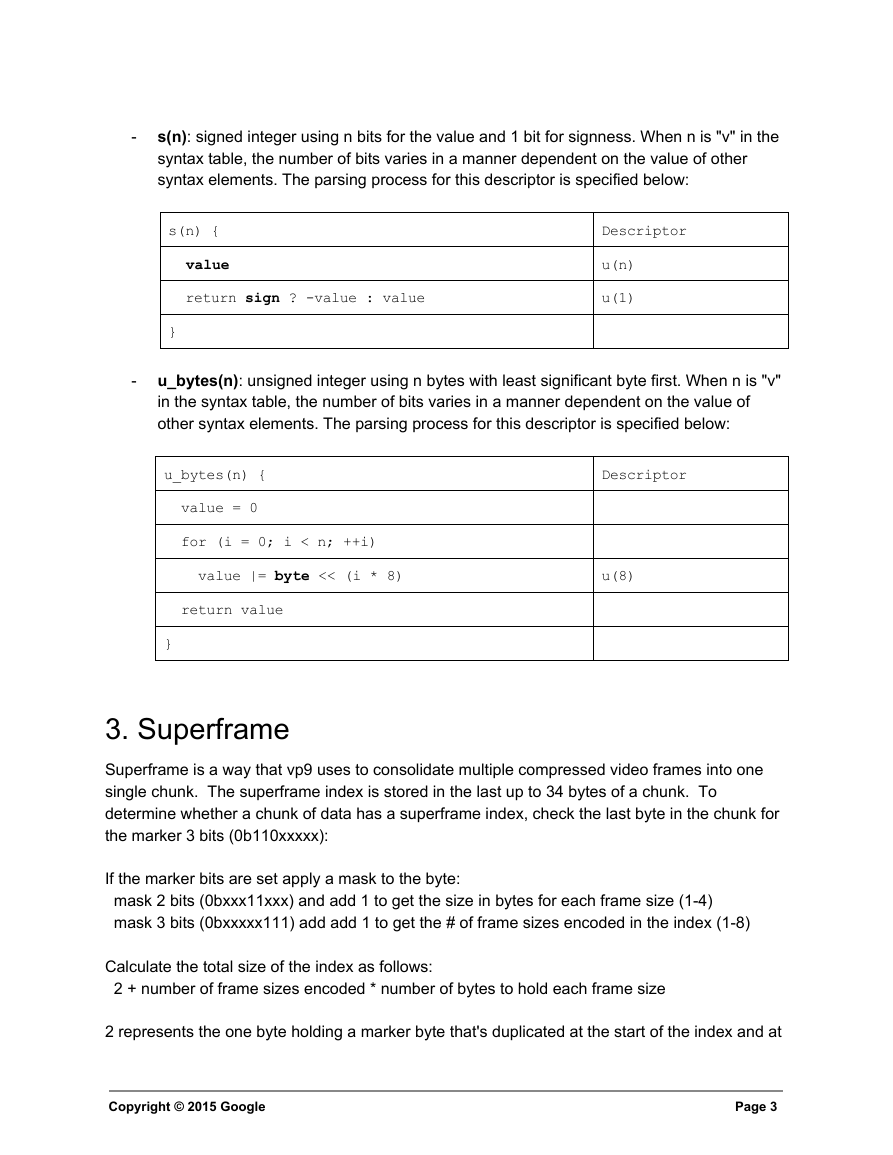

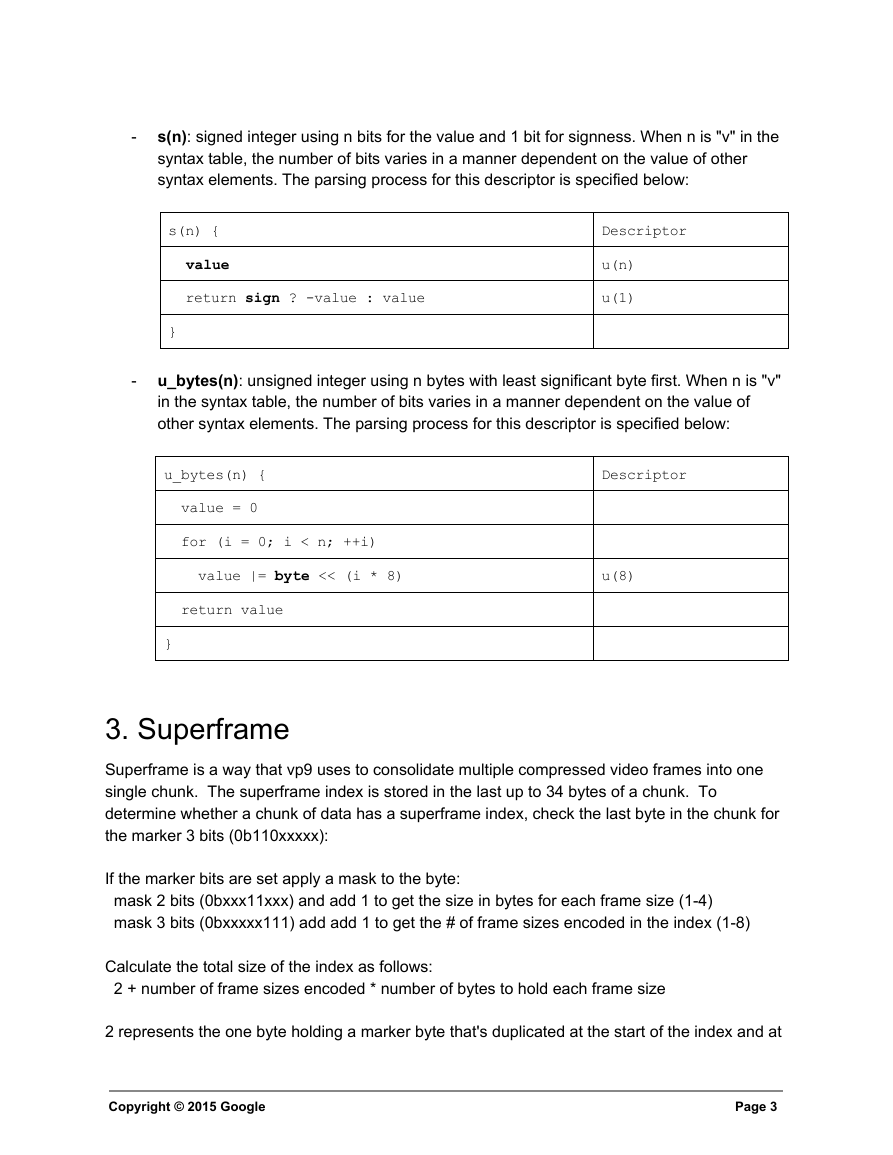

s(n): signed integer using n bits for the value and 1 bit for the sign. When n is "v" in the syntax table, the number of bits varies in a manner dependent on the value of other syntax elements. The parsing process for this descriptor is specified below:

    s(n) {                               Descriptor
        value                            u(n)
        sign                             u(1)
        return sign ? -value : value
    }
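
A minimal sketch of the s(n) descriptor follows. It assumes that the sign bit comes after the n value bits and that a set sign bit negates the value. `read_signed` and `make_reader` are hypothetical names, and `make_reader` serves bits from a text string purely to keep the example short.

```python
from typing import Callable


def read_signed(read_bits: Callable[[int], int], n: int) -> int:
    """Parse s(n): an n-bit magnitude followed by a 1-bit sign flag."""
    value = read_bits(n)
    sign = read_bits(1)
    return -value if sign else value


def make_reader(bits: str) -> Callable[[int], int]:
    """Hypothetical helper: serve bits from a string such as '01011'."""
    pos = 0

    def read_bits(n: int) -> int:
        nonlocal pos
        chunk = bits[pos:pos + n]
        pos += n
        return int(chunk, 2) if chunk else 0

    return read_bits


negative = read_signed(make_reader("01011"), 4)  # magnitude 0b0101, sign bit 1
positive = read_signed(make_reader("01010"), 4)  # magnitude 0b0101, sign bit 0
```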

u_bytes(n): unsigned integer using n bytes with least significant byte first. When n is "v" in the syntax table, the number of bytes varies in a manner dependent on the value of other syntax elements. The parsing process for this descriptor is specified below:

    u_bytes(n) {                         Descriptor
        value = 0
        for (i = 0; i < n; ++i)
            value |= byte << (i * 8)     u(8)
        return value
    }
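
The u_bytes(n) loop translates almost directly into code. In the sketch below the function name `read_u_bytes` and its `offset` parameter are illustrative assumptions. The result is the same as a little-endian integer decode.

```python
def read_u_bytes(data: bytes, offset: int, n: int) -> int:
    """Parse u_bytes(n): n bytes combined with the least significant byte first."""
    value = 0
    for i in range(n):
        value |= data[offset + i] << (i * 8)
    return value


size = read_u_bytes(bytes([0x34, 0x12]), 0, 2)  # 0x1234
```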

3. Superframe

A superframe is the mechanism VP9 uses to consolidate multiple compressed video frames into a single chunk. The superframe index is stored in up to the last 34 bytes of the chunk. To determine whether a chunk of data has a superframe index, check the top 3 bits of the last byte in the chunk for the marker (0b110xxxxx).

If the marker bits are set, apply the following masks to that byte:

mask 2 bits (0bxxx11xxx), shift them down, and add 1 to get the size in bytes of each frame size (1-4)

mask 3 bits (0bxxxxx111) and add 1 to get the number of frame sizes encoded in the index (1-8)

Calculate the total size of the index as follows:

2 + number of frame sizes encoded * number of bytes to hold each frame size

The 2 accounts for the marker byte, which appears twice: once at the start of the index and once at the end of the index.

Go to the first byte of the superframe index, and check that it matches the last byte of the superframe index.
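
The detection steps above can be sketched as follows. The function name `superframe_index_size` is hypothetical, and returning `None` for a chunk that is too short or whose two marker bytes differ is an assumption, since this document does not define error handling.

```python
from typing import Optional


def superframe_index_size(chunk: bytes) -> Optional[int]:
    """Return the superframe index size in bytes, or None if there is no index."""
    if not chunk:
        return None
    last = chunk[-1]
    if (last & 0b11100000) != 0b11000000:   # marker bits 0b110xxxxx
        return None
    bytes_per_size = ((last >> 3) & 0b11) + 1   # 1 to 4
    num_frames = (last & 0b111) + 1             # 1 to 8
    index_size = 2 + num_frames * bytes_per_size
    if len(chunk) < index_size:
        return None
    if chunk[-index_size] != last:              # first index byte must match
        return None
    return index_size


# Two frames of 3 and 2 bytes, with each size stored in 2 bytes.
chunk = b"AAA" + b"BB" + bytes([0xC9, 3, 0, 2, 0, 0xC9])
index_size = superframe_index_size(chunk)  # 2 + 2 * 2 = 6
```

With 8 frames and 4 bytes per size the same formula gives 2 + 8 * 4 = 34, which is the maximum index size quoted in the text.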

No valid frame can have 0b110xxxxx in the last byte. In the encoder we ensure this by appending a zero byte to the data in this case.

The frames themselves are concatenated one after another. To find the second piece of compressed data in a chunk, read the size of the first frame from the index and advance that many bytes from the start of the data.

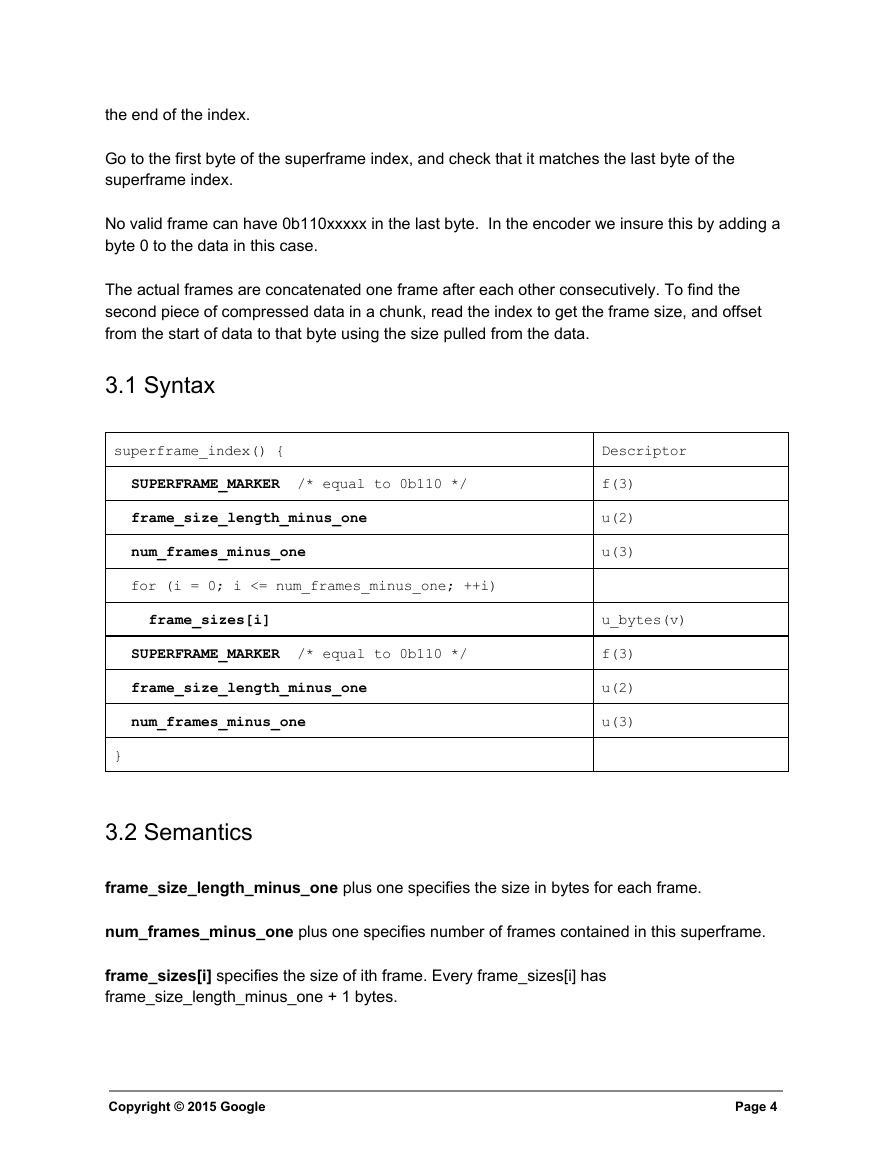

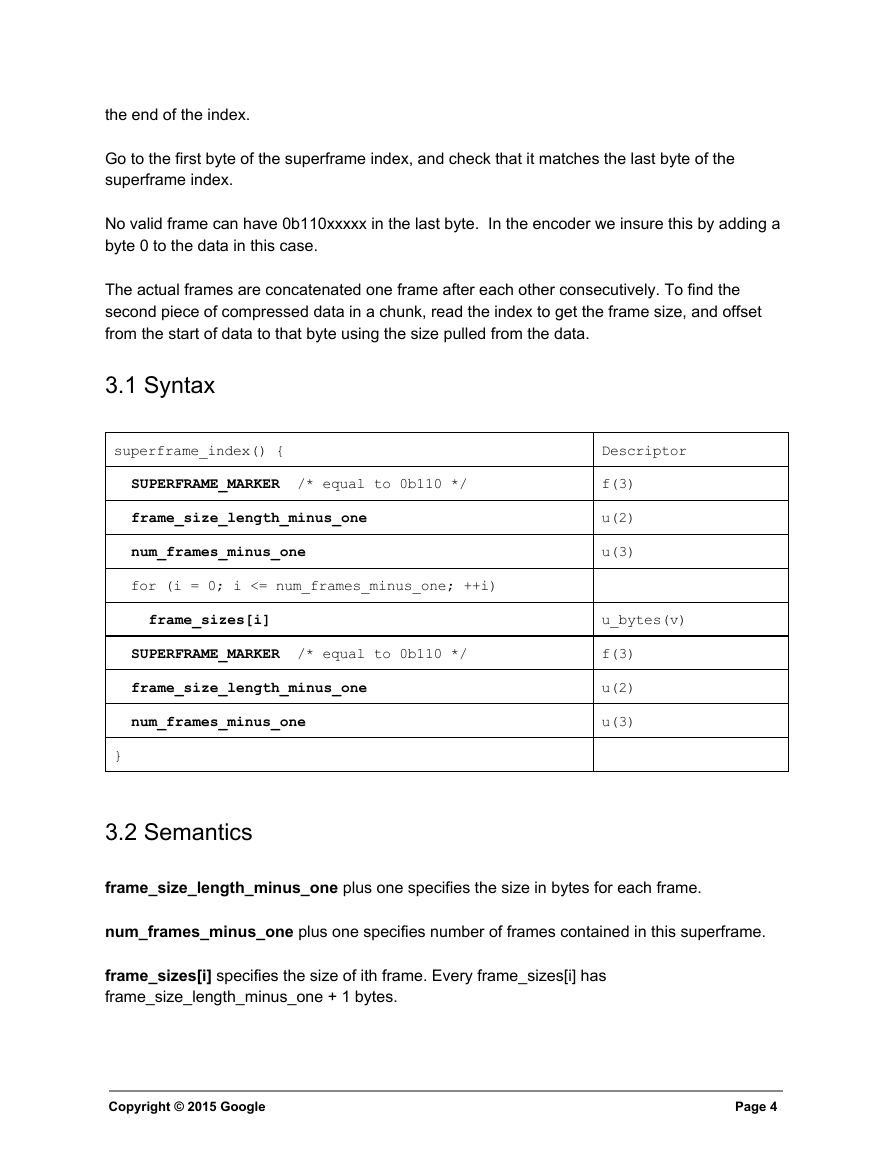

3.1 Syntax

    superframe_index() {                                 Descriptor
        SUPERFRAME_MARKER /* equal to 0b110 */           f(3)
        frame_size_length_minus_one                      u(2)
        num_frames_minus_one                             u(3)
        for (i = 0; i <= num_frames_minus_one; ++i)
            frame_sizes[i]                               u_bytes(v)
        SUPERFRAME_MARKER /* equal to 0b110 */           f(3)
        frame_size_length_minus_one                      u(2)
        num_frames_minus_one                             u(3)
    }

3.2 Semantics

frame_size_length_minus_one plus one specifies the number of bytes used to code each frame size.

num_frames_minus_one plus one specifies the number of frames contained in this superframe.

frame_sizes[i] specifies the size in bytes of the ith frame. Every frame_sizes[i] occupies frame_size_length_minus_one + 1 bytes.
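
Putting the syntax and semantics together, one possible way to split a chunk into its frames is sketched below. The function name `split_superframe` is hypothetical. Treating a chunk without a well-formed index as a single frame, and raising `ValueError` when the sizes overrun the data, are assumptions rather than behaviour defined here.

```python
from typing import List


def split_superframe(chunk: bytes) -> List[bytes]:
    """Split a chunk into frames; a chunk with no index is a single frame."""
    if not chunk or (chunk[-1] >> 5) != 0b110:
        return [chunk]
    marker = chunk[-1]
    size_len = ((marker >> 3) & 0b11) + 1   # frame_size_length_minus_one + 1
    num_frames = (marker & 0b111) + 1       # num_frames_minus_one + 1
    index_size = 2 + num_frames * size_len
    if len(chunk) < index_size or chunk[-index_size] != marker:
        return [chunk]
    index_start = len(chunk) - index_size
    frames = []
    offset = 0                # start of the next frame in the chunk
    pos = index_start + 1     # first frame_sizes[i] entry, after the marker
    for _ in range(num_frames):
        size = int.from_bytes(chunk[pos:pos + size_len], "little")
        pos += size_len
        if offset + size > index_start:
            raise ValueError("frame sizes exceed the available data")
        frames.append(chunk[offset:offset + size])
        offset += size
    return frames


chunk = b"AAA" + b"BB" + bytes([0xC9, 3, 0, 2, 0, 0xC9])
frames = split_superframe(chunk)  # [b"AAA", b"BB"]
```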

Copyright © 2015 Google

Page 4

�

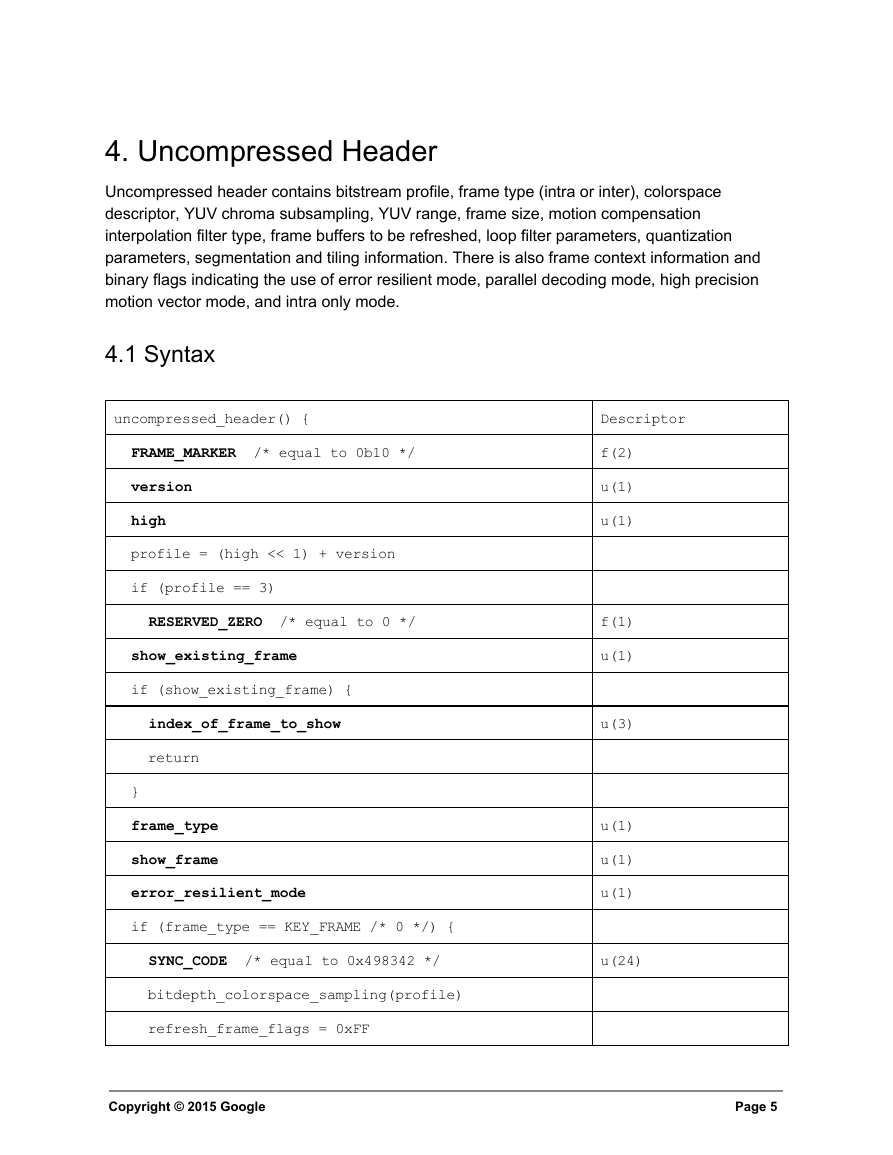

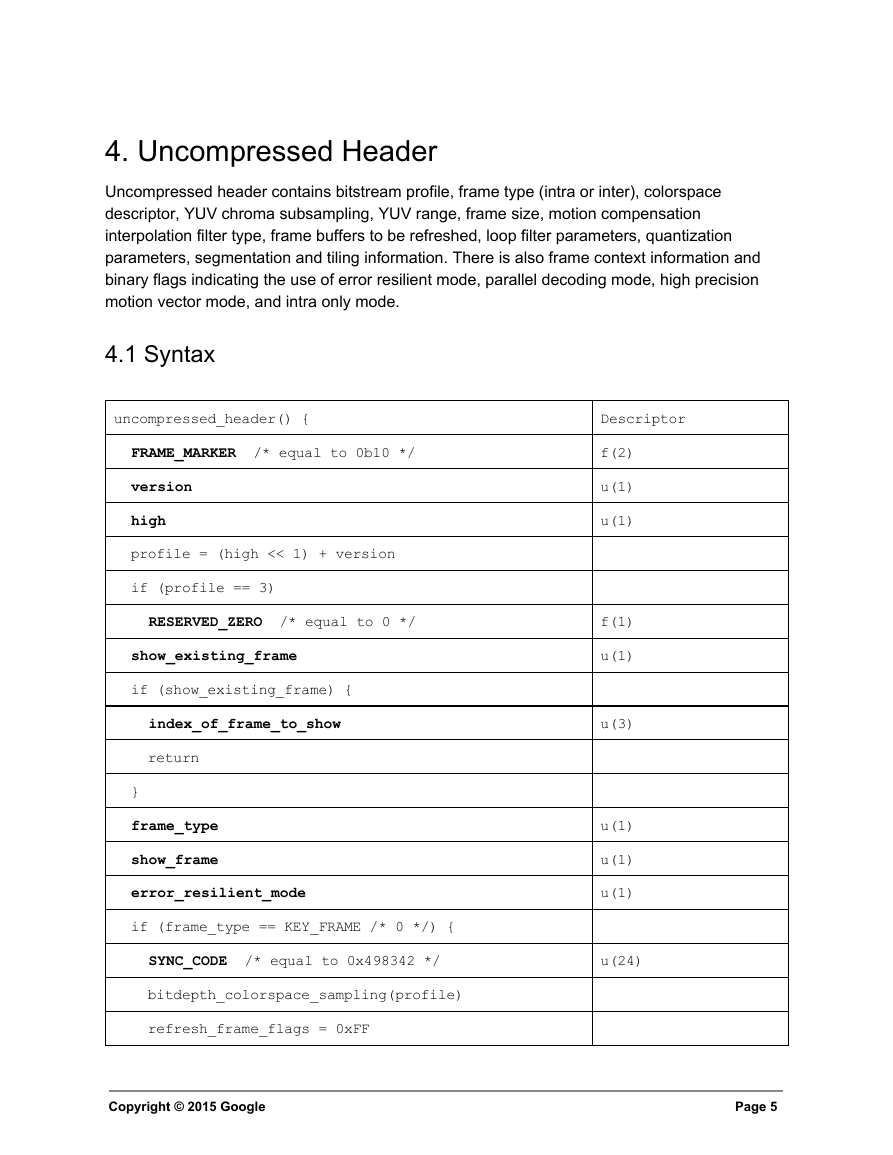

4. Uncompressed Header

The uncompressed header contains the bitstream profile, frame type (intra or inter), colorspace descriptor, YUV chroma subsampling, YUV range, frame size, motion compensation interpolation filter type, frame buffers to be refreshed, loop filter parameters, quantization parameters, segmentation and tiling information. There is also frame context information and binary flags indicating the use of error resilient mode, parallel decoding mode, high precision motion vector mode, and intra only mode.

4.1 Syntax

uncompressed_header() {

FRAME_MARKER /* equal to 0b10 */

version

high

profile = (high << 1) + version

if (profile == 3)

RESERVED_ZERO /* equal to 0 */

show_existing_frame

if (show_existing_frame) {

index_of_frame_to_show

return

}

frame_type

show_frame

error_resilient_mode

if (frame_type == KEY_FRAME /* 0 */) {

SYNC_CODE /* equal to 0x498342 */

bitdepth_colorspace_sampling(profile)

refresh_frame_flags = 0xFF

Copyright © 2015 Google

Descriptor

f(2)

u(1)

u(1)

f(1)

u(1)

u(3)

u(1)

u(1)

u(1)

u(24)

Page 5

�

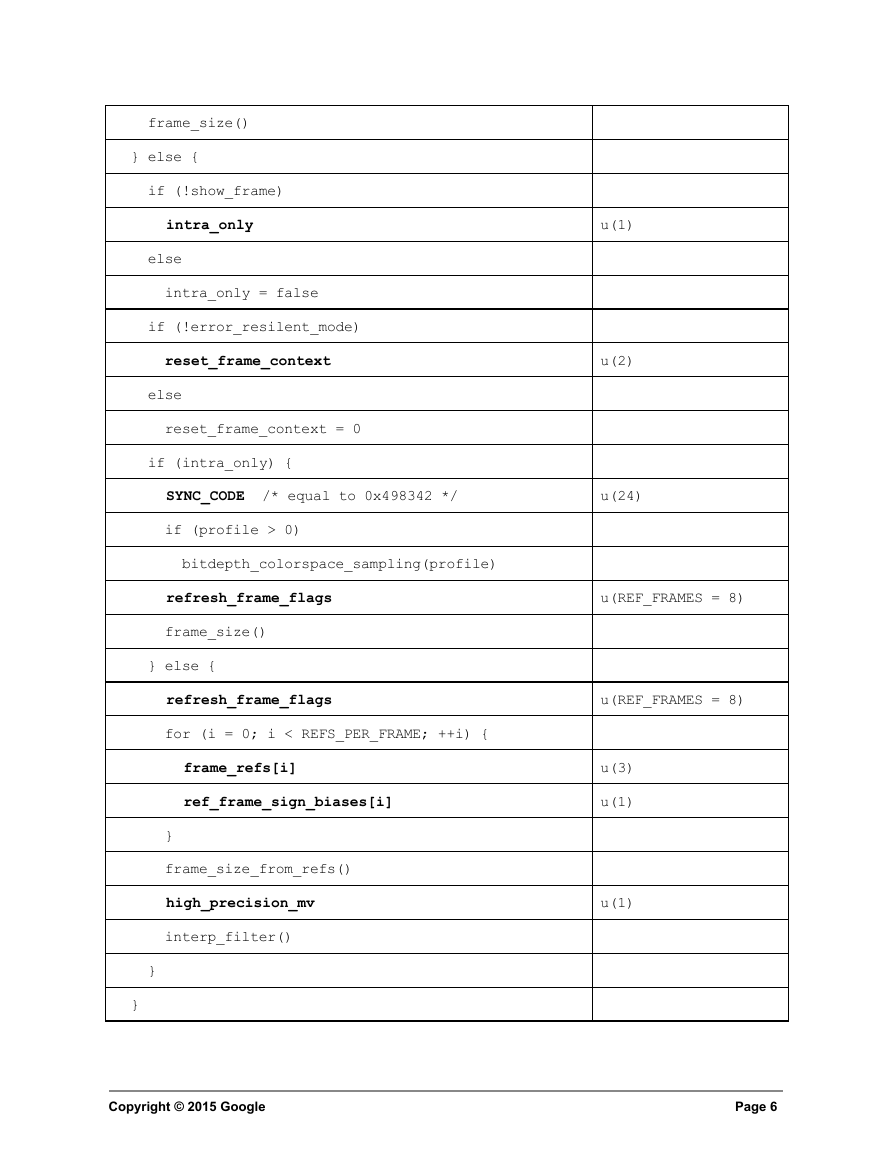

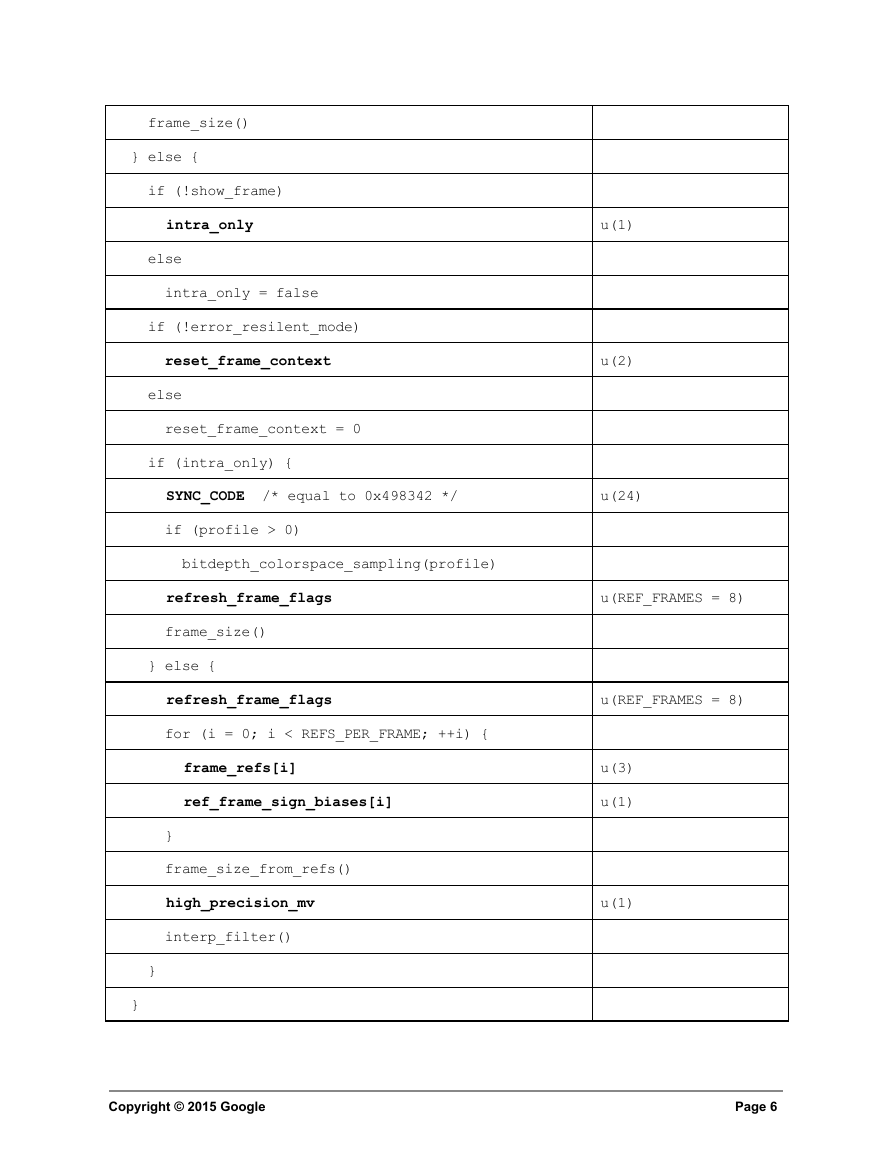

frame_size()

} else {

if (!show_frame)

intra_only

else

intra_only = false

if (!error_resilent_mode)

reset_frame_context

else

reset_frame_context = 0

if (intra_only) {

SYNC_CODE /* equal to 0x498342 */

if (profile > 0)

bitdepth_colorspace_sampling(profile)

refresh_frame_flags

frame_size()

} else {

refresh_frame_flags

for (i = 0; i < REFS_PER_FRAME; ++i) {

frame_refs[i]

ref_frame_sign_biases[i]

}

frame_size_from_refs()

high_precision_mv

interp_filter()

}

}

u(1)

u(2)

u(24)

u(REF_FRAMES = 8)

u(REF_FRAMES = 8)

u(3)

u(1)

u(1)

Copyright © 2015 Google

Page 6

�

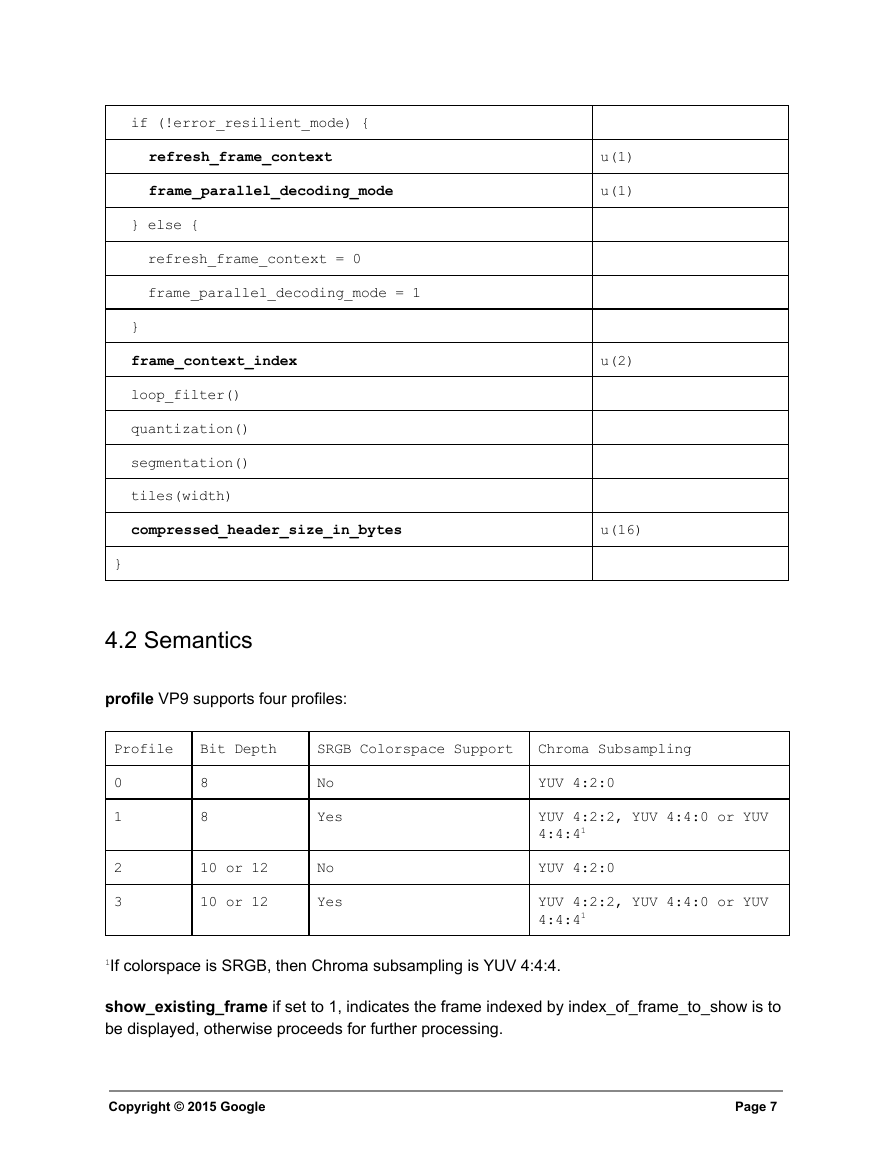

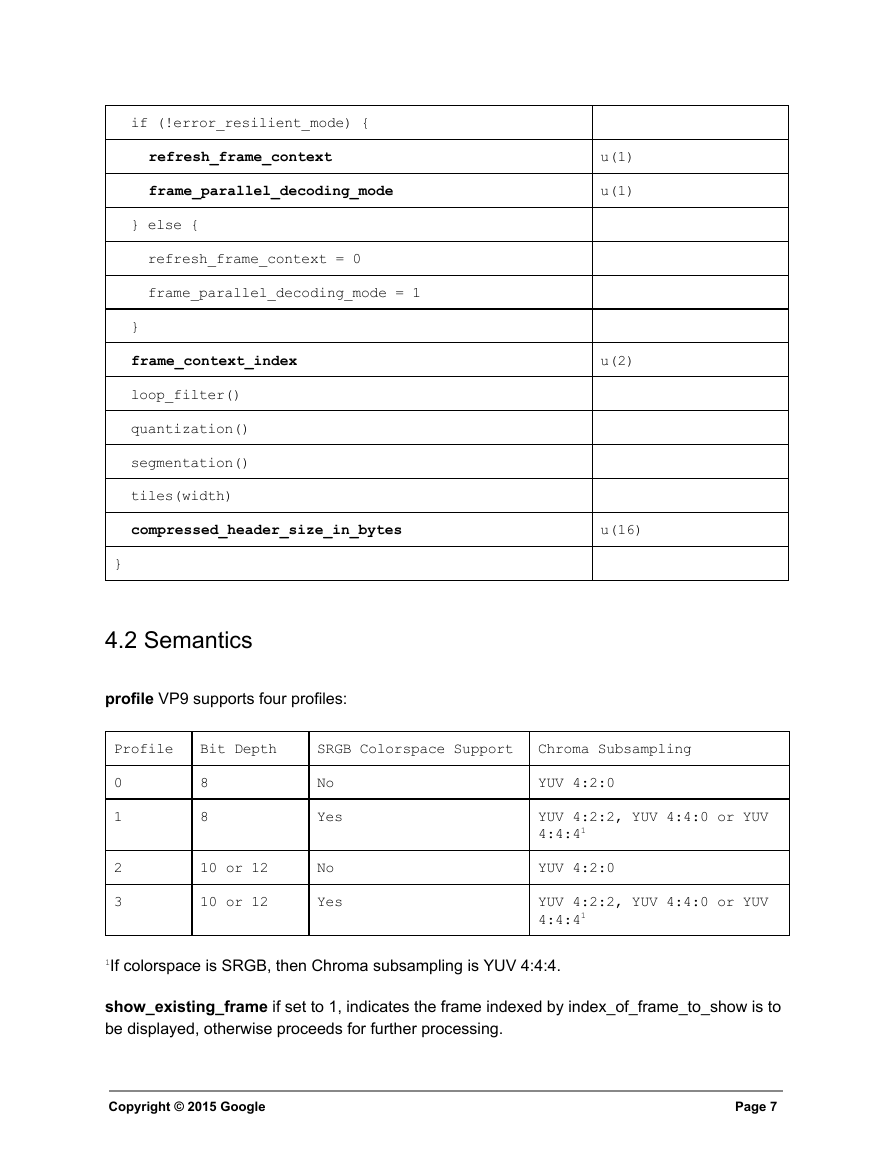

if (!error_resilient_mode) {

refresh_frame_context

frame_parallel_decoding_mode

} else {

refresh_frame_context = 0

frame_parallel_decoding_mode = 1

}

frame_context_index

loop_filter()

quantization()

segmentation()

tiles(width)

compressed_header_size_in_bytes

}

u(1)

u(1)

u(2)

u(16)
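
To make the start of this syntax concrete, the sketch below parses only the leading fields, up to and including the key frame sync code. The function name `parse_header_prefix`, the dictionary layout, and the use of `ValueError` are illustrative assumptions. The sketch assumes MSB-first bit order within each byte and stops before bitdepth_colorspace_sampling().

```python
from typing import Dict

FRAME_MARKER = 0b10
SYNC_CODE = 0x498342
KEY_FRAME = 0


def parse_header_prefix(data: bytes) -> Dict[str, int]:
    """Parse the first fields of uncompressed_header() (partial sketch)."""
    pos = 0  # bit position in data

    def read_bits(n: int) -> int:
        nonlocal pos
        value = 0
        for _ in range(n):
            value = (value << 1) | ((data[pos >> 3] >> (7 - (pos & 7))) & 1)
            pos += 1
        return value

    if read_bits(2) != FRAME_MARKER:
        raise ValueError("bad frame marker")
    version = read_bits(1)
    high = read_bits(1)
    profile = (high << 1) + version
    if profile == 3 and read_bits(1) != 0:
        raise ValueError("reserved bit must be zero")
    fields = {"profile": profile, "show_existing_frame": read_bits(1)}
    if fields["show_existing_frame"]:
        fields["index_of_frame_to_show"] = read_bits(3)
        return fields
    fields["frame_type"] = read_bits(1)
    fields["show_frame"] = read_bits(1)
    fields["error_resilient_mode"] = read_bits(1)
    if fields["frame_type"] == KEY_FRAME and read_bits(24) != SYNC_CODE:
        raise ValueError("bad sync code")
    return fields


# Profile 0 key frame, shown, not error resilient: 0b10000010 then the sync code.
key = parse_header_prefix(bytes([0x82, 0x49, 0x83, 0x42]))
```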

4.2 Semantics

profile specifies the bitstream profile. VP9 supports four profiles:

| Profile | Bit Depth | SRGB Colorspace Support | Chroma Subsampling |
|---|---|---|---|
| 0 | 8 | No | YUV 4:2:0 |
| 1 | 8 | Yes | YUV 4:2:2, YUV 4:4:0 or YUV 4:4:4¹ |
| 2 | 10 or 12 | No | YUV 4:2:0 |
| 3 | 10 or 12 | Yes | YUV 4:2:2, YUV 4:4:0 or YUV 4:4:4¹ |

¹ If colorspace is SRGB, then chroma subsampling is YUV 4:4:4.
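
For quick checks, the profile table can be held as a lookup structure. The dictionary layout and the helper name `profile_allows` below are illustrative assumptions, not part of the format. The values are transcribed from the table.

```python
# Profile capabilities transcribed from the profile table.
PROFILES = {
    0: {"bit_depths": (8,), "srgb": False, "subsampling": ("4:2:0",)},
    1: {"bit_depths": (8,), "srgb": True, "subsampling": ("4:2:2", "4:4:0", "4:4:4")},
    2: {"bit_depths": (10, 12), "srgb": False, "subsampling": ("4:2:0",)},
    3: {"bit_depths": (10, 12), "srgb": True, "subsampling": ("4:2:2", "4:4:0", "4:4:4")},
}


def profile_allows(profile: int, bit_depth: int, subsampling: str) -> bool:
    """Check a bit depth and chroma subsampling against the profile table."""
    caps = PROFILES[profile]
    return bit_depth in caps["bit_depths"] and subsampling in caps["subsampling"]


ok = profile_allows(2, 10, "4:2:0")       # True
not_ok = profile_allows(0, 8, "4:4:4")    # False: profile 0 is 4:2:0 only
```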

show_existing_frame equal to 1 indicates that the frame indexed by index_of_frame_to_show is to be displayed; otherwise, parsing of the uncompressed header continues.
