Front Cover

Structured Parallel Programming: Patterns for Efficient Computation

Copyright

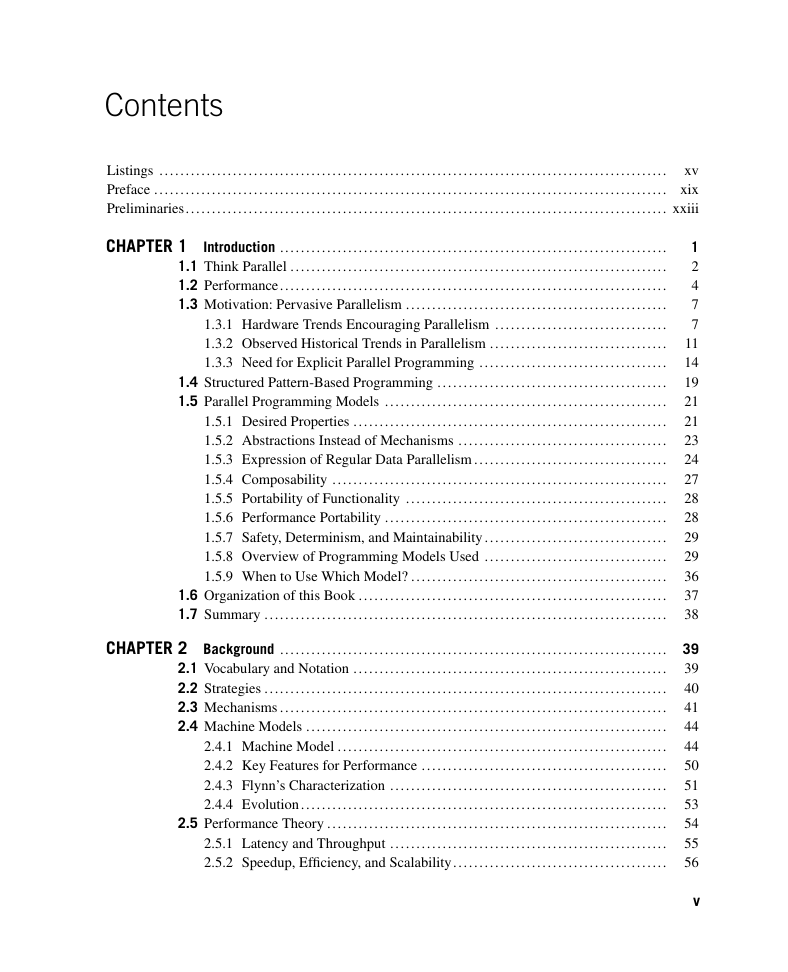

Table of Contents

Listings

Preface

Preliminaries

1 Introduction

1.1 Think Parallel

1.2 Performance

1.3 Motivation: Pervasive Parallelism

1.3.1 Hardware Trends Encouraging Parallelism

1.3.2 Observed Historical Trends in Parallelism

1.3.3 Need for Explicit Parallel Programming

1.4 Structured Pattern-Based Programming

1.5 Parallel Programming Models

1.5.1 Desired Properties

1.5.2 Abstractions Instead of Mechanisms

1.5.3 Expression of Regular Data Parallelism

1.5.4 Composability

1.5.5 Portability of Functionality

1.5.6 Performance Portability

1.5.7 Safety, Determinism, and Maintainability

1.5.8 Overview of Programming Models Used

Cilk Plus

Threading Building Blocks (TBB)

OpenMP

Array Building Blocks (ArBB)

OpenCL

1.5.9 When to Use Which Model?

1.6 Organization of this Book

1.7 Summary

2 Background

2.1 Vocabulary and Notation

2.2 Strategies

2.3 Mechanisms

2.4 Machine Models

2.4.1 Machine Model

Instruction Parallelism

Memory Hierarchy

Virtual Memory

Multiprocessor Systems

Attached Devices

2.4.2 Key Features for Performance

Data Locality

Parallel Slack

2.4.3 Flynn's Characterization

2.4.4 Evolution

2.5 Performance Theory

2.5.1 Latency and Throughput

2.5.2 Speedup, Efficiency, and Scalability

2.5.3 Power

2.5.4 Amdahl's Law

2.5.5 Gustafson-Barsis' Law

2.5.6 Work-Span Model

2.5.7 Asymptotic Complexity

2.5.8 Asymptotic Speedup and Efficiency

2.5.9 Little's Formula

2.6 Pitfalls

2.6.1 Race Conditions

2.6.2 Mutual Exclusion and Locks

2.6.3 Deadlock

2.6.4 Strangled Scaling

2.6.5 Lack of Locality

2.6.6 Load Imbalance

2.6.7 Overhead

2.7 Summary

I Patterns

3 Patterns

3.1 Nesting Pattern

3.2 Structured Serial Control Flow Patterns

3.2.1 Sequence

3.2.2 Selection

3.2.3 Iteration

3.2.4 Recursion

3.3 Parallel Control Patterns

3.3.1 Fork–Join

3.3.2 Map

3.3.3 Stencil

3.3.4 Reduction

3.3.5 Scan

3.3.6 Recurrence

3.4 Serial Data Management Patterns

3.4.1 Random Read and Write

3.4.2 Stack Allocation

3.4.3 Heap Allocation

3.4.4 Closures

3.4.5 Objects

3.5 Parallel Data Management Patterns

3.5.1 Pack

3.5.2 Pipeline

3.5.3 Geometric Decomposition

3.5.4 Gather

3.5.5 Scatter

3.6 Other Parallel Patterns

3.6.1 Superscalar Sequences

3.6.2 Futures

3.6.3 Speculative Selection

3.6.4 Workpile

3.6.5 Search

3.6.6 Segmentation

3.6.7 Expand

3.6.8 Category Reduction

3.6.9 Term Graph Rewriting

3.7 Non-Deterministic Patterns

3.7.1 Branch and Bound

3.7.2 Transactions

3.8 Programming Model Support for Patterns

3.8.1 Cilk Plus

Nesting, Recursion, Fork–Join

Reduction

Map, Workpile

Scatter, Gather

3.8.2 Threading Building Blocks

Nesting, Recursion, Fork–Join

Map

Workpile

Reduction

Scan

Pipeline

Speculative Selection, Branch and Bound

3.8.3 OpenMP

Map, Workpile

Reduction

Fork–Join

Stencil, Geometric Decomposition, Gather, Scatter

3.8.4 Array Building Blocks

Map

Reduction, Scan

Scatter, Gather

Pack

3.8.5 OpenCL

Map

Gather

Scatter

Reduction, Scan, Pack, Expand

Stencil

Workpile

Superscalar Sequences

Geometric Decomposition

Closures

3.9 Summary

4 Map

4.1 Map

4.2 Scaled Vector Addition (SAXPY)

4.2.1 Description of the Problem

4.2.2 Serial Implementation

4.2.3 TBB

4.2.4 Cilk Plus

4.2.5 Cilk Plus with Array Notation

4.2.6 OpenMP

4.2.7 ArBB Using Vector Operations

4.2.8 ArBB Using Elemental Functions

4.2.9 OpenCL

4.3 Mandelbrot

4.3.1 Description of the Problem

4.3.2 Serial Implementation

4.3.3 TBB

4.3.4 Cilk Plus

4.3.5 Cilk Plus with Array Notations

4.3.6 OpenMP

4.3.7 ArBB

4.3.8 OpenCL

4.4 Sequence of Maps versus Map of Sequence

4.5 Comparison of Parallel Models

4.6 Related Patterns

4.6.1 Stencil

4.6.2 Workpile

4.6.3 Divide-and-conquer

4.7 Summary

5 Collectives

5.1 Reduce

5.1.1 Reordering Computations

5.1.2 Vectorization

5.1.3 Tiling

5.1.4 Precision

5.1.5 Implementation

OpenCL

TBB

Cilk Plus

ArBB

OpenMP

5.2 Fusing Map and Reduce

5.2.1 Explicit Fusion in TBB

5.2.2 Explicit Fusion in Cilk Plus

5.2.3 Automatic Fusion in ArBB

5.3 Dot Product

5.3.1 Description of the Problem

5.3.2 Serial Implementation

5.3.3 SSE Intrinsics

5.3.4 TBB

5.3.5 Cilk Plus

5.3.6 OpenMP

5.3.7 ArBB

5.4 Scan

5.4.1 Cilk Plus

5.4.2 TBB

5.4.3 ArBB

5.4.4 OpenMP

5.5 Fusing Map and Scan

5.6 Integration

5.6.1 Description of the Problem

5.6.2 Serial Implementation

5.6.3 Cilk Plus

5.6.4 OpenMP

5.6.5 TBB

5.6.6 ArBB

5.7 Summary

6 Data Reorganization

6.1 Gather

6.1.1 General Gather

6.1.2 Shift

6.1.3 Zip

6.2 Scatter

6.2.1 Atomic Scatter

6.2.2 Permutation Scatter

6.2.3 Merge Scatter

6.2.4 Priority Scatter

6.3 Converting Scatter to Gather

6.4 Pack

6.5 Fusing Map and Pack

6.6 Geometric Decomposition and Partition

6.7 Array of Structures vs. Structures of Arrays

6.8 Summary

7 Stencil and Recurrence

7.1 Stencil

7.2 Implementing Stencil with Shift

7.3 Tiling Stencils for Cache

7.4 Optimizing Stencils for Communication

7.5 Recurrence

7.6 Summary

8 Fork–Join

8.1 Definition

8.2 Programming Model Support for Fork–Join

8.2.1 Cilk Plus Support for Fork–Join

8.2.2 TBB Support for Fork–Join

8.2.3 OpenMP Support for Fork–Join

8.3 Recursive Implementation of Map

8.4 Choosing Base Cases

8.5 Load Balancing

8.6 Complexity of Parallel Divide-and-Conquer

8.7 Karatsuba Multiplication of Polynomials

8.7.1 Note on Allocating Scratch Space

8.8 Cache Locality and Cache-Oblivious Algorithms

8.9 Quicksort

8.9.1 Cilk Quicksort

8.9.2 TBB Quicksort

8.9.3 Work and Span for Quicksort

8.10 Reductions and Hyperobjects

8.11 Implementing Scan with Fork–Join

8.12 Applying Fork–Join to Recurrences

8.12.1 Analysis

8.12.2 Flat Fork–Join

8.13 Summary

9 Pipeline

9.1 Basic Pipeline

9.2 Pipeline with Parallel Stages

9.3 Implementation of a Pipeline

9.4 Programming Model Support for Pipelines

9.4.1 Pipeline in TBB

9.4.2 Pipeline in Cilk Plus

9.5 More General Topologies

9.6 Mandatory versus Optional Parallelism

9.7 Summary

II Examples

10 Forward Seismic Simulation

10.1 Background

10.2 Stencil Computation

10.3 Impact of Caches on Arithmetic Intensity

10.4 Raising Arithmetic Intensity with Space–Time Tiling

10.5 Cilk Plus Code

10.6 ArBB Implementation

10.7 Summary

11 K-Means Clustering

11.1 Algorithm

11.2 K-Means with Cilk Plus

11.2.1 Hyperobjects

11.3 K-Means with TBB

11.4 Summary

12 Bzip2 Data Compression

12.1 The Bzip2 Algorithm

12.2 Three-Stage Pipeline Using TBB

12.3 Four-Stage Pipeline Using TBB

12.4 Three-Stage Pipeline Using Cilk Plus

12.5 Summary

13 Merge Sort

13.1 Parallel Merge

13.1.1 TBB Parallel Merge

13.1.2 Work and Span of Parallel Merge

13.2 Parallel Merge Sort

13.2.1 Work and Span of Merge Sort

13.3 Summary

14 Sample Sort

14.1 Overall Structure

14.2 Choosing the Number of Bins

14.3 Binning

14.3.1 TBB Implementation

14.4 Repacking and Subsorting

14.5 Performance Analysis of Sample Sort

14.6 For C++ Experts

14.7 Summary

15 Cholesky Factorization

15.1 Fortran Rules!

15.2 Recursive Cholesky Decomposition

15.3 Triangular Solve

15.4 Symmetric Rank Update

15.5 Where Is the Time Spent?

15.6 Summary

Appendices

Appendix A: Further Reading

A.1 Parallel Algorithms and Patterns

A.2 Computer Architecture Including Parallel Systems

A.3 Parallel Programming Models

Appendix B: Cilk Plus

B.1 Shared Design Principles with TBB

B.2 Unique Characteristics

B.3 Borrowing Components from TBB

B.4 Keyword Spelling

B.5 cilk_for

B.6 cilk_spawn and cilk_sync

B.7 Reducers (Hyperobjects)

B.7.1 C++ Syntax

B.7.2 C Syntax

B.8 Array Notation

B.8.1 Specifying Array Sections

B.8.2 Operations on Array Sections

B.8.3 Reductions on Array Sections

B.8.4 Implicit Index

B.8.5 Avoid Partial Overlap of Array Sections

B.9 #pragma simd

B.10 Elemental Functions

B.10.1 Attribute Syntax

B.11 Note on C++11

B.12 Notes on Setup

B.13 History

B.14 Summary

Appendix C: TBB

C.1 Unique Characteristics

C.2 Using TBB

C.3 parallel_for

C.3.1 blocked_range

C.3.2 Partitioners

C.4 parallel_reduce

C.5 parallel_deterministic_reduce

C.6 parallel_pipeline

C.7 parallel_invoke

C.8 task_group

C.9 task

C.9.1 empty_task

C.10 atomic

C.11 enumerable_thread_specific

C.12 Notes on C++11

C.13 History

C.14 Summary

Appendix D: C++11

D.1 Declaring with auto

D.2 Lambda Expressions

D.3 std::move

Appendix E: Glossary

Bibliography

Index

A

B

C

D

E

F

G

H

I

K

L

M

N

O

P

Q

R

S

T

U

V

W

Z

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc