S05: High Performance Computing with CUDA

Data-parallel Algorithms & Data Structures

John Owens

UC Davis

One Slide Summary of Today

GPUs are great at running many closely coupled but independent threads in parallel

The programming model is SPMD (single program, multiple data)

GPU computing boils down to:

Define a computation domain that generates many parallel threads

This is the data structure

Iterate in parallel over that computation domain, running a program over all threads

This is the algorithm
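The two steps above can be sketched in CUDA as follows. This is a minimal illustration, not code from the talk: `scale_kernel` and `scale_on_gpu` are hypothetical names, and the block size of 256 is an arbitrary choice. The launch configuration defines the computation domain (one thread per array element), and the kernel body is the single program that every thread runs.

```cuda
#include <cuda_runtime.h>
#include <vector>

// The "algorithm": every thread runs this same program on its own element (SPMD).
__global__ void scale_kernel(float* data, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // this thread's position in the domain
    if (i < n) {                                    // guard threads past the end of the array
        data[i] *= factor;
    }
}

// Hypothetical host helper: multiplies every element by `factor` on the GPU.
// Error checking is omitted to keep the sketch short.
std::vector<float> scale_on_gpu(const std::vector<float>& input, float factor) {
    std::vector<float> output(input);
    const int n = static_cast<int>(input.size());
    if (n == 0) return output;

    float* d_data = nullptr;
    cudaMalloc(&d_data, n * sizeof(float));
    cudaMemcpy(d_data, input.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    // The "data structure": a 1D computation domain with one thread per element.
    const int threads_per_block = 256;
    const int blocks = (n + threads_per_block - 1) / threads_per_block;
    scale_kernel<<<blocks, threads_per_block>>>(d_data, factor, n);

    cudaMemcpy(output.data(), d_data, n * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_data);
    return output;
}
```

Calling `scale_on_gpu({1.0f, 2.0f, 3.0f}, 2.0f)` launches one block of 256 threads, of which only the first three do any work.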

Outline

Data Structures

GPU Memory Model

Taxonomy

Algorithmic Building Blocks

Sample Application

Map

Gather & Scatter

Reductions

Scan (parallel prefix)

Sort, search, …

GPU Memory Model

More restricted memory access than CPU

Allocate/free memory only before computation

Transfers to and from CPU are explicit

The GPU is controlled by the CPU: it can't initiate transfers, access disk, etc.

To generalize …

GPUs are better at accessing data structures

CPUs are better at building data structures

As CPU-GPU bandwidth improves, consider doing data structure tasks on their “natural” processor
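As a rough sketch of this division of labor, the example below builds a sorted lookup table on the CPU, copies it to the GPU with explicit transfers, and lets every GPU thread search it in parallel. The names `lookup_kernel` and `build_then_query` are hypothetical, sorting merely stands in for "building a data structure", and all device memory is allocated before the kernel runs and freed after it finishes.

```cuda
#include <cuda_runtime.h>
#include <algorithm>
#include <vector>

// Access on the GPU: each thread binary-searches the sorted table for one query.
__global__ void lookup_kernel(const int* table, int table_size,
                              const int* queries, int* found, int num_queries) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= num_queries) return;
    int key = queries[i];
    int lo = 0, hi = table_size;               // search the half-open range [lo, hi)
    while (lo < hi) {
        int mid = lo + (hi - lo) / 2;
        if (table[mid] < key) lo = mid + 1; else hi = mid;
    }
    // Store the position of the key in the sorted table, or -1 if it is absent.
    found[i] = (lo < table_size && table[lo] == key) ? lo : -1;
}

// Hypothetical host helper. Error checking is omitted to keep the sketch short.
std::vector<int> build_then_query(std::vector<int> keys, const std::vector<int>& queries) {
    std::sort(keys.begin(), keys.end());       // build on the CPU
    const int n = static_cast<int>(keys.size());
    const int m = static_cast<int>(queries.size());
    std::vector<int> found(m, -1);
    if (n == 0 || m == 0) return found;

    // Allocate before the computation; the kernel itself cannot allocate here.
    int *d_table = nullptr, *d_queries = nullptr, *d_found = nullptr;
    cudaMalloc(&d_table, n * sizeof(int));
    cudaMalloc(&d_queries, m * sizeof(int));
    cudaMalloc(&d_found, m * sizeof(int));

    // Explicit CPU-to-GPU transfers, initiated by the CPU.
    cudaMemcpy(d_table, keys.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(d_queries, queries.data(), m * sizeof(int), cudaMemcpyHostToDevice);

    const int threads = 256;
    lookup_kernel<<<(m + threads - 1) / threads, threads>>>(d_table, n, d_queries, d_found, m);

    // Explicit GPU-to-CPU transfer, then free after the computation.
    cudaMemcpy(found.data(), d_found, m * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_table);
    cudaFree(d_queries);
    cudaFree(d_found);
    return found;
}
```

Whether the build step belongs on the CPU or the GPU depends on the structure and on transfer cost; the split shown here is only one possibility.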

GPU Memory Model

Limited memory access during computation (kernel)

Registers (per fragment/thread)

Read/write

Shared memory (shared among threads)

Does not exist in general

CUDA allows access to shared memory between threads

Global memory (historical)

Read-only during computation

Write-only at end of computation (precomputed address)

Global memory (new)

Allows general scatter/gather (read/write)

– No collision rules!

– Exposed in all modern GPUs
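The three memory spaces listed above can be seen together in a small histogram sketch. It is an illustration under stated assumptions rather than code from the talk: `histogram_kernel`, `histogram_on_gpu`, and the bin count `kBins` are hypothetical. Because general scatter has no collision rules, two threads writing the same bin would race, so the sketch resolves collisions with `atomicAdd`.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kBins = 16;  // hypothetical bin count, chosen for illustration

__global__ void histogram_kernel(const unsigned char* values, int n, unsigned int* bins) {
    // Shared memory: one private copy of the bins per thread block.
    __shared__ unsigned int local_bins[kBins];
    for (int b = threadIdx.x; b < kBins; b += blockDim.x) local_bins[b] = 0;
    __syncthreads();

    // Registers: `i` is private to each thread.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        // Scatter within the block; atomicAdd resolves colliding writes.
        atomicAdd(&local_bins[values[i] % kBins], 1u);
    }
    __syncthreads();

    // Global memory: merge this block's counts into the final result.
    for (int b = threadIdx.x; b < kBins; b += blockDim.x) {
        atomicAdd(&bins[b], local_bins[b]);
    }
}

// Hypothetical host helper. Error checking is omitted to keep the sketch short.
std::vector<unsigned int> histogram_on_gpu(const std::vector<unsigned char>& values) {
    std::vector<unsigned int> bins(kBins, 0);
    const int n = static_cast<int>(values.size());
    if (n == 0) return bins;

    unsigned char* d_values = nullptr;
    unsigned int* d_bins = nullptr;
    cudaMalloc(&d_values, n * sizeof(unsigned char));
    cudaMalloc(&d_bins, kBins * sizeof(unsigned int));
    cudaMemcpy(d_values, values.data(), n * sizeof(unsigned char), cudaMemcpyHostToDevice);
    cudaMemset(d_bins, 0, kBins * sizeof(unsigned int));

    const int threads = 256;
    histogram_kernel<<<(n + threads - 1) / threads, threads>>>(d_values, n, d_bins);

    cudaMemcpy(bins.data(), d_bins, kBins * sizeof(unsigned int), cudaMemcpyDeviceToHost);
    cudaFree(d_values);
    cudaFree(d_bins);
    return bins;
}
```

Accumulating in shared memory first is a common design choice because it keeps most collisions inside a block and reduces atomic traffic to global memory.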

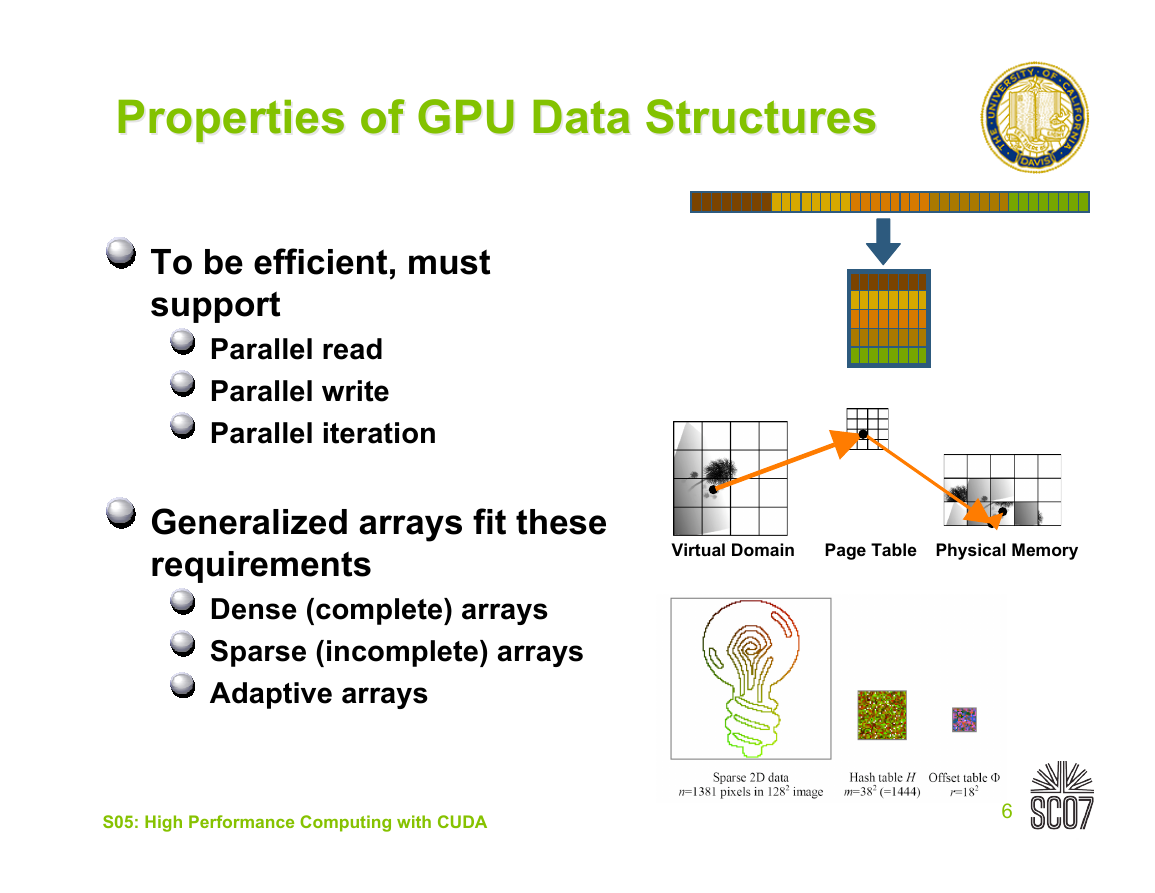

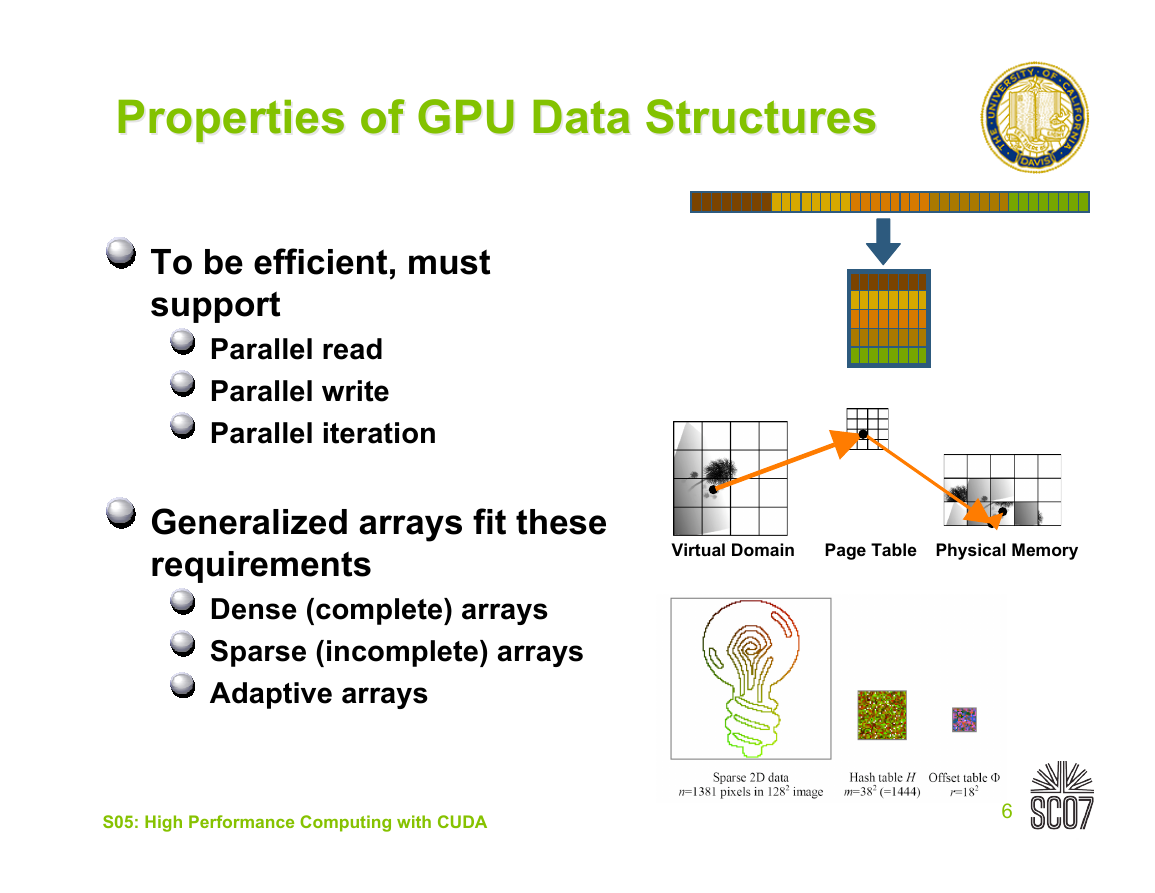

Properties of GPU Data Structures

To be efficient, must support

Parallel read

Parallel write

Parallel iteration

Generalized arrays fit these requirements

Dense (complete) arrays

Sparse (incomplete) arrays

Adaptive arrays

Figure: Virtual Domain → Page Table → Physical Memory
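One way to read the virtual domain, page table, and physical memory labels is as an address translation for a sparse array: only the pages that hold data are stored, and a page table maps each virtual page to its physical slot. The slides do not spell out a layout, so everything in the sketch below is an assumption made for illustration: the page size `kPageSize`, the `kUnmapped` marker, and the names `sparse_read`, `densify_kernel`, and `densify_on_gpu`.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kPageSize = 4;   // elements per page (hypothetical)
constexpr int kUnmapped = -1;  // page-table entry for a page with no storage

// Translate a virtual index to a physical one through the page table.
__device__ float sparse_read(const int* page_table, const float* physical,
                             int virtual_index, float default_value) {
    int page = virtual_index / kPageSize;
    int offset = virtual_index % kPageSize;
    int physical_page = page_table[page];
    if (physical_page == kUnmapped) return default_value;
    return physical[physical_page * kPageSize + offset];
}

// Parallel iteration over the whole virtual domain, one thread per element.
__global__ void densify_kernel(const int* page_table, const float* physical,
                               float* dense, int virtual_size) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < virtual_size) dense[i] = sparse_read(page_table, physical, i, 0.0f);
}

// Hypothetical host helper: expands the sparse array into a dense one.
// Error checking is omitted to keep the sketch short.
std::vector<float> densify_on_gpu(const std::vector<int>& page_table,
                                  const std::vector<float>& physical) {
    const int virtual_size = static_cast<int>(page_table.size()) * kPageSize;
    std::vector<float> dense(virtual_size, 0.0f);
    if (virtual_size == 0 || physical.empty()) return dense;

    int* d_table = nullptr;
    float *d_physical = nullptr, *d_dense = nullptr;
    cudaMalloc(&d_table, page_table.size() * sizeof(int));
    cudaMalloc(&d_physical, physical.size() * sizeof(float));
    cudaMalloc(&d_dense, virtual_size * sizeof(float));
    cudaMemcpy(d_table, page_table.data(), page_table.size() * sizeof(int),
               cudaMemcpyHostToDevice);
    cudaMemcpy(d_physical, physical.data(), physical.size() * sizeof(float),
               cudaMemcpyHostToDevice);

    const int threads = 256;
    densify_kernel<<<(virtual_size + threads - 1) / threads, threads>>>(
        d_table, d_physical, d_dense, virtual_size);

    cudaMemcpy(dense.data(), d_dense, virtual_size * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_table);
    cudaFree(d_physical);
    cudaFree(d_dense);
    return dense;
}
```

With a page table of `{1, -1, 0}`, virtual page 0 reads from physical page 1, virtual page 1 has no storage and reads as the default value, and virtual page 2 reads from physical page 0.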

Think In Parallel

The GPU is a data-parallel processor

Thousands of parallel threads

Thousands of data elements to process

All data processed by the same program

SPMD computation model

Contrast with task parallelism and ILP

Best results when you “Think Data Parallel”

Design your algorithm for data-parallelism

Understand parallel algorithmic complexity and efficiency

Use data-parallel algorithmic primitives as building blocks

Data-Parallel Algorithms

Efficient algorithms require efficient building blocks

This talk: data-parallel building blocks

Map

Gather & Scatter

Reduce

Scan
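To make the four building blocks concrete, the sketch below runs each one on a small array using Thrust, the parallel algorithms library that ships with the CUDA toolkit. Thrust is not mentioned in the slides and is swapped in here only because it expresses each primitive in one call. `PrimitiveResults` and `run_primitives` are hypothetical names, and the sketch assumes `host_indices` is a permutation of 0..n-1 so that gather and scatter touch every output element.

```cuda
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/functional.h>
#include <thrust/gather.h>
#include <thrust/reduce.h>
#include <thrust/scan.h>
#include <thrust/scatter.h>
#include <thrust/transform.h>
#include <vector>

struct PrimitiveResults {
    std::vector<int> mapped, gathered, scattered, scanned;
    int reduced;
};

// Copy a device vector back to the host.
static std::vector<int> to_host(const thrust::device_vector<int>& d) {
    std::vector<int> h(d.size());
    thrust::copy(d.begin(), d.end(), h.begin());
    return h;
}

// Hypothetical helper: host_indices must be a permutation of 0..n-1.
PrimitiveResults run_primitives(const std::vector<int>& host_input,
                                const std::vector<int>& host_indices) {
    thrust::device_vector<int> input(host_input.begin(), host_input.end());
    thrust::device_vector<int> indices(host_indices.begin(), host_indices.end());
    const size_t n = input.size();
    thrust::device_vector<int> mapped(n), gathered(n), scattered(n), scanned(n);

    // Map: mapped[i] = -input[i]
    thrust::transform(input.begin(), input.end(), mapped.begin(), thrust::negate<int>());

    // Gather: gathered[i] = input[indices[i]]
    thrust::gather(indices.begin(), indices.end(), input.begin(), gathered.begin());

    // Scatter: scattered[indices[i]] = input[i]
    thrust::scatter(input.begin(), input.end(), indices.begin(), scattered.begin());

    // Reduce: sum of all elements
    int reduced = thrust::reduce(input.begin(), input.end(), 0, thrust::plus<int>());

    // Scan: exclusive prefix sum, scanned[i] = input[0] + ... + input[i-1]
    thrust::exclusive_scan(input.begin(), input.end(), scanned.begin());

    PrimitiveResults results;
    results.mapped = to_host(mapped);
    results.gathered = to_host(gathered);
    results.scattered = to_host(scattered);
    results.scanned = to_host(scanned);
    results.reduced = reduced;
    return results;
}
```

For input `{3, 1, 4, 1}` and indices `{2, 0, 3, 1}`, map gives `{-3, -1, -4, -1}`, gather gives `{4, 3, 1, 1}`, scatter gives `{1, 1, 3, 4}`, reduce gives `9`, and the exclusive scan gives `{0, 3, 4, 8}`.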
