Machine Learning in Action

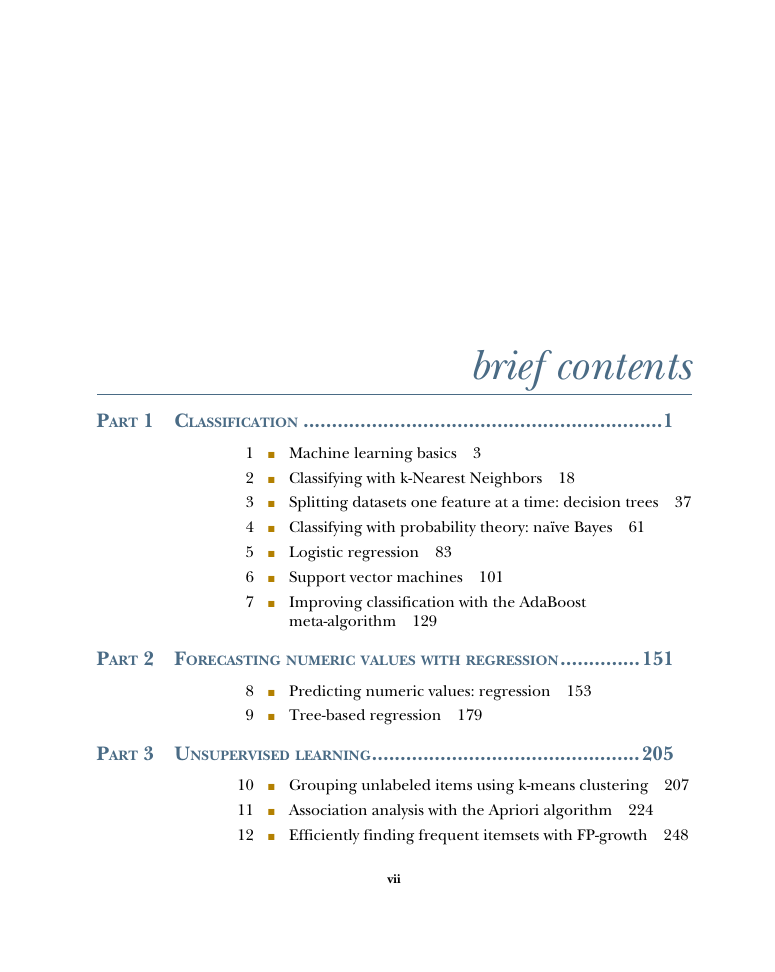

brief contents

contents

preface

acknowledgments

about this book

Audience

Top 10 algorithms in data mining

How the book is organized

Part 1 Machine learning basics

Part 2 Forecasting numeric values with regression

Part 3 Unsupervised learning

Part 4 Additional tools

Examples

Code conventions and downloads

Author Online

about the author

about the cover illustration

Classification

Machine learning basics

1.1 What is machine learning?

1.1.1 Sensors and the data deluge

1.1.2 Machine learning will be more important in the future

1.2 Key terminology

1.3 Key tasks of machine learning

1.4 How to choose the right algorithm

1.5 Steps in developing a machine learning application

1.6 Why Python?

1.6.1 Executable pseudo-code

1.6.2 Python is popular

1.6.3 What Python has that other languages don’t have

1.6.4 Drawbacks

1.7 Getting started with the NumPy library

1.8 Summary

Classifying with k-Nearest Neighbors

2.1 Classifying with distance measurements

2.1.1 Prepare: importing data with Python

2.1.2 Putting the kNN classification algorithm into action

2.1.3 How to test a classifier

2.2 Example: improving matches from a dating site with kNN

2.2.1 Prepare: parsing data from a text file

2.2.2 Analyze: creating scatter plots with Matplotlib

2.2.3 Prepare: normalizing numeric values

2.2.4 Test: testing the classifier as a whole program

2.2.5 Use: putting together a useful system

2.3 Example: a handwriting recognition system

2.3.1 Prepare: converting images into test vectors

2.3.2 Test: kNN on handwritten digits

2.4 Summary

Splitting datasets one feature at a time: decision trees

3.1 Tree construction

3.1.1 Information gain

3.1.2 Splitting the dataset

3.1.3 Recursively building the tree

3.2 Plotting trees in Python with Matplotlib annotations

3.2.1 Matplotlib annotations

3.2.2 Constructing a tree of annotations

3.3 Testing and storing the classifier

3.3.1 Test: using the tree for classification

3.3.2 Use: persisting the decision tree

3.4 Example: using decision trees to predict contact lens type

3.5 Summary

Classifying with probability theory: naïve Bayes

4.1 Classifying with Bayesian decision theory

4.2 Conditional probability

4.3 Classifying with conditional probabilities

4.4 Document classification with naïve Bayes

4.5 Classifying text with Python

4.5.1 Prepare: making word vectors from text

4.5.2 Train: calculating probabilities from word vectors

4.5.3 Test: modifying the classifier for real-world conditions

4.5.4 Prepare: the bag-of-words document model

4.6 Example: classifying spam email with naïve Bayes

4.6.1 Prepare: tokenizing text

4.6.2 Test: cross validation with naïve Bayes

4.7 Example: using naïve Bayes to reveal local attitudes from personal ads

4.7.1 Collect: importing RSS feeds

4.7.2 Analyze: displaying locally used words

4.8 Summary

Logistic regression

5.1 Classification with logistic regression and the sigmoid function: a tractable step function

5.2 Using optimization to find the best regression coefficients

5.2.1 Gradient ascent

5.2.2 Train: using gradient ascent to find the best parameters

5.2.3 Analyze: plotting the decision boundary

5.2.4 Train: stochastic gradient ascent

5.3 Example: estimating horse fatalities from colic

5.3.1 Prepare: dealing with missing values in the data

5.3.2 Test: classifying with logistic regression

5.4 Summary

Support vector machines

6.1 Separating data with the maximum margin

6.2 Finding the maximum margin

6.2.1 Framing the optimization problem in terms of our classifier

6.2.2 Approaching SVMs with our general framework

6.3 Efficient optimization with the SMO algorithm

6.3.1 Platt’s SMO algorithm

6.3.2 Solving small datasets with the simplified SMO

6.4 Speeding up optimization with the full Platt SMO

6.5 Using kernels for more complex data

6.5.1 Mapping data to higher dimensions with kernels

6.5.2 The radial basis function as a kernel

6.5.3 Using a kernel for testing

6.6 Example: revisiting handwriting classification

6.7 Summary

Improving classification with the AdaBoost meta-algorithm

7.1 Classifiers using multiple samples of the dataset

7.1.1 Building classifiers from randomly resampled data: bagging

7.1.2 Boosting

7.2 Train: improving the classifier by focusing on errors

7.3 Creating a weak learner with a decision stump

7.4 Implementing the full AdaBoost algorithm

7.5 Test: classifying with AdaBoost

7.6 Example: AdaBoost on a difficult dataset

7.7 Classification imbalance

7.7.1 Alternative performance metrics: precision, recall, and ROC

7.7.2 Manipulating the classifier’s decision with a cost function

7.7.3 Data sampling for dealing with classification imbalance

7.8 Summary

Forecasting numeric values with regression

Predicting numeric values: regression

8.1 Finding best-fit lines with linear regression

8.2 Locally weighted linear regression

8.3 Example: predicting the age of an abalone

8.4 Shrinking coefficients to understand our data

8.4.1 Ridge regression

8.4.2 The lasso

8.4.3 Forward stagewise regression

8.5 The bias/variance tradeoff

8.6 Example: forecasting the price of LEGO sets

8.6.1 Collect: using the Google shopping API

8.6.2 Train: building a model

8.7 Summary

Tree-based regression

9.1 Locally modeling complex data

9.2 Building trees with continuous and discrete features

9.3 Using CART for regression

9.3.1 Building the tree

9.3.2 Executing the code

9.4 Tree pruning

9.4.1 Prepruning

9.4.2 Postpruning

9.5 Model trees

9.6 Example: comparing tree methods to standard regression

9.7 Using Tkinter to create a GUI in Python

9.7.1 Building a GUI in Tkinter

9.7.2 Interfacing Matplotlib and Tkinter

9.8 Summary

Unsupervised learning

Grouping unlabeled items using k-means clustering

10.1 The k-means clustering algorithm

10.2 Improving cluster performance with postprocessing

10.3 Bisecting k-means

10.4 Example: clustering points on a map

10.4.1 The Yahoo! PlaceFinder API

10.4.2 Clustering geographic coordinates

10.5 Summary

Association analysis with the Apriori algorithm

11.1 Association analysis

11.2 The Apriori principle

11.3 Finding frequent itemsets with the Apriori algorithm

11.3.1 Generating candidate itemsets

11.3.2 Putting together the full Apriori algorithm

11.4 Mining association rules from frequent itemsets

11.5 Example: uncovering patterns in congressional voting

11.5.1 Collect: build a transaction dataset of congressional voting records

11.5.2 Test: association rules from congressional voting records

11.6 Example: finding similar features in poisonous mushrooms

11.7 Summary

Efficiently finding frequent itemsets with FP-growth

12.1 FP-trees: an efficient way to encode a dataset

12.2 Build an FP-tree

12.2.1 Creating the FP-tree data structure

12.2.2 Constructing the FP-tree

12.3 Mining frequent items from an FP-tree

12.3.1 Extracting conditional pattern bases

12.3.2 Creating conditional FP-trees

12.4 Example: finding co-occurring words in a Twitter feed

12.5 Example: mining a clickstream from a news site

12.6 Summary

Additional tools

Using principal component analysis to simplify data

13.1 Dimensionality reduction techniques

13.2 Principal component analysis

13.2.1 Moving the coordinate axes

13.2.2 Performing PCA in NumPy

13.3 Example: using PCA to reduce the dimensionality of semiconductor manufacturing data

13.4 Summary

Simplifying data with the singular value decomposition

14.1 Applications of the SVD

14.1.1 Latent semantic indexing

14.1.2 Recommendation systems

14.2 Matrix factorization

14.3 SVD in Python

14.4 Collaborative filtering–based recommendation engines

14.4.1 Measuring similarity

14.4.2 Item-based or user-based similarity?

14.4.3 Evaluating recommendation engines

14.5 Example: a restaurant dish recommendation engine

14.5.1 Recommending untasted dishes

14.5.2 Improving recommendations with the SVD

14.5.3 Challenges with building recommendation engines

14.6 Example: image compression with the SVD

14.7 Summary

Big data and MapReduce

15.1 MapReduce: a framework for distributed computing

15.2 Hadoop Streaming

15.2.1 Distributed mean and variance mapper

15.2.2 Distributed mean and variance reducer

15.3 Running Hadoop jobs on Amazon Web Services

15.3.1 Services available on AWS

15.3.2 Getting started with Amazon Web Services

15.3.3 Running a Hadoop job on EMR

15.4 Machine learning in MapReduce

15.5 Using mrjob to automate MapReduce in Python

15.5.1 Using mrjob for seamless integration with EMR

15.5.2 The anatomy of a MapReduce script in mrjob

15.6 Example: the Pegasos algorithm for distributed SVMs

15.6.1 The Pegasos algorithm

15.6.2 Training: MapReduce support vector machines with mrjob

15.7 Do you really need MapReduce?

15.8 Summary

appendix A: Getting started with Python

A.1 Installing Python

A.1.1 Windows

A.1.2 Mac OS X

A.1.3 Linux

A.2 A quick introduction to Python

A.2.1 Collection types

A.2.2 Control structures

A.2.3 List comprehensions

A.3 A quick introduction to NumPy

A.4 Beautiful Soup

A.5 Mrjob

A.6 Vote Smart

A.7 Python-Twitter

appendix B: Linear algebra

B.1 Matrices

B.2 Matrix inverse

B.3 Norms

B.4 Matrix calculus

appendix C: Probability refresher

C.1 Intro to probability

C.2 Joint probability

C.3 Basic rules of probability

appendix D: Resources

index

Numerics

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

R

S

T

U

V

W

X

Y
