POMDP Tutorial


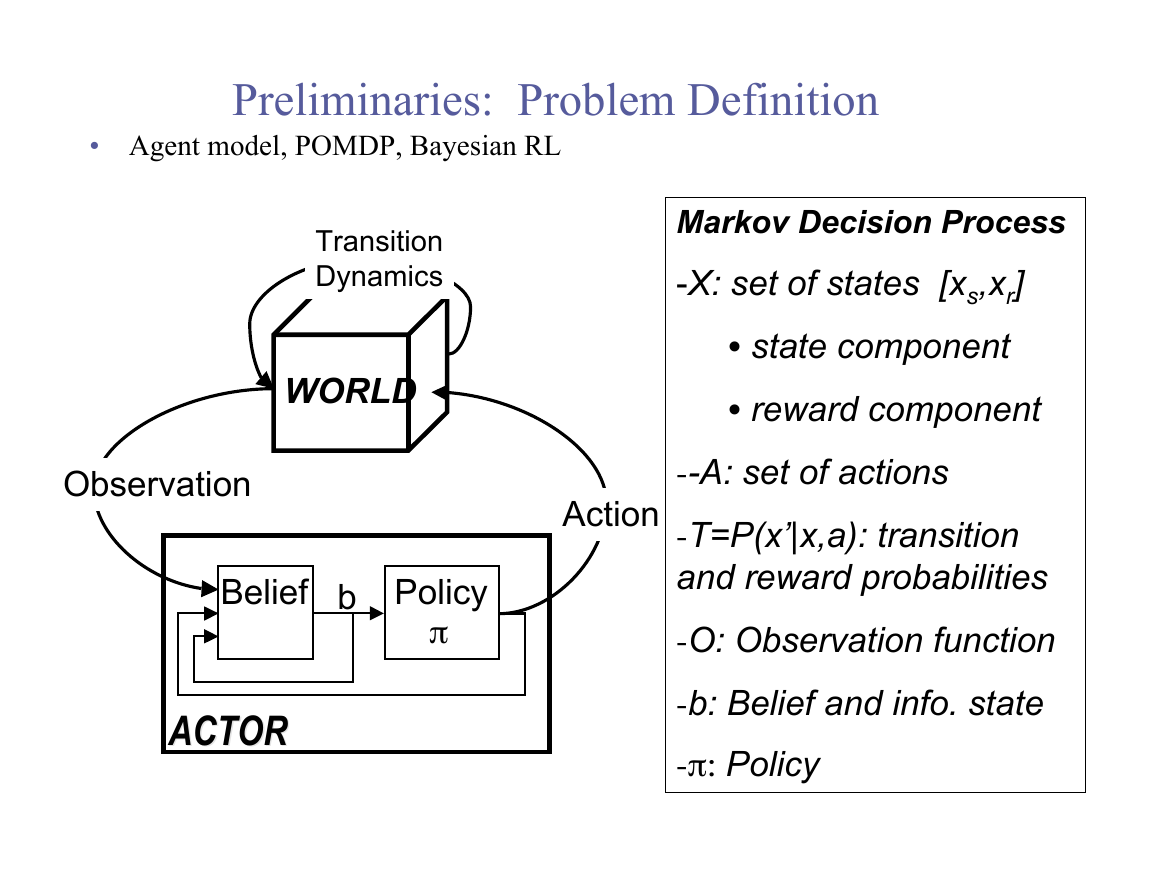

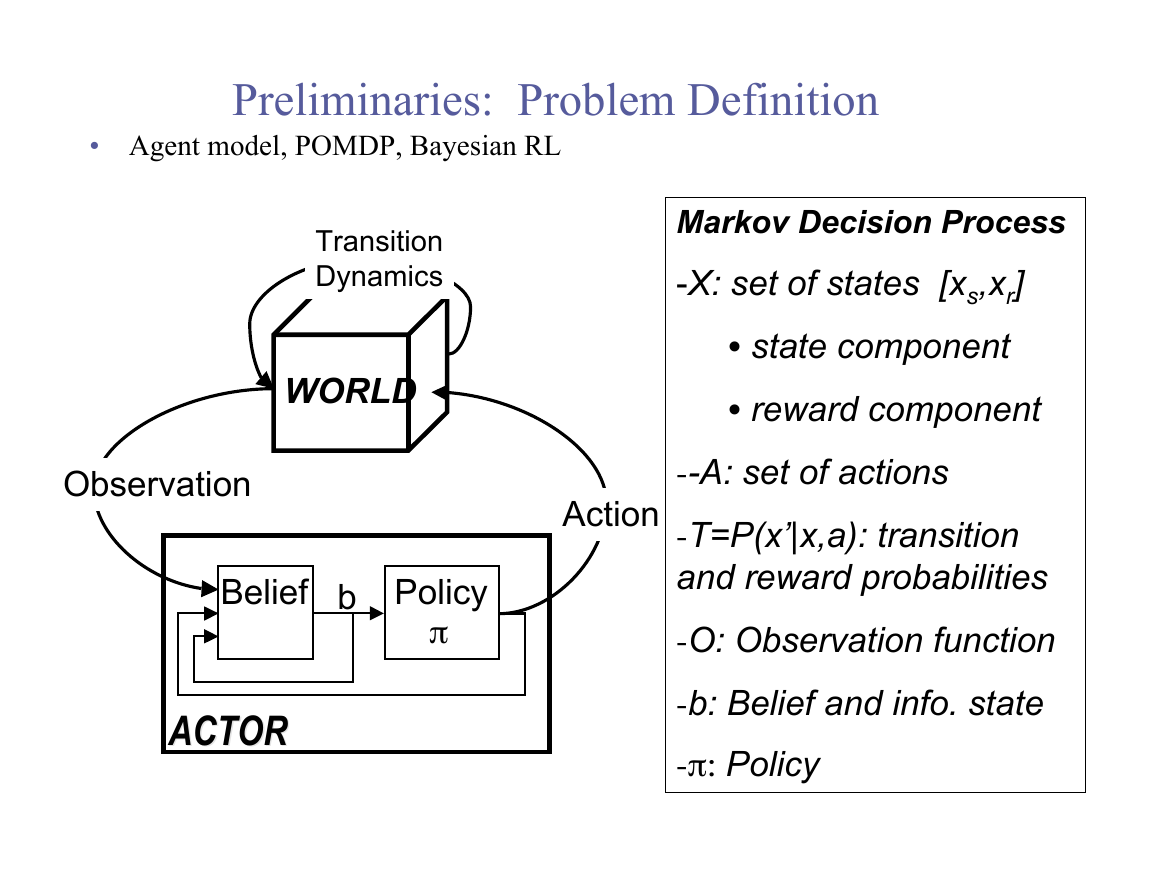

Preliminaries: Problem Definition

• Agent model, POMDP, Bayesian RL

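[Figure: agent-world loop. The WORLD evolves according to its transition dynamics and emits an observation; the ACTOR maintains a belief b, and its policy π selects the action sent back to the world.]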

Markov Decision Process

- X: set of states [x_s, x_r]
  • state component
  • reward component
- A: set of actions
- T = P(x'|x,a): transition and reward probabilities
- O: observation function
- b: belief and information state
- π: policy


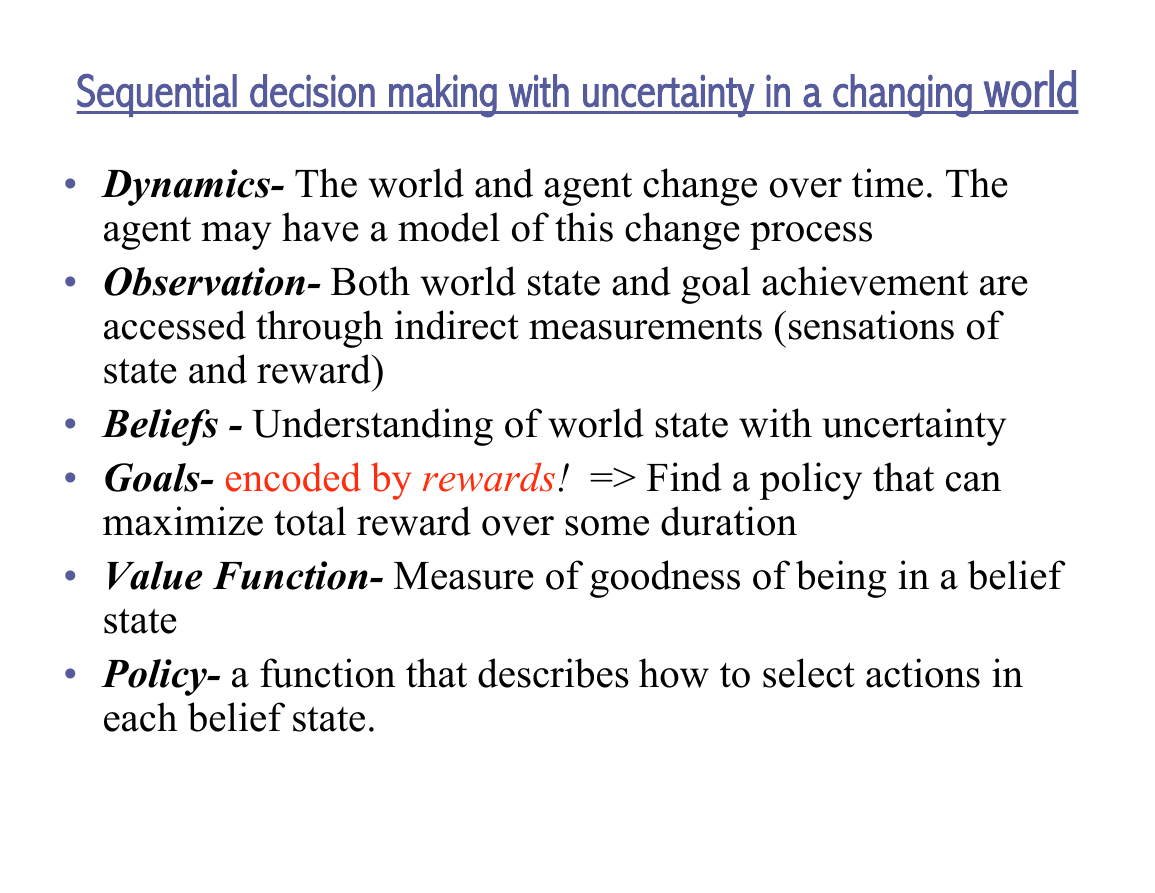

Sequential decision making with uncertainty in a changing world

• Dynamics - The world and the agent change over time. The agent may have a model of this change process.

• Observation - Both the world state and goal achievement are accessed through indirect measurements (sensations of state and reward).

• Beliefs - An understanding of the world state that accounts for uncertainty.

• Goals - Encoded by rewards => find a policy that maximizes total reward over some duration.

• Value Function - A measure of how good it is to be in a belief state.

• Policy - A function that describes how to select actions in each belief state.


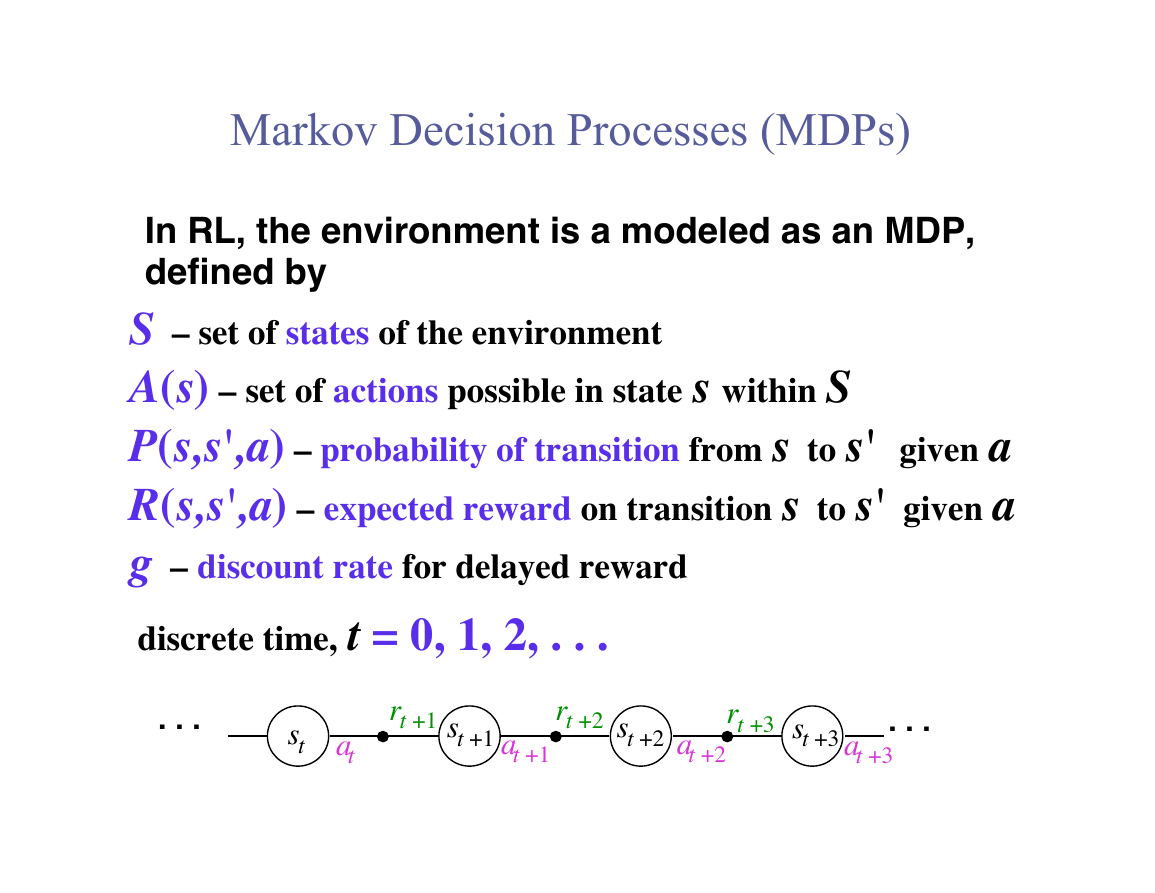

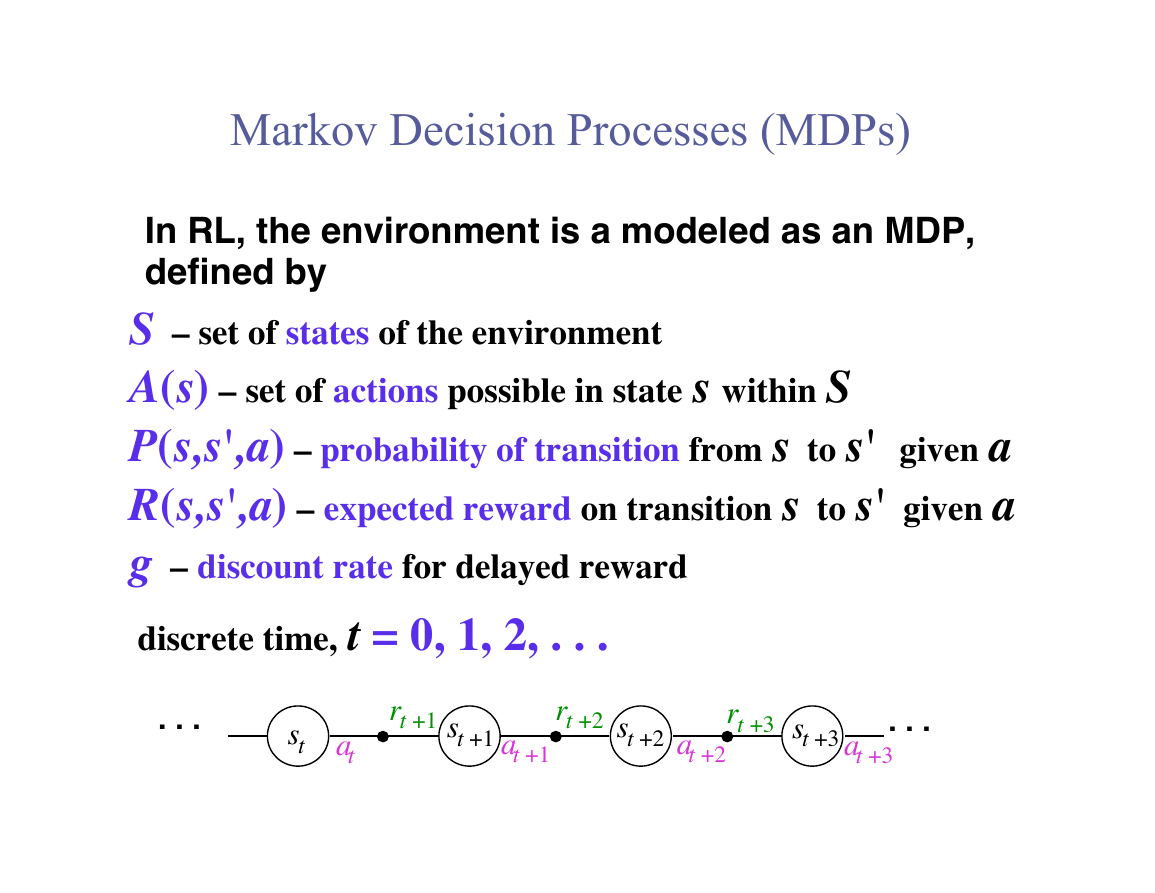

Markov Decision Processes (MDPs)

In RL, the environment is modeled as an MDP, defined by:

S – set of states of the environment
A(s) – set of actions possible in state s ∈ S
P(s,s',a) – probability of transition from s to s' given a
R(s,s',a) – expected reward on the transition from s to s' given a
γ – discount rate for delayed reward
discrete time, t = 0, 1, 2, . . .

. . . → s_t, a_t → r_{t+1}, s_{t+1}, a_{t+1} → r_{t+2}, s_{t+2}, a_{t+2} → r_{t+3}, s_{t+3}, a_{t+3} → . . .
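Combining P and R gives the expected immediate reward of taking action a in state s, r(s,a) = Σ_{s'} P(s,s',a) R(s,s',a), and P must define a probability distribution over s' for every s and every a ∈ A(s). The sketch below assumes a dictionary encoding keyed by (s, s', a); the names `expected_reward` and `check_transitions` and the two-state example are hypothetical.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str, str]  # (s, s', a), matching the P(s,s',a) / R(s,s',a) notation

def expected_reward(s: str, a: str, states: List[str],
                    P: Dict[Key, float], R: Dict[Key, float]) -> float:
    """r(s, a) = sum over s' of P(s, s', a) * R(s, s', a); missing keys count as 0."""
    return sum(P.get((s, s2, a), 0.0) * R.get((s, s2, a), 0.0) for s2 in states)

def check_transitions(states: List[str], A: Dict[str, List[str]],
                      P: Dict[Key, float], tol: float = 1e-9) -> None:
    """Raise ValueError unless P(s, ., a) sums to 1 for every s and every a in A(s)."""
    for s in states:
        for a in A[s]:
            total = sum(P.get((s, s2, a), 0.0) for s2 in states)
            if abs(total - 1.0) > tol:
                raise ValueError(f"P({s}, ., {a}) sums to {total}, not 1")

# Hypothetical two-state example: "work" usually moves low -> high.
states = ["low", "high"]
A = {"low": ["wait", "work"], "high": ["wait"]}
P = {("low", "low", "wait"): 1.0,
     ("low", "high", "work"): 0.8, ("low", "low", "work"): 0.2,
     ("high", "high", "wait"): 1.0}
R = {("low", "high", "work"): 10.0, ("low", "low", "work"): -1.0}
check_transitions(states, A, P)
print(expected_reward("low", "work", states, P, R))  # 0.8 * 10 + 0.2 * (-1)
```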


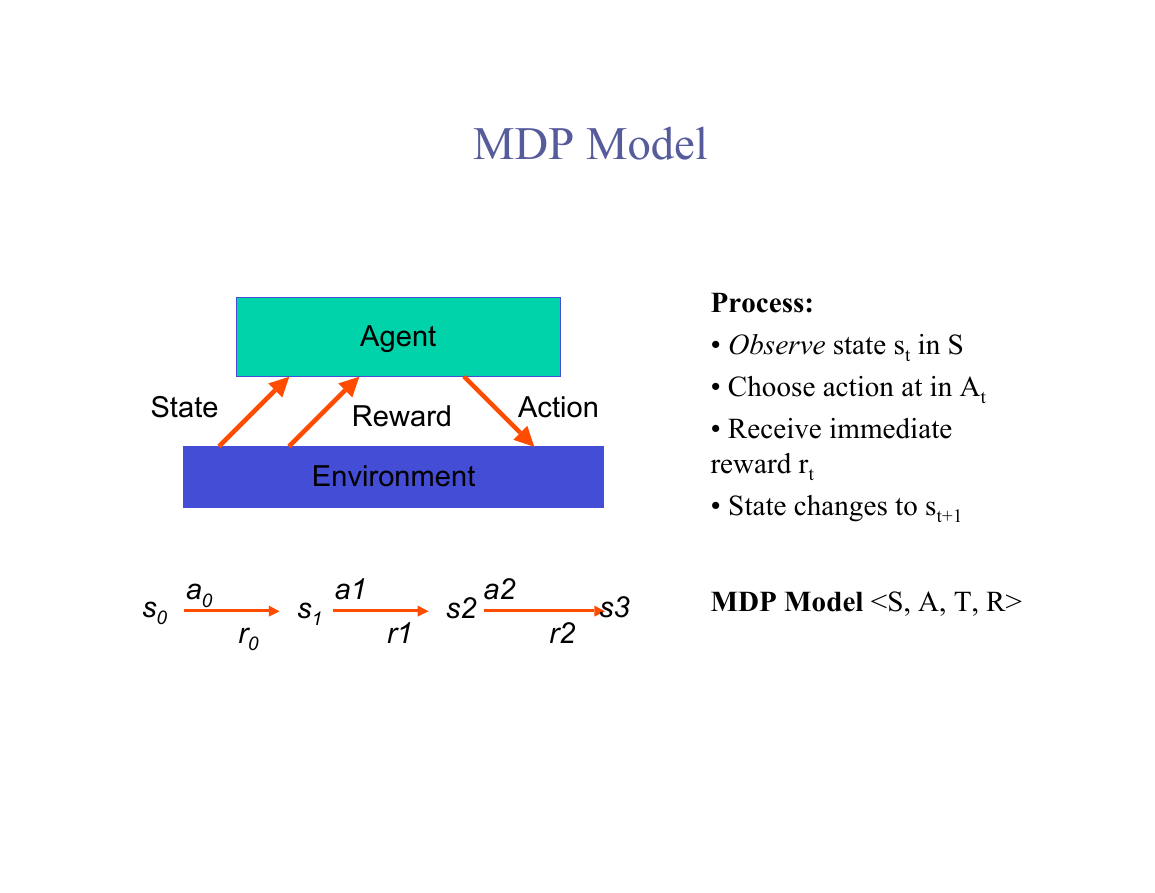

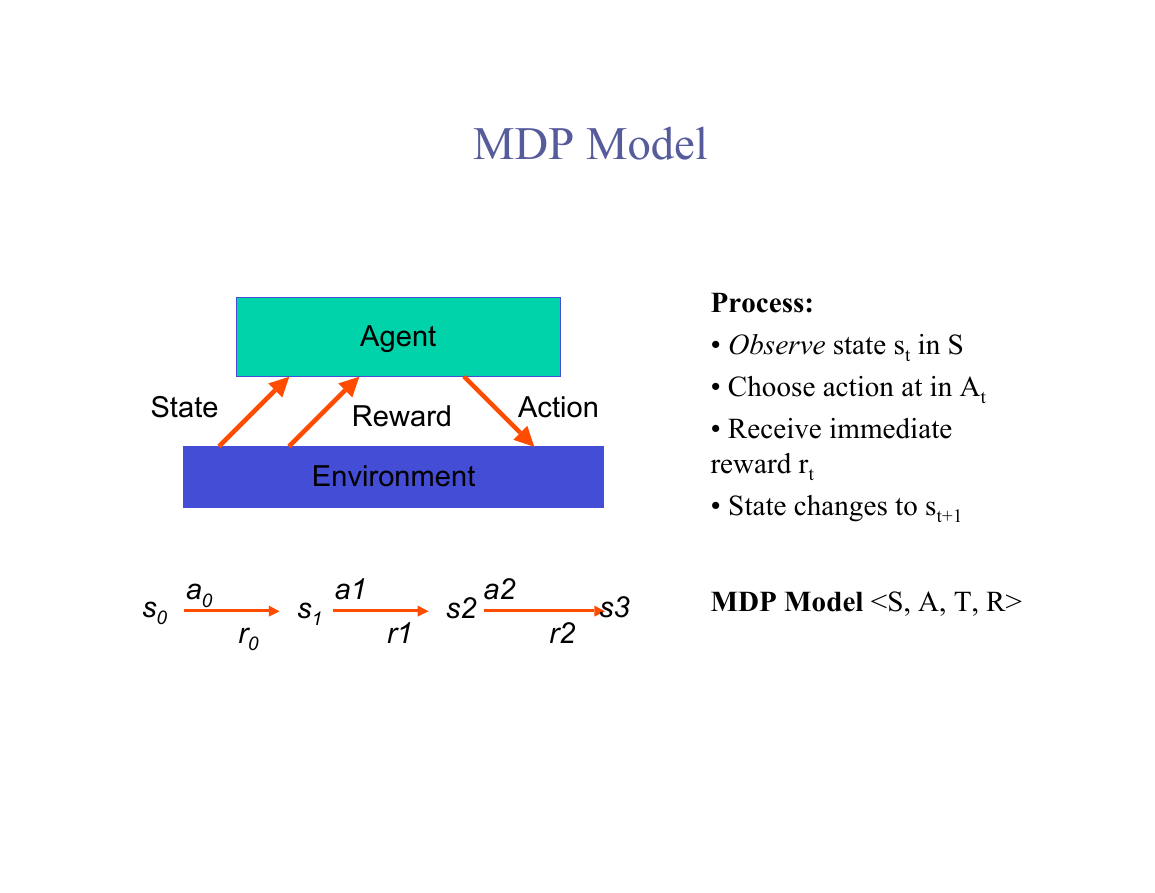

MDP Model

[Figure: the Agent sends an Action to the Environment, which returns the next State and a Reward.]

Process:

• Observe state s_t in S
• Choose action a_t in A_t
• Receive immediate reward r_t
• State changes to s_{t+1}

s0 --(a0, r0)--> s1 --(a1, r1)--> s2 --(a2, r2)--> s3

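The four-step process above can be sketched as a simple interaction loop. The environment `step` is a hypothetical deterministic chain (states 0 to 3, action 1 moves right, reaching state 3 pays reward 1), chosen only so the trajectory is easy to follow; as on the slide, the reward received at step t is indexed r_t.

```python
from typing import Callable, List, Tuple

def step(s: int, a: int) -> Tuple[float, int]:
    """Hypothetical environment: return (r_t, s_{t+1}) for state s_t and action a_t."""
    s_next = min(s + a, 3)
    r = 1.0 if s_next == 3 and s != 3 else 0.0  # reward only on first arrival at state 3
    return r, s_next

def run_episode(policy: Callable[[int], int], s0: int,
                horizon: int) -> List[Tuple[int, int, float]]:
    """Record (s_t, a_t, r_t) for t = 0 .. horizon - 1."""
    trajectory = []
    s = s0
    for _ in range(horizon):
        a = policy(s)           # observe s_t and choose a_t
        r, s_next = step(s, a)  # receive r_t; the state changes to s_{t+1}
        trajectory.append((s, a, r))
        s = s_next
    return trajectory

def always_right(s: int) -> int:
    return 1

print(run_episode(always_right, s0=0, horizon=4))
# [(0, 1, 0.0), (1, 1, 0.0), (2, 1, 1.0), (3, 1, 0.0)]
```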


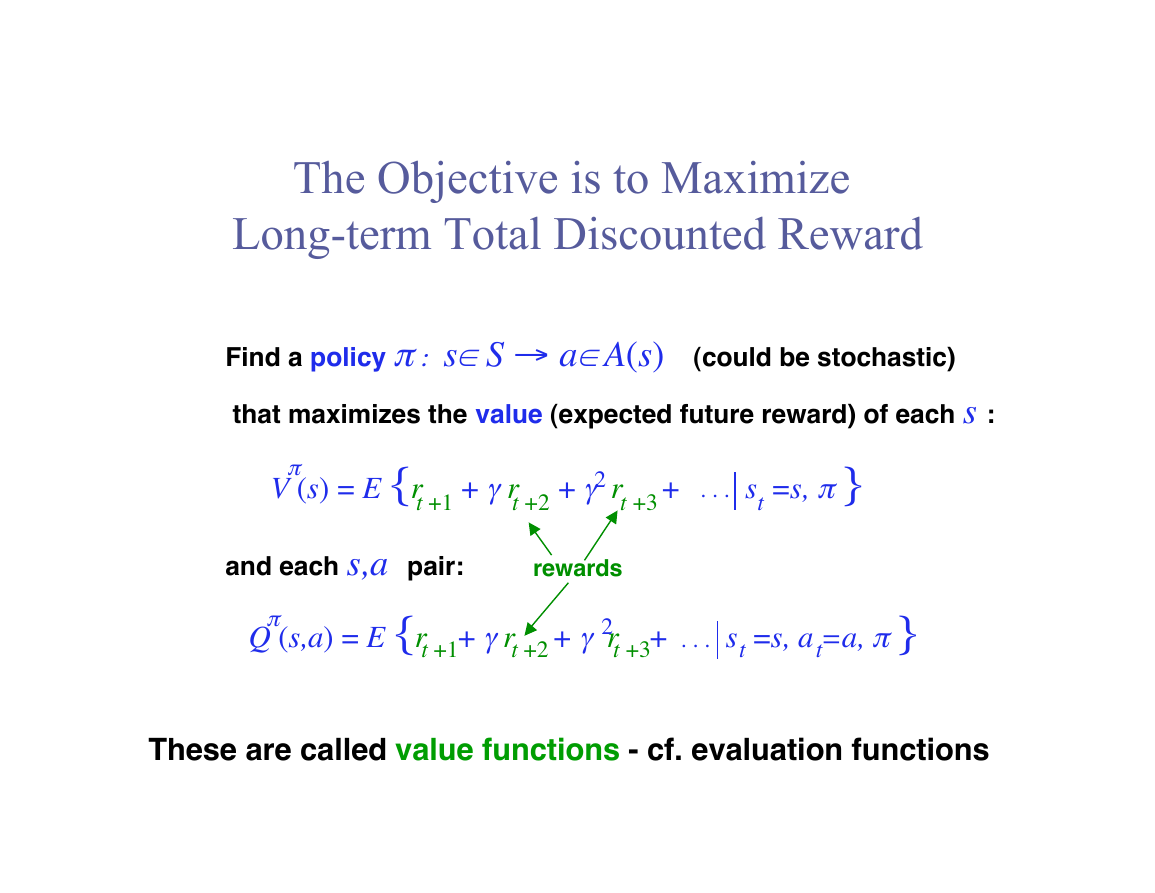

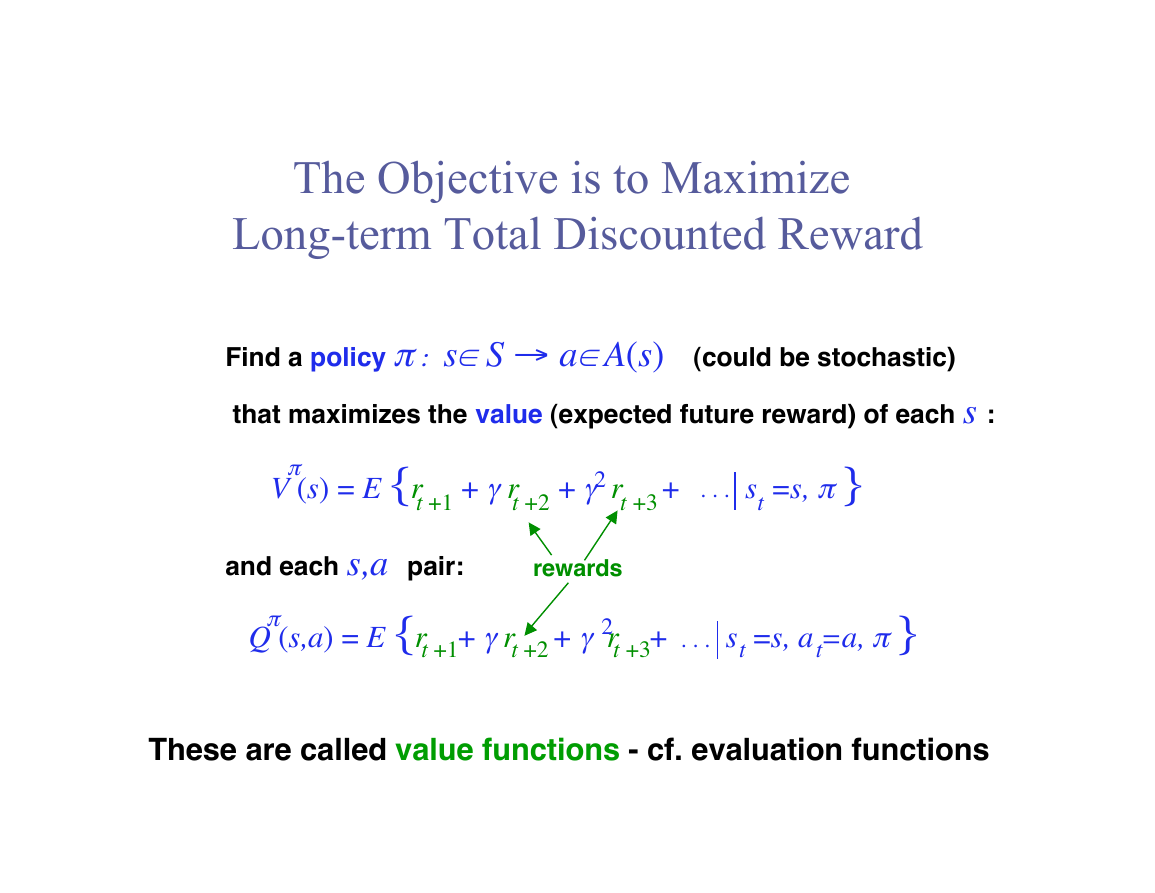

The Objective is to Maximize Long-term Total Discounted Reward

Find a policy π : s ∈ S → a ∈ A(s) (could be stochastic) that maximizes the value (expected future reward) of each s:

$$V^{\pi}(s) = E_{\pi}\{\, r_{t+1} + \gamma r_{t+2} + \gamma^{2} r_{t+3} + \cdots \mid s_t = s \,\}$$

and of each s, a pair:

$$Q^{\pi}(s,a) = E_{\pi}\{\, r_{t+1} + \gamma r_{t+2} + \gamma^{2} r_{t+3} + \cdots \mid s_t = s,\ a_t = a \,\}$$

These are called value functions - cf. evaluation functions


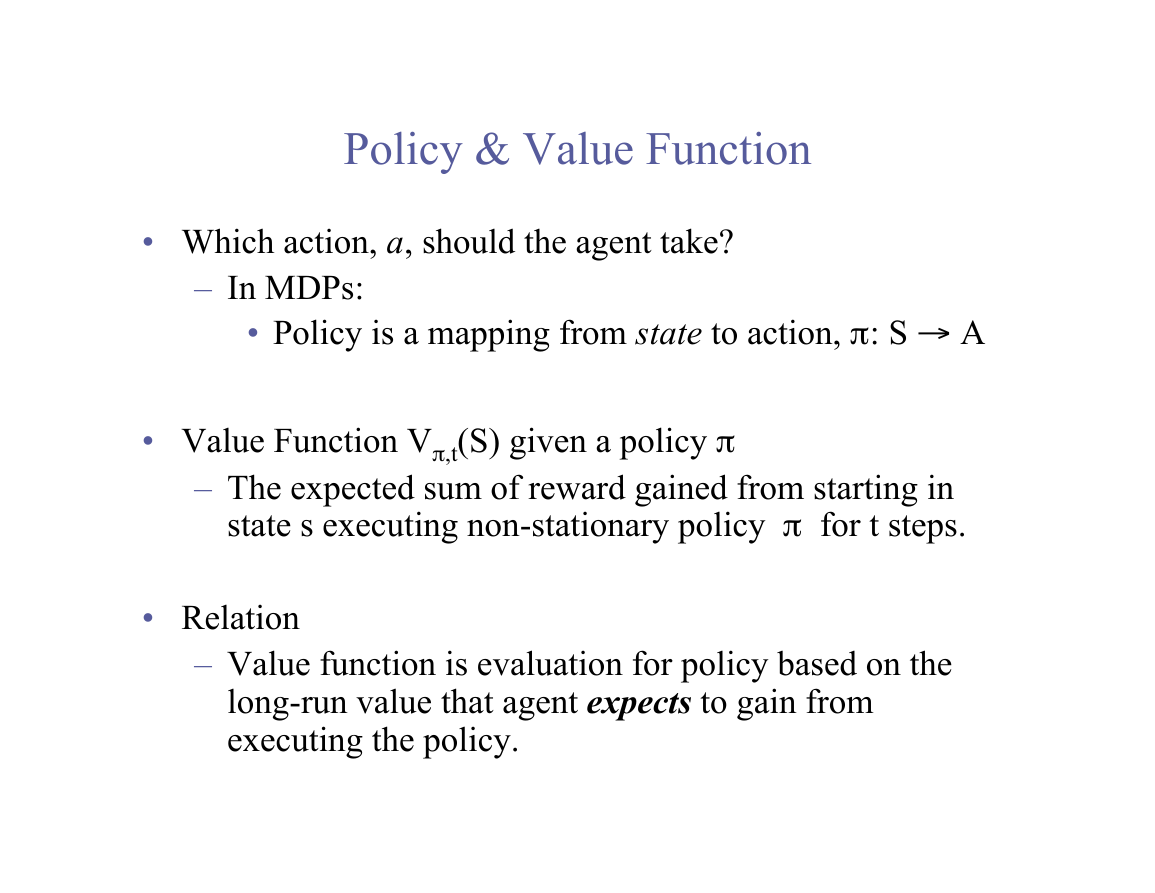

Policy & Value Function

• Which action, a, should the agent take?

– In MDPs:

• Policy is a mapping from state to action, π: S → A

• Value Function V_{π,t}(s) given a policy π

– The expected sum of rewards gained from starting in state s and executing the non-stationary policy π for t steps.

• Relation

– The value function evaluates a policy by the long-run value the agent expects to gain from executing it.
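The finite-horizon value V_{π,t}(s) can be computed by backward induction: with no steps left the value is 0, and each additional step adds one expected reward under the decision rule used at that point. The sketch assumes an undiscounted sum, a deterministic non-stationary policy stored as pi[k][s] (the action in state s when k+1 steps remain), and a hypothetical two-state MDP in the nested-list encoding P[s][a][s2], R[s][a][s2].

```python
# Finite-horizon evaluation of a non-stationary policy (sketch).
# V_{pi,0}(s) = 0
# V_{pi,k}(s) = sum over s2 of P[s][a][s2] * (R[s][a][s2] + V_{pi,k-1}(s2)), with a = pi[k-1][s]

def finite_horizon_value(P, R, pi, t):
    n = len(P)
    V = [0.0] * n                # V_{pi,0}: no steps left, no reward
    for k in range(1, t + 1):
        rule = pi[k - 1]         # decision rule used when k steps remain
        V = [sum(P[s][rule[s]][s2] * (R[s][rule[s]][s2] + V[s2]) for s2 in range(n))
             for s in range(n)]
    return V

# Hypothetical MDP: action 0 stays, action 1 switches; reward 1 on landing in state 1.
P = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 1.0], [1.0, 0.0]]]
R = [[[0.0, 1.0], [0.0, 1.0]],
     [[0.0, 1.0], [0.0, 1.0]]]
pi = [[0, 0],   # 1 step left: stay everywhere
      [1, 0],   # 2 steps left: switch out of state 0, stay in state 1
      [1, 0]]   # 3 steps left: same rule
print(finite_horizon_value(P, R, pi, 1), finite_horizon_value(P, R, pi, 3))
```

Because the last-step rule stays in state 0, starting there with one step left earns nothing, whereas with three steps left the earlier switching rule collects a reward on every step.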
