Section 1 Introduction

1.1 Purpose

1.2 Scope

1.3 Applicable Documents

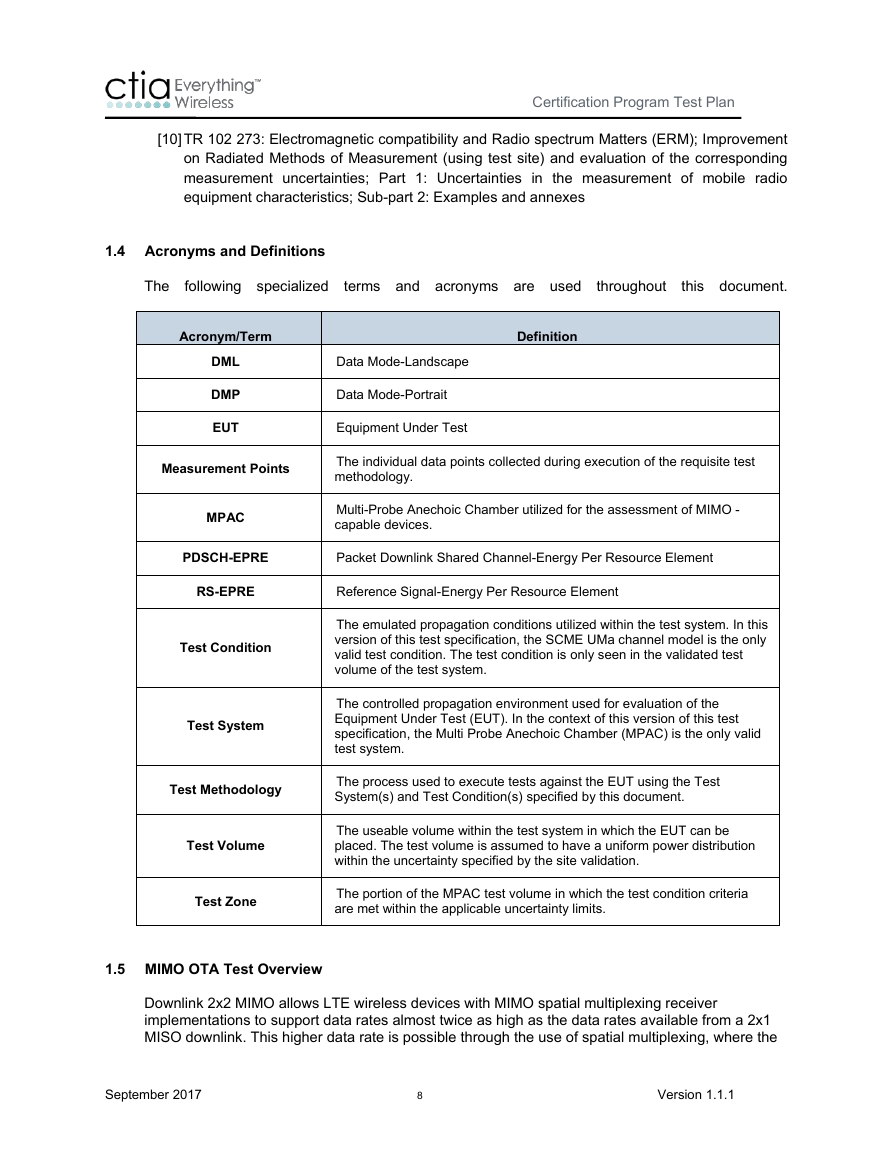

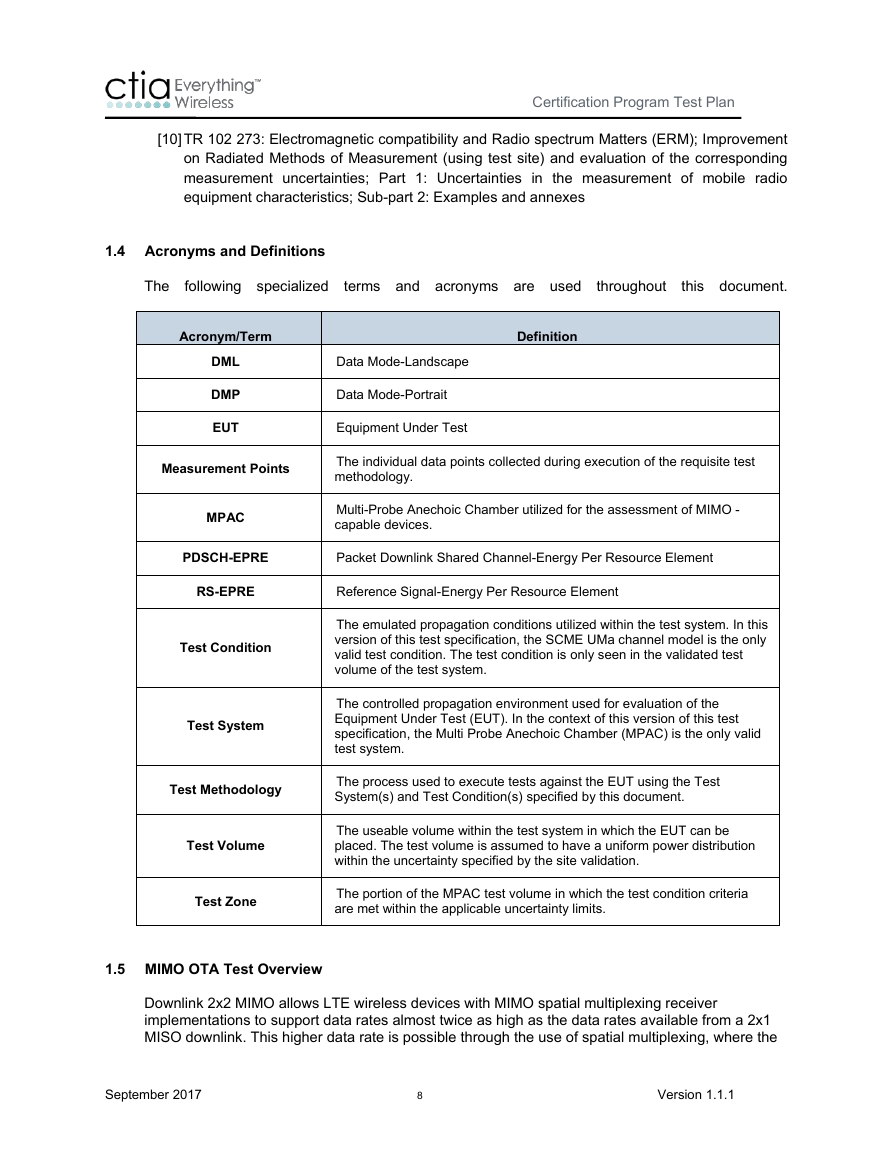

1.4 Acronyms and Definitions

1.5 MIMO OTA Test Overview

1.6 MIMO Equipment under Test and Accessories - The Wireless Device

1.7 Wireless Device Documentation

1.8 Over-the-Air Test System

1.8.1 Multi-Probe Anechoic Chamber (MPAC)

Section 2 MIMO Receiver Performance Assessment (Open-Loop Spatial Multiplexing)

2.1 MPAC General Description

2.2 MPAC System Setup

2.2.1 MPAC Ripple Test

2.2.2 MPAC Calibration

2.2.2.1 Input Calibration

2.2.2.2 Output Calibration

2.2.2.3 Channel Emulator Input Phase Calibration

2.3 EUT Positioning within the MPAC Test Volume

2.3.1 EUT Free-Space Orientation within the MPAC Test Zone

2.3.2 MPAC EUT Orientation within the Test Zone using Phantoms

2.3.3 Maximum EUT Antenna Spacing and Placement of EUT within the Test Zone

2.3.3.1 EUT Placement, Frequency of Operation < 1 GHz

2.3.3.2 EUT Placement, Frequency of Operation > 1 GHz

2.4 MIMO Average Radiated SIR Sensitivity (MARSS)

2.4.1 Introduction

2.4.2 eNodeB Emulator Configuration

2.4.3 Channel Model Definition

2.4.4 Channel Model Emulation of the Base Station Antenna Pattern

2.4.5 Signal to Interference Ratio (SIR) Control for MARSS Measurement

2.4.5.1 SIR Control for the MPAC Test Environment

2.4.5.2 SIR Validation within the MPAC Test Zone

2.5 MPAC MIMO OTA Test Requirements

2.5.1 Introduction

2.5.2 Throughput Calculation

2.5.3 MIMO OTA Test Frequencies

2.6 MPAC MIMO OTA Test Methodology

2.6.1 Introduction

2.6.2 SIR-Controlled Test Procedure Using the MPAC

2.6.3 MARSS Figure of Merit

Section 3 Transmit Diversity Receiver Performance Evaluation

Section 4 Implementation Conformance Statements Applicable to MIMO and Transmit Diversity OTA Performance Measurement

Section 5 Measurement Uncertainty

5.1 Introduction

5.2 Common Uncertainty Contributions due to Mismatch

5.3 Common Uncertainty Contributions for a Receiving Device

5.4 Common Uncertainty Contributions for a Signal Source

5.5 Common Uncertainty Contributions for Measurement/Probe Antennas

5.5.1 Substitution Components

5.6 Common Uncertainty Contributions for Measurement Setup

5.6.1 Offset of the Phase Center of the Calibrated Reference Antenna from Axis(es) of Rotation

5.6.2 Influence of the Ambient Temperature on the Test Equipment

5.6.3 Miscellaneous Uncertainty

5.6.4 Minimum Downlink/Interference Power Step Size

5.7 Typical Uncertainty Contributions for External Amplifier

5.7.1 Gain

5.7.2 Mismatch

5.7.3 Stability

5.7.4 Linearity

5.7.5 Amplifier Noise Figure/Noise Floor

5.8 Common Uncertainty Contributions for EUT

5.8.1 Measurement Setup Repeatability

5.8.2 Effect of Ripple on EUT Measurement

5.9 Typical Uncertainty Contributions for Reference Measurement

5.9.1 Effect of Ripple on Range Reference Measurements

5.10 Path Loss Measurement Uncertainty

5.10.1 Substitution Components

5.11 Summary of Common Uncertainty Contributions for MIMO Receiver Performance

5.12 Combined and Expanded Uncertainties for Overall MIMO Receiver Performance

5.12.1 Compliance Criteria for the Overall MIMO Receiver Performance Uncertainty

5.13 Combined and Expanded Uncertainties and Compliance Criteria for the Transmit Diversity Receiver Performance

Appendix A—Validation and Verification of Test Environments and Test Conditions (Normative)

A.1 Measurement Instrument Overview

A.1.1 Measurement Instruments and Setup

A.1.2 Network Analyzer (VNA) Setup

A.1.3 Spectrum Analyzer (SA) Setup

A.2 Validation of the MPAC MIMO OTA Test Environment and Test Conditions

A.2.1 Validation of SIR-Controlled MPAC Test Environment

A.2.1.1 Validation of MPAC Power Delay Profile (PDP)

A.2.1.2 Power Delay Profile Result Analysis

A.2.1.3 Measurement Antenna

A.2.1.4 Pass/Fail Criteria

A.2.2 Validation of Doppler/Temporal Correlation for MPAC

A.2.2.1 MPAC Doppler/Temporal Correlation Method of Measurement

A.2.2.2 MPAC Doppler/Temporal Correlation Measurement Antenna

A.2.3 Validation of MPAC Spatial Correlation

A.2.3.1 MPAC Spatial Correlation Method of Measurement

A.2.3.2 MPAC Spatial Correlation Measurement Results Analysis

A.2.3.3 MPAC Spatial Correlation Measurement Antenna

A.2.4 Validation of Cross-Polarization for MPAC

A.2.4.1 MPAC Cross Polarization Method of Measurement

A.2.4.2 MPAC Cross Polarization Measurement Procedure

A.2.4.3 MPAC Cross Polarization Expected Measurement Results

A.2.5 Input Phase Calibration Validation (Normative)

Appendix B — Validation of Transmit Diversity Receiver Performance Test Environments and Test Conditions

Appendix C — Reporting Test Results (Normative)

C.1 MARSS Radiated Measurement Data Format

C.2 Transmit Diversity Radiated Measurement Data Format

Appendix D— EUT Orientation Conditions (Normative)

D.1 Scope

D.2 Testing Environment Conditions

Appendix E — EUT Orientation Conditions (Informative)

E.1 Scope

E.2 Testing Environment Conditions

Appendix F — Test Zone Dimension Definitions for Optional Bands

Appendix G—Change History
