Multiple Object Tracking and Attentional Processing

CHRISTOPHER R. SEARS, University of Calgary

ZENON W. PYLYSHYN, Rutgers University

Abstract How are attentional priorities set when multiple

stimuli compete for access to the limited-capacity visual

attention system? According to Pylyshyn (1989) and Yantis

and Johnson (1990), a small number of visual objects can be

preattentively indexed or tagged and thereby accessed more

rapidly by a subsequent attentional process (e.g., the tradi-

tional "spotlight of attention"). In the present study, we

used the multiple object tracking methodology of Pylyshyn

and Storm (1988) to investigate the relation between what

we call "visual indexing" and attentional processing. Partici-

pants visually tracked a subset of a set of identical, independ-

ently randomly moving objects in a display (the targets), and

made a speeded identification response when they noticed a

target or a nontarget (distractor) object undergo a subtle

form transformation. We found that target form changes

were identified more rapidly than nontarget form changes,

and that the speed of responding to target form changes was

unaffected by the number of nontargets in the display when

the form-changing targets were successfully tracked. We also

found that this enhanced processing only applied to the

targets themselves and not to nearby nontarget distractors,

showing that the allocation of a broadened region of visual

attention (as in the zoom-lens model of attentional alloca-

tion) could not account for these findings. These results

confirm that visual indexing bestows a processing priority on

a number of objects in the visual field.

It is widely accepted that visual attention can be shifted from

one location to another independently of eye movements,

and that the processing of stimuli appearing at attended

locations is enhanced. The methodological paradigm that

produced much of the evidence for this is the attentional

cueing procedure (Posner, Snyder, & Davidson, 1980). In a

cueing experiment, visual attention is shifted to a predeter-

mined location either endogenously, in which the shift is

under the volition of the observer, or exogenously, in which

the shift is involuntarily elicited by a highly salient cue. A

substantial number of studies have demonstrated that the

speed and accuracy of processing at cued locations are superior

to those at uncued locations. For example, Downing

(1988) found that perceptual sensitivity was enhanced at the

location of a cue, and concluded that focused attention

serves to facilitate visual information processing.

Previous research suggests that, whether controlled

endogenously or exogenously, there can be only one focus

of attention at any one time. Posner, Snyder, and Davidson

(1980) provided evidence that visual attention is allocated to

single contiguous regions of the visual field, enhancing the

processing of stimuli falling within the single contiguous

"spotlight." Eriksen and St. James (1986) subsequently

showed that this enhanced processing falls off

monotonically as one moves out from the locus of visual

attention, and that the resolution of the spotlight varies

inversely with the size of the region encompassed (the

"zoom-lens" model). Many investigators have concluded that

the spotlight is the primary processing bottleneck of the

attentional system, as only stimuli falling within this region

undergo extensive perceptual analysis (e.g., Eriksen &

Hoffman, 1974; Yantis & Johnston, 1990), and only one

such region can be attended to at any one time (Eriksen &

Yeh, 1985). There is also evidence that focal attention may

be directed at objects in the field of view rather than at

spatial regions (Baylis & Driver, 1993) and that the objects

selected in this way continue to be identified as the same

objects as they move about (Kahneman & Treisman, 1992;

Pylyshyn, 1989).

Although the evidence for the unitary nature of focal

attention is somewhat contentious (e.g., Castiello & Umiltà,

1990; Juola, Bouwhuis, Cooper, & Warner, 1991; Pashler,

1998), there do seem to be severe limitations on the alloca-

tion of focal attention. Thus, when multiple stimuli compete

for attention, there must be some mechanism that prioritizes

the stimuli in some way so that stimulus selection can

proceed in an efficient manner. Consequently, an under-

standing of the means by which attentional priorities are

assigned is of some importance.

Yantis and Johnson (1990) sought to determine how

attentional priorities are set under conditions in which

attention is allocated in a stimulus-driven manner. They had

Canadian Journal of Experimental Psychology, 2000, 54:1, 1-14

participants search for a target letter in static multielement

displays composed of multiple abrupt onset and no-onset

items. Abrupt onset and no-onset items are distinguished by

their attentional saliency: No-onset items are presented by

removing camouflaging line segments from an existing

object in the display until the target is revealed, whereas

onset items abruptly appear in previously empty locations

of the display. In previous studies, Yantis and his colleagues

(Jonides & Yantis, 1988; Yantis & Jones, 1991; Yantis &

Jonides, 1984) found that single abrupt onsets automatically

capture attention, and that abrupt onset items are processed

before other no-onset items in a display. Yantis and Johnson

(1990) found that when multiple abrupt onsets are present,

a limited number of them (approximately four) can be

processed before other no-onset items. They proposed that

attentional priorities are set by means of attentional "tags"

that are bound to the representations of highly salient

objects (such as abrupt onsets), and that a limited number of

abrupt onsets can be attentionally tagged and then given

priority access to focused attention.

Like Yantis' priority tag model, Pylyshyn's (1989) FINST

model of visual indexing provides a means for setting

attentional priorities among multiple stimuli. In the FINST

model, a limited number of objects can be preattentively and

simultaneously indexed independently of their retinal

locations or identities (FINST is an acronym for Fingers of

iNSTantiation, a reference to the idea that these indexes

point to objects and provide a way to bind objects to

internal symbolic arguments). Pylyshyn (1989) suggested

that a primary function of visual indexing is to individuate

a small number of objects so that they may be directly

accessed and subjected to focused attentional processing.

Indexing provides direct access to the objects, so that once

an object is indexed it is not necessary to use attentional

scanning to find that object (Pylyshyn et al., 1994). In

contrast, in order to selectively attend to a nonindexed

object, its position must first be ascertained through

attentional scanning (e.g., Treisman & Gelade, 1980; Tsal &

Lavie, 1993). Visual indexing thus provides a means of

setting attentional priorities when multiple stimuli compete

for attention, as indexed objects can be accessed and at-

tended before other objects in the visual field.

In the FINST model, visual indexes are assigned primarily

in a stimulus-driven manner, so that salient feature charac-

teristics or changes are automatically indexed. Typical

stimulus events that would be indexed roughly correspond

to stimuli that automatically attract focal attention, such as

peripheral cues (Posner & Cohen, 1984), abrupt visual

events such as onset stimuli (Yantis & Jonides, 1984), and

"pop-out" visual features (e.g., a red circle embedded in a

display of black circles; Treisman & Gelade, 1980).

Burkell and Pylyshyn (1997) have provided more direct

evidence of the ability of several simultaneous onsets to

attract indexes which could then be used to access and

examine the indexed items. They used Treisman-type visual

search tasks to study the selection of search subsets by onset

cues, and they appealed to the fact that visual search behav-

iour depends on the nature of the set through which one has

to search. In the original visual search studies, Treisman and

Gelade (1980) showed that search targets that differed from

each nontarget in the search set by a single feature (called the

"single-feature" search condition) were easily recognized and

the time it took to recognize them was very nearly inde-

pendent of the number of nontargets in the search set. In

contrast, displays in which each nontarget shared some

feature with the target, so that only a combination of two

features defined the target (called the "conjunction-feature"

search condition), resulted in generally slower searches as

well as reaction times that increased substantially with

increasing numbers of nontargets in the display. Burkell and

Pylyshyn used displays which were of the "conjunction

search" type. However, they selected subsets through which

participants were required to search, and the subsets could

constitute either a single-feature or a conjunction-feature

search.

The subsets were selected as follows. All members of the

search set were precued by "X" place holders, but a subset of

variable size that was to contain the target was cued by

placeholders that occurred about one second later than the

other placeholders, and about 100 ms before the search

began. The precued subset itself could constitute either a

single-feature or a conjunction-feature search task (i.e., the

target could be distinguished from members of the subset by

one feature or only by a conjunction of two features).

Burkell and Pylyshyn found that a subset precued by these

late onset cues could, for the purposes of speeded visual

search, be separated from the rest of the display and treated

as though they were the only items present. Burkell and

Pylyshyn showed, for example, that only the single-feature

versus conjunction-feature property of the subset was

relevant to the pattern of search reaction times (the overall

display always provided a conjunction-search condition

because it contained items with all combinations of proper-

ties). Moreover, they found that increasing the relative

distance between search targets did not increase the search

time, showing that the indexed targets could be accessed

without having to find them first by scanning the display.

Visual indexes point to features or objects, not to the

locations that these stimuli occupy. Like the object files of

Kahneman, Treisman, and Gibbs (1992), visual indexes are

object-centred and continue to reference objects despite

changes in their location. According to the visual indexing

model, a visual index automatically individuates and tracks

moving objects. Because there are a small number (around

four or five) of such indexes, observers can track around

four or five independently moving distinct visual objects in

parallel. In an empirical test of this hypothesis, Pylyshyn

and Storm (1988) had participants visually track a

prespecified subset of a larger number of identical, randomly

moving objects in a display. The members of the subset to

be tracked (the targets) were identified by briefly flashing

them several times, prior to the onset of movement. Accord-

ing to the model, targets designated in this fashion are

automatically indexed. During the tracking task, the targets

were indistinguishable from the other distractor objects,

which made the historical continuity of each target's motion

the only clue to its identity. Participants tracked the target

objects for 5 to 10 seconds, after which either a target or a

distractor was probed by superimposing a bright square over

it. The participants' task was to determine whether the

probed object was a target or a distractor. According to

Pylyshyn and Storm (1988), the indexing of the target

objects would allow each of them to be simultaneously

tracked and identified throughout the motion phase of the

experiment, despite the fact that the targets were perceptu-

ally indistinguishable from the distractors.

Pylyshyn and Storm (1988) found that performance in

this multiple object tracking task was extremely high for

subsets of up to five elements — in fact, participants could

simultaneously track up to five target objects at an accuracy

approaching 90%. McKeever and Pylyshyn (1993), Yantis

(1992), Scholl and Pylyshyn (1999), Viswanathan and

Mingolla (1989), Cavanagh (1999), and Culham et al. (1998)

have all reported similar results. Moreover, using a simula-

tion of the task, analyses by Pylyshyn and Storm (1988) and

McKeever and Pylyshyn (1993) indicate that a single

spotlight of focused attention moving rapidly among the

target objects and updating a record of their locations could

not produce this level of tracking performance in the setup

used in their study. In the Pylyshyn and Storm (1988)

simulations, for example, tracking performance was no

higher than 50% based on extremely conservative assump-

tions. One such assumption was an attentional scan velocity

as high as 250 degrees per second (the highest estimated scan

velocity in the previous literature). Another assumption was

that participants stored (with zero encoding time) the

predicted locations based on the direction and speed of the

targets' motion, and that they used a guessing strategy when

they were uncertain. These results suggest that it is not

possible for the task to be performed at the observed level of

accuracy without some parallel tracking of the target

objects. Pylyshyn and Storm (1988) concluded that their

results confirmed a key prediction of the visual indexing

model, namely, that a small number of objects can be

indexed and that the indexes are used to keep track of them

in parallel without attentional scanning and without

encoding their locations.

We should note that Yantis (1992) has added an addi-

tional mechanism to explain how multiple target objects are

tracked in this task. Yantis (1992) argued that participants

spontaneously group the targets together to form a virtual

polygon, whose vertices correspond to the continually

changing positions of the targets, and that it is this single

"object" that is tracked throughout the trial. While it may

be that observers conceptually group elements into a

polygon, it is still the case that the individual targets them-

selves must be tracked in order to keep track of the location

of the vertices of such a virtual polygon. Consequently, we

do not consider Yantis's (1992) account to be incompatible

with Pylyshyn and Storm's (1988) analysis. Indeed, we have

offered a closely related proposal for what we call an "error

recovery" stage of the tracking process, wherein a polygon-

like representation of the relative location of targets is

maintained and referred to when the loss of a target is

detected (McKeever & Pylyshyn, 1993; Pylyshyn et al.,

1994).

The purpose of the present investigation was to explore

the relation between visual indexing and attentional process-

ing in the context of the multiple-object tracking paradigm.

According to Pylyshyn (1989), visual indexing provides a

means of keeping track of a number of objects, in the sense

of providing a means for querying them, without first

having to ascertain their positions through attentional

scanning. We assume that, in order to focus unitary atten-

tion on an object, one must first find the object and move

focal attention to it. However, if the object has already been

indexed, focal attention can be allocated to it directly,

without prior search. Consequently, shifting attention to

indexed objects should be faster than shifting attention to

nonindexed objects. Because of this we expect that in a task

requiring focal attention, a response to an indexed object

will generally occur before a response to other objects in the

visual field.

This hypothesis was investigated in Experiment 1.

Participants tracked a set of target objects and made a

speeded identification response when they detected that a

target or a distractor object underwent a subtle form

transformation. According to our hypothesis, targets are

indexed during the tracking task, and therefore unitary focal

attention can be moved directly to them. If focal attention

is shifted to a target, either on some regular basis or when

some global change-detector reports a change, then any

change at that target is more readily recognized. Thus, if

targets undergoing changes are found to be more rapidly

identified, this would support our hypothesis that visual

indexing facilitates attentional processing.

Experiment 1

METHOD

Participants. Seventeen individuals participated in this

experiment. All had normal or corrected-to-normal vision,

and were paid $15 for their participation.

Apparatus and stimuli. Stimuli were presented on a 19-inch

Sun MicroSystems monochrome monitor with a resolution

of 1,056 by 900 pixels. A Sun MicroSystems 3/50 microcom-

puter controlled the stimulus presentation and randomiza-

tion of trials. Response times were collected with a three-

button mouse and a dedicated Zytec timing board (Danzig,

1988) which provided response latencies accurate to one

millisecond.

Stimuli consisted of rectangular, seven-segment box figure

eights (8), and the capital letters E and H. The E and H

characters were created by removing lines from the figure

eights. The line segments used to construct these stimuli

were a single pixel in width. The E and H figures were used

because of their high degree of similarity to the figure eight

character.

All the stimuli were created as pixel-drawn "objects" in

video memory that could be moved across the screen

without being continually recreated. Each object subtended

a visual angle of 0.84° in height and 0.63° in width from a

viewing distance of 50 cm. Stimuli were white and were

presented on a dark background.
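
As a rough check on the stimulus dimensions, the standard visual-angle relation, size = 2d tan(θ/2), gives the physical extent implied by the reported angles at the 50 cm viewing distance. This is only a sketch of the arithmetic; the function name is illustrative and does not come from the original study.

```python
import math

def extent_cm(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

height_cm = extent_cm(0.84, 50.0)  # reported object height
width_cm = extent_cm(0.63, 50.0)   # reported object width
print(f"{height_cm:.2f} cm x {width_cm:.2f} cm")  # 0.73 cm x 0.55 cm
```

Under these figures each object was roughly 7 mm tall and 5.5 mm wide on the screen.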

The animation of the objects in the display was controlled

by an algorithm which simulated Brownian motion,

creating a random, independent, and continuous pattern of

movement for each object. Motion sequences were generated

in real-time during each trial and all the participants re-

ported the perception of smooth and continuous motion

during the practice trials. Each object was surrounded by an

invisible circular barrier which ensured that no two objects

could collide or superimpose themselves over one another.

Each object moved at a randomly determined velocity of

between 4° and 8° per second. Once determined, the

velocity of each object did not vary throughout a trial. A

wall repulsion force retained all the objects within a 15° by

15° area by bouncing them off these invisible borders.

Although the total number of target and distractor objects

displayed varied according to the trial type, the movement

and velocity of these objects did not vary as a function of

the number of targets and distractors present in a given trial.

This was accomplished by having the maximum number of

objects (16) moving in the display on every trial, but making

only a subset of them visible for the particular condition

concerned. This technique eliminated any relation between

the density of objects in the display and the freedom of their

movement.
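
The constraints described above can be illustrated with a minimal sketch. The original algorithm is not given in the text, so a random walk on each object's heading stands in for the Brownian-motion routine, and the frame duration, heading jitter, and barrier size are assumed values chosen only for illustration. The 15° area, the fixed 4° to 8° per second speeds, and the use of all 16 objects on every trial come from the description.

```python
import math
import random

AREA_DEG = 15.0        # side of the square motion area (from the text)
N_OBJECTS = 16         # maximum number of objects, always simulated
MIN_SEP_DEG = 1.5      # assumed barrier size (not given in the text)
FRAME_S = 1.0 / 60.0   # assumed frame duration
JITTER_RAD = 0.3       # assumed per-frame heading perturbation (s.d.)

def init_objects(rng):
    """Place objects at random, rejecting positions that violate the barrier."""
    objs = []
    while len(objs) < N_OBJECTS:
        x, y = rng.uniform(0, AREA_DEG), rng.uniform(0, AREA_DEG)
        if all(math.hypot(x - o["x"], y - o["y"]) >= MIN_SEP_DEG for o in objs):
            objs.append({"x": x, "y": y,
                         "speed": rng.uniform(4.0, 8.0),  # fixed for the trial
                         "heading": rng.uniform(0, 2 * math.pi)})
    return objs

def step(objs, rng):
    """Advance every object by one frame."""
    for i, o in enumerate(objs):
        o["heading"] += rng.gauss(0.0, JITTER_RAD)
        nx = o["x"] + o["speed"] * FRAME_S * math.cos(o["heading"])
        ny = o["y"] + o["speed"] * FRAME_S * math.sin(o["heading"])
        # Bounce off the invisible borders by reflecting the heading.
        if not 0.0 <= nx <= AREA_DEG:
            o["heading"] = math.pi - o["heading"]
            nx = o["x"]
        if not 0.0 <= ny <= AREA_DEG:
            o["heading"] = -o["heading"]
            ny = o["y"]
        # Barrier: refuse a move that would bring two objects too close.
        blocked = any(j != i and math.hypot(nx - p["x"], ny - p["y"]) < MIN_SEP_DEG
                      for j, p in enumerate(objs))
        if blocked:
            o["heading"] += math.pi  # turn around instead of moving
        else:
            o["x"], o["y"] = nx, ny

rng = random.Random(0)
objs = init_objects(rng)
visible = objs[:3 + 4]   # e.g., 3 targets + 4 distractors drawn; all 16 still move
for _ in range(300):     # about 5 s at the assumed frame rate
    step(objs, rng)
```

Because all 16 objects are simulated regardless of how many are drawn, the motion of the visible objects is the same in every display-size condition, which is the point of the technique.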

Procedure. Participants were seated in a darkened room

approximately 50 cm from the display and used a chinrest to

reduce head movements and control viewing distance. A

three-button mouse was used to collect responses. Partici-

pants were given written instructions prior to the experi-

ment, which outlined the general procedure and emphasized

the importance of maintaining fixation throughout the

session. Each participant was then given a demonstration

and explanation of a trial sequence. They were instructed to

note the positions of the blinking target objects at the start

of each trial, because the task was to keep track of these

objects when they began moving. During the motion phase,

they were instructed to track the target objects without

moving their gaze from the fixation cross (eye position was

not monitored). At some point in the trial, one of the target

or distractor objects would transform into an E or an H, and

participants were to respond by pressing the E or H button

as quickly as possible when they had identified this form

change. Participants were informed that the target and

distractor objects were equally likely to undergo form

changes. Each participant completed 20 practice trials prior

to the experiment.

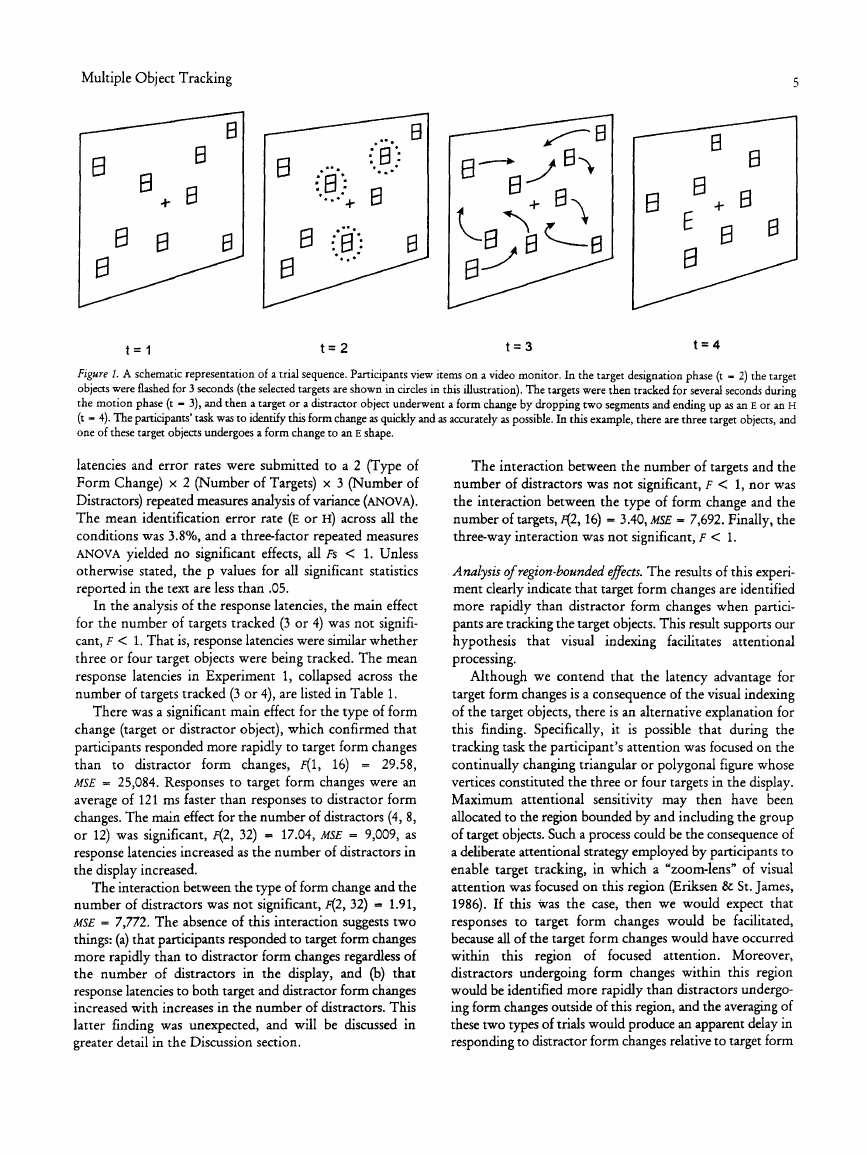

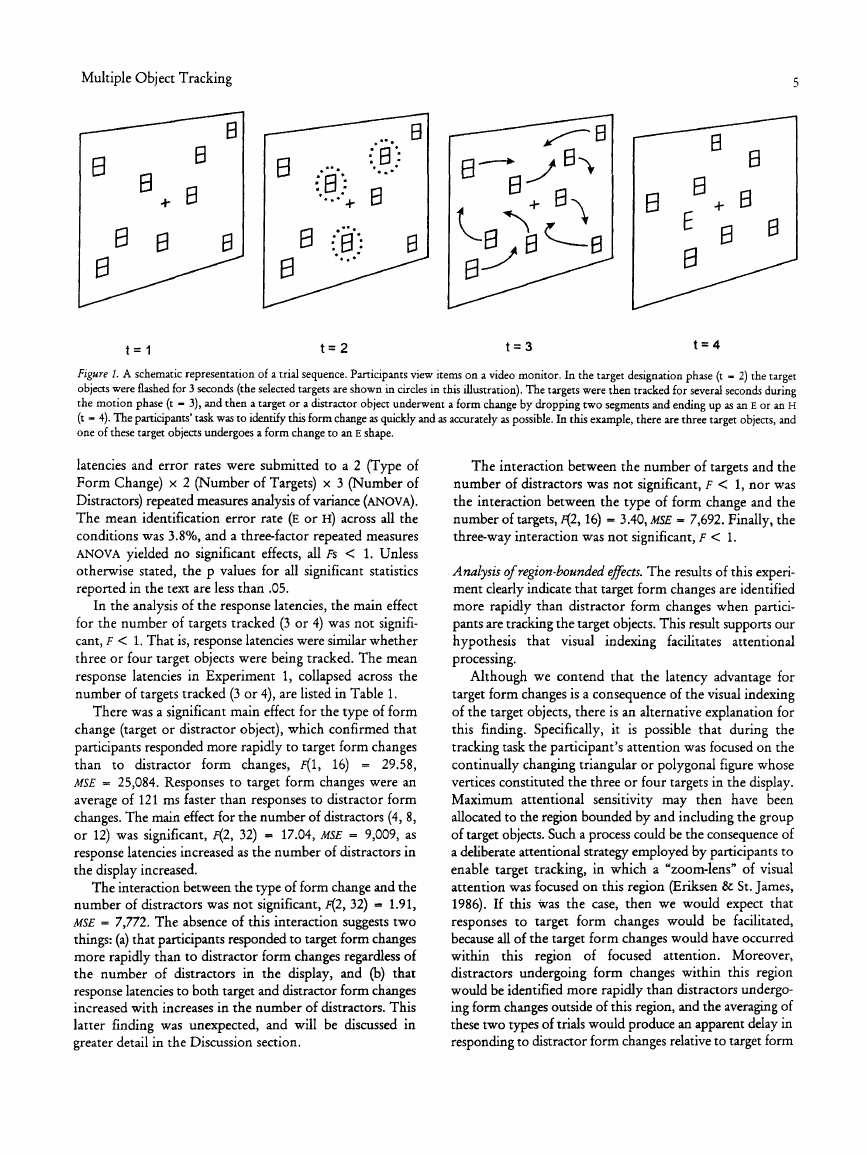

Figure 1 depicts a trial sequence. Each trial began with

the presentation of an isolated fixation cross for 2 s. Then,

depending upon the condition, from 7 to 16 figure-eight

objects appeared on the screen. The placement of the objects

was randomly determined on each trial, subject to the

constraint that none could overlap one another or their

invisible "barriers." Three or four of these objects then

began to blink on and off for 3 s, designating them as the

target objects to be tracked. The appearance of the remain-

ing objects (the distractors) did not change during this target

designation phase. All of the objects then began to move in

a random and continuous fashion about the screen (subject

to the previously mentioned constraints), and the partici-

pant attempted to simultaneously track each of the target

objects. The target and distractor objects were indistinguish-

able from one another during this tracking phase. Partici-

pants tracked the targets for a randomly determined interval

of between 5 and 9 s, after which either a target or a

distractor object was transformed into an E or an H. On 50%

of the trials, a target object underwent a form change, and

on the remaining 50% of the trials a distractor underwent a

form change. The remaining targets and distractors, as well

as the new letter in the display (E or H), maintained their

movement during the transformation and continued moving

until the participant responded. After a response had been

made, the screen was cleared and a new trial was initiated

following a 3-s inter-trial interval.

Each participant completed eight blocks of 45 trials. The

order of trials was randomized separately for each partici-

pant, and there was a five-minute rest period between each

block.

Design. There were three factors manipulated in this experi-

ment: the type of form change (target or distractor form

change), the number of targets tracked (3 or 4) and the

number of distractors present in the display (4, 8, or 12).

There were 30 trials in each of the 12 conditions, for a total

of 360 trials.
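
The factorial structure can be made concrete with a short sketch that builds one participant's trial list. The text does not say whether randomization was constrained within blocks, so the sketch assumes a single shuffle of all 360 trials that is then cut into eight blocks of 45. The function and constant names are illustrative.

```python
import itertools
import random

CHANGE_TYPES = ("target", "distractor")
N_TARGETS = (3, 4)
N_DISTRACTORS = (4, 8, 12)
TRIALS_PER_CELL = 30
BLOCK_SIZE = 45

def build_trials(seed):
    """Return one participant's randomized trial list, split into blocks."""
    cells = list(itertools.product(CHANGE_TYPES, N_TARGETS, N_DISTRACTORS))
    trials = [cell for cell in cells for _ in range(TRIALS_PER_CELL)]
    random.Random(seed).shuffle(trials)  # a separate order per participant
    return [trials[i:i + BLOCK_SIZE] for i in range(0, len(trials), BLOCK_SIZE)]

blocks = build_trials(seed=1)
print(len(blocks), sum(len(b) for b in blocks))  # 8 360
```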

RESULTS

Response latencies greater than three seconds were classified

as outliers and were removed from the dataset. This procedure

resulted in the removal of 3.6% of the data.

Figure 1. A schematic representation of a trial sequence. Participants view items on a video monitor. In the target designation phase (t = 2) the target objects were flashed for 3 seconds (the selected targets are shown in circles in this illustration). The targets were then tracked for several seconds during the motion phase (t = 3), and then a target or a distractor object underwent a form change by dropping two segments and ending up as an E or an H (t = 4). The participants' task was to identify this form change as quickly and as accurately as possible. In this example, there are three target objects, and one of these target objects undergoes a form change to an E shape.

Response latencies and error rates were submitted to a 2 (Type of

Form Change) x 2 (Number of Targets) x 3 (Number of

Distractors) repeated measures analysis of variance (ANOVA).

The mean identification error rate (E or H) across all the

conditions was 3.8%, and a three-factor repeated measures

ANOVA yielded no significant effects, all Fs < 1. Unless

otherwise stated, the p values for all significant statistics

reported in the text are less than .05.

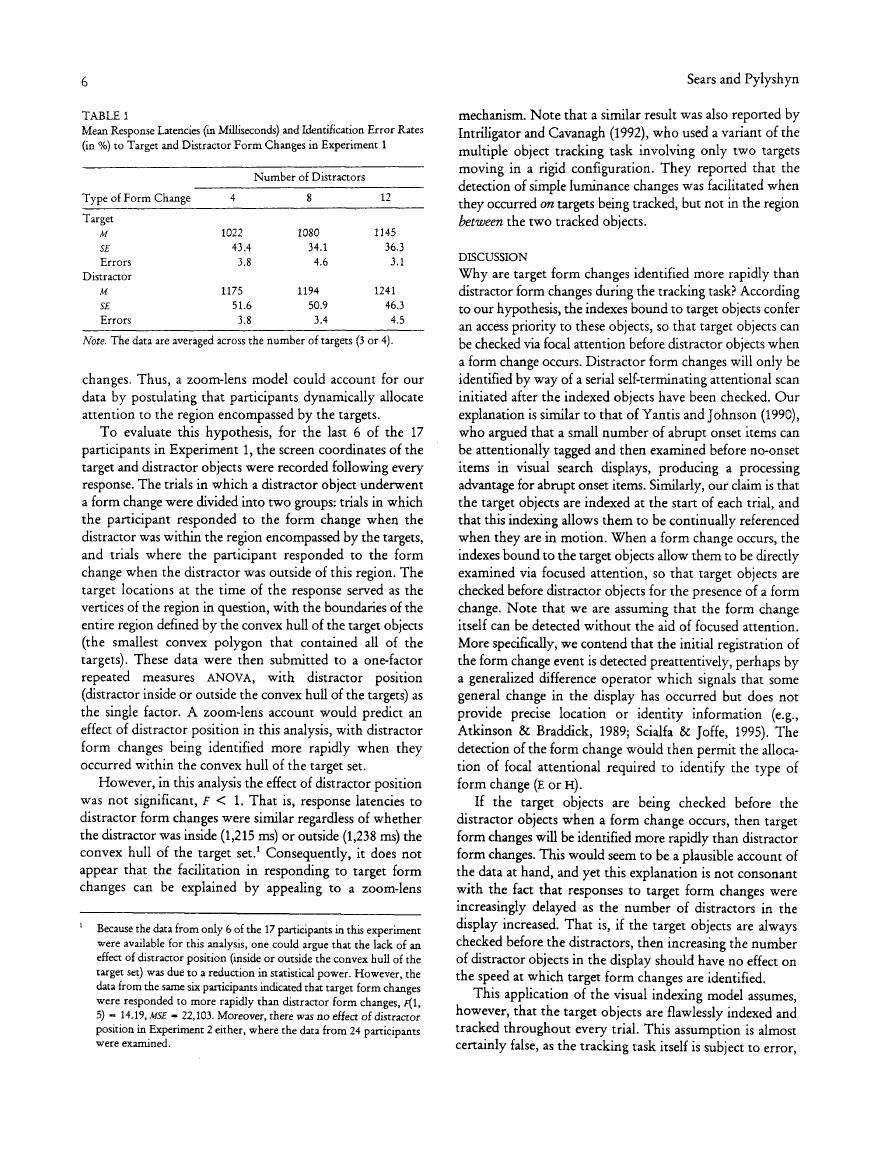

In the analysis of the response latencies, the main effect

for the number of targets tracked (3 or 4) was not signifi-

cant, F < 1. That is, response latencies were similar whether

three or four target objects were being tracked. The mean

response latencies in Experiment 1, collapsed across the

number of targets tracked (3 or 4), are listed in Table 1.

There was a significant main effect for the type of form

change (target or distractor object), which confirmed that

participants responded more rapidly to target form changes

than to distractor form changes, F(1, 16) = 29.58,

MSE = 25,084. Responses to target form changes were an

average of 121 ms faster than responses to distractor form

changes. The main effect for the number of distractors (4, 8,

or 12) was significant, F(2, 32) = 17.04, MSE = 9,009, as

response latencies increased as the number of distractors in

the display increased.
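
The 121 ms figure can be checked against the cell means in Table 1. The sketch below assumes that the table's three data columns correspond to 4, 8, and 12 distractors, in that order; the variable names are illustrative.

```python
# Mean latencies (ms) from Table 1, keyed by number of distractors.
target_rt = {4: 1022, 8: 1080, 12: 1145}
distractor_rt = {4: 1175, 8: 1194, 12: 1241}

# Target advantage at each display size, and its unweighted mean.
advantage = {n: distractor_rt[n] - target_rt[n] for n in target_rt}
mean_advantage = sum(advantage.values()) / len(advantage)
print(advantage)       # {4: 153, 8: 114, 12: 96}
print(mean_advantage)  # 121.0
```

The advantage shrinks numerically as distractors are added, although the interaction reported below was not significant.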

The interaction between the type of form change and the

number of distractors was not significant, F(2, 32) = 1.91,

MSE = 7,772. The absence of this interaction suggests two

things: (a) that participants responded to target form changes

more rapidly than to distractor form changes regardless of

the number of distractors in the display, and (b) that

response latencies to both target and distractor form changes

increased with increases in the number of distractors. This

latter finding was unexpected, and will be discussed in

greater detail in the Discussion section.

The interaction between the number of targets and the

number of distractors was not significant, F < 1, nor was

the interaction between the type of form change and the

number of targets, F(1, 16) = 3.40, MSE = 7,692. Finally, the

three-way interaction was not significant, F < 1.

Analysis of region-bounded effects. The results of this experi-

ment clearly indicate that target form changes are identified

more rapidly than distractor form changes when partici-

pants are tracking the target objects. This result supports our

hypothesis

that visual indexing facilitates attentional

processing.

Although we contend that the latency advantage for

target form changes is a consequence of the visual indexing

of the target objects, there is an alternative explanation for

this finding. Specifically, it is possible that during the

tracking task the participant's attention was focused on the

continually changing triangular or polygonal figure whose

vertices constituted the three or four targets in the display.

Maximum attentional sensitivity may then have been

allocated to the region bounded by and including the group

of target objects. Such a process could be the consequence of

a deliberate attentional strategy employed by participants to

enable target tracking, in which a "zoom-lens" of visual

attention was focused on this region (Eriksen & St. James,

1986). If this was the case, then we would expect that

responses to target form changes would be facilitated,

because all of the target form changes would have occurred

within

this region of focused attention. Moreover,

distractors undergoing form changes within this region

would be identified more rapidly than distractors undergo-

ing form changes outside of this region, and the averaging of

these two types of trials would produce an apparent delay in

responding to distractor form changes relative to target form

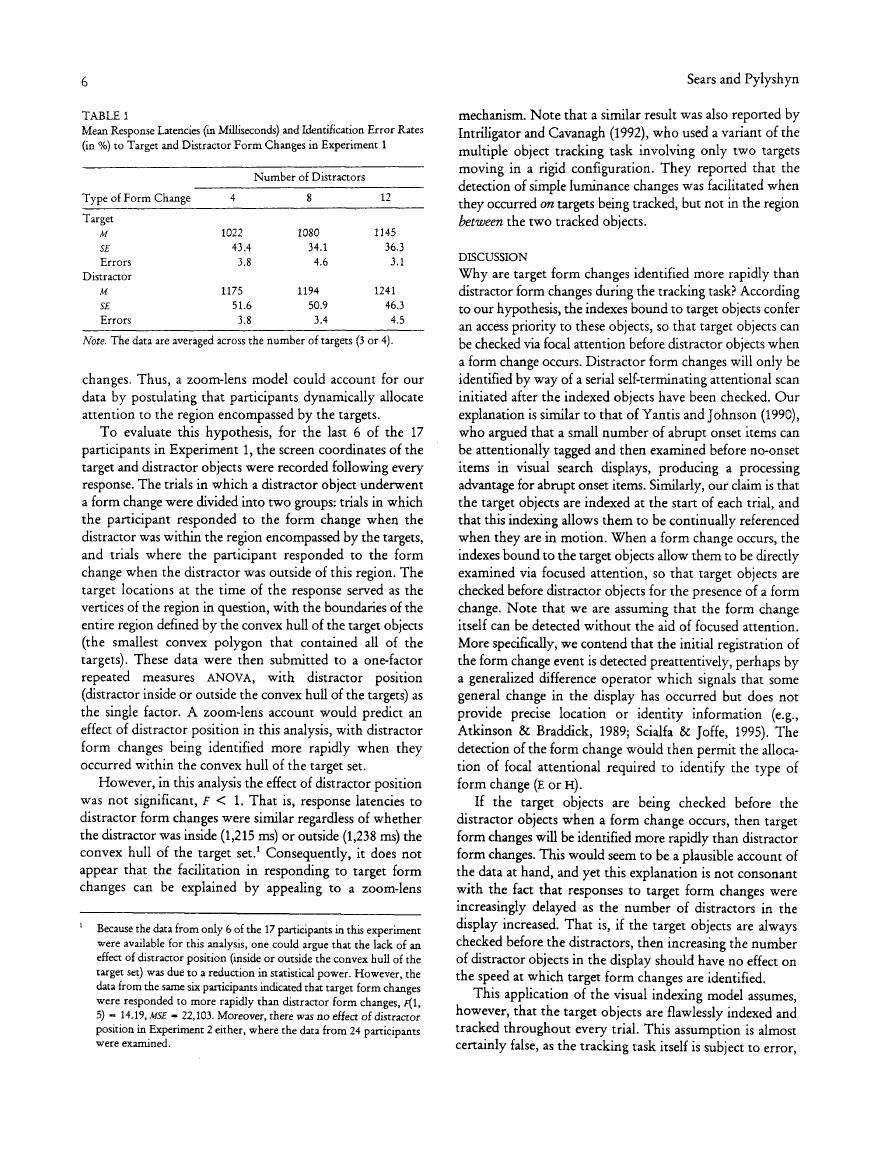

TABLE 1

Mean Response Latencies (in Milliseconds) and Identification Error Rates (in %) to Target and Distractor Form Changes in Experiment 1

| Type of Form Change | Measure | 4 Distractors | 8 Distractors | 12 Distractors |
|---|---|---|---|---|
| Target | M | 1022 | 1080 | 1145 |
| | SE | 43.4 | 34.1 | 36.3 |
| | Errors | 3.8 | 4.6 | 3.1 |
| Distractor | M | 1175 | 1194 | 1241 |
| | SE | 51.6 | 50.9 | 46.3 |
| | Errors | 3.8 | 3.4 | 4.5 |

Note. The data are averaged across the number of targets (3 or 4).

changes. Thus, a zoom-lens model could account for our

data by postulating that participants dynamically allocate

attention to the region encompassed by the targets.

To evaluate this hypothesis, for the last 6 of the 17

participants in Experiment 1, the screen coordinates of the

target and distractor objects were recorded following every

response. The trials in which a distractor object underwent

a form change were divided into two groups: trials in which

the participant responded to the form change when the

distractor was within the region encompassed by the targets,

and trials where the participant responded to the form

change when the distractor was outside of this region. The

target locations at the time of the response served as the

vertices of the region in question, with the boundaries of the

entire region defined by the convex hull of the target objects

(the smallest convex polygon that contained all of the

targets). These data were then submitted to a one-factor

repeated measures ANOVA, with distractor position

(distractor inside or outside the convex hull of the targets) as

the single factor. A zoom-lens account would predict an

effect of distractor position in this analysis, with distractor

form changes being identified more rapidly when they

occurred within the convex hull of the target set.
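
The inside/outside classification described above can be sketched as follows. This is not the authors' code: it assumes screen coordinates given as (x, y) pairs, uses Andrew's monotone chain to build the hull, and counts a distractor lying exactly on the hull boundary as inside, a choice the text does not specify.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def inside_hull(point, targets):
    """True if point lies inside or on the convex hull of the targets."""
    hull = convex_hull(targets)
    if len(hull) < 3:
        return False  # collinear targets enclose no area
    return all(cross(hull[i], hull[(i + 1) % len(hull)], point) >= 0
               for i in range(len(hull)))

# Hypothetical coordinates: four targets, one of which is interior to the hull.
targets = [(2, 2), (10, 3), (6, 11), (5, 5)]
print(inside_hull((6, 5), targets))    # True
print(inside_hull((12, 12), targets))  # False
```

With three targets the hull is always the triangle they form; with four it is either a quadrilateral or, as in the example, a triangle with the fourth target inside it.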

However, in this analysis the effect of distractor position

was not significant, F < 1. That is, response latencies to

distractor form changes were similar regardless of whether

the distractor was inside (1,215 ms) or outside (1,238 ms) the

convex hull of the target set.¹ Consequently, it does not

appear that the facilitation in responding to target form

changes can be explained by appealing to a zoom-lens

mechanism. Note that a similar result was also reported by

Intriligator and Cavanagh (1992), who used a variant of the

multiple object tracking task involving only two targets

moving in a rigid configuration. They reported that the

detection of simple luminance changes was facilitated when

they occurred on targets being tracked, but not in the region

between the two tracked objects.

¹ Because the data from only 6 of the 17 participants in this experiment were available for this analysis, one could argue that the lack of an effect of distractor position (inside or outside the convex hull of the target set) was due to a reduction in statistical power. However, the data from the same six participants indicated that target form changes were responded to more rapidly than distractor form changes, F(1, 5) = 14.19, MSE = 22,103. Moreover, there was no effect of distractor position in Experiment 2 either, where the data from 24 participants were examined.

DISCUSSION

Why are target form changes identified more rapidly than

distractor form changes during the tracking task? According

to our hypothesis, the indexes bound to target objects confer

an access priority to these objects, so that target objects can

be checked via focal attention before distractor objects when

a form change occurs. Distractor form changes will only be

identified by way of a serial self-terminating attentional scan

initiated after the indexed objects have been checked. Our

explanation is similar to that of Yantis and Johnson (1990),

who argued that a small number of abrupt onset items can

be attentionally tagged and then examined before no-onset

items in visual search displays, producing a processing

advantage for abrupt onset items. Similarly, our claim is that

the target objects are indexed at the start of each trial, and

that this indexing allows them to be continually referenced

when they are in motion. When a form change occurs, the

indexes bound to the target objects allow them to be directly

examined via focused attention, so that target objects are

checked before distractor objects for the presence of a form

change. Note that we are assuming that the form change

itself can be detected without the aid of focused attention.

More specifically, we contend that the form change event is

initially registered preattentively, perhaps by

a generalized difference operator which signals that some

general change in the display has occurred but does not

provide precise location or identity information (e.g.,

Atkinson & Braddick, 1989; Scialfa & Joffe, 1995). The

detection of the form change would then permit the allocation

of the focal attention required to identify the type of

form change (E or H).

If the target objects are being checked before the

distractor objects when a form change occurs, then target

form changes will be identified more rapidly than distractor

form changes. This would seem to be a plausible account of

the data at hand, and yet this explanation is not consonant

with the fact that responses to target form changes were

increasingly delayed as the number of distractors in the

display increased. That is, if the target objects are always

checked before the distractors, then increasing the number

of distractor objects in the display should have no effect on

the speed at which target form changes are identified.
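The prediction can be made concrete with a minimal sketch of the serial self-terminating scan, assuming that indexed targets are checked first in random order and that distractors are scanned only afterwards. The function name `expected_checks` and the use of "number of objects inspected" as a stand-in for response latency are illustrative assumptions, not part of the reported method; the display sizes are illustrative values.

```python
def expected_checks(n_targets: int, n_distractors: int, change_on_target: bool) -> float:
    """Expected number of objects inspected before the changed object is found.

    Assumption: indexed targets are inspected first, in random order, and
    distractors are scanned (also in random order) only after every target
    has been checked and rejected.
    """
    if change_on_target:
        # Self-terminating search restricted to the indexed targets.
        return (n_targets + 1) / 2
    # All targets are rejected first, then the distractors are scanned.
    return n_targets + (n_distractors + 1) / 2


if __name__ == "__main__":
    for n_distractors in (4, 8, 12):
        print(
            n_distractors,
            expected_checks(4, n_distractors, change_on_target=True),
            expected_checks(4, n_distractors, change_on_target=False),
        )
```

Under these assumptions the expected cost for a target change does not depend on the number of distractors, whereas the cost for a distractor change grows with it, which is why a display size effect for targets is unexpected under flawless indexing.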

This application of the visual indexing model assumes,

however, that the target objects are flawlessly indexed and

tracked throughout every trial. This assumption is almost

certainly false, as the tracking task itself is subject to error,

and failures of tracking do occur. It is probable that indexes

do not always remain permanently bound to the target

objects throughout the tracking task. Although we maintain

that indexing and tracking a small number of objects is

preattentive, maintaining those indexes over an extended

period of time requires some effort or reactivation to

prevent their decay or loss. A reasonable assumption is that

the probability of an index being lost or misplaced would

increase with the number and/or density of distractors in

the display, so that increases in the number of distractors

would lead to decreases in tracking performance. It is

known that the attentional resolution required for individu-

ating objects drops off rapidly with eccentricity (Intriligator,

1998), increasing the chances that when a target and a

distractor object pass close to one another in the periphery

of the display, the two objects may then momentarily fail to

be resolved. When the target and distractor subsequently

move away from each other, the index could be shifted to

the wrong object or lost altogether. If an index was shifted

to a distractor, the participant might then consider this

object a target, while the object that had been tracked might

then become a distractor.

If the index bound to a target object was lost or shifted,

and the same target subsequently underwent a form

change, then responses to this form change would be

delayed. In effect, this target would now be a distractor, and

responses to these nonindexed target form changes would be

slower than responses to indexed target form changes.

During the course of the experiment, a fair number of these

events may have occurred, increasing the average response

latencies to target form changes. More specifically, as the

number of distractors in the display increased, the probabil-

ity that an index would be lost or shifted to a distractor

would also have increased. Averaging the response latencies

to form-changing targets that were tracked with those that

were not tracked would create a spurious increase in

response latencies to target form changes with increases in

the number of distractors. In Experiment 2 we tested this

possibility.
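The averaging argument can be sketched as a simple two-component mixture. All numbers below are hypothetical and chosen only for illustration: the latency for indexed targets is held constant across display sizes, and only the assumed probability of losing an index changes.

```python
def mixture_mean_rt(p_lost: float, rt_indexed: float, rt_lost: float) -> float:
    """Mean latency when a proportion p_lost of target trials are untracked.

    Assumption: untracked targets are processed like distractors (rt_lost),
    while tracked targets keep a constant latency (rt_indexed).
    """
    if not 0.0 <= p_lost <= 1.0:
        raise ValueError("p_lost must be a probability")
    return (1.0 - p_lost) * rt_indexed + p_lost * rt_lost


if __name__ == "__main__":
    # Hypothetical loss probabilities for small, medium, and large displays.
    for n_distractors, p_lost in ((4, 0.05), (8, 0.15), (12, 0.25)):
        print(n_distractors, mixture_mean_rt(p_lost, rt_indexed=1400.0, rt_lost=1700.0))
```

Even though `rt_indexed` never changes in this sketch, the averaged latency rises with display size because the slower, untracked trials make up a larger share of the mean.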

Experiment 2

If imperfections in the process of indexing and tracking the

target objects were responsible for the display size effect

witnessed in Experiment 1, then such an effect should not

occur if the targets are perfectly tracked throughout every

trial. Although it is not possible to ensure that all the targets

are perfectly tracked, we have designed a procedure that

may, given certain assumptions, help us to determine

whether a particular target that underwent a form change

had in fact been correctly tracked on that particular trial. To

do this, we developed a dual-response procedure in which

we asked participants to indicate, on each trial, whether the

object that underwent a form change was a target or a

distractor. Thus, the participant was required to indicate the

identity of the changed object and also whether it was a

target or a distractor.

In this experiment, participants tracked four target

objects and made a speeded identification response to a form

change in the display (E or H), after which they made a two-

alternative forced choice categorization decision as to what

type of object underwent a form change (target or

distractor). Because participants had to indicate what type of

object underwent a form change on each trial, the trials

where participants had lost a form-changing target could be

distinguished from the trials where a form-changing target

was accurately tracked. If failures in tracking performance

produced the display size effect for target form changes in

Experiment 1, then excluding from the RT analyses those

trials where participants had lost a form-changing target

should greatly attenuate and perhaps even eliminate this

display size effect.
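The exclusion logic can be illustrated with a minimal sketch. The `Trial` record and its field names are hypothetical, introduced here only to show how target trials with a categorization error would be dropped before latencies are averaged by display size.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    n_distractors: int       # display size condition
    changed_is_target: bool  # ground truth: a target underwent the form change
    said_target: bool        # participant's categorization response
    rt_ms: float             # identification latency in milliseconds


def mean_target_rt_by_display(trials, tracked_only: bool) -> dict:
    """Mean latency to target form changes for each display size.

    With tracked_only=True, target trials categorized as distractor changes
    (taken here as evidence that the target was lost) are excluded.
    """
    by_size = defaultdict(list)
    for trial in trials:
        if not trial.changed_is_target:
            continue
        if tracked_only and not trial.said_target:
            continue
        by_size[trial.n_distractors].append(trial.rt_ms)
    return {size: mean(rts) for size, rts in sorted(by_size.items())}
```

Comparing the output for `tracked_only=False` and `tracked_only=True` corresponds to comparing the analysis that ignores categorization accuracy with the one restricted to trials on which the form-changing target was presumably tracked.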

Of course, the success of this method depends on partici-

pants being able to categorize the changed objects as ones

they had or had not tracked. Because there will be some

trials on which participants may not be certain as to

whether the object that underwent a form change was a

tracked object, their category assignment may contain both

errors of omission and errors of commission. Such errors

may occur for several reasons. For example, a target may be

lost because the index simply becomes dissociated from it —

in which case the forced-choice categorization response may

merely reflect a guessing strategy. On the other hand, if the

target is lost because the index shifts to a nearby distractor,

the participant may unknowingly take the newly indexed

object to be a target or might even be aware of the shift and

exercise some wariness. Clearly, our ability to isolate those

trials in which the participant correctly tracked a form-

changing target will not be without some error. But so long

as the errors are relatively infrequent and the bias in the use

of the target versus distractor categories remains relatively

fixed over the conditions of the experiment, this method

may provide a useful way of restricting the trials used to

compute the RT measure to those in which the participant has

actually tracked the form-changing target.

In summary, we can state our specific predictions as

follows: (a) If participants are more likely to lose target

objects as the number of distractors in the display increases,

then target categorization errors (incorrectly attributing

target form changes to distractors) should increase with

increases in the number of distractors. (b) Response latencies

to form-changing targets that are no longer tracked (and

thus not indexed) should be slower than those for accurately

tracked targets. (c) When the accuracy of the participants'

categorization decisions (target or distractor form change) is

ignored, response latencies to both target and distractor

form changes should increase with increases in the number

of distractors in the display (as in Experiment 1). (d) Exclud-

ing those trials where participants had lost a form-changing

target should greatly attenuate the effect of display size on

identification RT.

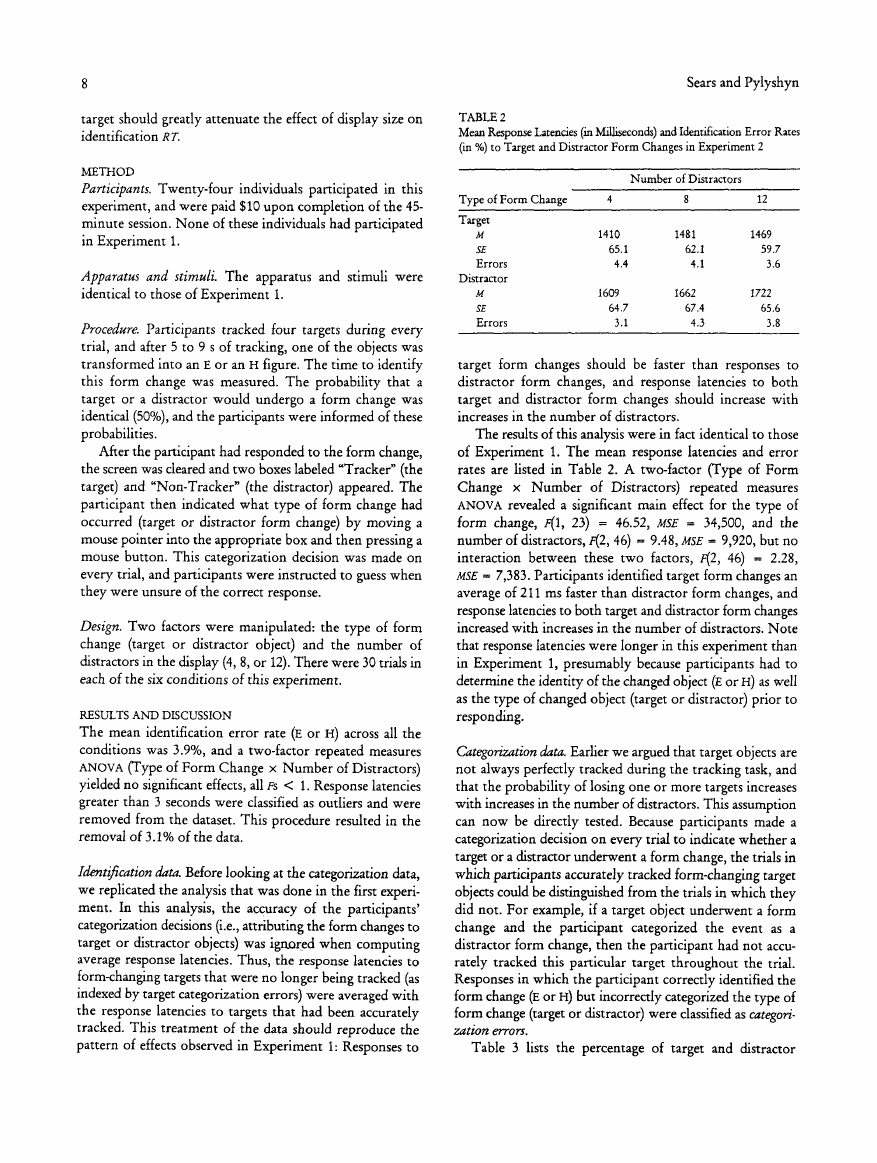

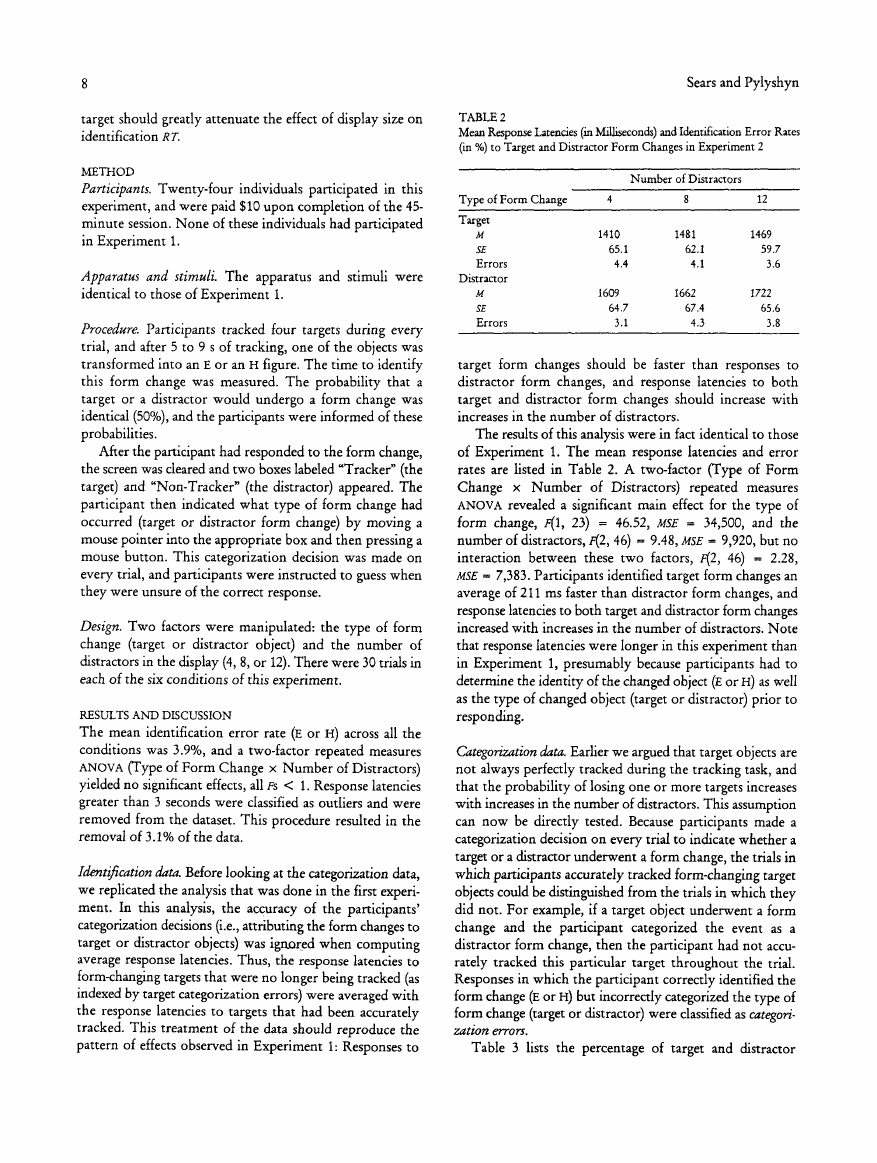

TABLE 2

Mean Response Latencies (in Milliseconds) and Identification Error Rates

(in %) to Target and Distractor Form Changes in Experiment 2

METHOD

Participants. Twenty-four individuals participated in this

experiment, and were paid $10 upon completion of the 45-

minute session. None of these individuals had participated

in Experiment 1.

Apparatus and stimuli. The apparatus and stimuli were

identical to those of Experiment 1.

Procedure. Participants tracked four targets during every

trial, and after 5 to 9 s of tracking, one of the objects was

transformed into an E or an H figure. The time to identify

this form change was measured. The probability that a

target or a distractor would undergo a form change was

identical (50%), and the participants were informed of these

probabilities.

After the participant had responded to the form change,

the screen was cleared and two boxes labeled "Tracker" (the

target) and "Non-Tracker" (the distractor) appeared. The

participant then indicated what type of form change had

occurred (target or distractor form change) by moving a

mouse pointer into the appropriate box and then pressing a

mouse button. This categorization decision was made on

every trial, and participants were instructed to guess when

they were unsure of the correct response.

Design. Two factors were manipulated: the type of form

change (target or distractor object) and the number of

distractors in the display (4, 8, or 12). There were 30 trials in

each of the six conditions of this experiment.

RESULTS AND DISCUSSION

The mean identification error rate (E or H) across all the

conditions was 3.9%, and a two-factor repeated measures

ANOVA (Type of Form Change x Number of Distractors)

yielded no significant effects, all Fs < 1. Response latencies

greater than 3 seconds were classified as outliers and were

removed from the dataset. This procedure resulted in the

removal of 3.1% of the data.
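The trimming rule can be sketched as follows. The function name `trim_outliers` and the millisecond units are assumptions made for illustration; only the 3-second cutoff comes from the text.

```python
def trim_outliers(rts_ms, cutoff_ms: float = 3000.0):
    """Drop latencies greater than the cutoff and report the percentage removed."""
    if not rts_ms:
        raise ValueError("no response latencies supplied")
    # Latencies exactly at the cutoff are kept; only those greater are removed.
    kept = [rt for rt in rts_ms if rt <= cutoff_ms]
    pct_removed = 100.0 * (len(rts_ms) - len(kept)) / len(rts_ms)
    return kept, pct_removed
```

Applied to a full set of latencies, the second return value corresponds to the kind of figure reported above as the percentage of data removed.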

Identification data. Before looking at the categorization data,

we replicated the analysis that was done in the first experi-

ment. In this analysis, the accuracy of the participants'

categorization decisions (i.e., attributing the form changes to

target or distractor objects) was ignored when computing

average response latencies. Thus, the response latencies to

form-changing targets that were no longer being tracked (as

indexed by target categorization errors) were averaged with

the response latencies to targets that had been accurately

tracked. This treatment of the data should reproduce the

pattern of effects observed in Experiment 1: Responses to

target form changes should be faster than responses to

distractor form changes, and response latencies to both

target and distractor form changes should increase with

increases in the number of distractors.

| Type of Form Change | Measure | 4 Distractors | 8 Distractors | 12 Distractors |
|---|---|---|---|---|
| Target | M (ms) | 1410 | 1481 | 1469 |
| Target | SE | 65.1 | 62.1 | 59.7 |
| Target | Errors (%) | 4.4 | 4.1 | 3.6 |
| Distractor | M (ms) | 1609 | 1662 | 1722 |
| Distractor | SE | 64.7 | 67.4 | 65.6 |
| Distractor | Errors (%) | 3.1 | 4.3 | 3.8 |

The results of this analysis reproduced the pattern observed

in Experiment 1. The mean response latencies and error

rates are listed in Table 2. A two-factor (Type of Form

Change x Number of Distractors) repeated measures

ANOVA revealed a significant main effect for the type of

form change, F(1, 23) = 46.52, MSE = 34,500, and the

number of distractors, F(2, 46) = 9.48, MSE = 9,920, but no

interaction between these two factors, F(2, 46) = 2.28,

MSE = 7,383. Participants identified target form changes an

average of 211 ms faster than distractor form changes, and

response latencies to both target and distractor form changes

increased with increases in the number of distractors. Note

that response latencies were longer in this experiment than

in Experiment 1, presumably because participants had to

determine the identity of the changed object (E or H) as well

as the type of changed object (target or distractor) prior to

responding.
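The 211 ms target advantage can be checked against the cell means in Table 2. The sketch below assumes the reported figure is the unweighted average across the three display sizes, which seems reasonable given the equal number of trials per condition; the function name `mean_advantage` is illustrative.

```python
from statistics import mean

# Mean response latencies (ms) from Table 2, keyed by number of distractors.
TARGET_MEANS = {4: 1410, 8: 1481, 12: 1469}
DISTRACTOR_MEANS = {4: 1609, 8: 1662, 12: 1722}


def mean_advantage(target_means: dict, distractor_means: dict) -> float:
    """Unweighted difference between distractor and target mean latencies."""
    return mean(list(distractor_means.values())) - mean(list(target_means.values()))


if __name__ == "__main__":
    print(round(mean_advantage(TARGET_MEANS, DISTRACTOR_MEANS)))  # 211
```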

Categorization data. Earlier we argued that target objects are

not always perfectly tracked during the tracking task, and

that the probability of losing one or more targets increases

with increases in the number of distractors. This assumption

can now be directly tested. Because participants made a

categorization decision on every trial to indicate whether a

target or a distractor underwent a form change, the trials in

which participants accurately tracked form-changing target

objects could be distinguished from the trials in which they

did not. For example, if a target object underwent a form

change and the participant categorized the event as a

distractor form change, then the participant had not accu-

rately tracked this particular target throughout the trial.

Responses in which the participant correctly identified the

form change (E or H) but incorrectly categorized the type of

form change (target or distractor) were classified as categori-

zation errors.

Table 3 lists the percentage of target and distractor
