1/41

Shumeet Baluja

June 2, 1994

CMU-CS-94-163

School of Computer Science

Carnegie Mellon University

Pittsburgh, Pennsylvania 15213

Genetic algorithms (GAs) are biologically motivated adaptive systems which have been used,

with varying degrees of success, for function optimization. In this study, an abstraction of the

basic genetic algorithm, the Equilibrium Genetic Algorithm (EGA), and the GA in turn, are

reconsidered within the framework of competitive learning. This new perspective reveals a

number of different possibilities for performance improvements. This paper explores popula-

tion-based incremental learning (PBIL), a method of combining the mechanisms of a genera-

tional genetic algorithm with simple competitive learning. The combination of these two

methods reveals a tool which is far simpler than a GA, and which out-performs a GA on large

set of optimization problems in terms of both speed and accuracy. This paper presents an

empirical analysis of where the proposed technique will outperform genetic algorithms, and

describes a class of problems in which a genetic algorithm may be able to perform better.

Extensions to this algorithm are discussed and analyzed. PBIL and extensions are compared

with a standard GA on twelve problems, including standard numerical optimization func-

tions, traditional GA test suite problems, and NP-Complete problems.

Shumeet Baluja is supported by a National Science Foundation Graduate Fellowship. The views

and conclusions contained in this document are those of the author and should not be inter-

preted as representing the official policies, either expressed or implied, of the National Science

Foundation or the U.S. government.

Population Based Incremental Learning

�

Population Based Incremental Learning

�

3/41

baluja@cs.cmu.edu

School of Computer Science

Carnegie Mellon University

Genetic algorithms (GAs) are biologically motivated adaptive systems which have been used,

with varying degrees of success, for function optimization. In this study, an abstraction of the

basic genetic algorithm, the Equilibrium Genetic Algorithm (EGA), and the GA in turn, are

reconsidered within the framework of competitive learning. This new perspective reveals a

number of different possibilities for performance improvements. This paper explores popula-

tion-based incremental learning (PBIL), a method of combining the mechanisms of a genera-

tional genetic algorithm with simple competitive learning. The combination of these two

methods reveals a tool which is far simpler than a GA, and which out-performs a GA on large

set of optimization problems in terms of both speed and accuracy. This paper presents an

empirical analysis of where the proposed technique will outperform genetic algorithms, and

describes a class of problems in which a genetic algorithm may be able to perform better.

Extensions to this algorithm are discussed and analyzed. PBIL and extensions are compared

with a standard GA on twelve problems, including standard numerical optimization func-

tions, traditional GA test suite problems, and NP-Complete problems.

Keywords:

Genetic Algorithms, Competitive Learning, Equilibrium Genetic Algorithm, Learning Vector

Quantization.

Genetic algorithms and artificial neural networks are two recently developed techniques that have

received a large amount of attention in the artificial intelligence research communities. Many of the current

efforts in genetic algorithm research have stressed their role as general purpose function optimizers, which

have been applied to functions in the fields of biological modelling, NP-complete problem approximation

heuristics and standard numerical optimization. A large part of the research effort in the field of neural

networks and competitive learning has been devoted to clustering and classification tasks, with a wide

variety of applications, such as feature mapping, speech recognition [Waibel, 1989], scene analysis and

robot control [Pomerleau, 1989] and data compression [Hertz, Krogh, Palmer, 1993]. There are also many

continuing efforts to perform classification and concept learning tasks using genetic algorithms [De Jong et

al., 1993][Janikow, 1993][Booker et al., 1990][Goldberg,1989]. Similarly, optimization using artificial neural

networks is a widely researched area. Perhaps the most widely known attempt is that of the Hopfield and

Tank Traveling Salesman model [Hopfield & Tank, 1985][Hertz,Krogh, Palmer, 1993].

Population Based Incremental Learning

�

4/41

An abstraction of the basic GA, termed the “Equilibrium Genetic Algorithm” (EGA), has been developed

in conjunction with [Juels, 1993,1994]. The EGA attempts to describe the limit population of a genetic algo-

rithm by an equilibrium point, under the assumption that the population is always “mated to conver-

gence.” This process can be viewed as a form of eliminating the explicit crossover step in standard genetic

algorithm search. In the work with Juels, the representation of the population by using an equilibrium

point was explored. The work presented in this paper concentrates on the operators needed to make this

new representation effective for search; the operators are presented in the context of supervised competi-

tive learning. Issues regarding the use of the resulting algorithm, such as the settings of the parameters, the

contribution of a mutation operator, as well as the algorithm’s relation to more traditional genetic search,

are examined. It is determined that the framework of competitive learning provides a basis from which to

explain the performance of the EGA. The new framework also provides the mechanisms for many exten-

sions to the basic algorithm which improve the performance of the EGA. 1 Several of the extensions are

explored in this paper, and many more are presented as directions for future research.

This paper presents population-based incremental learning (PBIL), a method of combining genetic algo-

rithms and competitive learning for function optimization. PBIL is an extension to the EGA algorithm

achieved through the re-examination of the performance of the EGA in terms of competitive learning. This

paper also presents a detailed empirical examination of the EGA and PBIL algorithms on a suite of prob-

lems designed to test their abilities in a wide variety of function optimization domains. Although this

work has ties with other areas of research involving genetic algorithms, to limit the scope of this paper,

PBIL is explored exclusively in the context of function optimization. Other recent work in combining GAs

with techniques related to artificial neural networks has been lar gely directed in alternative directions,

such as using GAs for optimizing neural network structure and performance [Whitley & Schaffer,

1992][Gruau, 1993][Polani & Uthamann, 1993][Whitley & Hanson, 1989]. One of the closest related works,

which also employed an underlying artificial neural network str ucture to augment genetic search, can be

found in Ackley’s , [Ackley, 1987][Ackley, 1985].

The remainder of this section describes the principles of genetic based optimization and competitive learn-

ing. Section 2 describes the combination of both of the techniques by examining the role of the population

in a genetic algorithm. Section 3 examines the effects of changing some of the parameters in the PBIL algo-

rithm. Section 4 presents extensions of the algorithm, based on Kohonen’s learning vector quantization

algorithm [Kohonen, 1988,1989]. In section 5, the basic PBIL and its extensions are empirically examined

on a suite of twelve test problems. Section 6 discusses the applicability of the results reported in section 5

and concludes with a discussion of the benefits of using PBIL in comparison to standar d genetic algo-

rithms for function optimization. Finally, section 7 closes this report with suggestions for future research.

Genetic algorithms (GAs) are biologically motivated adaptive systems which are based upon the princi-

ples of natural selection and genetic recombination. Although GAs have often been used for function opti-

mization, some of the pioneering work [Holland, 1975] suggests an alternative view of GA behavior. De

Jong summarizes this view in the following paragraph:

“Holland [Holland, 1975] has suggested that a better way to view GAs is in terms of optimiz-

ing a sequential decision process involving uncertainty in the form of lack of a priori knowl-

edge, noisy feedback and a time-varying payoff function. More specifically , given a limited

number of trials, how should one allocate them so as to maximize the cumulative payoff in the

face of all this uncertainty?” [De Jong, 92].

This has important implications to the use of genetic algorithms as function optimizers. Although genetic

algorithms may have the ability to quickly find r egions of high performance in the face of noise and time-

1. To avoid confusion, the term “EGA” will be used only when referencing [Juels, 1993] explanation of the algorithm.

Population Based Incremental Learning

�

5/41

varying payoff functions, they may be unable to find the absolute optimal solution, in time-varying or sta-

tionary environments.

The GAs described in the current literature are highly modified to r econcile the differences between func-

tion optimization, in which the effectiveness is measured by the best solution found, and maximizing

cumulative returns, in which finding the absolute best solution may not be the only critical issue. The basic

genetic algorithm and some of the common modifications which have been made for ef fective function

optimization are described below.

A GA combines the principles of survival of the fittest with a randomized information exchange. Although

the information exchange is randomized, the GA is far different than a simple random walk. A GA has the

ability to recognize trends toward optimal solutions, and exploit such information by guiding the search

toward them.

A genetic algorithm maintains a population of potential solutions to the objective function being opti-

mized. The initial group of potential solutions is determined randomly. These potential solutions, called

“chr omosomes,” ar e allowed to evolve over a number of generations. At every generation, the fitness of

each chromosome is calculated. The fitness is a measur e of how well the potential solution optimizes the

objective function. The subsequent generation is created through a process of selection and recombination.

The chromosomes are probabilistically chosen for recombination based upon their fitness; this is a measur e

of how well the chromosomes achieve the desired goal (e.g. find the minimum in a specified function, etc.).

The recombination operator combines the information contained within pairs of selected “par ents”, and

places a mixture of the information from both parents into a member of the subsequent generation. Selec-

tion and recombination are the mechanisms through which the population “evolves.” Although the chr o-

mosomes with high fitness values will have a higher pr obability of being selected for recombination than

those which do not, they are not guaranteed to appear in the next generation. The “childr en” chr omo-

somes produced by the genetic recombination are not necessarily better than their “par ent” chr omosomes.

Nevertheless, because of the selective pressure applied through a number of generations, the overall trend

is towards better chromosomes.

In order to perform extensive search, genetic diversity must be maintained. When diversity is lost, it is

possible for the GA to settle into a sub-optimal state. There are two fundamental mechanisms which the

basic GA uses to maintain diversity. The first, mentioned above, is a pr obabilistic scheme for selecting

which chromosomes to recombine. This ensures that information other than that represented in the best

chromosomes appears in the subsequent generation. Recombining only good chromosomes will very

quickly converge the population without extensive exploration, thereby increasing the possibility of find-

ing only a local optimum. The second mechanism is mutation; mutations are used to help preserve diver-

sity and to escape from local optima. Mutations introduce random changes into the population.

The genetic algorithm is typically allowed to continue for a fixed number of generations. At the conclusion

of the specified number of generations, the best chr omosome in the final population, or the best chr omo-

some ever found, is returned. Unlike the majority of other search heuristics, genetic algorithms do not

work from a single point in the function space. GAs continually maintain a population of points from

which the function space is explored.

In using GAs for function optimization, many issues, such as proper scaling of functions, ensuring that

good information is not lost due to random chance, and efficient pr oblem representation, need to be

resolved. Although GAs can often find r egions of high performance, it is much harder for the GA to move

to the optimal solution. One potential reason for this inability is that the differential between good and

optimal solutions may be very small in comparison with the differential between good and bad solutions.

In designing an effective genetic based function optimizer, it is necessary to be able to provide a large

enough “incentive” for the GA to make pr ogress given only small differentials. One method of maintain-

ing a large differential between potential solutions is to employ a method of dynamic scaling, so that the

fitness of each solution is measur ed relative to the fitness of the other solutions in the curr ent population.

Population Based Incremental Learning

�

6/41

As a genetic algorithm is randomized, it is possible to lose the best solution due to random chance. There is

no guarantee that the best solution in the current population will be selected for recombination, or that if it

is selected, that the mutation and crossover operators will not destroy some of its information as it is

passed to its successors. If the best solution is lost from the population, there is no guarantee that the solu-

tion will be found again. Methods such as elitist strategies have been proposed to address this problem.

Elitist strategies ensure that the best solution in the previous population is transferred to the current gener-

ation unaltered. A common implementation of this replaces the worst chromosome in the current genera-

tion with the best from the previous generation.

Beyond the mechanisms inherent to the GA which influence its ability to optimize functions, there is also

the issue of problem representation. Although the majority of GA research has been conducted using a

binary solution representation, this is not the only method of encoding problem solutions. Different meth-

ods which alter the cardinality of the alphabet used for encoding, or the interpretation of the encoding, can

have an enormous impact on the performance of the GA [Liepins & Vose, 1990]. A good overview of the

issues and difficulties involved in using GAs for function optimization can be found in [De Jong, 1992].

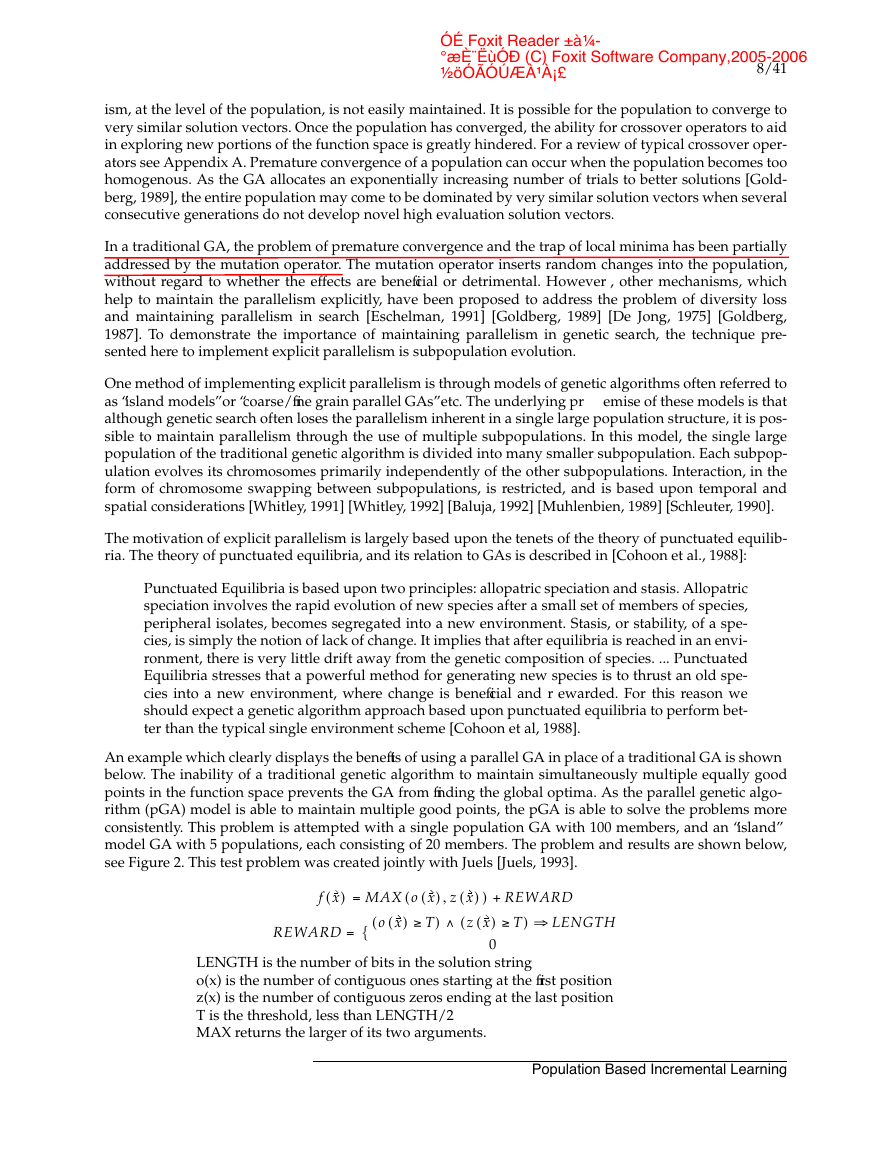

Competitive learning (CL) is often used to cluster a number of unlabeled points into distinct groups. Mem-

bership into each group is based upon the similarity of points with respect to the characteristics in study.

The procedure is unsupervised, as there is no knowledge of how many groups exist, or what each

group’s distinguishing features may be. The hope is that the CL procedure will be able to determine the

most relevant features for class formation and then be able to cluster points into distinct groups based on

these features.

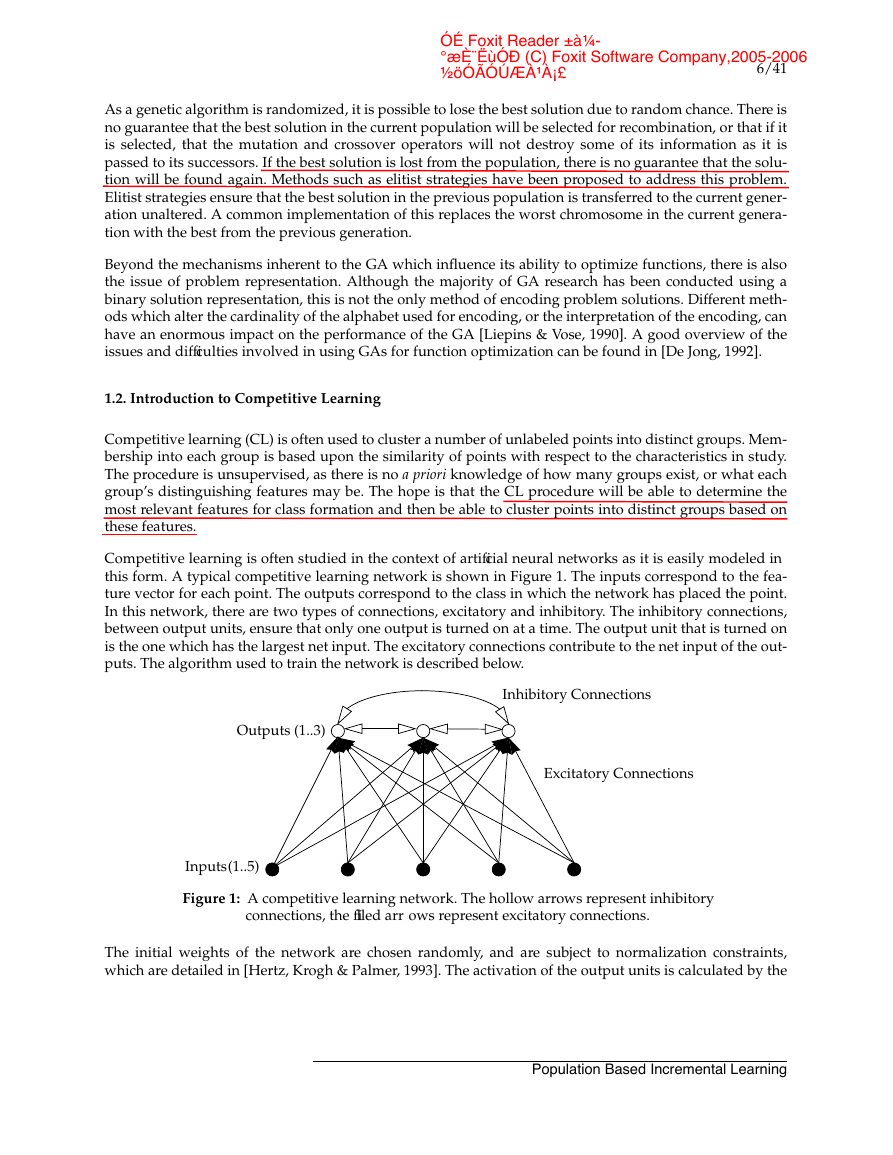

Competitive learning is often studied in the context of artificial neural networks as it is easily modeled in

this form. A typical competitive learning network is shown in Figure 1. The inputs correspond to the fea-

ture vector for each point. The outputs correspond to the class in which the network has placed the point.

In this network, there are two types of connections, excitatory and inhibitory. The inhibitory connections,

between output units, ensure that only one output is turned on at a time. The output unit that is turned on

is the one which has the largest net input. The excitatory connections contribute to the net input of the out-

puts. The algorithm used to train the network is described below.

Outputs (1..3)

Inhibitory Connections

Excitatory Connections

Inputs

(1..5)

A competitive learning network. The hollow arrows represent inhibitory

connections, the filled arr ows represent excitatory connections.

The initial weights of the network are chosen randomly, and are subject to normalization constraints,

which are detailed in [Hertz, Krogh & Palmer, 1993]. The activation of the output units is calculated by the

Population Based Incremental Learning

�

7/41

following formula (in which is the weight of the connection between and ):

=

In a competitive learning network, only the output unit with the largest activation is allowed to fir e for

each point presented. The winning output unit corresponds to the classification of the input point. During

training, the weights of the winning output unit are moved closer to the presented point by adjusting the

weights according to the following rule (LR is the learning rate parameter):

D

=

(

)

The process of training the CL network involves repeatedly presenting each point to the network until the

network has stabilized. Although, in general, the network cannot be guaranteed to stabilize if weight

updates are made after each point is presented to the network, other heuristics may be used to determine

when to stop training. One possible method of ensuring stability is to reduce the learning rate gradually to

0, as the total number of pattern presentation increases [Hertz, Krogh, Palmer, 1993].

After the network training is complete, the weight vectors for each of the output units can be considered

prototype vectors for one of the discovered classes. The attributes with the large weights are the defining

characteristics of the class represented by the output. It is the notion of creating a prototype vector which

will be central to the discussions of PBIL. The unsupervised nature of the algorithm will not be main-

tained, as the members of the class of interest will be easily determinable; this will be returned to in much

greater detail in the next section. Although the method of training described in this section has been unsu-

pervised, supervised competitive learning has been explored in artificial neural network literatur e, and is

central to the discussion in Section 2 [Kohonen et. al, 1988] [Kohonen, 1989]. The examination of PBIL

through the perspective of supervised competitive learning yields insights which can lead to a much more

efficient algorithm than the base-line PBIL described in the next section. Impr oved versions of PBIL are

described in section 4, and are empirically examined in section 5.

One of the fundamental attributes of the genetic algorithm is its ability to search the function space from

multiple points in parallel. In this context, parallelism does not refer to the ability to parallelize the imple-

mentation of genetic algorithms; rather, it refers to the ability to represent a very large number of potential

solutions in the population of a single generation. This section describes the implicit parallelism of GAs,

and explicit methods of ensuring parallelism. Because of the failure of the implicit parallelism to exist in

the latter parts of genetic search in the simple genetic algorithm, the utility of maintaining multiple points,

through the use of a population, decreases. The limited effectiveness of the population in the latter por-

tions of search allows it to be modeled by a probability vector, specifying the probability of each position

containing a particular value. This concept is central to the PBIL algorithm, and is the focus of this section.

In every generation during the run of a GA, a population of potential solutions exists. The search of the

function space progresses from these points, and the schemata represented in these points, in parallel. This

is in contrast to other search techniques, such as simulated annealing [Kirkpatrick et al., 1983], or hill-

climbing, in which a single point in the function space is used as the basis for search. The ability to search

multiple schemata in each solution vector has been termed implicit parallelism. However, useful parallel-

Population Based Incremental Learning

·

-

·

�

8/41

ism, at the level of the population, is not easily maintained. It is possible for the population to converge to

very similar solution vectors. Once the population has converged, the ability for crossover operators to aid

in exploring new portions of the function space is greatly hindered. For a review of typical crossover oper-

ators see Appendix A. Premature convergence of a population can occur when the population becomes too

homogenous. As the GA allocates an exponentially increasing number of trials to better solutions [Gold-

berg, 1989], the entire population may come to be dominated by very similar solution vectors when several

consecutive generations do not develop novel high evaluation solution vectors.

In a traditional GA, the problem of premature convergence and the trap of local minima has been partially

addressed by the mutation operator. The mutation operator inserts random changes into the population,

without regard to whether the effects are beneficial or detrimental. However , other mechanisms, which

help to maintain the parallelism explicitly, have been proposed to address the problem of diversity loss

and maintaining parallelism in search [Eschelman, 1991] [Goldberg, 1989] [De Jong, 1975] [Goldberg,

1987]. To demonstrate the importance of maintaining parallelism in genetic search, the technique pre-

sented here to implement explicit parallelism is subpopulation evolution.

One method of implementing explicit parallelism is through models of genetic algorithms often referred to

as “island models” or “coarse/fine grain parallel GAs” etc. The underlying pr

emise of these models is that

although genetic search often loses the parallelism inherent in a single large population structure, it is pos-

sible to maintain parallelism through the use of multiple subpopulations. In this model, the single large

population of the traditional genetic algorithm is divided into many smaller subpopulation. Each subpop-

ulation evolves its chromosomes primarily independently of the other subpopulations. Interaction, in the

form of chromosome swapping between subpopulations, is restricted, and is based upon temporal and

spatial considerations [Whitley, 1991] [Whitley, 1992] [Baluja, 1992] [Muhlenbien, 1989] [Schleuter, 1990].

The motivation of explicit parallelism is largely based upon the tenets of the theory of punctuated equilib-

ria. The theory of punctuated equilibria, and its relation to GAs is described in [Cohoon et al., 1988]:

Punctuated Equilibria is based upon two principles: allopatric speciation and stasis. Allopatric

speciation involves the rapid evolution of new species after a small set of members of species,

peripheral isolates, becomes segregated into a new environment. Stasis, or stability, of a spe-

cies, is simply the notion of lack of change. It implies that after equilibria is reached in an envi-

ronment, there is very little drift away from the genetic composition of species. ... Punctuated

Equilibria stresses that a powerful method for generating new species is to thrust an old spe-

cies into a new environment, where change is beneficial and r ewarded. For this reason we

should expect a genetic algorithm approach based upon punctuated equilibria to perform bet-

ter than the typical single environment scheme [Cohoon et al, 1988].

An example which clearly displays the benefits of using a parallel GA in place of a traditional GA is shown

below. The inability of a traditional genetic algorithm to maintain simultaneously multiple equally good

points in the function space prevents the GA from finding the global optima. As the parallel genetic algo-

rithm (pGA) model is able to maintain multiple good points, the pGA is able to solve the problems more

consistently. This problem is attempted with a single population GA with 100 members, and an “island”

model GA with 5 populations, each consisting of 20 members. The problem and results are shown below,

see Figure 2. This test problem was created jointly with Juels [Juels, 1993].

( ) ( ) ( )

=

(

,

+

)

{=

(

( ) ‡

)

(

( ) ‡

)

0

LENGTH is the number of bits in the solution string

o(x) is the number of contiguous ones starting at the first position

z(x) is the number of contiguous zeros ending at the last position

T is the threshold, less than LENGTH/2

MAX returns the larger of its two arguments.

Population Based Incremental Learning

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc