Introduction to Boosted Trees

Tianqi Chen

Oct. 22 2014


Outline

• Review of key concepts of supervised learning

• Regression Tree and Ensemble (What are we Learning)

• Gradient Boosting (How do we Learn)

• Summary


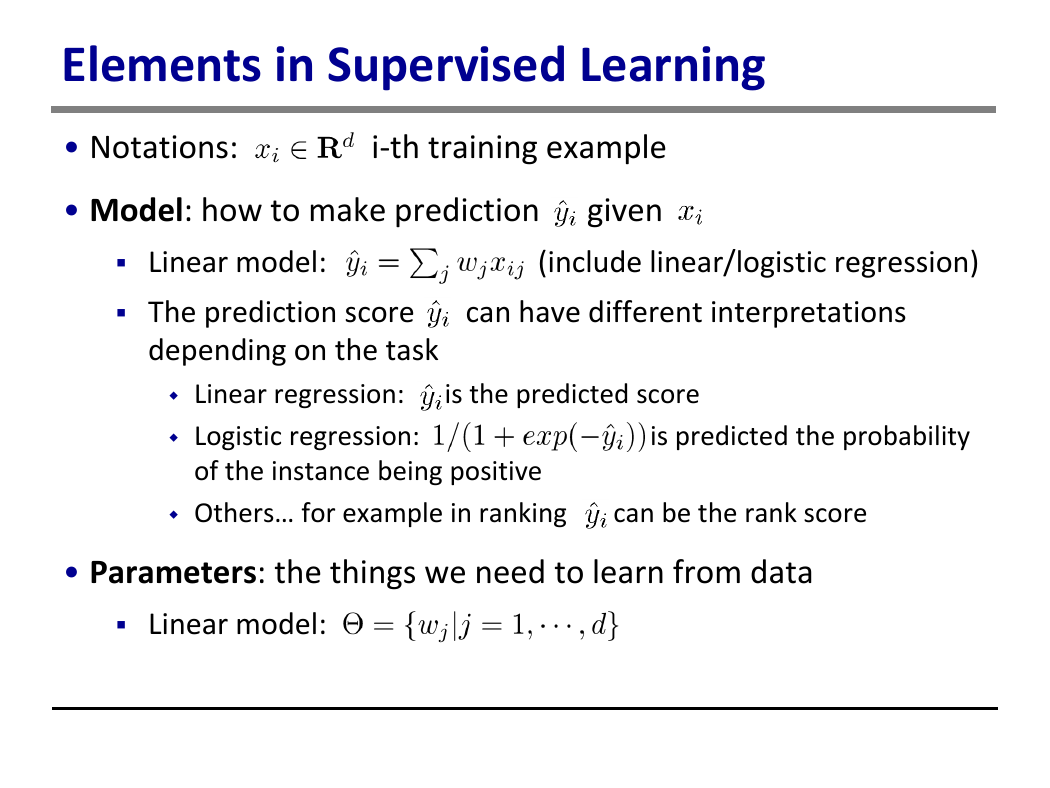

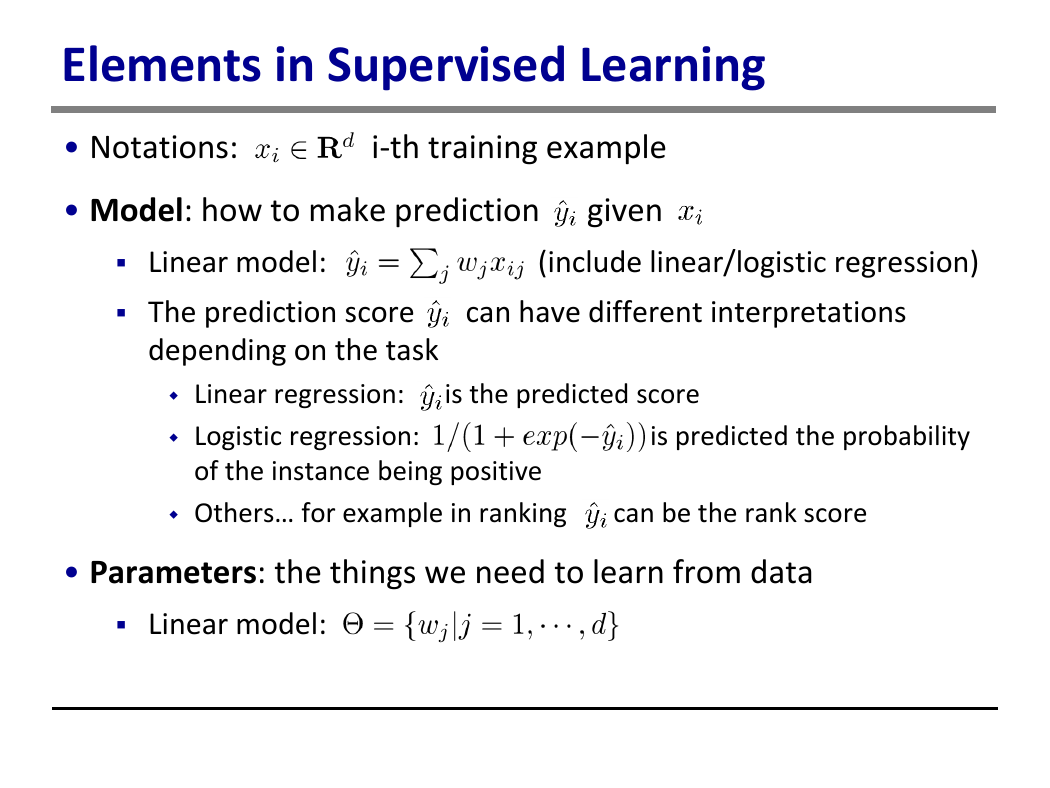

Elements in Supervised Learning

• Notations: $x_i \in \mathbb{R}^d$ is the i-th training example

• Model: how to make a prediction $\hat{y}_i$ given $x_i$

Linear model: $\hat{y}_i = \sum_j w_j x_{ij}$ (includes linear and logistic regression)

The prediction score $\hat{y}_i$ can have different interpretations depending on the task

Linear regression: $\hat{y}_i$ is the predicted score

Logistic regression: $1/(1+\exp(-\hat{y}_i))$ is the predicted probability of the instance being positive

Others… for example, in ranking $\hat{y}_i$ can be the rank score

• Parameters: the things we need to learn from data

Linear model: $\Theta = \{w_j \mid j = 1, \dots, d\}$
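As a minimal sketch in plain Python (no libraries assumed), the linear model score $\hat{y}_i = \sum_j w_j x_{ij}$ and its logistic-regression reading as a probability can be computed as below. The function names and the weight and feature values are hypothetical, chosen only for illustration.

```python
import math


def predict_linear(w, x):
    """Linear model score: y_hat = sum_j w_j * x_j."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


def sigmoid(score):
    """Map a raw score to a probability, as in logistic regression."""
    return 1.0 / (1.0 + math.exp(-score))


w = [0.5, -1.0, 2.0]  # hypothetical parameters Theta = {w_j}
x = [1.0, 2.0, 0.5]   # one hypothetical training example x_i
score = predict_linear(w, x)  # 0.5 - 2.0 + 1.0
prob = sigmoid(score)         # read as P(instance is positive)
```

The same `score` serves linear regression directly; only the interpretation (the sigmoid) differs for logistic regression.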


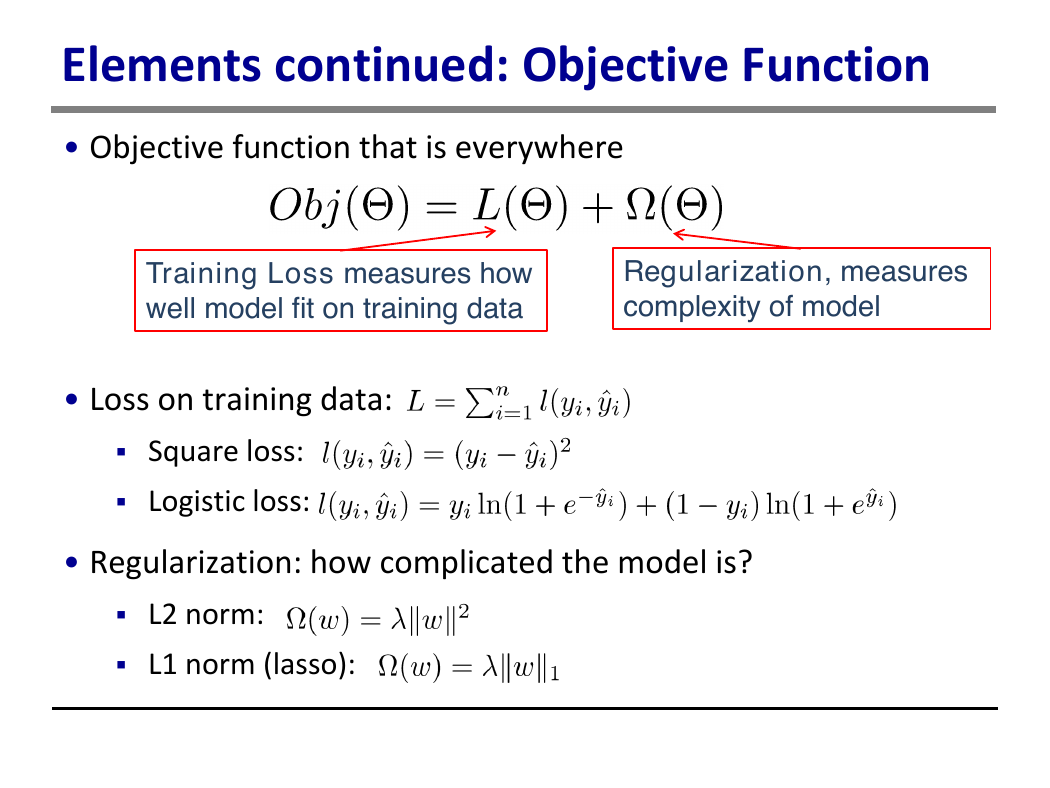

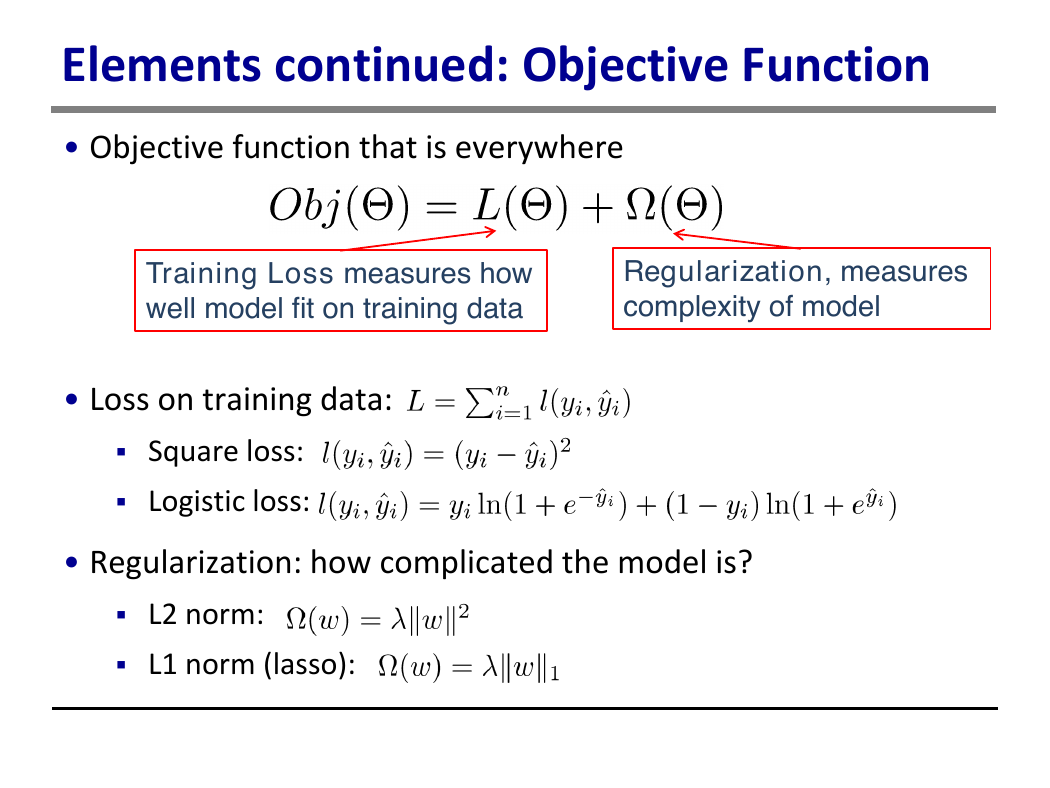

Elements continued: Objective Function

• Objective function that is everywhere: $Obj(\Theta) = L(\Theta) + \Omega(\Theta)$

Training loss $L(\Theta)$ measures how well the model fits the training data

Regularization $\Omega(\Theta)$ measures the complexity of the model

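• Loss on training data: $L = \sum_{i=1}^{n} l(y_i, \hat{y}_i)$

Square loss: $l(y_i, \hat{y}_i) = (y_i - \hat{y}_i)^2$

Logistic loss: $l(y_i, \hat{y}_i) = y_i \ln(1 + e^{-\hat{y}_i}) + (1 - y_i) \ln(1 + e^{\hat{y}_i})$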

• Regularization: how complicated is the model?

L2 norm: $\Omega(w) = \lambda \|w\|^2$

L1 norm (lasso): $\Omega(w) = \lambda \|w\|_1$
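The objective can be sketched the same way: sum a per-example loss over the training set and add a penalty on the weights. This is an illustrative Python sketch, not a library API; the function names are hypothetical, labels are assumed to be $y_i \in \{0, 1\}$ for the logistic loss, and the logistic loss is not hardened against overflow for very large scores.

```python
import math


def square_loss(y, y_hat):
    return (y - y_hat) ** 2


def logistic_loss(y, y_hat):
    # Assumes y in {0, 1}; y_hat is the raw score before the sigmoid.
    return y * math.log1p(math.exp(-y_hat)) + (1 - y) * math.log1p(math.exp(y_hat))


def l2_penalty(w, lam):
    return lam * sum(w_j ** 2 for w_j in w)


def l1_penalty(w, lam):
    return lam * sum(abs(w_j) for w_j in w)


def objective(ys, y_hats, w, loss, penalty, lam):
    """Obj = sum_i loss(y_i, y_hat_i) + penalty(w): training loss plus regularization."""
    training_loss = sum(loss(y, y_hat) for y, y_hat in zip(ys, y_hats))
    return training_loss + penalty(w, lam)


# Hypothetical values: two examples and a two-dimensional weight vector.
ys = [1.0, 3.0]
y_hats = [1.5, 2.0]
w = [1.0, -2.0]
ridge_obj = objective(ys, y_hats, w, square_loss, l2_penalty, lam=0.1)
lasso_obj = objective(ys, y_hats, w, square_loss, l1_penalty, lam=0.1)
```

Keeping loss and penalty as separate arguments mirrors the split between training loss and regularization: swapping one does not touch the other.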


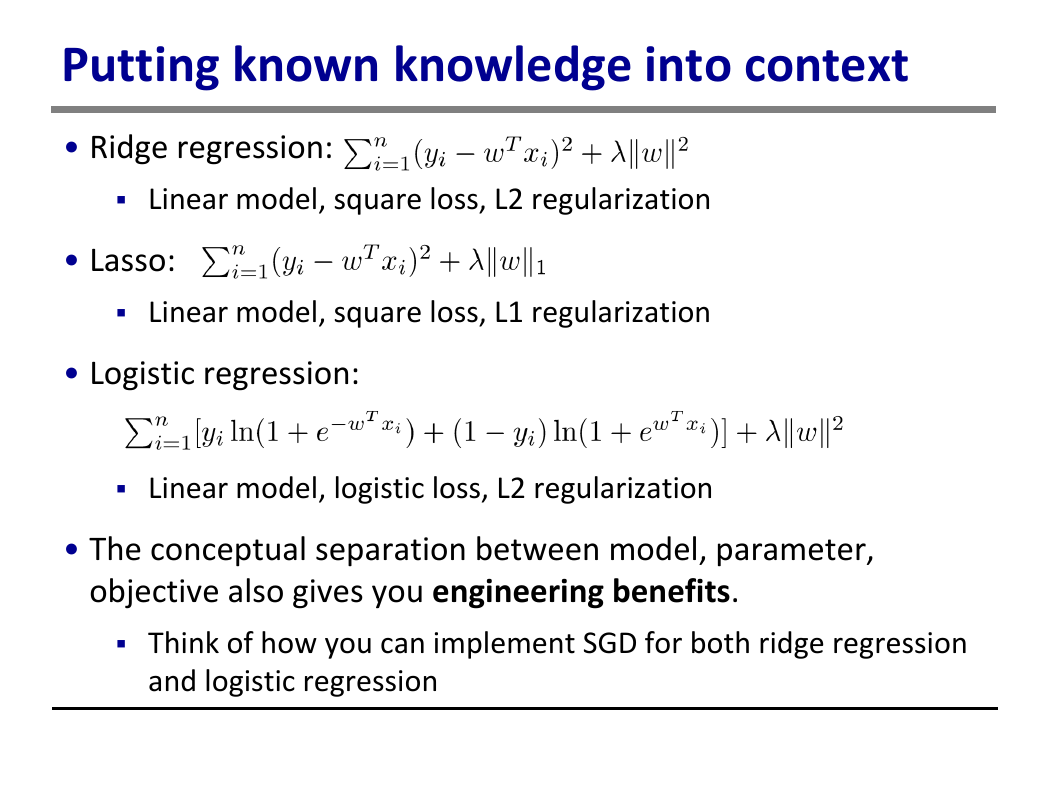

Putting known knowledge into context

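• Ridge regression: $\sum_{i=1}^{n} (y_i - w^T x_i)^2 + \lambda \|w\|^2$

Linear model, square loss, L2 regularization

• Lasso: $\sum_{i=1}^{n} (y_i - w^T x_i)^2 + \lambda \|w\|_1$

Linear model, square loss, L1 regularization

• Logistic regression: $\sum_{i=1}^{n} \left[ y_i \ln(1 + e^{-w^T x_i}) + (1 - y_i) \ln(1 + e^{w^T x_i}) \right] + \lambda \|w\|^2$

Linear model, logistic loss, L2 regularization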

• The conceptual separation between model, parameters, and objective also gives you engineering benefits.

Think of how you can implement SGD for both ridge regression and logistic regression
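One way to see the engineering benefit: in the sketch below, a single SGD loop serves both ridge regression and logistic regression, and only the derivative of the loss with respect to the score $\hat{y}_i$ changes. This is a hypothetical minimal implementation (plain Python, constant learning rate, the L2 term $\lambda \|w\|^2$ spread evenly over the $n$ examples), not a reference implementation; `sgd`, `square_grad`, and `logistic_grad` are illustrative names.

```python
import math
import random


def sgd(data, grad_loss, lam, lr=0.1, epochs=200, seed=0):
    """SGD on sum_i l(y_i, w.x_i) + lam * ||w||^2 for a linear model.

    data: list of (x, y) pairs, x a list of floats.
    grad_loss(y, y_hat): derivative of the loss w.r.t. the score y_hat.
    """
    rng = random.Random(seed)
    n, d = len(data), len(data[0][0])
    w = [0.0] * d
    for _ in range(epochs):
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            y_hat = sum(w_j * x_j for w_j, x_j in zip(w, x))
            g = grad_loss(y, y_hat)
            # Chain rule: d loss / d w_j = g * x_j; each example carries
            # 1/n of the L2 term, whose gradient is 2 * lam * w_j.
            w = [w_j - lr * (g * x_j + 2.0 * lam * w_j / n) for w_j, x_j in zip(w, x)]
    return w


def square_grad(y, y_hat):
    # d/d y_hat of (y - y_hat)^2
    return 2.0 * (y_hat - y)


def logistic_grad(y, y_hat):
    # d/d y_hat of y ln(1 + e^{-y_hat}) + (1 - y) ln(1 + e^{y_hat})
    # simplifies to sigmoid(y_hat) - y.
    return 1.0 / (1.0 + math.exp(-y_hat)) - y
```

Ridge regression is `sgd(data, square_grad, lam)` and logistic regression is `sgd(data, logistic_grad, lam)`; the loop, the parameters, and the regularization step are shared.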


Objective and Bias-Variance Trade-off

$Obj(\Theta) = L(\Theta) + \Omega(\Theta)$

Training loss $L(\Theta)$ measures how well the model fits the training data

Regularization $\Omega(\Theta)$ measures the complexity of the model

• Why do we want two components in the objective?

• Optimizing training loss encourages predictive models

Fitting the training data well at least gets you close to the training data, which is hopefully close to the underlying distribution

• Optimizing regularization encourages simple models

Simpler models tend to have smaller variance in future predictions, making predictions stable




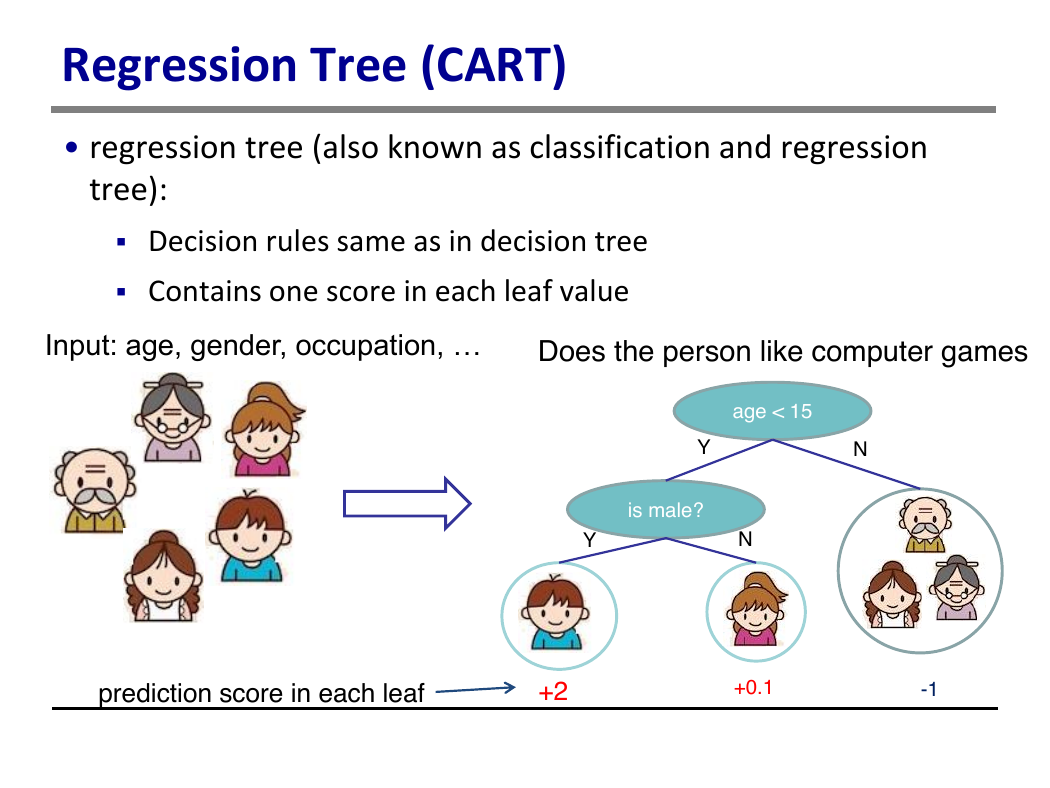

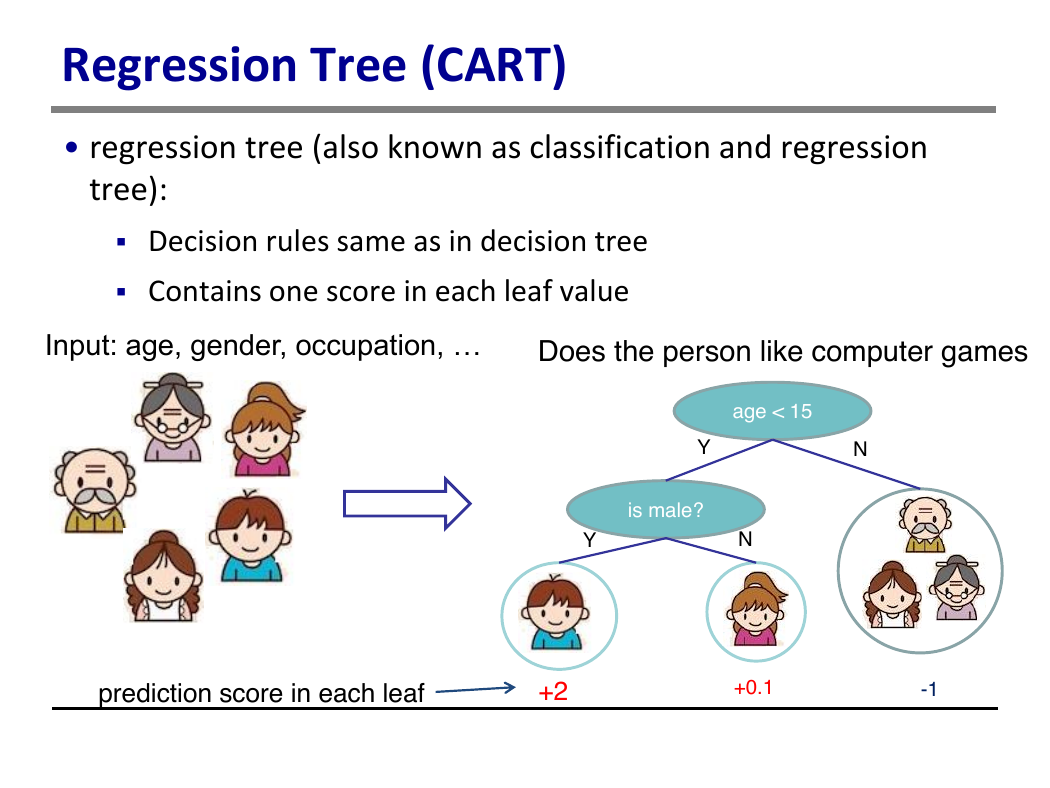

Regression Tree (CART)

• Regression tree (also known as classification and regression tree):

Decision rules are the same as in a decision tree

Each leaf contains one score (value)

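Figure: example tree for "Does the person like computer games?" (input: age, gender, occupation, …). The root tests "age < 15"; its Y branch tests "is male?" with leaf scores +2 (Y) and +0.1 (N), and its N branch is a leaf with score -1. Each leaf holds a prediction score.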
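The example tree can be written as a small data structure in which internal nodes hold a decision rule and leaves hold a score; prediction follows the rules from the root down to a leaf and returns that leaf's score. This Python sketch is illustrative only: the `Node` class, `predict`, and the feature keys `age` and `is_male` are hypothetical names chosen to mirror the slide's example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Node:
    # Internal node: `test` is a decision rule with `yes`/`no` children.
    # Leaf: `score` holds the prediction score.
    test: Optional[Callable[[dict], bool]] = None
    yes: Optional["Node"] = None
    no: Optional["Node"] = None
    score: Optional[float] = None


def predict(node, x):
    """Follow the decision rules until a leaf, then return its score."""
    while node.score is None:
        node = node.yes if node.test(x) else node.no
    return node.score


# The tree from the example: age < 15, then is male?
tree = Node(
    test=lambda x: x["age"] < 15,
    yes=Node(test=lambda x: x["is_male"], yes=Node(score=2.0), no=Node(score=0.1)),
    no=Node(score=-1.0),
)
```

Unlike a classification decision tree, each leaf returns a real-valued score rather than a class label.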
