Creating Capsule Wardrobes from Fashion Images

Wei-Lin Hsiao

UT-Austin

Kristen Grauman

UT-Austin

kimhsiao@cs.utexas.edu

grauman@cs.utexas.edu

Abstract

We propose to automatically create capsule wardrobes. Given an inventory of candidate garments and accessories, the algorithm must assemble a minimal set of items that provides maximal mix-and-match outfits. We pose the task as a subset selection problem. To permit efficient subset selection over the space of all outfit combinations, we develop submodular objective functions capturing the key ingredients of visual compatibility, versatility, and user-specific preference. Since adding garments to a capsule only expands its possible outfits, we devise an iterative approach to allow near-optimal submodular function maximization. Finally, we present an unsupervised approach to learn visual compatibility from “in the wild” full-body outfit photos; the compatibility metric translates well to cleaner catalog photos and improves over existing methods. Our results on thousands of pieces from popular fashion websites show that automatic capsule creation has the potential to mimic skilled fashionistas in assembling flexible wardrobes, while being significantly more scalable.

1. Introduction

The fashion domain is a magnet for computer vision. New vision problems are emerging in step with the fashion industry’s rapid evolution towards an online, social, and personalized business. Style models [22, 38, 34, 17, 27], trend forecasting [1], interactive search [44, 24], and recommendation [41, 18, 31] all require visual understanding with rich detail and subtlety. Research in this area is poised to have great influence on what people buy, how they shop, and how the fashion industry analyzes its enterprise.

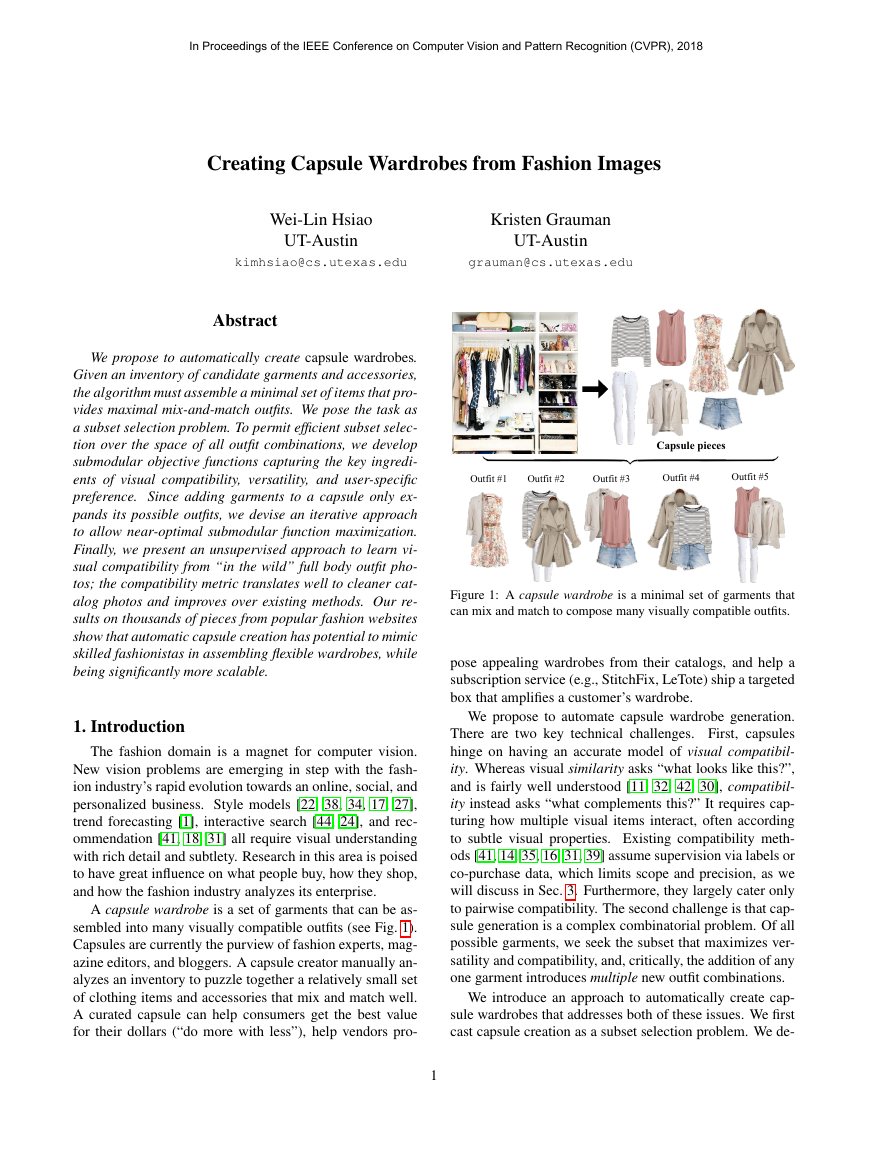

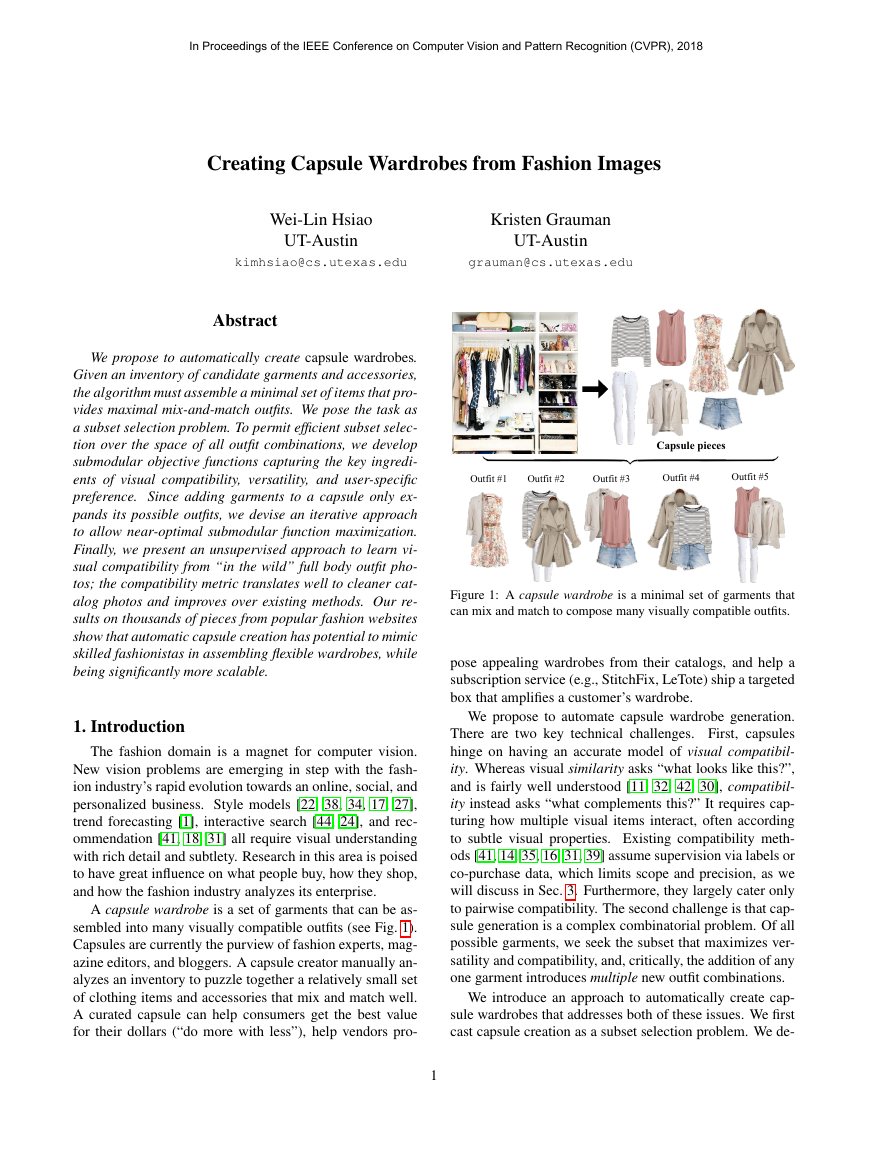

Figure 1: A capsule wardrobe is a minimal set of garments that can mix and match to compose many visually compatible outfits.

A capsule wardrobe is a set of garments that can be assembled into many visually compatible outfits (see Fig. 1). Capsules are currently the purview of fashion experts, magazine editors, and bloggers. A capsule creator manually analyzes an inventory to puzzle together a relatively small set of clothing items and accessories that mix and match well. A curated capsule can help consumers get the best value for their dollars (“do more with less”), help vendors propose appealing wardrobes from their catalogs, and help a subscription service (e.g., StitchFix, LeTote) ship a targeted box that amplifies a customer’s wardrobe.

We propose to automate capsule wardrobe generation.

There are two key technical challenges. First, capsules hinge on having an accurate model of visual compatibility. Whereas visual similarity asks “what looks like this?”, and is fairly well understood [11, 32, 42, 30], compatibility instead asks “what complements this?” It requires capturing how multiple visual items interact, often according to subtle visual properties. Existing compatibility methods [41, 14, 35, 16, 31, 39] assume supervision via labels or co-purchase data, which limits scope and precision, as we will discuss in Sec. 3. Furthermore, they largely cater only to pairwise compatibility. The second challenge is that capsule generation is a complex combinatorial problem. Of all possible garments, we seek the subset that maximizes versatility and compatibility, and, critically, the addition of any one garment introduces multiple new outfit combinations.

We introduce an approach to automatically create capsule wardrobes that addresses both of these issues. We first cast capsule creation as a subset selection problem. We define an objective function characterizing a capsule based on its pieces’ mutual compatibility, the resulting outfits’ versatility, and (optionally) its faithfulness to a user’s preferred style. Next, we develop an efficient algorithm that maps a large inventory of candidate garments into the best capsule of the desired size. We design objectives that are submodular for the addition of new outfits, ensuring the “diminishing returns” property that facilitates near-optimal set selection [36, 28]. Then, since each garment added to a capsule expands the possible outfits, we further develop an iterative approach that exploits outfit submodularity to alternate between fixing and selecting each layer of clothing.

In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

As a second main contribution, we introduce an unsupervised approach to learn visual compatibility from full-body images “in the wild”. We learn a generative model for outfit compositions from unlabeled images that can score k-way compatibility. Because it is built on predicted attributes, our model can translate compatibility learned from the “in the wild” photos to cleaner catalog photos of individual items, where users need the most guidance on mixing and matching.

We evaluate our approach on thousands of garments from Polyvore, a popular social commerce website for fashion. We compare our algorithm’s capsule creations to those manually defined by fashionistas, and we also conduct subjective user studies. Furthermore, we show our underlying compatibility model offers advantages over state-of-the-art methods. Finally, we demonstrate the practical value of our algorithm, which in seconds finds near-optimal capsules for problem scales that are otherwise intractable.

2. Related Work

Attributes for fashion. Attributes offer a natural representation for clothing, since they can describe relevant patterns (checked, paisley), colors (rose, teal), fit (loose), and cut (V-neck, flowing) [4, 2, 8, 43, 6, 23, 33]. Topic models on attributes are indicative of styles [20, 40, 17]. Inspired by [17], we employ topic models. However, whereas [17] seeks a style-coherent image embedding, we use correlated topic models to score novel combinations of garments for their compatibility. Domain adaptation [6, 19] and multi-task curriculum learning [9] are valuable to overcome the gap between street and shop photos. We devise a simple curriculum learning approach to train attributes effectively in our setting. None of the above methods explore visual compatibility or capsule wardrobes.

Style and fashionability. Beyond recognition tasks, fashion also demands answering: How do we represent style? What makes an outfit fashionable? The style of an outfit is typically learned in a supervised manner. Leveraging style-labeled data like HipsterWars [22] or DeepFashion [33], classifiers built on body keypoints [22], weak meta-data [38], or contextual embeddings [27] show promise. Fashionability refers specifically to a style’s popularity. It can also be learned from supervised data, e.g., online data for user “likes” [29, 37]. Unsupervised style discovery methods instead mine unlabeled photos to detect common themes in people’s outfits, with topic models [17], non-negative matrix factorization [1], or clustering [34]. We also leverage unlabeled images to discover “what people wear”; however, our goal is to infer visual compatibility for unseen garments, rather than trend analysis [1, 34] or image retrieval [17] on a fixed corpus.

Compatibility and recommendation. Substantial prior work explores ways to link images containing the same or very similar garments [11, 32, 42, 21, 30]. In contrast, compatibility requires judging how well-coordinated or complementary a given set of garments is. Compatibility can be posed as a metric learning problem [41, 35, 16], addressable with Siamese embeddings [41] or link prediction [35]. Text data can aid compatibility [29, 39, 14]. As an alternative to metric learning, a recurrent neural network models outfit composition as a sequential process that adds one garment at a time, implicitly learning compatibility via the transition function [14]. Compatibility has applications in recommendation [31, 18], but prior work recommends one garment at a time, as opposed to constructing a wardrobe.

To our knowledge, all prior work requires labeled data to learn compatibility, whether from human annotators curating matches [14, 18], co-purchase data [41, 35, 16], or implicit crowd labels [29]. In contrast, we propose an unsupervised approach, which has the advantages of scalability, privacy, and continually refreshable models as fashion evolves, and also avoids awkwardly generating “negative” training pairs (see Sec. 3). Most importantly, our work is the first to develop an algorithm for generating capsule wardrobes. Capsules require going beyond pairwise compatibility to represent k-way interactions and versatility, and they present a challenging combinatorial problem.

Subset selection. We pose capsule wardrobe generation as a subset selection problem. Probabilistic determinantal point processes (DPP) can identify the subset of items that maximize individual item “quality” while also maximizing total “diversity” of the set [25], and have been applied for document and video summarization [25, 12]. Alternatively, submodular function maximization exploits “diminishing returns” to select an optimal subset subject to a budget [36]. For submodular objectives, an efficient greedy selection criterion is near optimal [36], e.g., as exploited for sensor placement [13] and outbreak detection [28]. We show how to adapt such solutions to permit accurate and efficient selection for capsule wardrobes; furthermore, we develop an iterative EM-like algorithm to enable non-submodular objectives for mix-and-match outfits.


3. Approach

We first formally define the capsule wardrobe problem and introduce our approach (Sec. 3.1). Then in Sec. 3.2 we present our unsupervised approach to learn compatibility and personalized styles, two key ingredients in capsule wardrobes. Finally, in Sec. 3.3, we overview our training procedure for cross-domain attribute recognition.

3.1. Subset selection for capsule wardrobes

i

s0

i , s1

i , ..., sNi−1

A capsule wardrobe is a minimal set of garments that

combine in versatile ways to create many compatible out-

fits (see Fig. 1). We cast capsule creation as the problem of

selecting a subset from a large set of candidates that maxi-

mizes quality (compatibility) and diversity (versatility).

3.1.1 Problem formulation and objective

We formulate the subset selection problem as follows.

Let i = 0, . . . , (m − 1) index the m layers of clothing

(e.g., outerwear, upper body, lower body, hosiery). Let

denote the set of candidate gar-

Ai =

ments/pieces in layer i, where sj

i , j = 0, . . . , (Ni − 1) is

the j-th piece in layer i, and Ni is the number of candidate

pieces for that layer. For example, the candidates could be

the inventory of a given catalog. If an outfit is composed of

one and only one piece from each layer, the candidate pieces

in total could generate a set Y of

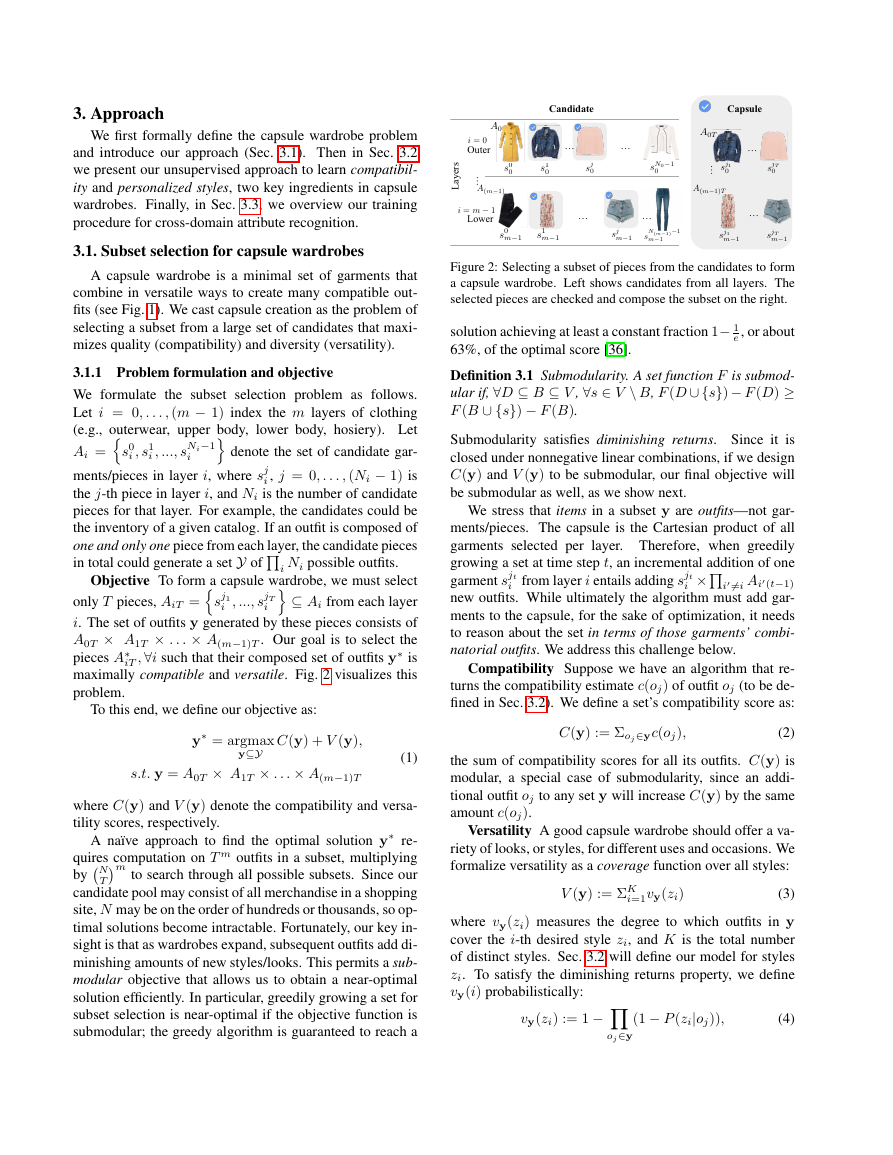

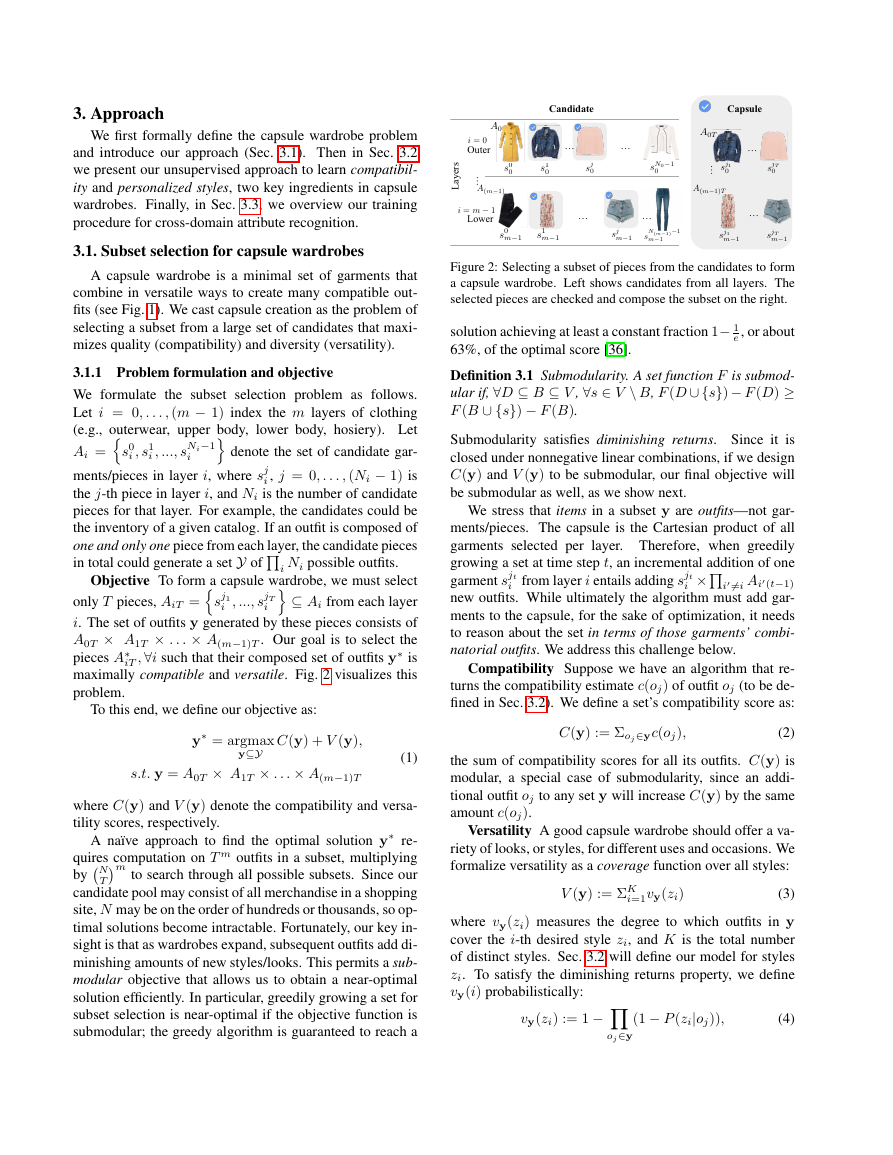

Objective To form a capsule wardrobe, we must select

only T pieces, AiT =

⊆ Ai from each layer

i. The set of outfits y generated by these pieces consists of

A0T × A1T × . . . × A(m−1)T . Our goal is to select the

pieces A∗iT ,∀i such that their composed set of outfits y∗ is

maximally compatible and versatile. Fig. 2 visualizes this

problem.

i Ni possible outfits.

sj1

i , ..., sjT

i

To this end, we define our objective as:

y∗ = argmax

C(y) + V (y),

y⊆Y

(1)

s.t. y = A0T × A1T × . . . × A(m−1)T

T

where C(y) and V (y) denote the compatibility and versa-

tility scores, respectively.

Figure 2: Selecting a subset of pieces from the candidates to form a capsule wardrobe. Left shows candidates from all layers. The selected pieces are checked and compose the subset on the right.

A naïve approach to find the optimal solution $y^*$ requires computation on $T^m$ outfits in a subset, multiplied by $\binom{N}{T}^m$ to search through all possible subsets. Since our candidate pool may consist of all merchandise in a shopping site, $N$ may be on the order of hundreds or thousands, so optimal solutions become intractable. Fortunately, our key insight is that as wardrobes expand, subsequent outfits add diminishing amounts of new styles/looks. This permits a submodular objective that allows us to obtain a near-optimal solution efficiently. In particular, greedily growing a set for subset selection is near-optimal if the objective function is monotone submodular; the greedy algorithm is guaranteed to reach a solution achieving at least a constant fraction $1 - \frac{1}{e}$, or about 63%, of the optimal score [36].
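To make the scale concrete, the short Python sketch below counts the candidate capsules an exhaustive search would have to score, assuming every layer has the same pool size $N$ and the same budget $T$ (the function name `naive_search_size` is ours, for illustration only). With the setting used in Sec. 4.2 ($N = 150$, $T = 4$, $m = 3$), each layer offers $\binom{150}{4} = 20{,}260{,}275$ choices, so there are roughly $8.3 \times 10^{21}$ candidate capsules, each with $T^m = 64$ outfits.

```python
from math import comb

def naive_search_size(n: int, t: int, m: int) -> tuple:
    """Size of the exhaustive search behind Eq. (1).

    Assumes every layer has the same number n of candidates and that
    t pieces are kept per layer (a simplification for illustration).
    Returns (number of candidate capsules, outfits per capsule).
    """
    capsules = comb(n, t) ** m      # choose t of n pieces independently in each of m layers
    outfits_per_capsule = t ** m    # Cartesian product of the kept pieces
    return capsules, outfits_per_capsule

capsules, outfits = naive_search_size(n=150, t=4, m=3)
print(f"{capsules:.2e} candidate capsules x {outfits} outfits each")
```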

Definition 3.1 Submodularity. A set function $F$ is submodular if, $\forall D \subseteq B \subseteq V$, $\forall s \in V \setminus B$, $F(D \cup \{s\}) - F(D) \geq F(B \cup \{s\}) - F(B)$.
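Definition 3.1 can be checked by brute force on toy ground sets. The sketch below is our own illustration (exponential in $|V|$, so only for tiny examples): it tests the inequality for every pair $D \subseteq B$ and every $s \notin B$. A set-coverage function passes, while $F(S) = |S|^2$, whose gains grow with the set in the same way the outfit count does when garments are added, fails. The items and covered elements are hypothetical.

```python
from itertools import combinations

def is_submodular(f, ground, tol=1e-12):
    """Exhaustively test Definition 3.1: for all D <= B <= V and s in V - B,
    f(D | {s}) - f(D) >= f(B | {s}) - f(B). Exponential cost: toy sets only."""
    V = frozenset(ground)
    sets = [frozenset(c) for r in range(len(V) + 1) for c in combinations(V, r)]
    for B in sets:
        for D in sets:
            if not D <= B:
                continue
            for s in V - B:
                if f(D | {s}) - f(D) < f(B | {s}) - f(B) - tol:
                    return False
    return True

# Hypothetical toy ground set: each item covers some elements.
covers = {"a": {1, 2}, "b": {2, 3}, "c": {3}}

def coverage(S):
    # Number of distinct elements covered by the items in S.
    return float(len(set().union(*(covers[x] for x in S))))

def squared_size(S):
    # Gains grow with |S|, so this function is not submodular.
    return float(len(S) ** 2)

print(is_submodular(coverage, covers))      # True
print(is_submodular(squared_size, covers))  # False
```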

Submodularity formalizes diminishing returns. Since it is closed under nonnegative linear combinations, if we design $C(y)$ and $V(y)$ to be submodular, our final objective will be submodular as well, as we show next.

We stress that items in a subset $y$ are outfits, not garments/pieces. The capsule is the Cartesian product of all garments selected per layer. Therefore, when greedily growing a set at time step $t$, an incremental addition of one garment $s_i^{j_t}$ from layer $i$ entails adding $\{s_i^{j_t}\} \times \prod_{i' \neq i} A_{i'(t-1)}$ new outfits. While ultimately the algorithm must add garments to the capsule, for the sake of optimization, it needs to reason about the set in terms of those garments’ combinatorial outfits. We address this challenge below.
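The outfit bookkeeping above can be illustrated with Python's `itertools.product`. In this toy sketch the garment IDs are hypothetical: adding one upper-body piece adds exactly (that piece) × (selected outer pieces) × (selected lower pieces) new outfits, and growing every layer at once makes the outfit count grow as $t^m$, i.e., superlinearly in the number of garments.

```python
from itertools import product

def outfits(layers):
    """All outfits that take exactly one piece from each layer."""
    return set(product(*layers))

# Hypothetical selections for m = 3 layers: outer, upper, lower.
outer, upper, lower = ["o1", "o2"], ["u1", "u2", "u3"], ["l1", "l2"]

before = outfits([outer, upper, lower])
after = outfits([outer, upper + ["u4"], lower])   # add one upper-body garment
new = after - before                              # equals {u4} x outer x lower
print(len(before), len(after), len(new))          # 12 16 4

# Growing all three layers together: outfit counts for t = 1..4 pieces per layer.
sizes = [len(outfits([range(t)] * 3)) for t in range(1, 5)]
print(sizes)                                      # [1, 8, 27, 64]
```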

Compatibility. Suppose we have an algorithm that returns the compatibility estimate $c(o_j)$ of outfit $o_j$ (to be defined in Sec. 3.2). We define a set’s compatibility score as:

$$C(y) := \sum_{o_j \in y} c(o_j), \qquad (2)$$

the sum of compatibility scores for all its outfits. $C(y)$ is modular, a special case of submodularity, since adding an outfit $o_j$ to any set $y$ increases $C(y)$ by the same amount $c(o_j)$.

Versatility. A good capsule wardrobe should offer a variety of looks, or styles, for different uses and occasions. We formalize versatility as a coverage function over all styles:

$$V(y) := \sum_{i=1}^{K} v_y(z_i), \qquad (3)$$

where $v_y(z_i)$ measures the degree to which outfits in $y$ cover the $i$-th desired style $z_i$, and $K$ is the total number of distinct styles. Sec. 3.2 will define our model for styles $z_i$. To satisfy the diminishing returns property, we define $v_y(z_i)$ probabilistically:

$$v_y(z_i) := 1 - \prod_{o_j \in y} \big(1 - P(z_i \mid o_j)\big), \qquad (4)$$

where $P(z_i \mid o_j)$ denotes the probability of a style $z_i$ given an outfit $o_j$. We define a generative model for $P(z_i \mid o_j)$ below. The idea is that each outfit “tries” to cover a style with probability $P(z_i \mid o_j)$, and the style is covered by a capsule if at least one of the outfits in it successfully covers that style. Thus, as $y$ expands, subsequent outfits add diminishing amounts of style coverage. A probabilistic expression for coverage is also used in [10] for blog posts.

We have thus far defined versatility in terms of uniform coverage over all $K$ styles. However, each user has his/her own preferences, and a universally versatile capsule may contain pieces that do not meet one’s taste. Thus, as a personalized variant of our approach, we adjust each style’s proportion in the coverage function by a user’s style preference. This extends our capsules with personalized versatility:

$$V(y) := \sum_{i=1}^{K} w_i \, v_y(z_i), \qquad (5)$$

where $w_i$ denotes a personalized preference for each style $i$. Sec. 3.2 explains how the personalization weights are discovered from user data.
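As a concrete reading of Eqs. (2) through (5), the NumPy sketch below scores a set of outfits from per-outfit compatibilities $c(o_j)$ and a matrix of style probabilities $P(z_i \mid o_j)$. The function names and toy numbers are hypothetical, not model outputs. Adding an outfit that repeats an already covered style gains less than adding one with a new style (diminishing returns), and a weight vector $w$ that favors one style can change which addition looks best.

```python
import numpy as np

def compatibility_score(c):
    """Eq. (2): C(y) = sum of c(o_j) over the outfits o_j in y."""
    return float(np.sum(c))

def versatility_score(P, w=None):
    """Eqs. (3)-(5): V(y) = sum_i w_i * v_y(z_i), where
    v_y(z_i) = 1 - prod_j (1 - P(z_i | o_j)).

    P: array of shape (num_outfits, K); row j holds P(z_i | o_j) for the K styles.
    w: optional length-K style weights; None gives w_i = 1 (Eq. 3).
    """
    P = np.asarray(P, dtype=float)
    w = np.ones(P.shape[1]) if w is None else np.asarray(w, dtype=float)
    coverage = 1.0 - np.prod(1.0 - P, axis=0)   # v_y(z_i) for each style
    return float(w @ coverage)

# Hypothetical style probabilities for three outfits over K = 2 styles.
P = np.array([[0.9, 0.1],
              [0.8, 0.2],    # similar to outfit 0
              [0.1, 0.9]])   # complementary to outfit 0

base = versatility_score(P[[0]])                        # 1.0 up to rounding
gain_similar = versatility_score(P[[0, 1]]) - base      # about 0.26
gain_complement = versatility_score(P[[0, 2]]) - base   # about 0.82

# A user who only cares about style 0 (w = [1, 0]) prefers the similar outfit.
w = [1.0, 0.0]
print(versatility_score(P[[0, 1]], w), versatility_score(P[[0, 2]], w))  # about 0.98 vs 0.91
print(compatibility_score([0.5, 0.25]))                                  # 0.75
```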

3.1.2 Optimization

A key challenge of subset selection for capsule wardrobes is that our subsets are on outfits, but we must form the subset by selecting garments. With each garment addition, the subset of outfits $y$ grows superlinearly, since every new garment can combine with all previous garments to form new outfits. Submodularity requires that the marginal gain of an addition never increase as the set grows, but each new garment yields more new outfits when more garments are already selected, so the gain actually increases. Thus, while our objective is submodular for adding outfits, it is not submodular for adding individual garments. However, we can make the following claim:

Claim 3.2 When fixing all other layers (i.e., upper, lower, outer) and selecting a subset of pieces one layer at a time, the probabilistic versatility coverage function in Eqn (3) is submodular, and the compatibility function in Eqn (2) is modular. See Supplementary File for proof.

Thus, given a single layer, our objective function is submodular for garments. By fixing all selected pieces in other layers, any additional garment will be combined with the same set of garments and form the same number of new outfits. Thus subsets in a given layer no longer grow superlinearly, so the guarantee that a greedy solution on that layer is near-optimal [36] still holds.
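Claim 3.2 can be sanity-checked numerically on a toy instance. In the sketch below, the per-outfit style probabilities are random draws standing in for topic-model inference (an assumption made only for illustration), garment names are hypothetical, and `is_submodular` is a brute-force test of Definition 3.1. Holding the upper and lower layers fixed, versatility is submodular in the set of outer garments; letting outer and upper garments vary together breaks submodularity, because an outer piece contributes nothing until some upper piece exists.

```python
import numpy as np
from itertools import combinations, product

K = 4                                   # number of styles in this toy example
_rng = np.random.default_rng(0)
_style_cache = {}

def style_probs(outfit):
    # Hypothetical stand-in for P(z | outfit): a random point on the K-simplex,
    # cached so that each outfit keeps the same value across calls.
    if outfit not in _style_cache:
        _style_cache[outfit] = _rng.dirichlet(np.ones(K))
    return _style_cache[outfit]

def coverage(layers):
    """Versatility V(y) of Eqs. (3)-(4) for the Cartesian product of `layers`."""
    outfits = list(product(*layers))
    if not outfits:
        return 0.0
    P = np.array([style_probs(o) for o in outfits])
    return float(np.sum(1.0 - np.prod(1.0 - P, axis=0)))

def is_submodular(f, ground, tol=1e-9):
    """Brute-force test of Definition 3.1 (exponential; toy ground sets only)."""
    V = frozenset(ground)
    sets = [frozenset(c) for r in range(len(V) + 1) for c in combinations(V, r)]
    return all(f(D | {s}) - f(D) >= f(B | {s}) - f(B) - tol
               for B in sets for D in sets if D <= B for s in V - B)

UPPER, LOWER = ["u1", "u2"], ["l1"]     # hypothetical fixed selections

def f_outer(S):
    # Only the outer layer varies; S is a set of outer garments.
    return coverage([sorted(S), UPPER, LOWER])

def f_mixed(S):
    # Outer and upper layers vary together; S holds (layer, garment) pairs.
    outer = sorted(g for layer, g in S if layer == "outer")
    upper = sorted(g for layer, g in S if layer == "upper")
    return coverage([outer, upper, LOWER])

print(is_submodular(f_outer, ["o1", "o2", "o3"]))                      # True
print(is_submodular(f_mixed, [("outer", "o1"), ("outer", "o2"),
                              ("upper", "u1"), ("upper", "u2")]))      # False
```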

To exploit this, we develop an EM-like iterative approach to approximate a greedy solution over all layers: we iteratively fix the subsets selected in other layers, and focus the current selection on a single layer. After sufficient iterations, our subsets converge to a fixed set. Algorithm 1 gives the complete steps.

Algorithm 1 Proposed iterative greedy algorithm for submodular maximization, where obj(y) := C(y) + V(y).

A_iT := ∅, ∀i
Δ_obj^{m−1} := ε + 1    ▷ ε is the tolerance degree for convergence
obj_prev^{m−1} := 0
while Δ_obj^{m−1} ≥ ε do
  for each layer i = 0, 1, ..., (m − 1) do
    A_iT = A_i0 := ∅    ▷ Reset selected pieces in layer i
    obj_cur^i := 0
    for each time step t = 1, 2, ..., T do
      y_{t−1} = A_{i(t−1)} × ∏_{i′≠i} A_{i′T}
      s_i^{j_t} := argmax_{s ∈ A_i \ A_{i(t−1)}} δ_s, where δ_s = obj(y_{t−1} ⊎ s) − obj(y_{t−1})    ▷ Max increment
      A_it := {s_i^{j_t}} ∪ A_{i(t−1)}    ▷ Update layer i
      obj_cur^i := obj_cur^i + δ_{s_i^{j_t}}
    end for
  end for
  Δ_obj^{m−1} := obj_cur^{m−1} − obj_prev^{m−1}
  obj_prev^{m−1} := obj_cur^{m−1}
end while

procedure INCREMENTAL ADDITION (y_t := y_{t−1} ⊎ s)
  y_t^+ := s, s ∈ A_i \ A_{i(t−1)}
  for j ∈ {0, ..., m − 1}, j ≠ i do
    if A_jT ≠ ∅ then
      y_t^+ := y_t^+ × A_jT
    end if
  end for
  y_t := y_{t−1} ∪ y_t^+
end procedure

Our algorithm is quite efficient. Whereas a naïve search would take more than 1B hours for our data with $N = 150$, our algorithm returns an approximate capsule in only 200 seconds. Most computation is devoted to computing the objective function, which requires topic model inference (see below). A naïve greedy approach on garments would require $O(NT^4)$ time for $m = 4$ layers, while our iterative approach requires $O(NT^3)$ time per iteration (details in Supp.). For our datasets, it requires just 5 iterations.
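For readers who prefer code, here is a compact Python sketch of Algorithm 1. It is an illustration under stated assumptions, not the authors' implementation: `obj` is any user-supplied function scoring a list of outfits (standing in for $C(y) + V(y)$), layers that are still empty are skipped when forming outfits as in INCREMENTAL ADDITION, each greedy step re-scores the whole outfit set instead of updating it incrementally, and the `TAGS`-based objective in the usage example is a hypothetical toy.

```python
from itertools import product

def layer_outfits(i, pieces, selected):
    """Outfits formed by `pieces` in layer i with the other layers' current
    selections; other layers that are still empty are skipped."""
    layers = [pieces if j == i else sel for j, sel in enumerate(selected)]
    layers = [sel for j, sel in enumerate(layers) if j == i or sel]
    return list(product(*layers))

def iterative_greedy(candidates, T, obj, eps=1e-6, max_iters=20):
    """Sketch of Algorithm 1: re-select each layer greedily with the others fixed."""
    m = len(candidates)
    assert all(len(c) >= T for c in candidates), "each layer needs at least T candidates"
    selected = [[] for _ in range(m)]            # A_iT := empty set for all i
    prev, delta, iters = 0.0, eps + 1.0, 0
    while delta >= eps and iters < max_iters:
        for i in range(m):
            selected[i] = []                     # reset selected pieces in layer i
            cur = 0.0
            for _ in range(T):
                base = obj(layer_outfits(i, selected[i], selected))
                gains = {s: obj(layer_outfits(i, selected[i] + [s], selected)) - base
                         for s in candidates[i] if s not in selected[i]}
                best = max(gains, key=gains.get)  # max increment
                selected[i].append(best)          # update layer i
                cur += gains[best]
        delta, prev = cur - prev, cur             # change in the last layer's objective
        iters += 1
    return selected

TAGS = {  # hypothetical style tags per garment
    "coat": {"formal"}, "parka": {"sporty"}, "trench": {"formal"},
    "blouse": {"formal"}, "tee": {"casual"}, "hoodie": {"sporty"},
    "skirt": {"formal"}, "jeans": {"casual"}, "leggings": {"sporty"},
}

def toy_obj(outfits):
    # Hypothetical objective: distinct tags covered plus a small per-outfit bonus.
    covered = set()
    for outfit in outfits:
        for piece in outfit:
            covered |= TAGS[piece]
    return len(covered) + 0.01 * len(outfits)

candidates = [["coat", "parka", "trench"], ["blouse", "tee", "hoodie"],
              ["skirt", "jeans", "leggings"]]
capsule = iterative_greedy(candidates, T=2, obj=toy_obj)
print(capsule)
```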

3.2. Style topic models for compatibility

Having defined the capsule selection objective and optimization, we now present our approach to model versatility (via $P(z_i \mid o_j)$) and compatibility $c(o_j)$ simultaneously.

Prior work on compatibility takes a supervised approach. Given ground truth compatible items (either by manual labels like curated sets of product images on Polyvore [29, 39, 14] or by using Amazon co-purchase data as a proxy [35, 41, 16]), a (usually discriminative) compatibility metric is trained. See Fig. 3. However, the supervised strategy has weaknesses. First, items purchased at the same time can be a weak proxy for visual compatibility. Second, user-created sets often focus on the visualization of the collage, usually contain fewer than two main (non-accessory) pieces, and lack layers like hosiery. Third, sources like Amazon and Polyvore are limited to brands selected by vendors, a fraction of the wide variety of clothing people wear in real life. Fourth, obtaining the negative non-compatible examples required by supervised discriminative methods is problematic. Previous work [35, 41, 16, 29, 39] generates negative examples by randomly swapping items in positive pairs, but there is no guarantee that the random combinations are true negatives. Not observing a pair of items together does not necessarily mean they do not go well together.

Figure 3: L to R: Amazon co-purchase example; Polyvore user-curated set; Chictopia full-body outfit (our training source).

To address these issues, we propose a generative compatibility model that is learned from unlabeled images of people wearing outfits “in the wild” (Fig. 3, right). We explore a topic model from text analysis, namely Correlated Topic Models (CTM) [26]. CTM is a Bayesian multinomial mixture model that supposes a small number $K$ of latent topics account for the distribution of observed words in any given document.

It uses the following generative process for a corpus $D$ consisting of $M$ documents, each of length $L_i$:

1. Choose $\eta_i \sim \mathcal{N}(\mu, \Sigma)$, where $i \in \{1, \ldots, M\}$ and $\mu$, $\Sigma$ are a $K$-dimensional mean and covariance matrix. $\theta_{ik} = e^{\eta_{ik}} / \sum_{k'=1}^{K} e^{\eta_{ik'}}$ maps $\eta_i$ to a simplex.

2. Choose $\phi_k \sim \mathrm{Dir}(\beta)$, where $k \in \{1, \ldots, K\}$ and $\mathrm{Dir}(\beta)$ is the Dirichlet distribution with parameter $\beta$.

3. For each word indexed by $(i, j)$, where $j \in \{1, \ldots, L_i\}$ and $i \in \{1, \ldots, M\}$:

(a) Choose a topic $z_{i,j} \sim \mathrm{Multinomial}(\theta_i)$.

(b) Choose a word $x_{i,j} \sim \mathrm{Multinomial}(\phi_{z_{i,j}})$.
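The generative process above can be simulated directly. The NumPy sketch below draws a synthetic corpus; in our visual setting each document would be an outfit and each word an attribute index. It assumes a symmetric Dirichlet with a scalar $\beta$, and the function name and toy parameters are hypothetical. It only samples from the model; fitting CTM to data requires approximate posterior inference, which is not shown here.

```python
import numpy as np

def sample_ctm_corpus(mu, Sigma, beta, doc_lengths, vocab_size, seed=0):
    """Sample documents from the CTM generative process (steps 1-3 above).

    mu, Sigma: K-dim mean and K x K covariance of the logistic-normal prior.
    beta: scalar parameter of a symmetric Dirichlet over the vocabulary (assumption).
    Returns the topic-word matrix phi (K x V), per-document theta, and word lists.
    """
    rng = np.random.default_rng(seed)
    K = len(mu)
    phi = rng.dirichlet(np.full(vocab_size, beta), size=K)   # step 2: phi_k ~ Dir(beta)
    thetas, docs = [], []
    for L in doc_lengths:
        eta = rng.multivariate_normal(mu, Sigma)             # step 1: eta_i ~ N(mu, Sigma)
        theta = np.exp(eta - eta.max())                      # softmax maps eta_i to the simplex
        theta /= theta.sum()
        z = rng.choice(K, size=L, p=theta)                   # step 3(a): topic per word
        words = [int(rng.choice(vocab_size, p=phi[k])) for k in z]  # step 3(b)
        thetas.append(theta)
        docs.append(words)
    return phi, np.array(thetas), docs

# Hypothetical setting: K = 3 styles, 10 attributes, styles 0 and 1 positively correlated.
mu = np.zeros(3)
Sigma = np.array([[1.0, 0.8, 0.0],
                  [0.8, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
phi, thetas, docs = sample_ctm_corpus(mu, Sigma, beta=0.5, doc_lengths=[6, 4, 8], vocab_size=10)
```

The positive off-diagonal entry of $\Sigma$ is what lets CTM express that two styles tend to co-occur in one outfit, which a Dirichlet prior cannot.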

Only the word occurrences are observed. Following [17], we map textual topic models to visual ones: a “document” is an outfit, a “word” is an inferred visual attribute (e.g., floral, chiffon), and a “topic” is a style. The model discovers the compositions of visual cues (i.e., attributes) that characterize styles. A topic might capture plaid blue blouses or tight leather skirts. Prior work [17] models styles with a Dirichlet prior, which treats topics within an image as independent. For compatibility, we find CTM’s logistic normal prior above [26] beneficial to account for style correlations (e.g., a formal blazer is more likely to be combined with a skirt than with sporty leggings).

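CTM estimates the latent variables by maximizing the posterior distribution given a corpus $D$:

$$p(\theta, z \mid D, \mu, \Sigma, \beta) \propto \prod_{i=1}^{M} p(\theta_i \mid \mu, \Sigma) \prod_{j=1}^{L_i} p(z_{ij} \mid \theta_i)\, p(x_{ij} \mid z_{ij}, \beta). \qquad (6)$$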

First we find the latent variables that fit assembled outfits on full-body images. Next, given an arbitrary combination of catalog pieces, we predict their attributes, and take the union of attributes on all pieces to form an outfit $o_j$. Finally we infer its compatibility by the likelihood:

$$c(o_j) := p(o_j \mid \mu, \Sigma, \beta). \qquad (7)$$

Combinations similar to previously assembled outfits $D$ will have higher probability. For this generative model, no negative examples need to be contrived. The training pool should be outfits like those we want the model to emulate; we use full-body photos posted to a fashion website.

Given a database of unlabeled outfit images, we predict their attributes. Then we apply CTM to obtain a set of styles, where each style $k$ is an attribute distribution $\phi_k$. Given an outfit $o_j$, CTM infers its style composition $\theta_{o_j} = [\theta_{o_j 1}, \ldots, \theta_{o_j K}]$. Then we have:

$$P(z_i \mid o_j) := P(z_i \mid \theta_{o_j}) = \theta_{o_j i}, \qquad (8)$$

which is used to compute our versatility coverage in Eq. (4).

We personalize the styles emphasized for a user in Eq. (5) as follows. Given a collection of outfits $\{o_{j_1}, \ldots, o_{j_U}\}$ owned by a user $p$, e.g., as shown in that user’s purchase history catalog photos or his/her album on a fashion website, we learn his/her style preference $\theta^{(user)}_p$ by aggregating all outfits: $\theta^{(user)}_p = \frac{1}{U} \sum_j \theta_{o_j}$. Hence, a user’s personalized weight $w_i$ for each style $i$ is $\theta^{(user)}_{pi}$.
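The personalization weights amount to averaging the inferred style compositions of a user's outfits. A minimal NumPy sketch follows; the function name and the $\theta_{o_j}$ values for $U = 3$ outfits and $K = 3$ styles are hypothetical. Because each $\theta_{o_j}$ lies on the simplex, the resulting weights also sum to 1.

```python
import numpy as np

def user_style_weights(theta_outfits):
    """theta^(user)_p = (1/U) * sum_j theta_{o_j}; entry i is the weight w_i in Eq. (5)."""
    theta = np.asarray(theta_outfits, dtype=float)   # shape (U, K): one row per outfit
    return theta.mean(axis=0)

# Hypothetical style compositions inferred for three of a user's outfits.
theta_user_outfits = [[0.7, 0.2, 0.1],
                      [0.5, 0.4, 0.1],
                      [0.6, 0.3, 0.1]]
w = user_style_weights(theta_user_outfits)
print(w)  # approximately [0.6 0.3 0.1]
```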

Unlike previous work that uses supervision from human-created matches or co-purchase information, our model is fully unsupervised. While we train attribute models on a disjoint pool of attribute-labeled images, our topic model runs on “inferred” attributes, and annotators do not touch the images from which we learn compatibility.

3.3. Cross-domain attribute recognition


Finally, we describe our approach to infer cross-domain attributes on both catalog and outfit images.

Vocabulary and data collection. Since fine-grained attributes are valuable for style, we build our vocabulary on the 195 attributes enumerated in [17]. Their dataset has attributes labeled only on outfit images, so we collect catalog images labeled with the same attribute vocabulary. To gather images, we use keyword search with the attribute name on Google, then manually prune those where the attribute is absent. This yields 100 to 300 positive training images per attribute, in total 12K images. (Details in Supp.)

Curriculum learning from shop to street Catalog im-

ages usually have a clear background, and are free of tricky

lighting conditions and deformations from body pose. As

a result, attributes are more readily recognizable in cat-

alog images than full-body outfit images. We propose

a two stage curriculum learning approach for cross do-

main attribute recognition.

In the first stage, we finetune

�

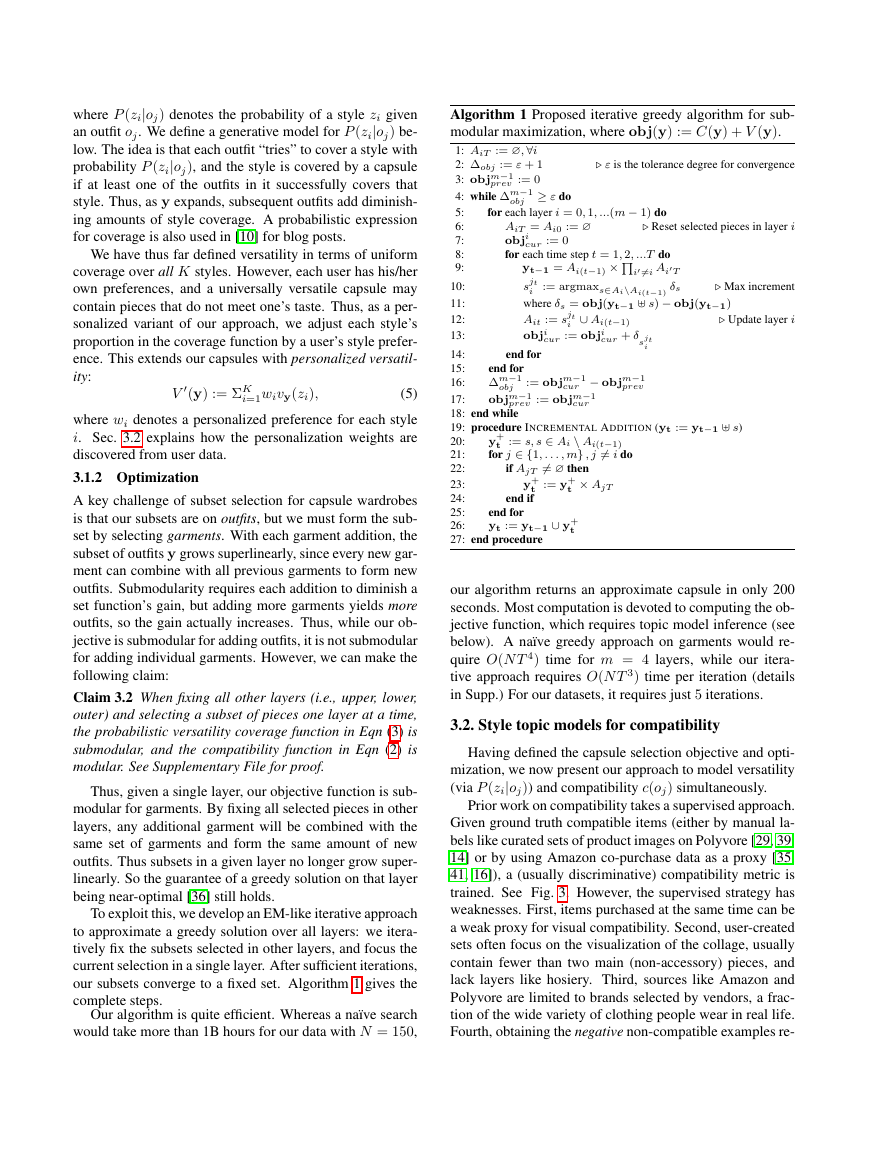

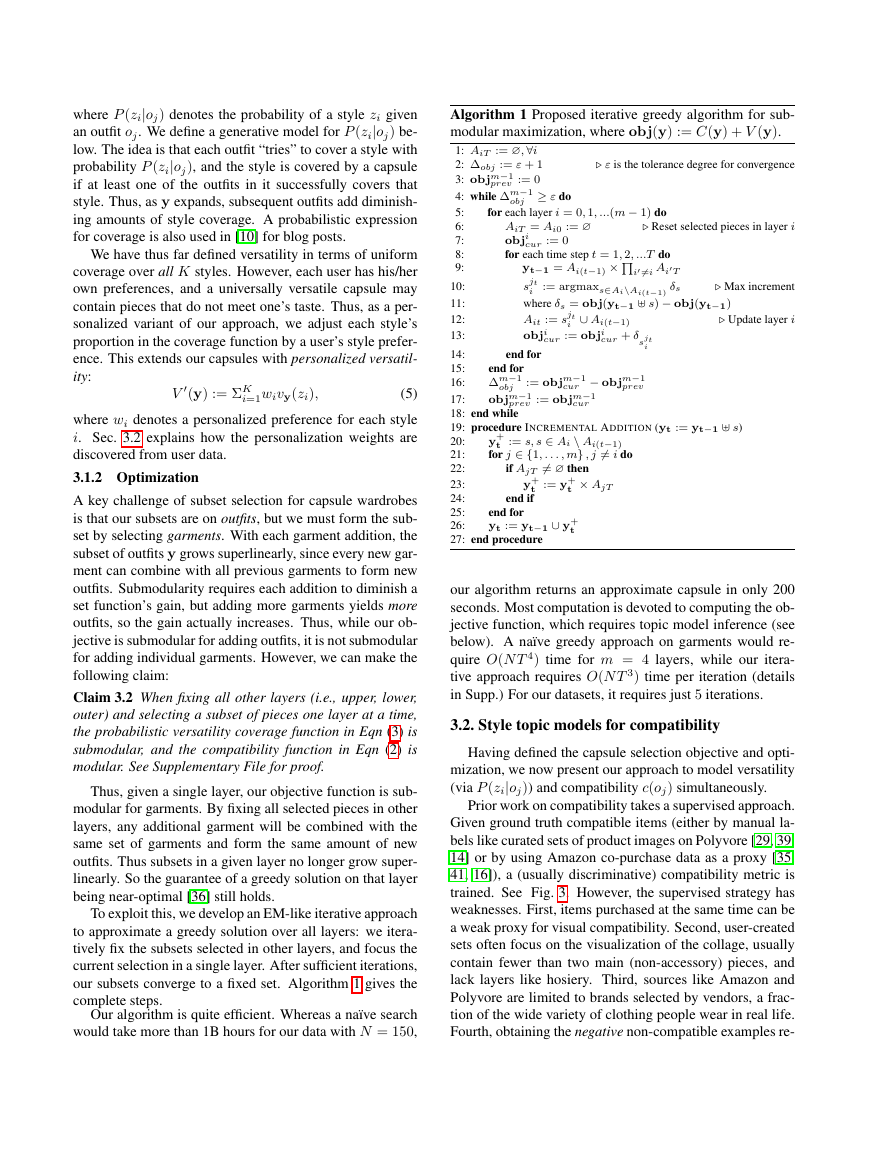

Figure 4: Qualitative examples of most (left) and least (right) compatible outfits as scored by our model. Here we show both outfits with 2

pieces (top) and 3 pieces (bottom).

a deep neural network, ResNet-50 [15] pretrained on Ima-

geNet [7], for catalog attribute recognition. In the second

stage, we first detect and crop images into upper and lower

body instances, and then we finetune the network from the

first stage for outfit attribute recognition. Evaluating on the

validation split from the 19K image dataset [17], we see

a significant 15% mAP improvement, especially on chal-

lenging attributes such as material and neckline, which are

subtle or occupy a small region on outfit images.

4. Experiments

We first evaluate our compatibility estimation in isolation (Sec. 4.1). Then, we evaluate our algorithm’s capsule wardrobes, for both quality and efficiency (Sec. 4.2).

4.1. Compatibility

Dataset. Previous studies of compatibility [29, 39, 14] each collect a dataset from polyvore.com. Polyvore is a platform where fashion-conscious users create sets of clothing pieces that go well with each other. While these compatible sets are valuable supervision, as discussed above, collecting “incompatible” outfits is problematic. Although our method uses no “incompatible” examples for training, to facilitate evaluation we devise a more reliable mechanism to avoid false negatives. We collect 3,759 Polyvore outfits, composed of 7,478 pieces, each with meta-labels such as season (winter, spring, summer, fall), occasion (work, vacation), and function (date, hike). Tab. 1 summarizes the dataset breakdown. We exploit the meta-labels to generate incompatible outfits. For each compatible outfit, we generate an incompatible one by randomly swapping one piece for another piece in the same layer from an outfit with an exclusive meta-label. For example, each winter (work) outfit will swap a piece with a summer (vacation) outfit. We use outfits that have at least 2 pieces from different layers as positives, and for each positive outfit, we generate 5 negatives. In total, our test set has 2,574 positives and 12,870 negatives. In short, by swapping under the guidance of meta-labels, the negatives are more likely to be true negatives. See Supp. for examples.
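The meta-label swapping scheme can be sketched as follows. This is a hypothetical illustration, not the authors' code: each outfit is assumed to carry a single meta-label, only the two exclusive pairs named in the text (winter/summer, work/vacation) are encoded, and a swap replaces one randomly chosen layer with a same-layer piece drawn from an outfit with the exclusive label. The outfit records and piece IDs are invented for the example.

```python
import random

# Exclusive meta-label pairs from the example in the text; no other pairs are assumed.
EXCLUSIVE = {"winter": "summer", "summer": "winter", "work": "vacation", "vacation": "work"}

def make_negatives(outfits, n_neg=5, seed=0):
    """Return (positive_index, negative_pieces) pairs, n_neg per eligible positive.

    outfits: list of dicts {"label": str, "pieces": {layer: piece_id}}.
    """
    rng = random.Random(seed)
    negatives = []
    for idx, pos in enumerate(outfits):
        donors = [o for o in outfits if o["label"] == EXCLUSIVE.get(pos["label"])]
        # Layers for which some donor outfit can supply a replacement piece.
        layers = [lyr for lyr in sorted(pos["pieces"])
                  if any(lyr in d["pieces"] for d in donors)]
        if not layers:
            continue
        for _ in range(n_neg):
            layer = rng.choice(layers)
            pool = [d["pieces"][layer] for d in donors if layer in d["pieces"]]
            neg = dict(pos["pieces"])
            neg[layer] = rng.choice(pool)   # swap one piece within the same layer
            negatives.append((idx, neg))
    return negatives

outfits = [
    {"label": "winter", "pieces": {"upper": "w_sweater", "lower": "w_wool_pants"}},
    {"label": "winter", "pieces": {"upper": "w_turtleneck", "lower": "w_cords"}},
    {"label": "summer", "pieces": {"upper": "s_tank", "lower": "s_shorts"}},
]
negs = make_negatives(outfits)
print(len(negs))  # 15: five negatives for each of the three positives
```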

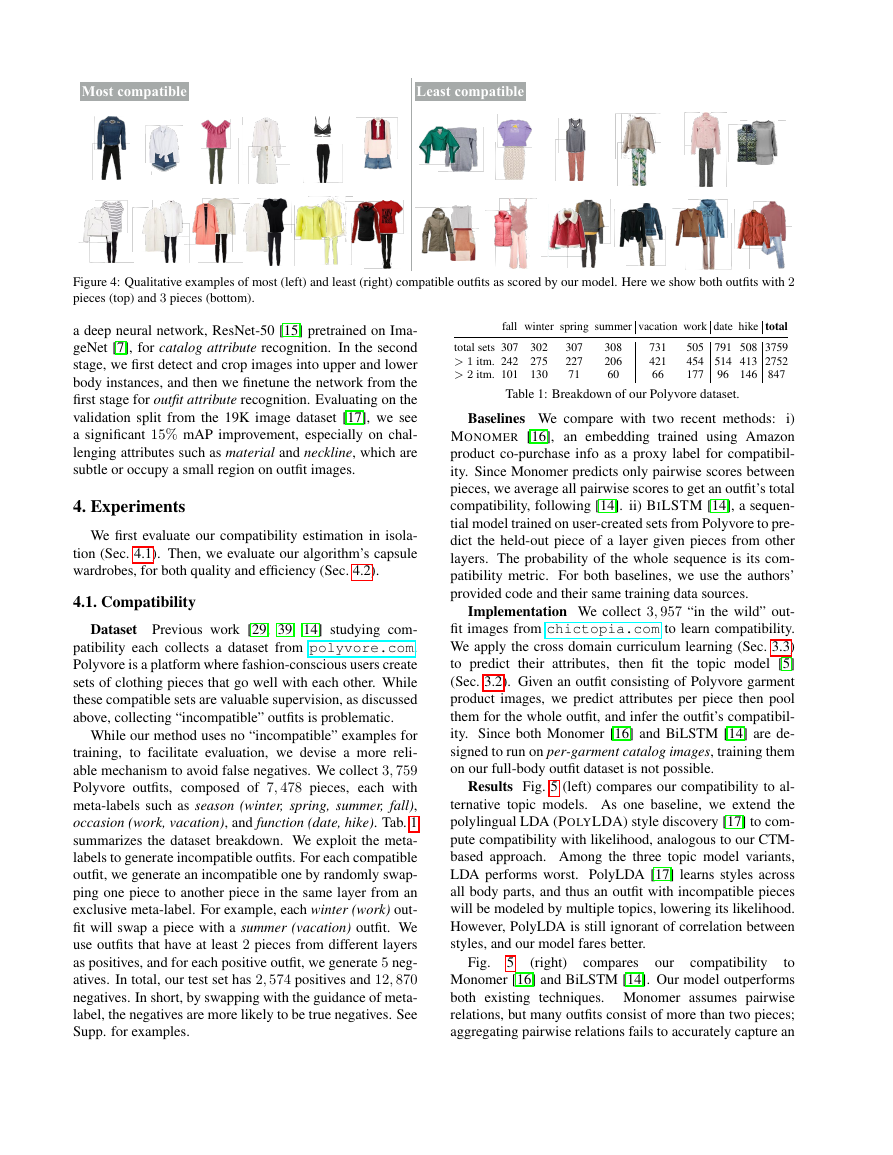

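| | fall | winter | spring | summer | vacation | work | date | hike | total |
|---|---|---|---|---|---|---|---|---|---|
| total sets | 307 | 302 | 307 | 308 | 731 | 505 | 791 | 508 | 3759 |
| > 1 itm. | 242 | 275 | 227 | 206 | 421 | 454 | 514 | 413 | 2752 |
| > 2 itm. | 101 | 130 | 71 | 60 | 66 | 177 | 96 | 146 | 847 |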

Table 1: Breakdown of our Polyvore dataset.

Baselines. We compare with two recent methods: i) MONOMER [16], an embedding trained using Amazon product co-purchase info as a proxy label for compatibility. Since Monomer predicts only pairwise scores between pieces, we average all pairwise scores to get an outfit’s total compatibility, following [14]. ii) BILSTM [14], a sequential model trained on user-created sets from Polyvore to predict the held-out piece of a layer given pieces from other layers. The probability of the whole sequence is its compatibility metric. For both baselines, we use the authors’ provided code and their same training data sources.
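Turning a pairwise model into an outfit-level score, as done for the Monomer baseline, averages over all unordered pairs of pieces. A minimal sketch, where `pair_score` is a hypothetical stand-in for any pairwise compatibility model and the table values are invented:

```python
from itertools import combinations
from statistics import mean

def outfit_score_from_pairs(pieces, pair_score):
    """Average a pairwise compatibility score over all unordered pairs of pieces."""
    pairs = list(combinations(pieces, 2))
    if not pairs:
        raise ValueError("an outfit needs at least two pieces")
    return mean(pair_score(a, b) for a, b in pairs)

# Hypothetical pairwise scores for a three-piece outfit.
table = {frozenset({"blazer", "skirt"}): 0.9,
         frozenset({"blazer", "heels"}): 0.6,
         frozenset({"skirt", "heels"}): 0.3}
score = outfit_score_from_pairs(["blazer", "skirt", "heels"],
                                lambda a, b: table[frozenset({a, b})])
print(score)  # 0.6 up to floating-point rounding
```

Because every pair is weighted equally, a single badly matched pair is diluted as the number of pieces grows, which is one plausible way pairwise aggregation can miss outfit-level incompatibility.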

Implementation. We collect 3,957 “in the wild” outfit images from chictopia.com to learn compatibility. We apply the cross-domain curriculum learning (Sec. 3.3) to predict their attributes, then fit the topic model [5] (Sec. 3.2). Given an outfit consisting of Polyvore garment product images, we predict attributes per piece, then pool them for the whole outfit, and infer the outfit’s compatibility. Since both Monomer [16] and BiLSTM [14] are designed to run on per-garment catalog images, training them on our full-body outfit dataset is not possible.

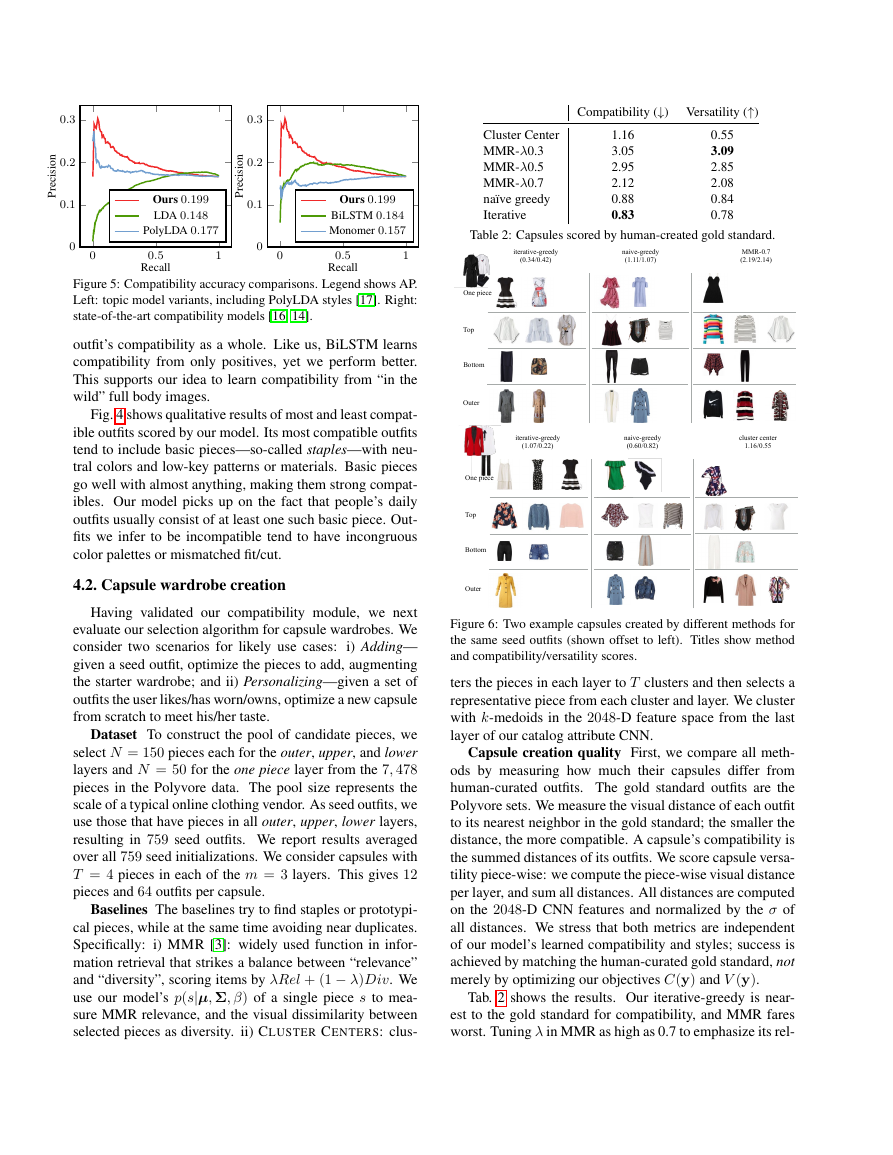

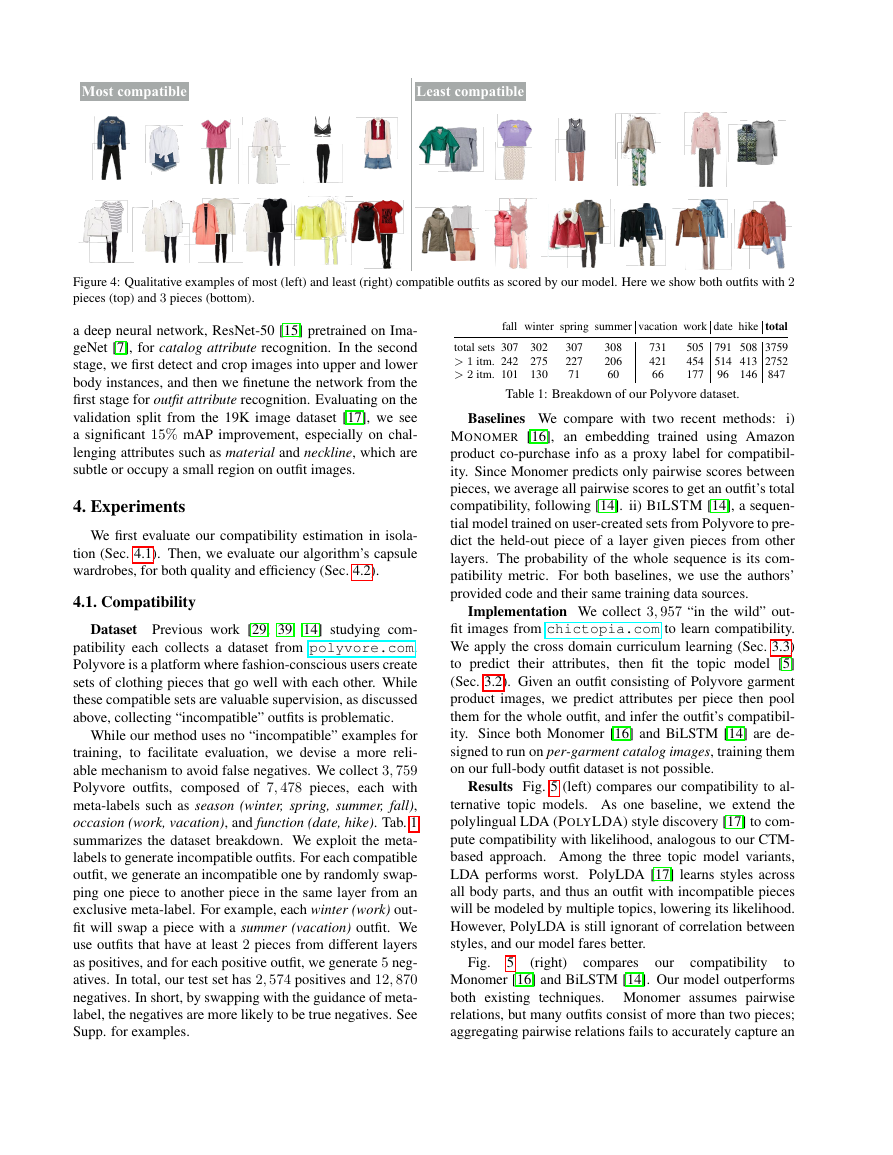

Results. Fig. 5 (left) compares our compatibility to alternative topic models. As one baseline, we extend the polylingual LDA (POLYLDA) style discovery [17] to compute compatibility with likelihood, analogous to our CTM-based approach. Among the three topic model variants, LDA performs worst. PolyLDA [17] learns styles across all body parts, and thus an outfit with incompatible pieces will be modeled by multiple topics, lowering its likelihood. However, PolyLDA is still ignorant of correlation between styles, and our model fares better.

Fig. 5 (right) compares our compatibility to Monomer [16] and BiLSTM [14]. Our model outperforms both existing techniques. Monomer assumes pairwise relations, but many outfits consist of more than two pieces; aggregating pairwise relations fails to accurately capture an outfit’s compatibility as a whole. Like us, BiLSTM learns compatibility from only positives, yet we perform better. This supports our idea to learn compatibility from “in the wild” full-body images.

Figure 5: Compatibility accuracy comparisons (precision vs. recall). Legend shows AP. Left: topic model variants, including PolyLDA styles [17] (Ours 0.199, PolyLDA 0.177, LDA 0.148). Right: state-of-the-art compatibility models [16, 14] (Ours 0.199, BiLSTM 0.184, Monomer 0.157).

Fig. 4 shows qualitative results of most and least compatible outfits scored by our model. Its most compatible outfits tend to include basic pieces (so-called staples) with neutral colors and low-key patterns or materials. Basic pieces go well with almost anything, which makes them highly compatible. Our model picks up on the fact that people’s daily outfits usually consist of at least one such basic piece. Outfits we infer to be incompatible tend to have incongruous color palettes or mismatched fit/cut.

4.2. Capsule wardrobe creation

Having validated our compatibility module, we next evaluate our selection algorithm for capsule wardrobes. We consider two scenarios for likely use cases: i) Adding: given a seed outfit, optimize the pieces to add, augmenting the starter wardrobe; and ii) Personalizing: given a set of outfits the user likes/has worn/owns, optimize a new capsule from scratch to meet his/her taste.

Dataset To construct the pool of candidate pieces, we select N = 150 pieces each for the outer, upper, and lower layers and N = 50 for the one-piece layer from the 7,478 pieces in the Polyvore data. The pool size represents the scale of a typical online clothing vendor. As seed outfits, we use those that have pieces in all of the outer, upper, and lower layers, resulting in 759 seed outfits. We report results averaged over all 759 seed initializations. We consider capsules with T = 4 pieces in each of the m = 3 layers. This gives 4 × 3 = 12 pieces and 4 × 4 × 4 = 64 outfits per capsule.
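The piece and outfit counts above follow from taking one piece per layer: a capsule with T pieces in each of m layers has T·m pieces and T^m outfits. The minimal sketch below enumerates them with `itertools.product`; the piece IDs and the function name `capsule_outfits` are hypothetical placeholders.

```python
from itertools import product

def capsule_outfits(capsule):
    """Enumerate every outfit a capsule supports: one piece from each layer."""
    return list(product(*capsule))

# Hypothetical capsule: T = 4 piece IDs in each of m = 3 layers.
capsule = [
    ["outer1", "outer2", "outer3", "outer4"],
    ["upper1", "upper2", "upper3", "upper4"],
    ["lower1", "lower2", "lower3", "lower4"],
]
n_pieces = sum(len(layer) for layer in capsule)   # T * m = 12
outfits = capsule_outfits(capsule)                # T ** m = 64
print(n_pieces, len(outfits))                     # 12 64
```

Because the count is multiplicative, adding a fifth piece to one layer yields 5 × 4 × 4 = 80 outfits, i.e. 16 new outfits from a single garment.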

Baselines The baselines try to find staples or prototypi-

cal pieces, while at the same time avoiding near duplicates.

Specifically: i) MMR [3]: widely used function in infor-

mation retrieval that strikes a balance between “relevance”

and “diversity”, scoring items by λRel + (1 − λ)Div. We

use our model’s p(s|µ, Σ, β) of a single piece s to mea-

sure MMR relevance, and the visual dissimilarity between

selected pieces as diversity. ii) CLUSTER CENTERS: clus-

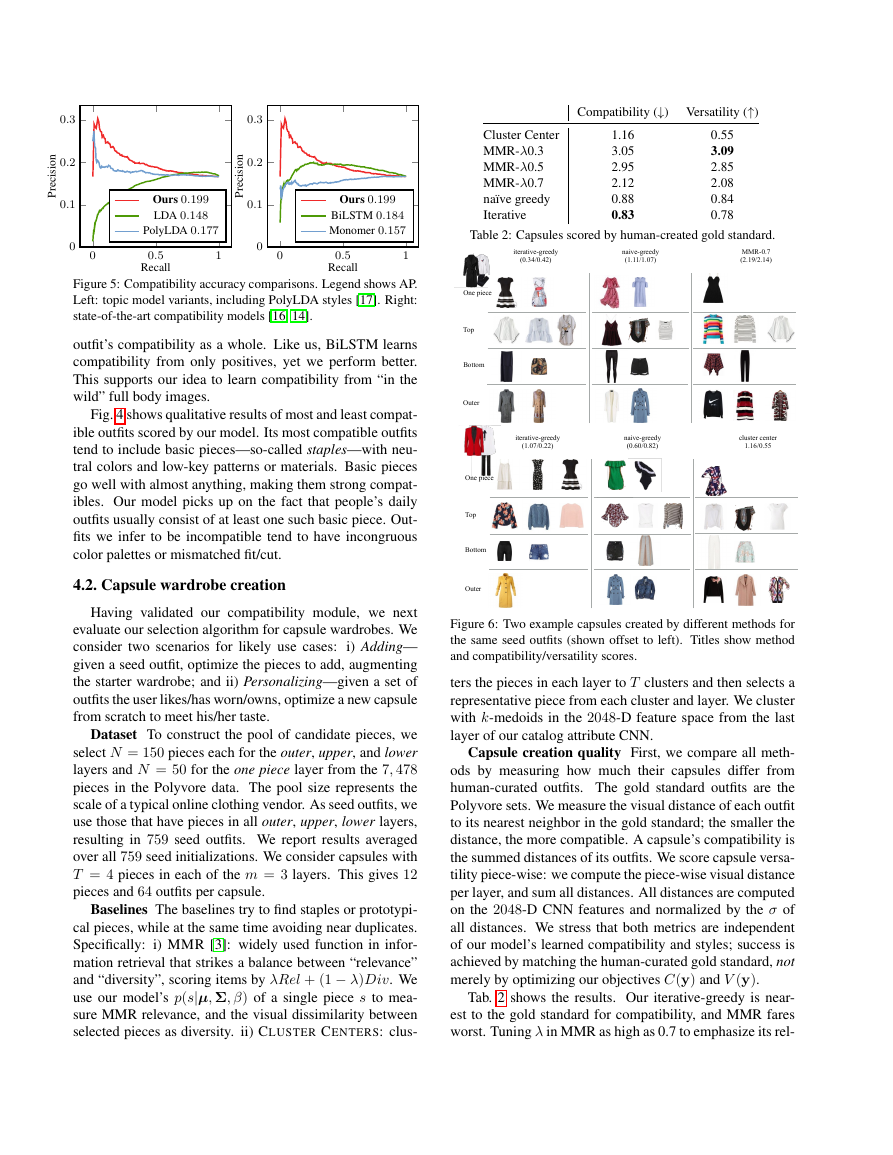

Compatibility (↓) Versatility (↑)

Cluster Center

MMR-λ0.3

MMR-λ0.5

MMR-λ0.7

na¨ıve greedy

Iterative

1.16

3.05

2.95

2.12

0.88

0.83

0.55

3.09

2.85

2.08

0.84

0.78

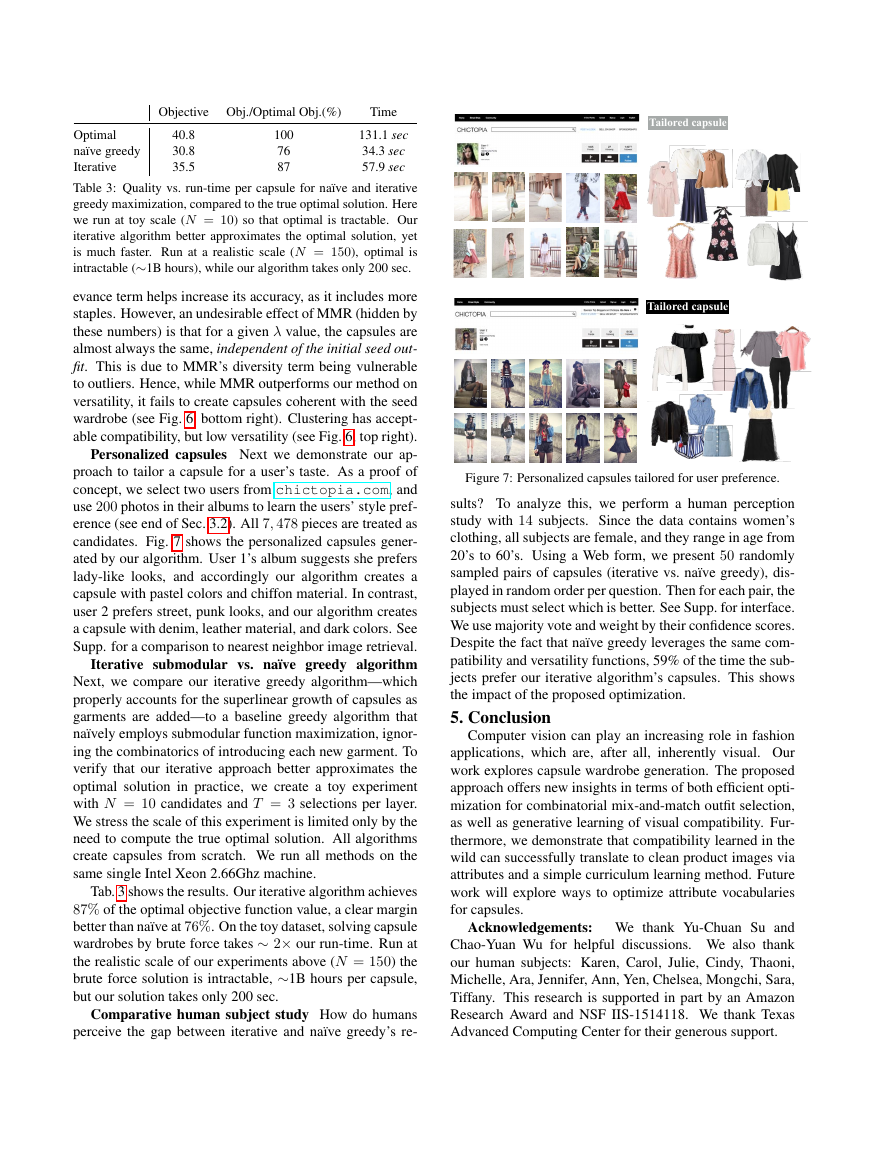

Table 2: Capsules scored by human-created gold standard.

Figure 6: Two example capsules created by different methods for

the same seed outfits (shown offset to left). Titles show method

and compatibility/versatility scores.

ters the pieces in each layer to T clusters and then selects a

representative piece from each cluster and layer. We cluster

with k-medoids in the 2048-D feature space from the last

layer of our catalog attribute CNN.
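The MMR baseline can be sketched as a greedy loop that repeatedly adds the piece with the highest λ·Rel + (1 − λ)·Div. In this minimal sketch the relevance scores stand in for p(s|µ, Σ, β) and are assumed precomputed, and diversity is taken as the minimum Euclidean distance to the pieces already selected; that choice of dissimilarity is an assumption, since the text only specifies “visual dissimilarity”. The name `mmr_select` is hypothetical.

```python
import numpy as np

def mmr_select(relevance, features, k, lam=0.5):
    """Greedy MMR: pick k items maximizing lam * Rel + (1 - lam) * Div.

    relevance: (n,) per-piece relevance, e.g. a likelihood (assumed precomputed).
    features:  (n, d) visual features.
    Div is assumed here to be the minimum Euclidean distance to the pieces
    already selected (0 for the first pick).
    """
    relevance = np.asarray(relevance, dtype=float)
    features = np.asarray(features, dtype=float)
    n = len(relevance)
    selected = []
    for _ in range(min(k, n)):
        best, best_score = None, -np.inf
        for i in range(n):
            if i in selected:
                continue
            if selected:
                dists = np.linalg.norm(features[selected] - features[i], axis=1)
                div = dists.min()
            else:
                div = 0.0
            score = lam * relevance[i] + (1 - lam) * div
            if score > best_score:
                best, best_score = i, score
        selected.append(best)
    return selected
```

With λ = 1 the loop reduces to ranking by relevance alone; lowering λ lets a distant, low-relevance piece win, which is one way an outlier can dominate the diversity term.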

Capsule creation quality First, we compare all methods by measuring how much their capsules differ from human-curated outfits. The gold standard outfits are the Polyvore sets. We measure the visual distance of each outfit to its nearest neighbor in the gold standard; the smaller the distance, the more compatible the outfit. A capsule’s compatibility is the sum of these distances over its outfits. We score capsule versatility piece-wise: we compute the visual distances between pieces within each layer and sum all of them. All distances are computed on the 2048-D CNN features and normalized by the standard deviation σ of all distances. We stress that both metrics are independent of our model’s learned compatibility and styles; success is achieved by matching the human-curated gold standard, not merely by optimizing our objectives C(y) and V(y).
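A minimal sketch of these two gold-standard metrics follows, under stated assumptions: an outfit is represented by concatenating its pieces’ feature vectors, versatility sums pairwise distances between pieces of the same layer (our reading of “piece-wise visual distance per layer”), and σ is passed in precomputed. The function name `capsule_scores` and this feature layout are hypothetical, not the authors’ code.

```python
import itertools
import numpy as np

def capsule_scores(capsule, gold_outfits, sigma):
    """Score a capsule against gold-standard outfits.

    capsule: list of m arrays, each (T, d): piece features per layer.
    gold_outfits: (G, m*d) features of human-curated outfits (assumed
        to use the same concatenated layout as below).
    sigma: normalizer, e.g. std of all distances (assumed precomputed).
    Returns (compatibility, versatility); lower compatibility is better.
    """
    gold = np.asarray(gold_outfits, dtype=float)
    # Every outfit takes one piece per layer; concatenate their features.
    outfits = np.array([np.concatenate(combo)
                        for combo in itertools.product(*capsule)])
    # Distance from each outfit to every gold outfit, then nearest neighbor.
    d = np.linalg.norm(outfits[:, None, :] - gold[None, :, :], axis=2)
    compatibility = d.min(axis=1).sum() / sigma
    # Versatility: pairwise distances between pieces within each layer.
    versatility = 0.0
    for layer in capsule:
        for a, b in itertools.combinations(range(len(layer)), 2):
            versatility += np.linalg.norm(layer[a] - layer[b])
    versatility /= sigma
    return compatibility, versatility
```

Neither score calls the learned model, which mirrors the point above that the metrics are independent of C(y) and V(y).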

Tab. 2 shows the results. Our iterative greedy algorithm is nearest to the gold standard for compatibility (0.83), and MMR fares worst. Raising λ in MMR to 0.7 to emphasize its relevance term improves its compatibility (from 3.05 at λ = 0.3 to 2.12), since it then includes more staples. However, an undesirable effect of MMR (hidden by these numbers) is that for a given λ value, the capsules are almost always the same, regardless of the initial seed outfit. This is because MMR’s diversity term is vulnerable to outliers. Hence, while MMR outperforms our method on versatility, it fails to create capsules coherent with the seed wardrobe (see Fig. 6, bottom right). Clustering has acceptable compatibility but low versatility (1.16 and 0.55; see Fig. 6, top right).

[Figure 6 panels, rows One piece / Top / Bottom / Outer. First example: iterative-greedy (0.34/0.42), naive-greedy (1.11/1.07), MMR-0.7 (2.19/2.14). Second example: iterative-greedy (1.07/0.22), naive-greedy (0.60/0.82), cluster center (1.16/0.55).]

| Method | Objective | Obj./Optimal Obj. (%) | Time |
|---|---|---|---|
| Optimal | 40.8 | 100 | 131.1 sec |
| naïve greedy | 30.8 | 76 | 34.3 sec |
| Iterative | 35.5 | 87 | 57.9 sec |

Table 3: Quality vs. run-time per capsule for naïve and iterative greedy maximization, compared to the true optimal solution. Here we run at toy scale (N = 10) so that the optimal solution is tractable. Our iterative algorithm approximates the optimal solution better than naïve greedy, yet is much faster than computing the optimum. At a realistic scale (N = 150), the optimal solution is intractable (∼1B hours), while our algorithm takes only 200 sec.

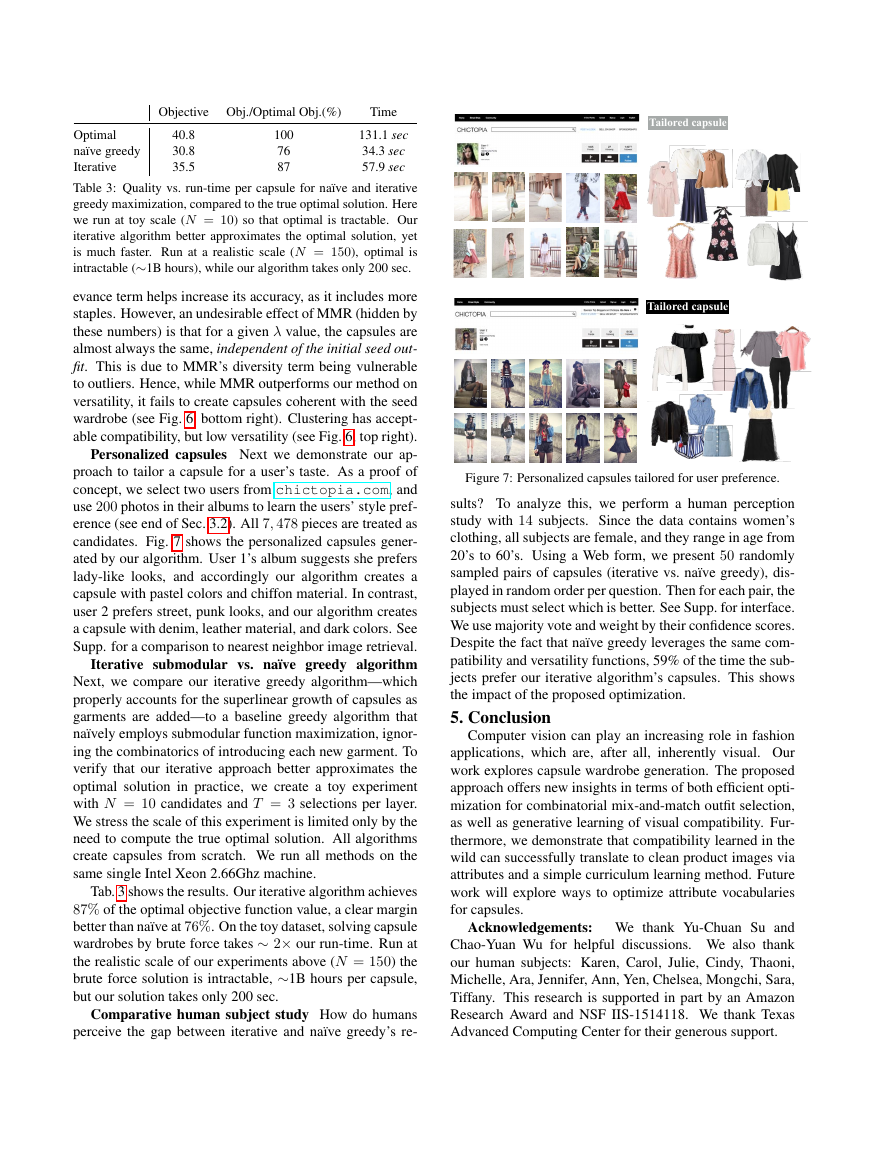

Personalized capsules Next we demonstrate our approach to tailoring a capsule to a user’s taste. As a proof of concept, we select two users from chictopia.com and use 200 photos from their albums to learn the users’ style preferences (see end of Sec. 3.2). All 7,478 pieces are treated as candidates. Fig. 7 shows the personalized capsules generated by our algorithm. User 1’s album suggests she prefers lady-like looks, and accordingly our algorithm creates a capsule with pastel colors and chiffon material. In contrast, user 2 prefers street and punk looks, and our algorithm creates a capsule with denim and leather materials and dark colors. See Supp. for a comparison to nearest neighbor image retrieval.

Iterative submodular vs. naïve greedy algorithm

Next, we compare our iterative greedy algorithm, which properly accounts for the superlinear growth of capsules as garments are added, to a baseline greedy algorithm that naïvely employs submodular function maximization, ignoring the combinatorics of introducing each new garment. To verify that our iterative approach better approximates the optimal solution in practice, we create a toy experiment with N = 10 candidates and T = 3 selections per layer. We stress that the scale of this experiment is limited only by the need to compute the true optimal solution. All algorithms create capsules from scratch. We run all methods on the same single Intel Xeon 2.66 GHz machine.
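For intuition about this comparison, the sketch below shows a generic naïve greedy baseline under a per-layer budget of T pieces, next to the exhaustive search that restricts the experiment to toy scale. The objective is a hypothetical callable over a list of layers (a stand-in, not the paper’s objective), each pool is assumed to hold at least T pieces, and neither function reproduces the paper’s iterative algorithm. The names `naive_greedy` and `brute_force` are hypothetical.

```python
from itertools import combinations, product

def brute_force(objective, pools, T):
    """Try every choice of T pieces per layer; return (best capsule, value)."""
    best, best_val = None, float("-inf")
    for choice in product(*(combinations(pool, T) for pool in pools)):
        capsule = [list(layer) for layer in choice]
        val = objective(capsule)
        if val > best_val:
            best, best_val = capsule, val
    return best, best_val

def naive_greedy(objective, pools, T):
    """Add one piece at a time, to any layer with room, by largest marginal gain."""
    capsule = [[] for _ in pools]
    for _ in range(T * len(pools)):
        base = objective(capsule)
        best_gain, best_move = float("-inf"), None
        for li, pool in enumerate(pools):
            if len(capsule[li]) >= T:      # this layer's budget is used up
                continue
            for piece in pool:
                if piece in capsule[li]:
                    continue
                capsule[li].append(piece)  # tentatively add, measure, undo
                gain = objective(capsule) - base
                capsule[li].pop()
                if gain > best_gain:
                    best_gain, best_move = gain, (li, piece)
        li, piece = best_move
        capsule[li].append(piece)
    return capsule, objective(capsule)
```

On a purely additive objective the two agree; they can diverge when a piece’s value depends on what the other layers already contain, which is the kind of interaction the iterative method is designed to account for.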

Tab. 3 shows the results. Our iterative algorithm achieves 87% of the optimal objective function value, a clear margin over naïve greedy at 76%. On the toy dataset, solving for the capsule wardrobe by brute force takes about 2× our run-time (131.1 vs. 57.9 sec). At the realistic scale of our experiments above (N = 150), the brute-force solution is intractable (∼1B hours per capsule), whereas our solution takes only 200 sec.
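To illustrate why brute force only works at toy scale, the sketch below counts the capsules an exhaustive search would have to score if it enumerated every choice of T pieces per layer independently, i.e. C(N, T)^m. That enumeration scheme is an assumption about the brute-force procedure; the sketch does not attempt to reproduce the ∼1B hours estimate, which depends on implementation details not given here. The name `num_capsules` is hypothetical.

```python
from math import comb

def num_capsules(N, T, m):
    """Capsules an exhaustive search would score: C(N, T) choices per layer, m layers."""
    return comb(N, T) ** m

toy = num_capsules(10, 3, 3)       # toy setting of Tab. 3: N = 10, T = 3, m = 3
real = num_capsules(150, 4, 3)     # realistic setting: N = 150, T = 4, m = 3
print(toy)                         # 1728000
print(f"{real:.2e}")
```

The toy count is under two million, while the realistic count is on the order of 10^21, so exhaustive search grows far faster than any greedy procedure that scores candidates one piece at a time.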

Figure 7: Personalized capsules tailored for user preference.

Comparative human subject study How do humans perceive the gap between the results of iterative and naïve greedy? To analyze this, we perform a human perception study with 14 subjects. Since the data contains women’s clothing, all subjects are female, ranging in age from their 20s to their 60s. Using a Web form, we present 50 randomly sampled pairs of capsules (iterative vs. naïve greedy), displayed in random order per question. For each pair, the subjects must select which capsule is better; see Supp. for the interface. We aggregate responses by majority vote, weighted by the subjects’ confidence scores. Although naïve greedy leverages the same compatibility and versatility functions, subjects prefer our iterative algorithm’s capsules 59% of the time. This shows the impact of the proposed optimization.
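The exact confidence-weighting rule is not specified. One plausible reading, sketched here as an assumption, sums each subject’s confidence for the capsule they picked and declares the side with the larger total the winner of that pair; the preference rate is then the fraction of pairs won by the iterative capsule. The function names, the vote tuple layout, and the tie rule (ties go to naïve) are hypothetical.

```python
from collections import defaultdict

def weighted_majority(votes):
    """votes: iterable of (pair_id, choice, confidence), choice in {"iterative", "naive"}.

    Returns {pair_id: winner}, summing confidence per choice.
    Ties go to "naive" here (an arbitrary, hypothetical rule).
    """
    totals = defaultdict(lambda: {"iterative": 0.0, "naive": 0.0})
    for pair_id, choice, conf in votes:
        totals[pair_id][choice] += conf
    return {pid: ("iterative" if t["iterative"] > t["naive"] else "naive")
            for pid, t in totals.items()}

def preference_rate(votes, method="iterative"):
    """Fraction of capsule pairs whose weighted-majority winner is `method`."""
    winners = weighted_majority(votes)
    return sum(w == method for w in winners.values()) / len(winners)

votes = [(0, "iterative", 3), (0, "naive", 1), (0, "naive", 1),
         (1, "naive", 2), (1, "iterative", 1)]
print(preference_rate(votes))   # 0.5
```

In this example, pair 0 has a raw 2-to-1 majority for naïve greedy, but the single high-confidence vote flips the weighted outcome.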

5. Conclusion

Computer vision can play an increasing role in fashion applications, which are, after all, inherently visual. Our work explores capsule wardrobe generation. The proposed approach offers new insights into both efficient optimization for combinatorial mix-and-match outfit selection and generative learning of visual compatibility. Furthermore, we demonstrate that compatibility learned in the wild can successfully translate to clean product images via attributes and a simple curriculum learning method. Future work will explore ways to optimize attribute vocabularies for capsules.

Acknowledgements: We thank Yu-Chuan Su and

Chao-Yuan Wu for helpful discussions. We also thank

our human subjects: Karen, Carol, Julie, Cindy, Thaoni,

Michelle, Ara, Jennifer, Ann, Yen, Chelsea, Mongchi, Sara,

Tiffany. This research is supported in part by an Amazon

Research Award and NSF IIS-1514118. We thank Texas

Advanced Computing Center for their generous support.

