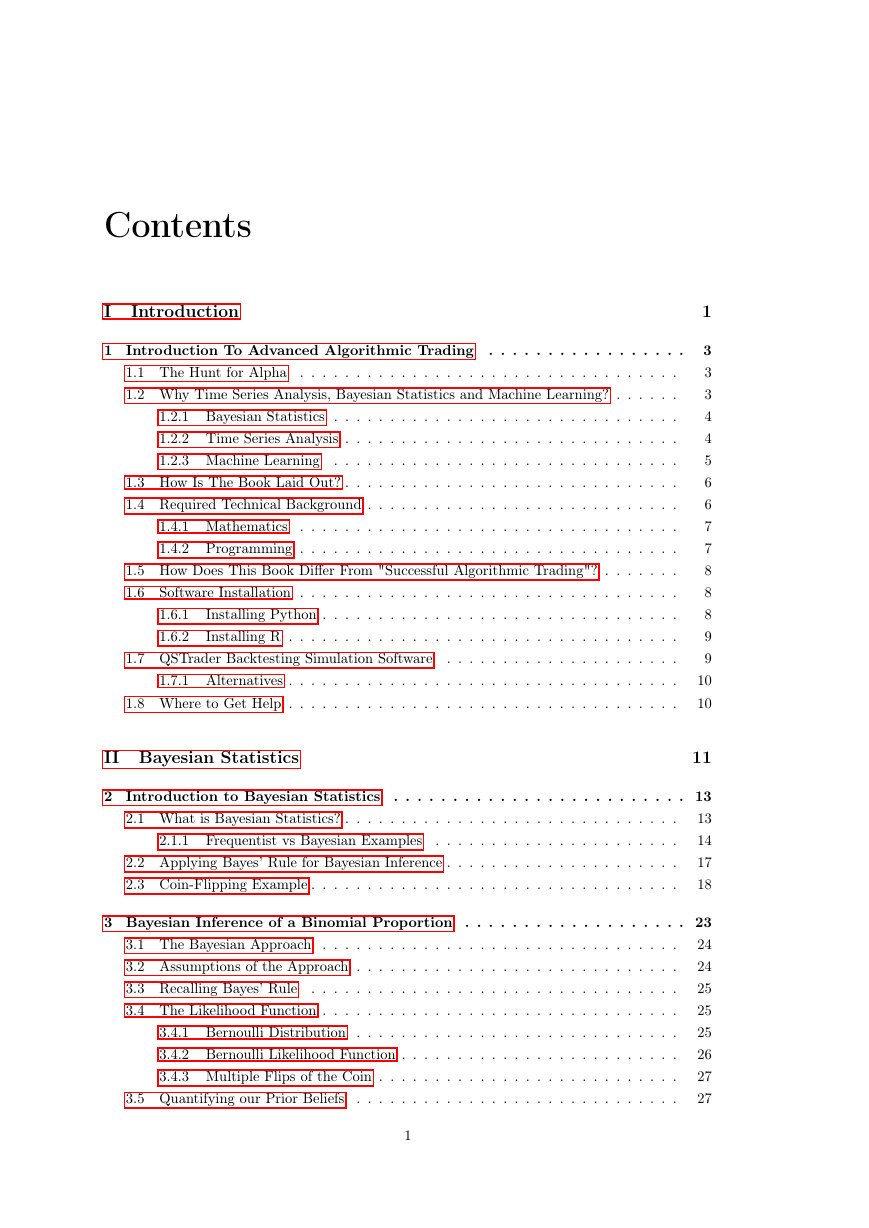

I Introduction

Introduction To Advanced Algorithmic Trading

The Hunt for Alpha

Why Time Series Analysis, Bayesian Statistics and Machine Learning?

Bayesian Statistics

Time Series Analysis

Machine Learning

How Is The Book Laid Out?

Required Technical Background

Mathematics

Programming

How Does This Book Differ From "Successful Algorithmic Trading"?

Software Installation

Installing Python

Installing R

QSTrader Backtesting Simulation Software

Alternatives

Where to Get Help

II Bayesian Statistics

Introduction to Bayesian Statistics

What is Bayesian Statistics?

Frequentist vs Bayesian Examples

Applying Bayes' Rule for Bayesian Inference

Coin-Flipping Example

Bayesian Inference of a Binomial Proportion

The Bayesian Approach

Assumptions of the Approach

Recalling Bayes' Rule

The Likelihood Function

Bernoulli Distribution

Bernoulli Likelihood Function

Multiple Flips of the Coin

Quantifying our Prior Beliefs

Beta Distribution

Why Is A Beta Prior Conjugate to the Bernoulli Likelihood?

Multiple Ways to Specify a Beta Prior

Using Bayes' Rule to Calculate a Posterior

Markov Chain Monte Carlo

Bayesian Inference Goals

Why Markov Chain Monte Carlo?

Markov Chain Monte Carlo Algorithms

The Metropolis Algorithm

Introducing PyMC3

Inferring a Binomial Proportion with Markov Chain Monte Carlo

Inferring a Binomial Proportion with Conjugate Priors Recap

Inferring a Binomial Proportion with PyMC3

Bibliographic Note

Bayesian Linear Regression

Frequentist Linear Regression

Bayesian Linear Regression

Bayesian Linear Regression with PyMC3

What are Generalised Linear Models?

Simulating Data and Fitting the Model with PyMC3

Bibliographic Note

Full Code

Bayesian Stochastic Volatility Model

Stochastic Volatility

Bayesian Stochastic Volatility

PyMC3 Implementation

Obtaining the Price History

Model Specification in PyMC3

Fitting the Model with NUTS

Full Code

III Time Series Analysis

Introduction to Time Series Analysis

What is Time Series Analysis?

How Can We Apply Time Series Analysis in Quantitative Finance?

Time Series Analysis Software

Time Series Analysis Roadmap

How Does This Relate to Other Statistical Tools?

Serial Correlation

Expectation, Variance and Covariance

Example: Sample Covariance in R

Correlation

Example: Sample Correlation in R

Stationarity in Time Series

Serial Correlation

The Correlogram

Example 1 - Fixed Linear Trend

Example 2 - Repeated Sequence

Next Steps

Random Walks and White Noise Models

Time Series Modelling Process

Backward Shift and Difference Operators

White Noise

Second-Order Properties

Correlogram

Random Walk

Second-Order Properties

Correlogram

Fitting Random Walk Models to Financial Data

Autoregressive Moving Average Models

How Will We Proceed?

Strictly Stationary

Akaike Information Criterion

Autoregressive (AR) Models of order p

Rationale

Stationarity for Autoregressive Processes

Second Order Properties

Simulations and Correlograms

Financial Data

Moving Average (MA) Models of order q

Rationale

Definition

Second Order Properties

Simulations and Correlograms

Financial Data

Next Steps

Autoregressive Moving Average (ARMA) Models of order p, q

Bayesian Information Criterion

Ljung-Box Test

Rationale

Definition

Simulations and Correlograms

Choosing the Best ARMA(p,q) Model

Financial Data

Next Steps

Autoregressive Integrated Moving Average and Conditional Heteroskedastic Models

Quick Recap

Autoregressive Integrated Moving Average (ARIMA) Models of order p, d, q

Rationale

Definitions

Simulation, Correlogram and Model Fitting

Financial Data and Prediction

Next Steps

Volatility

Conditional Heteroskedasticity

Autoregressive Conditional Heteroskedastic Models

ARCH Definition

Why Does This Model Volatility?

When Is It Appropriate To Apply ARCH(1)?

ARCH(p) Models

Generalised Autoregressive Conditional Heteroskedastic Models

GARCH Definition

Simulations, Correlograms and Model Fittings

Financial Data

Next Steps

Cointegrated Time Series

Mean Reversion Trading Strategies

Cointegration

Unit Root Tests

Augmented Dickey-Fuller Test

Phillips-Perron Test

Phillips-Ouliaris Test

Difficulties with Unit Root Tests

Simulated Cointegrated Time Series with R

Cointegrated Augmented Dickey Fuller Test

CADF on Simulated Data

CADF on Financial Data

EWA and EWC

RDS-A and RDS-B

Full Code

Johansen Test

Johansen Test on Simulated Data

Johansen Test on Financial Data

Full Code

State Space Models and the Kalman Filter

Linear State-Space Model

The Kalman Filter

A Bayesian Approach

Prediction

Dynamic Hedge Ratio Between ETF Pairs Using the Kalman Filter

Linear Regression via the Kalman Filter

Applying the Kalman Filter to a Pair of ETFs

TLT and ETF

Scatterplot of ETF Prices

Time-Varying Slope and Intercept

Next Steps

Bibliographic Note

Full Code

Hidden Markov Models

Markov Models

Markov Model Mathematical Specification

Hidden Markov Models

Hidden Markov Model Mathematical Specification

Filtering of Hidden Markov Models

Regime Detection with Hidden Markov Models

Market Regimes

Simulated Data

Financial Data

Next Steps

Bibliographic Note

Full Code

IV Statistical Machine Learning

Introduction to Machine Learning

What is Machine Learning?

Machine Learning Domains

Supervised Learning

Unsupervised Learning

Reinforcement Learning

Machine Learning Techniques

Linear Regression

Linear Classification

Tree-Based Methods

Support Vector Machines

Artificial Neural Networks and Deep Learning

Bayesian Networks

Clustering

Dimensionality Reduction

Machine Learning Applications

Forecasting and Prediction

Natural Language Processing

Factor Models

Image Classification

Model Accuracy

Parametric and Non-Parametric Models

Statistical Framework for Machine Learning Domains

Supervised Learning

Mathematical Framework

Classification

Regression

Financial Example

Training

Linear Regression

Linear Regression

Probabilistic Interpretation

Basis Function Expansion

Maximum Likelihood Estimation

Likelihood and Negative Log Likelihood

Ordinary Least Squares

Simulated Data Example with Scikit-Learn

Full Code

Bibliographic Note

Tree-Based Methods

Decision Trees - Mathematical Overview

Decision Trees for Regression

Creating a Regression Tree and Making Predictions

Pruning The Tree

Decision Trees for Classification

Classification Error Rate/Hit Rate

Gini Index

Cross-Entropy/Deviance

Advantages and Disadvantages of Decision Trees

Advantages

Disadvantages

Ensemble Methods

The Bootstrap

Bootstrap Aggregation

Random Forests

Boosting

Python Scikit-Learn Implementation

Bibliographic Note

Full Code

Support Vector Machines

Motivation for Support Vector Machines

Advantages and Disadvantages of SVMs

Advantages

Disadvantages

Linear Separating Hyperplanes

Classification

Deriving the Classifier

Constructing the Maximal Margin Classifier

Support Vector Classifiers

Support Vector Machines

Bibliographic Notes

Model Selection and Cross-Validation

Bias-Variance Trade-Off

Machine Learning Models

Model Selection

The Bias-Variance Tradeoff

Cross-Validation

Overview of Cross-Validation

Forecasting Example

Validation Set Approach

k-Fold Cross Validation

Python Implementation

k-Fold Cross Validation

Full Python Code

Unsupervised Learning

High Dimensional Data

Mathematical Overview of Unsupervised Learning

Unsupervised Learning Algorithms

Dimensionality Reduction

Clustering

Bibliographic Note

Clustering Methods

K-Means Clustering

The Algorithm

Issues

Simulated Data

OHLC Clustering

Bibliographic Note

Full Code

Natural Language Processing

Overview

Supervised Document Classification

Preparing a Dataset for Classification

Vectorisation

Term-Frequency Inverse Document-Frequency

Training the Support Vector Machine

Performance Metrics

Full Code

V Quantitative Trading Techniques

Introduction to QSTrader

Motivation for QSTrader

Design Considerations

Installation

Introductory Portfolio Strategies

Motivation

The Trading Strategies

Data

Python QSTrader Implementation

MonthlyLiquidateRebalanceStrategy

LiquidateRebalancePositionSizer

Backtest Interface

Strategy Results

Transaction Costs

US Equities/Bonds 60/40 ETF Portfolio

"Strategic" Weight ETF Portfolio

Equal Weight ETF Portfolio

Full Code

ARIMA+GARCH Trading Strategy on Stock Market Indexes Using R

Strategy Overview

Strategy Implementation

Strategy Results

Full Code

Cointegration-Based Pairs Trading using QSTrader

The Hypothesis

Cointegration Tests in R

The Trading Strategy

Data

Python QSTrader Implementation

Strategy Results

Transaction Costs

Tearsheet

Full Code

Kalman Filter-Based Pairs Trading using QSTrader

The Trading Strategy

Data

Python QSTrader Implementation

Strategy Results

Next Steps

Full Code

Supervised Learning for Intraday Returns Prediction using QSTrader

Prediction Goals with Machine Learning

Class Imbalance

Building a Prediction Model on Historical Data

QSTrader Strategy Object

QSTrader Backtest Script

Results

Next Steps

Full Code

Sentiment Analysis via Sentdex Vendor Sentiment Data with QSTrader

Sentiment Analysis

Sentdex API and Sample File

The Trading Strategy

Data

Python Implementation

Sentiment Handling with QSTrader

Sentiment Analysis Strategy Code

Strategy Results

Transaction Costs

Sentiment on S&P500 Tech Stocks

Sentiment on S&P500 Energy Stocks

Sentiment on S&P500 Defence Stocks

Full Code

Market Regime Detection with Hidden Markov Models using QSTrader

Regime Detection with Hidden Markov Models

The Trading Strategy

Data

Python Implementation

Returns Calculation with QSTrader

Regime Detection Implementation

Strategy Results

Transaction Costs

No Regime Detection Filter

HMM Regime Detection Filter

Full Code

Strategy Decay

Calculating the Annualised Rolling Sharpe Ratio

Python QSTrader Implementation

Strategy Results

Kalman Filter Pairs Trade

Aluminum Smelting Cointegration Strategy

Sentdex Sentiment Analysis Strategy
