Introduction to Message Passing Programming

MPI Users’ Guide in FORTRAN

Dr Peter S. Pacheco

Department of Mathematics

University of San Francisco

San Francisco, CA 94117

March 26, 1995

Woo Chat Ming

Computer Centre

University of Hong Kong

Hong Kong

March 17, 1997


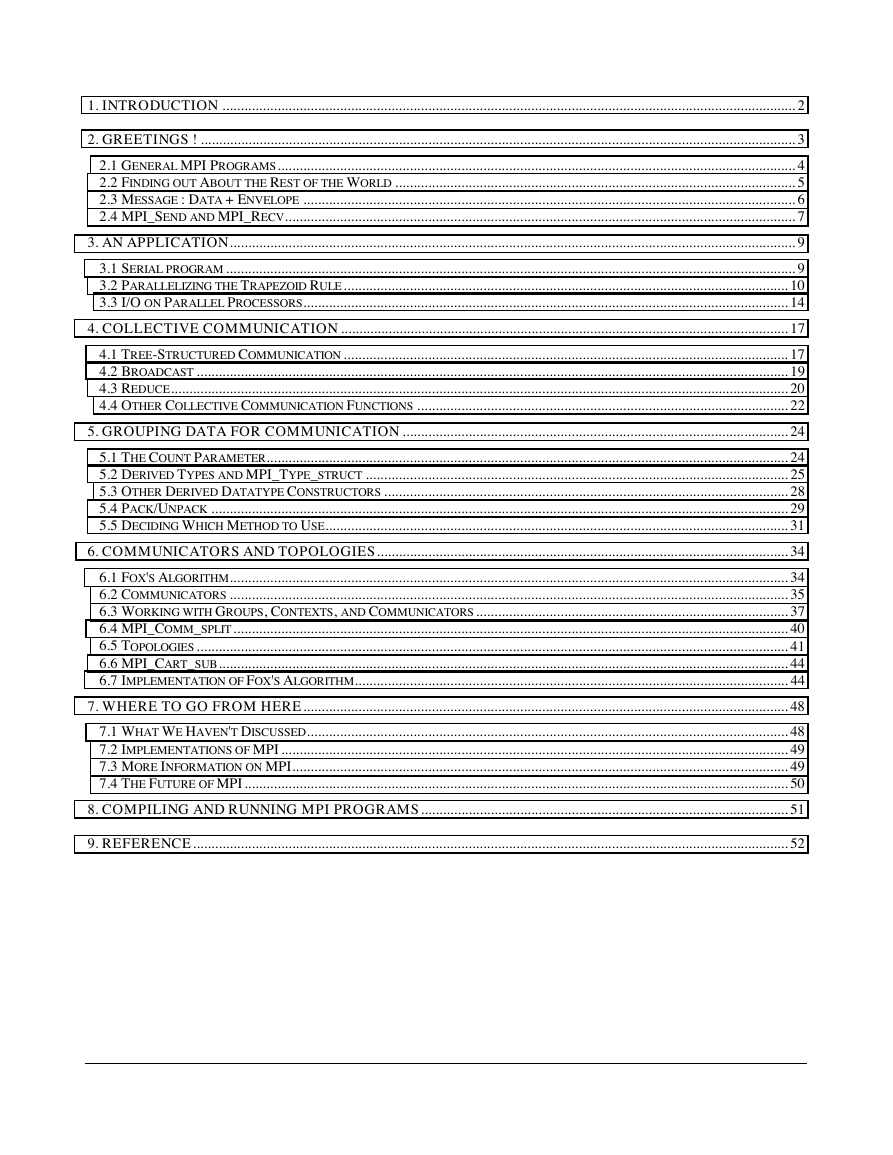

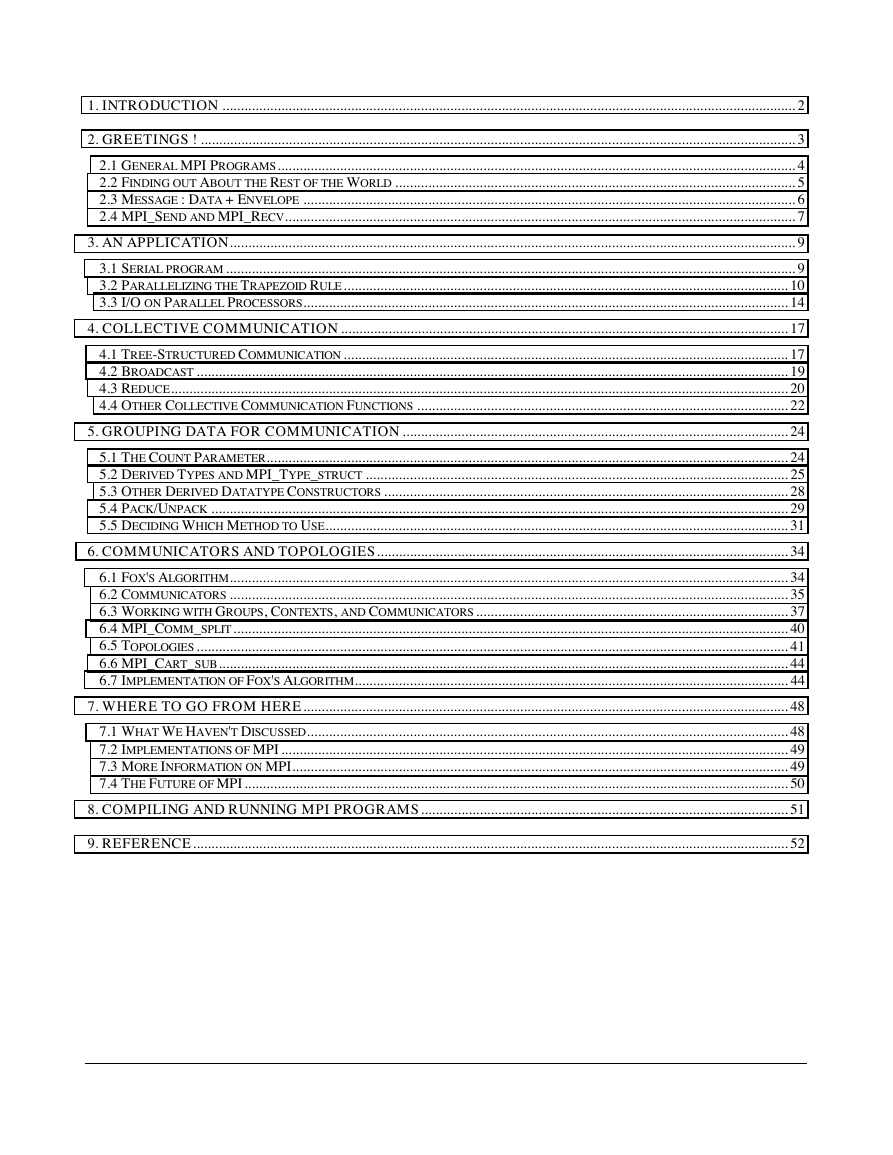

1. INTRODUCTION

2. GREETINGS !

2.1 GENERAL MPI PROGRAMS

2.2 FINDING OUT ABOUT THE REST OF THE WORLD

2.3 MESSAGE : DATA + ENVELOPE

2.4 MPI_SEND AND MPI_RECV

3. AN APPLICATION

3.1 SERIAL PROGRAM

3.2 PARALLELIZING THE TRAPEZOID RULE

3.3 I/O ON PARALLEL PROCESSORS

4. COLLECTIVE COMMUNICATION

4.1 TREE-STRUCTURED COMMUNICATION

4.2 BROADCAST

4.3 REDUCE

4.4 OTHER COLLECTIVE COMMUNICATION FUNCTIONS

5. GROUPING DATA FOR COMMUNICATION

5.1 THE COUNT PARAMETER

5.2 DERIVED TYPES AND MPI_TYPE_STRUCT

5.3 OTHER DERIVED DATATYPE CONSTRUCTORS

5.4 PACK/UNPACK

5.5 DECIDING WHICH METHOD TO USE

6. COMMUNICATORS AND TOPOLOGIES

6.1 FOX'S ALGORITHM

6.2 COMMUNICATORS

6.3 WORKING WITH GROUPS, CONTEXTS, AND COMMUNICATORS

6.4 MPI_COMM_SPLIT

6.5 TOPOLOGIES

6.6 MPI_CART_SUB

6.7 IMPLEMENTATION OF FOX'S ALGORITHM

7. WHERE TO GO FROM HERE

7.1 WHAT WE HAVEN'T DISCUSSED

7.2 IMPLEMENTATIONS OF MPI

7.3 MORE INFORMATION ON MPI

7.4 THE FUTURE OF MPI

8. COMPILING AND RUNNING MPI PROGRAMS

9. REFERENCE


1. Introduction

The Message-Passing Interface or MPI is a library of functions and macros that can be used in C, FORTRAN, and C++ programs. As its name implies, MPI is intended for use in programs that exploit the existence of multiple processors by message-passing.

MPI was developed in 1993-1994 by a group of researchers from industry, government, and

academia. As such, it is one of the first standards for programming parallel processors, and it is

the first that is based on message-passing.

In 1995, Dr Peter S. Pacheco wrote A User’s Guide to MPI, a brief tutorial introduction to some of the more important features of MPI for C programmers. It is nicely written, and users in our university find it concise and easy to read.

However, many users of parallel computers are in the scientific and engineering community, and most of them use FORTRAN as their primary computer language. Most of them are not proficient in C. This situation occurs very frequently in Hong Kong. As a result, “A User’s Guide to MPI” has been translated into this guide in Fortran to address the needs of scientific programmers.

Acknowledgments. I gratefully acknowledge Dr Peter S. Pacheco for the use of the C version of the user guide on which this guide is based. I would also like to thank the Computer Centre of the University of Hong Kong for their human resource support of this work, and all the research institutions which supported the original work by Dr Pacheco.


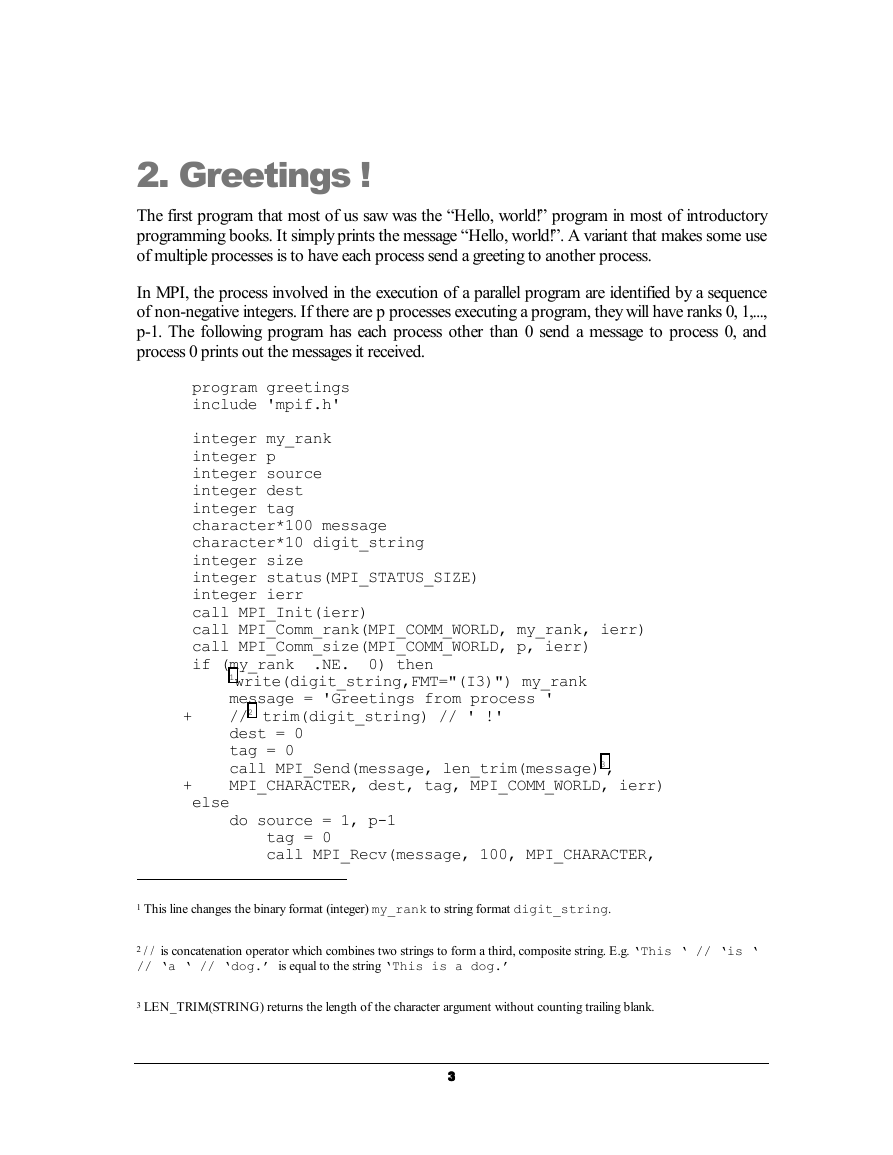

2. Greetings !

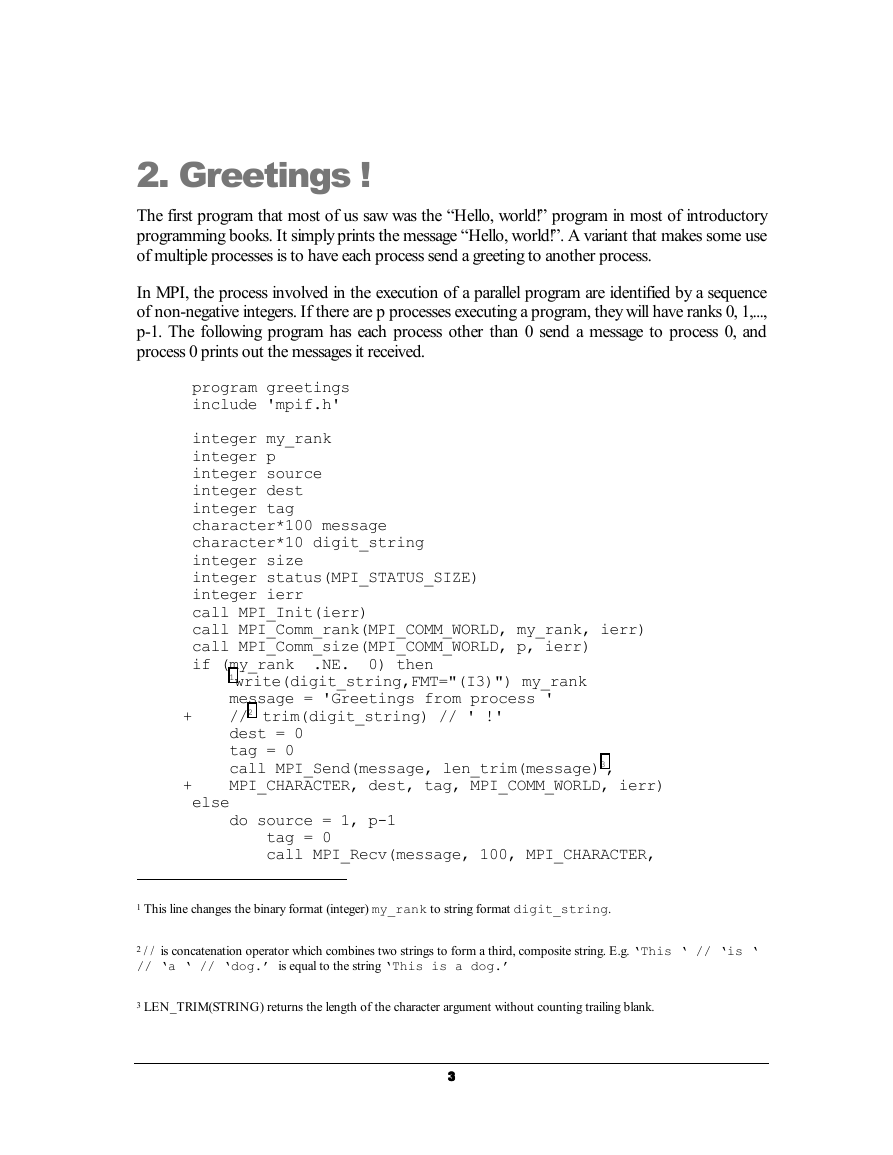

The first program that most of us saw was the “Hello, world!” program found in most introductory programming books. It simply prints the message “Hello, world!”. A variant that makes some use of multiple processes is to have each process send a greeting to another process.

In MPI, the processes involved in the execution of a parallel program are identified by a sequence of non-negative integers. If there are p processes executing a program, they will have ranks 0, 1, ..., p-1. The following program has each process other than 0 send a message to process 0, and process 0 prints out the messages it receives.

      program greetings
      include 'mpif.h'
      integer my_rank
      integer p
      integer source
      integer dest
      integer tag
      character*100 message
      character*10 digit_string
      integer size
      integer status(MPI_STATUS_SIZE)
      integer ierr

      call MPI_Init(ierr)
      call MPI_Comm_rank(MPI_COMM_WORLD, my_rank, ierr)
      call MPI_Comm_size(MPI_COMM_WORLD, p, ierr)

      if (my_rank .NE. 0) then
          write(digit_string,FMT="(I3)") my_rank
          message = 'Greetings from process '
     +        // trim(adjustl(digit_string)) // '!'
          dest = 0
          tag = 0
          call MPI_Send(message, len_trim(message),
     +        MPI_CHARACTER, dest, tag, MPI_COMM_WORLD, ierr)
      else
          do source = 1, p-1
              tag = 0
              call MPI_Recv(message, 100, MPI_CHARACTER,
     +            source, tag, MPI_COMM_WORLD, status, ierr)
              write(6,FMT="(A)") message
          enddo
      endif

      call MPI_Finalize(ierr)
      end program greetings

Notes on the program:

1. The write statement with digit_string as its unit converts the binary (integer) value my_rank to the string digit_string. ADJUSTL shifts the digits to the left of the string so that the rank is printed without leading blanks.

2. // is the concatenation operator, which combines two strings to form a third, composite string. E.g. 'This ' // 'is ' // 'a ' // 'dog.' is equal to the string 'This is a dog.'

3. LEN_TRIM(STRING) returns the length of the character argument without counting trailing blanks.

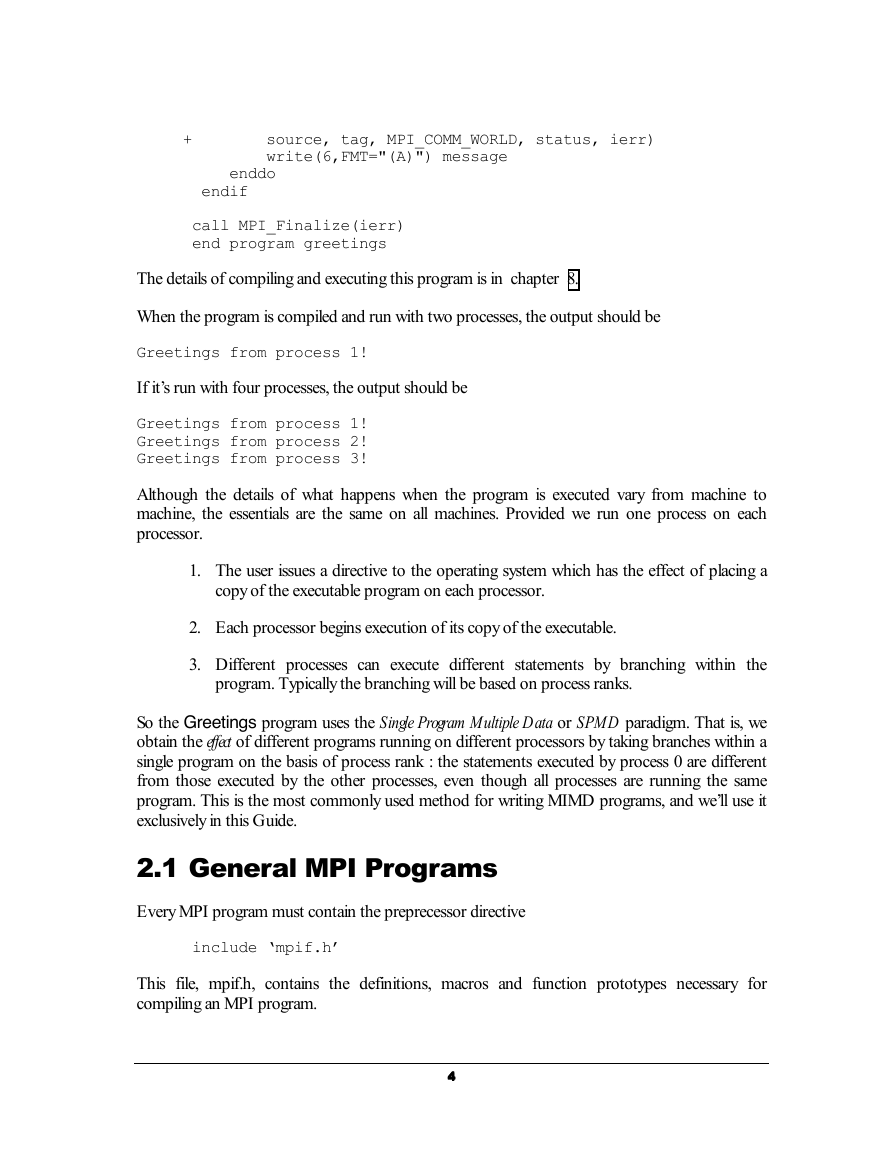

The details of compiling and executing this program are given in Chapter 8.

When the program is compiled and run with two processes, the output should be

Greetings from process 1!

If it’s run with four processes, the output should be

Greetings from process 1!

Greetings from process 2!

Greetings from process 3!

Although the details of what happens when the program is executed vary from machine to machine, the essentials are the same on all machines, provided we run one process on each processor.

1. The user issues a directive to the operating system which has the effect of placing a

copy of the executable program on each processor.

2. Each processor begins execution of its copy of the executable.

3. Different processes can execute different statements by branching within the

program. Typically the branching will be based on process ranks.

So the Greetings program uses the Single Program Multiple Data or SPMD paradigm. That is, we

obtain the effect of different programs running on different processors by taking branches within a

single program on the basis of process rank: the statements executed by process 0 are different

from those executed by the other processes, even though all processes are running the same

program. This is the most commonly used method for writing MIMD programs, and we’ll use it

exclusively in this Guide.

2.1 General MPI Programs

Every MPI program must contain the include statement

      include 'mpif.h'

This file, mpif.h, contains the definitions and constants (such as MPI_COMM_WORLD and MPI_STATUS_SIZE) necessary for compiling an MPI program.


Before any other MPI function can be called, the function MPI_Init must be called, and it should be called only once. Fortran MPI routines have an IERROR argument, which returns the error code. After a program has finished using the MPI library, it must call MPI_Finalize. This cleans up any “unfinished business” left by MPI, e.g. pending receives that were never completed. So a typical MPI program has the following layout.

      .
      .
      .
      include 'mpif.h'
      .
      .
      .
      call MPI_Init(ierr)
      .
      .
      .
      call MPI_Finalize(ierr)
      .
      .
      .
      end program
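As a concrete instance of this layout, the following minimal sketch fills in the elided parts with nothing but a check of the error code returned by MPI_Init. The program name skeleton is chosen here for illustration, and the source is assumed to be compiled as fixed-form Fortran. Note that many MPI implementations abort on error by default, so a check like this will rarely be reached in practice.

```fortran
      program skeleton
      include 'mpif.h'
      integer ierr

!     MPI_Init must come before any other MPI call.
      call MPI_Init(ierr)
      if (ierr .ne. MPI_SUCCESS) then
          write(6,*) 'MPI_Init failed with error code ', ierr
          stop
      endif

!     The parallel work of the program would go here.

!     MPI_Finalize must be the last MPI call.
      call MPI_Finalize(ierr)
      end program skeleton
```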

2.2 Finding out About the Rest of the World

MPI provides the function MPI_Comm_rank, which returns the rank of a process in its second argument. Its syntax is

CALL MPI_COMM_RANK(COMM, RANK, IERROR)

INTEGER COMM, RANK, IERROR

The first argument is a communicator. Essentially, a communicator is a collection of processes that can send messages to each other. For basic programs, the only communicator needed is MPI_COMM_WORLD. It is predefined in MPI and consists of all the processes running when program execution begins.

Many of the constructs in our programs also depend on the number of processes executing the program. So MPI provides the function MPI_Comm_size for determining this. Its first argument is a communicator. It returns the number of processes in the communicator in its second argument. Its syntax is

CALL MPI_COMM_SIZE(COMM, P, IERROR)

INTEGER COMM, P, IERROR
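The two calls are typically made together, right after MPI_Init. The short sketch below (the program name whoami is chosen here for illustration, and fixed-form source is assumed) has every process report its own rank and the total number of processes. The order in which the lines appear on the screen is not fixed, since the processes run independently.

```fortran
      program whoami
      include 'mpif.h'
      integer my_rank, p, ierr

      call MPI_Init(ierr)
      call MPI_Comm_rank(MPI_COMM_WORLD, my_rank, ierr)
      call MPI_Comm_size(MPI_COMM_WORLD, p, ierr)

!     Every process executes this line; ranks run from 0 to p-1.
      write(6,*) 'I am process ', my_rank, ' of ', p

      call MPI_Finalize(ierr)
      end program whoami
```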


2.3 Message : Data + Envelope

The actual message-passing in our program is carried out by the MPI functions MPI_Send and

MPI_Recv. The first command sends a message to a designated process. The second receives a

message from a process. These are the most basic message-passing commands in MPI. In order

for the message to be successfully communicated the system must append some information to

the data that the application program wishes to transmit. This additional information forms the

envelope of the message. In MPI it contains the following information.

1. The rank of the receiver.

2. The rank of the sender.

3. A tag.

4. A communicator.

These items can be used by the receiver to distinguish among incoming messages. The source argument can be used to distinguish messages received from different processes. The tag is a user-specified integer that can be used to distinguish messages received from a single process. For example, suppose process A is sending two messages to process B; both messages contain a single real number. One of the real numbers is to be used in a calculation, while the other is to be printed. In order to determine which is which, A can use different tags for the two messages. If B uses the same two tags in the corresponding receives, when it receives the messages, it will “know” what to do with them. MPI guarantees that the integers 0-32767 can be used as tags. Most implementations allow much larger values.
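The two-message example can be sketched as follows, with process 0 playing the role of A and process 1 the role of B. The tag names CALC_TAG and PRINT_TAG, their values, and the data are assumptions made for illustration; the program does nothing unless it is run with at least two processes. The receives are posted in the same order as the sends so that the sketch does not rely on the system buffering messages.

```fortran
      program two_tags
      include 'mpif.h'
      integer CALC_TAG, PRINT_TAG
      parameter (CALC_TAG = 1, PRINT_TAG = 2)
      integer my_rank, p, ierr
      integer status(MPI_STATUS_SIZE)
      real x, y

      call MPI_Init(ierr)
      call MPI_Comm_rank(MPI_COMM_WORLD, my_rank, ierr)
      call MPI_Comm_size(MPI_COMM_WORLD, p, ierr)

      if (p .ge. 2 .and. my_rank .eq. 0) then
!         Process A: label each number with its purpose.
          x = 2.0
          y = 3.5
          call MPI_Send(x, 1, MPI_REAL, 1, CALC_TAG,
     +        MPI_COMM_WORLD, ierr)
          call MPI_Send(y, 1, MPI_REAL, 1, PRINT_TAG,
     +        MPI_COMM_WORLD, ierr)
      else if (p .ge. 2 .and. my_rank .eq. 1) then
!         Process B: the tag in each receive selects the message.
          call MPI_Recv(x, 1, MPI_REAL, 0, CALC_TAG,
     +        MPI_COMM_WORLD, status, ierr)
          call MPI_Recv(y, 1, MPI_REAL, 0, PRINT_TAG,
     +        MPI_COMM_WORLD, status, ierr)
          write(6,*) 'Result of calculation: ', 2.0*x
          write(6,*) 'Value to print: ', y
      endif

      call MPI_Finalize(ierr)
      end program two_tags
```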

As we noted above, a communicator is basically a collection of processes that can send messages to each other. When two processes communicate using MPI_Send and MPI_Recv, the communicator becomes important if separate modules of the program have been written independently of each other. For example, suppose we wish to solve a system of differential equations, and, in the course of solving the system, we need to solve a system of linear equations. Rather than writing the linear system solver from scratch, we might want to use a library of functions for solving linear systems that was written by someone else and that has been highly optimized for the system we’re using. How do we avoid confusing the messages we send from process A to process B with those sent by the library functions? Before the advent of communicators, we would probably have to partition the set of valid tags, setting aside some of them for exclusive use by the library functions. This is tedious, and it will cause problems if we try to run our program on another system: the other system’s linear solver may not (probably won’t) require the same set of tags. With the advent of communicators, we simply create a communicator that can be used exclusively by the linear solver, and pass it as an argument in calls to the solver. We’ll discuss the details of this later. For now, we can get away with using the predefined communicator MPI_COMM_WORLD. It consists of all the processes running the program when execution begins.
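One possible way to give a library its own communicator is sketched below, using the standard routine MPI_Comm_dup to duplicate MPI_COMM_WORLD. This is an illustration only and not necessarily the approach taken later in this Guide. The subroutine linear_solve is a hypothetical stand-in for a library solver, and the names solver_comm and private_comm are likewise assumptions.

```fortran
      program private_comm
      include 'mpif.h'
      integer solver_comm, ierr

      call MPI_Init(ierr)

!     Duplicate MPI_COMM_WORLD: same processes, but a separate
!     communication context, so messages cannot be confused.
      call MPI_Comm_dup(MPI_COMM_WORLD, solver_comm, ierr)

!     Hypothetical library routine that communicates only on the
!     communicator it is given.
      call linear_solve(solver_comm)

      call MPI_Comm_free(solver_comm, ierr)
      call MPI_Finalize(ierr)
      end program private_comm

      subroutine linear_solve(comm)
      include 'mpif.h'
      integer comm, rank, ierr
!     Stand-in body: a real solver would exchange messages on comm.
      call MPI_Comm_rank(comm, rank, ierr)
      if (rank .eq. 0) write(6,*) 'solver running on its own comm'
      end subroutine linear_solve
```

Because the solver sends and receives only on solver_comm, its messages cannot match receives that the main program posts on MPI_COMM_WORLD, whatever tags either side uses.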
