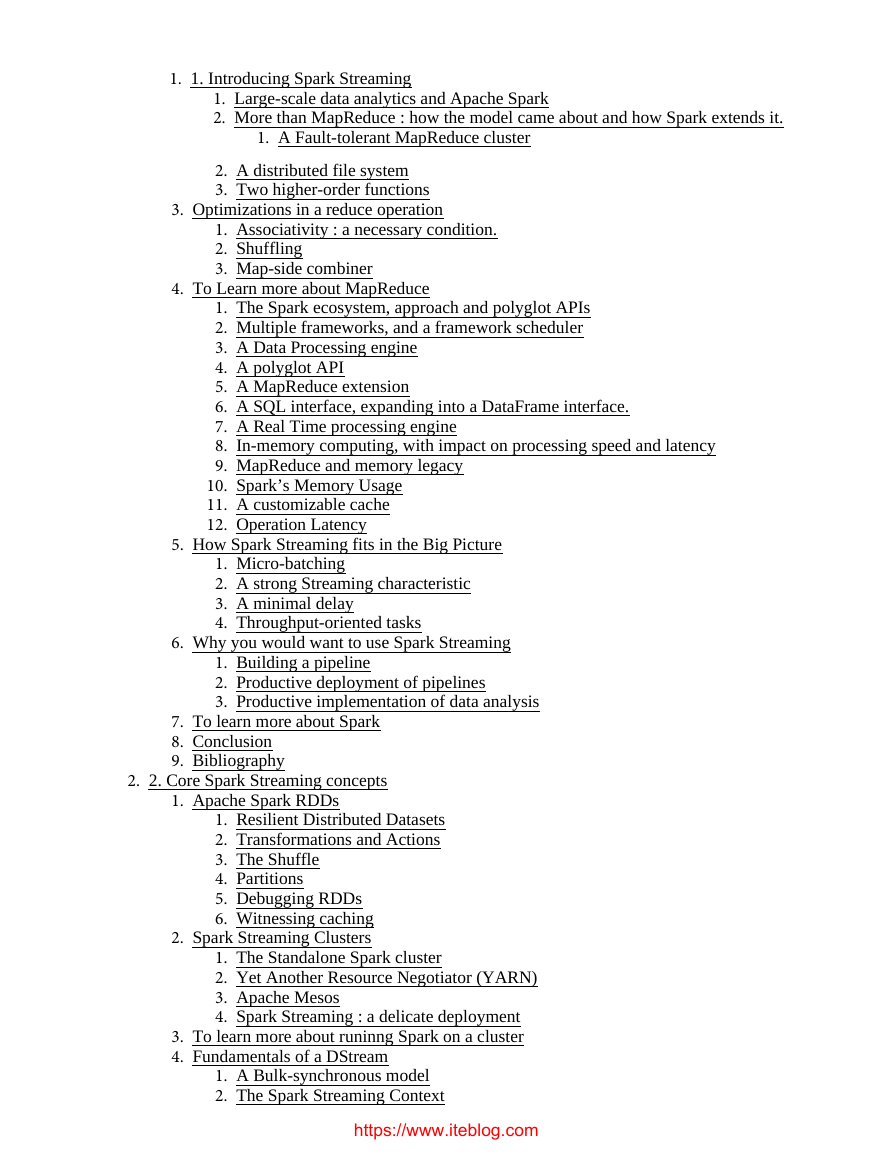

Chapter 1. Introducing Spark Streaming

Large-scale data analytics and Apache Spark

This book focuses on Spark Streaming, a streaming module for the Apache Spark computation engine, used in distributed data analytics. Spark's streaming capabilities were added essentially over the course of the thesis work of its first and main developer, Tathagata Das. The concepts that Spark Streaming embodies were not all new at the time of its implementation, and it carries a rich legacy of lessons on how to expose an easy way to do distributed programming at a massive scale. Chief among these heirlooms is MapReduce, the programming model born at Google that gave rise to Hadoop, and whose concepts we will sketch in a few pages. Readers familiar with MapReduce may want to skip to "More than MapReduce: how the model came about and how Spark extends it". For others, we will introduce here the main tenets of Spark Streaming: an expressive, MapReduce-inspired programming API (Spark) and its resilien


Chapter 2. Core Spark Streaming concepts

Apache Spark RDDs

A Spark Hello World

Readers unfamiliar with how to test a Spark application should refer to ???. However, let us give a quick rundown of how to launch a Spark shell for testing. Most distributions of Spark come with the spark-shell executable. Launching this executable creates an instance of the Scala REPL, with a custom Spark context started for you. This creates an instance of a SparkSession object, named spark by default, and an instance of the legacy SparkContext with the alias sc. Since Spark 2.0, the SparkSession is the recommended way to interact with Spark, except for Spark Streaming, which relies on the StreamingContext, as we will see later on. For the sake of this small intro, we will use the SparkSession, or spark, to interact with the Spark API. The SparkSession needs a cluster to work. The easiest way to get started is using the default local cluster which gets automatically initialized at the start of the shell whe

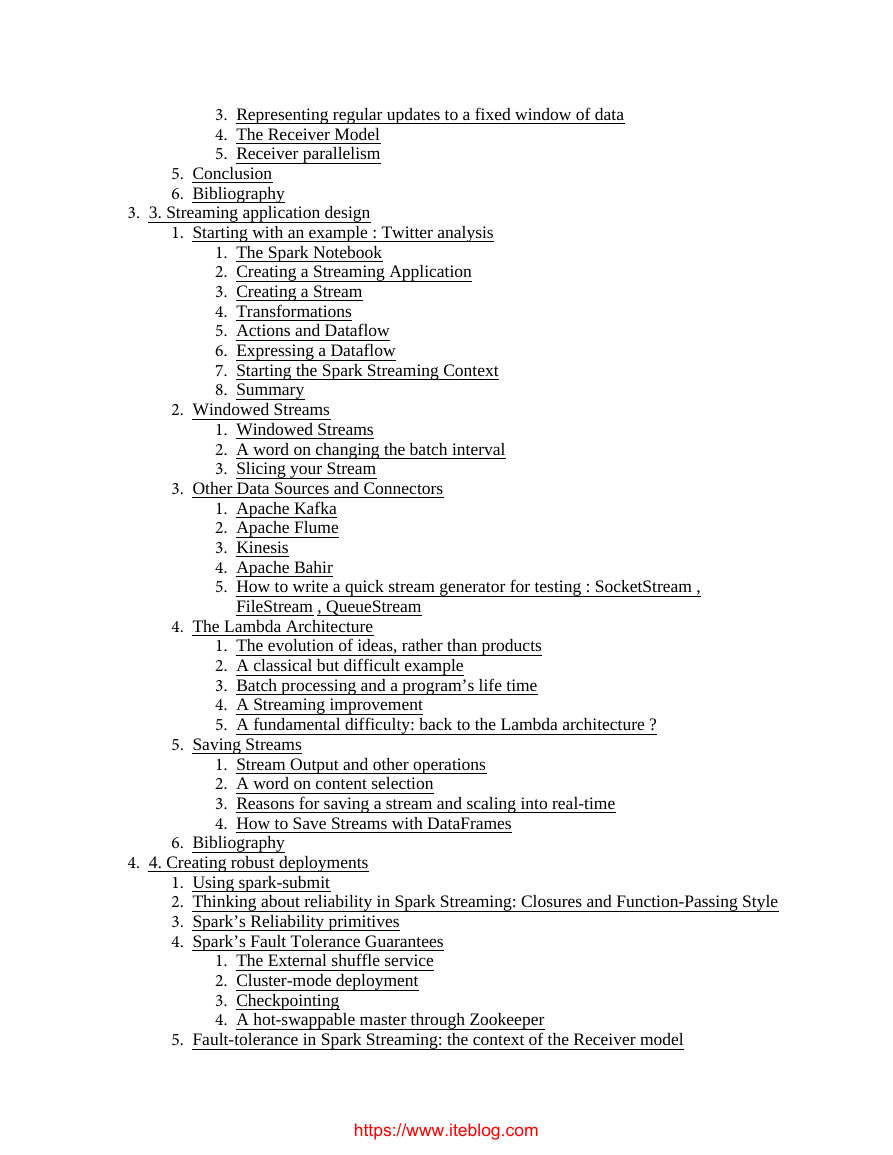


Chapter 3. Streaming application design

Starting with an example: Twitter analysis

Until now, we have mostly covered generalities about how a DStream works, along with snippets of Scala code showing stream usage. The time has now come to put them together in an application. As we progress further, we will then study how to run this application most efficiently.

The Spark Notebook

Until now, we have created simple examples in the Spark shell. Beyond interactive shells, there is another way of approaching the development of Spark scripts, and that is interactive notebooks. So-called notebooks are web applications tied to a REPL (Read-Eval-Print Loop), otherwise known as an interpreter. They offer the ability to author code in an interactive web-based editor. The code can be immediately executed and the results are displayed back on the page. In contrast with the spark-shell, previously executed commands become part of a single page, which can be read as a document or executed as a


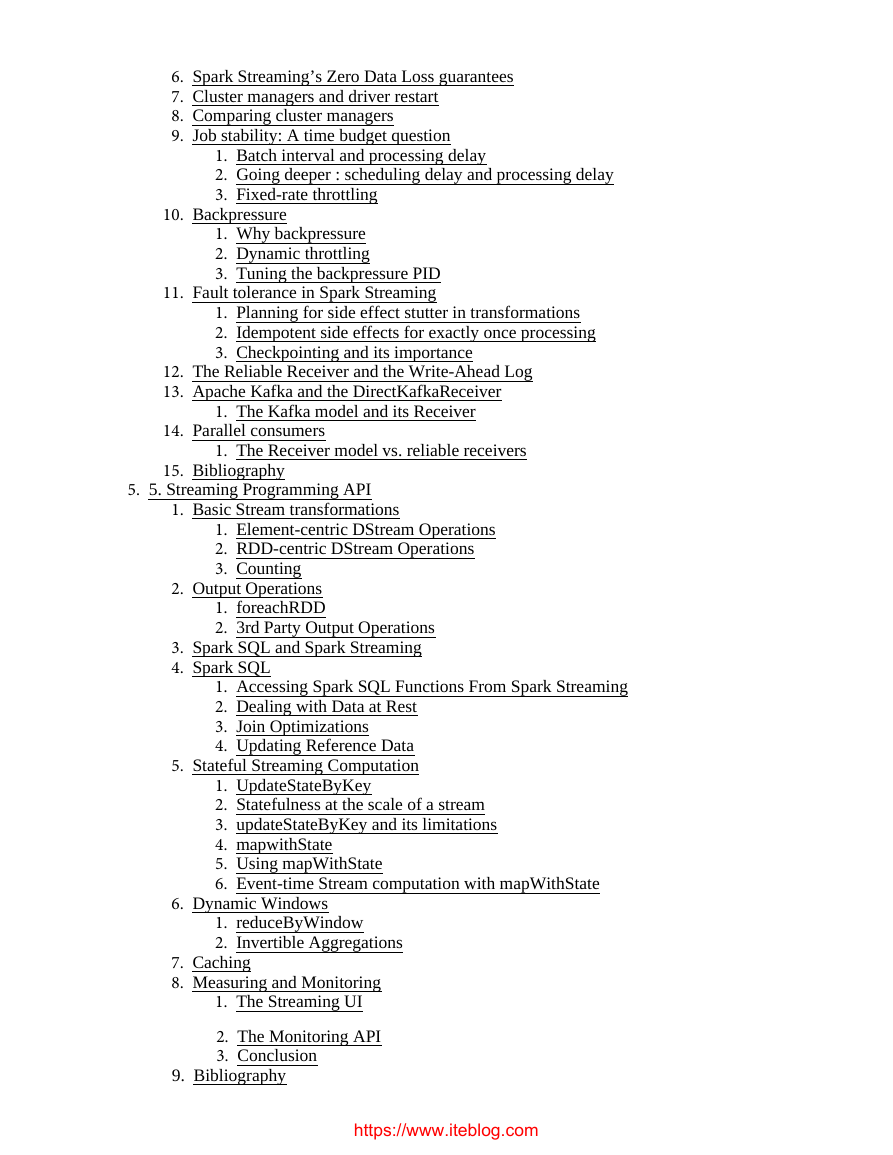

Chapter 4. Creating robust deployments

Using spark-submit

In the last chapter, we studied how to create an interactive application using the Spark Notebook. To get a clearer idea of how streaming applications are really used in production, we are now going to focus on doing this with Spark's spark-submit script. Note that the stages involved in this process are important notions that we will reuse in the application. Let's consider again our Twitter streaming application from [Link to Come], which counted the most frequent hashtags in tweets.

```scala
package learning.streaming.demo

import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.SparkContext._
import StreamingContext._
import org.apache.spark.streaming.twitter._

/**
 * Collect at least the specified number of tweets into json text files.
 */
object BestHashTags {

  def configureTwitterCredentials(apiKey: String,
                                  apiSecret: String,
                                  accessToken: String,
                                  accessTokenSecret: String)
```


Chapter 5. Streaming Programming API

In this chapter we will take a detailed look at the Spark Streaming API. After a detailed review of the operations that constitute the DStream API, we will learn how to interact with Spark SQL and gain insight into the measuring and monitoring capabilities that will help us understand the performance characteristics of our Spark Streaming applications.

In the Spark Streaming programming model, we can observe two broad levels of interaction:

- Operations that apply to a single element of the stream, and
- Operations that apply to the underlying RDD of each micro-batch.

As we will learn through this chapter, these two levels correspond to the split of responsibilities in the interaction between the Spark core engine and Spark Streaming. We have seen how DStreams, or Discretized Streams, are a streaming abstraction where the elements of the stream are grouped into micro-batches. In turn, each micro-batch is represented by an RDD. At the execution level,
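To make the two levels of interaction more concrete, here is a minimal sketch in plain Scala. It is a toy model and not the Spark API: `ToyDStream`, `MicroBatch`, `mapElements` and `transformBatches` are hypothetical names introduced only for illustration, and a `Vector` stands in for the RDD behind each micro-batch. In Spark Streaming itself, element-level operations such as `map` and batch-level operations such as `transform` or `foreachRDD` play the corresponding roles.

```scala
// Toy model of a discretized stream. This is NOT the Spark Streaming API:
// every name below is hypothetical and exists only for illustration.
object TwoLevelsSketch {

  // Assumption: a Vector stands in for the RDD that backs each micro-batch.
  type MicroBatch[A] = Vector[A]

  final case class ToyDStream[A](batches: Vector[MicroBatch[A]]) {

    // Element level: the function sees one element of the stream at a time.
    def mapElements[B](f: A => B): ToyDStream[B] =
      ToyDStream(batches.map(_.map(f)))

    // Batch level: the function sees a whole micro-batch at once.
    def transformBatches[B](f: MicroBatch[A] => MicroBatch[B]): ToyDStream[B] =
      ToyDStream(batches.map(f))
  }

  // Two micro-batches of words.
  val words: ToyDStream[String] =
    ToyDStream(Vector(Vector("spark", "streaming"), Vector("rdd", "batch", "dstream")))

  // Element-level operation: the length of each word.
  val lengths: ToyDStream[Int] = words.mapElements(_.length)

  // Batch-level operation: sort the words within each micro-batch.
  val sortedBatches: ToyDStream[String] = words.transformBatches(_.sorted)

  def main(args: Array[String]): Unit = {
    println(lengths.batches)
    println(sortedBatches.batches)
  }
}
```

Sorting is a useful example of a batch-level operation because it cannot be expressed one element at a time: it needs the complete contents of a micro-batch.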

